COOPERATIVE TELEOPERATION TASK IN VIRTUAL ENVIRONMENT

Influence of Visual Aids and Oral Communication

Sehat Ullah, Samir Otmane and Malik Mallem

IBISC Laboratory, University of Evry, 40 rue de Pelvoux, Evry, France

Paul Richard

LISA Laboratory, University of Angers, 62 av Notre Dame du Lac, France

Keywords:

CVEs, Cooperative teleoperation, Parallel robots, SPIDAR, Human performance, Multimodal feedback.

Abstract:

Cooperative virtual environments, in which users simultaneously manipulate objects, are one of the subfields of collaborative virtual environments (CVEs). In this paper we simulate the use of two string-based parallel robots in a cooperative teleoperation task. Two users sitting at separate machines connected through a local network each operate one robot. In addition, the article presents the use of sensory feedback (i.e., shadows, arrows and oral communication) and investigates its effects on cooperation, presence and user performance. Ten volunteer subjects had to cooperatively perform a peg-in-hole task. Results revealed that shadows have a significant effect on task execution, while arrows and oral communication not only increase user performance but also enhance the sense of presence and awareness. Our investigations will help in the development of teleoperation systems for cooperative assembly, surgical training and rehabilitation.

1 INTRODUCTION

A CVE is a computer-generated world that enables people in local or remote locations to interact with synthetic objects and with representations of other participants within it. Applications of such environments include military training, telepresence, collaborative design and engineering, and entertainment. Interaction in a CVE may take one of the following forms (Otto et al., 2006):

Asynchronous: the sequential manipulation of distinct or identical attributes of an object. For example, one person changes an object's position and then another person paints it, or one person moves an object to a place and then another person moves it further.

Synchronous: the concurrent manipulation of distinct or identical attributes of an object. For example, one person holds an object while another person paints it, or two or more people lift or displace a heavy object together.

In order to carry out a cooperative task efficiently, the participants need to feel the presence and actions of others and to have a means of communicating with each other. The communication may be verbal or non-verbal, such as pointing at or looking at something, or using gestures or facial expressions. We implement a VE designed for cooperative work on a replicated architecture and propose our own approach to the problems of network load, latency and consistency. Similarly, to make cooperative work easier and more intuitive, we augment the environment with audio and visual aids. Moreover, we investigate the effect of this sensory feedback on user performance in a peg-in-hole task.

Section 2 reviews related work, Section 3 describes the proposed system, and Section 4 discusses the experiment and the analysis of the results. Section 5 is dedicated to the conclusion and future work.

2 RELATED WORK

A lot of work has already been done in the field of CVEs; for example, MASSIVE provides a collaborative environment for teleconferencing (Greenhalgh and Benford, 1995). Most of this collaborative work concerns the general software design and the underlying network architecture (Chastine et al., 2005; Shirmohammadi and Georganas, 2001). Basdogan et al. investigated the role of force feedback in a cooperative task; they connected two monitors and haptic devices to a single machine (Basdogan et al., 2001). Similarly, Sallnas et al. reported the effect of force feedback on presence, awareness and task performance in a CVE; they also connected two monitors and haptic devices to a single host (Sallnas et al., 2000). Other important works that support the cooperative manipulation of objects in a VE include (Jordan et al., 2002; Alhalabi and Horiguchi, 2001), but all these systems require heavy data exchange between the two nodes to keep them consistent.

Ullah S., Otmane S., Mallem M. and Richard P.

COOPERATIVE TELEOPERATION TASK IN VIRTUAL ENVIRONMENT - Influence of Visual Aids and Oral Communication.

DOI: 10.5220/0002166703740377

In Proceedings of the 6th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2009), page

ISBN: 978-989-674-000-9

Copyright © 2009 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Visual and auditory substitution has already been used in both single-user VR and teleoperation systems to provide pseudo-haptic feedback. Sensory substitution may be used as a redundant cue, to compensate for the lack of an appropriate haptic device, or to avoid the instabilities that real force feedback can cause (Richard et al., 1996).

3 DESCRIPTION OF THE SYSTEM

We present a system that enables two remote users, connected via a LAN, to cooperatively manipulate virtual objects using string-based parallel robots in the VE. In addition, we present the use of visual aids (shadows and arrows) and oral communication to facilitate the cooperative manipulation.

Figure 1: Illustration of the virtual environment.

The VE for cooperative manipulation has a simple cubic structure, consisting of three walls, a floor and a ceiling. The VE also contains four cylinders, each with a distinct color, standing lengthwise in a line. At some distance in front of each cylinder there is a torus of the same color. We have modeled two SPIDARs (3DOF) to be used as robots (Richard et al., 2006) (see figure 1). At each corner of the cube, a motor for one of the SPIDARs has been placed. The end effectors of the SPIDARs are represented by two spheres of distinct colors. Each end effector is connected to its corresponding motors by 4 wires of the same color.

Figure 2: Illustration of the appearance of arrow.

We use two spheres, identical in size but different in color (one red and the other blue), as pointers to represent the two users. Each pointer controls the movements of one end effector. Once a pointer collides with its corresponding end effector, the latter follows the movements of the former. In order to lift and/or transport a cylinder, the red end effector always rests on the right of the cylinder and the blue one on the left.
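The pointer-to-end-effector coupling described above can be sketched as follows. This is only an illustrative sketch in Python (the original system was written in C++ with OpenGL); the names `Sphere`, `EndEffector` and `spheres_collide` are hypothetical, the collision test is a plain sphere-sphere distance check, and the attachment is assumed to persist once it has been established.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    """A sphere in the VE (hypothetical representation)."""
    x: float
    y: float
    z: float
    radius: float


def spheres_collide(a: Sphere, b: Sphere) -> bool:
    """Two spheres collide when their centers are no farther apart
    than the sum of their radii."""
    distance = math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))
    return distance <= a.radius + b.radius


class EndEffector:
    """End effector that follows its pointer after the first collision."""

    def __init__(self, sphere: Sphere):
        self.sphere = sphere
        self.attached = False  # assumption: stays True once set

    def update(self, pointer: Sphere) -> None:
        # Attach on the first collision with the corresponding pointer.
        if not self.attached and spheres_collide(pointer, self.sphere):
            self.attached = True
        # Once attached, the end effector copies the pointer position.
        if self.attached:
            self.sphere.x = pointer.x
            self.sphere.y = pointer.y
            self.sphere.z = pointer.z
```

Calling `update` once per frame with the latest tracked pointer position would be enough in this sketch; how the original software detects the collision is not stated in the paper.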

3.1 Use of Visual Aid and Oral Communication in Cooperative Work

Cooperative work is a challenging research area; for example, co-presence and awareness of the collaborator's actions are essential. Similarly, the cooperating persons need some feedback to know when they can start together, or whether there is an interruption during the task. For this purpose we exploit visual feedback (arrows and shadows) and oral communication.

If a user moves to touch a cylinder on his/her proper side, an arrow appears pointing in the direction opposite to the force applied by the end effector (see figure 2). The arrow indicates the collision between an end effector and the cylinder. Similarly, during transportation, if a user loses control of the cylinder, his/her arrow disappears and the cylinder stops moving. The second user then simply waits for the first one to come back into contact with the cylinder. In this way the two users are aware of each other's status via the arrows throughout the task.
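The arrow rule can be summarized as a small piece of state logic. The sketch below is in Python for brevity (the original software was C++/OpenGL), and the class and attribute names are hypothetical. It assumes, as the text implies, that a user's arrow is visible exactly while that user's end effector touches the cylinder, and that the cylinder can move only while both end effectors are in contact.

```python
from dataclasses import dataclass


@dataclass
class CooperationState:
    """Contact state of the two end effectors with one cylinder."""
    red_in_contact: bool = False   # expert, right side of the cylinder
    blue_in_contact: bool = False  # subject, left side of the cylinder

    def arrow_visible(self, user: str) -> bool:
        """An arrow is shown for a user only while that user's
        end effector is touching the cylinder."""
        if user == "red":
            return self.red_in_contact
        if user == "blue":
            return self.blue_in_contact
        raise ValueError(f"unknown user: {user!r}")

    def cylinder_can_move(self) -> bool:
        """The cylinder is transported only while both users hold it;
        if either loses contact it stops and the other user waits."""
        return self.red_in_contact and self.blue_in_contact
```

With this formulation, the partner's arrow doubles as a status indicator: a missing arrow tells the waiting user why the cylinder has stopped.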

In order to have the knowledge of perspective po-

sitions of various objects in the VE, we make use of

shadow (see figure 1) for all objects in the environ-

ment. The shadows not only give information about

the two end effector’s contact with cylinder but also

provide feedback about the cylinder’s position with

COOPERATIVE TELEOPERATION TASK IN VIRTUAL ENVIRONMENT - Influence of Visual Aids and Oral

Communication

375

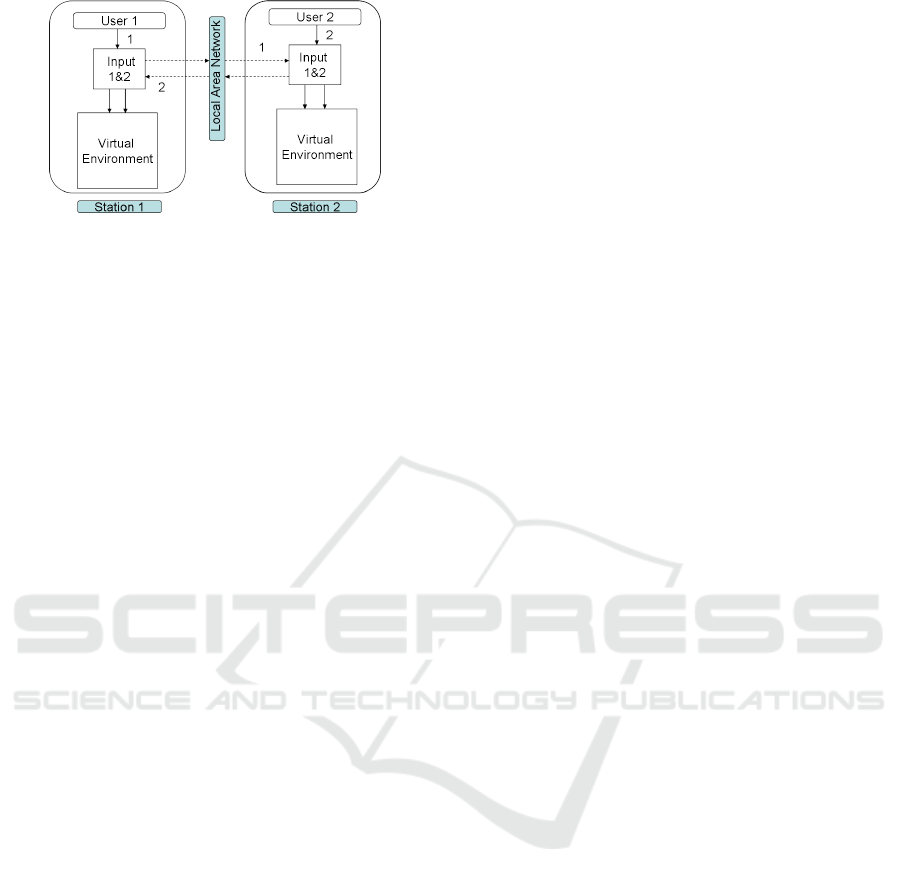

Figure 3: Illustration of the framework of cooperative vir-

tual environment.

respect to its corresponding torus during transporta-

tion.

Human beings frequently make use of oral communication while performing a collaborative and/or cooperative task. In order to accomplish the cooperative work in a more natural manner, we include a module for oral communication in our system. For this purpose we use the TeamSpeak software (www.teamspeak.com), which allows the two users to communicate over the network using a headset equipped with a microphone.

3.2 Framework for Cooperative VE

The framework plays a very important role in the success of collaborative and/or cooperative VEs. We use a fully replicated approach and install the same copy of the VE on two different machines. As figure 3 depicts, each VR station has a module that acquires the input from the local user. This input is not only applied to the local copy of the VE but is also sent to the remote station. This means that a single user simultaneously controls the movement of two pointers (in our case, spheres), one at each station, so if this pointer triggers an event at one station, the event is also applied at the other station. In order to have reliable and continuous bilateral streaming between the two stations, we use a peer-to-peer connection over the TCP protocol. It is also worth mentioning that the data frequently exchanged between the two stations are the positions of the two pointers, each of which is controlled by one user.
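A minimal sketch of this replication scheme is given below, in Python for brevity (the original software was C++). The message layout (a one-byte pointer id followed by three 32-bit floats) and all names (`Replica`, `send_position`, `recv_position`, `local_move`) are assumptions made for illustration; the paper does not specify the wire format.

```python
import socket
import struct

# Assumed wire format: pointer id (1 byte) + x, y, z (3 little-endian floats).
POSITION_FORMAT = "<B3f"
POSITION_SIZE = struct.calcsize(POSITION_FORMAT)


class Replica:
    """Local copy of the VE state that both stations keep consistent."""

    def __init__(self):
        self.pointers = {0: (0.0, 0.0, 0.0), 1: (0.0, 0.0, 0.0)}

    def apply(self, pointer_id, position):
        self.pointers[pointer_id] = position


def send_position(sock, pointer_id, position):
    """Send one pointer position to the remote station."""
    sock.sendall(struct.pack(POSITION_FORMAT, pointer_id, *position))


def recv_exact(sock, size):
    """Read exactly `size` bytes; a stream socket may deliver a message in pieces."""
    data = bytearray()
    while len(data) < size:
        chunk = sock.recv(size - len(data))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        data.extend(chunk)
    return bytes(data)


def recv_position(sock):
    """Receive one pointer position sent by the remote station."""
    pointer_id, x, y, z = struct.unpack(POSITION_FORMAT, recv_exact(sock, POSITION_SIZE))
    return pointer_id, (x, y, z)


def local_move(replica, sock, pointer_id, position):
    """Apply the local user's input to the local copy, then forward it."""
    replica.apply(pointer_id, position)
    send_position(sock, pointer_id, position)
```

Each station would call `local_move` for its own pointer and apply whatever `recv_position` returns to its own `Replica`. Because only pointer positions travel over the connection, each message in this sketch is 13 bytes. For a quick local check, `socket.socketpair()` can stand in for the TCP connection between the two stations.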

3.3 Experimental Setup

We installed the software on two Pentium 4 personal computers connected through a local network. Each machine had a 3 GHz processor and 1 GB of memory, and was equipped with standard graphics and sound cards. Both systems used a 24-inch flat LCD TV screen for display. Each VR system was also equipped with a Polhemus Patriot (www.polhemus.com) as input device. The software was developed using C++ and the OpenGL library.

4 EXPERIMENTATION

4.1 Procedure

Ten volunteers, five male and five female, participated in the experiment. They were master's and PhD students. All participants performed the experiment with the same partner, who was an expert in the domain and in the proposed system. Each participant was given a pre-trial in which he/she experienced all the feedback. The users had to start the application on their respective machines. After the network connection between the two computers was successfully established, each user could see the two spheres (red and blue) as well as the two end effectors of the SPIDARs on his/her screen. The users were then required to bring their Polhemus-controlled spheres into contact with their respective end effectors (i.e., red with red and blue with blue). The red sphere was assigned to the expert, while the subjects were in charge of the blue one. In order to pick up a cylinder, the expert had to touch it from the right while the subject rested on its left. The experiment was carried out under the following four conditions: C1 = shadow only, C2 = shadow + arrows, C3 = shadow + arrows + oral communication, C4 = no aid. All ten groups performed the experiment using distinct counterbalanced combinations of the four conditions. We recorded the task completion time for each cylinder. The time counter for a cylinder starts once the two end effectors have made initial contact with it, and stops when it is properly placed in the torus.
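The timing rule for one cylinder can be sketched as below. This is an illustrative Python sketch, not the original C++ code; `CylinderTimer` and its parameters are hypothetical names, and it assumes that the counter keeps running if a user temporarily loses contact during transport, which the paper does not state explicitly.

```python
class CylinderTimer:
    """Measures the completion time of one cylinder."""

    def __init__(self):
        self.start_time = None       # set at the first joint contact
        self.completion_time = None  # set when the cylinder is placed

    def update(self, now, red_contact, blue_contact, placed_in_torus):
        """Call once per frame with the current time in seconds."""
        if self.completion_time is not None:
            return  # already finished
        if self.start_time is None:
            # Start only when both end effectors touch the cylinder.
            if red_contact and blue_contact:
                self.start_time = now
            return
        # Assumption: losing contact later does not pause the counter.
        if placed_in_torus:
            self.completion_time = now - self.start_time
```

One timer per cylinder would be kept for each trial, giving the per-cylinder completion times analysed in Section 4.3.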

4.2 Task

The task was to cooperatively pick up a cylinder and put it into its torus. The users were required to place all the cylinders in their corresponding tori in a single trial. Each group performed exactly four trials under each condition. The order of selection was also the same for all groups, i.e., start from the red cylinder, proceed sequentially and finish at the yellow one.

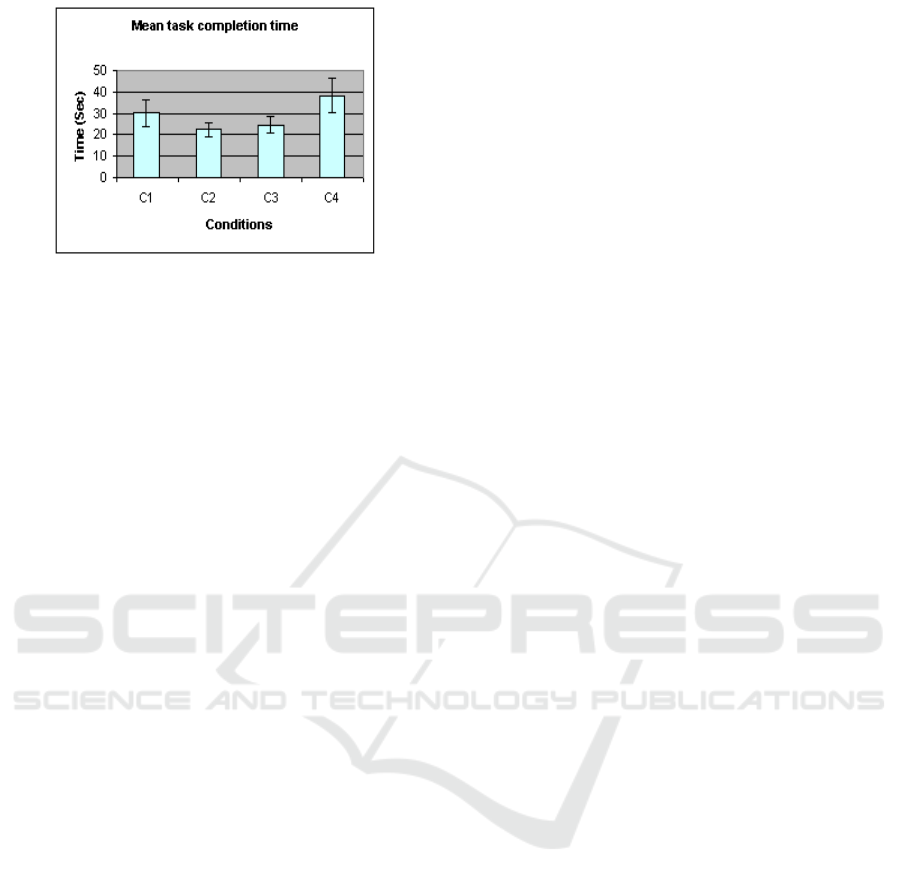

4.3 Task Completion Time

For task completion time, the ANOVA (F(3,9) = 16.02, p < 0.005) is significant. Comparing the task completion times of C1 and C2, we have 30.07 sec (std 6.17) and 22.39 sec (std 3.10) respectively, with a significant ANOVA. This result shows that the arrows have an influence on task performance. Similarly, comparing C4 (mean 38.31 sec, std 7.94) with C1 also gives a significant ANOVA. This indicates that shadow alone, as compared to no aid, also increases user performance. Comparing the mean of C2, 22.39 sec (std 3.10), with that of C3 (24.48 sec, std 3.93), the ANOVA result is not significant, which shows that users had almost the same level of performance under C2 and C3. On the other hand, the comparisons of C2 and C3 with C4 (mean 38.31 sec, std 7.94) both give statistically significant results (see figure 4).

Figure 4: Task completion time under various conditions.
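To put the reported means in proportion, the relative reduction in mean completion time with respect to the no-aid condition (C4) can be computed directly from the figures above. The short Python sketch below uses only the means reported in this section; the function name `reduction_vs_baseline` is hypothetical, and the percentages are derived here for illustration rather than reported by the authors.

```python
# Mean task completion times (seconds) as reported in Section 4.3.
MEAN_TIME_S = {"C1": 30.07, "C2": 22.39, "C3": 24.48, "C4": 38.31}


def reduction_vs_baseline(condition, baseline="C4"):
    """Relative reduction (in percent) of the mean completion time
    of `condition` compared with the `baseline` condition."""
    base = MEAN_TIME_S[baseline]
    return 100.0 * (base - MEAN_TIME_S[condition]) / base
```

Under these numbers, shadow alone (C1) corresponds to a reduction of roughly 21.5% relative to no aid, shadow plus arrows (C2) to roughly 41.6%, and the full set of aids (C3) to roughly 36.1%. These ratios say nothing about significance, which is covered by the ANOVA results above.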

5 CONCLUSIONS

In this paper we simulated the use of two string-based parallel robots in a cooperative teleoperation task, in which two users sitting at two separate machines connected through a local network each operated one robot. In addition, the article proposed the use of sensory feedback (i.e., shadows, arrows and oral communication) and investigated its effects on cooperation, co-presence and user performance. We observed that the visual cues (arrows and shadows) and oral communication greatly helped users to cooperatively manipulate objects in the VE. These aids, especially the arrows and oral communication, also enabled the users to perceive each other's actions. Our investigations will help in the development of teleoperation systems for cooperative assembly, surgical training and rehabilitation. Future work may integrate the force feedback modality.

REFERENCES

www.polhemus.com.

www.teamspeak.com/.

Alhalabi, M. O. and Horiguchi, S. (2001). Tele-handshake: A cooperative shared haptic virtual environment. In Proceedings of EuroHaptics, pages 60–64.

Basdogan, C., Ho, C.-H., Srinivasan, M. A., and Slater, M. (2001). Virtual training for a manual assembly task. In Haptics-e, volume 2.

Chastine, J. W., Brooks, J. C., Zhu, Y., Owen, G. S., Harrison, R. W., and Weber, I. T. (2005). Ammp-vis: a collaborative virtual environment for molecular modeling. In VRST '05: Proceedings of the ACM symposium on Virtual reality software and technology, pages 8–15, New York, NY, USA. ACM.

Greenhalgh, C. and Benford, S. (1995). Massive: a collaborative virtual environment for teleconferencing. ACM Transactions on Computer Human Interaction, 2(3):239–261.

Jordan, J., Mortensen, J., Oliveira, M., Slater, M., Tay, B. K., Kim, J., and Srinivasan, M. A. (2002). Collaboration in a mediated haptic environment. The 5th Annual International Workshop on Presence.

Otto, O., Roberts, D., and Wolff, R. (2006). A review on effective closely-coupled collaboration using immersive cve's. In VRCIA '06: Proceedings of the 2006 ACM international conference on Virtual reality continuum and its applications, pages 145–154. ACM.

Richard, P., Birebent, G., Coiffet, P., Burdea, G., Gomez, D., and Langrana, N. (1996). Effect of frame rate and force feedback on virtual object manipulation. PRESENCE: Massachusetts Institute of Technology, 5(1):95–108.

Richard, P., Chamaret, D., Inglese, F.-X., Lucidarme, P., and Ferrier, J.-L. (2006). Human-scale virtual environment for product design: Effect of sensory substitution. International Journal of Virtual Reality, 5(2):3744.

Sallnas, E.-L., Rassmus-Grohn, K., and Sjostrom, C. (2000). Supporting presence in collaborative environments by haptic force feedback. ACM Trans. Comput.-Hum. Interact., 7(4):461–476.

Shirmohammadi, S. and Georganas, N. D. (2001). An end-to-end communication architecture for collaborative virtual environments. Comput. Netw., 35(2-3):351–367.
