FOCUSING WEB CRAWLS ON LOCATION-SPECIFIC

CONTENT

Lefteris Kozanidis, Sofia Stamou and George Spiros

Computer Engineering and Informatics Department, Patras University, Greece

Keywords: Location-sensitive web search, Focused crawling, Geo-referenced index.

Abstract: Retrieving relevant data for location-sensitive keyword queries is a challenging task that has so far been

addressed as a problem of automatically determining the geographical orientation of web searches. Unfortu-

nately, identifying localizable queries is not sufficient per se for performing successful location-sensitive

searches, unless there exists a geo-referenced index of data sources against which localizable queries are

searched. In this paper, we propose a novel approach towards the automatic construction of a geo-referenced

search engine index. Our approach relies on a geo-focused crawler that incorporates a structural parser and

uses GeoWordNet as a knowledge base in order to automatically deduce the geo-spatial information that is

latent in the pages’ contents. Based on location-descriptive elements in the page URLs and anchor text, the

crawler directs the pages to a location-sensitive downloader. This downloading module resolves the geo-

graphical references of the URL location elements and organizes them into indexable hierarchical struc-

tures. The location-aware URL hierarchies are linked to their respective pages, resulting into a geo-

referenced index against which location-sensitive queries can be answered.

1 INTRODUCTION

Locality is an important parameter in web search.

According to the study of (Wang et al., 2005a) 14%

of web queries have geographical intentions, i.e.

they pursue the retrieval of information that relates

to a geographical area. Moreover, (Wang et al.,

2005b) found that 79% of the web pages in .gov

domain contain at least one geographical reference.

Although location-sensitive web searches are gain-

ing ground (Himmelstein, 2005), still search engines

are not very effective in identifying localizable que-

ries (Welch and Cho, 2008). As an example, con-

sider the query [pizza restaurant in Lisbon] over

Google and assume that the intention of the user

issuing the query is to obtain pages about pizza res-

taurants that are located in Lisbon, Portugal. How-

ever, the page that Google retrieves second (as of

October 2008) in the list of results is about a pizza

restaurant in Lisbon New Hampshire, although New

Hampshire does not appear in the query keywords.

Likewise, for the query [Athens city public schools]

the pages that Google returns (up to position 20) are

about schools in Athens City Alabama rather than

Athens (Greece), although Alabama is not specified

as a search keyword. As both examples demonstrate,

ignoring the geographic scope of web queries and

the geographic orientation of web pages, results into

favouring popular pages over location –relevant

pages in the search engine results. Thus, retrieval

effectives is harmed for a large number of queries.

Currently, there are two main strategies towards

dealing with location-sensitive web requests. The

first approach implies the annotation of the indexed

pages with geospatial information and the equipment

of search engines with geographic search options

(e.g. Northern Light GeoSearch). In this direction,

researchers explore the services of available gazet-

teers (i.e. geographical indices) in order to associate

toponyms (i.e. geographic names) to their actual

geographic coordinates in a map (Markowetz, et al.,

2004) (Hill, 2000). Then, they store the geo-tagged

pages in a spatial index against which geographic

information retrieval is performed. The main draw-

backs of this approach are: First, traditional gazet-

teers do not encode spatial relationships between

places and as such their applicability to web retrieval

tasks is limited. Most importantly, general-purpose

search engines perform retrieval simply by exploring

the matching keywords between documents and que-

ries and without discriminating between topically

and geographically relevant data sources.

The second strategy suggests processing both

queries and query matching pages in order to the

geographic orientation of web searches (Yu and Cai,

2007). Upon detecting the geographic scope of que-

244

Kozanidis L., Stamou S. and Spiros G.

FOCUSING WEB CRAWLS ON LOCATION-SPECIFIC CONTENT.

DOI: 10.5220/0001823002440249

In Proceedings of the Fifth International Conference on Web Information Systems and Technologies (WEBIST 2009), page

ISBN: 978-989-8111-81-4

Copyright

c

2009 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

ries, researchers have proposed different functions

for scoring the geographical vicinity between the

query and the search results in order to enable geo-

graphically-sensitive web rankings (Martins et al.,

2005) Again, such techniques, although useful, they

require extensive computations on large volumes of

data before deciphering the geographic intention of

queries and thus they have a limited scalability in

serving dynamic queries that come from different

users with varying geographic intentions.

In this paper, we address the problem of improv-

ing the geographically-oriented web searches from

the perspective of a conventional search engine that

performs keyword rather than spatial searches. In

particular, we propose a technique that automatically

builds a geo-referenced search engine index against

which localizable queries are searched. The novelty

of our approach lies on the fact that, instead of post-

processing the indexed documents in order to derive

their location entities (e.g. cities, landmarks), we

introduce a geo-focused web crawler that automati-

cally identifies pages of geographic orientation, be-

fore these are downloaded and processed by the en-

gine’s indexing modules.

In brief, our crawler operates as follows. Given a

seed list of URLs and a number of tools that are lev-

eraged from the NLP community the crawler looks

for location-specific entities in the page URLs and

anchor text. For the identification of location enti-

ties, the crawler explores the data encoded in Ge-

oWordNet (Buscaldi and Roso, 2008). Based on the

location-descriptive elements in the page’s structural

content, the crawler directs the pages to a location-

sensitive downloading module. This module re-

solves the geographic references of the identified

location elements and organizes them into indexable

hierarchical structures. Every structural hierarchy

maintains URLs of pages whose geographic refer-

ences pertain to the same place. Moreover, location-

aware pages are linked to each other according to the

proximity (either spatial or semantic) of their loca-

tion names. Based on the above process, we end up

with a geo-referenced search engine index against

which location-sensitive queries can be searched.

The rest of the paper is organized as follows. We

start with an overview of relevant works. In section

3, we introduce our geo-focused crawler and we

describe how it operates for populating a search en-

gine index with geo-referenced data. In section 4, we

discuss the advantages of our crawler and we report

some preliminary results.

2 RELATED WORK

Related work falls into two main categories, namely

focused crawling and Geographic Information Re-

trieval (GIR). GIR deals with indexing, searching

and retrieving geo-referenced information sources.

Most of the works in this direction identify the geo-

graphical aspects of the web either by focusing on

the physical location of web hosts (Borges et al.,

2007) or by processing the web pages’ content in

order to extract toponyms (Smith and Mann, 2003)

or other location-descriptive elements (Amitay et al.,

2004). Ding et al. (2000) adopted a gazetteer-based

approach and proposed an algorithm that analyzes

resource links and content in order to detect their

geographical scope. To obtain spatial data from the

web pages’ contents, Silva et al. (2006) and Fu et al.,

(2005) rely on geographic ontologies. One such on-

tology is GeoWordNet (Buscaldi and Roso, 2008)

that emerged after enriching WordNet (Fellbaum,

1998) toponyms with geographical coordinates. Be-

sides the identification of geographically-oriented

elements in the pages’ contents, researchers have

proposed various schemes for ranking search results

for location-aware queries according to their geo-

graphical relevance (Yu and Cai, 2007).

Our study relates also to existing works on focus-

ing web crawls to specific web content. In this re-

spect, most of the proposed approaches are con-

cerned with focusing web crawls on pages dealing

with specific topics (Chakrabarti et al., 1999)

(Chung and Clarke, 2002).

In the recent years, the exploitation of focused

crawlers has been addressed in the context of geo-

graphically-oriented data. Exposto et al (2005) stud-

ied distributed crawling by means of the geographi-

cal partition of the web and by considering the

multi-level partitioning of the reduced IP web link

graph. Later, Gao et al. (2006) proposed a method

for geographically focused collaborative crawling.

Their crawling strategy considers features like the

URL address of a page, content, anchor text of links,

etc. to determine the way and the order in which

unvisited URLs are listed in the crawler’s queue so

that geographically focused pages are retrieved.

Although, our study shares a common goal with

existing works on geographically-focused crawls,

our approach for identifying location-relevant web

content is novel in that it integrates GeoWordNet for

deriving the location entities that the crawler consid-

ers. This way, our method eliminates any prior need

for training the crawler with a set of pages that are

pre-classified in terms of their location denotations.

3 GEO-FOCUSED CRAWLING

To build our geo-focused crawler, there are two

challenges that we need to address: how to make the

FOCUSING WEB CRAWLS ON LOCATION-SPECIFIC CONTENT

245

crawler identify web sources of geographic orienta-

tion, and how to organize the unvisited URLs in the

crawler’s frontier so that pages of great relatedness

to the concerned locations are retrieved first.

In the course of our study, we relied on a gen-

eral-purpose web crawler that we parameterized in

order to focus its web walkthroughs on geographi-

cally specific data. In particular, we integrated to a

generic crawler a URL and anchor text parser in

order to extract lexical elements from the pages’

structural content and we used GeoWordNet as the

crawler’s backbone resource against which to iden-

tify which of the extracted meta-terms correspond to

location entities. GeoWordNet contains a subset of

WordNet synsets that correspond to geographical

entities and which are inter-connected via the hierar-

chical semantic relations. In addition, all location

entities in GeoWordNet are annotated with their

corresponding geographical coordinates.

Given a seed list of URLs, the crawler needs to

identify which of these correspond to pages of geo-

graphic orientation and thus they should be visited.

To judge that, the crawler incorporates a structural

parser that looks for the presence of location entities

in the page URL and the anchor text of the page

links. To identify location entities in the URL, the

parser simply processes the

admin-c section of the

whois entry of a URL, since in most cases this

section corresponds exactly to the location for which

the information in the page is relevant (Markowetz

et al., 2004). In addition, to detect location entities in

the anchor text of a link in a page, the parser oper-

ates within a sliding window of 50 tokens surround-

ing the link in order to extract the lexical elements

around it. To attest which of the terms in the page

URL and anchor text represent location entities, we

rely on the data encoded in GeoWordNet. The basic

steps that the crawler follows to judge if a page is

geographically-focused are illustrated in Figure 1.

The intuition behind applying structural parsing

to the URLs in the crawler’s seed list is that pages-

containing location entities in their URLs and anchor

text links, have some geographic orientation and as

such they should be visited by the crawler. Based on

the above steps, the crawler filters its seed list and

removes URLs of non-geographic orientation. The

Input: seed list of URLs (U), parser (P), GeoWordNet (GWN)

Output: annotated URLs with geographic orientation G(U)

For each URL u in U do

Use parser P to identify meta-terms

/*detect location entiries*/

For each meta-term t in u do

Query GWN using t

If found

Add t(u) in G(u)

end

end

end

Figure 1: Identifying URLs of geographic orientation.

remaining URLs, denoted as G(U) are considered to

be geographically-focused and are those on which

the crawler focuses its web visits.

Having selected the seed URLs on which the

crawler’s web walkthroughs should focus, the next

step is to organize the geographically-oriented URLs

in the crawler’s frontier. URLs’ organization practi-

cally translates into ordering URLs according to

their probability of guiding the crawler to other loca-

tion-relevant pages. Next, we present our approach

towards ordering unvisited URLs in the crawler’s

queue so as to ensure crawls of maximal coverage.

3.1 Ordering URLs in the Crawler’s

Frontier

A key element in all focused crawling applications is

ordering the unvisited URLs in the crawler’s frontier

in a way that reduces the probability that the crawler

will visit irrelevant sources. To account for that, we

have integrated in our geo-focused crawler a prob-

abilistic algorithm that estimates for every URL in

the seed list the probability that it will guide the

crawler to geographically-relevant pages. In addi-

tion, our algorithm operates upon the intuition that

the links contained in a page with high geographic-

relevance have increased probability of guiding the

crawler to other geographically oriented pages.

To derive such probabilities, we designed our al-

gorithm based on the following dual assumption.

The stronger the spatial correlation is between the

identified URL location entities, the increased the

probability that the URL points to geographic con-

tent. Moreover, the more location entities are identi-

fied in the anchor text of a link, the greater the prob-

ability that the link’s visitation will lead the crawler

to other geographically-oriented pages.

To estimate the degree of spatial correlation be-

tween the location entities identified for a seed URL,

we proceed as follows. We map the identified loca-

tion entities to their corresponding GeoWordNet

nodes and we compute the distance of their coordi-

nates, using the Map 24 AJAX API 1.28 (Map 24).

Considering that the shortest the distance between

two locations the increased their spatial correlation,

we compute the average distance between the URL

location entities and we apply the [1-avg. distance]

formula to derive their average spatial correlation.

We then normalize average values so that these

range between 1 (indicating high correlation) and 0

(indicating no correlation) and we rely on them for

inferring the probability that the considered URL

points to geographically-relevant content. This is

done by investigating how the average spatial corre-

lation of the URL location entities is skewed to-

wards 1. Intuitively, a highly skewed spatial correla-

WEBIST 2009 - 5th International Conference on Web Information Systems and Technologies

246

tion suggests that the URL has a clear geographic

orientation and thus it should be retrieved.

On the other hand, to estimate the probability

that a geographically-focused URL will guide the

crawler to other geographically-oriented pages, we

rely on the distribution of location entities in the

anchor text of the links that the page URL contains.

Recall that while processing anchor text, we have

already derived the location entities that are con-

tained in it. Our computations rely on the intuition

that the more location entities the anchor text of a

link contains, the more likely it is that the given link

will guide the crawler to a geographically oriented

page. To quantify the probability that a link in a

page points to a location-relevant resource, we com-

pute the percentage of the anchor text terms that

correspond to toponyms in GeoWordNet. This way,

the increased the fraction of toponyms in the anchor

text of a link, the greater the probability that this link

points to a geographically oriented page. Based on

the average values that the combination of the above

metrics deliver, our algorithm computes an overall

ranking score for every URL in the crawler’s seed

list and prioritizes URLs in the crawler’s queue ac-

cordingly (i.e. the URL of the highest rank value

appears first in the list). Figure 2, illustrates the steps

of our algorithm for ordering geographically-specific

URLs in the crawler’s frontier.

Based on the above process, the algorithm goes

over the data in the crawler’s seed list and estimates

for every seed URL the probability that it points to a

location-relevant page. Then, the crawler starts its

web visits from the URL with the highest probability

of being geographically-focused.

Moreover, as the crawler comes across new links

in the contents of the geographically focused pages

that it retrieves, our algorithm examines the anchor

text of these links and estimates for every link the

probability that it points to a location-relevant page.

Links with some probability of pointing to location-

relevant content are added in the crawler’s frontier

so that their pages are retrieved in future crawls. The

crawling priority of the newly added links (i.e. the

ordering of the URLs in the crawler’s frontier) is

determined by their Rank(u) values.

This way, we order URLs in the crawler’s queue

so as to ensure that every web visit remains focused

on location-specific content and that the crawler’s

frontier gets updated with new URLs that point to

geographically-specific content.

3.2 Toward a Geo-Referenced Index

So far, we have presented our geo-focused crawler

and we have described the algorithm that the crawler

integrates for organizing URLs in the frontier, so as

Input: G(U), GWN, Map24 Resource

Output: ordered URLs in the crawler’s frontier

For each URL u in U do

/*Compute coordinates of location entities in u*/

Extract all location entities t from u

For each t in u do

Query GWN using t

Retrieve coordinates of t

Add coordinates to t, c(t)

end

/*Compute avg. spatial correlation of location entities in u*/

For all c(t) in u do

Compute paired c(t) distance using Map24

Compute avg. distance of all c(T) in u

Use 1-avg.distance as avg. spatial correlation of c (T) in u

If avg. spatial correlation (u,T) >0.5

Add u to crawler’s frontier

end

end

/*Compute location focus in the anchor text of links in u*/

Extract anchor text for all links in u L(u)

For every link l in L(u) do

Compute location focus (l) =

= |# location entities in anchor (l)| / |# terms in anchor (l)|

If location focus (l) >0

Add l to crawler’s frontier

end

end

/* Compute ranking values for URLs u in frontier*/

For each u in frontier do

Compute Rank (u) =

= avg. spatial correlation (u) + location focus (u, l) /2

end

Return URLs ordered by Rank (u) values

Figure 2: Ordering URLs in the focused crawler’s frontier.

to ensure successful and affordable geographically-

specific crawls. We now turn our discussion on how

we process the crawled pages in order to index them

into a geo-referenced repository of data sources.

Crawled web pages are directed to a download-

ing module that retrieves their contextual data and

employs a vector space model (Salton et al., 1975)

for representing their contents. Every page is mod-

eled as a vector of terms that constitute the indexing

keywords of the page. To build our geo-referenced

index, we start with the identification of the page

keywords that denote location entities. In this re-

spect, we look the keywords up in GeoWordNet and

those found are extracted and further processed in

order to resolve their geographic references. Geo-

graphic references’ resolution practically translates

into determining the geographical orientation of the

page that contains the identified location entities. To

derive that, we map the location keywords of a page

to their corresponding GeoWordNet nodes and we

explore their hierarchical relations. Terms that are

linked to each other under a common location node

are deemed to be geographically relevant, i.e. they

refer to the same place.

To verbalize the geographic reference of a page,

we use the name of the location node under which

the page location entities are organized. Then, we

rely on the hierarchical relations among the location

entities of common geographic references in order to

represent the geographic orientation of the entire

page contents. That is, we model the geographic

FOCUSING WEB CRAWLS ON LOCATION-SPECIFIC CONTENT

247

orientation of a page as a small location hierarchy,

the root of which denotes the broad geographic area

to which the page refers (e.g. country name) and the

intermediate and leaf nodes represent specific loca-

tions in that area that the page discusses (e.g. city

names, landmarks, etc.). Having modelled the geo-

graphic orientation of every retrieved page as a

structural hierarchy, we label the hierarchies’ nodes

with their corresponding geographic coordinates that

we take from GeoWordNet.

At the end of this process, we end up with a set

of location hierarchies, each one representing a dif-

ferent geographical area. Every location hierarchy

constitutes a hierarchical index under which we store

the URLs of the pages that refer to that place. This

way, we end up with a geo-referenced index that

groups pages into location hierarchies according to

the relatedness of their geographic entities. This in-

dex can then be utilized for answering location-

sensitive queries.

4 DISCUSSION

In this paper, we introduced a novel approach to-

wards implementing a geo-focused web crawler and

we presented a method for building a geo-referenced

search engine index. Our crawler identifies pages of

geographic orientation simply by exploring the pres-

ence of location entities in the page URLs and an-

chor text. In this direction, the crawler consults the

data encoded in GeoWordNet and employs a number

of heuristics for deducing the pages and the order in

which these should be retrieved. Moreover, we have

presented a method for automatically building a geo-

referenced web index that conventional search en-

gines could employ for answering location-sensitive

queries. The innovations of our work pertain to the

following. First, our crawler automatically identifies

the geographic focus of a page without any prior

need for processing the page’s contents. Moreover,

our focused crawler runs completely unsupervised,

diminishing thus computational overheads associ-

ated with building training examples for learning the

crawler to detect its visitations’ foci. In addition, the

crawler directs the retrieved pages to a downloading

module, which processes their contents and indexes

them into location-aware hierarchies.

To evaluate the performance of our geo-focused

crawler, we run two preliminary experiments. In the

first experiment, we validated the crawler’s accuracy

in identifying geographically-relevant data, whereas

in the second experiment, we assessed the geo-

graphic-coverage of the crawler’s visits. To begin

with our experiments, we compiled a seed list of

URLs from which the crawler would start its web

walkthroughs. In selecting the seed URLs, we relied

on the pages organized under the Dmoz categories

[Regional: North America: United States] out of

which we picked a total set of 10 random URLs and

we used them for compiling the crawler’s seed list.

Based on these 10 seed URLs, we run our crawler

for a period of one week during which the crawler

downloaded a total set of 2.5 million pages as geo-

graphically specific data sources. Based on those

pages, we measured the accuracy of our geo-focused

crawler in retrieving geographically-relevant data.

To quantify the crawler’s accuracy, we estimated the

fraction of the pages that the crawler retrieved as

geographically relevant from all the visited pages

that have some geographic orientation. Formally, we

define accuracy as:

retrieved visited

accuracy P / P =

where |P

retieved

| denotes the number of pages that the

crawler retrieved as geographically-focused and

|P

visited

| denotes the number of geographically-

oriented pages that the crawler visited. To assess

which of the pages (visited and retrieved) do have

geographic orientation, we processed their contents

and looked for location entities among their ele-

ments, using GeoWordNet as our reference source.

Pages containing location entities in their contents

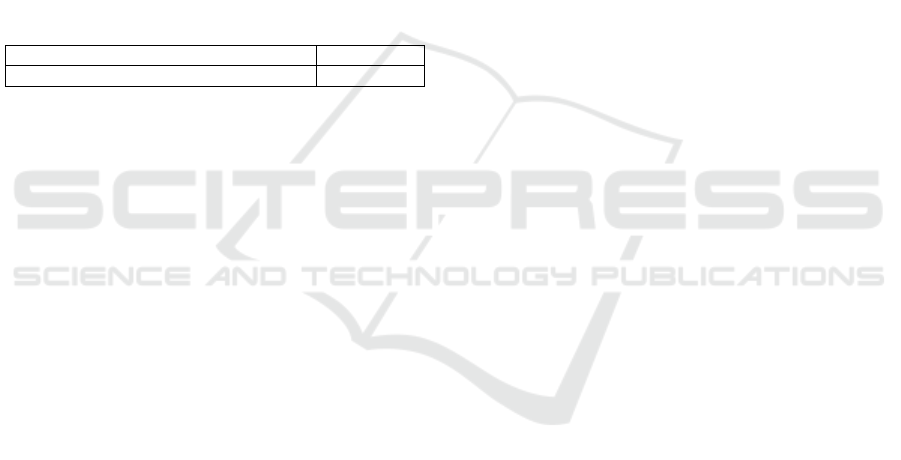

are deemed as geographically-oriented. Results, re-

ported in Table 1, indicate that our crawler has over-

all 89.28% accuracy in identifying geographically-

relevant data in its web visits.

Table 1: Geo-focused crawling accuracy.

As a second evaluation step, we estimated the

geographic coverage of the crawler’s visits, i.e. the

number of different location names that the crawler

identifies in the web pages it comes across. To quan-

tify the crawler’s geographic coverage, we measured

the fraction of distinct geographic entities in the re-

trieved pages’ contents. Formally, we compute the

crawler’s geographic coverage as:

distinct total

Coverage E / E = where E

distinct

denotes

the number of unique location entities in the page

contents and E

total

denotes the total number of loca-

tion entities. Results, reported in Table 2, show that

our geo-focused crawler can successfully retrieve

sources pertaining to distinct geographical areas,

ensuring thus complete and qualitative web crawls.

Table 2: Crawler’s coverage of location entities.

Number of all location entities identified 1,265

Number of distinct location entities 1,029

Crawler’s geographic coverage 81.34%

Geographically-oriented visited pages 2.8 million

Geographically-oriented retrieved pages 2.5 million

Geo-focused crawling accuracy 89.28%

WEBIST 2009 - 5th International Conference on Web Information Systems and Technologies

248

Finally, we compared our crawler’s accuracy in

identifying geographically relevant web pages to the

accuracy of a classification-aware crawler. For this

experiment, we built a Bayesian classifier and we

used it to score every URL in the crawler’s seed list

with respect to their corresponding geographic cate-

gories in the Dmoz directory. For scoring geo-

graphically-relevant URLs, we relied on the seman-

tic relations between the Dmoz category names and

the keywords extracted form the anchor text of the

respective URLs, using WordNet. We then used the

above set of scored URLs as the classifier’s training

data. Having trained the classifier we integrated it in

a crawling module, which we run against the seed

URLs of our previous experiment for one week. At

the end of crawling, we computed the accuracy of

the classification-aware crawler and we compared it

to the accuracy of our geo-focused crawler. In Table

3, we report the comparison results.

Table 3: Comparison results.

Geo-focused crawling accuracy 89.28%

Classification-aware crawling accuracy 71.25%

Results indicate that our geo-focused crawler has

improved performance compared to the performance

of the classifier-based crawler. This, coupled with

the fact that our geo-focused crawler does not need

to undergo a training phase imply the potential of

our geo-focused crawler towards retrieving geo-

graphically-specific web data. Currently, we are

running a large-scale focused crawling experiment

in order to evaluate the effectiveness of our ranking

algorithm in ordering URLs in the crawler’s frontier.

ACKNOWLEDGEMENTS

Lefteris Kozanidis is funded by the PENED 03ED_413

research project, co-financed 25% from the Greek Minis-

try of Development-General Secretariat of Research and

Technology and 75% from E.U.-European Social Fund.

REFERENCES

Amitay, E., Har’El, N., Silvan, R., Soffer, A. 2004. Web-

a-where: geo-tagging web content. In

Proceedings of

the 27

th

Annual Intl. SIGIR Conference.

Borges, K., Laender, A., Mederios, C., Davis, C. 2007.

Discovering geographic locations in web pages using

urban addresses. In

Proceedings of the 4

th

Intl Work-

shop on GIR

Buscaldi, D., Roso, P. 2008. Geo-WordNet: automatic

georeferencing of WordNet. In

Proceedings of the 6

th

Intl. LREC Conference.

Chakrabarti, S., van den Berg, M., Dom, B. 2000. Focused

crawling: a new approach to topic-specific web re-

sources discovery.

Computer Networks, 31(11-16):

1623-1640.

Chung, C., Clarke, C.L.A., 2002. Topic-oriented collabo-

rative crawling, In

CIKM Conference, pp. 34-42.

Ding, J., Gravano, L., Shivakumar, N. 2000. Computing

geographical scopes of web resources. In

Proceedings

of the VLDB Conference

.

Exposto, J., Macedo, J., Pina, A., Alves, A., Rufino, J.

2005. Geographical partition for distributed web

crawling. In

Proceedings of the 2

nd

GIR Workshop.

Fellbaum, Ch. (ed.) 1998.

WordNet: An Electronic Lexical

Database

, MIT Press.

Fu, G., Jones, C.R., Abdelmoty, A. 2005. Building a geo-

graphical ontology for intelligent spatial search on the

Web. In

Proceedings of the IASTED Intl. Conference

on Databases and Applications

. pp. 167-172.

Gao, W., Lee, H.C., Miao, Y. 2006. Geographically fo-

cused collaborative crawling. In

Proceedings of the

WWW Conference

.

GeoWordNet. Available at: http://www.dsic.upv.es/ gru-

pos/nle/downloads-new.html

Hill, L. 2000. Core elements of digital gazetteers: place-

ments, categories and footprints. In

Research and Ad-

vanced Technology of Digital Libraries

.

Himmelstein, M. 2005. Local search: the internet is yellow

pages. In Computer, v.38, n.2, pp. 26-34.

Map 24. Available at: http://developer.navteq.com/site/

global/zones/ms/downloads.jsp.

Markowetz, A., Brinkhoff, T., Seeger, B., 2004. Geo-

graphic information retrieval. In

Web Dynamics.

Martins, B., Silva, M.J., Andrade, L. 2005. Indexing and

ranking on Geo-IR systems. In

Proceedings of the 2

nd

Intl. Workshop on GIR

.

Salton, G., Wong, A., Yang, S.C. 1975. A vector space

model for automatic indexing. In

Communications of

the ACM,

Vol.18, No.11, pp. 631-620.

Silva, M.J., Martins, B., Chaves, M., Cardoso, N., Afonso,

A.P. 2006. Adding geographic scopes to web re-

sources. In

Computers, Environment and Urban Sys-

tems

, vol. 30, pp. 378-399.

Smith, D., Mann, G. 2003. Bootstrapping toponyms classi-

fiers. In

Proceedings of the HLT-NAACL Workshop on

Analysis of Geographic References

, pp. 45-49.

Wang, L., Wang, C., Xie, X., Forman, J., Lu, Y.S., Ma,

W,Y., Li, Y. 2005a. Detecting dominant locations

from search queries. In

Proceedings of the SIGIR Con-

ference

.

Wang, C., Xie, X., Wang, L., Lu, Y.S., Ma, W,Y. 2005b.

Detecting geographic locations from web sources. In

Proceedings of the 2

nd

Intl. Workshop on GIR.

Welch, M., Cho, J. 2008. Automatically Identifying Local-

izable Queries. In Proceedings of the SIGIR Confer-

ence.

Yu, B., Cai, G. 2007. A query-aware document ranking

method for geographic information retrieval. In

Pro-

ceedings of the 4

th

Intl Workshop on GIR.

FOCUSING WEB CRAWLS ON LOCATION-SPECIFIC CONTENT

249