IMAGE SIGNAL PROCESSING FOR VISUAL DOOR PASSING WITH AN OMNIDIRECTIONAL CAMERA

Luis-Felipe Posada, Thomas Nierobisch, Frank Hoffmann and Torsten Bertram

Chair of Control System Engineering, Technische Universität Dortmund, Dortmund, Germany

Keywords:

Omnidirectional vision, Door passing, Robotic behaviour, Visual navigation, Door detection, Visual servoing.

Abstract:

This paper proposes a novel framework for vision based door traversal that contributes to the ultimate goal of purely vision based mobile robot navigation. Door detection, door tracking and door traversal are accomplished by processing omnidirectional images. In door detection, candidate line segments detected in the image are grouped and matched with prototypical door patterns. In door localisation and tracking, a Kalman filter aggregates the visual information with the robot's odometry. Door traversal is accomplished by a 2D visual servoing approach. The feasibility and robustness of the scheme are confirmed and validated in several robotic experiments in an office environment.

1 INTRODUCTION

Vision plays an increasingly prominent role in the autonomous navigation of mobile robots (DeSouza and Kak, 2002). On the one hand, this development is driven by the exponential increase in the performance of modern cameras and computers at increasingly economical cost. On the other hand, robotics researchers are learning to harvest the broad spectrum of robust and effective low-, mid- and high-level vision algorithms developed by the computer vision community over the past two decades for the purposes of robot localisation, map building and navigation. In this context, this paper contributes to the development of vision based robot navigation.

Door traversal is a vital skill for autonomous mobile robots operating in indoor environments. Robust and reliable door passing is feasible with laser range scanners, as their angular resolution provides sufficient information to distinguish open doors from other objects such as tables and shelves. However, 2D laser range scanners are not suitable for the detection of closed or partially opened doors (Jensfelt, 2001). Moreover, equipping a mobile robot with a laser range scanner contributes significantly to the overall cost of the platform.

Door passing that relies on sonar sensors is feasible in some scenarios, but in general it is not robust and reliable enough to be applicable in all office environments (Budenske and Gini, 1994). In the context of door detection and door passing, vision provides an economical yet reliable alternative to proximity sensors such as sonar or laser range scanners. However, the robust visual detection and localization of doors remains a challenging task despite a number of successful implementations in the past (Eberst et al., 2000; Shi and Samarabandu, 2006; Stoeter et al., 2000; Patel et al., 2002; Murillo et al., 2008).

The authors in (Stoeter et al., 2000) detect doors by means of a monocular camera in conjunction with sonar. The visual door detection is restricted to large views and rests on the assumption of a priori knowledge of the door and corridor dimensions. The final door detection at close range relies on sonar information only.

In (Monasterio et al., 2002) the authors employ a Sobel edge detector combined with dilation and a filtering operation to detect doors in monocular images. The final door identification employs an artificial neural network to classify the presence or absence of a door based on the sonar data. Initially, the robot approaches the door based on the pose estimated from visual information; the final approach and door traversal rely on sonar data. The approach rests on the assumption that the robot initially already faces the door, which excludes more realistic scenarios in which the robot travels along a corridor parallel to the doors.

The door traversal approach by (Eberst et al., 2000) is robust with respect to individual pose errors, scene complexity and lighting conditions, as door hypotheses are filtered and verified for consistency across multiple views. Their door detection relies on a binocular pan-tilt camera system, whereas our approach uses an omnidirectional camera. An omnidirectional view offers the advantage that an initial scan of the environment for doors, executed by rotating the robot base, becomes unnecessary. In addition, the omniview guarantees that the door remains visible throughout the entire door traversal, whereas with a conventional perspective camera the door eventually leaves the field of view, such that the final stage of door traversal is performed open loop. The omniview also offers an advantage in scenarios with semi-open doors, in which the robot still detects the door in the rear view after it has passed the door leaf.

Felipe Posada L., Nierobisch T., Hoffmann F. and Bertram T. (2009). IMAGE SIGNAL PROCESSING FOR VISUAL DOOR PASSING WITH AN OMNIDIRECTIONAL CAMERA. In Proceedings of the Fourth International Conference on Computer Vision Theory and Applications, pages 473-480

DOI: 10.5220/0001791404730480

Copyright © SciTePress

Omnidirectional vision for door traversal has also been investigated by (Patel et al., 2002). However, their solution relies on depth information obtained from a laser sensor to guide a mobile platform through a doorway.

To the best of our knowledge, no solution has yet been proposed that handles the three problems of door detection, localisation and door traversal in a coherent, purely vision based framework. The majority of proposed solutions rely on range sensors in one way or another. Even though the underlying methods for image processing and door frame recognition are standard, our approach is novel as it provides a robust and coherent solution to the entire door detection and navigation problem relying on omnidirectional vision only.

Images contain a large amount of information, which necessitates the filtering, extraction and interpretation of those image features that are relevant to the task. Similar to other approaches in the past, our door detection scheme relies on a door frame model composed of two vertical door posts in conjunction with a horizontal top segment. The image processing of edges involves edge detection, thinning, gap bridging, pruning and edge linking. Individual edge segments are aggregated into lines by means of line approximation, line segmentation, horizontal and vertical line selection and line merging. Finally, the lines extracted from the omnidirectional image are compared with the door frame model; successful matches constitute door hypotheses. These hypotheses are tracked over multiple frames and eventually confirmed. The position of the door relative to the robot frame is estimated by a Kalman filter that aggregates the robot motion with the door perception. The vision based door recognition and traversal problem is structured into three steps: 1) door detection, 2) door localisation and tracking, and 3) door traversal, which are discussed in sections 2 and 3 and illustrated in figure 1. Section 4 reports experimental results of the door traversal in our office environment. The paper concludes with a summary and outlook in section 5.

Figure 1: A) Vision based door detection, door localisation and tracking; B) door traversal by visual servoing.

2 DOOR DETECTION

The Pioneer 3DX mobile robot is equipped with an omnidirectional camera that provides a 360° view of the scene. Catadioptric cameras employ a combination of lenses and mirrors. Our camera obeys the single viewpoint property, which is a requirement for the generation of pure perspective images from the sensed images. A formal treatment of catadioptric systems is provided by (Baker and Nayar, 1998; Geyer and Daniilidis, 2001).

The robot navigates through the environment by means of a topological map. The door detection algorithm is designed to detect doors from arbitrary robot viewpoints, including lateral and rear views.

The door detection relies on door frame recognition and thus rests on the reasonable assumption that the door frame contrasts with the surrounding background. The map provides no prior information on door locations; however, in conjunction with a mission plan it specifies whether the robot should traverse the left or the right one of two opposite doors in a corridor.

The door detection consists of three consecutive steps: image processing, line processing and door frame recognition. The image processing is executed in the image space; hence, the processed entities are pixels. The line processing is performed in Cartesian coordinates and the entities handled are lines. Finally, the detected lines and their spatial relationships are interpreted to recognize the door. Figure 2 illustrates the visual door detection with its pivotal processing steps.


2.1 Image Processing

Histogram equalization is applied to the original image $I_O$ in order to exploit the full dynamic range of gray levels. Edges define regions in the image at which the intensity level changes abruptly. Our scheme employs the well known Canny edge detection algorithm (Canny, 1986), not only because it is still the most common edge detector in the vision community, but also because it maximizes the signal-to-noise ratio and generates a single response per edge. The Canny edge detector consists of a Gaussian smoothing filter with a kernel size of nine pixels and σ = 1.85, non-maximum suppression, and hysteresis thresholding with lower and upper thresholds of 15 and 30.
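The two preprocessing stages above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: it assumes 8-bit gray values stored as a flat list, and it reads "a kernel of nine pixels" as a 9-tap Gaussian applied separably (i.e. a 9×9 kernel). With OpenCV, a comparable pipeline would roughly be `cv2.equalizeHist`, `cv2.GaussianBlur(img, (9, 9), 1.85)` and `cv2.Canny(img, 15, 30)`, although OpenCV's gradient operator and threshold scaling may not match the original setup exactly.

```python
import math


def equalize_histogram(pixels, levels=256):
    """Histogram equalization of a flat list of integer gray values in [0, levels)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution function of the gray levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    n = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:  # constant image: nothing to stretch
        return list(pixels)
    # Lookup table mapping the occupied levels onto the full range [0, levels - 1].
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1))) for c in cdf]
    return [lut[p] for p in pixels]


def gaussian_kernel_1d(size=9, sigma=1.85):
    """Normalized 1-D Gaussian; the 2-D smoothing kernel is its outer product."""
    center = (size - 1) / 2
    weights = [math.exp(-((i - center) ** 2) / (2 * sigma ** 2)) for i in range(size)]
    total = sum(weights)
    return [w / total for w in weights]
```

For example, `equalize_histogram([50, 50, 100, 150])` returns `[0, 0, 128, 255]`: the three occupied gray levels are spread over the full 0 to 255 range.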

Morphological image processing is a useful technique for noise removal, image enhancement and image segmentation. The image obtained from morphological processing, edge detection and contrast stretching is shown in figure 2b. The following morphological operations are applied to the binary edge image:

• Thinning is based on the so-called hit-or-miss transformation, which is related to erosion and dilation. The hit-or-miss transformation applies a structuring element to all pixels: where the image neighbourhood matches the structuring element, the corresponding pixel is set to foreground (1), otherwise it is set to background (0). Thinning removes the matched pixels from the edge image.

• Gap Bridging bridges disconnected groups of pixels. Gap bridging is also defined in terms of hit-or-miss transformations and is applied iteratively until it produces no further changes to the image.

• Pruning complements thinning, as it removes the spurs that remain after thinning.

• Isolated Pixel Removal eliminates all isolated foreground pixels that are surrounded by background pixels.
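As an illustration of the last operation, isolated pixel removal can be written as a single pass over the binary edge image. The sketch assumes a list-of-lists image with 1 for foreground, uses 8-connectivity, and treats pixels outside the image as background; the structuring elements the authors used for thinning, gap bridging and pruning are not given in the text and are therefore not reproduced.

```python
def remove_isolated_pixels(image):
    """Clear foreground pixels (1) whose 8 neighbours are all background (0)."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]  # work on a copy, read from the original
    for r in range(rows):
        for c in range(cols):
            if image[r][c] != 1:
                continue
            has_neighbour = any(
                image[rr][cc] == 1
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            )
            if not has_neighbour:
                out[r][c] = 0
    return out
```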

The edge detection and morphological processes characterize individual pixels as edge pixels, but do not consider their connectivity. Edge linking groups these pixels into sets of connected pixels that are better suited for subsequent processing. We apply edge linking by contour following as described in (Kovesi, 2000); the result is shown in figure 2c.
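A simplified contour follower conveys the idea of edge linking. The sketch below is hypothetical and does not reproduce Kovesi's MATLAB routine: it assumes a thinned edge image given as a collection of (row, col) pixel coordinates, starts each chain at an end point where one exists, and at junctions simply continues along one branch while the remaining branches become separate contours.

```python
def link_edges(edge_pixels):
    """Group 8-connected edge pixels into ordered chains by contour following."""
    remaining = set(edge_pixels)

    def neighbours(p):
        # Unvisited 8-neighbours of pixel p.
        r, c = p
        return [(r + dr, c + dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0) and (r + dr, c + dc) in remaining]

    contours = []
    while remaining:
        # Prefer an end point (exactly one neighbour) so open chains are traced end to end.
        start = next((p for p in sorted(remaining) if len(neighbours(p)) == 1),
                     min(remaining))
        chain = [start]
        remaining.remove(start)
        current = start
        while True:
            candidates = neighbours(current)
            if not candidates:
                break
            here = current
            # Step to the closest unvisited neighbour (4-neighbours before diagonals).
            current = min(candidates, key=lambda q: abs(q[0] - here[0]) + abs(q[1] - here[1]))
            chain.append(current)
            remaining.remove(current)
        contours.append(chain)
    return contours
```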

2.2 Line Processing

The previous operations are performed at the pixel level, whereas line processing decomposes a curved contour into a sequence of straight lines. The generated straight line segments are classified into horizontal, vertical and randomly oriented lines, of which the first two are relevant for door detection.

Several techniques for edge linking and boundary detection are reported in the literature, such as global processing via the Hough transform (Duda and Hart, 1972), RANSAC fitting, or local processing that links pixels by analyzing their relations in a small neighborhood. In our experiments, the Hough transform failed to detect many meaningful line structures required for door detection.

We employ a split approach to partition the linked contours into straight line segments. A straight line through two points $(x_1, y_1)$ and $(x_2, y_2)$ is represented by:

$$x(y_1 - y_2) + y(x_2 - x_1) + y_2 x_1 - y_1 x_2 = 0 \quad (1)$$

Contours are represented by ordered lists of connected pixels. In the first step, the contour is globally approximated by a straight line connecting the first and the final pixel. The pixel $(x, y)$ with the maximum orthogonal distance

$$d = \frac{\left| x(y_1 - y_2) + y(x_2 - x_1) + y_2 x_1 - y_1 x_2 \right|}{\sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2}} \quad (2)$$

to the line is identified. The original line is split into two straight line segments, in which the maximum distance pixel becomes the end point of the first segment and the starting point of the second segment. This segmentation is repeated recursively until all pixels lie within a distance of less than 2 pixels from their associated straight line. The straight line segmentation results are shown in figure 2d.
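The recursive split procedure can be sketched as follows, using equation (2) for the orthogonal distance and the 2-pixel tolerance stated above. Contours are assumed to be ordered lists of (x, y) pixel tuples, for instance the output of an edge-linking step; the function names are illustrative.

```python
import math


def point_line_distance(p, a, b):
    """Orthogonal distance of pixel p from the line through a and b (equation 2)."""
    (x, y), (x1, y1), (x2, y2) = p, a, b
    num = abs(x * (y1 - y2) + y * (x2 - x1) + y2 * x1 - y1 * x2)
    den = math.hypot(x1 - x2, y1 - y2)
    # Closed contours have identical end points; fall back to point distance.
    return num / den if den > 0 else math.hypot(x - x1, y - y1)


def split_contour(contour, tol=2.0):
    """Recursively split an ordered pixel list into straight (start, end) segments.

    The contour is split at the pixel of maximum distance until every pixel
    lies closer than `tol` to its associated segment.
    """
    if len(contour) < 3:
        return [(contour[0], contour[-1])]
    first, last = contour[0], contour[-1]
    distances = [point_line_distance(p, first, last) for p in contour[1:-1]]
    i_max = max(range(len(distances)), key=distances.__getitem__) + 1
    if distances[i_max - 1] < tol:
        return [(first, last)]
    # The farthest pixel ends the first part and starts the second one.
    return split_contour(contour[:i_max + 1], tol) + split_contour(contour[i_max:], tol)
```

For an L-shaped contour running from (0, 0) to (0, 4) and on to (4, 4), the corner pixel (0, 4) is farthest from the chord (about 2.83 pixels), so the contour is split there into the segments ((0, 0), (0, 4)) and ((0, 4), (4, 4)).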

The resulting lines are classified into vertical lines, which are potential candidates for door posts, horizontal lines as candidates for the horizontal top of the door case, and all other lines, which are not part of door frames.

The lines are classified according to the angle between the line itself and the radial line going through the central principal point. Lines with an angle below 15° are considered vertical and lines with an angle above 45° horizontal. All other lines are discarded. These thresholds were determined empirically in several tests with different omnidirectional images containing doors of different positions and sizes. The result of the segmentation is shown in figure 2e.
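A possible implementation of this classification is sketched below. Two points are assumptions rather than statements from the text: the radial line is taken through the segment midpoint, and the thresholds are read as "below 15°" for vertical and "above 45°" for horizontal lines. Both lines are treated as undirected, so the angle is folded into the range 0° to 90°.

```python
import math


def classify_line(p1, p2, center, vertical_max_deg=15.0, horizontal_min_deg=45.0):
    """Label a segment 'vertical', 'horizontal' or None by its angle to the radial line."""
    mx, my = (p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2
    radial = (mx - center[0], my - center[1])  # assumed: radial line through the midpoint
    direction = (p2[0] - p1[0], p2[1] - p1[1])
    norm = math.hypot(*radial) * math.hypot(*direction)
    if norm == 0:
        return None
    # Undirected angle between the two lines, folded into [0, 90] degrees.
    cos_angle = abs(radial[0] * direction[0] + radial[1] * direction[1]) / norm
    angle = math.degrees(math.acos(min(1.0, cos_angle)))
    if angle < vertical_max_deg:
        return "vertical"
    if angle > horizontal_min_deg:
        return "horizontal"
    return None
```

The rule reflects the catadioptric geometry: world-vertical edges such as door posts project approximately onto radial lines, whereas horizontal edges appear roughly tangential.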

VISAPP 2009 - International Conference on Computer Vision Theory and Applications

Figure 2: Overview of image processing steps in door detection. (a) Original image; (b) morphological processing, edge detection and contrast stretching; (c) edge linking; (d) line approximation of (c); (e) vertical/horizontal line segmentation of (d); (f) deletion of incorrect lines in (e); (g) merging collinear lines in (f); (h) double/single line segmentation of (g) and deletion of incorrect vertical lines; (i) detected door frames in (h).

The upper horizontal part of the door case is expected to emerge in the central region of the omnidirectional image, which corresponds to higher elevation. In addition, the segment is supposed to have a minimal length. Horizontal candidate lines are selected if their length is between 5% and 20% of the image radius and their distance to the image center is between 17% and 28% of the image radius. The results of the door frame line selection are shown in figure 2f.
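The selection rule for horizontal candidates translates into two range checks, sketched below. Measuring the distance to the image center at the segment midpoint is an assumption; the text does not state which point of the segment is used.

```python
import math


def is_lintel_candidate(p1, p2, center, radius,
                        length_range=(0.05, 0.20), distance_range=(0.17, 0.28)):
    """Check a horizontal segment against the length and radial-distance windows.

    Both quantities are expressed as fractions of the image radius.
    """
    length = math.dist(p1, p2) / radius
    midpoint = ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2)
    distance = math.dist(midpoint, center) / radius
    return (length_range[0] <= length <= length_range[1]
            and distance_range[0] <= distance <= distance_range[1])
```

With a 388×388 image (a radius of about 194 pixels, assuming the mirror image fills the frame), a 20-pixel horizontal segment whose midpoint lies 40 pixels from the center passes both tests.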

Since the vertical door posts are substantially longer than the top segment, it is fairly likely that the corresponding line segments are disconnected due to noise, non-uniform illumination and other effects. Therefore, it is necessary to merge disconnected vertical lines prior to the detection of vertical door posts. Lines are merged if they are collinear and the gap between the segments is small compared to their overall length. The results of vertical line merging are shown in figure 2g.
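Collinear merging can be sketched as follows. The text gives no numeric criteria, so the angular tolerance, perpendicular offset and gap ratio below are hypothetical placeholders, and segments are assumed to be non-degenerate ((x1, y1), (x2, y2)) tuples. The merged segment spans the extreme endpoints projected onto the axis of the first segment.

```python
import math


def try_merge(seg_a, seg_b, max_angle_deg=3.0, max_offset=2.0, max_gap_ratio=0.2):
    """Merge two roughly collinear segments into one, or return None.

    The three thresholds are illustrative placeholders, not values from the paper.
    """
    (ax, ay), (bx, by) = seg_a
    len_a = math.hypot(bx - ax, by - ay)
    ux, uy = (bx - ax) / len_a, (by - ay) / len_a  # unit direction of seg_a
    (cx, cy), (ex, ey) = seg_b
    len_b = math.hypot(ex - cx, ey - cy)
    # |sin| of the angle between the undirected segment directions.
    sin_angle = abs(ux * (ey - cy) - uy * (ex - cx)) / len_b
    if math.degrees(math.asin(min(1.0, sin_angle))) > max_angle_deg:
        return None
    # Position along seg_a's axis and perpendicular offset of seg_b's endpoints.
    ts, offsets = [], []
    for px, py in seg_b:
        ts.append((px - ax) * ux + (py - ay) * uy)
        offsets.append(abs((py - ay) * ux - (px - ax) * uy))
    if max(offsets) > max_offset:
        return None
    # Gap between the two intervals on the common axis (0 if they overlap).
    gap = max(0.0, min(ts) - len_a, -max(ts))
    if gap > max_gap_ratio * (len_a + len_b):
        return None
    # The merged segment spans the extreme projections on seg_a's axis.
    t_lo, t_hi = min(0.0, *ts), max(len_a, *ts)
    return ((ax + t_lo * ux, ay + t_lo * uy), (ax + t_hi * ux, ay + t_hi * uy))
```

In practice such a test would be applied repeatedly to pairs of vertical candidates until no further merges occur.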

Door frames often generate two parallel edges in the image, corresponding to the inner and outer edge of the door case. Prior to door detection, parallel nearby edges of similar length are grouped into so-called double vertical and double horizontal lines. For the purpose of door pose estimation, a double line is geometrically represented by the center line between both edges. The presence of double lines is a strong indicator for a door. Eventually, every line is either a double vertical line, a single vertical line, a double horizontal line or a single horizontal line, as shown in figure 2h.
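Grouping into double lines might look like the following sketch. The pairing criteria (angle difference, midpoint separation, length ratio) and their values are assumptions chosen for illustration; the text only states that parallel, nearby edges of similar length are grouped and that a double line is represented by the line between both edges.

```python
import math


def midpoint(seg):
    (x1, y1), (x2, y2) = seg
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def segment_angle(seg):
    (x1, y1), (x2, y2) = seg
    return math.atan2(y2 - y1, x2 - x1) % math.pi  # undirected angle in [0, pi)


def group_double_lines(segments, max_angle_deg=5.0, max_separation=6.0,
                       min_length_ratio=0.7):
    """Pair parallel, nearby segments of similar length into double lines.

    Returns (doubles, singles); each double line is the centre line between its
    two edges. The thresholds are illustrative, not taken from the paper.
    """
    used, doubles = set(), []
    for i in range(len(segments)):
        for j in range(i + 1, len(segments)):
            if i in used or j in used:
                continue
            a, b = segments[i], segments[j]
            d_angle = abs(segment_angle(a) - segment_angle(b))
            d_angle = min(d_angle, math.pi - d_angle)
            len_a, len_b = math.dist(*a), math.dist(*b)
            if (math.degrees(d_angle) > max_angle_deg
                    or math.dist(midpoint(a), midpoint(b)) > max_separation
                    or min(len_a, len_b) / max(len_a, len_b) < min_length_ratio):
                continue
            if math.dist(a[0], b[0]) > math.dist(a[0], b[1]):
                b = (b[1], b[0])  # orient b like a before averaging endpoints
            doubles.append((midpoint((a[0], b[0])), midpoint((a[1], b[1]))))
            used.update((i, j))
    singles = [s for k, s in enumerate(segments) if k not in used]
    return doubles, singles
```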

2.3 Door Frame Recognition

The final step in the door detection comprehends the

matching between plausible combinations of verti-

cal and horizontal lines with multiple potential door

frame patterns. In general a door frame is described

by two vertical and one horizontal line which end-

points coincide. In practice part of the door frame

might be occluded such that two line configurations

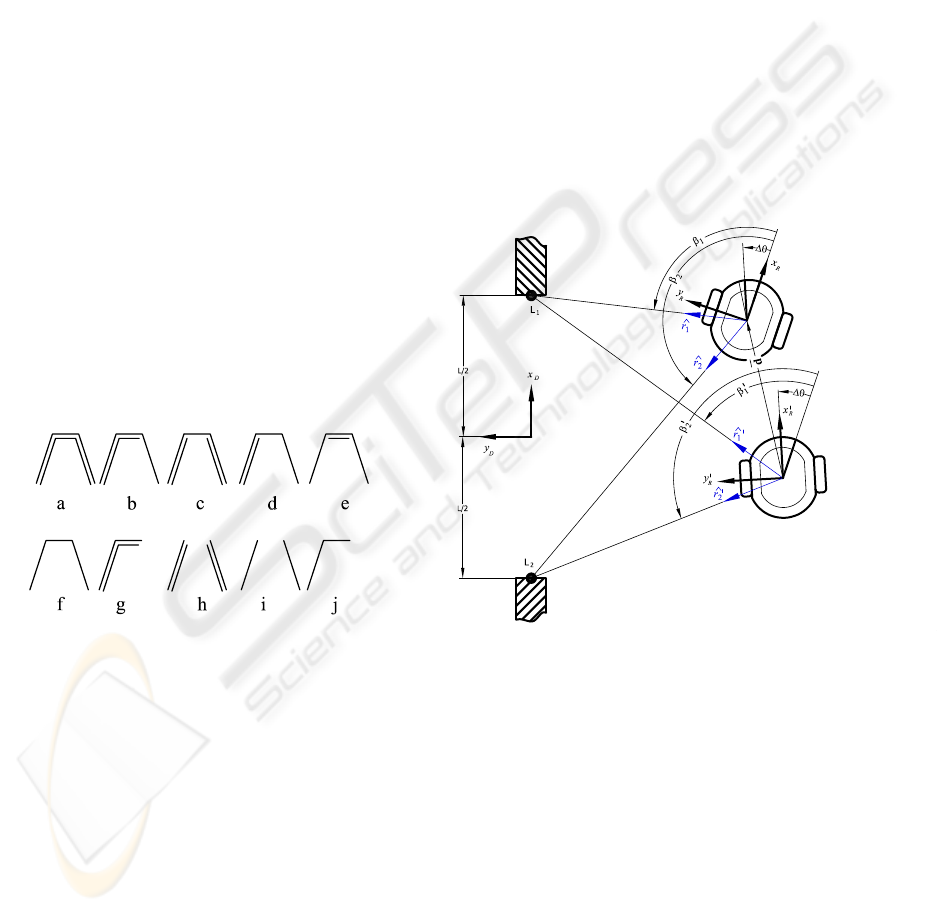

are also considered. Figure 3 shows the possible com-

binations of single and double lines that are matched

with the lines detected in the image. These door

patterns are inspired by the work of (Munoz-Salinas

et al., 2004), which defines simple and double door

frames. The patterns containing double lines are

more meaningful and are therefore matched first. The

matching proceeds from the most distinctive pattern

a) to less discriminative structure j). In our final im-

plementation only the patterns a) to h) are eventually

associated with doors as the remaining patterns i) and

j) are ambiguous and tend to produce too many false

positives. The results after the door pattern matching

are shown in figure 2i.

Figure 3: Possible door frame patterns sorted by priority.
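The patterns a) to j) of Figure 3 are not spelled out in the text, so the following sketch covers only the two generic cases described above: a full frame whose top segment connects both posts, and an occluded two-line configuration with one post and the top segment. The endpoint tolerance is hypothetical, and checking the three-line case first mirrors the ordering from the more to the less distinctive pattern.

```python
import math


def touches(point, segment, tol):
    """True if `point` lies within `tol` pixels of either endpoint of `segment`."""
    return min(math.dist(point, end) for end in segment) <= tol


def match_frame(post_a, post_b, top, tol=8.0):
    """Classify one candidate combination as 'three-line', 'two-line' or None.

    post_a / post_b are vertical segments (either may be None if occluded) and
    top is the horizontal segment; all segments are ((x1, y1), (x2, y2)).
    """
    t1, t2 = top
    # Most distinctive case first: the top segment joins both posts.
    if post_a and post_b and (
        (touches(t1, post_a, tol) and touches(t2, post_b, tol))
        or (touches(t1, post_b, tol) and touches(t2, post_a, tol))
    ):
        return "three-line"
    # Fallback for partial occlusion: one post meets one end of the top segment.
    for post in (post_a, post_b):
        if post and (touches(t1, post, tol) or touches(t2, post, tol)):
            return "two-line"
    return None
```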

3 DOOR LOCALISATION, TRACKING AND TRAVERSAL

3.1 Door Localisation and Tracking

Door localisation estimates the robot's current pose $(x, y, \theta)$ with respect to the door. With a monocular camera, the robot pose is usually recovered by triangulating features from multiple images captured from different viewpoints. In the literature this localization scheme is known as bearing-only localization. The built-in odometer estimates the relative robot motion between consecutive viewpoints. Since both the measurement and the motion are subject to noise and errors, the robot position with respect to the door is estimated with an extended Kalman filter (EKF). The state prediction of the EKF relies on the odometry motion model, which describes the relative robot motion between two consecutive poses by three basic motions: an initial rotation $\delta_{rot1}$, followed by a straight motion $\delta_{trans}$ and a final rotation $\delta_{rot2}$. The odometry model predicts the relative robot motion between consecutive states (Thrun et al., 2005):

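$$\mathbf{x}_t^- = \begin{pmatrix} x_t^- \\ y_t^- \\ \theta_t^- \end{pmatrix} = \begin{pmatrix} x_{t-1}^+ \\ y_{t-1}^+ \\ \theta_{t-1}^+ \end{pmatrix} + \begin{pmatrix} \delta_{trans} \cos(\theta_{t-1} + \delta_{rot1}) \\ \delta_{trans} \sin(\theta_{t-1} + \delta_{rot1}) \\ \delta_{rot1} + \delta_{rot2} \end{pmatrix} \quad (3)$$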

in which the superscript (−) denotes the a priori estimate of the process model and the superscript (+) indicates the a posteriori estimate after the correction step.
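Equation (3) can be sketched in Python as below. The helper that decomposes two raw odometry readings into δ_rot1, δ_trans and δ_rot2 follows the standard odometry motion model of (Thrun et al., 2005); the function names are illustrative, noise is ignored, and the heading is not wrapped to (−π, π].

```python
import math


def odometry_to_deltas(prev_pose, curr_pose):
    """Decompose two odometry readings (x, y, theta) into (rot1, trans, rot2)."""
    (x0, y0, th0), (x1, y1, th1) = prev_pose, curr_pose
    delta_trans = math.hypot(x1 - x0, y1 - y0)
    # For a pure rotation the direction of travel is undefined; attribute it all to rot2.
    delta_rot1 = math.atan2(y1 - y0, x1 - x0) - th0 if delta_trans > 1e-9 else 0.0
    delta_rot2 = th1 - th0 - delta_rot1
    return delta_rot1, delta_trans, delta_rot2


def predict(state, deltas):
    """A priori state x_t^- from the a posteriori state x_{t-1}^+ (equation 3)."""
    x, y, theta = state
    rot1, trans, rot2 = deltas
    return (x + trans * math.cos(theta + rot1),
            y + trans * math.sin(theta + rot1),
            theta + rot1 + rot2)
```

Decomposing two odometry readings and re-applying the deltas to the first reading reproduces the second one, which is a quick consistency check for the model.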

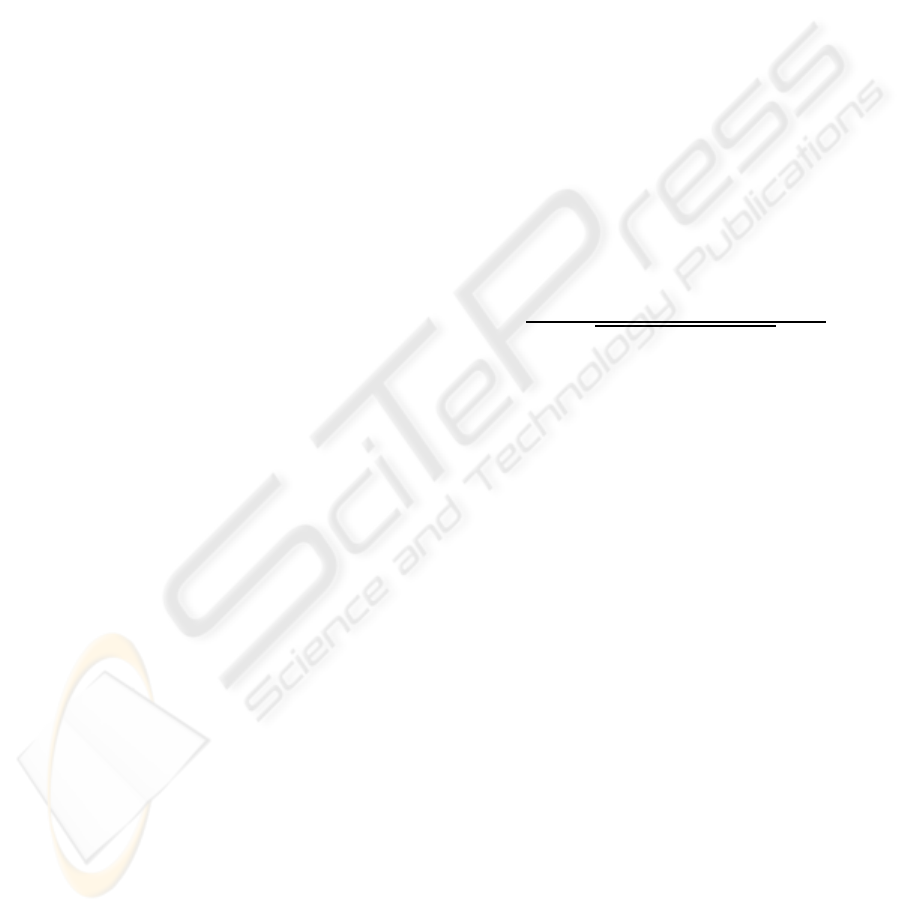

Figure 4: Robot position from bearing.

Figure 4 shows the door coordinate frame $\langle x_D, y_D \rangle$ located at the center of the door and the two robot coordinate frames at two consecutive poses, $\langle x'_R, y'_R \rangle$ and $\langle x_R, y_R \rangle$.

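The locations of the door posts $L_1, L_2$ in robocentric coordinates are represented by

$$\mathbf{r}_{1,2} = k_{1,2} \begin{pmatrix} \cos(\beta_{1,2}) \\ \sin(\beta_{1,2}) \end{pmatrix} \quad (4)$$

in which $\beta_{1,2}$ denote the measured bearing angles of the door posts and $k_{1,2}$ their unknown distances from the robot.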

The door posts $L_1, L_2$ with respect to the current robot coordinate frame are recovered by triangulation from the two robot poses according to

$$\mathbf{r}_{1,2} = k_{1,2}\,\hat{\mathbf{r}}_{1,2} = k'_{1,2}\,\hat{\mathbf{r}}'_{1,2} - \mathbf{d} \quad (5)$$

in which $\hat{\mathbf{r}}_{1,2}$ and $\hat{\mathbf{r}}'_{1,2}$ are the unit bearing vectors at the current and the previous pose, and $\mathbf{d}$ denotes the relative motion between both poses.

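Solving equations 4 and 5 for $k_{1,2}$, the door pose with respect to the current robot coordinate frame is given by:

$$\begin{pmatrix} x_d \\ y_d \\ \theta_d \end{pmatrix} = \begin{pmatrix} (k_1 \cos(\beta_1) + k_2 \cos(\beta_2))/2 \\ (k_1 \sin(\beta_1) + k_2 \sin(\beta_2))/2 \\ \arctan \dfrac{k_1 \sin(\beta_1) - k_2 \sin(\beta_2)}{k_1 \cos(\beta_1) - k_2 \cos(\beta_2)} \end{pmatrix} \quad (6)$$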
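The triangulation step and equation (6) can be sketched as follows. Equation (5) is a 2×2 linear system in k and k′, solved here with Cramer's rule. The sketch assumes that both bearings are expressed in axes of the same orientation, i.e. any rotation between the two poses has already been compensated, and it uses `atan2` instead of `arctan`, which avoids a division by zero but may differ by π depending on the order of the posts.

```python
import math


def triangulate_range(beta, beta_prev, d):
    """Solve k * r_hat(beta) = k' * r_hat(beta_prev) - d for (k, k') (equation 5).

    Assumes both bearings refer to axes of the same orientation.
    """
    det = math.sin(beta - beta_prev)
    if abs(det) < 1e-6:
        raise ValueError("bearings are (nearly) parallel; baseline too small")
    dx, dy = d
    k = (dx * math.sin(beta_prev) - dy * math.cos(beta_prev)) / det
    k_prev = (dx * math.sin(beta) - dy * math.cos(beta)) / det
    return k, k_prev


def door_pose(k1, beta1, k2, beta2):
    """Door centre and orientation in the current robot frame (equation 6)."""
    p1 = (k1 * math.cos(beta1), k1 * math.sin(beta1))
    p2 = (k2 * math.cos(beta2), k2 * math.sin(beta2))
    x_d = (p1[0] + p2[0]) / 2
    y_d = (p1[1] + p2[1]) / 2
    # atan2 replaces arctan so a door line parallel to the y-axis does not divide by zero.
    theta_d = math.atan2(p1[1] - p2[1], p1[0] - p2[0])
    return x_d, y_d, theta_d
```

For a post at (2, 1) in the current frame and a forward motion d = (1, 0), the bearings atan2(1, 2) and atan2(1, 3) yield k ≈ 2.236 and k′ ≈ 3.162, i.e. the distances √5 and √10 from the current and the previous pose.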

The robot pose with respect to the door frame, derived from the bearing angles, is computed as:

$$\mathbf{z}_t = \begin{pmatrix} -x_d \cos(\theta_d) - y_d \sin(\theta_d) \\ x_d \sin(\theta_d) - y_d \cos(\theta_d) \\ -\theta_d \end{pmatrix} \quad (7)$$
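Equation (7) inverts the door pose, expressed in the robot frame, into the robot pose expressed in the door frame. A direct transcription is sketched below; because this planar pose inversion is its own inverse, applying it twice returns the input, which makes a convenient sanity check.

```python
import math


def robot_pose_in_door_frame(x_d, y_d, theta_d):
    """Robot pose z_t in the door frame from the door pose in the robot frame (equation 7)."""
    c, s = math.cos(theta_d), math.sin(theta_d)
    return (-x_d * c - y_d * s,
            x_d * s - y_d * c,
            -theta_d)
```

For example, a door centered 2 m ahead of the robot with θ_d = π/2 gives z_t ≈ (0, 2, −π/2).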

In the correction step of the Kalman filter the a posteriori state estimate is obtained by:

$$\mathbf{x}_t^+ = \mathbf{x}_t^- + K_t (\mathbf{z}_t - \mathbf{x}_t^-) \quad (8)$$

in which the Kalman gain $K_t$ depends on the ratio of measurement and process covariance. The measurement error covariance was determined by a prior off-line analysis of the door post triangulation accuracy as $\sigma_x^2 = 1$, $\sigma_y^2 = 1$ and $\sigma_\theta^2 = 0.5$. The Kalman filter is initialized based on the first two consecutive measurements of door post bearings.
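A minimal sketch of the correction step in equation (8) is given below. It assumes that the state and measurement covariances are diagonal, so that the update decouples into three scalar updates with gain P/(P + R), and it uses the measurement variances quoted above. The prior variances in any call are hypothetical, and wrapping the heading innovation is an addition not mentioned in the text.

```python
import math

MEASUREMENT_VAR = (1.0, 1.0, 0.5)  # sigma_x^2, sigma_y^2, sigma_theta^2 from the text


def wrap_angle(a):
    """Map an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def kalman_correct(x_prior, p_prior, z, r=MEASUREMENT_VAR):
    """Per-axis update x+ = x- + K (z - x-), assuming diagonal covariances (equation 8)."""
    x_post, p_post = [], []
    for axis in range(3):
        gain = p_prior[axis] / (p_prior[axis] + r[axis])
        innovation = z[axis] - x_prior[axis]
        if axis == 2:
            innovation = wrap_angle(innovation)  # keep the heading residual in (-pi, pi]
        x_post.append(x_prior[axis] + gain * innovation)
        p_post.append((1.0 - gain) * p_prior[axis])
    return tuple(x_post), tuple(p_post)
```

With equal prior and measurement variances the gain is 0.5, so the corrected state lies halfway between prediction and measurement and the variance is halved.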

3.2 Door Traversal

Typically, the door is detected in the image for the first time at a distance of about two to three meters between robot and door. The door is tracked continuously by means of the Kalman filter while the robot continues its motion parallel to the corridor. The robot stops once it is laterally aligned with the door center. At this point it executes a 90° turn towards the door while continuously tracking its relative orientation.

Before initiating the traversal, the open door state is verified from a single image, with a sonar scan as a failsafe. The region between the door posts is analyzed in terms of its texture: a homogeneous texture indicates a closed door, whereas a random texture implies an open door. The sonar scan is merely a failsafe confirmation of the visual classification; no sonar range data is needed for controlling the subsequent traversal. The robot traverses the door at constant velocity by centering itself with respect to the continuously tracked door posts. The visual servoing controls the robot's turn rate such that both door posts remain symmetric about the robot's heading in the omnidirectional view. The Kalman filter is no longer applied, as the depth information becomes unreliable at close range and is not needed for guiding the robot through the door.
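The servoing law is not specified beyond its objective, so the following is only a hypothetical proportional controller with that objective: it drives the bisector of the two post bearings onto the robot heading. Bearings are assumed to be measured counter-clockwise from the heading in radians, and the gain and turn-rate limit are placeholder values.

```python
import math


def wrap_angle(a):
    """Map an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def turn_rate(beta_left, beta_right, gain=0.8, max_rate=0.5):
    """Hypothetical P-controller that makes the two post bearings symmetric.

    A positive result means turning counter-clockwise; gain and limit are placeholders.
    """
    # Bearing of the bisector between both posts; zero when they are symmetric.
    error = wrap_angle(beta_left + wrap_angle(beta_right - beta_left) / 2)
    return max(-max_rate, min(max_rate, gain * error))
```

Computing the bisector from the wrapped bearing difference, rather than the plain mean, keeps the error meaningful when both posts appear near ±π, for example in the rear view after passing the door leaf.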

4 EXPERIMENTAL RESULTS

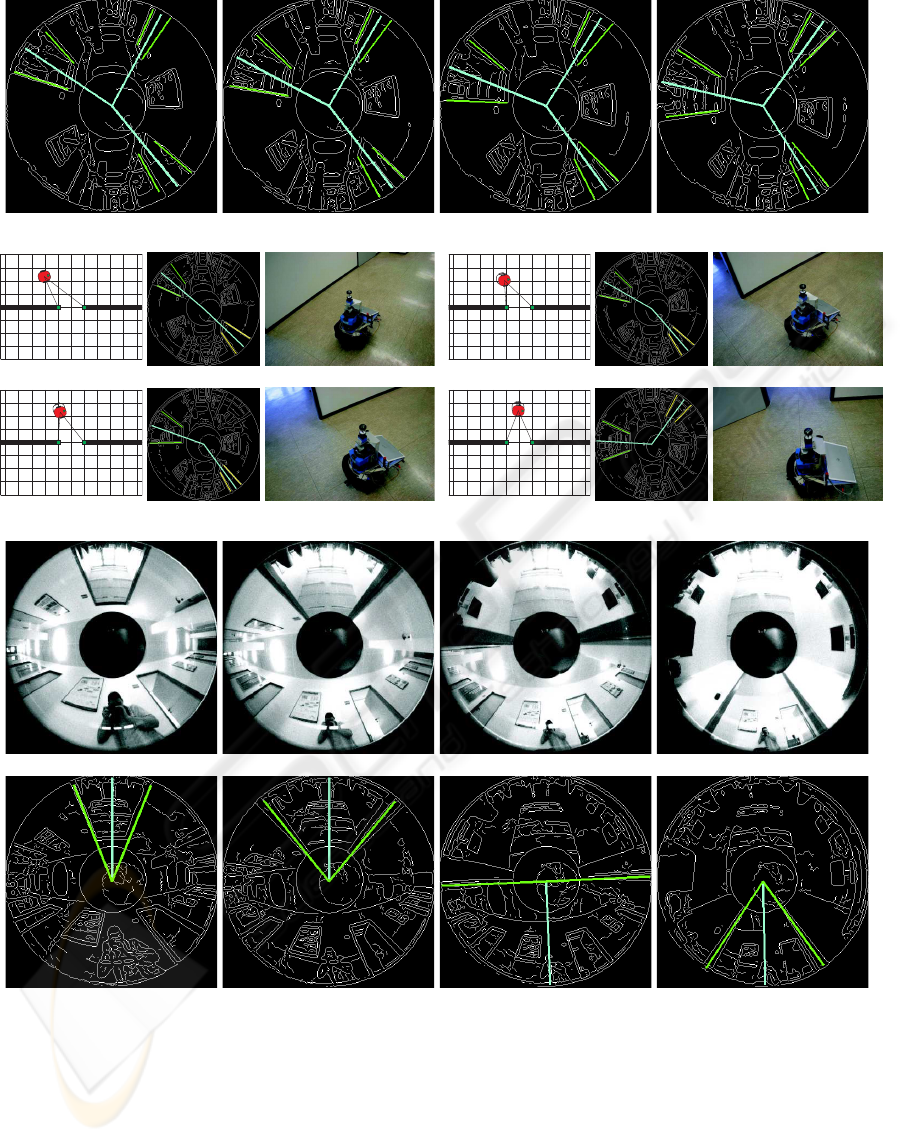

A typical scenario is depicted in Figure 5, consisting of door detection (A), door localisation and tracking (B) and door traversal (C). Similar traversal scenarios with different doors, illumination and directions of approach have been repeated successfully and consistently about a hundred times. The door detection algorithm runs at a frame rate of 20 Hz on a standard 2.4 GHz processor with an image size of 388×388 pixels. False positives and false negatives in the door detection mainly occur due to noise, occlusion, illumination effects and confusion with door-like objects such as shelves. To render the detection more robust, the door frames are tracked over consecutive images during the motion, so that initial false positives are eventually rejected in subsequent captures. This validation step is of particular importance for the Kalman filter localization.

The algorithm is tested on 1000 manually labeled images taken from video sequences captured in the office environment of our department. The environment is cluttered with objects such as pillars, cabinets or frames that could be confused with doors. The data set contains views taken from the corridor but also from the inside of offices. We assume that our vision based, topological localisation and navigation scheme guides the robot to the vicinity of the door. Thus there is no need for our scheme to detect remote or occluded doors.

The initial door detection is shown in row (A) of figure 5. On single images, false positive door detections amount to 3%, and false negatives occur 5% of the time.

Row (B) of figure 5 depicts the state estimate and measurements of the Kalman filter in terms of the robot pose relative to the door (left), the tracked door frames in the omnidirectional view (center) and an external view of the scene (right). During the tracking and approach phase the third door is occluded by a person standing in front of it. The robot proceeds along the corridor from its initial position, about 1.5 m away from the door (top left), until it is longitudinally aligned with the door center (bottom left) and completes the 90° turn (bottom right).

The door traversal stage is depicted in row (C), starting with the robot heading towards the door (left), approaching the door (center left), passing the door (center right) and after the traversal (right). Notice that at all times the detected door posts form an isosceles triangle with the image center, indicating accurate alignment of the robot with the door.


Figure 5: Experimental evaluation of the visual door passing behavior. A) Vision based door detection; B) vision based door location and tracking (pose plots with axes in m); C) visual servoing for door traversal.

5 CONCLUSIONS

This paper introduced a novel framework for vision based door traversal of a mobile robot. Door detection, door tracking and door traversal rely on omnidirectional images only. The door detection is based on matching detected line segments with prototypical door patterns. The door localisation and tracking aggregates the visual measurements with the robot's odometry information in a Kalman filter. The door traversal follows a 2D visual servoing approach with the extracted door posts as image features. The practical usefulness and robustness of the approach are confirmed in several experiments in an office environment. The proposed scheme contributes to our overall objective of achieving purely vision based robot navigation and localisation in indoor environments. It has been successfully integrated and tested with other visual behaviors such as goal point reaching and obstacle avoidance.

REFERENCES

Baker, S. and Nayar, S. (1998). A Theory of Catadioptric Image Formation. In IEEE Int. Conference on Computer Vision (ICCV), pages 35–42.

Budenske, J. and Gini, M. (1994). Why is it so difficult for a robot to pass through a doorway using ultrasonic sensors? In IEEE Int. Conference on Robotics and Automation, volume 4, pages 3124–3129.

Canny, J. (1986). A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell., 8(6):679–698.

DeSouza, G. and Kak, A. (2002). Vision for mobile robot navigation: A survey. IEEE Trans. on Pattern Analysis and Machine Intelligence, 24(2):237–267.

Duda, R. O. and Hart, P. E. (1972). Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM, 15(1):11–15.

Eberst, C., Andersson, M., and Christensen, H. (2000). Vision-based door-traversal for autonomous mobile robots. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2000), volume 1, pages 620–625.

Geyer, C. and Daniilidis, K. (2001). Catadioptric projective geometry. Int. J. of Computer Vision, 4(3):223–243.

Jensfelt, P. (2001). Approaches to Mobile Robot Localization in Indoor Environments. PhD thesis, KTH.

Kovesi, P. D. (2000). MATLAB and Octave functions for computer vision and image processing. The University of Western Australia. Available from: <http://www.csse.uwa.edu.au/~pk/research/matlabfns/>.

Monasterio, I., Lazkano, E., Rano, I., and Sierra, B. (2002). Learning to traverse doors using visual information. Trans. of Mathematics and Computers in Simulation, vol. 60.

Munoz-Salinas, R., Aguirre, E., Garcia-Silvente, M., and Gonzalez, A. (2004). Door-detection using computer vision and fuzzy logic. WSEAS Transactions on Systems, 10(3):3047–3052.

Murillo, A. C., Kosecka, J., Guerrero, J. J., and Sagues, C. (2008). Visual door detection integrating appearance and shape cues. Robotics and Autonomous Systems, 56(6):512–521.

Patel, S., Jung, S. H., Ostrowski, J. P., Rao, R., and Taylor, C. J. (2002). Sensor based door navigation for a nonholonomic vehicle. In IEEE Int. Conference on Robotics and Automation, Washington, DC, pages 3081–3086.

Shi, W. and Samarabandu, J. (2006). Investigating the performance of corridor and door detection algorithms in different environments. In Int. Conference on Information and Automation, ICIA 2006, pages 206–211.

Stoeter, S., Le Mauff, F., and Papanikolopoulos, N. (2000). Real-time door detection in cluttered environments. In Proc. IEEE Int. Symposium on Intelligent Control, pages 187–192.

Thrun, S., Burgard, W., and Fox, D. (2005). Probabilistic Robotics (Intelligent Robotics and Autonomous Agents). The MIT Press.
