DYNAMICS OF TRUST EVOLUTION

Auto-configuration of Dispositional Trust Dynamics

Christian Damsgaard Jensen and Thomas Rune Korsgaard

Department of Informatics and Mathematical Modelling

Technical University of Denmark, Bld. 321, Richard Petersens Plads, Kgs. Lyngby, Denmark

Keywords:

Security auto-configuration, trust evolution, trust dynamics.

Abstract:

Trust management has been proposed as a convenient paradigm for security in pervasive computing. The part

of a trust management system that deals with trust evolution normally requires configuration of system pa-

rameters to indicate the user’s propensity to trust other users. Such configurations are not intuitive to ordinary

people and significantly reduce the usability of the system. In this paper, we propose a dynamic trust evolution

function that requires no initial configuration, but automatically adapts the behaviour of the system based on

the user’s experiences. This makes the proposed trust evolution function particularly suitable for embedding

into mass produced consumer products.

1 INTRODUCTION

Ubiquitous computing (Weiser, 1991) offers a vision

of the world of tomorrow in which there are comput-

ing devices embedded in everything: vehicles, house-

hold equipment, home entertainment systems, med-

ical equipment, clothing and even in human beings.

These computing devices will communicate with one

another and exchange information in order to help

people with tasks in a wide range of everyday con-

texts. Some of the devices will be powerful comput-

ers, while others are tiny chips without much com-

puting power, but suitable for embedding in clothing

or within the organs of the human body. Regard-

less of their capabilities, the devices are expected to

be connected at all times and in all locations, and to

interact across traditional organisational boundaries.

Common for many of these devices is that they will

be owned, managed and operated by ordinary people

who are generally unable to understand the security

implications of configuring and using such devices. It

is therefore important to develop security abstractions

that ordinary people are able to understand and man-

age with a minimum of effort.

Trust management has been proposed as a con-

venient security metaphor that most people under-

stand and which offers the possibility of security auto-

configuration (Seigneur et al., 2003b). Existing work

on trust management (Blaze et al., 1999; Grandison

and Sloman, 2000; Cahill et al., 2003) focuses on

making specific, security related, decisions based on

general policies that do not necessarily include ex-

plicit information about context, location, credentials

or the identity of other users, e.g., entity recogni-

tion may replace entity authentication (Seigneur et al.,

2003a). In the context of trust management, it is

generally agreed that trust is formed by a number

of interaction based factors, such as personal expe-

rience, recommendations (e.g., credentials) and the

reputation of the other party, but environmental fac-

tors (situational trust) and individual factors (dispo-

sitional trust) also play an important role (McKnight

and Chervany, 1996). In particular, dispositional trust

captures the user’s propensity to trust other people,

which is an important configuration parameter in a

trust based security mechanism.

Previous work on trust evolution (Jonker and

Treur, 1999; Jonker et al., 2004; Gray et al., 2006) op-

erates with the notion of trust dynamics, which deter-

mine how trust values change over time and how trust

decays when it is not actively maintained.¹ Six different types of trust dynamics have been identified, but the trust update functions that have been proposed to implement them are all statically defined. This means that the trust update function must be configured by the users before a device is used for the first time and there is no way to account for changes in people’s dispositional trust based on their experience, e.g., to reflect that people who are frequently cheated are likely to become less trusting.

¹ This decay is often referred to as ageing or discounting of old experiences.


Damsgaard Jensen C. and Rune Korsgaard T. (2008).

DYNAMICS OF TRUST EVOLUTION - Auto-configuration of Dispositional Trust Dynamics.

In Proceedings of the International Conference on Security and Cryptography, pages 509-517

DOI: 10.5220/0001921305090517

Copyright © SciTePress

In this paper we propose a dynamic trust evolu-

tion model, which captures the way a person’s inclina-

tion to trust another person depends on the experience

gained through interactions with that other person.

This means that consistent good experiences will not

only result in a high level of trust at a given time, but

will also result in a more optimistic outlook toward

the other person, i.e., that trust will grow more rapidly

in the future compared to other people with whom the

user may have more mixed experiences. We focus this

work on the dynamics of trust evolution, which means

that we do not distinguish between the different forms

of interaction based factors. Recommendations and

reputations may be considered equivalent to personal

experience once the trust in the recommender or the

reputation system has been taken into account. One of

the defining properties of the proposed dynamic trust

evolution model is that it requires no initial configura-

tion of the individual trust factors, which means that it

is well suited for implementation in software, which

is loaded into small embedded devices developed for

a mass market.

The rest of this paper is organised as follows. Sec-

tion 2 defines the trust model and defines a simple

graphical representation to show the relationship be-

tween experience and trust. The underlying trust evo-

lution model is presented in Section 3 and the dy-

namic aspects of the trust evolution model are defined

in Section 4. A preliminary evaluation of the pro-

posed model is presented in Section 5 and our con-

clusions and some directions for future work are pre-

sented in Section 6.

2 TRUST MODEL

The trust model defines how trust is represented in

the system in the form of trust values and determines

the types of operations that can be performed on these

trust values. These operations primarily relate to the

way trust values are updated to reflect the evolution of

trust in other parties.

In this paper we follow the definition of trust val-

ues made by Stephen Marsh, who defines trust as a

variable in the open interval ]−1;1[, where -1 is com-

plete distrust and 1 is complete trust (Marsh, 1994).

The model distinguishes between entities that

have been encountered before and for whom personal

experience has been recorded and strangers for whom

an initial trust value must be based on some system

defaults. Entities about whom experience has been

recorded are entered into a Ring of Trust which stores

their trust value and the parameters that determine the

way trust in each entity evolves.

2.1 Trust Evolution

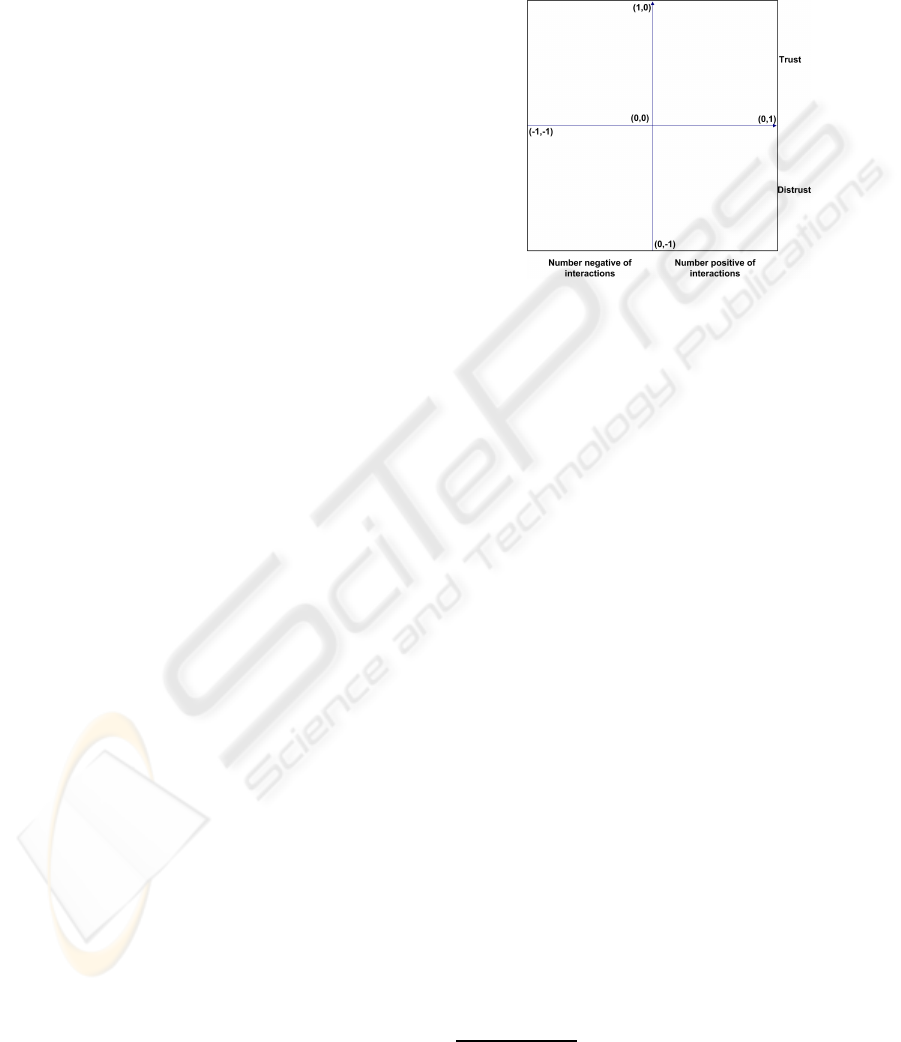

In order to facilitate our discussion of trust evolu-

tion, we introduce a two-dimensional coordinate sys-

tem from -1 to 1 on both the X-axis and the Y-axis

(cf. Figure 1).

Figure 1: Coordinate system used to represent trust.

Trust is represented as a function, where the trust value is represented on the Y-axis, determining whether the trustee is in trust or distrust.² Each trustee has her own function in this coordinate system. The X-axis is determined by the relationship between positive and negative experiences. Calculating an X value from the previous interactions makes it possible to calculate a trust value as a function of the X value.

These definitions result in a trust function that lies in the 1st quadrant and in the 3rd quadrant. The 4th quadrant is not considered, because it does not make sense to distrust an entity if there is a majority of positive experiences, which would be the case if the function was placed in the 4th quadrant. Likewise, the 2nd quadrant is not considered, because it corresponds to a scenario where the user trusts an entity despite a majority of negative interactions. The actual trust evolution function is defined in Section 4.1.

2.2 Ring of Trust

Each trustee that the trustor interacts with should have a different trust profile, and therefore a different curve, because of individual and subjective opinions about their interactions. Each curve is regarded as an instance of the trust model that represents each trustee. In order to keep track of these curves we have a trust management system. The core of this trust management system defines a Ring of Trust (RoT), which is the database that keeps track of all the information that is extracted from encounters with other users in a pervasive computing environment. The information stored in the RoT consists primarily of the number of positive and negative experiences and the parameters needed to construct the curve. This RoT is consulted in all operations relating to trust initialisation and trust updates.

² We refer to this relationship between experience and trust value as the trust evaluation function or sometimes simply the curve.

SECRYPT 2008 - International Conference on Security and Cryptography

3 TRUST EVOLUTION MODEL

The trust evolution model has three main parts:

• Initial trust. Defines how a new trustee in the Ring

of Trust is initialised.

• Trust dynamics. Defines the speed at which a trustee in the Ring of Trust progresses in trust.

• Trust evolution model. Defines the actual func-

tion used for trust evolution, which also provides

the basis for the dynamic trust evolution model

(cf. Section 4).

The first two parts are inspired by Jonker and Treur’s model of trust dynamics (Jonker and Treur, 1999), but the dynamic trust evolution model is a novel extension of the more statically defined trust update functions defined in their work. The three parts of our trust evolution model are described further in the following.

3.1 Initial Trust

Interacting with other entities in a pervasive computing environment opens the possibility for a newly encountered entity to become trusted. When a new entity is introduced into the system, it must be given an initial trust value. Jonker and Treur identify two types of initial trust: initially trusting and initially distrusting; both approaches require a configuration of trust.

The type of trust evolution function to use depends

on the user’s dispositional trust and would normally

have to be configured into all her devices when they

are initially deployed. The configuration would be

used to determine what kind of a person a user is:

• Does the user want to make quick progress or is

the user more cautious? A cautious user needs

a lot more positive interactions before she trusts

another user, than an optimistic user needs.

• Does it take long to develop trust in another per-

son or does the user only need one or two positive

results?

• When a trusted person suddenly acts differently than expected, will this destroy the relationship or is the user more forgiving, needing several betrayals in order to lose trust?

With a system that requires an initial trust config-

uration, upon installation or initiation the user would

have to decide on the questions above. This would

determine what kind of person the user is in terms of

trust and help define her trust evolution function. Peo-

ple, however, change their opinion over time to be-

come more optimistic or cautious; often without be-

ing consciously aware that they changed their mind.

Another point is that this configuration might not be

the same for all the people in the user’s ring of trusted

persons.

Our goal is to have a system, which needs no con-

figuration upon initialisation, but allows a user to de-

velop different trust dynamics toward different enti-

ties depending on their interaction history. We there-

fore define a separate trust model for each person

that the user is interacting with, hence the individual

curves in the RoT. In order to avoid configuration, the system has a neutral view of new users. Therefore, all new users are given the initial trust value 0, and since there are no interactions with a new user yet, the user starts the trust function at the point (0,0).
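As an illustration of this neutral initialisation, a minimal Python sketch of a Ring of Trust entry could look as follows. The class and field names (`TrusteeRecord`, `RingOfTrust`, `lookup`) are illustrative assumptions and are not taken from any implementation described in this paper; the shape parameter `n` is the curve parameter introduced in Section 4.1, where n = 1 gives the neutral linear curve.

```python
from dataclasses import dataclass, field


@dataclass
class TrusteeRecord:
    """State kept for one trustee (field names are illustrative)."""
    positive: int = 0   # number of positive experiences recorded
    negative: int = 0   # number of negative experiences recorded
    x: float = 0.0      # experience balance on the X-axis, in [-1, 1]
    n: float = 1.0      # curve-shape parameter; 1.0 is the neutral, linear curve


@dataclass
class RingOfTrust:
    """Maps a trustee identifier to its record."""
    records: dict = field(default_factory=dict)

    def lookup(self, trustee_id: str) -> TrusteeRecord:
        # A stranger is entered with the neutral defaults, i.e. at point (0, 0).
        return self.records.setdefault(trustee_id, TrusteeRecord())


rot = RingOfTrust()
alice = rot.lookup("alice")
print(alice.x, alice.n)
```

Keeping one record per trustee is what allows different trust dynamics to develop toward different entities without any up-front configuration.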

3.2 Trust Dynamics

Trust Dynamics describe the way positive or nega-

tive experiences influence trust. Every time a user

interacts with another entity, the user is asked to tell

whether the experience was positive or negative. This

feedback can be used to determine the kind of rela-

tionship between the trustee and the trustor. With this

feedback the trust function is also updated (cf. Sec-

tion 3.3). The following six types of trust dynamics

have been identified:

Blindly positive defines a trust profile, where a

trustor trusts a trustee blindly after a set of pos-

itive experiences. After this set of experiences the

trustee is trusted blindly for all future interactions,

no matter what.

Blindly negative defines the opposite of blindly pos-

itive. After a number of negative experiences the

trustor will never trust the trustee again, and the

trustee will have unconditional distrust, no matter

what.

Slow positive, fast negative dynamics defines a trustor that requires a lot of positive experiences to build trust in a trustee, but it only takes a few negative experiences to spoil the built-up trust.


Balanced slow defines a trustor that progresses slowly on building trust and slowly on losing trust.

Balanced fast defines a trustor that progresses fast on building trust and loses it fast as well.

Fast positive, slow negative dynamics defines a trustor that takes a few positive experiences to build trust in a trustee but takes a lot of negative experiences to spoil it again.

These six approaches describe how the trust value

on the Y-axis varies as a function of the recorded ex-

perience on the X-axis. One way to implement this,

would be to use different granularities on the X-axis,

depending on the trust dynamics, so if the user is de-

fined as fast positive and slow negative, the granular-

ity of the X-axis will be coarse, i.e., there will only be

a few steps from 0 to 1, when adding positive expe-

rience, but fine when subtracting negative experience

from the X-value.

However, this approach to trust dynamics would be difficult to implement, due to the requirement that the implemented system has to be able to work without configuration. It is difficult to determine through the user’s actions which of the six approaches the trustor would use toward a trustee.

We therefore propose an approach, where expe-

rience influences the trust dynamics by changing the

shape of the trust evolution function, thus making it

dynamic.

3.3 Trust Evolution Function

Jonker and Treur define 16 required properties for a

trust evolution function, but only 10 of these are rel-

evant for developing the trust evolution function for

our trust evolution model; the last 6 properties are

not considered, because they deal with approximation

of the trust evolution function. As we shall see later

(cf. Section 5.1), the dynamic trust evolution function

defined by our model does not originate from a data

set but from a mathematical formula, so there is no

need for approximation.

When designing a system that has no configura-

tion, it is impossible to tell what kind of person is

using the system. Therefore the system takes a neu-

tral point of view when a new user is introduced in

the Ring of Trust. The first curve is a linear func-

tion, f(x) = x, which corresponds to a balanced trust

dynamics that is neither slow nor fast.

Every time the user has an experience with an-

other entity, the user is asked to indicate whether it

was a positive or a negative experience. This feed-

back is used to update the trust evolution function, so

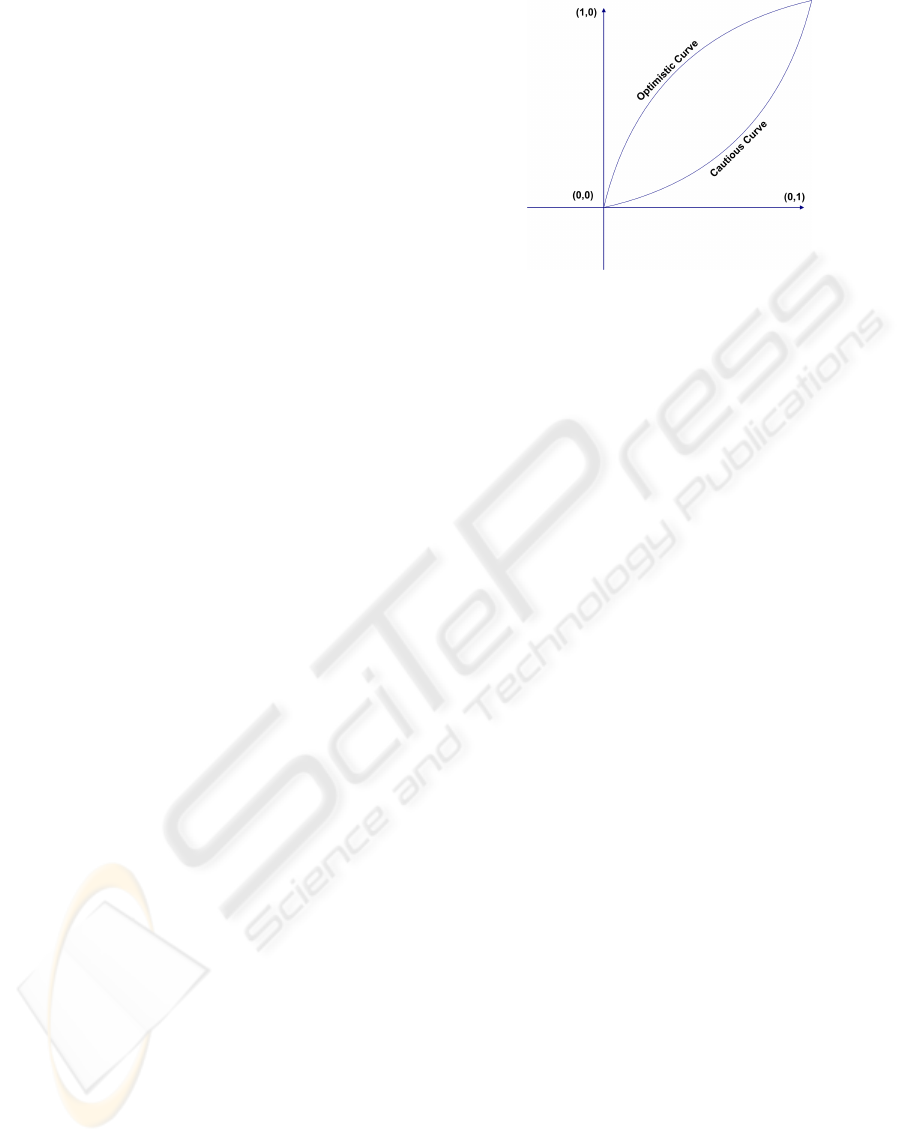

Figure 2: Trust evolution function, the cautious and the op-

timistic curve.

that it may transparently adapt to the kind of trust pro-

file the user has with respect to the other party.

In general, people can be more optimistic or cau-

tious when it comes to evolving trust. A user is op-

timistic if the user tends to trust a person based on a

few experiences. If a person is more cautious, it takes more interactions to obtain a high trust value, whereas optimistic persons reach a high trust value quickly. The optimistic and cautious curves are shown in Figure 2.

We see that both curves end in trust value 1,

but the optimistic curve will have higher trust values

while approaching 1. Basically this means that an op-

timist calculates a higher trust value based on fewer

interactions. On the other hand, this leads to trust that

is based on less experience and therefore more easily

misplaced.

4 DYNAMIC TRUST EVOLUTION

As previously mentioned, the trust evolution function

must be able to change over time based on feedback

from the user. It is sometimes possible to determine

this feedback implicitly, e.g., a music recommenda-

tion system may recommend a song to the user, but

the recommendation system may infer that this was

a poor recommendation if the user asks for another

song after a few bars. In many cases, however, the

user will have to provide this feedback explicitly. The feedback provides an idea of how willing the user is to take risks.

Basically the system must deal with six different

situations when getting feedback from the user, and

altering the user trust values in the RoT:

1. A user can be in the trust region (1

st

quadrant) and

have a positive experience.

2. A user can be in the trust region (1

st

quadrant) and

have a negative experience.

SECRYPT 2008 - International Conference on Security and Cryptography

512

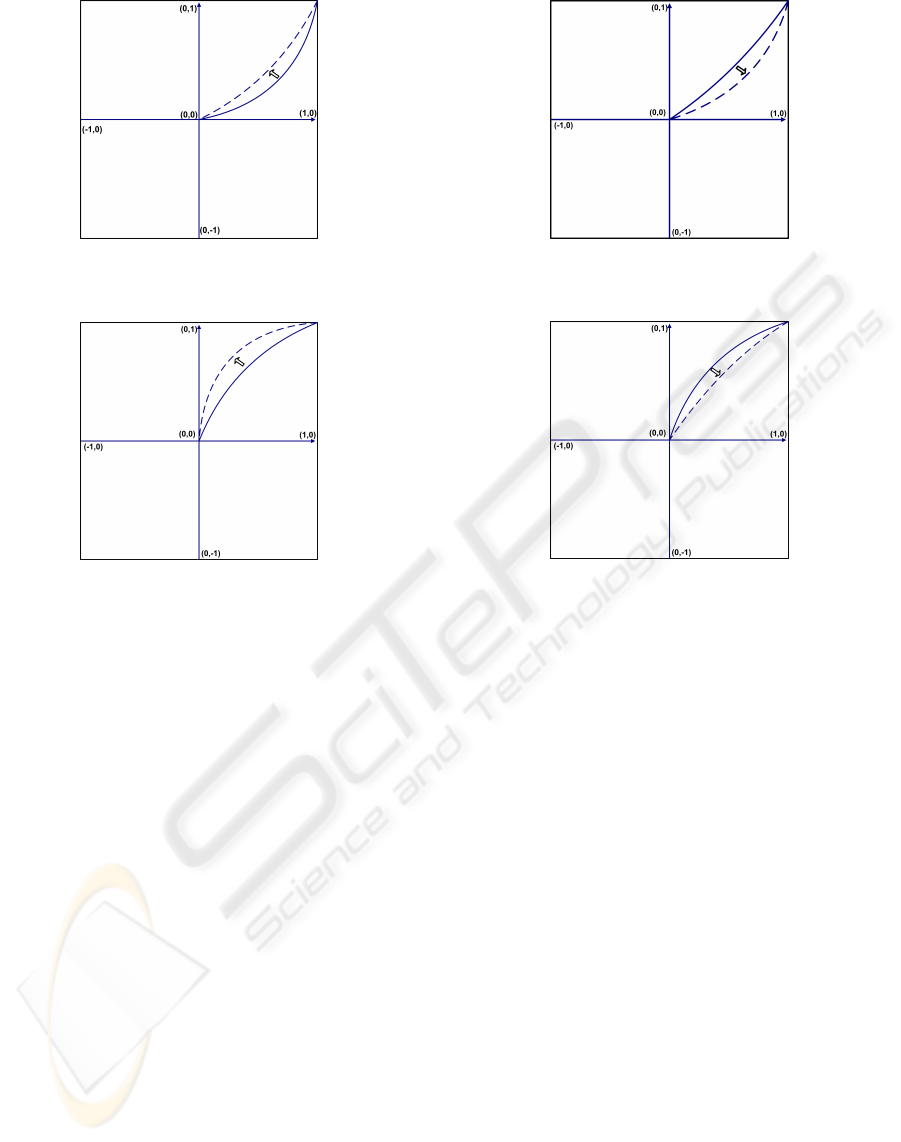

Figure 3: A user with a cautious curve has a positive expe-

rience, and the curve is updated accordingly.

Figure 4: A user with a optimistic curve has a positive ex-

perience, and the curve is updated accordingly.

3. A user can be in the distrust region (3

rd

quadrant)

and have a positive experience.

4. A user can be in the distrust region (3

rd

quadrant)

and have a negative experience.

5. The user can be in Origin (0, 0), and have a posi-

tive experience.

6. The user can be in Origin (0, 0), and have a nega-

tive experience.

In the scenario where the user is in the trust region and has a positive experience, this should lead to an improvement in trust for the entities that provided the service. For the cautious and the optimistic curve the development in the evolution curve would look like Figure 3 and Figure 4 respectively.

The evolution function extends from the full-line

curve to the dashed curve. We see that there is a dif-

ference in the progress of trust, depending on whether

the user is an optimistic person or a cautious person.

On the other hand if the experience is negative

then the trust value must be decreased for those en-

tities that provided the service which was unsatisfac-

tory; this is shown in Figure 5 and Figure 6.

The function moves from the full-line curve to the dashed, and again there is a different progression in trust depending on whether the user is cautious or optimistic.

Figure 5: A user with a cautious curve has a negative experience, and the curve is updated accordingly.

Figure 6: A user with an optimistic curve has a negative experience, and the curve is updated accordingly.

In this model it is only possible to have a cautious

curve when a negative experience has been recorded

and only possible to have an optimistic curve if there

has been a positive interaction, which corresponds

well to human intuition.

This approach for changing the trust evolution function also applies to the distrust region. If the user has a negative experience, then the trust evolution curve is pushed further down toward (0, -1) and if the user encounters a positive experience, then the curve is pushed further up toward (-1, 0).

We therefore need to define a dynamic trust evolu-

tion function that captures the behaviour that we de-

scribed above. One such function is presented in the

following.

4.1 Dynamic Trust Evolution Function

As described in Section 3.3 the initial trust function is

defined as the function f(x) = x, which gives a neutral

trust dynamics upon initialisation of a user in the RoT.

Using a simple linear formula, however does not

meet the requirements that we defined above, because

we are unable to represent the ways that a user’s pro-

DYNAMICS OF TRUST EVOLUTION - Auto-configuration of Dispositional Trust Dynamics

513

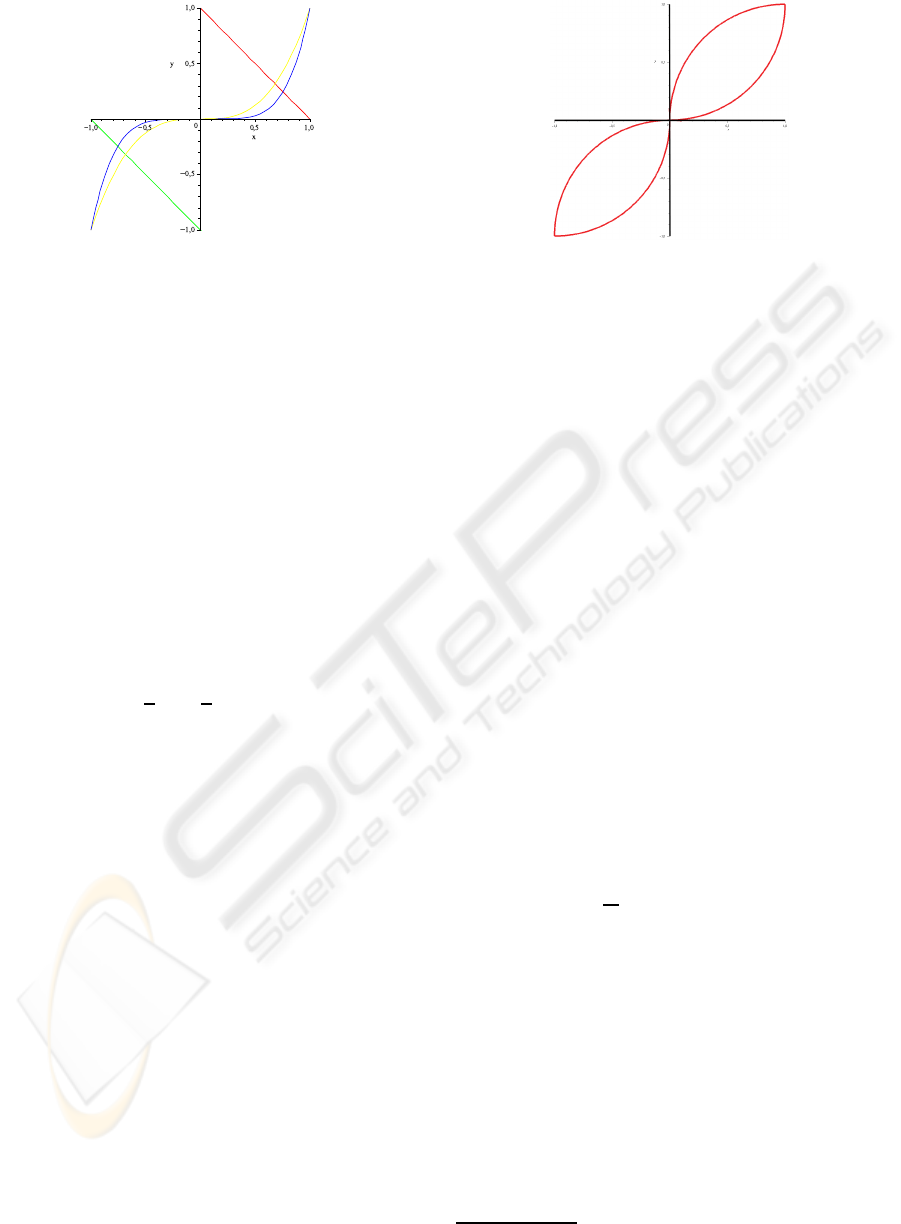

Figure 7: Trust Evolution Function represented with a poly-

nomial expression.

file is changed into a more optimistic or cautious

curve with a linear expression. Another approach

would be to represent the function as a polynomial

function with different degrees power. The disad-

vantage of using a polynomial function is that it is

not mirrored in f(x) = −x + 1 and f(x) = −x − 1,

as shown in Figure 7. By having a function expres-

sion that is not weighted equally, the steps closest to 0

on the X axis are much less significant than the steps

closest to 1 and -1, which would not be fair as step

toward trust should only depend on the curves param-

eters and not the functional expression of the curve.

A third approach (and the approach chosen) is to represent the trust function as a superellipse (or Lamé curve). The superellipse is represented by the formula:

$$\left|\frac{x}{a}\right|^{n} + \left|\frac{y}{b}\right|^{n} = 1$$

The superellipse can be adjusted by tweaking the parameters a, b and n. The parameters a and b represent the radii (semi-axes) of the superellipse, and if they are set to 1 then the radius is 1, which fits the function within the interval defined in Section 2.1. In this case it is only necessary to operate in the interval -1 to 1, and it is therefore not necessary to adjust the a and b parameters. Hence we only need to store and adjust the parameter n to manage the shape of the dynamic trust evolution function.

However, the superellipse needs some adjustments in order to fit the desired curves shown in Figures 3 to 6. Four sets of functions are defined in order to fit the different curves needed:

• Optimistic curve in trust. See Equation 1.

• Cautious curve in trust. See Equation 2.

• Optimistic curve in distrust. See Equation 3.

• Cautious curve in distrust. See Equation 4.

The four different curves are defined as follows, where the parameters a and b are set to 1:

$$|x - 1|^{n} + |y|^{n} = 1 \tag{1}$$

$$|x|^{n} + |y - 1|^{n} = 1 \tag{2}$$

$$|x|^{n} + |y + 1|^{n} = 1 \tag{3}$$

$$|x + 1|^{n} + |y|^{n} = 1 \tag{4}$$

Figure 8: The trust function plotted where a = 1, b = 1 and n = 2.

These equations can be solved and the resulting

contributions to the trust evolution function are shown

in Table 1. The curves corresponding to these equa-

tions are plotted in Figure 8, where n is 2:

This implementation has the advantage that the

neutral trust function, that has been assumed to be lin-

ear, is automatically represented for n=1.
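To make the four curves concrete, the following Python sketch evaluates Equations 1 to 4 with a = b = 1, taking for each equation the branch that lies in the 1st or 3rd quadrant. The function name `trust_value` and the explicit `optimistic` flag are assumptions made for illustration; how the curve type is selected in a running system is not prescribed by this sketch. It assumes n > 0 and x in [-1, 1].

```python
def trust_value(x: float, n: float, optimistic: bool) -> float:
    """Evaluate the trust curve at experience balance x in [-1, 1] (n > 0)."""
    if x == 0.0:
        return 0.0                                         # neutral point (0, 0)
    if x > 0.0:                                            # trust region, 1st quadrant
        if optimistic:
            return (1.0 - abs(x - 1.0) ** n) ** (1.0 / n)  # Equation 1
        return 1.0 - (1.0 - abs(x) ** n) ** (1.0 / n)      # Equation 2
    if optimistic:                                         # distrust region, 3rd quadrant
        return (1.0 - abs(x) ** n) ** (1.0 / n) - 1.0      # Equation 3
    return -((1.0 - abs(x + 1.0) ** n) ** (1.0 / n))       # Equation 4


# For n = 1 every curve collapses to the neutral line f(x) = x.
for x in (-0.5, 0.25, 0.5):
    print(x, trust_value(x, 1.0, True), trust_value(x, 1.0, False))

# For n = 2 the optimistic curve lies above the cautious one.
print(trust_value(0.5, 2.0, True), trust_value(0.5, 2.0, False))
```

With n = 2 and x = 0.5 the optimistic value is the square root of 0.75 and the cautious value is one minus that, which shows how the same experience balance yields different trust values depending on the curve.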

4.2 Trust Dynamics

In our approach to trust dynamics we have made some

assumptions based on the experimental research by

Jonker, Treur, Theeuwes and Schalken (Jonker et al.,

2004). From their research, we see that after 5 successive positive interactions and no previous interactions, the trust value is almost at a maximum. Therefore we define that after 10 successive positive interactions the trust value should be at a maximum. Based on the coordinate system where the x value goes from -1 to 1, we define a positive experience to be an increase of $\frac{1}{10}$ of the possible interval. As the experiment is based on no previous interactions, this interval is from 0 to 1, hence a positive experience should increase the x value by 0.1.

The same experimental research shows that negative interactions reach almost maximum distrust after 5 interactions as well, so we define that a negative interaction should decrease the x value by 0.1.

The dynamic trust evaluation function has to distinguish between 5 different scenarios: 1) neutral user³; 2) optimistic user in trust; 3) cautious user in trust; 4) optimistic user in distrust; 5) cautious user in distrust. The corresponding trust update functions are shown in Table 1.

³ In the first interaction all users are considered neutral, where x = 0 and n = 1.


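Table 1: Trust values for the different scenarios.

| Scenario | T(t) |
|---|---|
| 1 | $T(t) = 0$ |
| 2 | $T(t) = \left(-\lvert (x-1)^{n} \rvert + 1\right)^{1/n}$ |
| 3 | $T(t) = -\left(-\lvert x^{n} \rvert + 1\right)^{1/n} + 1$ |
| 4 | $T(t) = \left(-\lvert x^{n} \rvert + 1\right)^{1/n} - 1$ |
| 5 | $T(t) = -\left(-\lvert (x+1)^{n} \rvert + 1\right)^{1/n}$ |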

The x value and n value are updated, so that x = x + 0.1 and n = n + 0.1 if the experience was positive and x = x − 0.1 and n = n − 0.1 if it was negative.⁴
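The update rule can be sketched in Python as follows. The step of 0.1 for both x and n comes from the text; the clamping of x to [-1, 1], the lower bound `N_MIN` on n and the rounding that suppresses floating-point drift are assumptions added here so that the sketch stays well defined, and the names `update`, `STEP` and `N_MIN` are hypothetical.

```python
from typing import Tuple

STEP = 0.1    # step size for both x and n, as stated in the text
N_MIN = 0.1   # assumed lower bound that keeps the exponent n positive


def update(x: float, n: float, positive: bool) -> Tuple[float, float]:
    """Return the new (x, n) after one positive or negative experience."""
    delta = STEP if positive else -STEP
    # Rounding keeps repeated 0.1 steps from accumulating float error.
    new_x = round(x + delta, 10)
    new_n = round(n + delta, 10)
    # Assumptions: clamp x to [-1, 1] and keep n at or above N_MIN.
    new_x = max(-1.0, min(1.0, new_x))
    new_n = max(N_MIN, new_n)
    return new_x, new_n


x, n = 0.0, 1.0            # neutral starting point for a stranger
for _ in range(10):        # ten successive positive experiences
    x, n = update(x, n, positive=True)
print(x, n)
```

Starting from the neutral state, ten successive positive experiences bring x to its maximum of 1, which matches the definition that trust should be at a maximum after 10 successive positive interactions.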

The model presented in this paper does not take

temporal aspects into account. This is, however, easy

to include in the model by registering when interac-

tions take place and adjust the experience from the

interactions according to their age (this effectively

implements the forgetability proposed by Jonker et

al. (Jonker et al., 2004)). The following example il-

lustrates how this could be done.

We define that after 3 months an interaction is only worth 50% of its original value, after 9 months it is only worth 25%, and after a year it is not worth anything any more. If a person has 2 positive experiences within the last week, a negative experience that is 4 months old, a negative experience that is 10 months old and a positive experience that is 2 years old, the X value will be calculated as follows:

XValue = 0.1 + 0.1 − 0.1·50% − 0.1·25% + 0.1·0% = 0.125
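The worked example can be reproduced with a short Python sketch. The thresholds of 3, 9 and 12 months and the weights are those of the example; treating each threshold as inclusive, expressing "within the last week" as 0.25 months, and the names `age_weight` and `x_value` are assumptions made for illustration.

```python
def age_weight(age_months: float) -> float:
    """Weight of an experience as a function of its age in months."""
    if age_months >= 12:
        return 0.0    # older than a year: no longer counted
    if age_months >= 9:
        return 0.25
    if age_months >= 3:
        return 0.5
    return 1.0        # recent experiences count in full


def x_value(experiences) -> float:
    """Sum aged contributions; experiences is a list of (is_positive, age_months)."""
    total = 0.0
    for is_positive, age_months in experiences:
        sign = 1.0 if is_positive else -1.0
        total += sign * 0.1 * age_weight(age_months)
    return total


history = [(True, 0.25), (True, 0.25), (False, 4), (False, 10), (True, 24)]
print(round(x_value(history), 3))  # 0.125
```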

5 EVALUATION

The proposed dynamic trust evolution model has been

evaluated with respect to the required properties de-

fined by Jonker and Treur and a prototype has been

implemented in a recommendation system for an on-

line service.

5.1 Properties of the Trust Evolution

Function

First of all, we wish to determine whether our trust

evolution function satisfies the 10 required properties,

identified by Jonker and Treur.

⁴ The adjustment of the n value is only based on experience, but it works well for the scenarios we have tested it with.

Future Independence. Our definition of the dynamic trust evolution function only depends on previous interactions as defined by the trust dynamics.

Monotonicity. The dynamic trust evolution function

is monotonic, because the functions make sure

that a higher X value can never give a lower trust

value than the actual trust value.

Indistinguishable Past. We cannot determine the trust evolution curve (n value) or the trust dynamics (X value) by analysing the trust value.

Maximal Initial Trust. There is a maximum trust

value of 1.

Minimal Initial Trust. There is a minimum trust

value of -1.

Positive Trust Extension. The proposed trust update

function is monotone and continuous on the do-

main, so positive experiences will increase the

trust value, thus meeting the requirements of pos-

itive trust extension.

Negative Trust Extension. The proposed trust up-

date function is monotone and continuous on the

domain, so negative experiences will reduce the

trust value, thus meeting the requirements of neg-

ative trust extension.

Degree of Memory Based. The trust evolution func-

tion will forget about the past with the definition

of forgetability.

Degree of Trust Dropping. It is possible to change

the acceleration of trust dropping by the feedback

from the user. By having a larger n value on a

cautious curve trust drops faster.

Degree of Trust Gaining. It is possible to change

the acceleration of trust gaining by the feedback

from the user. By having a larger n value on an

optimistic curve trust is gained faster.

5.2 Practical Experience

The proposed dynamic trust evolution model has been

implemented in the Wikipedia Recommendation Sys-

tem (WRS) (Korsgaard and Jensen, 2008), which al-

lows users of the Wikipedia to record and share their

opinions on the quality of articles that they read in the

Wikipedia. The WRS treats the Wikipedia as a legacy

system, which means that it has been implemented

without modifying the Wikipedia infrastructure or the

underlying wiki engine. A brief overview of this work

is presented in the following, but we refer the reader to

Thomas Korsgaard’s M.Sc. Thesis (Korsgaard, 2007)

for a more detailed description and evaluation of the

system.


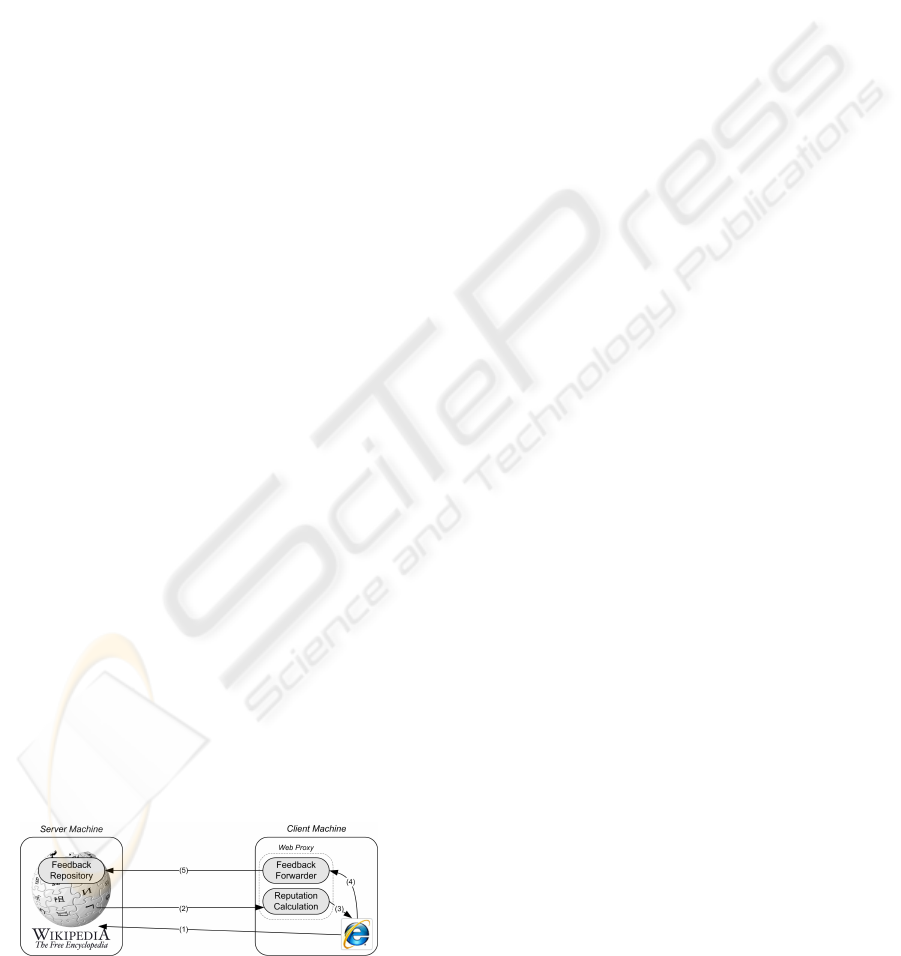

5.2.1 WRS Overview

Implementation of a recommender system on top of

a legacy web-based system requires the ability to

rewrite the content read from the Wikipedia servers

(to insert the recommendations) and to capture and

store the feedback (the recommendations) from the

clients. A simple way to do this is to insert a web-

proxy between the user and the Wikipedia. This archi-

tecture is shown in Figure 9, where the proxy executes

on the user’s own computer along with the browser.

The browser must be configured to use the local

web proxy (this is how users opt in), which inter-

cepts all requests to the Wikipedia (1). The proxy re-

trieves the article from the Wikipedia (2) along with

the recommendations that are used to calculate the

reputation score for the article. The article is rewrit-

ten to include the reputation score and forwarded to

the browser (3). The user now has an indication of the

quality of the article and may decide to provide feed-

back regarding the quality of the page and the utility

of the reputation score (4). The user’s indication of

the utility of the score is used to determine whether

the recommendation provided a positive or negative

experience and the user’s own rating is stored in the

feedback repository in the Wikipedia (5). The different components are described in greater detail in the following.

5.2.2 Recommendation Repository

Treating the Wikipedia as a legacy system means

that there is no access to the Wikipedia’s underly-

ing SQL database, so recommendations have to be

stored somewhere else. There are two obvious so-

lutions to this problem, either to develop a separate

decentralized database infrastructure or to store the

recommendations as HTML comments in the pages

of the Wikipedia.

A scheme with decentralised databases, where each user keeps a private database of ratings given to articles, introduces a series of problems. First of all, the amount of data kept may grow very large and the database can become too big for private users to maintain. Secondly, it introduces a problem with exchanging ratings between users, which is made more difficult by the fact that not all users are online all the time, so some ratings are not available all the time. Finally, decentralised databases open up potential security flaws, because users must provide external access to a database on their personal computer.

Figure 9: Overview of the Wikipedia Recommender System.

Storing recommendations directly in Wikipedia

pages benefits from the fundamental Wiki philosophy

of providing a central repository of information that

all users can easily modify. Moreover, storing rec-

ommendations in HTML comments means that they

will not be shown by existing web browsers, so the

WRS is invisible to users who do not participate in

the system. This also means that comments are al-

ways online and recommendations are only stored in

one place. The downside to this approach is that rec-

ommendations have to be protected against fabrica-

tion and modification attacks, but this may be done

by standard cryptographic techniques for authenticity

and authentication as described elsewhere (Korsgaard

and Jensen, 2008). Storing recommendations in the Wikipedia also leaves them open to vandalism, but it is easy to revert an article to an earlier version if the page is vandalised. In practice, a vandalised Wikipedia page is normally restored quite quickly (eBlogger, 2005).
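To illustrate the idea of keeping recommendations in HTML comments, the following minimal sketch appends a recommendation to a page and reads it back. The comment marker `WRS-REC`, the JSON encoding and both function names are assumptions made for this example; the layout actually used by the WRS is not specified here.

```python
from __future__ import annotations

import json
import re

# Hypothetical marker and layout; the text does not specify the comment format.
_PREFIX = "WRS-REC"
_PATTERN = re.compile(r"<!--\s*WRS-REC\s+(.*?)\s*-->", re.DOTALL)


def embed_recommendation(page_html: str, rec: dict) -> str:
    """Append one recommendation to the page source as an HTML comment."""
    payload = json.dumps(rec, sort_keys=True)
    # "--" is not allowed inside an HTML comment, so such payloads are refused.
    if "--" in payload:
        raise ValueError("payload must not contain '--'")
    return f"{page_html}\n<!-- {_PREFIX} {payload} -->"


def extract_recommendations(page_html: str) -> list[dict]:
    """Return every embedded recommendation found in the page source."""
    return [json.loads(raw) for raw in _PATTERN.findall(page_html)]
```

Because the recommendation lives inside a comment, a browser that knows nothing about the WRS renders the page unchanged, while a participating client can recover the records with `extract_recommendations`.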

5.2.3 Reputation Calculation

The calculation of a reputation value for an article

is based on the recommendation repository, which

stores all the ratings that other users have given the ar-

ticle. Each recommendation consists of five elements:

The mark: The rating that the user has given the article.

The user: The registered Wikipedia user name of the user who gave the mark. The name is chosen by the user when registering with the Wikipedia, but there may be no link to the user’s real identity.

The version: The version number of the article that the recommendation relates to.

The article name: The name of the article is inserted into the recommendation to prevent recommendations from being copied to other articles.

The hash: The hash protects the integrity of the user name, the mark and the version. The title of the Wikipedia article, the mark, and the version are concatenated and signed with a self-signed certificate, where the public key is kept on the user’s personal user page. This prevents ratings from being tampered with, moved to other pages or moved to a later version of an article.
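The five elements can be pictured as a small record. The sketch below is an illustration under stated assumptions, not the WRS implementation: the field types, the `|` separator and the use of SHA-256 are hypothetical, and the public-key signature over the concatenated fields is deliberately left out, so only the digest that would be signed is computed. Following the description above, the payload covers the article title, the mark and the version.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    mark: int      # rating given to the article (the scale is an assumption)
    user: str      # registered Wikipedia user name
    version: int   # article version the rating refers to
    article: str   # article title, binds the rating to one page

    def signed_payload(self) -> bytes:
        """Concatenate title, mark and version; the '|' separator is assumed."""
        return f"{self.article}|{self.mark}|{self.version}".encode("utf-8")

    def digest(self) -> str:
        """Digest of the payload; a real system would sign this value."""
        return hashlib.sha256(self.signed_payload()).hexdigest()


def matches(rec: Recommendation, stored_digest: str) -> bool:
    """Check that a record still corresponds to the digest stored with it."""
    return hmac.compare_digest(rec.digest(), stored_digest)
```

Changing the mark, copying the record to another article, or attaching it to a later version each produces a different digest, which is the property the hash element is meant to provide.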

SECRYPT 2008 - International Conference on Security and Cryptography


The first three elements are used to calculate the

reputation, while the two last elements are included

to ensure the integrity and authenticity of the recom-

mendations. The calculation of the reputation score

is based on the dynamic trust evolution model defined

in this paper.
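As a purely illustrative sketch of how the first three elements might be combined, the code below computes a trust-weighted average of the marks. It is not the dynamic trust evolution function defined earlier in the paper: the weighting scheme, the per-user trust values in the range 0 to 1, and the decision to count only ratings for the current version are all assumptions made for this example.

```python
from __future__ import annotations

from typing import Iterable, Optional


def reputation(
    ratings: Iterable[tuple[float, str, int]],
    trust: dict[str, float],
    current_version: int,
) -> Optional[float]:
    """Trust-weighted mean of marks; each rating is (mark, user, version).

    Assumptions: unknown users get weight 0, and ratings that refer to
    another version of the article are ignored.
    """
    total = 0.0
    weight_sum = 0.0
    for mark, user, version in ratings:
        if version != current_version:
            continue
        weight = trust.get(user, 0.0)
        total += weight * mark
        weight_sum += weight
    # No usable rating means no reputation score can be given.
    return total / weight_sum if weight_sum > 0 else None
```

In such a scheme, the trust value held for each recommender would be the quantity that the trust evolution function updates after every positive or negative experience.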

5.2.4 Summary

The dynamic trust evolution model was simple to implement and required no particular configuration by the users of the WRS. Our preliminary evaluation of the ratings of recommendations (trust values) delivered by the WRS indicates that the model behaves as expected and that the results conform to human intuition.

6 CONCLUSIONS

In this paper, we addressed the problem of auto-configuration of a trust-based security mechanism for pervasive computing. We presented a novel dynamic trust evolution function that requires no initial configuration by the user, which makes it particularly suitable for embedding into mass-produced consumer products. Our evaluation shows that the proposed function satisfies all the requirements of a trust evolution function, and preliminary experiments indicate results that correspond to human intuition.

Directions for future work include an extension of the proposed dynamic trust evolution function to incorporate temporal aspects of trust, such as ageing and forgettability, along the lines suggested in Section 4.2. We would also like to explore different rates of adjustment of the shape of the dynamic trust evolution function, i.e., the rate of change of the n value.

REFERENCES

Blaze, M., Feigenbaum, J., Ioannidis, J., and Keromytis, A.

(1999). The role of trust management in distributed

systems security. In Secure Internet Programming,

volume 1603 of Lecture Notes in Computer Science,

pages 185–210. Springer Verlag.

Cahill, V., Gray, E., Seigneur, J.-M., Jensen, C., Chen,

Y., Shand, B., Dimmock, N., Twigg, A., Bacon,

J., English, C., Wagealla, W., Terzis, S., Nixon, P.,

di Marzo Serugendo, G., Bryce, C., Carbone, M.,

Krukow, K., and Nielsen, M. (2003). Using trust for

secure collaboration in uncertain environments. IEEE

Pervasive Computing, 2(3):52–61.

eBlogger (2005). EBlogger: On vandalism.

http://eblogger.blogsome.com/2005/10/24/on-vandalism, accessed 10 June 2008.

Grandison, T. and Sloman, M. (2000). A survey of trust in

Internet applications. IEEE Communications Surveys

& Tutorials, 3(4):2–16.

Gray, E., Jensen, C., O’Connell, P., Weber, S., Seigneur,

J.-M., and Chen, Y. (2006). Trust evolution poli-

cies for security in collaborative ad hoc applications.

Electronic Notes in Theoretical Computer Science,

157(3):95–111.

Jonker, C. M., Schalken, J., Theeuwes, J., and Treur, J.

(2004). Human experiments in trust dynamics. In

Proceedings of the Second International Conference

on Trust Management (iTrust), pages 206–220, Ox-

ford, U.K.

Jonker, C. M. and Treur, J. (1999). Formal analysis of

models for the dynamics of trust based on experi-

ences. In Proceedings of the 9th European Workshop

on Modelling Autonomous Agents in a Multi-Agent

World : Multi-Agent System Engineering (MAAMAW-

99), pages 221–231, Berlin, Germany.

Korsgaard, T. R. (2007). Improving trust in the Wikipedia.

Master’s thesis, Department of Informatics and Math-

ematical Modelling, Technical University of Den-

mark.

Korsgaard, T. R. and Jensen, C. (2008). Reengineering the

Wikipedia for reputation. In Proceedings of the 4th

International Workshop on Security and Trust Man-

agement, Trondheim, Norway.

Marsh, P. S. (1994). Formalising Trust as a Computational

Concept. PhD thesis, Department of Mathematics and

Computer Science, University of Stirling.

McKnight, D. and Chervany, N. (1996). The Meanings of

Trust. Technical Report 96-04, University of Min-

nesota, Management Information Systems Research

Center.

Seigneur, J.-M., Farrell, S., Jensen, C., Gray, E., and Chen,

Y. (2003a). End-to-end trust in pervasive computing

starts with recognition. In Proceedings of the First In-

ternational Conference on Security in Pervasive Com-

puting, Boppard, Germany.

Seigneur, J.-M., Farrell, S., Jensen, C., Gray, E., and Chen,

Y. (2003b). Towards security auto-configuration for

smart appliances. In Proceedings of the Smart Objects

Conference, Grenoble, France.

Weiser, M. (1991). The computer for the 21st century. Sci-

entific American Special Issue on Communications,

Computers, and Networks.
