RESEARCH ON LEARNING-OBJECT MANAGEMENT

Erla Morales¹, Francisco García² and Ángela Barrón Ruiz¹

¹ Department of Theory and History of Education, University of Salamanca, Campus Canalejas, Pº de Canalejas, 169, 37008 Salamanca, Spain

² Department of Computer Science, University of Salamanca, Plaza de los Caídos s/n, 37008 Salamanca, Spain

Keywords: Metadata, learning objects, knowledge management.

Abstract: Although LO management is an interesting subject to study due to the current interoperability potential, it is

not promoted very much because a number of issues remain to be resolved. LOs need to be designed to

achieve educational goals, and the metadata schema must have the kind of information to make them

reusable in other contexts. This paper presents a pilot project in the design, implementation and evaluation

of learning objects in the field of university education, with a specific focus on the development of a

metadata typology and quality evaluation tool, concluding with a summary and analysis of the end results.

1 INTRODUCTION

Many studies have been done on the concept of

learning objects (LOs) but no consensus has been

reached on a standard definition or on the technical

and pedagogical requirements. Specifications are

being developed but have yet to be normalized, and

the use of metadata schemas is still under discussion.

This has prevented LO creation and management

from becoming common practice.

This paper presents our research on the design,

implementation and evaluation of a prototype LO

management tool for e-learning systems, containing

quality criteria designed to enable LOs to be

standardized and attuned to educational needs. The

prototype was built on the basis of our own

knowledge model, and comprises specific metadata

value spaces for classifying LOs into the LOM “5.

Educational” metadata category (IEEE LOM, 2002).

The paper begins by outlining the development

of an initial prototype learning object (LO1) and

determines what type of metadata should be applied

(section 2). It goes on to describe how we

implemented and evaluated LO1 using our LO

evaluation tool (section 3); then describes how the

results of those trials were used to produce a second

prototype (LO2), which was also implemented and

evaluated (section 4). Finally, it presents our

conclusions and plans for the next stages of our

work (section 5).

2 LO DESIGN AND PROPOSED

METADATA TYPOLOGY

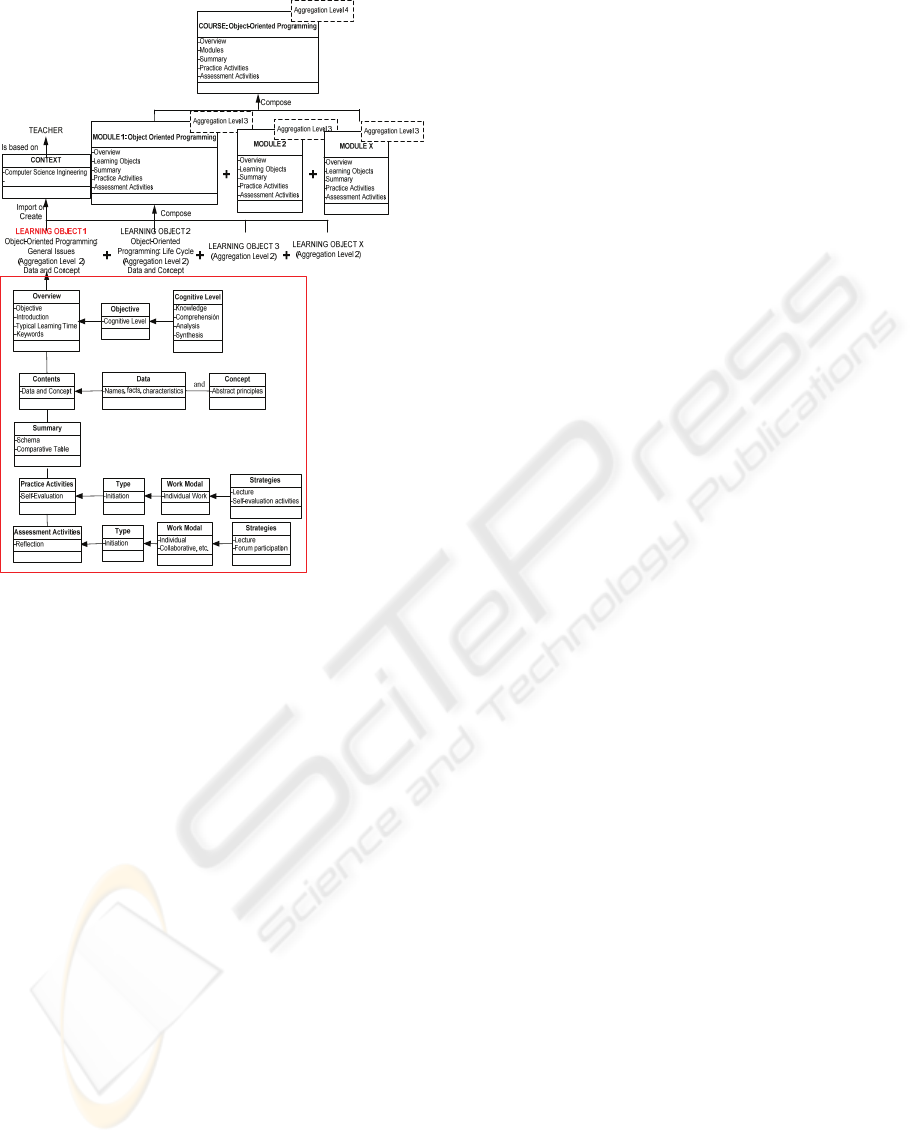

The first task in creating our initial prototype learning

object (LO1) was to choose a context in which to

conduct our trials: the Object-Oriented Programming

(OOP) option of the Computer Science course at

Salamanca University (Morales, García, Barrón and

Gil, 2007c). We then defined a set of specific

learning objectives with which we built a knowledge

model (figure 1) that served to produce a basic unit

of learning for designing LO1, entitled “Object-

Oriented Programming: General Issues” (Morales,

García and Barrón, 2007b).

One of the key goals here was to enable a

knowledge model to be used to standardize LOs,

which is crucial for them to be tailored to

educational needs, taking into account key elements

for learning (Morales, García and Barrón, 2007a).

Sound LO management requires the

incorporation of reliable metadata, but the viability

of the only metadata schema currently regarded as a

standard (IEEE LOM, 2002) has been called into

question because it uses vast quantities of ill-defined

types of data, and some of its metadata categories do

not make it clear what kind of information has to be

added, thus further complicating the task of LO

management (Morales, García and Barrón, 2006).


Morales E., García F. and Barrón Ruiz Á. (2008). RESEARCH ON LEARNING-OBJECT MANAGEMENT. In Proceedings of the Tenth International Conference on Enterprise Information Systems - AIDSS, pages 559-562. DOI: 10.5220/0001710705590562. Copyright © SciTePress

Figure 1: Knowledge Model of LO1.

The lack of clarity in the IEEE LOM

standard makes its value spaces hard to interpret.

We set out to address this issue – and, hence, to

enable suitable LO management data to be

introduced into learning environments – by devising

a set of definitions to clarify the content of each

value space in the LOM “5. Educational” category:

• 5.1 Interactivity Type: expositive

LOs featuring a very low interactivity level,

with students receiving information yet

remaining unable to interact with the content

• 5.2 Learning Resource Type: web pages

• 5.3 Interactivity Level: low

LOs with an expositive interactivity level – minimal

student participation (web pages with few links)

• 5.4 Semantic Density: medium

LO content designed to promote smooth

learning and application of knowledge

• 5.5 Intended End User Role: learners

• 5.6 Context: university level

• 5.7 Typical Age Range: Unspecified

• 5.8 Difficulty: easy

Information is easily associated with previous

knowledge

We then incorporated these definitions into our

prototype LO1.
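The value-space choices listed above can be pictured as a simple record. The following is a minimal sketch, not the prototype's actual implementation: the `EducationalMetadata` class, its field names and the `matches` helper are hypothetical names introduced here for illustration and are not part of IEEE LOM or of any LOM library. Only the field values come from the list above.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class EducationalMetadata:
    """Hypothetical container for LOM "5. Educational" elements 5.1 to 5.8."""
    interactivity_type: str       # 5.1
    learning_resource_type: str   # 5.2
    interactivity_level: str      # 5.3
    semantic_density: str         # 5.4
    intended_end_user_role: str   # 5.5
    context: str                  # 5.6
    typical_age_range: str        # 5.7
    difficulty: str               # 5.8


# Values as listed for LO1 in the text.
LO1_EDUCATIONAL = EducationalMetadata(
    interactivity_type="expositive",
    learning_resource_type="web pages",
    interactivity_level="low",
    semantic_density="medium",
    intended_end_user_role="learners",
    context="university level",
    typical_age_range="Unspecified",
    difficulty="easy",
)


def matches(metadata, **criteria):
    """Return True if every requested field has exactly the requested value."""
    record = asdict(metadata)
    return all(record.get(field) == value for field, value in criteria.items())
```

A call such as `matches(LO1_EDUCATIONAL, difficulty="easy", context="university level")` illustrates how clearly defined value spaces could support filtering LOs by educational characteristics.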

3 LO1 IMPLEMENTATION AND

EVALUATION

Having designed LO1 based on our knowledge

model and incorporating our proposed metadata

typology, we set about implementing it with Moodle

together with the following additional elements:

• a pdf file: so that our sample students could

print out the LO content

• a self-assessment section: so that they could

see how much they knew about the content,

and to repeat the test whenever necessary

• a forum: so that learners and teachers could

discuss the content

• an evaluation tool: for the students to rate the

quality of LO1.

Current proposals for learning resource evaluation

tools include web sites (Marqués, 2003; Torres,

2005) and multimedia tools (Marqués, 2000), and

other proposals have been made for assessing the

quality of LOs taking into account their instructional

use-oriented design (Williams, 2000) and

sequencing (Zapata, 2006). We drew on these to

design an instrument that would enable learners to

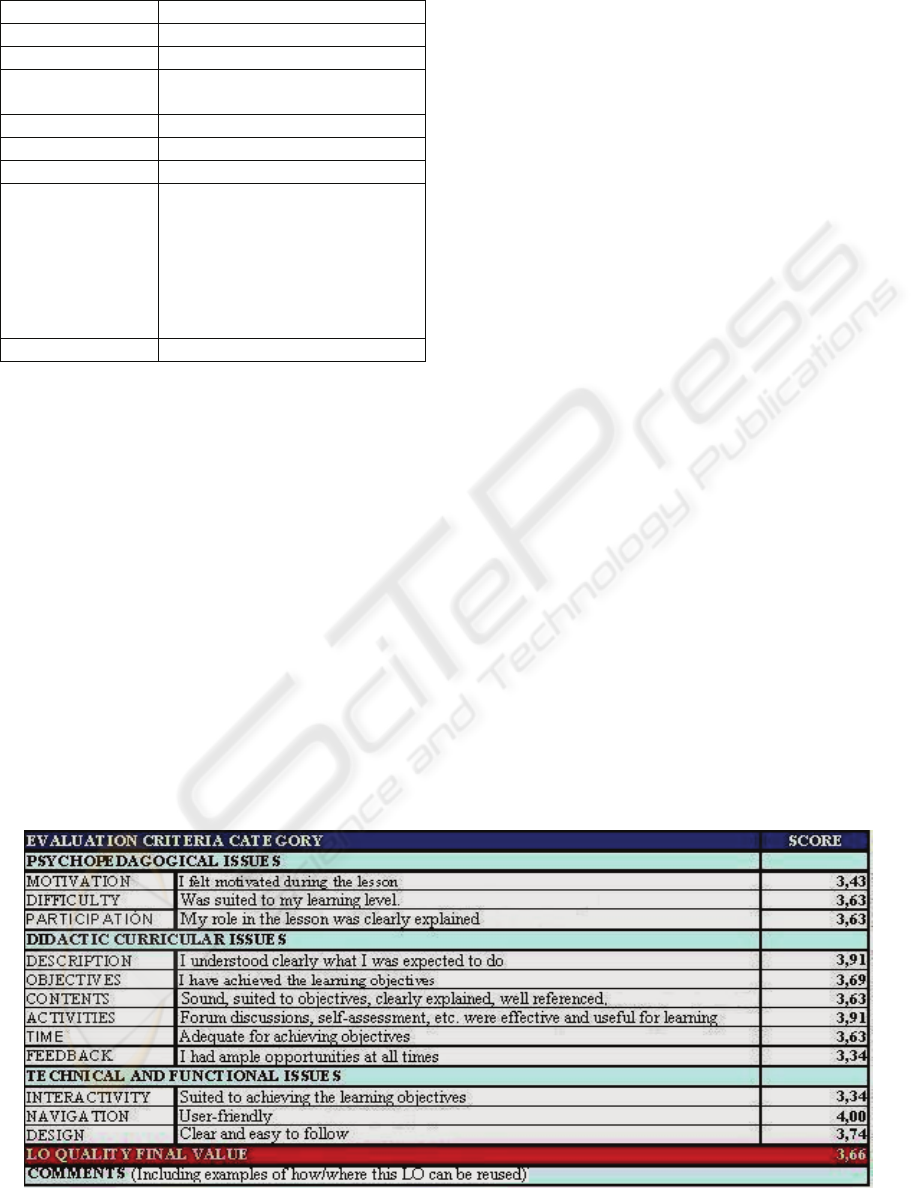

assess the value/quality of their LOs (see figure 2).

Our sample students were able to access the LO and

the evaluation tool via Moodle and to rate them on a

scale of 1 to 5: 1 = very poor; 2 = poor; 3 = satisfactory;

4 = high; 5 = very high.
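As an illustration of how individual ratings on this scale could be aggregated into the per-criterion scores reported below, here is a minimal sketch. The `mean_rating` function is a hypothetical helper and the sample ratings in the usage note are invented for the example; the paper does not specify how its averages were computed beyond reporting them to two decimals.

```python
from statistics import mean

# The five labels listed in the text, keyed by scale point.
SCALE = {1: "very poor", 2: "poor", 3: "satisfactory", 4: "high", 5: "very high"}


def mean_rating(ratings):
    """Average a list of student ratings, rejecting values outside the scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for rating in ratings:
        if rating not in SCALE:
            raise ValueError(f"rating {rating!r} is not a point on the scale")
    return round(mean(ratings), 2)
```

For instance, four hypothetical ratings of 4, 4, 3 and 4 for one criterion would yield `mean_rating([4, 4, 3, 4])`, i.e. 3.75.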

As seen in figure 2 (above), the evaluation tool

was designed to gather qualitative and quantitative

data about LO1.

The quantitative results show general agreement

on its quality. The highest scoring value was the

difficulty level (3.87), followed by the objectives

and content (3.82). These results reflect our sample

students’ approval of the content in terms of its

quantity, consistency, reliability and so on.

Navigation was considered well-designed and user-

friendly (3.79).

The students were slightly less happy with the

overall design of LO1 (3.74), and suggested a

number of possible improvements. They also made a

number of positive comments on the feedback

(3.66). ‘Activities’ and ‘interactivity’ were rated

satisfactory (3.51), as was the lowest scoring

criterion: ‘motivation’ (3.41).
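The ranking described above can be reproduced from the reported scores. The sketch below is only illustrative: the dictionary keys and the `lowest_scoring` helper are hypothetical names, while the numeric values are the LO1 scores quoted in the text.

```python
# LO1 criterion scores as reported in the text.
LO1_SCORES = {
    "difficulty": 3.87,
    "objectives and content": 3.82,
    "navigation": 3.79,
    "design": 3.74,
    "feedback": 3.66,
    "activities and interactivity": 3.51,
    "motivation": 3.41,
}


def lowest_scoring(scores, n):
    """Return the names of the n criteria with the lowest scores, lowest first."""
    return sorted(scores, key=scores.get)[:n]
```

Calling `lowest_scoring(LO1_SCORES, 2)` singles out 'motivation' and 'activities and interactivity', the criteria that pointed to where the next prototype most needed improvement.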

The feedback gained from the space provided in the

LO evaluation tool for students to make comments

provided very useful pointers for us to see what

needed to be improved when developing our second

prototype (LO2).

ICEIS 2008 - International Conference on Enterprise Information Systems


Table 1: LO1 quality rating incorporated into LOM.

| LOM element | Value |
| --- | --- |
| 9. Classification | |
| 9.1 Purpose | Quality |
| 9.2 Taxon Path | |
| 9.2.1 Source | Table 1. LO Eval. Rating Scale |
| 9.2.2 Taxon | CA*: 3.64 (high) |
| 9.2.2.1 Id | CA: 3.64 (high) |
| 9.2.2.2 Entry | High |
| 9.3 Description | LO considered high quality by sample students. Lowest scoring quantitative items were ‘motivation’, ‘activities’ and ‘interactivity’. Qualitative feedback suggested adding a glossary and examples… |
| 9.4 Keyword | quality, value, high, CA_3.64. |

*CA: CALIDAD (quality)

To input the quantitative and qualitative data on

the quality of LO1 into our metadata typology, we

used the LOM “9. Classification” metadata category

in combination with our own LO quality rating

classification scheme. We believe that quality

measurement using a scale should be introduced into

the “9. Classification” metadata category. Table 1

shows our prototype adaptation using the final

quality score taken from the LO1 evaluation results.

Adding a quality value to the LO metadata category would help locate and retrieve an LO through a search based on keywords (e.g. quality, value, high, etc.). An alphanumeric value (e.g. CA_3.64) makes it possible to define a specific vocabulary for running an LO search.
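To make the idea of keyword-based retrieval concrete, the following sketch builds a quality record shaped like the adapted “9. Classification” category and searches a small in-memory collection. It is an illustration under stated assumptions: the dictionary layout, the `quality_classification` and `search` functions, and the in-memory repository are all hypothetical and do not correspond to an official LOM binding or to the prototype's code. The scores and labels are the ones reported for LO1 and LO2.

```python
def quality_classification(score, label, description):
    """Build a hypothetical quality record; the CA_ code follows the paper's example."""
    code = f"CA_{score:.2f}"
    return {
        "purpose": "quality",                       # 9.1
        "taxon_path": {                             # 9.2
            "source": "LO Eval. Rating Scale",      # 9.2.1
            "taxon": {                              # 9.2.2
                "id": f"CA: {score:.2f} ({label})", # 9.2.2.1
                "entry": label,                     # 9.2.2.2
            },
        },
        "description": description,                 # 9.3
        "keywords": ["quality", "value", label, code],  # 9.4
    }


def search(repository, keyword):
    """Return the ids of LOs whose quality keywords include the given keyword."""
    wanted = keyword.lower()
    return [
        lo_id
        for lo_id, record in repository.items()
        if wanted in [k.lower() for k in record["keywords"]]
    ]


# Hypothetical in-memory repository holding the two prototypes.
REPOSITORY = {
    "LO1": quality_classification(3.64, "high", "LO considered high quality by sample students."),
    "LO2": quality_classification(3.66, "high", "Similarly high average quality rating."),
}
```

Here `search(REPOSITORY, "CA_3.64")` retrieves only LO1, whereas `search(REPOSITORY, "high")` retrieves both prototypes, which shows how an alphanumeric value narrows a search that a plain-language keyword leaves broad.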

The sample students’ comments provided useful

pointers for producing an enhanced and more user-

friendly design for our second prototype (LO2), with

a different font, larger characters and links to further

reading. The actual content of LO2 followed on

from LO1, taking the learning objectives to a more

advanced level.

4 LO2 IMPLEMENTATION AND

EVALUATION

LO2 was implemented in the same learning

environment as LO1, and was evaluated with an

enhanced version of our quality evaluation tool

(figure 2).

The final score reflects a similarly high average

quality rating on the part of our sample students

(3.66). The highest scoring item was ‘navigation’

(4.00), followed by ‘description’ and ‘activities’

(self-assessment) (3.91), both of which figure in the

Didactic Curricular Issues category.

Content design was considered high quality (3.74), as were three other didactic-curricular issues, namely achievement of objectives (3.69), learning time, and LO content (3.63), and one psycho-pedagogical issue: ‘difficulty’ (3.63).

Student comments were even more positive for

LO2 than LO1, expressing their approval of the new

section with references, links to further reading, a

glossary and a list of acronyms.

Some, however, considered that the screen

resolution was better but needed further

improvement: there were still too many scroll bars

and accessing table cells remained an impediment to

sightless users.

Having completed our evaluation, we incorporated the overall LO2 quality rating into the corresponding LOM “9. Classification” metadata category, using the LO classification scheme based on our proposed metadata typology (Morales, García and Barrón, 2007b).

Figure 2: LO2 Evaluation Results.

Our proposed adaptation of the LOM “9.

Classification” metadata category comprises the key

quantitative and qualitative data collected with our

LO quality evaluation tool. In presenting a summary

of learners’ comments on LO quality, item “9.3.

Description” provides a useful means of further

improving that quality.

5 CONCLUSIONS

Our prototype knowledge model sought to

demonstrate how LOs can be established as a basic

unit of learning, taking into account key educational

needs. It can be used to adapt an LO to a specific

type of course at university level.

The LO quality evaluation tool enabled us to

collect a wide range of information useful for

improving both LO1 and LO2. In attributing a

numerical value to LO quality, the rating scale

helped specify exactly which data to incorporate into

the metadata schema.

It is important to remember that metadata

editors today only classify LOs according to specific

established purposes. We used the LOM “9.

Classification” metadata category because we

believe it useful for defining and adapting new LO

classification schemes that would allow users to

acquire and manage LOs suited to their own

individual needs.

Finally, the results obtained with the LO quality

evaluation tool helped highlight exactly what

improvements needed to be made. Sorting

evaluation criteria into different categories made it

possible to evaluate the LOs from both pedagogical

and technical points of view.

Our future work will focus on developing an

LO creation tool based on our knowledge model. We

will also seek to improve the quality of LOs by

taking into account the accessibility issues that are

crucial to LO management.

ACKNOWLEDGEMENTS

This work was co-financed by the Spanish Ministry

of Education and Science, the FEDER-KEOPS

project (TSI2005-00960) and the Junta de Castilla y

León local government project (SA056A07).

REFERENCES

IEEE LOM. 2002. IEEE 1484.12.1-2002 Standard for

Learning Object Metadata. Retrieved June, 2007, from

http://ltsc.ieee.org/wg12.

Marqués, P. 2000. Elaboración de materiales formativos multimedia. Criterios de calidad. Available at http://dewey.uab.es/pmarques.

Marqués, P. 2003. Criterios de calidad para los espacios Web de interés educativo. Available at http://dewey.uab.es/pmarques/caliWeb.htm

Morales, E. M., García, F. J., Barrón, Á. 2007a. Key Issues for Learning Objects Evaluation. In ICEIS'07, Ninth International Conference on Enterprise Information Systems. Vol 4. pp. 149-154. INSTICC Press.

Morales, E. M., García, F. J., Barrón, Á. 2006. Quality Learning Objects Management: A proposal for e-learning Systems. In ICEIS'06, 8th International Conference on Enterprise Information Systems, Artificial Intelligence and Decision Support Systems volume, pp. 312-315. INSTICC Press. ISBN 972-8865-42-2.

Morales, E. M., García, F. J., Barrón, Á. 2007b. Improving LO Quality through Instructional Design Based on an Ontological Model and Metadata. J.UCS. Journal of Universal Computer Science, vol 13. nº 7. pp. 970-979. ISSN 0948-695X

Morales, E.M., García, A.B., Barrón, A., Gil, A.B. 2007c. Gestión de Objetos de Aprendizaje de calidad: Caso de estudio. In SPDECE'07, IV Simposio Pluridisciplinar sobre Objetos y Diseños de Aprendizaje Apoyados en la Tecnología. ISBN 978-84-8373-992-1.

Torres, L. 2005. Elementos que deben contener las paginas web educativas. Pixel-Bit: Revista de medios y educación, Nº. 25, 2005, pp. 75-83.

Williams, D. D. 2000. Evaluation of learning objects and instruction using learning objects. In D. A. Wiley (Ed.), The instructional use of LOs, http://reusability.org/read/chapters/williams.doc

Zapata, R. M. 2006. Calidad en entornos virtuales de aprendizaje y secuenciación de Learning objects (LO). Actas del Virtual Campus 2006. V Encuentro de Universidades & eLearning, 111-119. ISBN 84-689-6289-92.
