DESIGNING MOBILE MULTIMODAL ARTEFACTS

Tiago Reis, Marco de Sá and Luís Carriço

HCIM, LaSIGE, DI, FCUL, University of Lisbon, Campo Grande, Lisbon, Portugal

Keywords: PDA, Multimodal Interaction, Mobile Design, Usability, Low fidelity prototypes, High fidelity prototypes.

Abstract: Users’ characteristics and their different mobility stages sometimes reduce or eliminate their capability to

perform paper-based activities. The support of such activities and their extension through the utilization of

non paper-based modalities introduces new perspectives on their accomplishment. We introduce mobile

multimodal artefacts and an artefact framework as a solution to this problem. We briefly explain the main

tools of this framework and detail two versions of the multimodal artefact manipulation tool: a visually

centred version and an eyes-free version. The design and evaluation process of the tool is presented, including several

usability tests.

1 INTRODUCTION

Multimodal interaction provides users with

interaction modes, beyond the usual

keyboard/mouse for input and visual display for

output. Usually, multimodal systems combine

different modalities according to the user’s

characteristics and/or surrounding environments,

enabling them to interact with the system adequately

according to their situation at a given time (Gibbon,

2000). These systems have only started to be used

and seriously researched in the past 15 to 20 years

(Oviatt, 2003), as they became more feasible from a

technological point of view.

The additional interaction modes included on a

multimodal system can be used either in a

complementary way (to supplement the other

modalities), in a redundant manner (to provide the

same information through more than one modality),

or as an alternative to the other modalities (to

provide the same information through a different

modality) (Oviatt, 1999).

Multimodalities are particularly well suited for

mobile systems given the varying constraints placed

on both the user and the surrounding environment

(Hurtig, 2006). Adaptability is a key issue on these

systems, as users can, in some circumstances, take

advantage of a single modality (or a group of

modalities) according to their needs (Gibbon, 2000).

The use of non-conventional interaction modalities

becomes crucial when concerning human-machine

interaction for users with special needs (e.g.,

visually impaired users). In these cases the objective

is not to complement the existing modalities of a

system with new ones but to replace them with

adequate ones (Blenkhorn, 1998; Burger, 1993;

Boyd, 1990).

In this paper, we present a mobile multimodal

framework developed to support/extend paper-based

procedures and activities. This framework enables

users to create, distribute, manipulate and analyse

the utilization of artefacts based on several kinds of

media: text, audio, video and combinations of these.

Users can easily produce, distribute and use

multimodal questionnaires, exams, role play games,

books, tutorials, guides, prototypes, simple

applications, etc. Furthermore, they are able to study

the utilization of the created artefacts, which

provides them with valuable usability and usage

information.

As we describe our framework, we focus on the

modalities included as an alternative or complement

of the previously existing ones. The following

section describes the related work on mobile

multimodal systems developed for many different

purposes. We then introduce our multimodal

artefact-based framework, describe the design

and evaluation of its multimodal characteristics and,

finally, present our conclusions and future work.

Reis T., de Sá M. and Carriço L. (2008).

DESIGNING MOBILE MULTIMODAL ARTEFACTS.

In Proceedings of the Tenth International Conference on Enterprise Information Systems - HCI, pages 78-85

DOI: 10.5220/0001708400780085

Copyright © SciTePress

2 RELATED WORK

Current paper-based activities and practices are

highly disseminated and intrinsic to our daily lives.

Particular cases such as therapeutic and educational

procedures, which rely strongly on paper-based

activities, assume special importance due to their

critical content. However, given the underlying

medium, some of the activities fall short of their

goals. Moreover, the ability to introduce digital data

and multimodalities can enhance the activities and

facilitate users’ lives. Mobile multimodal

applications have been emerging more as the

technology evolution starts to enable their support.

Several systems, which combine different

interaction modalities on mobile devices, have

already been developed. The approaches vary in the

combination of modalities that, generally, suit

different but specific purposes, which address the

users’ needs and surrounding environments.

Studies on multimodal mobile systems have

shown improvements when compared to their

unimodal versions (Lai, 2004) and several

multimodal systems have been introduced on

different domains. For instance, mobile systems that

combine different interaction modalities in order to

support and extend specific paper-based activities

have been used with success in art festivals (Signer,

2006) and museums (Santoro, 2007). The latter also

supports visually impaired user interaction. Still,

both are extremely specific, targeting activities that

occur in particular, and controlled, environments.

Other approaches focus mainly on the

combination of interaction modalities in order to

eliminate ambiguities inherent to a specific

modality: speech recognition (Hurtig, 2006;

Lambros, 2003). However, once again, they focus on

specific domains and use the different modalities

only as a complement to each other.

Closer to our goals, ACICARE (Serrano, 2006)

provides a good example of a framework that was

created to enable the development of multimodal

mobile phone applications. These rely on: command

based speech recognition, keypad (for input) and

visual display (for output). The framework allows

rapid and easy development of multimodal

interfaces, providing automatic usage capture that is

used on the evaluation of the multimodal interface.

However, the creation and analysis of these

interfaces cannot be done in a graphical way, thus

preventing users with no programming experience

from taking advantage of this tool. Moreover, modalities

relying on video are not considered, and the

definition of behaviour that responds to

user interaction, navigation or external events

falls outside its scope.

Finally, none of the work found in the available

bibliography enables users, without programming

experience, to create, distribute, analyse and

manipulate multimodal artefacts that suit different

purposes, users and environments. Furthermore,

most of the existing multimodal mobile applications

rely on a server connection to perform their tasks,

limiting their mobility and pervasive use.

3 MOBILE MULTIMODAL

ARTEFACT FRAMEWORK

The original framework was developed to enable the

creation and manipulation of mobile artefacts that

support and extend paper-based procedures and

activities. As the framework utilization evolved, we

faced new challenges that clearly pointed to its

extension through the inclusion of multimodalities.

Four main tools compose the framework: the

Creation Tool allows users to create multimodal

interactive/proactive artefacts (e.g., role play games,

dynamic questionnaires and activity guides); the

Manipulation Tool enables the instantiation and

manipulation of the artefacts (e.g. playing the

games, filling the questionnaires and registering

activities); the Analysis Tool, actually a set of tools,

provides mechanisms to analyse and annotate

artefact manipulation and results (e.g. see how and

when the game was played, the questionnaires were

filled); and the Synchronization Tool handles the

transfer of artefacts and results between devices. All

tools are available for Microsoft’s OS in

Desktop/TabletPCs and PDAs/Smartphones and

were developed in C#. A simpler J2ME version,

tested in PalmOS Garnet 5.4, is also available.

In this paper, we focus on the creation and the

manipulation tool, since those were the main targets

of the multimodal extensions and the analysis and

synchronization tools required only minor

modifications.

3.1 Mobile Multimodal Artefacts

An artefact is an abstract entity composed of an

ordered set of pages and a set of rules. Pages contain

one or more elements. These are the interaction

building blocks of artefacts (e.g., labels, selectors)

and are arranged in space and time within a page.

Rules can alter the sequence of pages (e.g., <skip

to page X>) or determine their characteristics (e.g.,

<hide element Y>). Rules are triggered by user

responses (e.g., <if answer is “yes”>), interaction

(e.g., <on 3 answer modifications>), navigation

(e.g., <on 4 visits to this page>), or by external

events (e.g., <when elapsed time is 10s>) defining

the artefacts’ behaviour.
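
The structure described above (an ordered set of pages, elements within pages, and rules that react to responses) can be illustrated with a minimal sketch. It is not the framework's implementation, which was developed in C#; all class, field and identifier names below (`Element`, `Page`, `Rule`, `Artefact`, `q1`, `p3`, and so on) are hypothetical, and only response-triggered rules are modelled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Element:
    """An interaction building block placed on a page (e.g., label, choice)."""
    element_id: str
    kind: str                       # hypothetical type tag, e.g. "choice"
    response: Optional[str] = None
    hidden: bool = False


@dataclass
class Page:
    page_id: str
    elements: List[Element] = field(default_factory=list)


@dataclass
class Rule:
    """A condition over the artefact state plus an action applied when it holds."""
    condition: Callable[["Artefact"], bool]
    action: Callable[["Artefact"], None]


@dataclass
class Artefact:
    pages: List[Page]
    rules: List[Rule] = field(default_factory=list)
    current: int = 0                # index of the page being shown
    visits: Dict[str, int] = field(default_factory=dict)

    def element(self, element_id: str) -> Element:
        for page in self.pages:
            for el in page.elements:
                if el.element_id == element_id:
                    return el
        raise KeyError(element_id)

    def go_to(self, page_id: str) -> None:
        """Jump to a page and count the visit (usable by navigation rules)."""
        self.current = next(i for i, p in enumerate(self.pages) if p.page_id == page_id)
        self.visits[page_id] = self.visits.get(page_id, 0) + 1

    def answer(self, element_id: str, value: str) -> None:
        """Record a user response, then fire every rule whose condition holds."""
        self.element(element_id).response = value
        for rule in self.rules:
            if rule.condition(self):
                rule.action(self)


# <if answer is "yes"> then <skip to page p3>
skip_rule = Rule(
    condition=lambda a: a.element("q1").response == "yes",
    action=lambda a: a.go_to("p3"),
)
# <if answer is "yes"> then <hide element q2>
hide_rule = Rule(
    condition=lambda a: a.element("q1").response == "yes",
    action=lambda a: setattr(a.element("q2"), "hidden", True),
)

artefact = Artefact(
    pages=[
        Page("p1", [Element("q1", "choice")]),
        Page("p2", [Element("q2", "text_entry")]),
        Page("p3", [Element("end", "label")]),
    ],
    rules=[skip_rule, hide_rule],
)
artefact.answer("q1", "yes")
```

In this sketch, answering "yes" to `q1` moves the artefact to page `p3` and hides `q2`; interaction, navigation and time-based triggers would need additional events that are left out here.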

3.1.1 Basic Output Elements

These elements present content and put forward size

(e.g., fixed-size), time (e.g. reproduction speed

limits) and audio-related (e.g. recommended

volume) characteristics; they may also include

interaction (e.g., scrolling, play/pause buttons) that,

however, does not correspond to user responses. The

following variant is provided:

• Text/image/audio/video labels present textual,

image, audio or video content or a combination of

audio with image or text.

Simple artefacts, such as tutorials, guides and digital

books can be built with these elements.

3.1.2 Full Interactive Elements

These elements expect user responses which can be

optional or compulsory and may have default values.

The elements may have content (e.g., options of a

choice element), thus inheriting the characteristics of

basic ones, or gather it from user responses (e.g.

inputted text). The following elements are available:

• Audible text entries allow users to enter text and

optionally hear their own entered text when the

device is able to support a text-to-speech (TTS)

package available on the manipulation tool.

• Audio/video entries enable users to record an

audio/video stream – quality and dimension

attributes may be defined.

• Audible Track bars allow users to choose one

value from a numeric scale – scale, initial value

and user selection are conveyed visually and/or

audibly.

• Text/image/audio choices permit users to select

one or more items from an array of possible

options – items may be text, images, audio or a

combination of audio streams with text or images;

presentation characteristics such as the number of

visible/audible options (e.g., drop down/manual

play) may be defined.

• Audible 2D selectors allow users to interact with

images or drawings by picking one screen point

or a predefined region (like a 2D choice) –

audible output is available for point (coordinates)

selection and regions (recorded audio) selection

and navigation.

• Visible Time selectors allow users to select an

excerpt (a time interval) within an audio or video

stream - predefined excerpts can be defined and

correspondence to values may be set (corresponds

to the 2D selector, but on a time dimension).

The user’s responses entered in elements may be

used within rules to control artefact behaviour. As

such, the entire set of elements and rules can be used

to compose fairly elaborate adaptive artefacts.

3.1.3 Materialization

Artefacts, in their persistent form, are represented in

an XML or in a relational database format. XML is

used in mobile and desktop versions whereas

databases are restricted to desktop/tablet platforms.
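
As an illustration of the XML materialization, the sketch below writes a small artefact to XML and reads it back. The paper does not give the actual schema, so the tag and attribute names (`artefact`, `page`, `element`, `id`, `type`) and the sample content are assumptions made for this example only.

```python
import xml.etree.ElementTree as ET


def artefact_to_xml(pages):
    """Serialize pages given as [(page_id, [(element_id, kind, content), ...])]."""
    root = ET.Element("artefact")
    for page_id, elements in pages:
        page_node = ET.SubElement(root, "page", id=page_id)
        for element_id, kind, content in elements:
            el_node = ET.SubElement(page_node, "element", id=element_id, type=kind)
            el_node.text = content
    return ET.tostring(root, encoding="unicode")


def xml_to_artefact(xml_text):
    """Rebuild the same nested structure from the XML text."""
    root = ET.fromstring(xml_text)
    return [
        (
            page.get("id"),
            [(el.get("id"), el.get("type"), el.text) for el in page.findall("element")],
        )
        for page in root.findall("page")
    ]


# Hypothetical two-page artefact: an audio question with a text choice,
# followed by a text question.
pages = [
    ("p1", [("q1", "audio_label", "question1.wav"), ("a1", "text_choice", "yes|no")]),
    ("p2", [("q2", "text_label", "How do you feel today?")]),
]
xml_text = artefact_to_xml(pages)
restored = xml_to_artefact(xml_text)
```

Keeping the persistent form as a plain tree of pages and elements is one way the same artefact file could be shared between desktop and mobile versions.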

3.2 Creation Tool

The artefact creation wizard is the application that

allows users to create, arrange and refine artefacts.

Overall, the process of creating artefacts is driven by

a simple to use interface that comprises three steps:

creating elements by defining their content and

interaction characteristics; organizing the sequence

of elements/pages; and defining behaviours. Each

element type can be edited by its own dedicated

editor. A preview of the resulting page is always

available and constantly updated. Rules also have their

own editor, which can be invoked in the context of the rule's

trigger (element, page, artefact or external element).

The whole editing process incorporates and

enforces usability guidelines (e.g., type and amount

of content, location of elements and adjustment to

the device's screen), preventing users from creating

poor artefacts regarding their interactivity and

usability. Besides generic guidelines, domain

specific ones can be added to the tool. As such, the

tool enables the creation of sophisticated

applications by non-expert programmers.

3.3 Manipulation Tool

The Manipulation Tool (in Figure 1) materializes

artefacts. It provides mechanisms to load artefacts,

instantiate pages and elements and arrange elements

as needed, navigate through pages (as defined by the

corresponding rules) and to collect and keep user

responses. The tool permits artefact locking (disable

modification of responses), through timeout or user

command (e.g. at the end of the artefact), auto-save

of responses and navigation status and on-request

summaries. This tool also includes a logging

mechanism that, if enabled, gathers information

ICEIS 2008 - International Conference on Enterprise Information Systems

about artefact usage for subsequent analysis. The

gathered data is composed of time-stamped entries

including clicks with location, (re)chosen options,

typed characters, etc. Logs and user responses are

stored in XML files or in a database depending on

the platform and database availability.
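
A logging mechanism of this kind can be sketched as a list of time-stamped entries that is only filled when logging is enabled. This is an illustration under assumptions, not the tool's actual code: the `UsageLog` and `LogEntry` names, the event labels and the field names are all hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class LogEntry:
    timestamp: float            # seconds since the epoch, taken when recorded
    event: str                  # hypothetical label, e.g. "click"
    details: Dict[str, Any]     # event-specific data such as a click location


@dataclass
class UsageLog:
    enabled: bool = True
    entries: List[LogEntry] = field(default_factory=list)

    def record(self, event: str, **details: Any) -> None:
        """Append a time-stamped entry; do nothing when logging is disabled."""
        if not self.enabled:
            return
        self.entries.append(LogEntry(time.time(), event, details))

    def count(self, event: str) -> int:
        """Number of recorded entries of a given kind."""
        return sum(1 for e in self.entries if e.event == event)


log = UsageLog()
log.record("click", x=120, y=48)
log.record("option_chosen", element="a1", value="yes")
log.record("option_chosen", element="a1", value="no")   # a re-chosen option
log.record("key", char="h")

disabled_log = UsageLog(enabled=False)
disabled_log.record("click", x=1, y=1)                  # ignored
```

Counting entries per event type, as `count` does, is the sort of simple query an analysis step could run over such a log, for example to see how often an answer was changed.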

Figure 1: Manipulation Tool (visual version).

Navigation and interaction were kept simple and

were substantially changed with the introduction of

the multimodal dimension. We assumed devices

without physical keys, in view of specific usage

contexts (e.g. the user is already holding a stylus)

and aligned with current trends of some emerging

mobile devices. If keys/joystick are available in the

device, mapping is straightforward for most of the

interaction and the user can combine visual and

physical solutions as desired.

Two versions of the tool are available. Both are

the result of a user centred design approach that

included several evaluation steps.

3.3.1 Visual Version

The visual version of the tool primarily follows a direct

manipulation approach. Users’ responses are directly

entered on elements. For text/speech/video entries,

once directly selected, a specific input gadget can be

used (virtual keyboard, microphone and camera).

For audio/video playback an element control was

added near the element/item (in Figure 1) allowing

simple play/pause options. Navigation through pages

is achieved with the arrow buttons at the extremes of

the page & artefact control bar (in Figure 1).

An alternative localized interaction approach is

also available as a consequence of the requirements

gathered during user evaluation. For that, a focus

mechanism was added to all elements and items -

expecting or not user responses. Focus feedback is

visual (see Figure 1) and audible. Four new buttons

were included in the page & artefact control bar. The

arrow-up and arrow-down buttons allow focus

changing within a page. The two central buttons

permit selection/answering (left) and audio/video

playback (right) on the element/item with the focus.

As such, all the interactions, except the text entry,

can be done through the bar.

Both alternatives are configurable. The central

buttons of the page & artefact control bar or the

element controls can disappear as required. The bar

can be moved to one of the four boundaries.

3.3.2 Eyes-free Version

The Eyes-free tool (Figure 2) is a configuration that

eliminates the need for a visual output, provided that

an audible presentation is defined for all elements of

the artefact. User action modes are restricted to

haptics and voice. A haptic card (Figure 2, on the

centre), placed on top of the mobile device’s touch

screen, enables the interaction mapping.

Figure 2: Eyes-free Manipulation Tool.

To simplify navigation, only two navigation

buttons are available. These run through all the

elements of an artefact without considering the page

(an essentially visual concept). The remaining screen

is used as a T9 keyboard for text/number input and

the haptic card holes map into these components

(virtual T9 keys and toolbar virtual buttons).
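
The two-button navigation, which runs through all elements regardless of page boundaries, can be sketched by flattening the pages into one sequence. The `LinearNavigator` class is hypothetical, and stopping at the first and last element (rather than wrapping around) is an assumption, since the behaviour at the ends is not described.

```python
class LinearNavigator:
    """Back/forward navigation over all elements, ignoring page boundaries."""

    def __init__(self, pages):
        # Flatten the list of pages into a single ordered list of elements.
        self.elements = [el for page in pages for el in page]
        self.index = 0

    def current(self):
        return self.elements[self.index]

    def forward(self):
        # Assumed behaviour: stay on the last element instead of wrapping.
        if self.index < len(self.elements) - 1:
            self.index += 1
        return self.current()

    def back(self):
        # Assumed behaviour: stay on the first element instead of wrapping.
        if self.index > 0:
            self.index -= 1
        return self.current()


pages = [["question 1", "answer 1"], ["question 2", "answer 2"]]
nav = LinearNavigator(pages)
second = nav.forward()   # still on the first page
third = nav.forward()    # crosses into the second page without a page step
```

In an eyes-free setting, each call to `forward` or `back` would be followed by the audible presentation of the element that is returned.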

The feedback on the users’ input and the

navigation information within the artefact/elements

are conveyed only through audio output. Users are aware of

their own text/audio/video responses, because the

input was either recorded or it can be synthesized

through a TTS package.

4 DESIGN AND EVALUATION

During the design of the multimodal elements and

the redesign of the artefacts, we followed a user

centred approach specifically directed to mobile

interaction design (Sá, 2007).

Requirements were gathered from a wide set of

paper-based activities that could benefit from the

introduction of new modalities. These considered

different environments (e.g., class rooms,

gymnasiums), users (e.g., visually impaired users)

and behaviours (e.g., walking, running). Tasks were

elicited from psychotherapy (e.g., scheduling,

registering activities and thoughts), education (e.g.,

performing exams and homework, reading and

annotating books), personal training (e.g., using

guides and exercise lists), etc. These were modelled

into use cases and diagrams that were employed to

define multiple scenarios. The scenarios contemplate

different usage contexts throughout distinct

dimensions (e.g., users, devices, settings, locations).

As these scenarios gained form, several low-

fidelity prototypes were created. On an initial phase,

they were evaluated and refined by the development

team. Afterwards, potential users tested the

prototypes within some of the previously defined

scenarios. The newly identified requirements were

then considered on the implementation of high

fidelity prototypes, which were subsequently used in

a similar evaluation cycle, with a larger group of

users. Users’ procedures were filmed in order to

provide us with usage and usability information that

was crucial to our conclusions. After the test, the

users answered a usability questionnaire where they

pointed out the difficulties they experienced.

The developed low and high fidelity prototypes

addressed both versions of the manipulation tool

(visual and eyes-free), focusing the usability tests on

the visual and audible aspects, respectively. In both

cases, the prototype was a form composed of seven

pages, each with a question (basic output element)

and an answer holder. For the latter different types

of interactive elements were used (e.g. choices,

entries).

In all tests users had to accomplish two tasks: (1)

fill the form and (2) change their answers on some

specific questions. Results were rated as follows:

one point was credited if the user was able to

successfully fill/change the answer at the first

attempt; half a point was credited if the user was

able to successfully answer at the second attempt; no

points were attributed in any other case. The time

spent on the overall test was registered.
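
The rating scheme above can be written as a short function. The sketch assumes that the reported percentage is the sum of credited points divided by the number of rated answers, which the text does not state explicitly; the attempt counts in the example are invented for illustration and are not data from the tests.

```python
def score_answer(attempts_needed):
    """Credit for one answer: 1 on the first attempt, 0.5 on the second, else 0.

    `attempts_needed` is the attempt on which the user succeeded,
    or None if the user never succeeded.
    """
    if attempts_needed == 1:
        return 1.0
    if attempts_needed == 2:
        return 0.5
    return 0.0


def success_rate(attempts_per_answer):
    """Percentage of the maximum score (assumed aggregation, see lead-in)."""
    scores = [score_answer(a) for a in attempts_per_answer]
    return 100.0 * sum(scores) / len(scores)


# Invented example: four answers, one of them never completed.
example = [1, 2, 1, None]
rate = success_rate(example)   # (1 + 0.5 + 1 + 0) / 4 = 62.5%
```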

4.1 Low-Fidelity Prototypes

The tests involved 11 persons, all students (7 male, 4

female), none visually impaired, between 18 and 30

years old. They were familiar with computers, mp3

players and mobile phones but not with PDAs,

listenable or multimodal interfaces. Approximately

half of the users tested the prototype walking on a

noisy environment. The rest of them made the test

sitting down in a silent environment. The researchers

simulated the application behaviour and audio

reproduction (Wizard of Oz approach).

4.1.1 Visual Version

We used a rigid card prototyping frame that mimics

a real PDA in size and weight characteristics (see

Figure 3). For each test, seven replaceable cards,

each representing one page, were drawn to imitate

the application. Two major audio/video control

variants were assessed: one based on the element

controls (two tests) and another on the single page &

artefact control bar (one test). All users performed

all tests, but the order of tests was defined to

minimize the learning effect.

Figure 3: PDA prototype.

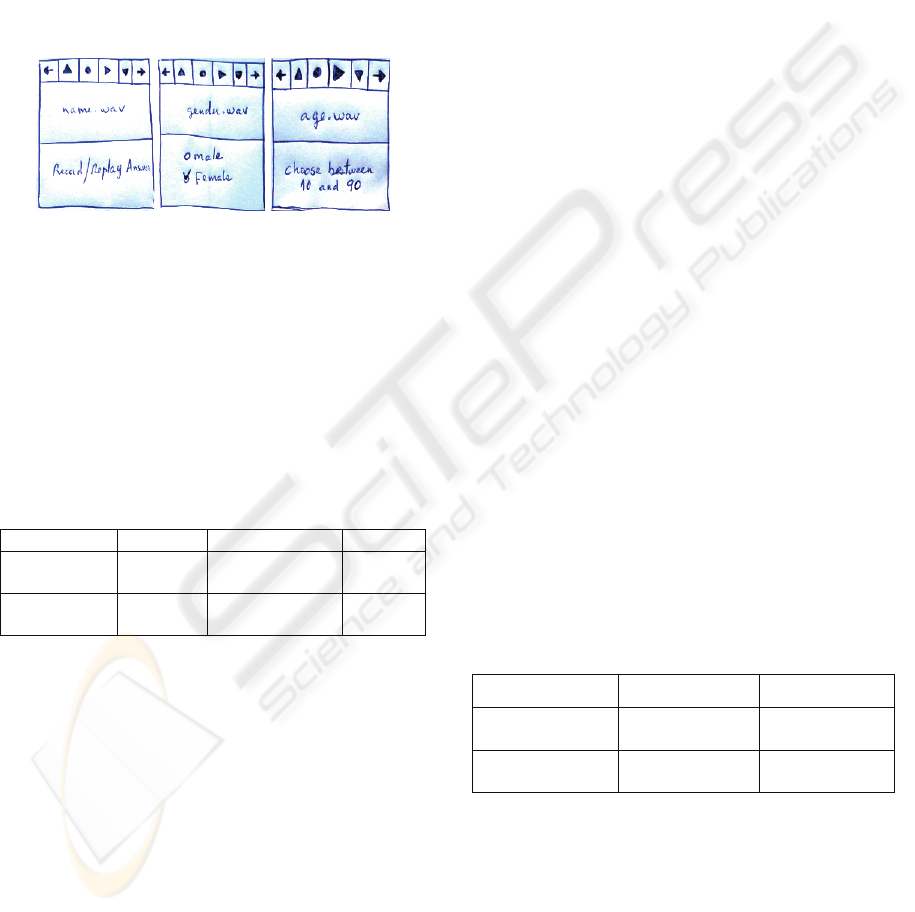

Element Control. One control is inserted for each

media element/item. Two design alternatives were

tried: one relies on the media element controls only

("El. Ctrl" - see Figure 4) and the other includes

additional text ("El. Ctrl + Text" - see Figure 5).

Figure 4: All contain a page navigation bar (top), an audio

label (middle) and an interaction element (bottom) – the

latter is (from left to right) an audio entry, an audio choice

and an audible track bar.

Figure 5: Same as for Figure 4 but with text.

During the test, we noticed that all the users

were manipulating the prototype with both hands

(one holding the device and the other interacting

with the cards). The test results (Table 1) show that

the additional text information raised the success rate

from 71.4% to 100% and shortened completion times. In the final

questionnaire users confirmed the difficulties of

interacting without the textual information.

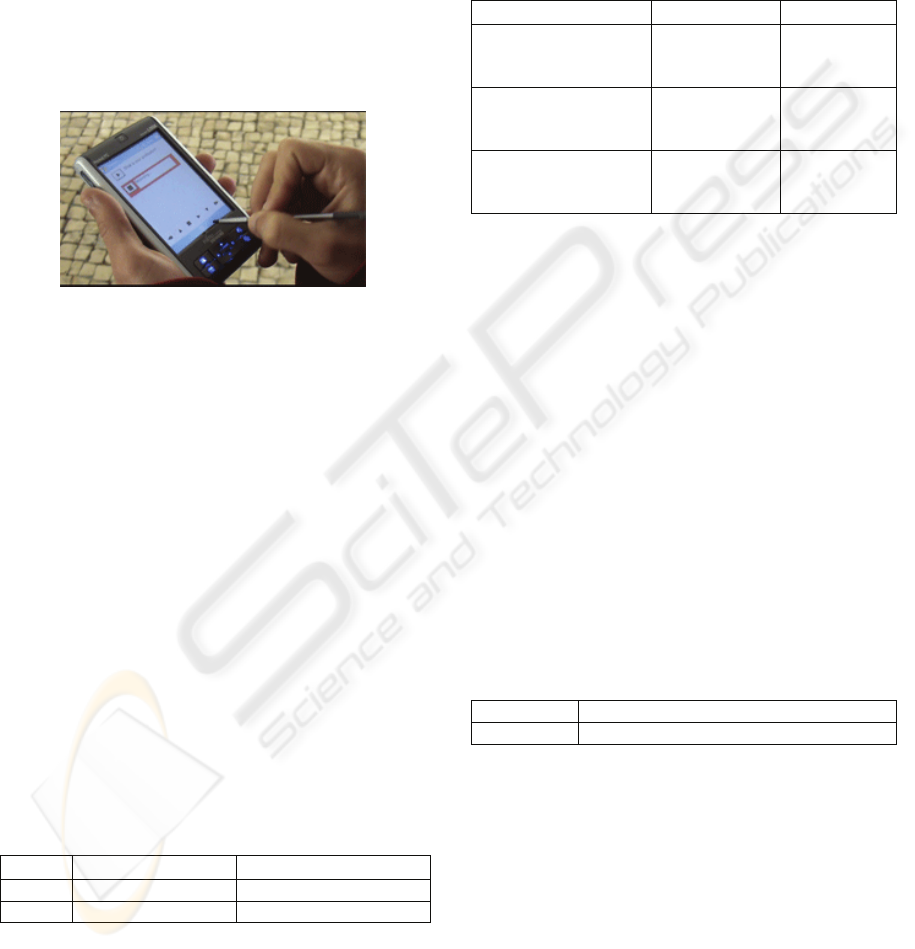

Page/artefact Control. A control bar is available for

the interaction with all the media elements/items,

within a page/artefact (Pg. Bar - see Figure 6).

Figure 6: Same as for Figure 5, but navigation bar was

replaced by the full page & artefact control bar (top).

During the test, we noticed that the users

manipulating this type of control used only one hand

(holding the device and interacting with it using the

same hand). We also noticed that the bar’s

position made users cover the artefact while

manipulating it. The test results (Table 1) show

that the page control bar alternative suits movement

situations better than the element control variant.

Table 1: Average evaluation/time of the visual version.

| | El. Ctrl | El. Ctrl + Text | Pg. Ctrl |
|---|---|---|---|
| STOPPED | 71.4%, 3.5 min | 100%, 3 min | 100%, 3 min |
| WALKING | 71.4%, 5.5 min | 100%, 4.5 min | 100%, 3 min |

Considering both quantitative and qualitative

results, we have decided to create high fidelity

prototypes with a configurable solution, allowing

both element control and page & artefact control.

The decision was based on the fact that, although the

latter performs as well as or better than the former (with

text), two handed interaction is often used in a

sitting situation. Besides, with the current diversity

of devices, this design option will allow us to

evaluate both alternatives for different screen sizes,

which we expect to have some impact on results. In

any case, we also decided to locate the bar at the

bottom of the device's screen instead of the top.

4.1.2 Eyes-free Version

We used the same prototyping frame, but with a

single card only. The card contained only the page &

artefact control bar. Sounds were defined to notify a

new working page, the required/possible interaction

(dependent on the elements' type) and the interaction

feedback. Two alternatives were evaluated: one

relies on earcons and the other on voice prompts.

Again, all users performed all tests with an

appropriate order. Since the bar usage was not an

issue in these tests, the knowledge acquired on the

visual version was not a problem. Nevertheless, the

virtual application page sequence was modified (the

researcher issued sounds corresponding to a

different page order).

Earcons. “Abstract, synthetic tones” were defined

and repeated for each notification (see above). The

meaning of the sounds was carefully explained

before the test.

The results (in table 2) show that the users failed

some operations. We believe some of these

problems could be overcome with training and/or

with a better choice of sounds. The comments

reported in the post-test questionnaire corroborate

these findings.

Voice Prompts. Succinct phrases were defined and

repeated for each notification. The user could skip

the information by pressing forward. The test results

(last column of table 2) show that this approach

assured the correct filling of the questionnaire, but

also increased the time to accomplish it. This is

because voice prompts are a lot longer than the

earcons, and the users did not realize that they could

skip them.

Table 2: Average evaluation/time for eyes-free version.

| | Earcons | Voice prompts |
|---|---|---|
| STOPPED | 85.7%, 4.5 min | 100%, 6 min |
| WALKING | 87.8%, 4.3 min | 100%, 6.5 min |

Considering the evaluation results, voice prompts

seemed a preferable solution. Moreover, from the

video analysis and the users' final comments, the 4

navigation buttons (two for pages and two for

elements) were found superfluous. The high-fidelity

prototypes adopted 2 buttons and voice prompts.

4.2 High-Fidelity Prototypes

These tests involved 20 persons, all students (10

male, 10 female), none visually impaired, between

17 and 38 years old, familiar with computers, mp3

players and mobile phones, but not with PDAs,

listenable or multimodal interfaces.

4.2.1 Visual Version

The evaluation of the visual version (Figure 7) was

done by 10 of the 20 persons involved on the high-

fidelity prototypes' testing.

Figure 7: Evaluation of the high-fidelity prototype.

Half of these participants performed the test using

the element control (EC + Text) and the other half

used the page/artefact control bar (Pg. Bar),

both in stationary situations. The purpose of this

particular evaluation was not to choose the best

interaction option according to the user’s mobility

stage, but to understand if people: (1) were capable

of using our interfaces correctly; (2) felt comfortable

interacting with them; and (3) thought they could

perform school exams with them.

The results (in table 3) clearly indicate some

interaction issues, on the first attempt. Namely,

people were not sure on how to manipulate

audio/video entries, time selectors and audible track

bars. Nevertheless, on the second attempt the results

improved substantially, suggesting a very short

learning curve (Table 3, last row).

Table 3: Comparing average success and speed for element/item control vs. page/artefact control on the 1st and 2nd attempts.

| | EC + Text | Pg. Bar |
|---|---|---|
| 1st try | 88.5% in 2.5 min | 80% in 2.6 min |
| 2nd try | 100% in 2.7 min | 100% in 2.6 min |

During the video analysis of these tests, we were

able to identify some other problems. The most

significant were: (1) button feedback (audio and

visual) was not enough - some people were not sure

whether they had pressed some buttons or not; (2) in

some situations, regarding the page/artefact control

bar, people were not sure which button to use in

order to perform specific actions - here again,

graphical feedback was not enough.

The users’ answers, expressed in the post-test

questionnaire (in table 4) revealed good acceptance.

Table 4: Users’ evaluation of the high-fidelity prototype.

| | EC + Text | Pg. Bar |
|---|---|---|
| It was easy for me to accomplish the proposed activities. | 80% | 70% |
| I think this application is easy to use. | 80% | 70% |
| I would use this application to perform an exam. | 80% | 60% |

The overall results of this evaluation suggested

some minor modifications on our final prototype.

These were considered and implemented during the

integration of the new modalities on the

Manipulation Tool as described above.

4.2.2 Eyes-free Version

The evaluation of the eyes-free version was done by

the remaining 10 persons. We developed a prototype

without any graphical information, besides 4 buttons

(back, record, play and forward) in the place of the

control bar. On the other hand, this version provides

audio content, voice prompts for navigation and

interaction requests, and audio feedback. The

prototype simulated, as much as possible, usage

scenarios faced by a blind person.

Table 5: Average evaluation/time on the voice prompt eyes-free version of the high-fidelity prototype.

| | Voice prompt |
|---|---|
| STOPPED | 100% in 7 min |

The test results (Table 5) showed that people

were able to use the application. However, task

accomplishment time (when compared to the visual

version) and the users’ comments, suggested some

changes. Although the users were informed that they

could skip navigation/interaction information in

order to accelerate their task’s accomplishment, all of

them reported an excessive use of the voice prompts.

In view of that and of the previous tests, we

adopted a configurable solution for the final

prototype: users can choose whether to use voice

prompts, earcons or a combination of both.

5 CONCLUSIONS AND FUTURE

WORK

In this paper, we have presented a framework that

supports the creation, distribution, manipulation and

analysis of mobile multimodal artefacts. We have

focused on the design and evaluation of a manipulation

tool that enables users to manipulate such artefacts.

Our evaluation results have shown that these

artefacts can extend paper-based activities through

non paper-based modalities. Moreover, these results

have also shown the ability to support an eyes-free

mode aimed at visually impaired users.

Our future work plans involve making a new,

wider, set of tests addressing the evaluation of the

whole framework. The integration of the existing

analysis components within the multimodal artefacts

enables us to perform tests on real life scenarios,

gathering useful usability information that will lead

us to new challenges. We intend to test the eyes-free

version of the manipulation tool with visually impaired

persons, targeting school activities such as homework

and exams. We also envision testing this

framework on non paper-based activities such as

physiotherapy homework, which can be filmed in

order to provide the therapist with information on

how well his/her patients perform their given tasks.

ACKNOWLEDGEMENTS

This work was supported by EU, LASIGE and FCT,

through project JoinTS and through the Multiannual

Funding Programme.

REFERENCES

Blenkhorn P., Evans D. G., 1998. Using speech and touch

to enable blind people to access schematic diagrams.

Journal of Network and Computer Applications, Vol.

21, pp. 17-29.

Boyd, L.H., Boyd, W.L., Vanderheiden, G.C., 1990. The

Graphical User Interface: Crisis, Danger and

Opportunity. Journal of Visual Impairment and

Blindness, Vol. 84, pp. 496-502.

Burger, D., Sperandio, J., 1993 (ed.) Non-Visual Human-

Computer Interactions: Prospects for the visually

handicapped. Paris: John Libbey Eurotext. pp. 181-

194.

Gibbon, D., Mertins, I. and Moore, R., 2000. Handbook of

Multimodal and Spoken Dialogue Systems.

Resources, Terminology and Product Evaluation.

Kluwer.

Hurtig, T., 2006. A mobile multimodal dialogue system

for public transportation navigation evaluated. In

Procs. of HCI’06. ACM Press pp. 251-254.

Lai, J., 2004. Facilitating Mobile Communication with

Multimodal Access to Email Messages on a Cell

Phone. In Procs. of CHI’04. ACM Press, New York,

pp. 1259-1262.

Lambros, S., 2003. SMARTPAD: A Mobile Multimodal

Prescription Filling System. A Thesis in TCC 402

University of Virginia.

Oviatt, S., 1999. Ten myths of multimodal interaction,

Communications of the ACM, Vol. 42, No. 11, pp. 74-

81.

Oviatt, S., 1999. Mutual disambiguation of recognition

errors in a multimodal architecture. Procs. CHI’99.

ACM Press: New York, pp. 576-583.

Oviatt, S., 2003. Multimodal interfaces. In The Human-

Computer Interaction Handbook:

Fundamentals, Evolving Technologies and Emerging

Applications, (ed. by J. Jacko and A. Sears), Lawrence

Erlbaum Assoc., Mahwah, NJ, chap.14, 286-304.

Sá, M., Carriço, L., 2007. Designing for Mobile Devices:

Requirements, Low-Fi Prototyping and Evaluation,

Human-Computer Interaction. Interaction Platforms

and Techniques. In Procs. HCI International ’07. Vol.

4551/2007, pp. 260-269. Beijing, China.

Santoro, C., Paternò, F., Ricci, G., Leporini, B., 2007. A

Multimodal Museum Guide for All. In Mobile

interaction with the Real World Workshop, Mobile

HCI, Singapore.

Serrano, M., Nigay, L., Demumieux, R., Descos, J.,

Losquin, P., 2006. Multimodal interaction on mobile

phones: development and evaluation using ACICARE.

In Procs. of Mobile HCI’06. Vol. 159, pp. 129-136,

Helsinki, Finland.

Signer, B., Norrie, M., Grossniklaus, M., Belotti, R.,

Decurtins, C., Weibel, N., 2006. Paper-Based Mobile

Access to Databases. In Procs. of the ACM SIGMOD,

pp. 763-765.
