A RECURSIVE FRISCH SCHEME ALGORITHM

FOR COLOURED OUTPUT NOISE

J. G. Linden and K. J. Burnham

Control Theory and Applications Centre, Coventry University, Priory Street, Coventry, U.K.

Keywords:

Adaptive Estimation, Errors-in-varibles, Recursive Identification, System Identification.

Abstract:

A recursive (adaptive) algorithm for the identification of dynamical linear errors-in-variables systems in the

case of coloured output noise is developed. The input measurement noise variance as well as the auto-

covariance elements of the coloured output noise sequence are determined via two separate Newton algo-

rithms. The model parameter estimates are obtained by a recursive bias-compensating instrumental variables

algorithm with past noisy inputs as instruments, thus allowing the compensation for the explicitly computed

bias at each discrete-time instance. The performance of the developed algorithm is demonstrated via simula-

tion.

1 INTRODUCTION

Linear time-invariant (LTI) errors-in-variables (EIV)

models are characterised by an exact linear relation-

ship between input and output signals where both

quantities are assumed to be corrupted by additive

measurement noise (S¨oderstr¨om, 2007b). An EIV

model representation can be advantageous, if the aim

is to gain a better understanding of the underlying

process rather than prediction. One interesting ap-

proach for the identification of dynamical systems

within this framework is the so-called Frisch scheme

(Beghelli et al., 1990), which yields estimates of the

model parameters as well as the measurement noise

variances. The dynamic Frisch scheme presented in

(Beghelli et al., 1990; S¨oderstr¨om, 2007a) assumes

that the additive disturbances on the system input and

output are white. Such an assumption, however, can

be rather restrictive since the output noise often not

solely consists of measurement uncertainties, but also

aims to account for process disturbances, which are

usually correlated in time. In order to overcome this

shortcoming, the Frisch scheme has recently been ex-

tended to the coloured output noise case (S¨oderstr¨om,

2008). This paper develops a recursive (adaptive) for-

mulation of the algorithm developed in (S¨oderstr¨om,

2008), which allows the estimates to be calculated

online as new data arrives. Recursive algorithms for

the white noise case have been considered in (Linden

et al., 2008; Linden et al., 2007).

The paper is organised as follows. Section 2

presents the EIV identification problem and intro-

duces some notational conventions. Section 3 reviews

the offline Frisch scheme procedure for the white

noise as well as the coloured noise case. Section 4

develops the recursive algorithm and Section 5 pro-

vides a numerical example. Conclusions are given in

Section 6.

2 PROBLEM STATEMENT AND

NOTATION

In this paper, a discrete-time, LTI single-input single-

output (SISO) EIV system is considered, which is de-

scribed by

A(q

−1

)y

0

i

= B(q

−1

)u

0

i

, (1)

where i is an integer valued time index and

A(q

−1

) , 1+a

1

q

−1

+ ... + a

n

a

q

−n

a

, (2a)

B(q

−1

) , b

1

q

−1

+ ... + b

n

b

q

−n

b

(2b)

are polynomials in the backward shift operator q

−1

,

which is defined such that x

i

q

−1

= x

i−1

. The noise-

free input u

0

i

and output y

0

i

are unknown and only

the measurements

u

i

= u

0

i

+ ˜u

i

, (3a)

y

i

= y

0

i

+ ˜y

i

(3b)

are available, where ˜u

i

and ˜y

i

denote input and out-

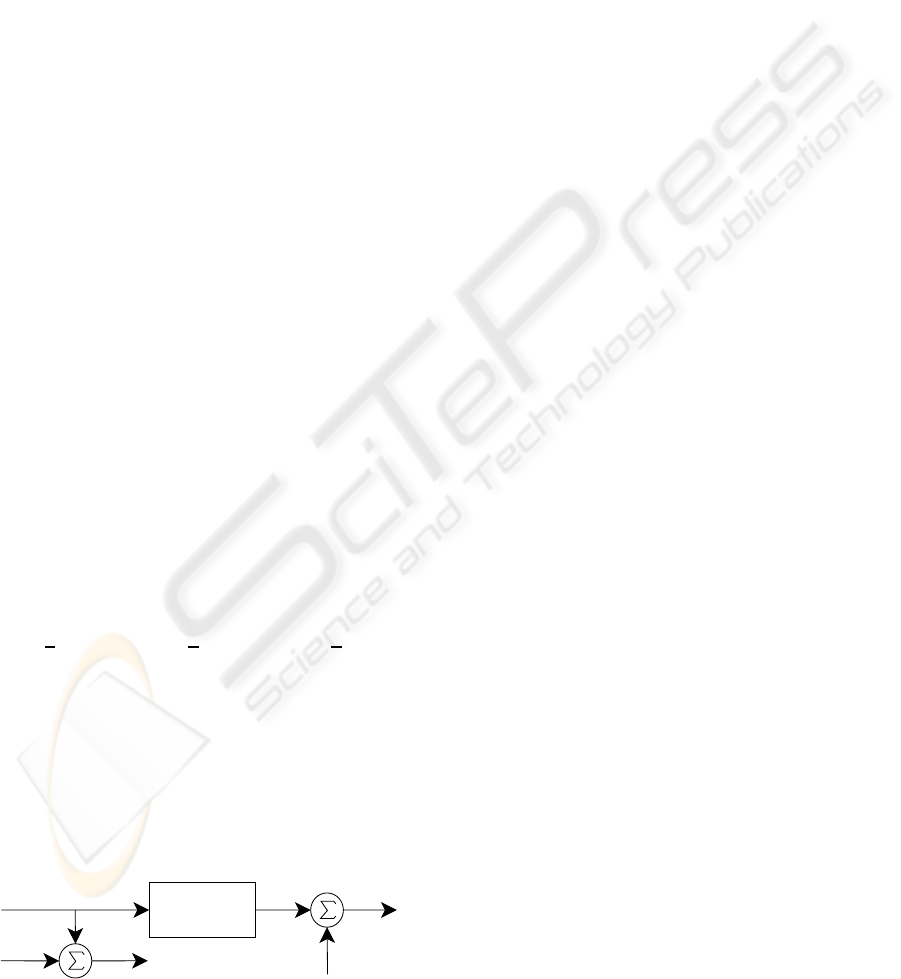

put measurement noise, respectively. Such a setup is

depicted in Figure 1. The following assumptions are

introduced:

163

G. Linden J. and J. Burnham K. (2008).

A RECURSIVE FRISCH SCHEME ALGORITHM FOR COLOURED OUTPUT NOISE.

In Proceedings of the Fifth International Conference on Informatics in Control, Automation and Robotics - SPSMC, pages 163-170

DOI: 10.5220/0001496701630170

Copyright

c

SciTePress

A1. The dynamic system (1) is asymptotically sta-

ble, i.e. A(q

−1

) has all zeros inside the unit

circle.

A2. All system modes are observable and control-

lable, i.e. A(q

−1

) and B(q

−1

) have no com-

mon factors.

A3. The polynomial degrees n

a

and n

b

are known

a priori with n

b

≤ n

a

.

A4. The true input u

0

i

is a zero-mean ergodic pro-

cess and is persistently exciting of sufficiently

high order.

A5a. The sequence ˜u

i

is a zero-mean, ergodic, white

noise process with unknown variance σ

˜u

.

A5b. The sequence ˜y

i

is a zero-mean, ergodic noise

process with unknown auto-covariance se-

quence {r

˜y

(0), r

˜y

(1), ··· }.

A6. The noise sequences ˜u

i

and ˜y

i

are mutually un-

correlated and uncorrelated with u

0

i

.

The auto-covariance elements in A5b are defined by

r

˜y

(τ) , E [ ˜y

k

˜y

k−τ

], (4)

where E[·] denotes the expected value operator.

Within this paper covariance matrices of two column

vectors v

k

and w

k

are denoted

Σ

vw

, E

v

k

w

T

k

, Σ

v

, E

v

k

v

T

k

, (5)

whilst vectors consisting of covariance elements are

denoted

ξ

vc

, E [v

k

c

k

] (6)

with c

k

being a scalar. The corresponding estimated

sample covariance elements are denoted in a similar

manner

ˆ

Σ

k

vw

,

1

k

k

∑

i=1

v

k

w

T

k

,

ˆ

Σ

k

v

,

1

k

k

∑

i=1

v

k

v

T

k

,

ˆ

ξ

k

vc

,

1

k

k

∑

i=1

v

k

c

k

.

(7)

In addition, the parameter vectors are formed by

θ ,

a

T

b

T

T

=

a

1

... a

n

a

b

1

... b

n

b

T

,

(8a)

¯

θ ,

¯a

T

b

T

T

=

1 θ

T

T

, (8b)

u

0

i

y

0

i

y

i

˜y

i

˜u

i

u

i

system

Figure 1: Errors-in-variables setup.

which gives an alternative description of (1)-(3) by

¯

ϕ

T

0

i

¯

θ = 0, (9a)

¯

ϕ

i

=

¯

ϕ

0

i

+

˜

¯

ϕ

i

, (9b)

where the regression vector is given by

ϕ

i

,

ϕ

T

y

i

ϕ

T

u

i

T

(10)

, [

−y

i−1

... −y

i−n

a

u

i−1

... u

i−n

b

]

T

,

¯

ϕ

i

,

¯

ϕ

T

y

i

ϕ

T

u

i

T

, [−y

i

ϕ

T

i

]

T

. (11)

The noise-free regression vectors ϕ

0

i

,

¯

ϕ

0

i

and the vec-

tors containing the noise contributions

˜

ϕ

i

,

˜

¯

ϕ

i

are de-

fined in a similar manner. The identification problem

is now given by:

Problem 1. Given k samples of noisy input-output

data {u

1

, y

1

, ..., u

k

, y

k

}, determine an estimate of the

augmented parameter vector

ϑ ,

a

1

... a

n

a

b

1

... b

n

b

σ

˜u

r

˜y

(0) ·· · r

˜y

(n

a

)

T

. (12)

Throughoutthis paper, the convention is made that

estimated quantities are marked by a ˆ whilst time de-

pendent quantities have a sub- or superscript k, e.g.

ˆ

Σ

k

ϕ

for a sample covariance matrix corresponding to

Σ

ϕ

.

3 FRISCH SCHEME

3.1 White Noise Case

If the least squares (LS) estimator is directly applied

to estimate the parameters of the EIV system (1)-(3),

the estimates will generally be biased (S¨oderstr¨om,

2007a). However, if the statistical nature of the noise

sequences is known, it is possible to compensate for

the bias. The Frisch scheme belongs to the class of

such bias-compensating LS techniques. The compen-

sated normal equations are given by

ˆ

Σ

k

ϕ

−

ˆ

Σ

k

˜

ϕ

ˆ

θ

k

=

ˆ

ξ

k

ϕy

, (13)

where

ˆ

Σ

k

ϕ

and

ˆ

ξ

k

ϕy

are defined by (7). In the case of

white noise sequences the compensating matrix

ˆ

Σ

k

˜

ϕ

is

diagonal and given by

ˆr

k

˜y

(0)I

n

a

0

0

ˆ

σ

k

˜u

I

n

b

, (14)

where I

n

denotes the identity matrix of dimension n.

Within the Frisch scheme, the variances

ˆ

σ

k

˜u

, ˆr

k

˜y

(0) of

input and output measurement noise, respectively, are

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

164

determined such that the extended compensated nor-

mal equations equate to zero

0 =

ˆ

Σ

k

¯

ϕ

−

ˆ

Σ

k

˜

¯

ϕ

ˆ

¯

θ

k

(15)

=

"

ˆ

Σ

k

¯

ϕ

y

ˆ

Σ

k

¯

ϕ

y

ϕ

u

ˆ

Σ

k

ϕ

u

¯

ϕ

y

ˆ

Σ

k

ϕ

u

#

−

ˆr

k

˜y

(0)I

n

a

+1

0

0

ˆ

σ

k

˜u

I

n

b

!

ˆ

¯

θ

k

,

i.e. such that

ˆ

Σ

k

¯

ϕ

−

ˆ

Σ

k

˜

¯

ϕ

is singular. By utilising the

Schur complement, the input noise variance can be

expressed as a nonlinear function of the output noise

variance and vice versa (Beghelli et al., 1990)

ˆr

k

˜y

(0) = λ

min

ˆ

Σ

k

¯

ϕ

y

−

ˆ

Σ

k

¯

ϕ

y

ϕ

u

h

ˆ

Σ

k

ϕ

u

− σ

˜u

I

n

b

i

−1

ˆ

Σ

k

ϕ

u

¯

ϕ

y

,

(16a)

ˆ

σ

k

˜u

= λ

min

ˆ

Σ

k

ϕ

u

−

ˆ

Σ

k

ϕ

u

¯

ϕ

y

h

ˆ

Σ

k

¯

ϕ

y

− r

˜y

(0)I

n

a

+1

i

−1

ˆ

Σ

k

¯

ϕ

y

ϕ

u

,

(16b)

where λ

min

denotes the minimum eigenvalue operator.

Equation (16) together with (15) defines a whole set

of possible solutions depending on the choice of σ

˜u

or r

˜y

(0), respectively. In order to uniquely solve the

identification problem, another equation is required.

Several choices are discussed in (Hong et al., 2007).

3.2 Coloured Noise Case

Now assume that ˜y

k

is no longer white, i.e. it is corre-

lated or coloured. For this case, the matrices

ˆ

Σ

k

¯

ϕ

and

ˆ

Σ

k

˜

¯

ϕ

in (15) can be expressed in block form as

ˆ

Σ

k

¯

ϕ

=

× × ×

−

ˆ

ξ

k

ϕ

y

y

ˆ

Σ

k

ϕ

y

ˆ

Σ

k

ϕ

y

ϕ

u

−

ˆ

ξ

k

ϕ

u

y

ˆ

Σ

k

ϕ

u

ϕ

y

ˆ

Σ

k

ϕ

u

, (17a)

ˆ

Σ

k

˜

¯

ϕ

=

× × ×

−

ˆ

ξ

k

˜

ϕ

y

˜y

ˆ

Σ

k

˜

ϕ

y

0

0 0

ˆ

σ

˜u

I

k

n

b

, (17b)

where the first row consists of arbitrary entries × and

ˆ

Σ

k

˜

ϕ

y

=

ˆr

k

˜y

(0) ·· · ˆr

k

˜y

(n

a

− 1)

.

.

.

.

.

.

.

.

.

ˆr

k

˜y

(n

a

− 1) ··· ˆr

k

˜y

(0)

(18)

is a dense matrix, whilst the remaining entries in (17)

are in accordance with (7). Consequently, the n

a

+ n

b

compensated normal equations in the case of corre-

lated output noise are given by

"

ˆ

Σ

k

ϕ

y

ˆ

Σ

k

ϕ

y

ϕ

u

ˆ

Σ

k

ϕ

u

ϕ

y

ˆ

Σ

k

ϕ

u

#

−

"

ˆ

Σ

k

˜

ϕ

y

0

0

ˆ

σ

k

˜u

I

n

b

#!

ˆ

θ

k

=

"

ˆ

ξ

k

ϕ

y

y

−

ˆ

ξ

k

˜

ϕ

y

˜y

ˆ

ξ

k

ϕ

u

y

#

.

(19)

Now consider the Frisch equation (16b) which be-

comes

ˆ

σ

k

˜u

= λ

min

(B

k

), (20)

with

B

k

,

ˆ

Σ

k

ϕ

u

−

ˆ

Σ

k

ϕ

u

¯

ϕ

y

h

ˆ

Σ

k

¯

ϕ

y

−

ˆ

Σ

k

˜

ϕ

y

i

−1

ˆ

Σ

k

¯

ϕ

y

ϕ

u

(21)

and it remains to specify n

a

+ 1 equations for the de-

termination the auto-covariance elements

ˆ

ρ

k

y

,

ˆr

k

˜y

(0) ˆr

k

˜y

(1) ·· · ˆr

k

˜y

(n

a

)

T

. (22)

In (S¨oderstr¨om, 2008) several possibilities to obtain

the remaining equations are discussed. It is shown

that a covariance-matching criterion, as used in (Di-

versi et al., 2003), as well as correlating the residuals

with past outputs, which correspondsto an instrumen-

tal variable (IV) -like approach with outputs as instru-

ments, cannot be successful since it always leads to

more unknowns than equations. However, by corre-

lating the residuals, denoted ε

k

, with past inputs, the

remaining equations are obtained for the asymptotic

case via

E

¯

ζ

k

ε

k

= 0, (23)

where the instruments are given by

¯

ζ

k

=

u

k−n

b

−1

·· · u

k−n

b

−l

T

(24)

and the residuals are obtained via

ε

k

= A(q

−1

)y

k

− B(q

−1

)u

k

= y

k

− ϕ

T

k

θ. (25)

This yields

ξ

¯

ζy

− Σ

¯

ζϕ

θ = 0, (26)

which can be expressed in block form, and using sam-

ple statistics, as

h

ˆ

Σ

k

¯

ζϕ

y

ˆ

Σ

k

¯

ζϕ

u

i

ˆ

θ

k

=

ˆ

ξ

k

¯

ζy

, (27)

where the length l of the instrument vector

¯

ζ

k

must

satisfy l ≥ n

a

+ 1 in order to obtain at least n

a

+ 1

additional equations for the determination of

ˆ

ρ

k

y

.

In (S¨oderstr¨om, 2008), two algorithms have been

proposed to solve the resulting (nonlinear) estima-

tion problem. Here, the two step algorithm, which

makes use of the separable LS technique is consid-

ered. Whilst in the white noise case the estimate of

θ is obtained from the compensated normal equations

after the noise variances have been determined, this

approach is conceptually different as outlined in the

remainder of this Section.

A RECURSIVE FRISCH SCHEME ALGORITHM FOR COLOURED OUTPUT NOISE

165

3.2.1 Step 1

Note that

ˆ

ρ

k

y

only appears in the first n

a

equations of

(19) and by combining the last n

b

equations of the LS

normal equations (19) with the n

a

+ 1 IV equations

(27), one can express

"

ˆ

Σ

k

ϕ

u

ϕ

y

ˆ

Σ

k

ϕ

u

−

ˆ

σ

k

˜u

I

n

b

ˆ

Σ

k

¯

ζϕ

y

ˆ

Σ

k

¯

ζϕ

u

#

ˆ

θ

k

=

"

ˆ

ξ

k

ϕ

u

y

ˆ

ξ

k

¯

ζy

#

. (28)

which constitute n

a

+ n

b

+ 1 equations in n

a

+ n

b

+ 1

unknowns (

ˆ

θ and

ˆ

σ

k

˜u

). Equation (28) is an overdeter-

mined system of normal equations with its first part

obtained from the bias compensated LS and the sec-

ond part given by the IV estimator, which uses de-

layed inputs. Moreover, it is nonlinear due to the mul-

tiplication of

ˆ

θ

k

with

ˆ

σ

k

˜u

.

In order to estimate θ and σ

˜u

, (28) can be re-

expressed as

ˆ

Σ

k

¯

δϕ

−

ˆ

σ

k

˜u

¯

J

ˆ

θ

k

=

ˆ

ξ

k

¯

δy

, (29)

where

ˆ

Σ

k

¯

δϕ

and

ˆ

ξ

k

¯

δy

are defined by (7) with

¯

δ

i

,

ϕ

T

u

i

¯

ζ

T

i

T

=

u

i−1

... u

i−n

b

u

i−n

b

−1

... u

i−n

b

−l

T

,

(30)

whilst

¯

J is given by

¯

J ,

0 I

n

b

0 0

. (31)

Note that (29) can be interpreted as a bias-

compensated IV approach, where the instrument vec-

tor

¯

δ

i

is constructed from past measured inputs. Intro-

ducing for convenience

ˆ

G

k

,

ˆ

Σ

k

¯

δϕ

−

ˆ

σ

k

˜u

¯

J, (32)

the estimates for σ

k

˜u

and θ

k

are obtained by minimis-

ing the (nonlinear) LS costfunction

min

ˆ

θ

k

,

ˆ

σ

k

˜u

ˆ

G

k

ˆ

θ

k

−

ˆ

ξ

k

¯

δy

2

(33)

which is minimised w.r.t. σ

k

˜u

and θ

k

. If

ˆ

σ

k

˜u

is assumed

to be fixed, an explicit expression for

ˆ

θ

k

is given by

the well-known LS solution

ˆ

θ

k

=

ˆ

G

†

k

ˆ

ξ

k

¯

δy

, (34)

where

ˆ

G

†

k

, (

ˆ

G

T

k

ˆ

G

k

)

−1

ˆ

G

T

k

denotes the Moore-Penrose

pseudo inverse. Using the separable LS approach

(Ljung, 1999, p. 335), the problem is reduced to an

optimisation in one variable only by substituting (34)

in (33). Consequently,

ˆ

σ

k

˜u

can be obtained via

ˆ

σ

k

˜u

= argmin

ˆ

σ

k

˜u

V

k

(35)

with

V

k

=

ˆ

G

k

ˆ

G

†

k

ˆ

ξ

k

¯

δy

−

ˆ

ξ

k

¯

δy

2

=

h

ˆ

ξ

k

¯

δy

i

T

ˆ

ξ

k

¯

δy

−

h

ˆ

ξ

k

¯

δy

i

T

ˆ

G

k

ˆ

G

T

k

ˆ

G

k

−1

ˆ

G

T

k

ˆ

ξ

k

¯

δy

. (36)

Once

ˆ

σ

k

˜u

is obtained,

ˆ

θ

k

is given by (34). Since the

solution of (35) should satisfy V

k

= 0, the value of

V

k

indicates whether the optimisation algorithm has

converged to a global or local minimum (S¨oderstr¨om,

2008).

3.2.2 Step 2

In order to determine the estimates for the auto-

correlation sequence

ˆ

ρ

k

y

the remaining n

a

normal

equations

h

ˆ

Σ

k

ϕ

y

−

ˆ

Σ

k

˜

ϕ

y

ˆ

Σ

k

ϕ

y

ϕ

u

i

ˆ

θ

k

=

ˆ

ξ

k

ϕ

y

y

−

ˆ

ξ

k

˜

ϕ

y

˜y

(37)

together with the Frisch equation (20) are considered.

Equation (37) can be expressed as

ˆ

Σ

k

˜

ϕ

y

ˆa

k

−

ˆ

ξ

k

˜

ϕ

y

˜y

=

h

ˆ

Σ

k

ϕ

y

ˆ

Σ

k

ϕ

y

ϕ

u

i

ˆ

θ

k

−

ˆ

ξ

k

ϕ

y

y

, (38)

where only the left hand side depends on

ˆ

ρ

k

y

. In addi-

tion, (38) is affine in

ˆ

ρ

k

y

, hence it can be re-expressed

as

H

k

ˆ

ρ

k

y

= h

k

, (39)

where H

k

is a n

a

× n

a

+ 1 matrix built up from ele-

ments of

ˆ

¯a

k

and h

k

is a vector of length n

a

given by

the right hand side of (38). This is a system of equa-

tions with more unknowns than equations, but the set

of all possible solutions can be formalised as

ρ

k

y

= α

k

N(H

k

) + H

†

k

h

k

, (40)

where N(·) denotes the nullspace and α

k

is a scalar

factor. It is necessary to distinguish between the input

measurement noise variance obtained by (35) in step

1, and the quantity which would be obtained by the

Frisch equation (20). Therefore, introduce

ˆ

ς

k

, λ

min

(B

k

(α

k

)), (41)

where the matrix B

k

is now a function of α

k

. Using

(41) it is possible to search for that α

k

which is in best

agreement with the previously determined

ˆ

σ

k

˜u

, i.e.

ˆ

α

k

= arg min

α

k

||J

k

||

2

2

, (42)

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

166

where the cost function

J

k

,

ˆ

σ

k

˜u

−

ˆ

ς

k

(43)

measures the distance between the input noise vari-

ance estimate

ˆ

σ

k

˜u

determined in Step 1 and the input

noise variance estimate

ˆ

ς

k

which is obtained using

the n

a

normal equations (37) together with the Frisch

equation (41) depending on the choice of α

k

. Once

ˆ

α

k

is determined, it is substituted in (40) to obtain

ˆ

ρ

k

y

, the

searched estimate of the auto-covariance elements of

the coloured output measurement noise ˜y

k

.

4 RECURSIVE SCHEME

4.1 Step 1

4.1.1 Recursive Update of Covariance Matrices

In order to satisfy the requirements of a recursive al-

gorithm to store all data in a finite dimensional vector,

the covariance matrices are updated via

ˆ

Σ

k

¯

ϕ

=

ˆ

Σ

k−1

¯

ϕ

+ γ

k

¯

ϕ

k

¯

ϕ

T

k

−

ˆ

Σ

k−1

¯

ϕ

,

ˆ

Σ

0

¯

ϕ

= 0, (44a)

ˆ

Σ

k

¯

ζ

¯

ϕ

=

ˆ

Σ

k−1

¯

ζ

¯

ϕ

+ γ

k

¯

ζ

k

¯

ϕ

T

k

−

ˆ

Σ

k−1

¯

ζ

¯

ϕ

,

ˆ

Σ

0

¯

ζ

¯

ϕ

= 0, (44b)

where the normalising gain γ

k

is given by

γ

k

,

γ

k−1

λ+ γ

k−1

, γ

0

= 1 (45)

with 0 < λ ≤ 1 being the forgetting factor giving ex-

ponential forgetting. From (44), the block matrices

required in (28) and (37) are readily obtained.

4.1.2 Recursive Update of

ˆ

σ

k

˜u

For the determination of

ˆ

σ

k

˜u

, an iterative optimisation

procedure can be utilised to minimise (36) where it

is iterated once at each step, leading to a recursive

scheme (Ljung and S¨oderstr¨om, 1983; Ljung, 1999).

Here, an iterative Newton method is utilised for this

purpose, however other choices are also possible. The

Newton method given by (Ljung, 1999, p. 326) is

σ

k

˜u

= σ

k−1

˜u

−

V

′′

k

−1

V

′

k

, (46)

where V

′

k

and V

′′

k

denote the first and second order

derivative of V

k

with respect to σ

k

˜u

evaluated at σ

k−1

˜u

.

The formulas for the derivatives are given in Appen-

dices A and B, respectively.

Remark 1. In order to stabilise the algorithm, it

might be advantageous to restrict the search for the

input measurement noise variance to the interval

0 ≤σ

˜u

≤ σ

max

˜u

, (47)

where σ

max

˜u

is the maximal admissible value for σ

˜u

,

which can be computed from the data as discussed

in (Beghelli et al., 1990). Alternatively, a positive

constant can be chosen for the maximum admissible

value, if such a-priori knowledge is available.

4.1.3 Recursive Update of

ˆ

θ

k

In order to obtain a recursive expression for

ˆ

θ

k

, an ap-

proach is adopted here, similar to that in (Ding et al.,

2006), where the bias of the recursive LS estimate is

compensated at each time step k.

Ignoring the influence of

ˆ

σ

k

˜u

in (28), the uncom-

pensated overdetermined IV normal equations can be

expressed as

1

k

k

∑

i=1

ϕ

u

i

¯

ζ

i

ϕ

T

y

i

ϕ

T

u

i

ˆ

θ

IV

k

=

1

k

k

∑

i=1

ϕ

u

i

¯

ζ

i

y

i

, (48)

where

ˆ

θ

IV

k

denotes the uncompensated (biased) esti-

mate of θ. Since one unknown, namely

ˆ

σ

k

˜u

, has al-

ready been obtained, it is sufficient to consider n

a

+n

b

equations only

1

, by disregarding the last equation of

(48). Thus the uncompensated IV estimate is given as

ˆ

θ

IV

k

=

"

1

k

k

∑

i=1

δ

i

ϕ

T

i

#

−1

1

k

k

∑

i=1

δ

i

y

i

, (49)

where δ

i

is obtained by deleting the last entry of

¯

δ

i

. In

order to obtain an explicit expression for the bias, the

linear regression formulation

y

i

= ϕ

T

i

θ+ e

i

(50)

is substituted in (49) which gives

ˆ

θ

IV

k

=

"

1

k

k

∑

i=1

δ

i

ϕ

T

i

#

−1

1

k

k

∑

i=1

δ

i

ϕ

T

i

θ+ e

i

= θ+

"

1

k

k

∑

i=1

δ

i

ϕ

T

i

#

−1

1

k

k

∑

i=1

δ

i

e

i

(51)

By substituting e

i

= −

˜

ϕ

i

θ+ ˜y

i

it follows that

ˆ

θ

IV

k

= θ+

"

1

k

k

∑

i=1

δ

i

ϕ

T

i

#

−1

1

k

k

∑

i=1

δ

i

˜y

i

−

"

1

k

k

∑

i=1

δ

i

ϕ

T

i

#

−1

1

k

k

∑

i=1

δ

i

˜

ϕ

T

i

θ. (52)

The vector δ

i

is uncorrelated with ˜y

i

which means that

the middle part of the sum in (52) diminishes in the

1

This corresponds to a basic IV estimator where the

number of unknowns is equal to the length of the instru-

ment vector.

A RECURSIVE FRISCH SCHEME ALGORITHM FOR COLOURED OUTPUT NOISE

167

asymptotic case, whereas

lim

k→∞

1

k

k

∑

i=1

ϕ

u

i

ζ

i

˜

ϕ

T

y

i

˜

ϕ

T

u

i

T

=

0 σ

˜u

I

n

b

0 0

. (53)

Consequently, for k → ∞ (52) becomes

θ

IV

= θ− σ

˜u

Σ

−1

δϕ

Jθ, (54)

where J is obtained by deleting the last row of

¯

J in

(31). Equation (54) gives rise to the recursive bias

compensation update equation for

ˆ

θ

k

ˆ

θ

k

=

ˆ

θ

IV

k

+

ˆ

σ

k

˜u

h

ˆ

Σ

k

δϕ

i

−1

J

ˆ

θ

k−1

, (55)

where the uncompensated parameter estimate

ˆ

θ

IV

k

can

be recursively computed via a recursive IV (RIV) al-

gorithm (Ljung, 1999, p. 369) given by

ˆ

θ

IV

k

=

ˆ

θ

IV

k−1

+ L

k

y

k

− ϕ

T

k

ˆ

θ

IV

k−1

, (56a)

L

k

=

P

k−1

δ

k

1−γ

k

γ

k

+ ϕ

T

k

P

k−1

δ

k

, (56b)

P

k

=

1

1− γ

k

"

P

k−1

−

P

k−1

δ

k

ϕ

T

k

P

k−1

1−γ

k

γ

k

+ ϕ

T

k

P

k−1

δ

k

#

. (56c)

with the only difference being that P

k

is scaled such

that

h

ˆ

Σ

k

δϕ

i

−1

= P

k

. (57)

This avoids the matrix inversion in (55) by substitut-

ing (57) in (55).

4.2 Step 2

In order to solve (42) recursively, the Newton method

is applied where it is iterated once as new data arrives.

Consequently, the first and second order derivative of

the cost function J

k

in (43) are to be determined w.r.t.

α

k

, which are denoted J

′

k

and J

′′

k

, respectively. These

are given by

J

′

k

= −2

ˆ

σ

k

˜u

−

ˆ

ς

k

ˆ

ς

′

k

, (58a)

J

′′

k

=

ˆ

ς

′

k

, (58b)

where

ˆ

ς

′

k

denotes the derivative of

ˆ

ς

k

w.r.t. α

k

and

for which an approximation is derived in Appendix

C. The recursive update for

ˆ

α

k

is therefore given by

ˆ

α

k

=

ˆ

α

k−1

−

J

′′

k

−1

J

′

k

, (59)

whilst

ˆ

ρ

k

y

=

ˆ

α

k

N(H

k

) + H

†

k

h

k

. (60)

2000 4000 6000 8000 10000

0.5

1

1.5

2000 4000 6000 8000 10000

−0.9

−0.8

−0.7

2000 4000 6000 8000 10000

1

2

3

true

RFS

RIV

σ

˜u

a

1

b

1

k

Figure 2: Recursive estimates for θ and σ

˜u

using the

recursive Frisch scheme (RFS) and the biased recursive

instrumental-variables (RIV) solution of the uncompen-

sated normal equations.

5 SIMULATION

To compare the results of the recursive Frisch scheme

(RFS) with the non-recursivealgorithm, the system is

chosen similar to that of Example 2 in (S¨oderstr¨om,

2008), i.e. a LTI SISO system with n

a

= n

b

= 1, and

characterised, using (12), by

ϑ =

−0.8 2 1 1.96 1.37

T

. (61)

The values for r

˜y

(0) and r

˜y

(1) arise by generating the

output noise by the auto-regressive model

˜y

k

=

1

1− 0.7q

−1

v

k

, (62)

where v

k

is a zero-mean white process with unity vari-

ance. The system is simulated for 10, 000 samples us-

ing a zero mean, white and Gaussian distributed input

signal of unity variance. The corresponding signal-to-

noise ratio for input and output is given by 10.60dB

and 39.12dB, respectively.

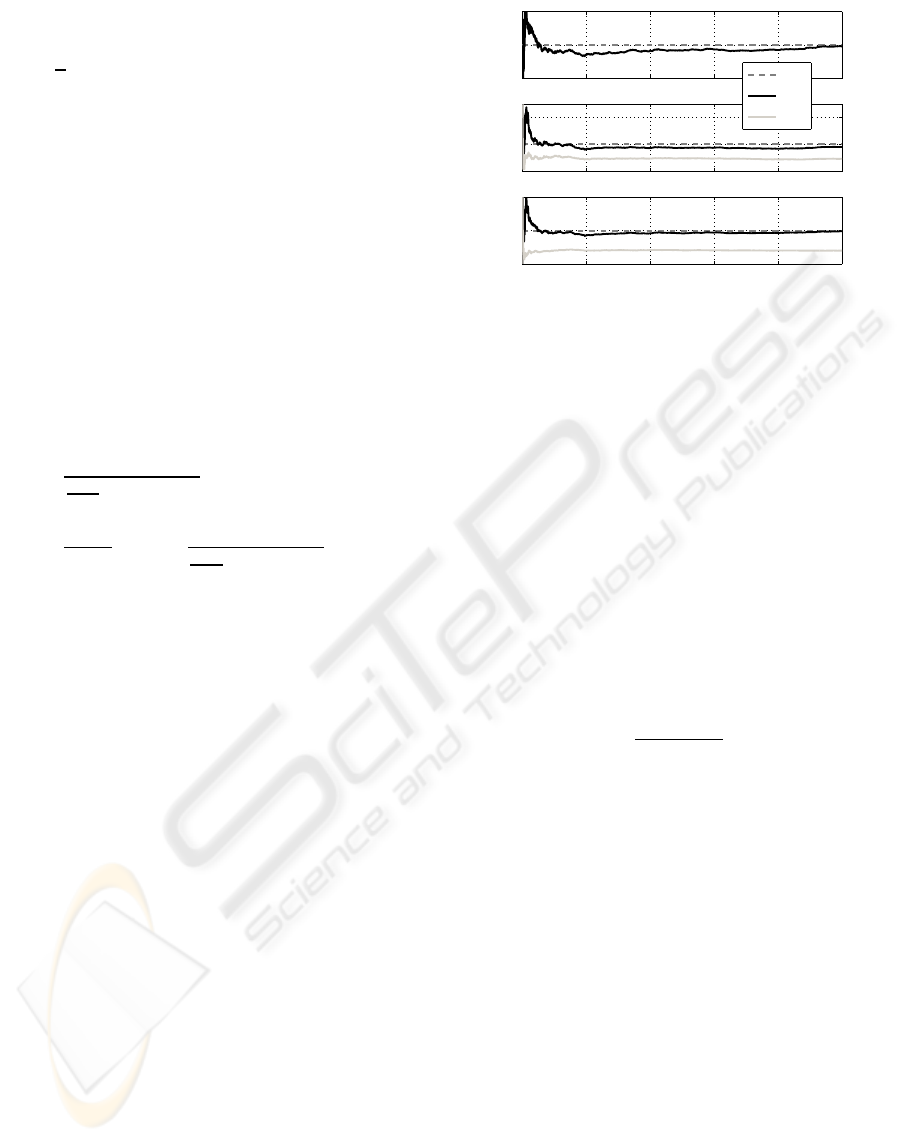

Choosing λ = 1, the results for Step 1 are shown

in Figure 2. The first subplot shows that the New-

ton method is able to recursively estimate the input

measurement noise variance σ

˜u

. The remaining two

subplots compare the RIV solution

ˆ

θ

IV

k

of the uncom-

pensated normal equations with the recursively com-

pensated Frisch scheme estimate

ˆ

θ

k

. As expected, the

RIV is biased whilst the the RFS successfully com-

pensates for this.

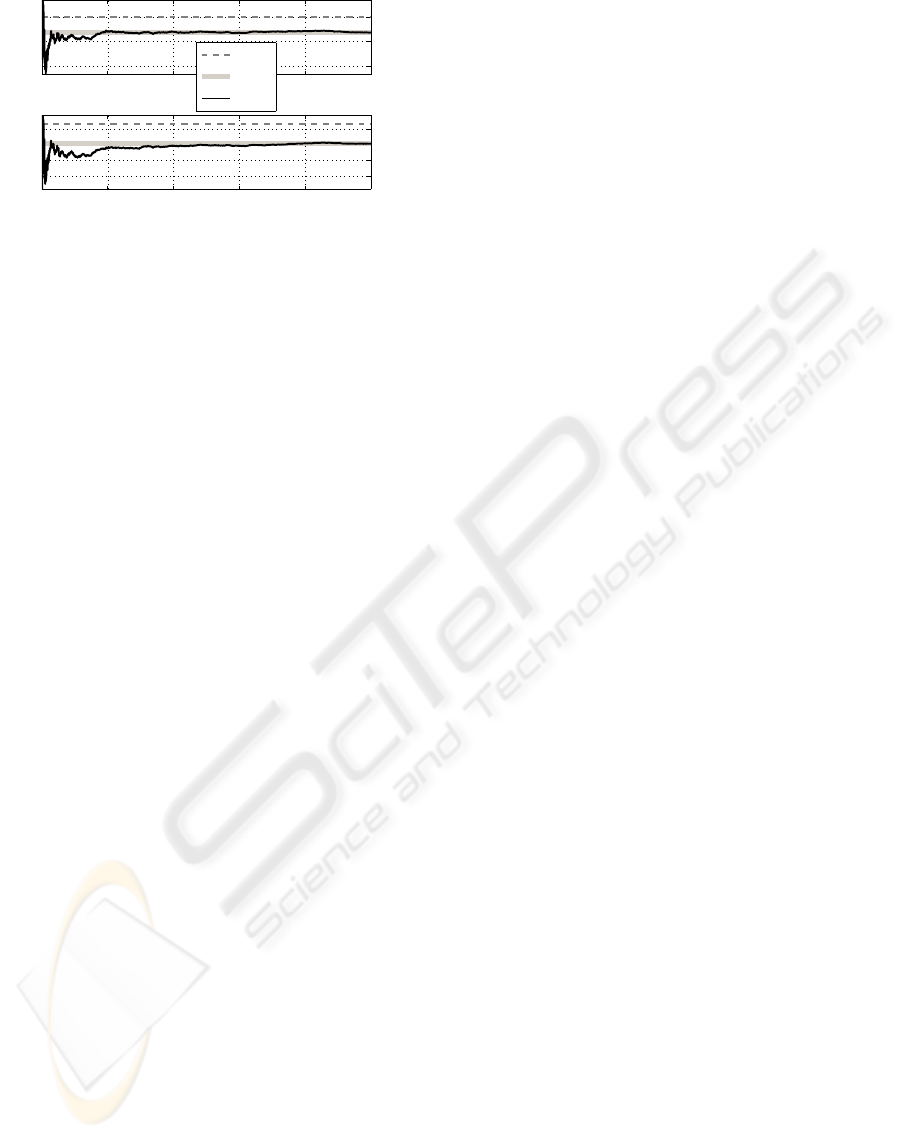

Figure 3 shows the estimates of ρ

y

obtained in

Step 2 for both the RFS as well as the off-line case.

In contrast to the results obtained in Step 1, the qual-

ity of the estimates obtained in Step 2 for

ˆ

ρ

k

y

is in-

ferior. This is in agreement with the results re-

ported in (S¨oderstr¨om, 2008), where a Monte-Carlo

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

168

2000 4000 6000 8000 10000

−2

0

2

2000 4000 6000 8000 10000

−2

−1

0

1

true

offline

RFS

r

˜y

(0)r

˜y

(1)

k

Figure 3: Recursive estimates for r

˜y

(0) and r

˜y

(1) using the

recursive Frisch scheme (RFS).

analysis shows poor performance for

ˆ

ρ

k

y

in the non-

recursivecase. The important observationto note here

is that the recursively obtained estimates of r

˜y

(0) and

r

˜y

(0) coincide with their off-line counterparts after

k = 10, 000 recursions. It is also observed that the

values of ˆr

k

˜y

(0) (the estimated variance of the output

measurement noise) occasionally exhibits a negative

sign during the first 500 recursion steps. This could

be avoided by projecting the estimates, such that

0 <

ˆ

Σ

k

˜

¯

ϕ

y

<

ˆ

Σ

k

¯

ϕ

y

−

ˆ

Σ

k

¯

ϕ

y

ϕ

u

h

ˆ

Σ

k

ϕ

u

i

−1

ˆ

Σ

k

ϕ

u

¯

ϕ

y

(63)

is satisfied (S¨oderstr¨om, 2008).

6 CONCLUSIONS

The Frisch scheme for the coloured output noise case

has been reviewed and a recursive algorithm for its

adaptiveimplementation has been developed. The pa-

rameter vector is estimated utilising a recursive bias-

compensating instrumental variables approach, where

the bias is compensated at each time step. The input

measurement noise variance and the output measure-

ment noise auto-covariance elements are obtained via

two (distinct) Newton algorithms. A simulation study

illustrates the performance of the proposed algorithm.

Further work could concern computational as-

pects of the algorithm as well as its extension to the

bilinear case.

REFERENCES

Beghelli, S., Guidorzi, R. P., and Soverini, U. (1990). The

Frisch scheme in dynamic system identification. Au-

tomatica, 26(1):171–176.

Ding, F., Chen, T., and Qiu, L. (2006). Bias compensation

based recursive least-squares identification algorithm

for MISO systems. IEEE Trans. on Circuits and Sys-

tems, 53(5):349–353.

Diversi, R., Guidorzi, R., and Soverini, U. (2003). Algo-

rithms for optimal errors-in-variables filtering. Sys-

tems & Control Letters, 48:1–13.

Hong, M., S¨oderstr¨om, T., Soverini, U., and Diversi, R.

(2007). Comparison of three Frisch methods for

errors-in-variables identification. Technical Report

2007-021, Uppsala University.

Linden, J. G., Vinsonneau, B., and Burnham, K. J. (2007).

Fast algorithms for recursive Frisch scheme system

identification. In Proc. CD-ROM IAR & ACD Int.

Conf., Grenoble, France.

Linden, J. G., Vinsonneau, B., and Burnham, K. J.

(2008). Gradient-based approaches for recursive

Frisch scheme identification. To be published at the

17th IFAC World Congress 2008.

Ljung, L. (1999). System Identification - Theory for the

user. PTR Prentice Hall Infromation and System Sci-

ences Series. Prentice Hall, New Jersey, 2nd edition.

Ljung, L. and S¨oderstr¨om, T. (1983). Theory and Practice

of Recursive Identification. M.I.T. Press, Cambridge,

MA.

S¨oderstr¨om, T. (2007a). Accuracy analysis of the Frisch

scheme for identifying errors-in-variables systems.

Automatica, 52(6):985–997.

S¨oderstr¨om, T. (2007b). Errors-in-variables methods in sys-

tem identification. Automatica, 43(6):939–958.

S¨oderstr¨om, T. (2008). Extending the Frisch scheme for

errors-in-variables identification to correlated output

noise. Int. J. of Adaptive Contr0l and Signal Proc.,

22(1):55–73.

APPENDIX

A First Order Derivative of V

k

Denoting (·)

′

the derivative w.r.t.

ˆ

σ

k

˜u

and introducing

f

k

,

ˆ

G

T

k

ˆ

ξ

k

¯

δy

, F

k

,

ˆ

G

T

k

ˆ

G

k

, (64)

it holds that

f

′

k

= −

0

ˆ

ξ

k

ϕ

u

y

, (65a)

F

′

k

=

"

0

ˆ

Σ

k

ϕ

u

ϕ

y

T

ˆ

Σ

k

ϕ

u

ϕ

y

2

ˆ

σ

k−1

˜u

I

n

b

− 2

ˆ

Σ

k

ϕ

u

#

, (65b)

F

−1

k

′

= −F

−1

k

F

′

k

F

−1

k

(65c)

and the first order derivative is given by

V

′

k

= −

f

T

k

F

−1

k

f

k

′

= − f

′

k

T

F

−1

k

f

k

− f

T

k

F

−1

k

′

f

k

− f

T

k

F

−1

k

f

′

k

. (66)

A RECURSIVE FRISCH SCHEME ALGORITHM FOR COLOURED OUTPUT NOISE

169

B Second Order Derivative of V

k

Utilising the product rule, the second order derivative

is given by

V

′′

k

= − f

′

k

T

F

−1

k

′

f

k

− f

′

k

T

F

−1

k

f

′

k

− f

′

k

T

F

−1

k

′

f

k

− f

T

k

F

−1

k

′′

f

k

− f

T

k

F

−1

k

′

f

′

k

− f

′

k

T

F

−1

k

f

′

k

− f

T

k

F

−1

k

′

f

′

k

= −2 f

′

k

T

F

−1

k

′

f

k

− 2 f

′

k

T

F

−1

k

f

′

k

− f

T

k

F

−1

k

′′

f

k

− 2 f

T

k

F

−1

k

′

f

′

k

(67)

with

F

−1

k

′′

= − F

−1

k

′

F

′

k

F

−1

k

− F

−1

k

F

′′

k

F

−1

k

− F

−1

k

F

′

k

F

−1

k

′

,

(68a)

F

′′

k

=

0 0

0 2I

n

b

. (68b)

C Derivative of

ˆ

ς

k

The idea is to linearise the Frisch equation (20) us-

ing perturbation theory, in order to approximate the

derivative of

ˆ

ς

k

w.r.t. α

k

. The derivation here is con-

ceptually similar to that given in Appendix II.B of

(S¨oderstr¨om, 2007a), but with the linearisation car-

ried out around

ˆ

ϑ

k−1

rather than the ‘true’ parameters.

Assume that at time instance k − 1,

ˆ

ϑ

k−1

satisfies

the extended compensated normal equations

"

ˆ

Σ

k−1

¯

ϕ

y

−

ˆ

Σ

k−1

˜

ϕ

y

ˆ

Σ

k−1

¯

ϕ

y

ϕ

u

ˆ

Σ

k−1

ϕ

u

¯

ϕ

y

ˆ

Σ

k−1

ϕ

u

−

ˆ

σ

k−1

˜u

I

n

b

#

ˆ

¯a

k−1

ˆ

b

k−1

= 0 (69)

which are rewritten for ease of notation as

A − B C

C

T

D −

ˆ

σ

k−1

˜u

I

a

b

= 0. (70)

Similarly, introduce the notation at time instance k as

A − B C

C

T

D −

ˆ

σ

k

˜u

I

a

b

= 0. (71)

Let

ˆ

σ

k

˜u

denote the estimate obtained via (35). Alterna-

tively, if

ˆ

Σ

k

˜

ϕ

y

is known, the input measurement noise

could be obtained using (20) and denote this quantity

ς

k

. Using perturbation theory for eigenvalues yields

ς

k

= λ

min

{B

k

(α

k

)} = λ

min

{B

k−1

(α

k−1

) + ∆B

k

}

≈ ς

k−1

+

b

T

∆B

k

b

b

T

b

, (72)

where the perturbation is given by (cf. (21))

∆B

k

= B

k

(α

k

) − B

k−1

(α

k−1

)

= D − C

T

[A − B ]

−1

C − D + C

T

[A − B]

−1

C

= D − C

T

F

−1

C − D + C

T

F

−1

C (73)

with F , [A − B ] and F , [A − B]. Substituting (73)

in (72) yields

ς

k

− ς

k−1

≈

b

T

b

T

b

D − D + C

T

F

−1

C − C

T

F

−1

C

b

=

b

T

b

T

b

(D − D)b +

b

T

Xb

b

T

b

, (74)

where X can be expressed as

X = C

T

F

−1

C − C

T

F

−1

C

+ C

T

F

−1

C − C

T

F

−1

C

+ C

T

F

−1

C − C

T

F

−1

C

=

C

T

− C

T

F

−1

C + C

T

F

−1

(C − C )

− C

T

F

−1

(F − F )F

−1

C (75)

and by combining (74) and (75), it holds that

b

T

b

ς

k

− ς

k−1

≈ b

T

(D − D)b

+ b

T

C

T

− C

T

F

−1

C b

+ b

T

C

T

F

−1

(C − C )b

− b

T

C

T

F

−1

(F − F )F

−1

C b.

(76)

Now, the first row of (70) gives

a = −F

−1

Cb (77)

and by assuming that F

−1

C b ≈ −a, (76) finally sim-

plifies to

b

T

b

ς

k

− ς

k−1

≈ b

T

(D − D)b

− b

T

C

T

− C

T

a

− a

T

(C − C )b

− a

T

(F − F )a, (78)

where F is the only element depending on α

k

. There-

fore,

dς

k

dα

k

≈

d

dα

k

a

T

(A − B )a

b

T

b

= −

a

T

dB

dα

k

a

b

T

b

(79)

or equivalently

d

dα

k

λ

min

{B

k

(α

k

)} ≈ −

ˆ

¯a

T

k−1

ˆ

b

T

k−1

ˆ

b

k−1

d

dα

k

ˆ

Σ

k

˜

¯

ϕ

y

ˆ

¯a

k−1

.

(80)

Since Σ

k

˜

¯

ϕ

y

consists of the quantities ˆr

k

˜y

(0), ..., ˆr

k

˜y

(n

a

), it

remains to determine

d

dα

k

ˆ

ρ

k

y

=

h

d

dα

k

ˆr

k

˜y

(0) ·· ·

d

dα

k

ˆr

k

˜y

(n

a

)

i

T

(81)

which, due to (40), is given by

d

dα

k

ˆ

ρ

k

y

= N(H

k

). (82)

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

170