FACIAL EXPRESSION RECOGNITION BASED ON FUZZY LOGIC

M. Usman Akram, Irfan Zafar, Wasim Siddique Khan and Zohaib Mushtaq

Department of Computer Engineering, EME College, NUST, Rawalpindi, Pakistan

Keywords:

Human Computer Interaction (HCI), Mamdani-type, Region Extraction, Feature Extraction.

Abstract:

We present a novel scheme for facial expression recognition from facial features using a Mamdani-type fuzzy system. Facial expression recognition is of prime importance in human-computer interaction (HCI) systems. HCI has gained importance in web information systems and e-commerce, and has the potential to reshape the IT landscape towards value-driven perspectives. We present a novel algorithm for facial region extraction from a static image. The extracted facial regions are used for facial feature extraction, and the facial features are fed to a Mamdani-type fuzzy rule-based system for facial expression recognition. The linguistic models employed for the facial features provide additional insight into how the rules combine to form the final expression output. Another distinct feature of our system is the membership function model of the expression output, which is based on several psychological studies and surveys. The model is further validated by its high expression recognition rates.

1 INTRODUCTION

Facial expressions play a vital role in social communication. Computers are increasingly becoming part of the human social circle through human-computer interaction (HCI). “Human-Computer Interaction (or Human Factors) in MIS is concerned with the ways humans interact with information, technologies, and tasks, especially in business, managerial, organizational, and cultural contexts” (Dennis Galletta).

HCI is a bidirectional process. Until now, interaction has been one-sided, i.e., from humans to computers. To obtain the maximum benefit from this link, communication is also required in the other direction: computers need to understand human emotions in order to respond and react correctly to human actions. The human face is the richest source of human emotions, so facial expression recognition is the key to understanding them. Ekman has provided evidence for the universality of facial expressions (Ekman and Friesen, 1978), (Ekman, 1994) and has also proposed six basic human emotions (Ekman, 1993).

Facial expression recognition systems usually extract facial expression parameters from a static face image; this process is called feature extraction. The extracted features are then fed to a classifier for facial expression recognition. In this paper we present a complete facial expression recognition system that takes a static image as input and gives the expression as output. The core of our system is a Mamdani-type fuzzy rule-based system that recognizes facial expressions from facial features. The major advantages of a fuzzy system are its flexibility and fault tolerance. Fuzzy logic can be used to form linguistic models (Koo, 1996) and has a solid qualitative basis, which makes fuzzy systems easier to model. Fuzzy systems have been used in many classification and control problems (Klir and Yuan, 1995), including facial expression recognition (Ushida and Yamaguchi, 1993).

We present a Mamdani-type fuzzy system for facial expression recognition. The system recognizes the six basic facial expressions, namely fear, surprise, joy, sadness, disgust and anger. Normal (neutral) is an additional expression that is often categorized as one of the basic facial expressions, so our system has seven output expressions in total.

2 FACIAL REGION EXTRACTION AND FEATURE EXTRACTION MODULES

We have employed top-down approach for facial ex-

pression recognition. Firstly, the input image is pre-

383

Usman Akram M., Zafar I., Siddique Khan W. and Mushtaq Z. (2008).

FACIAL EXPRESSION RECOGNITION BASED ON FUZZY LOGIC.

In Proceedings of the Third International Conference on Computer Vision Theory and Applications, pages 383-388

DOI: 10.5220/0001089603830388

Copyright

c

SciTePress

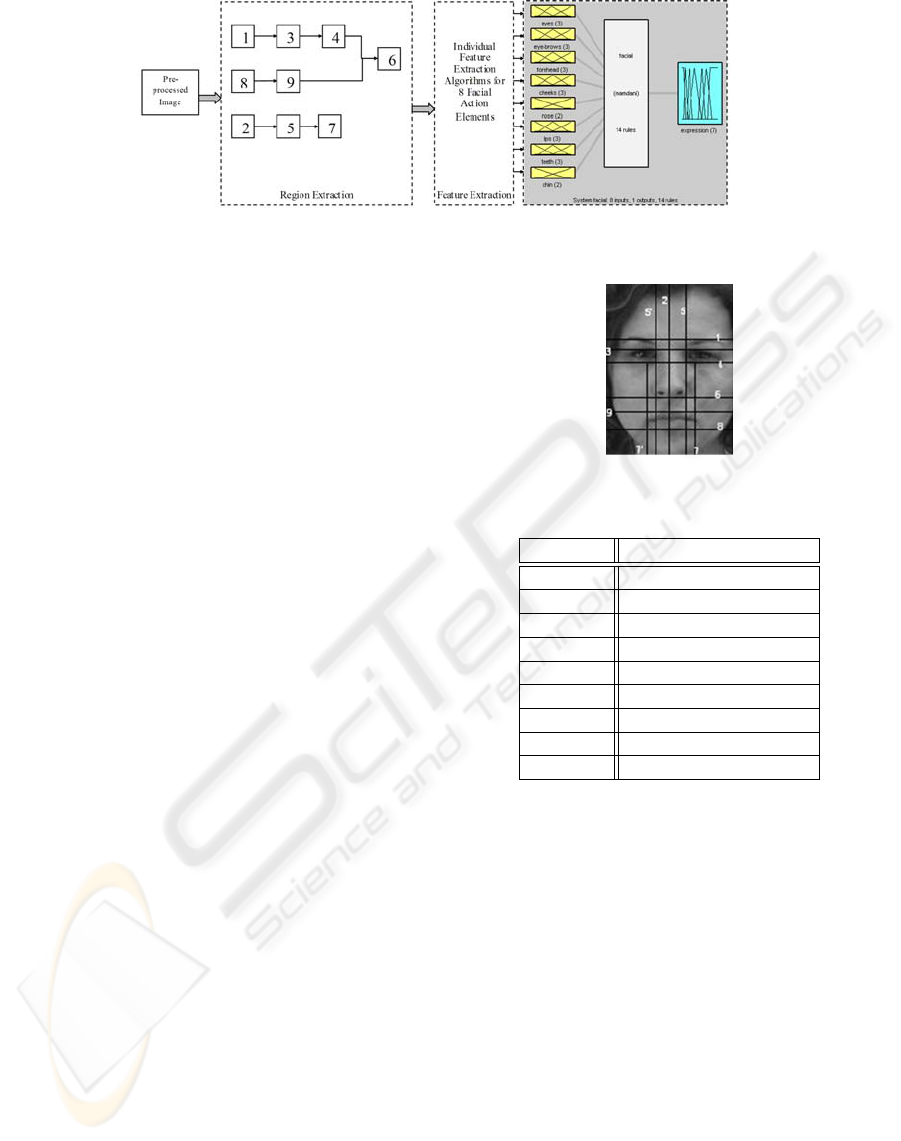

Figure 1: Overall System Block Diagram.

processed for face extraction from background. This

image is then fed to the Region Extraction Module for

extraction of regions for eight basic facial action ele-

ments (Shafiq, 2006). Feature Extraction Module fur-

ther processes these 8 extracted regions for finding the

facial action values associated with every region. Fa-

cial action values (scaled from 0 to 10) are fed into a

Mamdani-type Fuzzy System for ultimate expression

output. Our system is divided into four basic modules

as shown in fig. 1.

2.1 Pre-Processing Module

The Pre-Processing Module (PPM) extracts the face from the background. The extracted face image is then scaled according to the system specifications.

2.2 Region Extraction Module

The extracted face is then fed to the Region Extraction Module (REM). We have defined eight basic facial action elements, namely eyes, eyebrows, nose, forehead, cheeks, lips, teeth and chin, and the REM extracts a region for each of them. We have defined nine basic image lines for region extraction. These lines and their semantic significance are listed in Table 1 (see fig. 2).

The forehead region is marked above the eyebrows. Line 1 represents ‘Eyebrows Top’; its position is determined by vertical flow traversal of the image from top to bottom. As the face is traversed below line 1, the next important line to be marked is line 3, which signifies ‘Eyes Top’. Line 4 lies further below line 3 and represents ‘Eyes Bottom’. Lines 1, 3 and 4 help to mark the Eye, Eyebrows and Forehead regions, as shown in fig. 3 (A, B, C).

Vertical flow traversal from the bottom of the extracted face image first detects line 8 (‘Lips Bottom’). Line 9 is marked above line 8 and represents ‘Lips Top’. These two lines are important in marking the Lips, Teeth and Chin regions (see fig. 3 (E, F)). Line 2 represents ‘Face Middle’. Horizontal traversal first detects line 5 and then line 7, which represent ‘Eyes Inner Corner’ and ‘Lips Outer Corner’ respectively. Line 6 is marked by vertical traversal between line 9 and line 4. These lines mark the Nose and Cheeks regions (see fig. 3 (D, G)).

Figure 2: Image Lines for Region Extraction.

Table 1: Lines for Region Extraction.

| Line No. | Semantic Significance |
|---|---|
| 1 | Eyebrows Top |
| 2 | Face Middle |
| 3 | Eyes Top |
| 4 | Eyes Bottom |
| 5 | Eyes Inner Corner |
| 6 | Face Middle |
| 7 | Lips Outer Corner |
| 8 | Lips Bottom |
| 9 | Lips Top |

This region extraction algorithm is also shown in

fig. 1. Regions extracted for these basic facial action

elements are shown in figure 3.
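The paper does not say how the vertical flow traversal decides where a line lies. As a hedged illustration only, the sketch below substitutes a common technique, the horizontal integral projection: it sums the darkness of each row of a grayscale face image and reports rows whose darkness is a local peak above a threshold, which is where dark horizontal features such as eyebrows, eyes and lips tend to appear. The function name `dark_row_candidates`, the `ratio` threshold and the synthetic test image are assumptions, not part of the original algorithm.

```python
import numpy as np


def dark_row_candidates(gray, ratio=0.5):
    """Return indices of rows whose total darkness is a local peak.

    Hypothetical stand-in for the vertical flow traversal: `gray` is a 2-D
    array with 0 = black and 255 = white, and only peaks reaching
    `ratio` times the darkest row are kept.
    """
    darkness = (255.0 - gray.astype(float)).sum(axis=1)  # one value per row
    threshold = ratio * darkness.max()
    rows = []
    for r in range(1, len(darkness) - 1):
        # A row counts as a peak if it is at least as dark as the row above
        # and strictly darker than the row below.
        if darkness[r] >= darkness[r - 1] and darkness[r] > darkness[r + 1]:
            if darkness[r] >= threshold:
                rows.append(r)
    return rows


# Synthetic 60x40 "face": white background with three dark horizontal bands.
gray = np.full((60, 40), 255, dtype=np.uint8)
gray[[15, 20, 45], :] = 0  # stand-ins for eyebrows, eyes and lips
print(dark_row_candidates(gray))  # [15, 20, 45]
```

On a real face the candidate rows would still have to be assigned to lines 1, 3, 4, 8 and 9 using the top-down and bottom-up order described above; that assignment step is not shown.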

2.3 Feature Extraction Module

The Feature Extraction Module (FEM) uses the extracted regions to find the facial action values for all facial action elements. Specialized algorithms are designed to find these facial action values, which are scaled from 0 to 10 (Shafiq, 2006). These outputs act as crisp inputs to the fuzzy expression recognition module. The Expression Recognition Module (ERM) is explained in Section 3.

VISAPP 2008 - International Conference on Computer Vision Theory and Applications


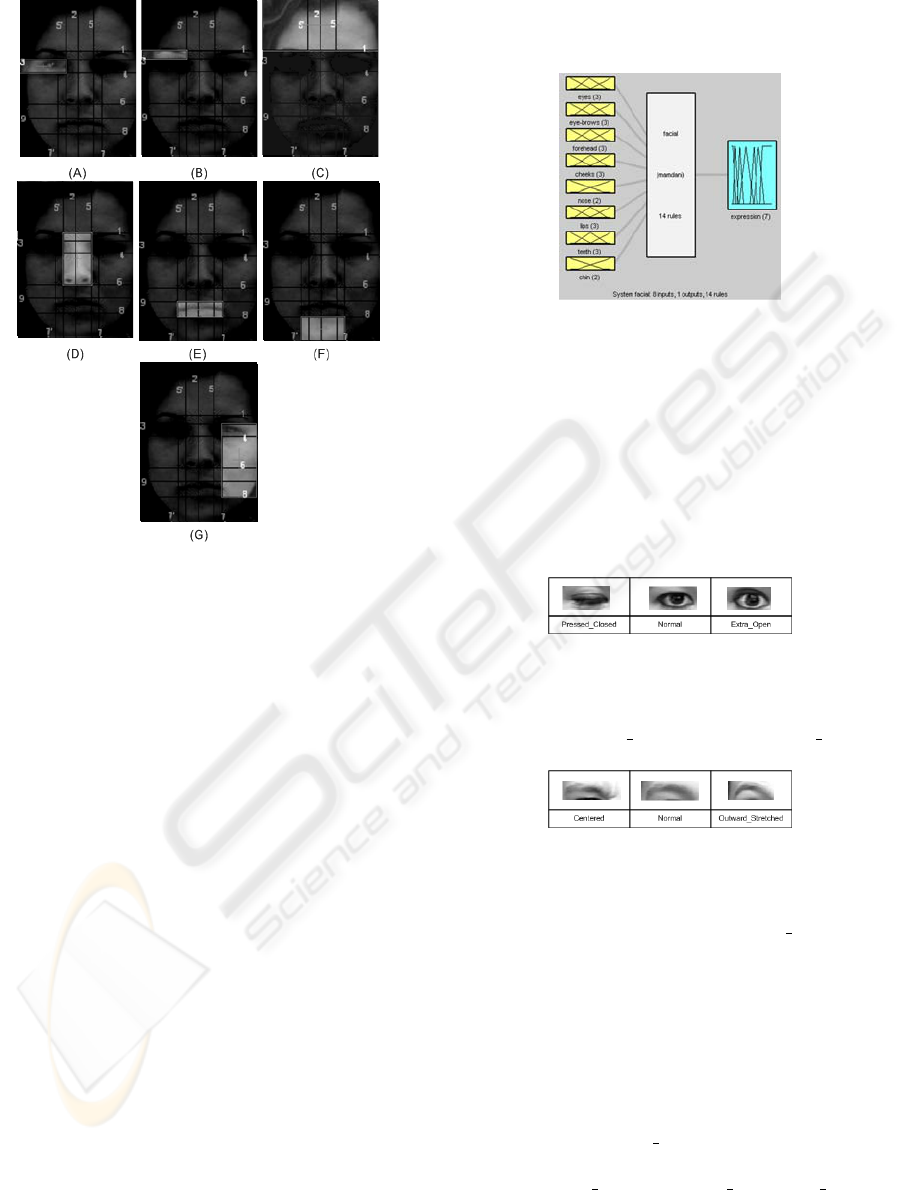

Figure 3: (A) Eye Region: Bounded by 3-4-5, (B) Eyebrows Region: Bounded by 1-3-5, (C) Forehead Region: Bounded by 1, (D) Nose Region: Bounded by 1-6-5-5’, (E) Lips Region: Bounded by 8-9-5-5’, (F) Chin Region: Bounded by 8-5-5’, (G) Cheeks Region: Bounded by 4-8-7.

3 EXPRESSION RECOGNITION MODULE

The ERM is a Mamdani-type fuzzy rule-based system. In general, the knowledge base (KB) of a rule-based fuzzy system is divided into two components: the data base (DB) and the rule base (RB).

3.1 Data-Base (DB)

The data base of a fuzzy system contains the scaling factors for the inputs and outputs. It also holds the membership functions that specify the meaning of the linguistic terms (Ralescu and Hartani, 1995).
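The paper does not give the scaling factors themselves. Assuming a simple linear min-max scaling with clipping, a data base entry that maps a raw measurement onto the 0-10 universe of an input could look like the sketch below; `scale_to_universe` and the pixel range in the example are hypothetical.

```python
def scale_to_universe(raw, raw_min, raw_max, lo=0.0, hi=10.0):
    """Linearly map raw from [raw_min, raw_max] onto [lo, hi].

    Values outside the raw range are clipped, so the result always lies
    inside the universe of discourse of the fuzzy input.
    """
    if raw_max <= raw_min:
        raise ValueError("raw_max must be greater than raw_min")
    t = (raw - raw_min) / (raw_max - raw_min)
    t = min(1.0, max(0.0, t))  # clip to [0, 1]
    return lo + t * (hi - lo)


# Hypothetical example: an eye opening of 12 px on an assumed 0-20 px range.
print(scale_to_universe(12, 0, 20))  # 6.0
print(scale_to_universe(25, 0, 20))  # 10.0 (clipped)
```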

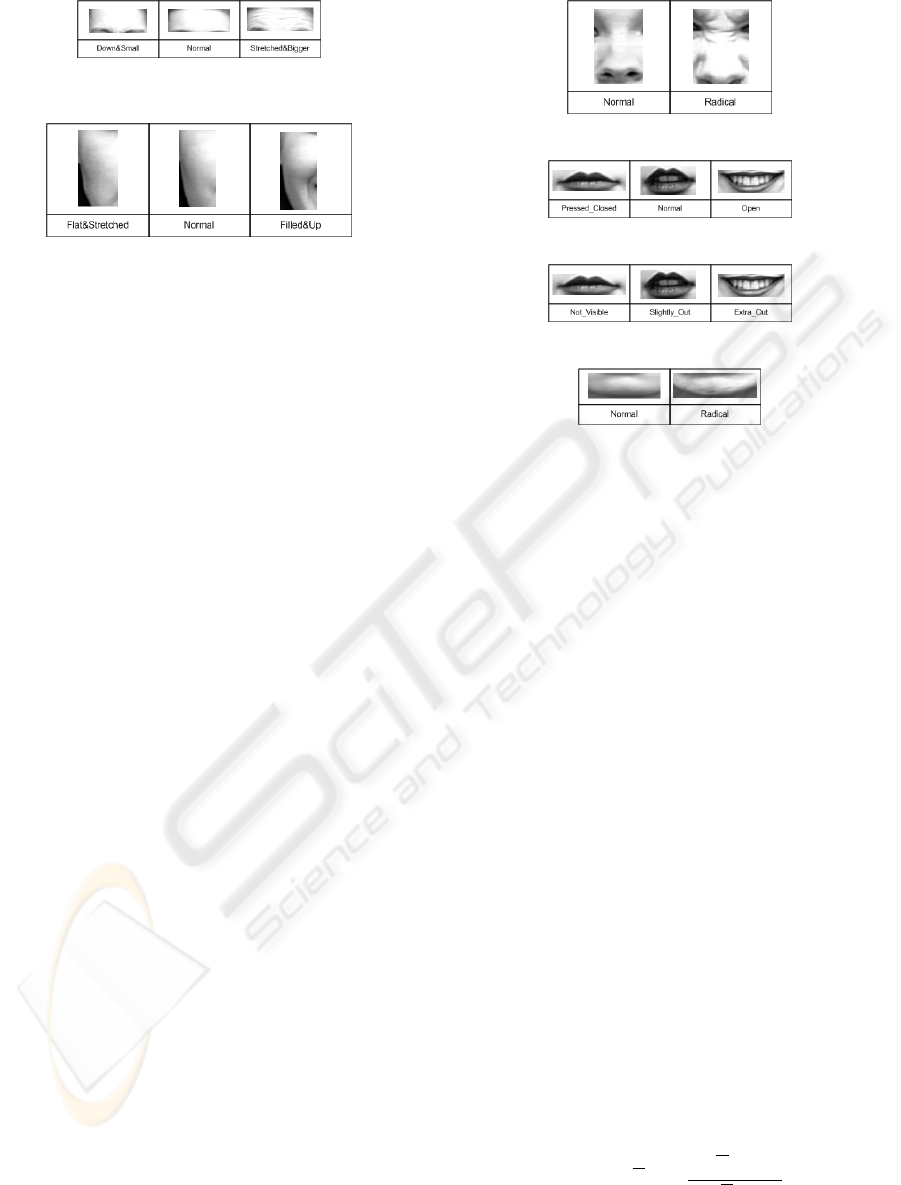

3.1.1 Inputs and Output

The eight basic facial action elements considered for the expression output are eyes, eyebrows, forehead, nose, chin, teeth, cheeks and lips. The states of these facial elements act as inputs to the fuzzy system. These inputs are scaled from 0 to 10 and are mapped to their respective fuzzy sets by input membership functions (MFs). Fig. 4 shows the inputs and the output of the system.

Figure 4: Fuzzy System Architecture.

3.1.2 Input MFs

Inputs with three membership functions (MFs) have one MF at each extreme and one MF in the middle. Inputs with two MFs have one MF at each extreme. Figures 5 to 12 show example MF sets for all facial action elements. The inputs are denoted as follows:

Figure 5: MF Set Examples for Eyes.

$\mu_A(\text{Eyes})$, where A = {Pressed Closed, Normal, Extra Open}

Figure 6: MF Set Examples for Eyebrows.

$\mu_B(\text{Eyebrows})$, where B = {Centered, Normal, Outward Stretched}

$\mu_C(\text{Forehead})$, where C = {Down&Small, Normal, Stretched&Bigger}

$\mu_D(\text{Cheeks})$, where D = {Flat&Stretched, Normal, Filled&Up}

$\mu_E(\text{Nose})$, where E = {Normal, Radical}

$\mu_F(\text{Lips})$, where F = {Pressed Closed, Normal, Open}

$\mu_G(\text{Teeth})$, where G = {Not Visible, Slightly Out, Extra Open}

$\mu_H(\text{Chin})$, where H = {Normal, Radical}
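The exact MF shapes appear only in Figures 5 to 12. Assuming trapezoidal MFs at the two extremes and a triangular MF in the middle of the 0-10 range, fuzzification of the three-MF ‘Eyes’ input could be sketched as follows; a two-MF input such as ‘Nose’ would keep only the two shoulders. The function names and all breakpoints are hypothetical.

```python
def trapmf(x, a, b, c, d):
    """Trapezoid: rises on [a, b], equals 1 on [b, c], falls on [c, d]."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def trimf(x, a, b, c):
    """Triangle with feet a and c and peak b (a trapezoid with b == c)."""
    return trapmf(x, a, b, b, c)


# Hypothetical breakpoints on the 0-10 universe of the 'Eyes' input.
EYES_MFS = {
    "Pressed Closed": lambda x: trapmf(x, 0.0, 0.0, 2.0, 5.0),  # left extreme
    "Normal": lambda x: trimf(x, 2.0, 5.0, 8.0),                # middle
    "Extra Open": lambda x: trapmf(x, 5.0, 8.0, 10.0, 10.0),    # right extreme
}


def fuzzify(x, mfs):
    """Degree of membership of crisp input x in every fuzzy set of one input."""
    return {name: mf(x) for name, mf in mfs.items()}


print(fuzzify(6.5, EYES_MFS))
# {'Pressed Closed': 0.0, 'Normal': 0.5, 'Extra Open': 0.5}
```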


Figure 7: MF Set Examples for Forehead.

Figure 8: MF Set Examples for Cheeks.

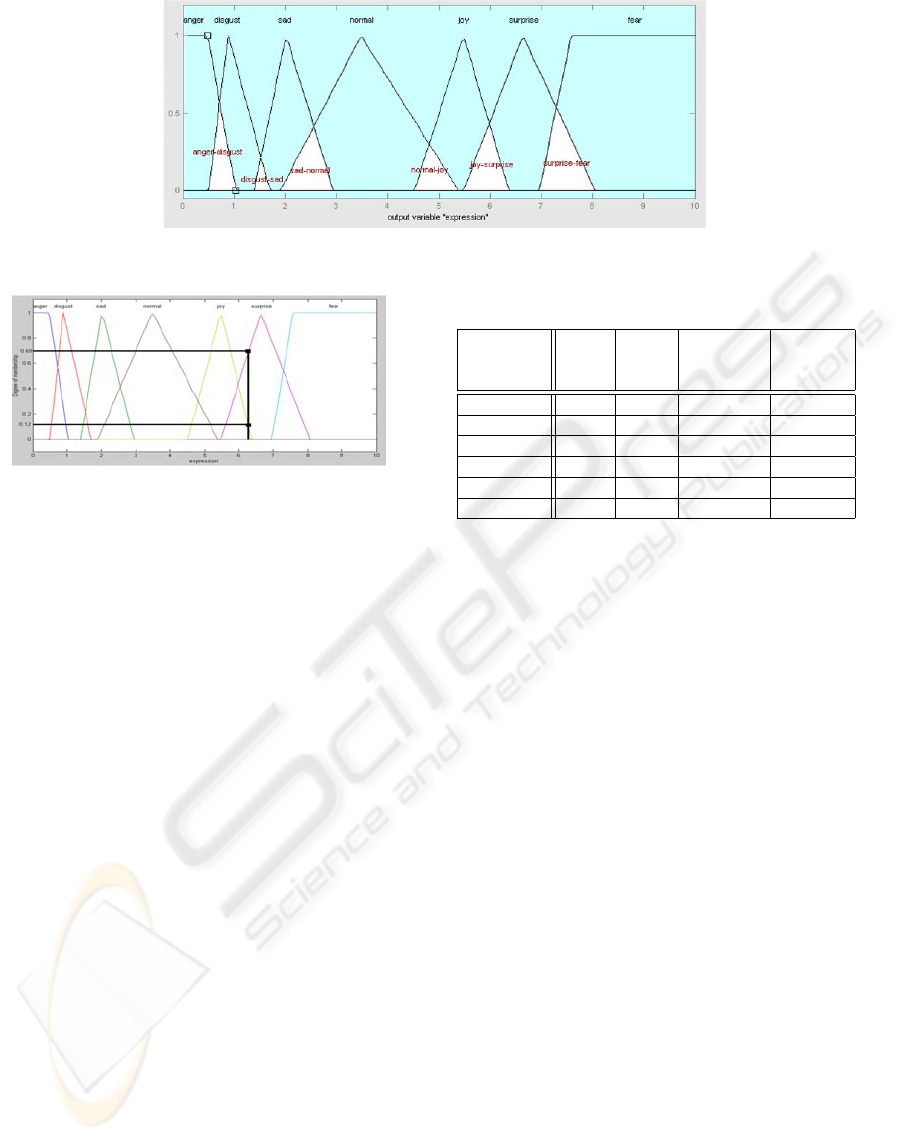

3.1.3 Output MFs

The output ‘expression’ has seven MFs representing the basic facial expressions. The distinctive feature of our system is the design of these ‘expression MFs’, which are scaled from 0 to 10. This grouping of the facial expressions is characteristic of our system and is shown in fig. 13.

Starting from the extreme left of fig. 13, anger and disgust are commonly confused because of their similarity. The survey in (Sherri C. Widen and Brooks, 2004) shows that these two categories overlap, so it makes sense to group them together. The same authors also show that sad is confused with disgust. The percentage of people who confused disgust with sad was smaller than the percentage who confused anger and disgust, so the overlap area for disgust-sad is smaller than that for anger-disgust. In the center, the normal (or neutral) expression bridges sad and joy. Facial features come to resemble surprise as joy becomes extreme (Carlo Drioli and Tesser, 2003). Towards the extreme right, fear takes over from surprise; studies have shown that fear is often mis-recognized as surprise (Diane J. Schiano and Sheridan, 2000). The output ‘expression’ membership functions are given as:

$\mu_O(\text{Expression})$, where O = {Anger, Disgust, Sad, Normal, Joy, Surprise, Fear}
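Figure 13 holds the real output MFs. The triangles below use invented breakpoints that only preserve the left-to-right ordering and the overlap reasoning above (anger-disgust wider than disgust-sad, normal bridging sad and joy). The sketch measures the width of each adjacent overlap; `EXPRESSION_MFS` and `support_overlap` are hypothetical names.

```python
# Hypothetical (a, b, c) triangles on the 0-10 'expression' universe, in the
# left-to-right order described for Fig. 13. Breakpoints are illustrative only.
EXPRESSION_MFS = {
    "Anger": (0.0, 1.0, 2.5),
    "Disgust": (1.5, 2.5, 3.5),
    "Sad": (3.2, 4.0, 5.0),
    "Normal": (4.5, 5.0, 5.5),
    "Joy": (5.0, 5.5, 6.5),
    "Surprise": (5.5, 7.0, 8.5),
    "Fear": (7.5, 9.0, 10.0),
}
ORDER = list(EXPRESSION_MFS)  # dicts preserve insertion order


def support_overlap(p, q):
    """Width of the interval on which both triangles p and q are non-zero."""
    return max(0.0, min(p[2], q[2]) - max(p[0], q[0]))


for left, right in zip(ORDER, ORDER[1:]):
    width = support_overlap(EXPRESSION_MFS[left], EXPRESSION_MFS[right])
    print(f"{left}-{right}: {width:.1f}")
```

With these values the anger-disgust overlap is 1.0 wide and the disgust-sad overlap only 0.3, while sad and joy do not overlap at all, so normal is the only bridge between them.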

3.2 Rule-Base (RB)

The rule base (RB) consists of a collection of fuzzy rules. The fuzzy rules used in our system can be divided into two types.

3.2.1 Major (Categorizing) Rules

Major rules classify the face into one of the six basic facial expressions. They model each basic expression as an AND combination of the states of the facial elements, and thus represent the typical state of that expression. Major rules have a higher weight than minor rules.

Figure 9: MF Set Examples for Nose.

Figure 10: MF Set Examples for Lips.

Figure 11: MF Set Examples for Teeth.

Figure 12: MF Set Examples for Chin.

3.2.2 Minor (Non-Categorizing) Rules

Minor rules give the system flexibility by providing smooth transitions between adjacent basic facial expressions. By adjacent facial expressions we mean pairs of expressions that overlap, such as fear-surprise, anger-disgust and joy-surprise, as shown in figure 13. Minor rules have a lower weight than major rules.
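The paper does not list its rules or their numeric weights. Assuming the usual Mamdani choices (min for AND, the rule weight multiplied into the firing strength, min implication and max aggregation), one major and one minor rule could be evaluated as in the sketch below. The rule contents, the weights 1.0 and 0.5, the output MF breakpoints and all membership degrees are hypothetical.

```python
import numpy as np


def trimf(x, a, b, c):
    """Vectorised triangular MF with feet a, c and peak b (requires a < b < c)."""
    return np.clip(np.minimum((x - a) / (b - a), (c - x) / (c - b)), 0.0, 1.0)


def fire(antecedent_degrees, weight):
    """Firing strength of one rule: AND as min, then scaled by the rule weight."""
    return weight * min(antecedent_degrees)


X = np.linspace(0.0, 10.0, 1001)     # discretised 'expression' axis
JOY = trimf(X, 5.0, 5.5, 6.5)        # hypothetical output MFs
SURPRISE = trimf(X, 5.5, 7.0, 8.5)

# Hypothetical fuzzified inputs for one face.
lips_open = 0.8
eyes_extra_open = 0.9
teeth_slightly_out = 0.4
cheeks_filled_up = 0.7

# Hypothetical major rule (weight 1.0):
#   IF Lips is Open AND Eyes is Extra Open THEN Expression is Surprise
w_major = fire([lips_open, eyes_extra_open], weight=1.0)
# Hypothetical minor rule (weight 0.5):
#   IF Teeth is Slightly Out AND Cheeks is Filled&Up THEN Expression is Joy
w_minor = fire([teeth_slightly_out, cheeks_filled_up], weight=0.5)

# Mamdani implication (clip each consequent at its firing strength)
# followed by aggregation (pointwise max over all rules).
aggregated = np.maximum(np.minimum(w_major, SURPRISE), np.minimum(w_minor, JOY))
print(w_major, w_minor)  # 0.8 0.2
```

Scaling the minor rule by a smaller weight lets it pull the aggregated shape toward the adjacent expression without overriding the major rule, which is the role the text assigns to minor rules.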

3.3 Defuzzification

The centroid method is used for defuzzification. It was chosen because of its compatibility with the rule system employed, and it gave smoother results than other methods. The maximum height method was also tried, but it produced choppy results and nullified the effect of classifying rules as major and minor.

The centroid method calculates the center of area of the combined membership functions (D. H. Rao, 1995). A well-known formula for the center of gravity is given in (Runkler, 1996) as:

$$F^{-1}_{\mathrm{COG}}(A) = \frac{\int_x \mu_A(x)\, x \, dx}{\int_x \mu_A(x)\, dx}$$
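On a discretised output axis with uniform spacing, both integrals in the centre-of-gravity formula can be approximated by Riemann sums, and the common step width cancels. The sketch below assumes such a uniform grid; `centroid` is a hypothetical helper and the clipped triangle is an invented stand-in for an aggregated output.

```python
import numpy as np


def centroid(x, mu):
    """Discrete centre of gravity on a uniform grid.

    With equal spacing dx, the dx in the numerator and denominator of the
    COG formula cancels, leaving sum(mu * x) / sum(mu).
    """
    total = mu.sum()
    if total == 0.0:
        raise ValueError("aggregated membership is zero everywhere; no rule fired")
    return float((mu * x).sum() / total)


x = np.linspace(0.0, 10.0, 1001)
# A hypothetical clipped triangle, symmetric about 7.0, as a stand-in aggregate.
mu = np.minimum(0.8, np.clip(np.minimum((x - 5.5) / 1.5, (8.5 - x) / 1.5), 0.0, 1.0))
print(round(centroid(x, mu), 3))  # 7.0
```

Because the stand-in shape is symmetric, its centroid falls on the axis of symmetry; an asymmetric aggregate (for example one with a weakly fired neighbouring MF) would shift the result toward the neighbouring expression.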

Defuzzification yields a single crisp output, but a single crisp value alone is not suitable for our system. It is commonly observed that more than one basic expression overlap to form the final, complex output expression (Sherri C. Widen and Brooks, 2004). The output of our system may therefore also be an overlap of two expression outputs. Figure 14 illustrates this: it shows the result of our system when the crisp output of centroid defuzzification is 6.3. The output 6.3 corresponds to 14.8% joy and 85.2% surprise.

Figure 13: MFs Plot for ‘expression’ showing Overlap Regions (Muid Mufti, 2006).

Figure 14: Mixed Expression Output.
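The paper does not state how the percentages are derived from the crisp value. One plausible reading, assumed here, is that the crisp output is evaluated against every output MF and the non-zero degrees are normalised to sum to 100%. With the hypothetical triangles below, 6.3 also falls in the joy-surprise overlap with surprise dominating, but the split differs from the reported 14.8%/85.2% because the real MFs of Figure 13 are not reproduced here. `OUTPUT_MFS` and `expression_mix` are hypothetical names.

```python
def trimf(x, a, b, c):
    """Scalar triangular MF with feet a, c and peak b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


# Hypothetical output MFs around the joy-surprise overlap (not the real Fig. 13 values).
OUTPUT_MFS = {
    "Normal": (4.5, 5.0, 5.5),
    "Joy": (5.0, 5.5, 6.5),
    "Surprise": (5.5, 7.0, 8.5),
    "Fear": (7.5, 9.0, 10.0),
}


def expression_mix(crisp):
    """Normalise the non-zero output memberships of a crisp value to percentages."""
    degrees = {name: trimf(crisp, *abc) for name, abc in OUTPUT_MFS.items()}
    total = sum(degrees.values())
    if total == 0.0:
        return {}
    return {name: 100.0 * d / total for name, d in degrees.items() if d > 0.0}


mix = expression_mix(6.3)
print({name: round(p, 1) for name, p in mix.items()})  # {'Joy': 27.3, 'Surprise': 72.7}
```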

4 RESULTS & COMPARISON

The focus of our system is on reducing design complexity and increasing the accuracy of expression recognition. Fuzzy neural networks (FNN) were used in (Kim and Bien, 2003) for ‘personalized’ recognition of facial expressions; their success rate reaches 94.3%, but only after a training phase. In (Lien, 1998), the authors used Hidden Markov Models (HMM) with the Facial Action Coding System (FACS) (Ekman and Friesen, 1978) and achieved success rates from 81% to 92%, depending on the image processing techniques used. In (Ayako Katoh, 1998), the authors used self-organizing maps for this purpose, and HMM-based techniques are also used in (Xiaoxu Zhou, 2004). We have extensively tested our system using grayscale transforms of the FG-NET facial expression image database (Wallhoff, 2006). This database contains images of 18 different individuals, each of whom performed the six basic expressions (plus neutral) three times. We also compared our fuzzy system with a case-based reasoning system (M. Zubair Shafiq, 2006), (Shafiq and Khanum, 2006).

Table 2 compares our system with other systems employing different techniques.

Table 2: Results and Comparison (recognition rates in %; "-" means no value is given).

| Expression | Maps (%) | HMM (%) | Case Based Reasoning (%) | Mamdani Fuzzy System (%) |
|---|---|---|---|---|
| Surprise | 80 | 90 | 80 | 95 |
| Disgust | 65 | - | 80 | 100 |
| Joy | 90 | 100 | 76 | 100 |
| Anger | 43 | 80 | 95 | 70 |
| Fear | 18 | - | 75 | 90 |
| Sad | 18 | - | 95 | 70 |
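As a quick summary of Table 2, the sketch below computes the unweighted mean recognition rate of each system over the expressions it reports. The numbers are copied from the table; the averaging itself is only an illustrative aggregate, not a metric used by the authors, and the systems were not necessarily evaluated on the same images, so the means are not strictly comparable (the HMM mean covers only three expressions).

```python
# Recognition rates (%) copied from Table 2; None marks entries given as "-".
TABLE_2 = {
    "Maps": {"Surprise": 80, "Disgust": 65, "Joy": 90, "Anger": 43, "Fear": 18, "Sad": 18},
    "HMM": {"Surprise": 90, "Disgust": None, "Joy": 100, "Anger": 80, "Fear": None, "Sad": None},
    "Case Based Reasoning": {"Surprise": 80, "Disgust": 80, "Joy": 76, "Anger": 95, "Fear": 75, "Sad": 95},
    "Mamdani Fuzzy System": {"Surprise": 95, "Disgust": 100, "Joy": 100, "Anger": 70, "Fear": 90, "Sad": 70},
}


def mean_rate(rates):
    """Unweighted mean over the reported (non-None) rates, plus their count."""
    values = [v for v in rates.values() if v is not None]
    return sum(values) / len(values), len(values)


for system, rates in TABLE_2.items():
    mean, n = mean_rate(rates)
    print(f"{system}: {mean:.1f}% over {n} expressions")
```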

5 CONCLUSIONS AND FUTURE WORK

Facial expression recognition is key to next-generation human-computer interaction (HCI) systems. We have chosen a more integrated approach than most general applications of FACS: the extracted facial features are used collectively to determine the final facial expression.

Fuzzy classifier systems have been designed for facial expression recognition in the past (Ushida and Yamaguchi, 1993), (Kuncheva, 2000), (Kim and Bien, 2003). Our Mamdani-type fuzzy system shows a clear advantage in design simplicity. Moreover, it gives clear insight into how different rules combine to give the final expression output. Our system successfully demonstrates the use of fuzzy logic for recognition of basic facial expressions. Our future work will focus on further improvement of the fuzzy rules. We are also developing hybrid systems (Assia Khanam and Muhammad, 2007) and Genetic Fuzzy Algorithms (GFAs) for fine tuning of membership functions and rules to improve performance (Francisco Herrera, 1997).

REFERENCES

Assia Khanam, M. Z. S. and Muhammad, E. (2007). CBR: Fuzzified case retrieval approach for facial expression recognition. 25th IASTED International Conference on Artificial Intelligence and Applications, Innsbruck, Austria, February 2007, pp. 162-167.

Ayako Katoh, Y. F. (1998). Classification of facial expressions using self-organizing maps. 20th International Conference of the IEEE Engineering in Medicine and Biology Society, Vol. 20, No. 2.

Carlo Drioli, Graziano Tisato, P. C. and Tesser, F. (2003). Emotions and voice quality: Experiments with sinusoidal modeling. VOQUAL’03, Geneva, August 27-29.

D. H. Rao, S. S. S. (1995). Study of defuzzification methods of fuzzy logic controller for speed control of a DC motor. IEEE Transactions, 1995, pp. 782-787.

Dennis Galletta, P. Z. Human-computer interaction and management information systems: Applications. Advances in Management Information Systems Series Editor.

Diane J. Schiano, Sheryl M. Ehrlich, K. R. and Sheridan, K. (2000). Face to interface: Facial affect in (hu)man and machine. ACM CHI 2000 Conference on Human Factors in Computing Systems, pp. 193-200.

Ekman, P. (1993). Facial expression and emotion. American Psychologist, Vol. 48, pp. 384-392.

Ekman, P. (1994). Strong evidence for universals in facial expressions: A reply to Russell’s mistaken critique. Psychological Bulletin, pp. 268-287.

Ekman, P. and Friesen, W. (1978). Facial action coding system: Investigator’s guide. Consulting Psychologists Press.

Francisco Herrera, L. M. (1997). Genetic fuzzy systems: A tutorial. Tatra Mt. Math. Publ. (Slovakia).

Kim, D.-J. and Bien, Z. (2003). Fuzzy neural networks (FNN)-based approach for personalized facial expression recognition with novel feature selection method. The IEEE Conference on Fuzzy Systems.

Klir, G. and Yuan, B. (1995). Fuzzy sets and fuzzy logic: Theory and applications. Prentice-Hall.

Koo, T. K. J. (1996). Construction of fuzzy linguistic model. Proceedings of the 35th Conference on Decision and Control, Kobe, Japan, 1996, pp. 98-103.

Kuncheva, L. I. (2000). Fuzzy classifier design. pp. 112-113.

Lien, J.-J. J. (1998). Automatic recognition of facial expressions using hidden Markov models and estimation of expression intensity. PhD Dissertation, The Robotics Institute, Carnegie Mellon University, Pittsburgh, Pennsylvania.

M. Zubair Shafiq, A. K. (2006). Facial expression recognition system using case based reasoning system. IEEE International Conference on Advancement of Space Technologies, pp. 147-151.

Muid Mufti, A. K. (2006). Fuzzy rule-based facial expression recognition. CIMCA-2006, Sydney.

Ralescu, A. and Hartani, R. (1995). Some issues in fuzzy and linguistic modeling. IEEE Proc. of International Conference on Fuzzy Systems.

Runkler, T. A. (1996). Extended defuzzification methods and their properties. IEEE Transactions, 1996, pp. 694-700.

Shafiq, M. Z. (2006). Towards more generic modeling for facial expression recognition: A novel region extraction algorithm. International Conference on Graphics, Multimedia and Imaging, GRAMI-2006, UET Taxila, Pakistan.

Shafiq, M. Z. and Khanum, A. (2006). A ‘personalized’ facial expression recognition system using case based reasoning. 2nd IEEE International Conference on Emerging Technologies, Peshawar, pp. 630-635.

Sherri C. Widen, J. A. R. and Brooks, A. (May 2004). Anger and disgust: Discrete or overlapping categories. APS Annual Convention, Boston College, Chicago, IL.

Ushida, H., T. T. and Yamaguchi, T. (1993). Recognition of facial expressions using conceptual fuzzy sets. Proc. of the 2nd IEEE International Conference on Fuzzy Systems, pp. 594-599.

Wallhoff, F. (2006). Facial expressions and emotions database. Technische Universität München, 2006. http://www.mmk.ei.tum.de/~waf/fgnet/feedtum.html.

Xiaoxu Zhou, Xiangsheng Huang, Y. W. (2004). Real-time facial expression recognition in the interactive game based on embedded hidden Markov model. International Conference on Computer Graphics, Imaging and Visualization (CGIV’04).
