A SUBJECTIVE SURFACES BASED SEGMENTATION FOR THE RECONSTRUCTION OF BIOLOGICAL CELL SHAPE

Matteo Campana, Cecilia Zanella, Barbara Rizzi

Electronics, Computer Science and Systems Department (DEIS), Bologna University, Bologna, Italy

Paul Bourgine

CREA-Ecole Polytechnique, Paris, France

Nadine Peyriéras

CNRS-DEPSN, Gif sur Yvette, France

Alessandro Sarti

Electronics, Computer Science and Systems Department (DEIS), Bologna University, Bologna, Italy

Keywords:

Image Segmentation, Surface Geometry and Shape Extraction, Biological Image Analysis.

Abstract:

Confocal laser scanning microscopy provides nondestructive in vivo imaging to capture specific structures that have been fluorescently labeled, such as cell nuclei and membranes, throughout early Zebrafish embryogenesis. With this strategy we aim to reconstruct in time and space the biological structures of the embryo during organogenesis. In this paper we propose a method to extract bounding surfaces at the cellular-organization level from microscopy images. The shape reconstruction of membranes and nuclei is obtained by first automatically identifying the cell centers and then applying a subjective surfaces based segmentation to extract the bounding surfaces.

1 INTRODUCTION

In vivo imaging of embryos opens the way to a better understanding of complex biological processes at the cellular level. The challenge is to reconstruct at high resolution the structure and dynamics of cells throughout organogenesis and to understand the processes that regulate their behavior. With these studies we expect to be able to measure relevant parameters, such as the proliferation rate, which is highly relevant for investigating the effects of anti-cancer drugs in vivo.

Achieving this goal requires the development of an automated method for extracting the surfaces that represent the cell shapes. We aim to avoid any manual intervention, such as manual cell identification, which would be extremely time-consuming given the huge number of objects contained in every image.

In previous work (Sarti et al., 2000a), Sarti et al. carried out a similar study in which confocal microscopy images were processed to extract the shape of nuclei. However, in that case the analysed volumes were not acquired from a living organism but from pieces of fixed tissue. The next sections introduce the Subjective Surfaces based segmentation algorithm designed for the living-organism scenario.

In Section 2 we illustrate the strategies adopted for image acquisition, filtering and automated cell identification. In Section 3 we describe the segmentation algorithm designed for nucleus and membrane shape reconstruction. Section 4 briefly explains the technologies used for implementing and testing the algorithm. In the last section we show some results.

2 IMAGE ACQUISITION AND PREPROCESSING

High-resolution time-lapse imaging of living organisms is best achieved by multiphoton laser scanning microscopy (MLSM) (Gratton et al., 2001). It allows imaging of the Zebrafish embryo (Megason and Fraser, 2003), engineered with two distinct fluorescent proteins to highlight all nuclei and membranes during early embryonic stages.

Campana M., Zanella C., Rizzi B., Bourgine P., Peyriéras N. and Sarti A. (2008).

A SUBJECTIVE SURFACES BASED SEGMENTATION FOR THE RECONSTRUCTION OF BIOLOGICAL CELL SHAPE.

In Proceedings of the Third International Conference on Computer Vision Theory and Applications, pages 555-558

DOI: 10.5220/0001086005550558

Copyright © SciTePress

Optical sections of the organism are obtained by detecting the fluorescent radiation emitted from the laser-excited focal plane. The 3-D spatial sampling of the embryo is achieved by changing the depth of the focal plane. Every image is defined on a grid of 512 × 512 pixels in the X and Y directions. The spatial resolution is 0.584793 µm in X and Y and 1.04892 µm in Z. The acquisition of 3-D images was repeated throughout early embryonic development, from 3.5 hours post fertilization for 4 hours (at 28 °C) (Kimmel et al., 1995).

The automated or semi-automated reconstruction of the cell contours can be achieved through a procedure consisting of three sequential tasks: filtering, cell detection and segmentation. The first (denoising) task should increase the signal-to-noise ratio without corrupting the boundaries of the cells to be reconstructed. Geodesic curvature filtering (Sarti et al., 2000a), (Rizzi et al., 2007) was used for this task.

A common feature that can be used to identify the cells in the images is the spherical, or more precisely ellipsoidal, shape of the nuclei. Cell recognition was achieved by implementing an algorithm (Melani et al., 2007) based on the generalized 3-D Hough Transform (Ballard, 1981), which detects the centers of the nuclei. Each center was then used separately as the initial condition for the segmentation of the corresponding nucleus and membrane.
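To make the center-detection idea concrete, the sketch below implements a simplified, gradient-directed Hough vote for spheres of a single known radius. It is not the generalized Hough algorithm of Melani et al. (2007); it assumes bright nuclei on a dark background and a fixed radius in voxels, and the function names and the `sigma` and `grad_frac` parameters are hypothetical.

```python
import numpy as np
from scipy import ndimage


def hough_sphere_accumulator(image, radius, sigma=1.0, grad_frac=0.2):
    """Vote for centers of bright spheres of a fixed radius (in voxels).

    Each strong-edge voxel casts one vote at distance `radius` along its
    normalized intensity gradient, which points inward for a bright
    object on a dark background.
    """
    smoothed = ndimage.gaussian_filter(image.astype(float), sigma)
    grads = np.gradient(smoothed)                # one derivative array per axis
    mag = np.sqrt(sum(g * g for g in grads))
    acc = np.zeros(image.shape, dtype=float)
    if mag.max() == 0:
        return acc                               # flat image: nothing to vote for
    idx = np.nonzero(mag > grad_frac * mag.max())  # keep strong edges only
    points = np.stack(idx, axis=1).astype(float)
    normals = np.stack([g[idx] for g in grads], axis=1) / mag[idx][:, None]
    votes = np.rint(points + radius * normals).astype(int)
    inside = np.all((votes >= 0) & (votes < np.array(image.shape)), axis=1)
    np.add.at(acc, tuple(votes[inside].T), 1.0)  # unbuffered accumulation
    return acc


def strongest_center(acc):
    """Index of the accumulator maximum (single-nucleus case)."""
    return np.unravel_index(np.argmax(acc), acc.shape)


# Synthetic volume: one bright ball of radius 8 centered at (20, 20, 20).
zz, yy, xx = np.mgrid[0:40, 0:40, 0:40]
ball = ((zz - 20) ** 2 + (yy - 20) ** 2 + (xx - 20) ** 2 <= 8 ** 2).astype(float)
center = strongest_center(hough_sphere_accumulator(ball, radius=8))
print(center)
```

With many nuclei in one volume, one would instead look for several local maxima of the accumulator (for example with `scipy.ndimage.maximum_filter`) and, for ellipsoidal nuclei, accumulate votes over a range of radii.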

3 IMAGE SEGMENTATION

We implemented a Subjective Surfaces technique that extracts the membrane and nucleus boundaries separately. This method (Sarti et al., 2000b), (Sarti et al., 2002) is particularly useful for the segmentation of incomplete contours, because it allows the reconstruction and integration of missing information. The analysed images, especially the membrane images, are characterized by a signal that is almost undetectable or even absent in some regions. In such situations, the Subjective Surfaces technique should allow the completion of the missing portions of objects.

3.1 Subjective Surfaces Technique

In order to explain the algorithm, let us consider the 3-D image to be processed, $I : (x,y,z) \mapsto I(x,y,z)$, as a real positive function defined in some domain $M \subset \mathbb{R}^3$. As a first step, an initial hypersurface $S_i$ ($S_i : (x,y,z) \mapsto (x,y,z,\Phi_0)$) is defined in the same domain $M$ as the image $I$, starting from an initial function $\Phi_0$. The algorithm is applied to every single object contained in the images: for every cell, a different function $\Phi_0$ is defined starting from the center of the object detected by the Hough Transform based algorithm. There are some alternative forms for $\Phi_0$, for example $\Phi_0 = -\alpha D$ or $\Phi_0 = \alpha / D$, where $D$ is the 3-D distance function from the reference point.
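Both forms of $\Phi_0$ can be built from a seed voxel in a few lines. In this minimal sketch the helper name and its parameters are hypothetical; $D$ is measured in physical units using the voxel size from Section 2, the (Z, Y, X) axis order is an assumption, and the clamping of $D$ near the seed (needed because $\alpha/D$ is singular there) is a choice of this sketch, not of the paper.

```python
import numpy as np


def initial_function(shape, center, spacing=(1.0, 1.0, 1.0), alpha=1.0, form="linear"):
    """Build Phi_0 from a seed voxel as -alpha*D ("linear") or alpha/D ("inverse").

    D is the Euclidean distance, in the physical units given by `spacing`,
    from the seed voxel `center` (e.g. a Hough-detected nucleus center).
    """
    axes = [np.arange(n) * s for n, s in zip(shape, spacing)]
    grids = np.meshgrid(*axes, indexing="ij")
    seed = [c * s for c, s in zip(center, spacing)]
    dist = np.sqrt(sum((g - c) ** 2 for g, c in zip(grids, seed)))
    if form == "linear":
        return -alpha * dist
    if form == "inverse":
        # alpha / D is singular at the seed: clamp D to half the smallest voxel size
        return alpha / np.maximum(dist, 0.5 * min(spacing))
    raise ValueError(f"unknown form: {form!r}")


# Voxel size from Section 2, assuming arrays are ordered (Z, Y, X).
spacing_zyx = (1.04892, 0.584793, 0.584793)
phi0 = initial_function((30, 64, 64), center=(15, 32, 32), spacing=spacing_zyx)
```

Either form peaks at the detected center and decreases monotonically away from it, so the later evolution only has to lift or flatten the region inside the object's boundary.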

The Subjective Surfaces technique detects the object shape by evolving the initial function $\Phi_0$ through a flow that depends on the characteristics of the surface $S_i$ and on the local characteristics of the image. As in the well-known Geodesic Active Contours technique (GAC) (Caselles et al., 1997), the motion equation that drives the hypersurface evolution is:

$$\Phi_t = g\,H\,|\nabla\Phi| + \nabla g \cdot \nabla\Phi, \qquad H = \nabla \cdot \frac{\nabla\Phi}{|\nabla\Phi|} \qquad (1)$$

As in GAC, $H$ is the mean curvature, except that a parameter $\varepsilon$ is introduced in the expression of $H$ to weigh the matching of level curves. The edge indicator $g$ is a representation of the structures contained in the image, and its negative gradient $-\nabla g$ is a force field that points toward the edges.

The entire hypersurface is driven by a speed law that depends on the image gradient, whereas in the classical formulation of Level Set methods, as in GAC, the evolution affects only a particular front or level. The first term on the right-hand side of (1) is a parabolic motion that evolves the hypersurface in the normal direction with a velocity weighted by the mean curvature and by the edge indicator $g$, slowing down near the edges (where $g \to 0$). The second term on the right is a pure passive advection along the velocity field $-\nabla g$, whose direction and strength depend on position; this term attracts the hypersurface toward the image edges. In regions with subjective contours (continuations of existing edge fragments), $\varepsilon$ is negligible and (1) can be approximated by a geodesic flow, allowing boundary completion with curves of minimal length (i.e. straight lines).
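As a rough illustration of how Eq. (1) is integrated in time, the sketch below performs one explicit Euler step on a 2-D or 3-D array. The paper does not give the formula through which $\varepsilon$ enters $H$; this sketch assumes the regularization $W = \sqrt{\varepsilon^2 + |\nabla\Phi|^2}$ in place of $|\nabla\Phi|$, so that the first term becomes $g\,W\,\nabla\cdot(\nabla\Phi/W) = g\,(W^2\Delta\Phi - \nabla\Phi^{\top}\nabla^2\Phi\,\nabla\Phi)/W^2$. The function name and parameters are hypothetical, and central differences (`np.gradient`) are used throughout for brevity.

```python
import numpy as np


def subjective_surfaces_step(phi, g, eps, dt, spacing=None):
    """One explicit Euler step of Phi_t = g*W*div(grad Phi / W) + grad g . grad Phi,
    with W = sqrt(eps**2 + |grad Phi|**2) (assumed form of the eps regularization).
    """
    if phi.ndim < 2:
        raise ValueError("expects a 2-D or 3-D array")
    spacing = tuple(spacing) if spacing is not None else (1.0,) * phi.ndim
    d_phi = np.gradient(phi, *spacing)           # first derivatives, one per axis
    d_g = np.gradient(g, *spacing)
    w2 = eps ** 2 + sum(d * d for d in d_phi)    # W^2
    laplacian = np.zeros_like(phi, dtype=float)
    quad = np.zeros_like(phi, dtype=float)       # grad(Phi)^T Hess(Phi) grad(Phi)
    for i, d_i in enumerate(d_phi):
        d2 = np.gradient(d_i, *spacing)          # d2[j] = d/dx_j (d Phi / dx_i)
        laplacian += d2[i]
        for j, d_j in enumerate(d_phi):
            quad += d_i * d_j * d2[j]
    curvature_term = (w2 * laplacian - quad) / w2  # = W * div(grad Phi / W)
    advection_term = sum(a * b for a, b in zip(d_g, d_phi))  # grad g . grad Phi
    return phi + dt * (g * curvature_term + advection_term)


# Usage: with g = 1 (no edges) only the regularizing curvature term acts,
# so a smooth bump is flattened a little at each step.
bump = np.exp(-((np.mgrid[0:21, 0:21] - 10.0) ** 2).sum(axis=0) / 18.0)
out = subjective_surfaces_step(bump, np.ones_like(bump), eps=1.0, dt=0.1)
```

An explicit scheme like this needs a small `dt`; for long runs an upwind discretization of the advection term and a CFL-limited time step would be the safer choice.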

A classical expression of the edge indicator $g$ (Perona and Malik, 1990) is:

$$g(x,y,z) = \frac{1}{1 + \left(|\nabla G_\sigma(x,y,z) * I(x,y,z)| / \beta\right)^n} \qquad (2)$$

where $G_\sigma(x,y,z)$ is a Gaussian kernel with standard deviation $\sigma$, $*$ denotes convolution, $\beta$ is a scaling parameter ($g = 1/2$ where the smoothed gradient magnitude equals $\beta$), and $n$ is 1 or 2. The value of $g$ is close to 1 in flat areas ($|\nabla I| \to 0$) and close to 0 where the image gradient is high (edges). Thus, the minima of $g$ denote the position of the edges, and $-\nabla g$ is a force field that can be used to drive the evolution, because it always points toward the local edge.

VISAPP 2008 - International Conference on Computer Vision Theory and Applications
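Eq. (2) maps directly onto SciPy, whose `scipy.ndimage.gaussian_gradient_magnitude` returns $|\nabla(G_\sigma * I)|$. This is a minimal sketch assuming a floating-point NumPy volume; the function name is hypothetical and the `sigma`, `beta` and `n` defaults are placeholders, not values from the paper.

```python
import numpy as np
from scipy import ndimage


def edge_indicator_gradient(image, sigma=1.0, beta=1.0, n=2):
    """Eq. (2): g = 1 / (1 + (|grad(G_sigma * I)| / beta)**n)."""
    # gaussian_gradient_magnitude smooths with a Gaussian of std `sigma`
    # and returns the magnitude of the gradient of the smoothed image.
    grad_mag = ndimage.gaussian_gradient_magnitude(np.asarray(image, dtype=float), sigma)
    return 1.0 / (1.0 + (grad_mag / beta) ** n)


# A 2-D step edge between columns 9 and 10 stands in for a nucleus boundary.
step = np.zeros((20, 20))
step[:, 10:] = 10.0
g_step = edge_indicator_gradient(step, sigma=1.0, beta=1.0, n=2)
```

Away from the step `g_step` stays at 1, while it drops well below 1 on the two columns that straddle the edge, which is where the evolving surface will settle.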

Nuclei and membrane images behave in completely different ways in terms of edge detection: the membrane signal is about 3 or 4 voxels thick, whereas nuclei are solid objects. These specific features require different functions for detecting the edge positions in nuclei and membrane images. In nuclei images, the contours to be segmented are located in the regions where the image gradient is higher, and the minima of (2) denote the position of the edges (Fig. 1(a)). By contrast, function (2) cannot be applied to membrane images because it reveals a double contour, on the internal and the external side of the membrane signal. An alternative edge indicator has therefore been defined using the image itself (not its gradient) as the contour detector. The intensity information can be used to locate the edges because the membrane images contain high-intensity regions, where the labeled membrane structure has been acquired, against low-intensity background regions. The edge indicator we used is:

$$g(x,y,z) = \frac{1}{1 + \left(|G_\sigma(x,y,z) * I(x,y,z)| / \beta\right)^2} \qquad (3)$$

As expected, its minima locate the contours in the middle of the membrane thickness (Fig. 1(b)).
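The membrane indicator of Eq. (3) differs from Eq. (2) only in smoothing the intensity instead of taking its gradient. A minimal sketch, with a hypothetical function name and placeholder `sigma` and `beta` defaults, using `scipy.ndimage.gaussian_filter`:

```python
import numpy as np
from scipy import ndimage


def edge_indicator_intensity(image, sigma=1.0, beta=1.0):
    """Eq. (3): g = 1 / (1 + (|G_sigma * I| / beta)**2), using intensity, not gradient."""
    smoothed = ndimage.gaussian_filter(np.asarray(image, dtype=float), sigma)
    return 1.0 / (1.0 + (np.abs(smoothed) / beta) ** 2)


# A thin bright line (column 10) mimics a labeled membrane cut across.
membrane = np.zeros((21, 21))
membrane[:, 10] = 10.0
g_mem = edge_indicator_intensity(membrane, sigma=1.0, beta=1.0)
```

Here the single minimum of `g_mem` in each row sits on the line itself, whereas the gradient-based indicator of Eq. (2) applied to the same image would have two minima flanking the line, i.e. the double contour described above.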

4 IMPLEMENTATION AND VISUAL INSPECTION

The segmentation algorithm has been implemented and tested using a framework designed to visualize and process 3-D time-lapse images (Campana et al., 2007).

The segmented surfaces are extracted as the iso-

surfaces of the functions Φ obtained after the seg-

mentation. These surfaces are represented and stored

through a VTK PolyData format (Schroeder et al.,

1998). The segmented surfaces have been superim-

posed to the original non-processed image (raw data)

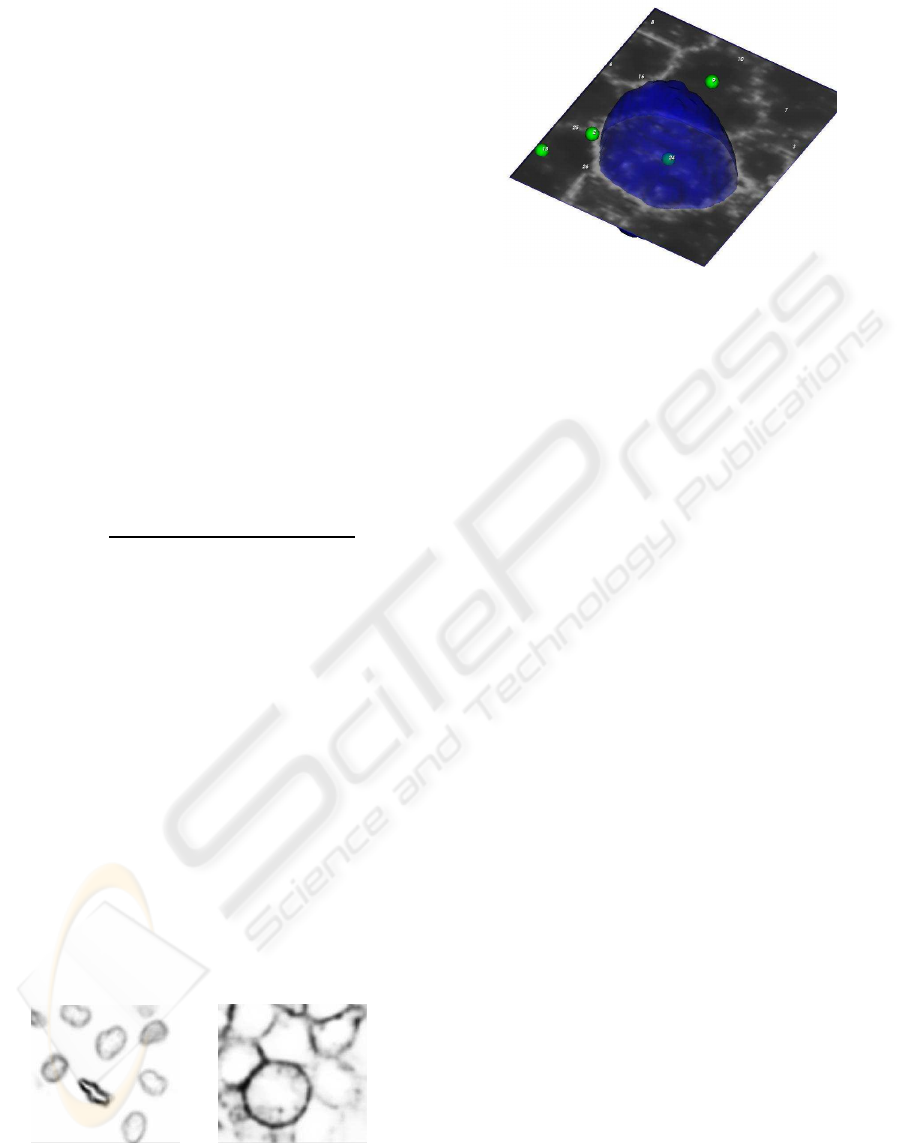

(a) Nuclei channel. (b) Membranes

channel.

Figure 1: Images of the edge indicators: membranes (a) and

nuclei (b).

Figure 2: In figure the membrane image is analyzed by a

cutting plane, the center of cell number 24 is represented by

the green sphere, and its membrane boundary is displayed

as a blue surface.

and to detected nuclei in order to easily validate the

reconstruction process (Fig. 2). The position of every

nucleus detected inside each volume has been repre-

sented by a small green sphere labeled by the number

that identifies the cell.

Visual inspection of preliminary membrane segmentations revealed that the boundaries were not extracted accurately. This problem occurs because the membrane images are corrupted by a weak nuclear signal, which is more intense during mitosis. To solve this problem, membrane segmentation requires an additional preprocessing step that removes this interfering signal. First, the nuclear signal is separated from the background simply by thresholding the nuclei images. The signal obtained by this thresholding is then used to remove the interfering signal from the membrane images.
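The membrane-channel cleanup can be sketched as follows. The paper states only that the thresholded nuclei image is used to remove the interfering signal; zeroing the membrane voxels under the nuclear mask is an assumption, as are the helper name and its `threshold` and `fill_value` parameters.

```python
import numpy as np


def suppress_nuclear_signal(membranes, nuclei, threshold, fill_value=0.0):
    """Blank membrane voxels where the thresholded nuclei channel is on.

    Hypothetical helper: how the thresholded nuclear signal is applied is
    not specified in the paper, so replacing those voxels is an assumption.
    """
    nuclear_mask = np.asarray(nuclei) > threshold   # nuclear signal vs background
    cleaned = np.array(membranes, dtype=float)      # copy; the input is left untouched
    cleaned[nuclear_mask] = fill_value
    return cleaned, nuclear_mask
```

Returning the mask alongside the cleaned image makes it easy to inspect, for example during mitosis, which voxels were suppressed.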

After segmentation, and because of a linear rescaling of its values to the range 0 to 255, the function $\Phi$ typically has a bimodal histogram. The higher peak (near 255) corresponds to the segmented object and the lower one to the background. The segmented surfaces can therefore be extracted as the isosurfaces at the intermediate value 128.
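The rescaling and the choice of level 128 can be written as below; the helper names are hypothetical. The isosurface itself would then be computed with a marching-cubes implementation, such as VTK's `vtkContourFilter` (which outputs `vtkPolyData`) or `skimage.measure.marching_cubes(volume, level=128.0)`; the sketch only shows which voxels fall on the object side of that level.

```python
import numpy as np


def rescale_to_8bit_range(phi):
    """Linearly map Phi onto [0, 255], as described for the segmented function."""
    lo, hi = float(phi.min()), float(phi.max())
    if hi == lo:
        return np.zeros_like(phi, dtype=float)  # constant Phi: nothing to segment
    return (phi - lo) * (255.0 / (hi - lo))


def segmented_mask(phi, level=128.0):
    """Voxels on the object side of the isosurface at `level` (default 128)."""
    return rescale_to_8bit_range(phi) >= level
```

Because the histogram is bimodal, the exact level matters little as long as it lies between the two peaks; 128 is simply the midpoint of the rescaled range.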

5 RESULTS

As expected, the algorithm is able to complete the missing boundaries. Fig. 3 shows the image and the segmented surfaces of dividing membranes during telophase. As can be observed from the resulting surfaces, the portion of the image with missing membrane boundaries, highlighted by a red circle, is well completed and extracted.


(a) (b) (c)

Figure 3: Membrane segmentation of a dividing cell. (a) Membrane signal. (b) Slice of the segmented surfaces. (c) Segmented surfaces.

(a) (b) (c)

Figure 4: Segmentation of a cell throughout mitosis. Membrane and nucleus shapes are represented by green and red surfaces, respectively.

Visual inspection of the different segmented surfaces reveals some problems in the reconstruction of objects with flat shapes, such as epithelial cells and the nuclei of dividing cells. Fig. 4 shows the shape of the nucleus and membrane when the cell is close to division. Before dividing, cells become spherical, whereas the nuclear staining elongates as the chromosomes arrange in the future cell division plane. Note that the nucleus size is slightly underestimated in the last two panels. This is due to the parabolic regularization term in the motion equation (1), which prevents the segmented surface from reaching the contour where it is concave and highly curved. Apart from these particular shapes, the nucleus and membrane surfaces appear well reconstructed when compared with the acquired images. Figs. 4 and 5 show some surfaces obtained with the algorithm.

We thank all the members of the Embryomics and

BioEmergences projects for our very fruitful interdis-

ciplinary interaction.

REFERENCES

Ballard, D. (1981). Generalizing the Hough transform to detect arbitrary shapes. Pattern Recognition, 13:111–122.

Campana, M., Rizzi, B., Melani, C., Bourgine, P., Peyriéras, N., and Sarti, A. (2007). A framework for 4-D biomedical image processing visualization and analysis. In GRAPP 08 - International Conference on Computer Graphics Theory and Applications.

(a) Membranes. (b) Nuclei.

Figure 5: Segmentation of an entire subvolume.

Caselles, V., Kimmel, R., and Sapiro, G. (1997). Geodesic

active contours. International Journal of Computer

Vision, 22:61–79.

Gratton, E., Barry, N. P., Beretta, S., and Celli, A.

(2001). Multiphoton fluorescence microscopy. Meth-

ods, 25(1):103–110.

Kimmel, C. B., Ballard, W. W., Kimmel, S. R., Ullmann,

B., and Schilling, T. F. (1995). Stages of embryonic

development of the zebrafish. Dev. Dyn., 203:253–

310.

Megason, S. and Fraser, S. (2003). Digitizing life at the

level of the cell: high-performance laser-scanning mi-

croscopy and image analysis for in toto imaging of

development. Mech. Dev., 120:1407–1420.

Melani, C., Campana, M., Lombardot, B., Rizzi, B.,

Veronesi, F., Zanella, C., Bourgine, P., Mikula, K.,

Peyriéras, N., and Sarti, A. (2007). Cells tracking in

a live zebrafish embryo. In Proceedings 29th Annual

International Conference of the IEEE EMBS, pages

1631–1634.

Perona, P. and Malik, J. (1990). Scale-space and edge detec-

tion using anisotropic diffusion. IEEE Trans. Pattern

Anal. Mach. Intell., 12:629–639.

Rizzi, B., Campana, M., Zanella, C., Melani, C., Cunderlik,

R., Krivá, Z., Bourgine, P., Mikula, K., Peyriéras, N.,

and Sarti, A. (2007). 3d zebra fish embryo images

filtering by nonlinear partial differential equations. In

Proceedings 29th Annual International Conference of

the IEEE EMBS.

Sarti, A., de Solorzano, C. O., Lockett, S., and Malladi, R.

(2000a). A geometric model for 3-d confocal image

analysis. IEEE Transactions on Biomedical Engineer-

ing, 47(12):1600–1609.

Sarti, A., Malladi, R., and Sethian, J. A. (2000b). Subjective

surfaces: A method for completing missing bound-

aries. In Proceedings of the National Academy of Sci-

ences of the United States of America, pages 6258–

6263.

Sarti, A., Malladi, R., and Sethian, J. A. (2002). Subjec-

tive surfaces: A geometric model for boundary com-

pletion. International Journal of Computer Vision,

46(3):201–221.

Schroeder, W., Martin, K., and Lorensen, B. (1998). The Vi-

sualization Toolkit. Prentice Hall, Upper Saddle River

NJ, 2nd edition.
