The Computation of Semantically Related Words:

Thesaurus Generation for English, German, and Russian

Reinhard Rapp

University of Tarragona, Spain

Abstract. A method for the automatic extraction of semantically similar words

is presented which is based on the analysis of word distribution in large mono-

lingual text corpora. It involves compiling matrices of word co-occurrences and

reducing the dimensionality of the semantic space by conducting a singular

value decomposition. This way problems of data sparseness are reduced and a

generalization effect is achieved which considerably improves the results. The

method is largely language independent and has been applied to corpora of

English, German, and Russian, with the resulting thesauri being freely avail-

able. For the English thesaurus, an evaluation has been conducted by comparing

it to experimental results as obtained from test persons who were asked to give

judgements of word similarities. According to this evaluation, the machine gen-

erated results come close to native speaker’s performance.

1 Introduction

In his paper “Distributional Structure” Zelig S. Harris [3] hypothesized that words

that occur in the same contexts tend to have similar meanings. This finding is often

referred to as distributional hypothesis. It was put into practice by Ruge [12] who

showed that the semantic similarity of two words can be computed by looking at the

agreement of their lexical neighborhoods. For example, the semantic similarity of the

words street and road can be derived from the fact that they both frequently co-occur

with words like car, drive, map, traffic, etc. If on the basis of a large corpus a matrix

of such co-occurrences is compiled, then the semantic similarities between words can

be determined by comparing the vectors in the matrix. This can be done using any of

the standard vector similarity measures such as the cosine coefficient.

Since Ruge’s pioneering work, many researchers, e.g. Schütze [14], Rapp [9], and

Turney [16] used this type of distributional analysis as a basis to determine

semantically related words. An important characteristic of some algorithms (e.g. [12,

2, 7]) is that they parse the corpus and only consider co-occurrences of word pairs that

are in a certain relation to each other, e.g. a head-modifier, verb-object, or subject-

object relation. Others do not parse but perform a singular value decomposition

(SVD) on the co-occurrence matrix, which also could be shown to improve results [4,

5]. As an alternative to the SVD, Sahlgren [13] uses random indexing which is

computationally less demanding.

In the current paper we use an improved variant of the Landauer & Dumais algo-

rithm as described in Rapp [10, 11]. It does not perform a syntactic analysis but

Rapp R. (2007).

The Computation of Semantically Related Words: Thesaurus Generation for English, German, and Russian.

In Proceedings of the 4th International Workshop on Natural Language Processing and Cognitive Science, pages 71-80

DOI: 10.5220/0002414500710080

Copyright

c

SciTePress

reduces the dimensionality of the semantic space by performing an SVD. The pro-

gram is applied to corpora of three languages, namely English, German, and Russian,

and large thesauri of related words are generated for each of these languages.

2 Corpora

Since our algorithm is based on a similarity measure relying on co-occurrence data,

corpora are required from which the co-occurrence counts can be derived. If – as in

this case – a measure for the success of the system is the results’ plausibility to human

judgment, it is advisable to use corpora that are as typical as possible for the language

environment of native speakers.

For English, we chose the British National Corpus (BNC), a 100-million-word

corpus of written and spoken language that was compiled with the intention of pro-

viding a balanced sample of British English [1]. As for the German corpus, due to

lack of a balanced corpus, we used 135 million words of the newspaper Frankfurter

Allgemeine Zeitung (years 1993 to 1996). For Russian, we used the Russian Reference

Corpus, a corpus of about 50 million words.

1

Since these corpora are relatively large, to save disk space and processing time we

decided to remove all function words from the texts. This was done on the basis of a

list of approximately 600 German and another list of about 200 English function

words. These lists were compiled by looking at the closed class words (mainly arti-

cles, pronouns, and particles) in an English and a German morphological lexicon (for

details see [6]) and at word frequency lists derived from our corpora. In the case of

the Russian Reference Corpus, part-of-speech information was given for all words,

and function words were removed on this basis. By eliminating function words, we

assumed we would lose little information: Function words are often highly ambiguous

and their co-occurrences are mostly based on syntactic instead of semantic patterns.

We also decided to lemmatize our corpora. Since we were interested in the simi-

larities of base forms only, it was clear that lemmatization would be useful as it re-

duces the sparse-data problem. For English and German we conducted a partial lem-

matization procedure that was based only on a morphological lexicon and did not take

the context of a word form into account. This means that we could not lemmatize

those ambiguous word forms that can be derived from more than one base form.

However, this is a relatively rare case. (According to [6], 93% of the tokens of a

German text had only one lemma.) In the case of Russian, no special processing was

necessary since lemma information was already given in the corpus as provided by

Serge Sharoff.

3 TOEFL data for evaluation

As described in Rapp [11], it is desirable to evaluate the results of the different al-

gorithms for computing semantic similarity. For doing so, many possibilities can be

1

See http://corpus.leeds.ac.uk/serge/bokrcorpora/index-en.html. This corpus was kindly pro-

vided by Serge Sharoff.

72

thought of and have been applied in the past. For example, [2] used available

dictionaries as a gold standard, Lin [7] compared his results to WordNet, and [4] used

experimental data taken from the synonym portion of the Test of English as a Foreign

Language (TOEFL). As described by Turney [17], the advantage of the TOEFL data

is that it has gained considerable acceptance among researchers.

The TOEFL is an obligatory test for non-native speakers of English who intend to

study at a university with English as the teaching language. The data used by Lan-

dauer & Dumais had been acquired from the Educational Testing Service and com-

prises 80 test items. Each item consists of a problem word embedded in a sentence

and four alternative words, from which the test taker is asked to choose the one with

the most similar meaning to the problem word. For example, given the test sentence

“Both boats and trains are used for transporting the materials” and the four alterna-

tive words planes, ships, canoes, and railroads, the subject would be expected to

choose the word ships, which is supposed to be the one most similar to boats.

Landauer & Dumais [4] found that their algorithm for computing semantic

similarities between words has a success rate comparable to the human test takers

when applied to the TOEFL synonym test. Whereas the algorithm got 64.4% of the

questions right (i.e. the correct solution obtained the best rank among the four alter-

native words), the average success rate of the human subjects was 64.5%.

2

Other

researchers were able to improve the performance to 69% [9], 72% [13], 74% [16]

and 81.25% [15]. This gives the impression that the quality of the simulation is above

human level.

However, it has often been overlooked that the 64.5% performance figure

achieved by the test takers relates to non-native speakers of English, and that native

speakers perform significantly better. On the other hand, the simulation programs are

usually not designed to make use of the context of the test word, so they neglect some

information that may be useful for the human subjects.

In order to approach both issues, we presented a test sheet with the TOEFL test

words, together with the alternative words, but without the sentences, to five native

and five non-native speakers of English, drawn from staff at the Macquarie University

in Sydney [11] We asked them to select among the alternative words the one that,

according to their personal judgment, was closest in meaning to the given word. Two

of the native speakers got all 80 items correct, another two got 78 correct, and one got

75 correct. As expected, the performance of the non-native speakers was considerably

worse. Their numbers of correct choices were 75, 70, 69, 67, and 66.

On average, the performance of the native speakers was 97.75%, whereas the per-

formance of the non-native speakers was 86.75%. Remember that the performance of

the non-native speakers in the TOEFL test, although they had the context of each test

word as an additional clue, was only 64.5%. The discrepancy of more than 20% be-

tween our non-native speakers and the TOEFL test takers can be explained by the fact

that most of our subjects had spent many years in English speaking countries and thus

had a language proficiency far above average. More importantly, our native speakers’

results indicate that the performance of the above mentioned algorithms is clearly

below human performance. So the impression from the Landauer & Dumais [4] paper

that human-like quality has been obtained is obviously wrong unless one only looks at

second language learners with a relatively poor proficiency.

2

This performance figure was provided by the Educational Testing Service, with the number of

test takers being unknown.

73

As we did not have any data comparable to the TOEFL synonym test for German

and Russian, the evaluation was only conducted for English.

4 Algorithm

Our algorithm is a modified version of Landauer & Dumais [4] and consists of the

following four steps (see also [10, 11]):

1. Counting word co-occurrences

2. Applying an association measure to the raw counts

3. Singular value decomposition

4. Computing vector similarities

4.1 Counting Word Co-occurrences

For counting word co-occurrences, as in most other studies a fixed window size is

chosen and it is determined how often each pair of words occurs within a text window

of this size. Choosing a window size usually means a trade-off between two parame-

ters: specificity versus the sparse-data problem. The smaller the window, the more

salient the associative relations between the words inside the window, but the more

severe the sparse data problem. In our case, with ±2 words, the window size looks

rather small. However, this can be justified since we have reduced the effects of the

sparse data problem by using a large corpus and by lemmatizing the corpus. It also

should be noted that a window size of ±2 applied after elimination of the function

words is comparable to a window size of ±4 applied to the original texts (assuming

that roughly every second word is a function word).

Based on the window size of ±2, we computed the co-occurrence matrix for the

corpus. By storing it as a sparse matrix, it was feasible to include all of the approxi-

mately 375 000 lemmas occurring in the BNC.

4.2 Applying an Association Measure

Although semantic similarities can be successfully computed based on raw word co-

occurrence counts, the results can be improved when the observed co-occurrence-fre-

quencies are transformed by some function that reduces the effects of different word

frequencies. For example, by applying a significance test that compares the observed

co-occurrence counts with the expected co-occurrence counts (e.g. the log-likelihood

test) significant word pairs are strengthened and incidental word pairs are weakened.

Other measures applied successfully include TF/IDF and mutual information. In the

remainder of this paper, we refer to co-occurrence matrices that have been trans-

formed by such a function as association matrices. However, in order to further im-

prove similarity estimates, in this study we apply a singular value decomposition

(SVD) to our association matrices (see section 4.3). To our surprise, our experiments

clearly showed that the log-likelihood ratio, which was the transformation function

that gave good similarity estimates without SVD [9], was not optimal when using

74

SVD. Following Landauer & Dumais [4], we found that with SVD some entropy-

based transformation function gave substantially better results than the loglikelihood

ratio. This is the formula that we use:

⎟

⎠

⎞

⎜

⎝

⎛

−⋅+=

∑

k

kjkjijij

ppfA )log()1log(

with

j

kj

kj

c

f

p =

Hereby f

ij

is the co-occurrence frequency of words i and j and c

j

is the corpus fre-

quency of word j. Indices i, j, and k all have a range between one and the number of

words in the vocabulary n. The right term in the formula (sum) is entropy. As usual

with entropy, it is assumed that 0 log(0) = 0. The entropy of a word reaches its maxi-

mum of log(n) if the word co-occurs equally often with all other words in a vocabu-

lary, and it reaches its minimum (zero) if it co-occurs only with a single other word.

Let us now look at how the formula works. The important part is taking the loga-

rithm of f

ij

thus dampening the effects of large differences in frequency. Adding 1 to

f

ij

provides some smoothing and prevents the logarithm from becoming infinite if f

ij

is

zero. A relatively modest, but noticeable improvement (in the order of 5% when

measured using the TOEFL-data) can be achieved by multiplying this by the entropy

of a word. This has the effect that the weights of rare words that have only few (and

often incidental) co-occurrences are reduced.

Note that this is in contrast to Landauer & Dumais [4]who suggest not to multiply

but to divide by entropy. The reasoning is that words with a salient co-occurrence

distribution should have stronger weights than words with a more or less random

distribution. However, as shown empirically, in our setting multiplication leads to

clearly better results than division.

4.3 Singular Value Decomposition

Landauer & Dumais [4] showed that the results can be improved if before computing

semantic similarities the dimensionality of the association matrix is reduced. An

appropriate mathematical method to do so is singular value decomposition. As this

method is rather sophisticated, we can not go into the details here. A good description

can be found in Landauer & Dumais [4]. The essence is that by computing the

Eigenvalues of a matrix and by truncating the smaller ones, SVD allows to signifi-

cantly reduce the number of columns, thereby (in a least squares sense) optimally pre-

serving the Euclidean distances between the lines [14].

For computational reasons, we were not able to conduct the SVD for a matrix of

all 374,244 lemmas occurring in the BNC.

Therefore, we restricted our vocabulary to

all lemmas with a BNC frequency of at least 20. To this vocabulary all problem and

alternative words occurring in the TOEFL synonym test were added. This resulted in

a total vocabulary of 56,491 words. In the association matrix corresponding to this vo-

cabulary all 395 lines and 395 columns that contained only zeroes were removed

which led to a matrix of size 56,096 by 56,096.

By using a version of Mike Berry’s SVDPACK

3

software that had been modified

by Hinrich Schütze, we transformed the 56,096 by 56,096 association matrix to a ma-

trix of 56,096 lines and 300 columns. This smaller matrix has the advantage that all

subsequent similarity computations are much faster. As discussed in Landauer &

3

http://www.netlib.org/svdpack/

75

Dumais [4], the process of dimensionality reduction, by combining similar columns

(relating to words with similar meanings), is believed to perform a kind of gen-

eralization that is hoped to improve similarity computations (even critics concede at

least a smoothing effect).

4.4 Computation of Semantic Similarity

The computation of the semantic similarities between words is based on comparisons

between their dimensionality reduced association vectors. Our experience is that the

sparse data problem is usually by far not as severe for the computation of vector

similarities (second-order dependency) as it is – for example – for the computation of

mutual information (first-order dependency). The reason is that for the computation of

vector similarities a large number of association values are taken into account, and

although each value is subject to a sampling error, these errors tend to cancel out over

the whole vector. Since association measures such as mutual information usually only

take a single association value into account, this kind of error reduction cannot be ob-

served here.

For vector comparison, among the many similarity measures found in the litera-

ture, we decided to use the cosine coefficient. The cosine coefficient computes the

cosine of the angle between two vectors.

5 Results and Evaluation

The processing of the German and the Russian corpus was done in analogy to English

as described above. That is, the size of vocabulary has been chosen to be in the same

order of magnitude,

4

and all parameters remained the same. Only for Russian the

number of dimensions was chosen to be 250 instead of 300. The reason is that the

Russian corpus is considerably smaller than the English and the German ones. It

therefore carries less information and requires fewer dimensions.

5

To give a first impression, Table 1 shows the top most similar words to a few English

examples as computed using SVD, the cosine-coefficient, and a vocabulary of 56,096

words. Although these results look plausible, a quantitative evaluation is always de-

sirable. For this reason we used our system for solving the TOEFL synonym test and

compared the results to the correct answers as provided by the Educational Testing

Service. Remember that the subjects had to choose the word most similar to a given

stimulus word from a list of four alternatives. In the simulation, we assumed that the

system made the right decision if the correct answer was ranked best among the four

alternatives. This was the case for 74 of the 80 test items which gives us an accuracy

of 92.5%. For comparison, recall that the performance of our human subjects had

been 97.75% for the native speakers and 86.75% for our highly proficient non-native

4

For German the 39,745 words with a corpus frequency of 100 or higher were chosen, for

Russian the 57,058 words with a frequency above 19.

5

After removal of the function words, the English corpus contained 50,486,400 tokens, the

German corpus 59,307,629, and the Russian corpus 42,792,750.

76

speakers. This means our program’s performance is in between the two levels with

about equal margins towards both sides.

Results analogous to Table 1 are given for German and Russian in Tables 2 and 3.

To make the interpretation easier, for all words appearing in the tables translations are

provided. For the Russian data this has been kindly done by Alexander Perekrestenko.

Note that for Russian the transliteration from the Cyrillic to the Latin alphabet as al-

ready provided by the Russian Reference Corpus has been used.

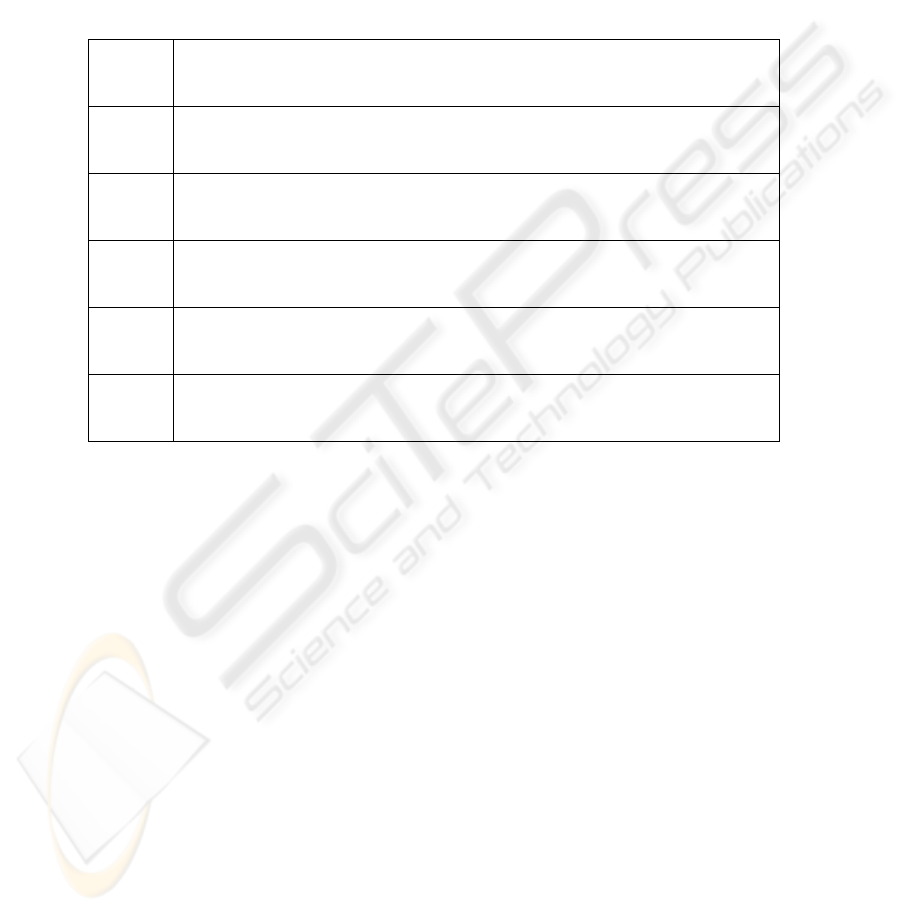

Table 1. Semantic similarities for some English words as computed. The lists are ranked

according to the cosine coefficient.

enor-

mously

greatly (0.52), immensely (0.51), tremendously (0.48), considerably

(0.48), substantially (0.44), vastly (0.38), hugely (0.38), dramatically

(0.35), materially (0.34), appreciably (0.33)

flaw

Shortcomings (0.43), defect (0.42), deficiencies (0.41), weakness (0.41),

fault (0.36), drawback (0.36), anomaly (0.34), inconsistency (0.34),

discrepancy (0.33), fallacy (0.31)

issue

question (0.51), matter (0.47), debate (0.38), concern (0.38), problem

(0.37), topic (0.34), consideration (0.31), raise (0.30), dilemma (0.29),

discussion (0.28)

build

building (0.55), construct (0.48), erect (0.39), design (0.37), create (0.37),

develop (0.36), construction (0.34), rebuild (0.34), exist (0.29), brick

(0.27)

discrep-

ancy

disparity (0.44), anomaly (0.43), inconsistency (0.43), inaccuracy (0.40),

difference (0.36), shortcomings (0.35), variance (0.34), imbalance (0.34),

flaw (0.33), variation (0.33)

essen-

tially

primarily (0.50), largely (0.49), purely (0.48), basically (0.48), mainly

(0.46), mostly (0.39), fundamentally (0.39), principally (0.39), solely

(0.36), entirely (0.35)

6 Discussion

In section 3 the performances of some other systems that also had been evaluated on

the TOEFL synonym test have been given. The best performance of corpus-based

systems we are aware of was reported by Terra & Clarke [15] which is 81.25%. It was

obtained using a terabyte web corpus (53 billion words) and pointwise mutual

information as the similarity measure. Although our corpus is several orders of mag-

nitude smaller, by using SVD with a performance of 92.5% we were able to signifi-

cantly improve on this result, which is an indication that the generalization effect

claimed for SVD actually works in practice. This finding is also confirmed by our

previous performance of only 69% achieved on the BNC without SVD [9].

Given these results, for the task of computing semantically related words it seems

not essential to perform a syntactical analysis beforehand in order to determine the

dependency relations between words, as for example done by Pantel & Lin [8].

Although – depending on the corpus used – the results can be somewhat noisy, it

should be noted that – in case only paradigmatic relations are of interest – there is the

77

possibility of filtering the output lists according to part of speech which should re-

move most of the noise.

But even if no filtering is performed, the results of the fully unsupervised ap-

proach which only relies on algebra largely agree with human intuition. Neglecting

the pre-processing step of partial lemmatization, which essentially served the purpose

of keeping our co-occurrence matrix small enough for SVD processing, no linguistic

resources, neither a lexicon nor syntactic rules, are required. The algorithm considers

any string of characters that is delimited by blanks or punctuation marks as a word,

applies the SVD to an association matrix derived from the co-occurrences of the

words in a corpus, and finally comes up with lists of similar words that highly agree

with human intuitions.

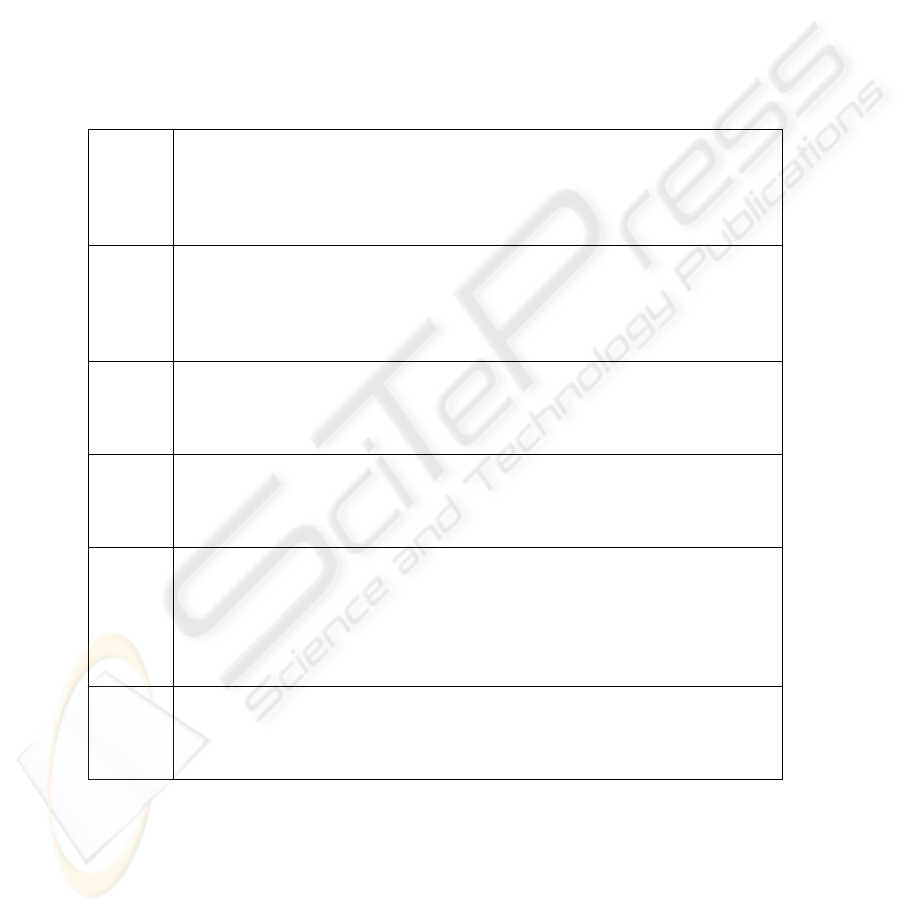

Table 2. Semantic similarities for some German words as computed. For each word, an English

translation is given in square brackets.

ärgerlich

[angry]

peinlich [embarrassing](0.51), bedauerlich [regrettable] (0.47),

unangenehm [unpleasant] (0.45), empörend [infuriating] (0.44),

bedenklich [precarious] (0.42), ärgern [to annoy] (0,41), unverständlich

[incomprehensible] (0.39), nerven [to annoy] (0.39), skandalös

[scandalous] (0.39), deprimieren [to depress] (0.38)

Dar-

steller

[per-

former]

Schauspieler [actor] (0.64), Regisseur [director] (0.57), Sänger [vocalist]

(0.56), Hauptrolle [leading part] (0.55), inszenieren [to stage-manage]

(0.49), Filmemacher [moviemaker] (0.49), Tänzer [dancer] (0.48),

Choreograph [choreographer] (0.47), Komödie [comedy] (0.47), filmen

[filming] (0.45)

dennoch

[never-

theless]

gleichwohl [nonetheless] (0.76), trotzdem [although] (0.64), indes

[however] (0,60), zwar [indeed] (0.59), allerdings [but] (0.57), deshalb

[therefore] (0.56), immerhin [anyhow] (0.56), freilich [sure enough]

(0.55), indessen [meanwhile] (0.54), zudem [furthermore] (0.51)

Gesang

[sing-

ing]

Lied [song] (0.62), singen [to sing] (0.53), Klang [sound] (0.52), Melodie

[melody] (0.49), Musik [music] (0.47), Trommeln [drumming] (0.46),

Orgel [organ] (0.46), Hymnus [hymn] (0.46), Arie [aria] (0.45), Ballade

[ballad] (0.45)

Magi-

strat

[magi-

strate]

Stadtparlament [city parliament] (0.60), Stadtverordnetenversammlung

[city council meeting] (0.58), Stadtrat [city council] (0.55), Stadtver-

ordnete [city councillor] (0.48), Gemeinderat [municipal council] (0.48),

Abgeordnetenhaus [house of representatives] (0.46), Bürgermeisterin

[mayoress] (0.46), Kreistag [district council] (0.45), Bürgerschaft

[citizenship] (0.41), Stadtoberhaupt [mayor] (0.41)

Spott

[ridi-

cule]

Häme [malice] (0.54), Empörung [outrage] (0.46), Neid [enviousness]

(0.43), Mitleid [compassion] (0.42), Unverständnis [lack of under-

standing] (0.41), Polemik [polemic] (0.41), Wut [fury] (0.41), Zorn

[anger] (0.41), ironisch [ironical] (0.39), Unmut [displeasure] (0.39)

78

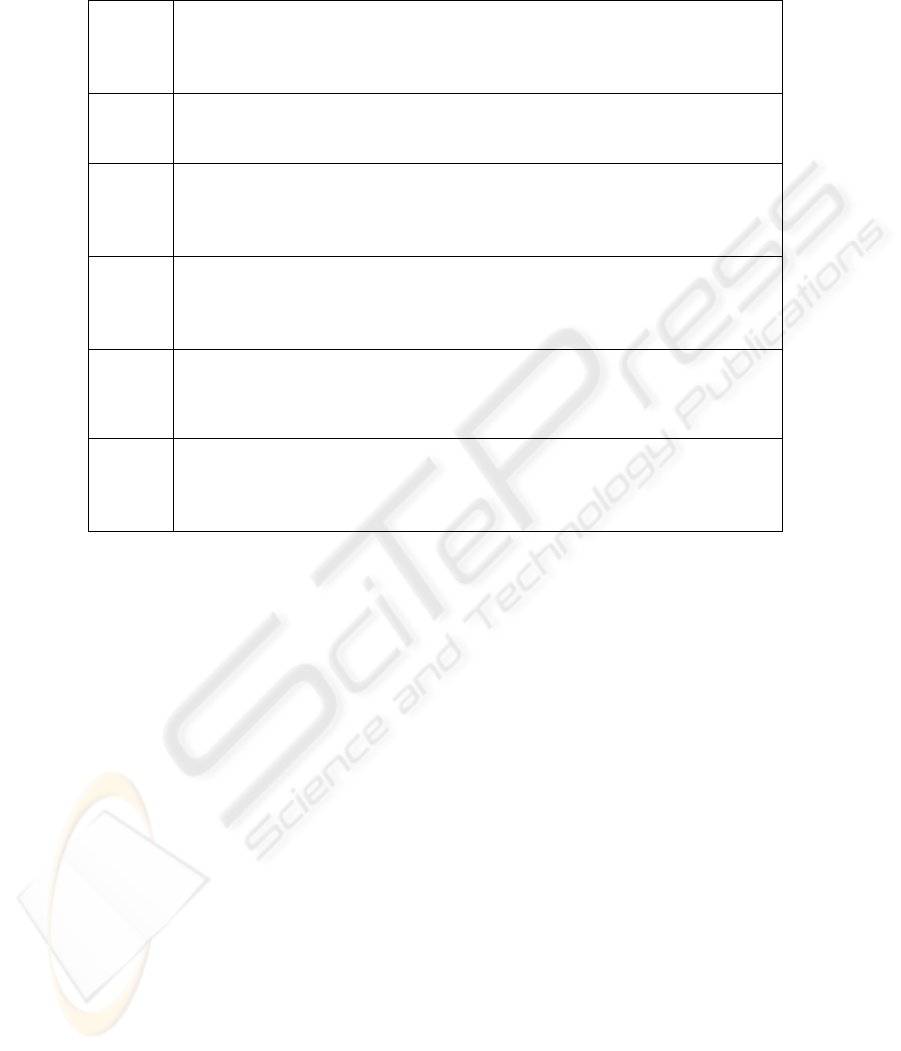

Table 3. Semantic similarities for some Russian words as computed. For each word, an English

translation is given in square brackets.

festivalq

[festi-

val]

koncert [concert] (0.62), vystavka [exhibition] (0.58), spektaklq

[performance, show] (0.57), teatr [theater] (0.52), balet [ballet] (0.51),

konkurs [competition] (0.49), forum [forum] (0.49), opera [opera] (0.49),

gastrolq [(performance) tours] (0,49), kamernyj [chamber (adj.)] (0.48)

'dolzh-

nostq'

[post]

post [post] (0.59), zvanie [rank] (0.49), naznachatq [to appoint] (0.46),

otstavka [resignation] (0.46), naznachenie [appointment] (0.45), oklad

[salary] (0.45), uvolqnjatq [to fire] (0.42)

dom

[house]

kvartira [flat] (0.59), ulica [street] (0.54), zdanie [building] (0.54), dvor

[garden] (0.53), gorod [town] (0.48), komnata [room] (0.45), domik

[small house] (0.43), dacha [summer cottage] (0.39), semqja [family]

(0.38), derevnja [village] (0.38)

doma-

shnij

[dome-

stic]

domashnie [domestic] (0.55), koshka [cat] (0.32), zhivotnoe [animal]

(0.31), kompqjuter [computer] (0.31), privychnyj [accustomed] (0.30),

ujut [coziness] (0.29), kuxnja [kitchen] (0.29), xozjajstvo [facilities]

(0.28), piwa [food] (0.27), kurica [hen] (0.27)

begatq

[to run]

xoditq [to walk] (0.53), prygatq [to jump] 0.53, gonjatq [to race] (0.52),

metatqsja [to race in panic] 0.50, nositqsja [to rush] (0.49), broditq [to

wander] (4.5), gonjatqsja [to chase] (0.40), pobezhalyj [the color of a

burnt steel] (0.40), polzatq [to creep] (0,40), chistitq [to clean] (0.40)

davno

[long

ago]

pora [period] (0.55), nedavno [recently] (0.46), davnym-davno [very long

ago] (0.44), uzkij [narrow] (0,43), sej [sow! (imperative)] (0,42), kogda-to

[once upon a time] (0.39), navsegda [forever] (0.38), nikogda [never]

(0.38), rano [early] 0.35, teperq [now, nowadays] 0.34

7 Summary and Prospects

We have presented a statistical method for the corpus-based automatic computation of

related words which has been evaluated on the TOEFL synonym test. Its performance

on this task favorably compares to other purely corpus-based approaches and suggests

that sophisticated and language dependent syntactic processing is not essential.

The automatically generated sample thesauri of related words for English, German

and Russian, each comprising in the order of 50,000 entries, are freely available from

the author. Although, unlike other thesauri, at the current stage they do not distinguish

between different kinds of relationships between words, there is one advantage over

manually created thesauri: Given a certain word, not only a few related words are

listed. Instead, all words of a large vocabulary are ranked according to their similarity

to the given word. Since even at the higher ranks the distinctions obtained seem

meaningful, this is an important feature that is indispensable for some kinds of ma-

chine processing, e.g. for word sense disambiguation and induction.

Future work that we envisage includes applying our method to corpora of other

languages, adding multi-word units to the vocabulary, and to find solutions to the

problem of word ambiguity that has not been dealt with here.

79

Acknowledgements

This research was supported by the German Research Society (DFG) and by a Marie

Curie Intra-European Fellowship within the 6th European Community Framework

Programme. The evaluation part of this research was conducted during my stay at the

Centre for Language Technology of Macquarie University, Sydney. I would like to

thank Robert Dale and his research group for the pleasant cooperation. Many thanks

also go to Serge Sharoff and Alexander Perekrestenko for their support of this work.

References

1. Burnard, L.; Aston, G. (1998). The BNC Handbook: Exploring the British National Corpus

with Sara. Edinburgh University Press.

2. Grefenstette, G. (1994). Explorations in Automatic Thesaurus Discovery. Dordrecht:

Kluwer.

3. Harris, Z.S. (1954). Distributional structure. Word, 10(23), 146–162.

4. Landauer, T. K.; Dumais, S. T. (1997). A solution to Plato’s problem: the latent semantic analysis

theory of acquisition, induction, and representation of knowledge. Psychological Review, 104(2),

211–240.

5. Landauer, T.K.; McNamara, D.S.; Dennis, S.; Kintsch, W. (eds.) (2007). Handbook of Latent

Semantic Analysis. Lawrence Erlbaum.

6. Lezius, W.; Rapp, R.; Wettler, M. (1998). A freely available morphology system, part-of-

speech tagger, and context-sensitive lemmatizer for German. In: Proceedings of COLING-

ACL 1998, Montreal, Vol. 2, 743-748.

7. Lin, D. (1998). Automatic retrieval and clustering of similar words. In: Proceedings of COLING-

ACL 1998, Montreal, Vol. 2, 768–773.

8. Pantel, Patrick; Lin, Dekang (2002). Discovering word senses from text. In: Proceedings of

ACM SIGKDD, Edmonton, 613–619.

9. Rapp, R. (2002). The computation of word associations: comparing syntagmatic and

paradigmatic approaches. Proceedings of 19th COLING, Taipei, ROC, Vol. 2, 821–827.

10. Rapp, R. (2003). Word sense discovery based on sense descriptor dissimilarity. In:

Proceedings of the Ninth Machine Translation Summit, New Orleans, 315–322.

11. Rapp, R. (2004). A freely available automatically generated thesaurus of related words. In:

Proceedings of the Fourth International Conference on Language Resources and

Evaluation (LREC), Lisbon, Vol. II, 395–398.

12. Ruge, G. (1992). Experiments on linguistically based term associations. Information

Processing and Management 28(3), 317–332.

13. Sahlgren, M. (2001). Vector-based semantic analysis: representing word meanings based on

random labels. In: A. Lenci, S. Montemagni, V. Pirrelli (eds.): Proceedings of the ESSLLI

Workshop on the Acquisition and Representation of Word Meaning, Helsinki.

14. Schütze, H. (1997). Ambiguity Resolution in Language Learning: Computational and

Cognitive Models. Stanford: CSLI Publications.

15. Terra, E., Clarke, C.L.A. (2003). Frequency estimates for statistical word similarity

measures. Proceedings of HLT/NAACL, Edmonton, Alberta, May 2003.

16. Turney, P.D. (2001). Mining the Web for synonyms. PMI-IR versus LSA on TOEFL. In:

Proc. of the Twelfth European Conference on Machine Learning, 491– 502.

17. Turney, P.D. (2006). Similarity of Semantic Relations. Computational Linguistics, 32(3),

379–416.

80