POLICY BASED QOS MONITORING

Automated Learning Strategies for Policy Enhancement

Pedro A. Aranda Gutierrez¹, David Wagner², Ilka Miloucheva²

¹Telefonica R&D, Madrid, Spain

²Fraunhofer Institute, Schloss Birlinghoven, Germany

Christof Brandauer, Ulrich Hofmann

Salzburg Research, Austria

Keywords: QoS measurement policy, policy repository, heterogeneous access IP network, learning component, reinforcement learning, supervised learning.

Abstract: A challenge for today's measurement architectures for QoS/SLA monitoring in heterogeneous network environments is to provide enhanced intelligence that minimises measurements and automatically derives optimised measurement strategies for network operators. Such optimisations can pursue different goals: avoiding redundant measurements, sharing measurements among different QoS monitoring goals, and enhancing measurement strategies with respect to QoS/SLA measurement requests. For the automated optimisation of measurement strategies, QoS measurement policies are proposed whose parameters are adapted dynamically by specified learning algorithms and rules. Different kinds of learning, for instance reinforcement learning and supervised learning, can be used for policy adaptation. The integration of the proposed policy based strategies into a policy management architecture is discussed. A learning component is proposed that collects rules and algorithms for measurement policy adaptation and can be used by different tools of a policy management system. Finally, a graphical user interface (GUI) for a realistic policy based measurement scenario, aimed at optimising the measurement strategies of the network operator, is discussed.

1 INTRODUCTION

Advanced architectures for monitoring QoS parameters and Service Level Agreements (SLAs) offer automated measurement facilities and techniques for data mining and analysis of measurement data. Examples of such architectures are CMToolset (Miloucheva et al., 1997), (Hofmann et al., 2001), the INTERMON toolkit (Miloucheva, Aranda, Hetzer and Nassri, 2004), (Miloucheva, Hetzer and Guitierres, 2004), (Miloucheva, Hetzer and Nassri, 2004), and the MoME architecture (Brandauer et al., 2007), (IST-MOME, see ref).

In such architectures, measurement scenarios are used to meet specific requirements for data mining and analysis of measurement data dependencies, for instance:

- Effect of inter-domain routing and BGP-4 protocol behaviour on QoS parameter values (see (Gutierrez et al., 2004)),

- Impact of traffic and congestion on the QoS of applications (see (Miloucheva, Hetzer and Guitierres, 2004)),

- Data mining and dependency analysis (Miloucheva, Hetzer and Nassri, 2004),

- Anomaly detection (Miloucheva, Anzaloni and Müller, 2003),

- Support of proactive and reactive bandwidth planning (Hetzer et al., 2006),

- Optimisation of on-demand multimedia content delivery (Hetzer, Miloucheva and Jonas, 2006).

Although there are different approaches to integrating analysis and modelling facilities for different tasks into QoS/SLA monitoring architectures, the redundant measurements performed with such tools remain a challenge.


A. Aranda Gutierrez P., Wagner D., Miloucheva I., Brandauer C. and Hofmann U. (2007).

POLICY BASED QOS MONITORING - Automated Learning Strategies for Policy Enhancement.

In Proceedings of the Second International Conference on Wireless Information Networks and Systems, pages 275-281

DOI: 10.5220/0002151402750281

Copyright © SciTePress

Even if the measurement goals are different (e.g. bandwidth planning, anomaly detection), redundant measurements may be performed whose results could be inferred from other requested measurements. This needless measurement overhead places a significant load on the network infrastructure.

To avoid this overhead, additional facilities integrated into the QoS/SLA monitoring infrastructure are considered, which analyse the measurement scenarios based on their descriptions and on the dependencies between their results. Such an "intelligent" facility can be designed to optimise the QoS measurements for a given period of time, considering the requirements of the different users and applications for which measurements are performed. In this way, redundant measurements can be avoided and measurement results can be shared among different tasks.
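To make "results that can be inferred from other requested measurements" concrete, the Python sketch below assumes a deliberately narrow coverage rule: a request is redundant when another request measures the same QoS parameter between the same end points, samples at an interval that divides its own with aligned sample times, and spans a time window containing its own, so its samples can be obtained by subsampling. The `MeasurementRequest` type, the coverage rule and the greedy selection are hypothetical illustrations, not the mechanism of any of the cited architectures.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class MeasurementRequest:
    """Hypothetical request: one QoS parameter measured between two end points."""
    name: str
    metric: str        # e.g. "delay", "jitter"
    src: str
    dst: str
    interval_s: int    # sampling interval in seconds
    start: int         # requested time window [start, end) in seconds
    end: int

def covers(a: MeasurementRequest, b: MeasurementRequest) -> bool:
    """Assumed rule: b's samples are a subset of a's, so b can be served by subsampling a."""
    return (a.metric == b.metric and a.src == b.src and a.dst == b.dst
            and b.interval_s % a.interval_s == 0           # b samples less often
            and (b.start - a.start) % a.interval_s == 0    # sample times line up
            and a.start <= b.start and b.end <= a.end)     # window is contained

def minimise(requests: List[MeasurementRequest]) -> Tuple[List[MeasurementRequest], Dict[str, str]]:
    """Greedy selection: returns the requests to execute and, per request, which one serves it."""
    executed: List[MeasurementRequest] = []
    served_by: Dict[str, str] = {}
    # Finer sampling first, then longer windows, so potential covering requests come first.
    for r in sorted(requests, key=lambda q: (q.interval_s, q.start - q.end)):
        cover = next((e for e in executed if covers(e, r)), None)
        if cover is None:
            executed.append(r)
            served_by[r.name] = r.name
        else:
            served_by[r.name] = cover.name
    return executed, served_by

requests = [
    MeasurementRequest("bw_planning", "delay", "A", "B", 10, 0, 3600),
    MeasurementRequest("anomaly", "delay", "A", "B", 60, 600, 1800),
    MeasurementRequest("voip", "jitter", "A", "B", 10, 0, 3600),
]
executed, served_by = minimise(requests)
print([r.name for r in executed])  # ['bw_planning', 'voip']
print(served_by["anomaly"])        # bw_planning
```

The greedy order is a simplification: it removes obviously redundant requests but does not guarantee the smallest possible set of executed measurements in every case.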

In this paper, policies and learning algorithms are used to support the automatic minimisation of measurements and the sharing of measurement results among the requested QoS monitoring tasks. Policies specify which measurements have to be performed for the different users and applications. Learning algorithms analyse the established policies and the corresponding measurement scenarios with the goal of minimising the measurement overhead and sharing measurement results.

This paper discusses design considerations for a policy oriented QoS monitoring architecture that allows measurements to be minimised.

The paper is organised as follows. Section 2 gives a brief overview of QoS/SLA monitoring architectures with integrated data mining functions. Section 3 discusses the general learning approach for optimising the suite of measurement scenarios and their parameters. Section 4 presents the design of a learning component in a policy based measurement system. Section 5 describes a scenario based on measurement policies for the optimisation of measurement strategies.

2 POLICY BASED QOS/SLA MONITORING

Advanced QoS/SLA monitoring architectures aim to automate measurements and their analysis for specific tasks. Examples of such architectures are CMToolset (Miloucheva et al., 1997), (Hofmann et al., 2001), INTERMON (Miloucheva, Aranda, Hetzer and Nassri, 2004), (Miloucheva, Hetzer and Guitierres, 2004), (Miloucheva, Hetzer and Nassri, 2004), MoMe (Brandauer et al., 2007), (IST-MOME, see ref), Skitter (CAIDA's Skitter project web page), Surveyor (Kalidindi, 1999) and SPAND (Seshan et al., 1997).

QoS/SLA monitoring architectures can be based on active or passive measurement scenarios, whose results are stored in appropriate measurement data repositories for further processing.

A growing problem of such architectures is the large volume of measurement data and the considerable measurement overhead, which consumes resources of the network infrastructure.

One approach to this problem is to design the measurement data repositories as Very Large Databases (VLDBs), occupying magnetic storage in the terabyte range and containing billions of table rows, and to improve the efficiency of operations on the measurement database (Gray, 2004).

This paper proposes another approach, based on QoS monitoring whose measurements are specified using policies (goals) at different refinement levels.

Examples of policy actions, which are invoked when specific events or conditions occur, are:

- VoIP quality measurement between two end systems;

- Traffic load monitoring at a specific router, when the router is considered for traffic forwarding;

- Monitoring of routing path anomalies.

Policies are defined by sequences of conditions and actions. In policy based QoS/SLA monitoring, measurement policies are described by actions that include measurement scenarios:

P: <condition> <action>
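A minimal Python sketch of the P: <condition> <action> form, assuming a policy is simply a pair of functions: a predicate over an incoming network event and an action that returns the measurement request to issue. The event fields (`type`, `src`, `dst`, `router`), the `Policy` type and the request strings are hypothetical; they only mirror the example actions listed above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Event = Dict[str, object]

@dataclass
class Policy:
    name: str
    condition: Callable[[Event], bool]   # <condition>
    action: Callable[[Event], str]       # <action>: returns a measurement request

def evaluate(policies: List[Policy], event: Event) -> List[str]:
    """Run the action of every policy whose condition holds for the event."""
    return [p.action(event) for p in policies if p.condition(event)]

policies = [
    Policy("voip_quality",
           condition=lambda e: e.get("type") == "call_setup",
           action=lambda e: f"measure VoIP quality {e['src']} -> {e['dst']}"),
    Policy("router_load",
           condition=lambda e: e.get("type") == "route_change",
           action=lambda e: f"monitor traffic load at {e['router']}"),
]

print(evaluate(policies, {"type": "route_change", "router": "R3"}))
# ['monitor traffic load at R3']
```

Keeping conditions and actions as separate callables reflects the condition/action split of the policy model: a GUI or a learning algorithm can change one side without touching the other.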

Policies can be specified using user-friendly Graphical User Interfaces (GUIs), similar to the GUIs of existing measurement architectures. The QoS/SLA monitoring GUI translates the input parameters into policy descriptions, which can be processed more effectively based on the “condition, action” relationships.

Learning algorithms can be integrated into the policy monitoring architecture in order to improve the policies, avoid repeated measurements and support the sharing of measurement data.

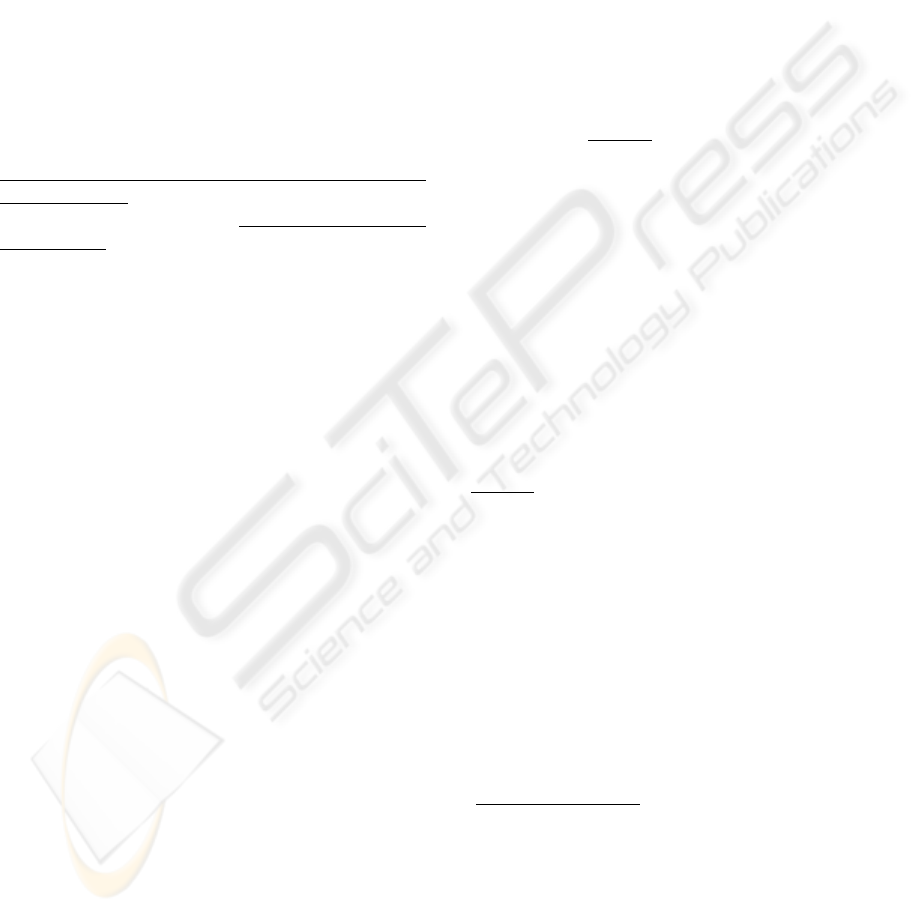

The policy based QoS/SLA monitoring architecture is shown in figure 1:

WINSYS 2007 - International Conference on Wireless Information Networks and Systems


[Figure: GUI for policy based QoS/SLA monitoring; measurement policies (goals) specified by conditions and actions; monitoring database including measurement scenarios; learning for measurement minimisation; measurement tools and technologies.]

Figure 1: Policy based QoS/SLA monitoring.

3 MEASUREMENT POLICIES AND THEIR ADAPTATION

The measurement policy model is derived from the IETF policy framework and enhanced with concepts for automated learning and adaptation.

3.1 QoS Measurement Policies

Network management policies are considered as rules to administer, manage and control access to network resources by applications and users (see RFC 3198 (Westerinen et al., 2001)). Policies express business goals and consist of conditions and actions for managing network parameters (Moore et al., 2001), (Moore, 2003), (Sahita et al., 2003).

In the IETF, QoS management is addressed by the QoS Policy Information Model (QPIM) (Snir et al., 2003). QoS policies mainly focus on the management of IntServ and DiffServ resource allocation by the network administrator. The IETF has also addressed the policy based management of network device QoS datapath mechanisms (Moore et al., 2004).

This paper considers a new type of QoS management policy, aimed at managing measurement strategies.

QoS measurement policies aim to configure and/or adapt QoS/SLA measurements in heterogeneous network environments depending on events, network capabilities and preferences provided by the different actors (i.e. users, service providers and/or network operators).

Each network provider has QoS measurement policies to measure and report the quality of the network with respect to the QoS/SLA. Measurement policies can be used to select appropriate measurements for tasks such as proactive QoS planning and QoS problem and anomaly detection.

The proposed measurement policies introduce the following new aspects with respect to the current IETF framework:

1. The focus of the policy actions is the configuration of measurement scenarios and the corresponding measurement tools. This includes control of the parameters of measurement scenarios, as well as of the set of measurement scenarios required to provide a measurement action.

2. Measurement policies can be defined for different kinds of policy actors (i.e. users, service providers and network operators). The relationships between the policy actors can be used to infer adaptations of policy parameters.

Measurement policies can be specified in a user-oriented language as “high level” goals, which are translated into executable procedures and corresponding data structures.

P_meas: <meas_condition> <meas_action>

<meas_condition>: <netw_event> | <actor_preference>

<netw_event>: <congestion> | <failure> | <anomaly> | <learning_event>

<meas_action>: "set" <meas_scenario> | "ref" <meas_scenario> | "update" <meas_scenario>

The definition shows the structure of measurement policy conditions (<meas_condition>) and actions (<meas_action>).

The measurement action can be:

- Establishment of a new measurement scenario (“set” <meas_scenario>),

- Reference to an existing measurement scenario (“ref” <meas_scenario>), or

- Update of the parameters of a measurement scenario (“update” <meas_scenario>).
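One possible reading of the three action types is sketched below in Python, assuming scenarios are kept in a dictionary-based repository keyed by a scenario identifier: "set" creates a scenario, "ref" reuses an existing one without starting a new measurement, and "update" changes selected parameters of an existing one. The repository layout, the `apply_action` function and the parameter names are hypothetical.

```python
from typing import Dict, Optional

Scenario = Dict[str, object]
Repository = Dict[str, Scenario]

def apply_action(repo: Repository, verb: str, scen_id: str,
                 params: Optional[Scenario] = None) -> Scenario:
    """Apply a "set", "ref" or "update" measurement action to a scenario repository."""
    params = params or {}
    if verb == "set":
        if scen_id in repo:
            raise ValueError(f"scenario {scen_id!r} already exists")
        repo[scen_id] = dict(params)          # establish a new measurement scenario
    elif verb in ("ref", "update"):
        if scen_id not in repo:
            raise KeyError(scen_id)           # both verbs need an existing scenario
        if verb == "update":
            repo[scen_id].update(params)      # change selected scenario parameters
        # "ref" reuses the scenario as it is: no new measurement is started
    else:
        raise ValueError(f"unknown measurement action {verb!r}")
    return repo[scen_id]

repo: Repository = {}
apply_action(repo, "set", "delay_A_B", {"meas_par": "delay", "interval_s": 60})
apply_action(repo, "update", "delay_A_B", {"interval_s": 10})
print(apply_action(repo, "ref", "delay_A_B"))  # {'meas_par': 'delay', 'interval_s': 10}
```

In this reading, "ref" is what makes sharing possible: a second policy that references an existing scenario adds no measurement load.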

Measurement scenarios can be represented abstractly by the following expression:

<meas_scenario>: <tool> <meas_par> <meas_result> <meas_topology> <time_spec> <meas_param>

The expression gives the usual configuration parameters of a measurement scenario:


- Tools (<tool>) used for the measurements and their installation parameters, which can depend on the network;

- Measured application QoS parameters (<meas_par>), for instance delay, traffic and response time;

- Measurement result (<meas_result>), specified by the required granularity of the measurements and other parameters;

- Measurement topology (<meas_topology>), which specifies the network elements between which the measurements are performed;

- Scenario execution time (<time_spec>), which specifies the frequency and the time interval in which the scenario is executed.

By analysing the dependencies between the measurement scenario parameters and appropriately changing specific parameters of the scenarios, the measurements performed by the QoS/SLA monitoring architecture can be minimised.
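The scenario expression can be mirrored by a small Python data type. The merge rule below is an assumed example of "changing specific parameters" to minimise measurements: two scenarios that use the same tool, measure the same parameter over the same topology and have overlapping execution windows are replaced by one scenario with the finer result granularity and the combined window. The `MeasScenario` fields, the tool name `probe_tool` and the merge rule are hypothetical, and the sketch assumes coarser results can be aggregated from finer samples.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple

@dataclass(frozen=True)
class MeasScenario:
    tool: str                   # <tool>
    meas_par: str               # <meas_par>, e.g. "delay"
    granularity_s: int          # <meas_result>: required result granularity in seconds
    topology: Tuple[str, str]   # <meas_topology>: end points of the measurement
    window: Tuple[int, int]     # <time_spec>: execution interval [start, end) in seconds

def merge(a: MeasScenario, b: MeasScenario) -> Optional[MeasScenario]:
    """Return one scenario serving both a and b, or None if they cannot share measurements."""
    if (a.tool, a.meas_par, a.topology) != (b.tool, b.meas_par, b.topology):
        return None
    if a.window[1] < b.window[0] or b.window[1] < a.window[0]:
        return None             # separated execution windows: nothing to share
    # Assumes coarser results can later be aggregated from the finer samples.
    return replace(
        a,
        granularity_s=min(a.granularity_s, b.granularity_s),
        window=(min(a.window[0], b.window[0]), max(a.window[1], b.window[1])),
    )

s1 = MeasScenario("probe_tool", "delay", 60, ("A", "B"), (0, 3600))
s2 = MeasScenario("probe_tool", "delay", 10, ("A", "B"), (1800, 7200))
merged = merge(s1, s2)
print(merged.granularity_s, merged.window)  # 10 (0, 7200)
```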

3.2 Learning for Measurement Policy Optimisation

The policies and their corresponding measurement scenarios can be adapted dynamically in order to support the QoS/SLA monitoring of applications more efficiently and to detect problems in the heterogeneous infrastructure more efficiently.

Parameters of the measurement scenarios can be adapted dynamically using learning techniques. The learning algorithms can vary in complexity and design, building on theoretical approaches from the state of the art (Sutton, 1998), (Bertsekas et al., 1996).

Supervised and reinforcement learning can be used to improve measurement policies:

- Reinforcement learning (Sutton, 1998) is an approach in which an agent dynamically learns the impact of its actions on the environment and automatically improves the policy it follows. Reinforcement learning algorithms learn from interaction with the environment, guided by the rewards it returns. There are different reinforcement learning techniques, such as Q-learning (Watkins et al., 1992), informed reinforcement learning (Croonenborghs et al., 2004) and relational reinforcement learning (Driessens et al., 2002).

- Supervised learning assumes a “teacher signal” that explicitly gives the correct output for every input pattern (Urbancic, 1996). The main task is to learn the mapping of input patterns to target output values.

For measurement policies, reinforcement learning strategies can be used, for example, to automate the search for the most appropriate measurements, thus reducing measurement overhead. Reinforcement learning algorithms that use knowledge from the networking environment and operational events to update the measurement policy parameters can be used to:

- Adapt the measurement topology (meas_topology) of policies based on the actual network topology;

- Change measurement parameters (meas_par) based on congestion, traffic changes and other events derived from the environment.
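To illustrate how Q-learning (Watkins et al., 1992) could adapt a measurement setting from environment feedback, the following Python toy assumes two network states ("normal", "congested"), two candidate sampling intervals (10 s and 60 s), and a hand-made reward that values fine-grained data during congestion and charges a probing cost inversely proportional to the interval. The environment model, the reward function and all constants are hypothetical; only the update rule is the standard tabular Q-learning update with epsilon-greedy exploration.

```python
import random

STATES = ("normal", "congested")
ACTIONS = (10, 60)               # candidate sampling intervals in seconds (assumed)

def reward(state: str, interval: int) -> float:
    """Hypothetical reward: value of fine-grained data under congestion minus probing cost."""
    benefit = 1.0 if (state == "congested" and interval == 10) else 0.0
    cost = 6.0 / interval        # more frequent probes consume more network resources
    return benefit - cost

def next_state(rng: random.Random) -> str:
    """Toy environment: congestion occurs 30% of the time, independent of the measurement."""
    return "congested" if rng.random() < 0.3 else "normal"

def q_learning(steps=5000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=1):
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in STATES}
    state = "normal"
    for _ in range(steps):
        # Epsilon-greedy choice of the sampling interval.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(q[state], key=q[state].get)
        r = reward(state, action)
        nxt = next_state(rng)
        # Tabular Q-learning update.
        q[state][action] += alpha * (r + gamma * max(q[nxt].values()) - q[state][action])
        state = nxt
    return q

q = q_learning()
policy = {s: max(q[s], key=q[s].get) for s in STATES}
print(policy)
```

Because the next state in this toy does not depend on the chosen interval, the learned policy should pick the interval with the best immediate reward in each state: frequent probing (10 s) under congestion and sparse probing (60 s) otherwise.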

Supervised learning algorithms can be used mainly to adapt the parameters of measurement policies considering the dependencies between the policy actors.

For example, the network operator checks the requested measurements defined in the policies of service providers and end users and changes their parameters using a simple learning algorithm. Considering the hierarchical dependencies between actors, supervised learning can also adapt policies by processing the measurement parameters of other policies.
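As a deliberately simple instance of supervised learning, the sketch below assumes the "teacher signal" consists of past operator decisions, each mapping an input pattern (application type, access network) to a chosen measurement scenario. It learns a per-pattern majority vote, falling back to the most frequent label overall for unseen patterns. The features, the scenario labels and the `MajorityVoteLearner` class are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Pattern = Tuple[str, str]          # (application type, access network)
Example = Tuple[Pattern, str]      # (input pattern, scenario chosen by the operator)

class MajorityVoteLearner:
    """Learns input pattern -> measurement scenario from labelled examples."""

    def fit(self, examples: List[Example]) -> "MajorityVoteLearner":
        self.counts: Dict[Pattern, Counter] = defaultdict(Counter)
        for pattern, label in examples:
            self.counts[pattern][label] += 1
        # Fallback for unseen patterns: the most frequent label overall.
        self.default = Counter(label for _, label in examples).most_common(1)[0][0]
        return self

    def predict(self, pattern: Pattern) -> str:
        if pattern in self.counts:
            return self.counts[pattern].most_common(1)[0][0]
        return self.default

examples = [
    (("voip", "wlan"), "voip_quality_10s"),
    (("voip", "wlan"), "voip_quality_10s"),
    (("voip", "wlan"), "delay_60s"),
    (("video", "umts"), "throughput_30s"),
    (("web", "dsl"), "delay_60s"),
    (("web", "wlan"), "delay_60s"),
]
learner = MajorityVoteLearner().fit(examples)
print(learner.predict(("voip", "wlan")))   # voip_quality_10s
print(learner.predict(("video", "wlan")))  # delay_60s (unseen pattern, fallback)
```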

In order to improve the policy specifications, learning can be done in a top-down and a bottom-up manner, considering the hierarchical relationships of the policy actors. Hierarchical relationships of policies can be defined based on the dependencies between the policy actors. For instance, network providers can be interested in monitoring different QoS characteristics, such as QoS parameters, anomalies, traffic measurements, route path quality and others (Miloucheva, Aranda, Hetzer and Nassri, 2004). For the specification of monitoring and measurement tasks, an ontology can be used, which allows the different policy actors to share and access knowledge about the measured QoS.

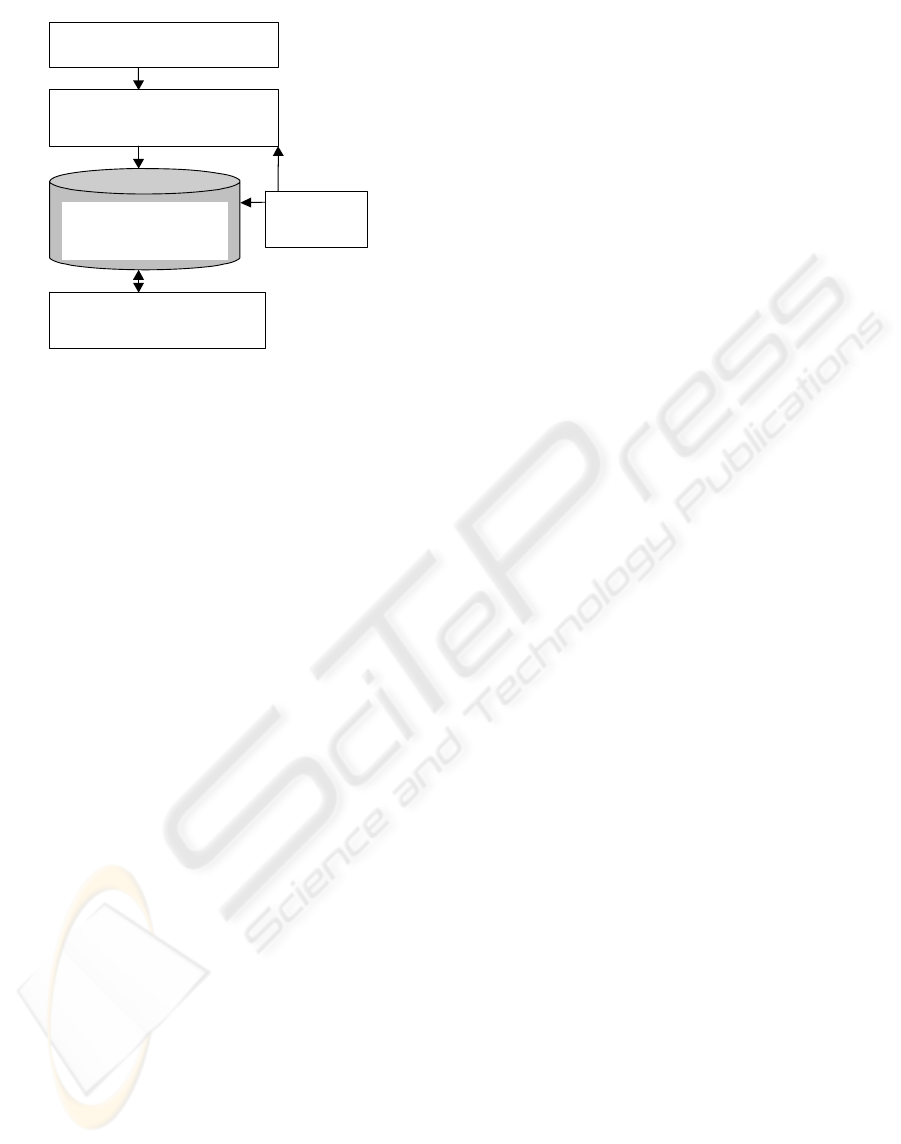

An example is given in figure 2, which shows how the measurement policies of different actors can be improved in a top-down and bottom-up manner using supervised learning methods.


[Figure: top-down and bottom-up learning and adaptation of policies based on the actors' hierarchy, linking network operator, service provider and user policies for QoS measurement; e.g. the network operator updates its set of measurements based on users' and service providers' preferences, and users inherit default measurement policies or select new ones.]

Figure 2: Learning approaches for enhancement of measurement policy.

In the bottom-up learning approach, the network operator checks the requested measurements defined in the policies of the service providers and end users and adapts the parameters of its own measurement policies in order to avoid measurements that are not requested by the policies of the other actors.

In the top-down learning approach, the end users and service providers can also automatically adapt their policies considering the goals of the network operator.
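The two directions can be sketched with Python sets and dictionaries, assuming measurements are identified by strings such as "delay:A-B" and that a user's policy maps each measurement to a sampling interval in seconds. The `mandatory` set (measurements the operator keeps for its own goals) and the rule that top-down adaptation keeps only measurements within the operator's goals are assumptions made for illustration.

```python
from typing import Dict, FrozenSet, Set

def bottom_up(operator: Set[str], requested: Dict[str, Set[str]],
              mandatory: FrozenSet[str] = frozenset()) -> Set[str]:
    """Keep only operator measurements requested by some other actor (or marked mandatory)."""
    wanted = set().union(*requested.values())
    return {m for m in operator if m in wanted or m in mandatory}

def top_down(defaults: Dict[str, int], overrides: Dict[str, int],
             operator_goals: Set[str]) -> Dict[str, int]:
    """User inherits provider defaults, applies its own preferences, and keeps only
    measurements within the operator's goals. Values are sampling intervals in seconds."""
    merged = {**defaults, **overrides}
    return {m: v for m, v in merged.items() if m in operator_goals}

operator = {"delay:A-B", "loss:A-B", "load:R3"}
requested = {"user1": {"delay:A-B"}, "provider1": {"delay:A-B", "jitter:A-B"}}
print(sorted(bottom_up(operator, requested, mandatory=frozenset({"load:R3"}))))
# ['delay:A-B', 'load:R3']

print(top_down({"delay:A-B": 60, "loss:A-B": 60}, {"delay:A-B": 10},
               operator_goals={"delay:A-B", "load:R3"}))
# {'delay:A-B': 10}
```

Note that `bottom_up` only removes measurements; a request the operator does not yet perform (here "jitter:A-B") would have to be handled by a separate "set" action.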

4 LEARNING COMPONENT

For automatic policy adaptation, a learning component including different kinds of learning algorithms can be integrated into policy management architectures.

The learning component can be considered as a collection of learning algorithms used by different functional modules of a policy management system. This allows better management of the adaptations performed by the different kinds of learning in the system.

Currently, the policy based management framework defined by the IETF (Westerinen et al., 2001), (Moore et al., 2001), (Moore, 2003), (Sahita et al., 2003), (Snir et al., 2003) is based on the interaction of the Policy Management Application (PMA), the policy repository containing the policies, the Policy Enforcement Point (PEP) and the Policy Decision Point (PDP).

The integration of the learning component into the IETF policy management architecture is shown in figure 3:

[Figure: IETF policy management architecture with an added learning component.]

Figure 3: IETF policy management architecture enhanced with learning component.

In the enhanced policy management architecture, the learning component integrates learning algorithms that support different levels of the architecture. This means that the learning algorithms contained in a common learning component can be used by the Policy Management Application during the Policy Configuration phase and by the PDP during the Policy Decision phase.

This design supports:

- Enhanced data mining to infer policy changes based on learning;

- Reusability of learning algorithms for different tasks, because the learning algorithms are contained in a common package of modules;

- Common functions for access to and execution of learning procedures used by the different system components (Policy Management Interfaces and Policy Decision Point).
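The common learning component could be as simple as a registry through which both the Policy Management Application and the PDP look up and execute the same algorithms. The `LearningComponent` class and the example `finest_interval` algorithm below are hypothetical illustrations, not part of the IETF framework.

```python
from typing import Callable, Dict, List

class LearningComponent:
    """Hypothetical shared registry of learning algorithms used by the PMA and the PDP."""

    def __init__(self) -> None:
        self._algorithms: Dict[str, Callable[..., object]] = {}

    def register(self, name: str, algorithm: Callable[..., object]) -> None:
        if name in self._algorithms:
            raise ValueError(f"algorithm {name!r} already registered")
        self._algorithms[name] = algorithm

    def run(self, name: str, *args, **kwargs) -> object:
        """Common entry point for executing a learning procedure."""
        return self._algorithms[name](*args, **kwargs)

    def names(self) -> List[str]:
        return sorted(self._algorithms)

component = LearningComponent()
component.register("finest_interval", lambda intervals: min(intervals))

# Policy Management Application, policy configuration phase.
pma_choice = component.run("finest_interval", [60, 10, 30])
# Policy Decision Point, policy decision phase, reusing the same algorithm.
pdp_choice = component.run("finest_interval", [120, 60])
print(pma_choice, pdp_choice)  # 10 60
```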

5 SCENARIOS AND INTERFACE FOR MEASUREMENT POLICY

Consider different policy actors, such as users, service providers and network operators, which use policies to request QoS measurements in a heterogeneous environment. These policy actors can define their measurement strategies for a heterogeneous environment using the interfaces for predefined measurement policy configuration integrated in the Application Preference Manager.

Such an interface of a policy actor (i.e. the GUI of a policy management application), proposed in the framework of the NETQOS project, is shown in figure 4:


Figure 4: Interface for configuration of measurement policies for heterogeneous networks.

The configuration of a measurement policy by a policy actor is based on the specification of the measurement strategy type, the application type and the access networks for which the measurements are performed. The measurement strategy can target, for instance, end-to-end delay and depends on the application type.

Using the proposed interface, end users and service providers can specify their measurement requests to the NETQOS monitoring and measurement subsystem (Brandauer et al., 2007) as policies, which are stored in the repository. Learning algorithms can be used to analyse the set of measurement specifications and derive the most appropriate measurement suite for particular access networks and end systems. Based on the optimised measurement scenarios, the measurement policies of the network providers can be improved.

This optimisation allows the network operator to avoid redundant measurements while still considering requests from users and service providers.

6 CONCLUSIONS

This paper discusses an approach for integrating measurement policies and learning algorithms into existing QoS/SLA monitoring architectures. The proposed policy based measurement approach aims to reduce measurement overhead in the network by detecting redundant measurements and optimising the measurement strategies of network administrators.

Further work is aimed at the design and integration of a QoS measurement ontology, which enables knowledge sharing, modelling and presentation using standardised techniques, as well as formal analysis of the dependencies between measurements.

ACKNOWLEDGEMENTS

This work was supported by the European project NETQOS (IST project, see ref.).

REFERENCES

I. Miloucheva, D. Hetzer, O. Bonnesz, U. Hofmann, "The CM Toolset for Remote Test and Performance Analysis", International Conference for Multimedia Protocols and Systems, Santiago, Chile, Nov. 1997.

U. Hofmann, I. Miloucheva, "Distributed Measurement and Monitoring in IP Networks (CMToolset for AQUILA DiffServ)", IEEE Networking Conference, Orlando, June 2001.

I. Miloucheva, P. A. Aranda Gutierrez, D. Hetzer, A. Nassri, M. Beoni, "INTERMON architecture for complex QoS analysis in inter-domain environment based on discovery of topology and traffic impact", 2nd Workshop on Inter-domain Performance and Simulation, Budapest, March 2004.

I. Miloucheva, D. Hetzer, P. A. Guitierres, "Performance Management for Efficient QoS Provision and Resource Utilisation in Broadband Internet Infrastructure", Broadband Society Workshop, Setubal, Portugal, 24-25 August 2004.

I. Miloucheva, D. Hetzer, A. Nassri, "Data mining approach to study Quality of Voice over IP Applications", Data Mining Conference, Malaga, Spain, September 2004.

C. Brandauer, T. Fichtel, P. Dorfinger, "On the Monitoring- and Measurement subsystem in NETQOS", In Proceedings of Interworking, Santiago, Chile, January 2007.

IST-MOME Monitoring and Measurement Coordination Action, http://www.ist-mome.org.

P. A. Aranda Gutierrez, I. Miloucheva, "Integrating Inter-domain routing analysis in management strategies for large scale IP networks", Next Generation Teletraffic and Wired/Wireless Advanced Networking, Feb. 2004.

I. Miloucheva, A. Anzaloni, E. Müller, "A practical approach to forecast Quality of Service parameters considering outlier", First International Workshop on Inter-domain Performance and Simulation (IPS), Salzburg, 20-21 February 2003.

D. Hetzer, I. Miloucheva, "Adaptable bandwidth planning for enhanced QoS support in user-centric broadband architectures", World Telecommunications Congress (WTC), Budapest, 30 April - 3 May 2006.

D. Hetzer, I. Miloucheva, K. Jonas, "Resource reservation in Advance for Content On-demand Services", Networks Conference, New Delhi, India, Nov. 6-9, 2006.

CAIDA's Skitter project web page, http://www.caida.org/tools/measurement/skitter/

S. Kalidindi, M. J. Zekauskas, "Surveyor: An Infrastructure for Internet Performance Measurements", June 1999.

S. Seshan, M. Stemm, R. H. Katz, "SPAND: Shared Passive Network Performance Discovery", USENIX Symposium on Internet Technologies and Systems, 1997.

J. Gray, "The Revolution in Database Architecture", SIGMOD, Paris, France, 2004.

A. Westerinen, J. Schnizlein, J. Strassner, M. Scherling, B. Quinn, S. Herzog, A. Huynh, M. Carlson, J. Perry, S. Waldbusser, "Terminology for Policy-based Management", RFC 3198, November 2001.

B. Moore, E. Ellesson, J. Strassner, A. Westerinen, "Policy Core Information Model - Version 1 Specification", RFC 3060, February 2001.

B. Moore, "Policy Core Information Model (PCIM) Extensions", RFC 3460, January 2003.

R. Sahita, S. Hahn, K. Chan, K. McCloghrie, "Framework Policy Information Base", RFC 3318, March 2003.

Y. Snir, Y. Ramberg, J. Strassner, R. Cohen, B. Moore, "Policy Quality of Service (QoS) Information Model", RFC 3644, November 2003.

B. Moore, D. Durham, J. Strassner, A. Westerinen, W. Weiss, "Information Model for Describing Network Device QoS Datapath Mechanisms", RFC 3670, January 2004.

R. S. Sutton, A. G. Barto, "Reinforcement Learning: An Introduction", The MIT Press, Cambridge, MA, 1998.

D. P. Bertsekas, J. N. Tsitsiklis, "Neuro-Dynamic Programming", Athena Scientific, Belmont, MA, 1996.

C. J. C. H. Watkins, P. Dayan, "Q-learning", Machine Learning, vol. 8, pp. 279-292, 1992.

T. Croonenborghs, J. Ramon, M. Bruynooghe, "Towards Informed Reinforcement Learning", in Proceedings of the ICML'04 Workshop on Relational Reinforcement Learning, Banff, Canada, 2004.

K. Driessens, S. Dzeroski, "Integrating experimentation and guidance in relational reinforcement learning", in Proceedings of the Nineteenth International Conference on Machine Learning, pp. 115-122, Morgan Kaufmann Publishers Inc., 2002.

T. Urbancic, I. Bratko, C. Sammut, "Learning models of control skills: Phenomena, results and problems", in Proceedings of the 13th Triennial World Congress of the International Federation of Automatic Control, IFAC, 1996.

IST project, "Policy Based Management of Heterogeneous Networks for Guaranteed QoS", www.ist-netqos.org
