TOWARDS OBJECTIVE QUALITY ASSESSMENT OF

IMAGE REGISTRATION RESULTS

Birgit Möller, Rafael Garcia

Department of Electronics, Informatics and Automation

University of Girona, Campus Montilivi, 17003 Girona, Spain

Stefan Posch

Institute of Computer Science, Martin-Luther-University Halle-Wittenberg

Von-Seckendorff-Platz 1, 06099 Halle/Saale, Germany

Keywords:

Image Registration, Quality Assessment, Objective Metric, Voting Schemes, Error Classification.

Abstract:

Geometric registration of visual images is a fundamental intermediate processing step in a wide variety of

computer vision applications that deal with image sequence analysis. 2D motion recovery and mosaicing,

3D scene reconstruction and also motion detection approaches strongly rely on accurate registration results.

However, automatically assessing the overall quality of a registration is a challenging task. In particular,

optimization criteria used in registration are not necessarily closely linked to the final quality of the result and

often show a lack of local sensitivity. In this paper we present a new approach for an objective quality metric

in 2D image registration. The proposed method is based on local structure analysis and employs voting

techniques for error pooling, leading to an objective measure that correlates well with the visual appearance

of registered images. Since observed differences are furthermore classified in more detail according to various

underlying error sources, the new measure not only yields a suitable base for objective quality assessment, but

also opens perspectives towards an automatic and optimally adjusted correction of errors.

1 INTRODUCTION

Image sequence processing is one of the basic ingre-

dients in modern vision-based problem solving. The

analysis of sequences acquired with active, i.e., non-

stationary or rotating cameras, is especially impor-

tant, offering large flexibility for solving a wide vari-

ety of different tasks in a straight-forward and elegant

way. When analysing sequences, one of the first fun-

damental steps is usually the identification of image

contents that are shared between different images of

a sequence, resulting from image overlaps. In partic-

ular, mappings between pixels that represent 2D pro-

jections of the same 3D scene point in pairs of images

are reconstructed. In the literature this identification

of corresponding pixels is usually referred to as 2D

geometric image registration.

The registration of a pair of images is based on a

geometric transformation to align corresponding pix-

els. It is usually provided in terms of a parameterised

model that describes the camera motion between the

images and its effects on the geometric appearance of

each image (Hartley and Zisserman, 2004). During

registration the parameters are estimated according to

a suitable goal function that quantifies the differences

between the images and is to be minimised.

Due to the high relevance of 2D registration in

present-day computer vision, a huge number of

registration techniques have emerged over the years

(Zitová and Flusser, 2003). Generally, goal functions

are either featureless, i.e., based on image intensity

residuals or correlation measures, or feature-based,

i.e., relying on Euclidean distances between the re-

projections of selected image features. Sometimes al-

gorithms working in the frequency domain or resting

upon a probabilistic foundation are also applied.

In general, minimising a goal function leads to an

optimal image alignment according to the chosen op-

timisation criterion and the underlying data. The re-

sults of 2D registration often yield the basis for more

elaborate analysis steps. Consequently, apart from

the pure registration result, an objective assessment of

the final quality is also of significant interest, i.e., the

amount of remaining errors in the pixel-wise align-

ment of both images. However, this is still a big

challenge. Although the various optimisation criteria


Möller B., Garcia R. and Posch S. (2007).

TOWARDS OBJECTIVE QUALITY ASSESSMENT OF IMAGE REGISTRATION RESULTS.

In Proceedings of the Second International Conference on Computer Vision Theory and Applications - IFP/IA, pages 233-240

Copyright © SciTePress

used within image registration may give valuable cues

regarding the final accuracy of the pixel-wise image

alignment, they often show a lack of local sensitivity.

As an example, consider the well-known Mean

Squared Error (MSE) widely used as a distance mea-

sure in featureless image registration (cf. Eq. 1).

As has been demonstrated several times in the

literature (Wang et al., 2004), image pairs showing the

same MSE may appear completely different accord-

ing to visual differences and registration quality. This

renders an objective evaluation impossible. Conse-

quently, registration quality assessment is nowadays

often left to the user for visual inspection. Apart from

some works aiming at the analysis of theoretical qual-

ity bounds of feature-based registration techniques

(e.g., (Weng et al., 1989)), only very few approaches

towards an automatic quality assessment have been

published so far (e.g., (Kim et al., 2000)). Therefore,

more sophisticated metrics are highly recommended.

In this paper we present first steps towards over-

coming this lack of existing metrics for an objective

assessment of registration quality. We propose a new

measure that better correlates with the real quality of a

registration result as visually experienced by humans.

Our metric primarily aims at an automatic assessment

of registration results, subsuming the detection of re-

maining differences between images. This is an im-

portant prerequisite to obtain robust and reliable

results in subsequent analysis steps. In the longer term, the

metric may also guide the development of enhanced

algorithms for image registration itself.

The quality of an image registration result is basi-

cally defined by errors within the pixel-wise geomet-

ric alignment of two images that cause intensity resid-

uals between the images. Consequently, analysing

these residuals yields a suitable starting point for the

investigation of quality metrics with high local sensi-

tivity. However, in doing so it turns out that intensity

differences have to be interpreted carefully. Not all

detected residuals are directly related to registration

failures. There are additional underlying sources of

errors that also have a considerable impact on the fi-

nal result. Since each of these sources shows its own

characteristics and requires an individual compensation

strategy, an explicit separation is essential.

Our goal to meet these requirements led to the

development of a detailed taxonomy of differences and

underlying sources in image registration that guides

the development of our new quality metric. Basically,

six classes of differences between registered images

were identified and further grouped into two main

categories: registration errors, which are directly re-

lated to the geometric registration, i.e., a misalign-

ment of corresponding pixels, and visual errors, that

subsume remaining intensity differences between cor-

rectly aligned pixels and mainly influence the visual

appearance of accurately registered images.

Based on the results of these exploratory stud-

ies, our new quality metric aims at accounting for

these different error categories during quality assess-

ment. Therefore, it extends common image quality

measures like those used within the context of assess-

ing loss of quality in image compression into the new

field of image registration. The main advantage of

the new metric is a high local sensitivity achieved by

focusing on pixel-wise quality criteria. In addition,

widely used global averaging schemes are replaced

with voting-based strategies for error pooling.

The remainder of this paper is organised as fol-

lows. In Section 2 principal ideas of registration qual-

ity assessment and the developed taxonomy of image

differences in registration are outlined. Subsequently,

the state-of-the-art in image quality assessment is dis-

cussed (Sec. 3), yielding the base for our develop-

ments. The new voting-based metric is the subject of

Section 4. Section 5 presents results of our approach

while Section 6 contains conclusions and an outlook.

2 IMAGE DIFFERENCES

The aim of our work is to develop objective met-

rics for assessing the quality of image registration re-

sults. Usually, the quality is directly related to pixel-

wise differences between images still observable af-

ter model parameter estimation and alignment. We

start our investigations with a detailed analysis of ef-

fects influencing such differences. This leads to the

development of a taxonomy of sources for image

differences in registration which is well-suited to guide

the investigation of our new quality metric (Sec. 4).

2.1 A Taxonomy of Image Differences

Differences between registered images are not exclu-

sively related to the result of the registration process.

Besides, artefacts that are due to changes in the scene

or technical properties of the image acquisition device

also appear. These have to be distinguished from ef-

fects of the registration. Consequently, in objective

quality assessment various classes of sources for dif-

ferences have to be considered separately. During our

experiments six basic classes were identified:

General Registration Failure. General failure of

the parameter estimation stage (e.g., due to local

minima of the goal function) inducing structural image

differences and perspective image misalignment.

Model Failure and Parallax. Motion model selec-

tion is usually based on assumptions about camera

motion and scene structure; discrepancies from these

assumptions may cause (local) misalignment. Re-

garding 2D motion recovery, parallax is an exam-

ple of such effects resulting from apparent motion of

static objects due to camera viewpoint changes.

Lens Distortions. Geometric image distortions re-

sulting from non-linear lens properties that hamper a

correct reconstruction of the camera motion.

Vignetting. Non-uniform distribution of energy in

an image causing the corners to appear significantly

darker than central regions; vignetting mainly results

from physical lens and/or CCD properties.

Illumination Changes. Global and local changes

of lighting conditions within a scene or in the param-

eters of the camera that cause corresponding image

parts to appear visually different after registration.

Moving Objects. Within non-static scenes the mo-

tion model for the camera usually fails to compensate

for camera and local object motion at the same time,

leading to local inconsistencies between the images.

Each of the classes directly influences the visual

appearance of registered images. Related image dif-

ferences are pairwise independent of each other. Nev-

ertheless, due to the individual properties of each

class, two main categories of differences can be dis-

tinguished. They have a fundamental impact on the

development of metrics for quality assessment. In the

following we will refer to the two categories as regis-

tration and visual errors, characterised as follows:

A) Registration Errors: This category includes im-

age differences due to erroneous alignments of

corresponding pixels. They result from General

Registration Failures, Model Failure and Paral-

lax, or Lens Distortions and usually correlate with

differences in the local structure of both images.

B) Visual Errors: In this case the pixel-wise geo-

metric image alignment is correct, but intensity

differences still appear. Vignetting, Illumination

Changes and Moving Objects within a sequence

are the main reasons for these effects.

Comparing the individual characteristics of both cat-

egories it turns out that only errors of the first cate-

gory are directly related to the registration process.

Visual errors are only loosely linked to it. They like-

wise affect the overall appearance of registered im-

ages. However, corresponding artefacts cannot be

compensated by improving the registration result, but

require an explicit and special treatment.

The distinction between registration and visual er-

rors gives valuable cues regarding the properties that

a new metric in registration quality assessment re-

quires. In addition, a more detailed analysis of the

various error sources within the two categories sup-

plements important information. In particular, the dif-

ferent members in both categories show significantly

varying impacts on the final visual appearance of im-

ages. Among registration errors, global misalignment

and lens distortions usually affect the whole image,

while effects of parallax or minor local misalignments

are often restricted to local areas of the images, e.g.,

to corner regions. Consequently, more attention has to

be paid to the detection and correction of lens distor-

tions and global misalignment than to local misalign-

ments. Likewise, among visual errors, global illumi-

nation changes and vignetting show a deeper impact

on the visual appearance of images than artefacts

resulting from moving objects. Figure 1 summarises our

resulting taxonomy of differences in image registra-

tion. It considers their impact and the necessity for

compensation with regard to registration quality and

overall visual appearance.

[Figure 1 diagram: error classes arranged by category and by impact on the result (low to high). A) Registration Errors: Total registration failure, Global Misalignment, Lens Distortion, Local Parallax, Small Local Misalignment. B) Visual Errors: Global Illumination, Vignetting, Small Moving Objects.]

Figure 1: A taxonomy of differences in image registration.

The taxonomy will guide the development of our

new metric, discussed in detail in Section 4. Prior

to this we will briefly review image quality mea-

sures used for the general detection of differences

between two images, e.g., regarding artefacts result-

ing from image compression, rendering or moving

objects. As the objective assessment of registration

quality is deeply linked to these tasks they yield a suit-

able starting point for our investigations.

3 REVIEW: IMAGE QUALITY

ASSESSMENT

Within some research fields, e.g., dealing with the

transmission of visual data or photo-realistic render-

ing (Wang et al., 2004), various quality measures

were investigated in the past to quantify differences

between two images that show the same contents.

One of the first and still widely used measures for

quantifying the difference between all pixels $u$ within

the overlapping area $D_{12}$ of two images $I_1$ and $I_2$ is

the well-known Mean Squared Error (MSE):

$$\varepsilon_{MSE}(I_1, I_2) = \frac{1}{|D_{12}|} \sum_{u \in D_{12}} \left( I_1(u) - I_2(u) \right)^2 \qquad (1)$$
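A minimal sketch of Eq. 1 in Python with NumPy may help to illustrate the lack of local sensitivity. The function name `mse_overlap` and the boolean `overlap_mask` representing $D_{12}$ are assumptions made for illustration only; they do not stem from the original work.

```python
import numpy as np

def mse_overlap(img1, img2, overlap_mask):
    """Mean squared error over the overlap region D_12 (Eq. 1)."""
    i1 = np.asarray(img1, dtype=np.float64)
    i2 = np.asarray(img2, dtype=np.float64)
    mask = np.asarray(overlap_mask, dtype=bool)
    if not mask.any():
        raise ValueError("overlap region is empty")
    diff = i1[mask] - i2[mask]
    return float(np.mean(diff ** 2))

# Two synthetic 4x4 comparisons against the same reference image:
ref = np.zeros((4, 4))
spread = np.full((4, 4), 2.0)   # small deviation at every pixel
local = np.zeros((4, 4))
local[0, 0] = 8.0               # one large, strictly local deviation
mask = np.ones((4, 4), dtype=bool)

print(mse_overlap(ref, spread, mask), mse_overlap(ref, local, mask))
```

Both comparisons yield the same MSE although one deviation is spread over the whole image and the other is confined to a single pixel, which is exactly the kind of spatial information a single averaged value cannot convey.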

However, since the MSE has shown a lack of dis-

criminative power for various use cases, alternative

metrics have emerged. Basically, two principal direc-

tions can be distinguished. On the one hand, percep-

tually motivated measures were investigated in order

to emulate the capabilities of the human visual system

(HVS). These measures take into account characteris-

tics of the human eye, like masking effects or spec-

tral sensitivity. In addition, environmental parame-

ters influencing the perception of an image are con-

sidered, e.g., lighting conditions or viewing angles.

Two prominent members of this class are the Sarnoff

Visual Discrimination Model (Lubin, 1995) and the

Visual Difference Predictor (Daly, 1993).

On the other hand, quality measures have been

developed that put higher emphasis on structural im-

age properties. They usually rely on edge data, local

gradient information or image entropy (Xydeas and

Petrović, 2000; Wang and Bovik, 2002).

HVS-based measures basically exploit perceptu-

ally motivated image properties that influence the vi-

sual appearance of an image. On the contrary, the key

idea of structural image quality measures is given by

the observation that the human visual system focuses

strongly on the structural contents of a scene. Con-

sequently, changes within the structural information

of an image are assumed to provide a good approx-

imation to perceived image distortions (Wang et al.,

2004). Since these data also have an important and

stronger impact on the quality of image registration

results than perceptually motivated differences, we

consider structural metrics as a reasonable basis for

our approach.

3.1 Structural Metrics

Most structural image quality measures take a wide

variety of structural image properties into account,

ranging from mutual information (Qu et al., 2002) to

local gradient orientation and magnitude (Xydeas and

Petrović, 2000). The recently proposed Universal Image

Quality Index (UIQI) (Wang and Bovik, 2002)

and its generalisation, a Measure of Structural Sim-

ilarity (SSIM) (Wang et al., 2004), basically exploit

statistical image properties in terms of average inten-

sity values and correlations related to contrast, lumi-

nance and image structure, respectively.

Structural measures are usually calculated pixel-wise

between the two images $I_1$ and $I_2$ to be compared.

Depending on the selected structural features,

a neighbourhood of each pixel is taken into account,

as is done by the UIQI, which is discussed below

as an example. It is defined as follows:

$$\varepsilon'_{UIQI}(I_1, I_2 \mid w) = \frac{\sigma_{12}}{\sigma_1 \sigma_2} \cdot \frac{2 \bar{I}_1 \bar{I}_2}{\bar{I}_1^2 + \bar{I}_2^2} \cdot \frac{2 \sigma_1 \sigma_2}{\sigma_1^2 + \sigma_2^2} \qquad (2)$$

$\varepsilon'_{UIQI}$ is calculated within a sliding window $w$ of a

fixed size which is shifted over the images. $\bar{I}_1$ and $\bar{I}_2$

denote the average intensity values within each $w$,

$\sigma_1$ and $\sigma_2$ the corresponding standard deviations,

and $\sigma_{12}$ the covariance of both images within $w$. The

pixel-wise calculation of the quality measure results

in a map that specifies the local similarity of both

images for each pixel. In the context of image data

transmission and rendering, these maps are usually

used for direct visual evaluation. Alternatively, their

entries are summarised by a single numerical value

computed from averaging:

$$\varepsilon_{UIQI}(I_1, I_2) = \frac{1}{|W|} \sum_{w \in W} \varepsilon'_{UIQI}(I_1, I_2 \mid w) \qquad (3)$$

with W being the set of all sliding windows. Struc-

tural metrics have proven suitable for quantifying im-

age differences, e.g., with regard to compression tech-

niques and different kinds of image noise (Cadik and

Slavik, 2004; Wang et al., 2004). However, regard-

ing the detection and assessment of registration er-

rors, even extensions of the UIQI that put higher em-

phasis on local image properties during error pool-

ing (e.g., (Cvejic et al., 2005)) show a lack of pixel-

wise local sensitivity. This is mainly due to the fact

that compression artefacts and noise are equally dis-

tributed over entire image regions most of the time

and require a more region-based evaluation. On the

contrary, differences in image registration often show

local variations and characteristic spatial patterns that

have to be considered explicitly.
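The window-wise calculation and the subsequent averaging (Eqs. 2 and 3) can be sketched as follows. This is an illustrative NumPy sketch rather than a reference implementation: the window size of 8 pixels, the function names and the handling of windows with a vanishing denominator (they are skipped) are assumptions. The three factors of Eq. 2 are multiplied out, which cancels $\sigma_1 \sigma_2$.

```python
import numpy as np

def uiqi_map(img1, img2, win=8):
    """Local UIQI (Eq. 2) for each win x win window, shifted pixel by pixel."""
    a = np.asarray(img1, dtype=np.float64)
    b = np.asarray(img2, dtype=np.float64)
    h, w = a.shape
    out = np.full((h - win + 1, w - win + 1), np.nan)
    for y in range(h - win + 1):
        for x in range(w - win + 1):
            p = a[y:y + win, x:x + win].ravel()
            q = b[y:y + win, x:x + win].ravel()
            m1, m2 = p.mean(), q.mean()
            v1, v2 = p.var(ddof=1), q.var(ddof=1)
            c12 = np.sum((p - m1) * (q - m2)) / (p.size - 1)
            denom = (v1 + v2) * (m1 ** 2 + m2 ** 2)
            if denom > 0:
                # Product of the three factors of Eq. 2, simplified.
                out[y, x] = 4.0 * c12 * m1 * m2 / denom
            # Assumption: windows with zero denominator stay NaN and are skipped.
    return out

def uiqi(img1, img2, win=8):
    """Global index (Eq. 3): plain average over all valid windows."""
    return float(np.nanmean(uiqi_map(img1, img2, win)))

img = np.arange(1, 257, dtype=np.float64).reshape(16, 16)
print(uiqi(img, img))          # identical images
print(uiqi(img, 257.0 - img))  # inverted intensities
```

The local map retains spatial information, whereas the final average in `uiqi` collapses it into one number. This is the pooling step that the voting scheme proposed in this paper replaces.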

Accordingly, new metrics and error pooling strate-

gies with higher local sensitivity are required for reg-

istration quality assessment. Within our approach we

account for this by carrying out a more detailed local

analysis of quality maps that result from the calcula-

tion of different structural quality criteria. These ap-

pear well-suited for our requirements since high regis-

tration quality usually coincides with the preservation

of structural properties of both images during the reg-

istration process. In the next section we present our

approach for a detailed difference classification and

an objective quality assessment.

4 VOTING-BASED EVALUATION

OF REGISTRATION QUALITY

Our new metric for registration quality assessment fo-

cuses on two principal goals. On the one hand we aim

at providing a high local sensitivity by putting strong

emphasis on pixel-wise calculations, and on the other

hand global error pooling strategies are proposed that

better preserve pixel-wise information compared to

existing structural quality measures. To achieve these

goals two main investigations are carried out. Local

sensitivity is ensured by omitting region-based

calculations during the first phase of an evaluation

(Subsection 4.1). This strategy yields quality maps with

high local sensitivity. Secondly, an improved analy-

sis of the maps is performed. While weighted average

values are usually calculated to get a final quality in-

dex within state-of-the-art techniques, we propose a

voting-based scheme for interpreting the contents of

these maps within a global context (Subsection 4.2).

4.1 Local Quality Criteria

We follow the main ideas of structural image quality

measures (Subsec. 3.1) and base our new registration

quality metric on structural cues (cf. also (Wang et al.,

2004)). Since the overall visual appearance of regis-

tered images is at the same time influenced by illumi-

nation changes, vignetting or moving objects, these

are also taken into account during evaluation.

Given two registered and aligned images $I_1$ and

$I_2$, pixel-wise features are first calculated according

to structural criteria. Three different maps of local

measures result from these calculations:

Absolute Intensity Difference Map D. Differ-

ences within the pixel-wise intensity values of two

images always provide cues for possible misalignment.

However, the differences themselves are only of

minor interest, since they are very sensitive to noise.

Hence, we will interpret them in conjunction with

other measures to obtain meaningful results (cf. also

(Farin and de With, 2005)). The difference map D for

both images at each pixel position (x, y) within the

overlap area of both images is defined as follows:

$$D(x,y) = |I_1(x,y) - I_2(x,y)| \qquad (4)$$

Structural Risk Map R. One fundamental criterion

for assessing the image structure at a certain position

(x,y) within an image is the magnitude of the local

gradient. We use this value to assess the extent to

which single pixels may give reliable cues for regis-

tration quality evaluation. At positions where the gra-

dient magnitude is quite small in both images, struc-

ture is only weakly distinctive and its analysis may

lead to wrong conclusions. Hence, these positions are

excluded from structure analysis. With regard to vi-

sual errors, however, these pixels give valuable hints,

e.g., for identifying vignetting effects, as will be ex-

plained in more detail in the next subsection. The bi-

nary risk map R for marking non-relevant pixels in

structure analysis is calculated as follows:

$$R(x,y) = \begin{cases} 1, & \text{if } G_1(x,y) \le \theta_G \wedge G_2(x,y) \le \theta_G \\ 0, & \text{otherwise} \end{cases} \qquad (5)$$

with $G_1$ and $G_2$ being the local gradient magnitudes

in both images. $\theta_G$ is a suitable threshold. Morphological

dilation with a 3 × 3 square mask is applied

to the risk map to also exclude pixels close to a ho-

mogeneous neighbourhood.
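The two maps defined in Eqs. 4 and 5 can be sketched in a few lines of NumPy. Note the assumptions: the text does not state which operator yields the gradient magnitudes $G_1$ and $G_2$, so an unnormalised Sobel operator with replicated image borders is used here, and all function names are hypothetical.

```python
import numpy as np

def sobel_gradients(img):
    """Sobel responses along x and y, image borders replicated (assumption)."""
    p = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[1:-1, :-2] - p[2:, :-2])
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[:-2, 1:-1] - p[:-2, 2:])
    return gx, gy

def difference_map(img1, img2):
    """Absolute intensity difference D (Eq. 4)."""
    return np.abs(np.asarray(img1, dtype=np.float64)
                  - np.asarray(img2, dtype=np.float64))

def dilate3x3(mask):
    """Binary dilation with a 3 x 3 square structuring element."""
    p = np.pad(mask, 1, mode="constant", constant_values=False)
    out = np.zeros_like(mask)
    for dy in range(3):
        for dx in range(3):
            out |= p[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out

def risk_map(img1, img2, theta_g=5.0):
    """Binary risk map R (Eq. 5), dilated; True marks pixels excluded from structure analysis."""
    g1 = np.hypot(*sobel_gradients(img1))
    g2 = np.hypot(*sobel_gradients(img2))
    flat = (g1 <= theta_g) & (g2 <= theta_g)
    return dilate3x3(flat)

# Left half homogeneous, right half a steep intensity ramp.
cols = np.arange(16)
img = np.tile(np.where(cols < 8, 0.0, 20.0 * (cols - 7)), (16, 1))
R = risk_map(img, img)
D = difference_map(img, img + 3.0)
print(R[:, :8].all(), R[:, 8:].any(), D.max())
```

In this synthetic example the homogeneous half (plus one pixel of margin added by the dilation) is flagged in `R`, while the textured half remains available for structure analysis.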

Edge Preservation Map E. The gradient magni-

tude is only one component of the structural infor-

mation given by local derivatives. Also the orienta-

tion of the gradient yields valuable cues. In (Xydeas

and Petrović, 2000) a metric for image fusion per-

formance was proposed that exploits gradient magni-

tude and orientation for a perceptually motivated as-

sessment of how well edge information is preserved

during image fusion. In our experiments, especially

the analysis of gradient orientation has turned out to

provide important information for quality assessment.

Thus, we use the edge preservation maps $E$ proposed

in (Xydeas and Petrović, 2000), but solely exploit the

gradient orientation of two registered images¹:

$$E(x,y) = \frac{\Gamma_\alpha}{1 + e^{k_\alpha (A(x,y) - \sigma_\alpha)}}$$

with $A(x,y) = 1 - \frac{|\alpha_1(x,y) - \alpha_2(x,y)|}{\pi/2}$ being defined as the

relative local orientation, $\alpha_k = \tan^{-1} \frac{s_k^y(x,y)}{s_k^x(x,y)}$, and

$s_k^y(x,y)$ and $s_k^x(x,y)$ being the outputs of convolving

image $I_k$ at position $(x,y)$ with horizontal and vertical

Sobel templates. The constants in the formulas

have been chosen according to the default values

suggested by Xydeas et al. with $\Gamma_\alpha = 0.9879$, $k_\alpha = -22$

and $\sigma_\alpha = 0.8$, leading to values of $E$ within the range

of [0,1] where 1 indicates absolute similarity.

¹ In the original paper, in addition to both input images

the final fusion result is also taken into account.
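As an illustration, the orientation-only edge preservation map could be computed as sketched below. The constants are those quoted above; the border handling of the Sobel filter and the choice of $\alpha = 0$ at pixels without any gradient are assumptions of this sketch, and the function names are hypothetical.

```python
import numpy as np

GAMMA_ALPHA, K_ALPHA, SIGMA_ALPHA = 0.9879, -22.0, 0.8  # constants quoted in the text

def sobel_gradients(img):
    """Sobel responses s^x and s^y, image borders replicated (assumption)."""
    p = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    sx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[1:-1, :-2] - p[2:, :-2])
    sy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[:-2, 1:-1] - p[:-2, 2:])
    return sx, sy

def orientation(img):
    """alpha = arctan(s^y / s^x), in [-pi/2, pi/2]."""
    sx, sy = sobel_gradients(img)
    with np.errstate(divide="ignore", invalid="ignore"):
        alpha = np.arctan(sy / sx)        # +-pi/2 where s^x is zero
    return np.nan_to_num(alpha, nan=0.0)  # assumption: 0 where both responses vanish

def edge_preservation_map(img1, img2):
    """Sigmoid of the relative local orientation A(x, y)."""
    a = 1.0 - np.abs(orientation(img1) - orientation(img2)) / (np.pi / 2.0)
    return GAMMA_ALPHA / (1.0 + np.exp(K_ALPHA * (a - SIGMA_ALPHA)))

ramp = np.tile(10.0 * np.arange(16), (16, 1))  # intensity increases along x
E_same = edge_preservation_map(ramp, ramp)
E_rot = edge_preservation_map(ramp, ramp.T)    # gradient direction rotated by 90 degrees
print(E_same.max(), E_rot.max())
```

With these constants, identical orientations give $E \approx 0.976$, slightly below 1, while a 90 degree mismatch drives $E$ towards 0 and therefore well below any threshold in the range used later.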

4.2 Global Assessment

Given the three different maps of local criteria, the

overall goal is to automatically assess the global reg-

istration quality, and to identify other meaningful im-

age differences. As weighted averaging techniques

have not proven to be sensitive enough for our pur-

poses, we propose a block-based voting strategy in-

stead. For this the images are divided regularly into

8 × 8 pixel-sized blocks and for each block the oc-

currence of registration and visual errors is pixel-wise

checked. Given the results of these checks, statistics

are computed for each block with the result being a

final vote regarding existence and relevance of regis-

tration and/or visual errors in the underlying image

section. These voting strategies are outlined below.

Registration Error Analysis. According to Sec-

tion 2, registration errors are due to a misalignment of

corresponding pixels between both images and cor-

relate with significant structural differences. We as-

sume a single pixel position (x,y) within registered

images to show such a difference if its edge preservation

value $E(x,y)$ is smaller than a given threshold $\theta_E$.

It is chosen so as to indicate a significant mismatch in

the local gradient orientation between both images.

Subsequently, a whole block votes for registration errors

in the global assessment step if more than 10% of

its pixels fulfil the threshold criterion.

Visual Error Analysis. In contrast to registration

errors, visual errors are mainly related to illumination

changes and vignetting (given a correct local geomet-

ric alignment of the images). We consider a pixel

to show this type of error if the intensity difference

between both images exceeds a certain threshold $\theta_D$,

provided that the pixel is allowed to vote for visual er-

rors according to the risk map R (Eq. 5), i.e., lying in

a homogeneous region of the image. Subsequently, a

whole block votes for visual errors if more than 25%

of the pixels agree.
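The two block-wise votes can be sketched as follows. The sketch assumes that the percentages refer to all pixels of a block and that pixels flagged in the risk map are excluded from the registration vote; the text leaves both details open. The function name and the default thresholds (taken from the values reported in Section 5) are illustrative.

```python
import numpy as np

def block_votes(E, D, R, theta_e=0.85, theta_d=2.0, block=8,
                reg_fraction=0.10, vis_fraction=0.25):
    """Return two boolean arrays with one vote per block (registration, visual)."""
    h, w = E.shape
    by, bx = h // block, w // block
    reg = np.zeros((by, bx), dtype=bool)
    vis = np.zeros((by, bx), dtype=bool)
    n = block * block
    for j in range(by):
        for i in range(bx):
            sl = (slice(j * block, (j + 1) * block),
                  slice(i * block, (i + 1) * block))
            # Registration error: poor edge preservation at structured pixels.
            reg_pixels = ~R[sl] & (E[sl] < theta_e)
            # Visual error: intensity difference at homogeneous pixels.
            vis_pixels = R[sl] & (D[sl] > theta_d)
            reg[j, i] = reg_pixels.sum() > reg_fraction * n
            vis[j, i] = vis_pixels.sum() > vis_fraction * n
    return reg, vis

# Synthetic 16x16 maps: one block with orientation mismatch, one with a brightness offset.
E = np.ones((16, 16))
E[:8, :8] = 0.5
D = np.zeros((16, 16))
D[8:, 8:] = 5.0
R = np.zeros((16, 16), dtype=bool)
R[8:, 8:] = True
reg, vis = block_votes(E, D, R)
print(reg)
print(vis)
```

Each block thus carries two independent binary decisions, which form the input of the final voting stage.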

Up to now, for each of the blocks within an im-

age, two binary decisions have been taken with re-

gard to the existence of registration and visual errors

within the corresponding image section. Given an

image where each block is represented by a single

pixel, and marking the pixels according to the votes

of the blocks, a quality map is obtained. Visual in-

spection clearly indicates regions where image differ-

ences belonging to one of the two categories appear

(cf. Fig. 4). However, since we aim at a fully auto-

matic quality assessment that also differentiates be-

tween the various sources of errors in both categories,

the maps have to be further analysed and interpreted.

As widely used (weighted) averaging techniques have

shown a lack of local sensitivity we favour a voting

strategy for this. Each block participates in two in-

dependent voting schemes that subsequently form the

foundation for a rule-based decision process to iden-

tify the various sources of image differences accord-

ing to our taxonomy, as explained below.

Final Voting & Decision Rules. The final voting

and global quality assessment is done by first count-

ing the number of blocks voting for errors of one or

both categories. During the voting the spatial posi-

tion of the blocks within the image is taken into ac-

count since some difference classes are mainly char-

acterised by their spatial distributions (Subsec. 2.1).

The blocks are separated into border and central

blocks, in which the border of an image is defined to

cover approximately the outer third of the image area.

In addition, the overall structural information within

each block B is considered and characterised by the

entropy H(B). H is calculated based on the intensity

values v of all pixels (x,y) within a single block:

$$H(B) = \sum_{v=0}^{255} p_v \log \frac{1}{p_v}, \qquad p_v = \frac{1}{|B|} \sum_{(x,y) \in B} \delta_{I(x,y),v}$$

A block is considered to contain sufficient structure

to vote for registration errors if its entropy, normalised

to a range of [0,1], exceeds a threshold $\theta_H$.

As a result of these voting processes, we get the ra-

tios of how many central and border blocks are voting

for visual and registration errors. They yield the base

for identifying the different classes of errors in both

categories, to which the following rules are applied:

• Vignetting is present between the images if the ra-

tio of border blocks voting for visual errors signif-

icantly exceeds the ratio of central blocks.

• Global Illumination Changes occur if the ratio of

border and central blocks voting for visual errors

is more or less the same and exceeds a certain per-

centage of all blocks voting.

• Radial Geometric Distortions force a significantly

higher ratio of border blocks to vote for registra-

tion errors than that of central blocks.

• Global Misalignment results in more or less

equally high ratios of border and central blocks

voting for registration errors. Contrarily, if there

are only very few blocks in total voting for this

kind of error, the overall quality seems to be high.

In addition to these rules, any block containing a large

number of pixels whose intensity difference is

significantly larger than $\theta_D$ (compared to

neighbouring blocks) may give cues for localising errors

due to Moving Objects, Local Misalignment or

Parallax caused by unfulfilled model assumptions.
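The border/central split and the comparison of voting ratios might be sketched as follows. The rules above are stated qualitatively ("significantly exceeds", "more or less the same"), so the numeric values `margin` and `min_ratio` are purely hypothetical placeholders, as are all function names. The border frame is derived from the assumption that the central rectangle is scaled by $\sqrt{2/3}$ along each axis, which leaves roughly one third of the area for the border.

```python
import numpy as np

def border_mask(blocks_y, blocks_x, border_area=1.0 / 3.0):
    """True for blocks in an outer frame covering roughly `border_area` of the image."""
    scale = np.sqrt(1.0 - border_area)  # side scale of the central rectangle
    my = int(round(blocks_y * (1.0 - scale) / 2.0))
    mx = int(round(blocks_x * (1.0 - scale) / 2.0))
    mask = np.ones((blocks_y, blocks_x), dtype=bool)
    mask[my:blocks_y - my, mx:blocks_x - mx] = False
    return mask

def vote_ratios(votes, eligible, border):
    """Fractions of eligible border and central blocks that vote for an error."""
    def ratio(region):
        n = np.count_nonzero(eligible & region)
        return np.count_nonzero(votes & eligible & region) / n if n else 0.0
    return ratio(border), ratio(~border)

def interpret(border_ratio, central_ratio, margin=0.25, min_ratio=0.3):
    """Hypothetical numeric reading of the qualitative rules."""
    diff = border_ratio - central_ratio
    if diff > margin:
        return "border-dominated"   # vignetting or radial distortion
    if abs(diff) <= margin and min(border_ratio, central_ratio) > min_ratio:
        return "global"             # illumination change or global misalignment
    return "few or inconclusive"

# 640 x 480 pixels with 8 x 8 blocks give a grid of 60 x 80 blocks.
border = border_mask(60, 80)
eligible = np.ones_like(border)
votes = border.copy()               # synthetic case: only border blocks vote
b_ratio, c_ratio = vote_ratios(votes, eligible, border)
print(border.mean(), interpret(b_ratio, c_ratio))
```

Applied to the visual error votes, a "border-dominated" outcome corresponds to the vignetting rule and a "global" outcome to global illumination changes; applied to the registration error votes, the same two outcomes correspond to radial geometric distortions and global misalignment, respectively.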

5 RESULTS

Our approach has been tested on various pairs of reg-

istered images. In the following we discuss two repre-

sentative examples that outline its potential. We focus

on effects of lens distortion known to have a signifi-

cant impact on registration results (Hsu and Sawhney,

1998). Since correcting distortions as a precaution is

quite unstable if no assumptions about their impact

can be made, detecting them during registration helps

to improve the robustness and reliability of the results.

The reference images of both image pairs dis-

cussed here are depicted in Figure 2. The image on

the left shows a stony yard with a very shallow 3D

relief; the right one depicts a map placed on a flat

table. Compared to the distance of the camera moving

in parallel to the ground, both scenes may be assumed

to be planar. In both examples homographies were

applied to describe the camera motion which was

recovered applying (Mann and Picard, 1996), given

about 90% image overlap. The results suffered only

from very small misalignments, rendering it difficult

to present them adequately here. Thus, we focus on

the outcomes of our new metric.

Figure 2: The reference images (640 × 480 pixels) of the

two pairs of registered images discussed here as examples.

For all evaluations the parameters within our approach

were selected as follows: $\theta_G = 5$, $\theta_E = 0.85$,

$\theta_D = 2$ and $\theta_H = 0.5$. The resulting registration error

map for the first image pair is shown in Figure 3 on

the left. Central blocks voting for errors are marked

gray, border blocks white, and non-voting blocks as

well as blocks without errors are shown in black. It

is clearly visible that the blocks voting for registra-

tion errors cover the whole image area. Specifically,

62% of the voting border blocks and 58% of the cen-

tral blocks indicate registration errors. According to

the rules defined in Section 4.2 for interpreting the

results, this clearly underlines severe problems within

the registration. In fact, the result is notably improved

after correcting both images for radial lens distortions

prior to the registration (Fig. 3, right). We utilised the Camera Calibration Toolbox for Matlab (http://www.vision.caltech.edu/bouguetj/calib_doc/), where the resulting distortion coefficients are indeed significant: $kc_1 = -0.19$, $kc_2 = 0.21$ and $kc_5 = 0.0001$. After correction only 11% of the border blocks and 6% of the central blocks still voted for registration errors. It

should be noted that, although lens distortions turned out to be the main reason for the initial registration failure, the errors were not exclusively concentrated along the image borders. Moreover, a radially symmetric pattern of blocks with errors was observed (Fig. 3).

Figure 3: Maps of blocks voting for registration errors in the

first example, before (left) and after (right) compensation

for lens distortions. Central blocks are marked gray, border

blocks white, and non-voting and error-free blocks black.

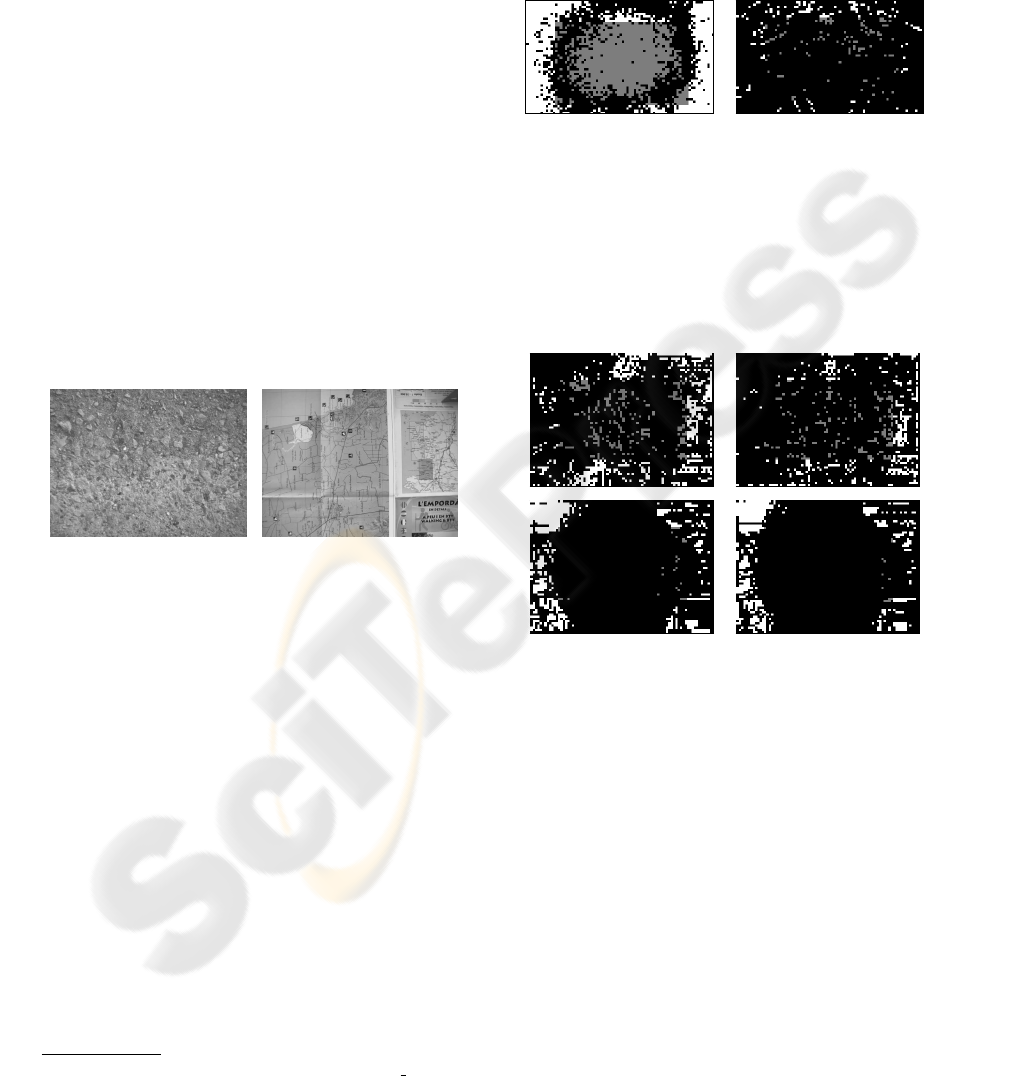
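To give a feeling for the size of the radial coefficients quoted above, the following sketch applies and inverts a purely radial distortion. It assumes the polynomial model of the Camera Calibration Toolbox, in which a normalised image point is scaled by $1 + kc_1 r^2 + kc_2 r^4 + kc_5 r^6$; the tangential terms ($kc_3$, $kc_4$) are omitted. The function names and the fixed-point inversion are illustrative choices and not necessarily the procedure used for the experiments. Normalised coordinates presuppose known camera intrinsics, which are not reported here.

```python
import numpy as np

# Radial coefficients as reported in the text for the first example.
KC1, KC2, KC5 = -0.19, 0.21, 0.0001

def radial_factor(xn):
    """Radial scaling 1 + kc1*r^2 + kc2*r^4 + kc5*r^6 for normalised points."""
    r2 = np.sum(xn ** 2, axis=-1, keepdims=True)
    return 1.0 + KC1 * r2 + KC2 * r2 ** 2 + KC5 * r2 ** 3

def distort(xn):
    """Apply the radial distortion to normalised (N, 2) coordinates."""
    xn = np.asarray(xn, dtype=float)
    return xn * radial_factor(xn)

def undistort(xd, iterations=20):
    """Invert the distortion by fixed-point iteration (assumed approach)."""
    xd = np.asarray(xd, dtype=float)
    xn = xd.copy()  # start from the distorted position
    for _ in range(iterations):
        xn = xd / radial_factor(xn)
    return xn

# Hypothetical normalised point towards the image corner.
point = np.array([[0.4, 0.3]])
shifted = distort(point)
print("distorted:", shifted)
print("recovered:", undistort(shifted))
```

Because $kc_1$ is negative and dominates for small radii, points are pulled towards the image centre, and the displacement grows with the distance from the centre.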

The results of the quality assessment for the sec-

ond pair of images are depicted in Fig. 4. The voting

results for registration (top) and visual (bottom) er-

rors, given the original images, are shown on the left,

and the results after distortion correction on the right.

Figure 4: Result maps for the second example, again before

(left) and after (right) lens distortion compensation. The

maps for registration errors are depicted on top while the

results for visual errors are given at the bottom.

Specifically, before lens distortion compensation

50% of the border and 32% of the central blocks voted

for registration errors, while only 5% of the central

blocks voted for visual errors compared to 70% along

the borders. The high ratio of blocks voting for registration errors indicates an overall low registration quality. In this case the higher percentage in the outer image parts (50% versus 32%) may indicate problems due to lens distortion. Indeed, after correction, the ratios in the outer and central parts decreased to 24% and 21%, respectively. However, since these ratios are still significant compared to the final ones of the first example (11% and 6%), additional factors that cause problems in registration and lead to a low quality are probable, e.g., the more pronounced 3D relief of the scene.
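The border and central ratios reported for both examples can be computed from a block-wise voting map along the following lines. This is a minimal sketch under stated assumptions: border blocks are taken to be the outermost ring of the block grid (controlled by the hypothetical parameter `border_width`), and the boolean arrays `votes_error` and `is_voting` are assumed inputs; the actual block classification of our approach is defined in the preceding sections.

```python
import numpy as np

def error_ratios(votes_error, is_voting, border_width=1):
    """Return (border_ratio, central_ratio) of voting blocks that vote for errors.

    votes_error, is_voting: boolean arrays over the block grid.
    border_width: ring width in blocks, must be >= 1 (assumption of this sketch).
    """
    votes_error = np.asarray(votes_error, dtype=bool)
    is_voting = np.asarray(is_voting, dtype=bool)

    # Mark the outer ring of blocks as border, everything else as central.
    border = np.ones(votes_error.shape, dtype=bool)
    border[border_width:-border_width, border_width:-border_width] = False

    def ratio(region):
        voters = is_voting & region
        n = voters.sum()
        # Only blocks that actually vote enter the ratio.
        return float((votes_error & voters).sum() / n) if n else float("nan")

    return ratio(border), ratio(~border)

# Toy 4 x 4 block grid: all blocks vote, errors only in the top row.
is_voting = np.ones((4, 4), dtype=bool)
votes_error = np.zeros((4, 4), dtype=bool)
votes_error[0, :] = True
border_ratio, central_ratio = error_ratios(votes_error, is_voting)
print(f"border: {border_ratio:.0%}, central: {central_ratio:.0%}")
```

A clearly larger border ratio than central ratio, as in the second example before correction, is the kind of cue that hints at lens distortion.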

With regard to visual errors there is a significant influence of vignetting, which is also clearly visible in the original images (Fig. 2, right). Note that the image differences resulting from these effects remain almost unchanged when compensating for lens distortions, as indicated by the final ratios of 65% (border) and 4% (central) after the second registration run. This is as expected since moderate vignetting usually does not affect the registration process, but merely the final visual appearance of the images. Consequently, separate correction steps are required, which were not carried out in this case.

6 CONCLUSION

An objective assessment of 2D image registration

quality is a challenging task. As common measures for image quality have proven unsuitable for the special requirements of errors in image alignment, a new metric for this purpose is proposed. Promising results

are obtained by exploiting local structural proper-

ties of registered images and preserving this informa-

tion in error pooling by applying voting-based strate-

gies. The indicated registration quality correlates well

with the visual appearance of the images and various

classes of differences can be distinguished. This capa-

bility is of significant importance with regard to sub-

sequent processing steps that aim at an automatic im-

provement of the results, since different error sources

require individual compensation strategies.

While the obtained results outline the high potential of this approach, perspectives for further refinements were also discovered. Sometimes the distribution of blocks voting for registration errors does not clearly indicate the underlying error sources. We plan to tackle this problem by refining the spatial classification of the blocks and by taking global patterns into account. In addition, with regard to local differences resulting from moving objects or parallax, detailed examinations of extraordinarily high intensity differences will be carried out. Finally, so far the approach relies

on various manually adjusted thresholds. Presumably

these can be chosen appropriately according to actual

image contents, leading to a fully automatic and flex-

ible approach for registration quality assessment.

ACKNOWLEDGEMENTS

This work was supported by a fellowship within the

Postdoc-Programme of the German Academic Ex-

change Service (DAAD), and has also been partially

funded through the MOMARNET EU Research and

Training Network project (MRTN-CT-2004-505026),

and by the Spanish Ministry of Education and Science

under grant CTM2004-04205.

REFERENCES

Cadik, M. and Slavik, P. (2004). Evaluation of two princi-

pal approaches to objective image quality assessment.

In Proceedings of the 8th International Conference on

Information Visualisation (IV), pages 513–518.

Cvejic, N., Loza, A., Bull, D., and Canagarajah, N. (2005).

A novel metric for performance evaluation of im-

age fusion algorithms. Transactions on Engineering,

Computing and Technology, 7:80–85.

Daly, S. (1993). The visible difference predictor: An al-

gorithm for the assessment of image fidelity. Digital

Images and Human Vision, pages 179–206.

Farin, D. and de With, P. (2005). Misregistration errors in

change detection algorithms and how to avoid them.

In Proc. of IEEE International Conference on Image

Processing, pages II:438–441.

Hartley, R. and Zisserman, A. (2004). Multiple View Geom-

etry in Computer Vision. Cambridge University Press.

Hsu, S. C. and Sawhney, H. S. (1998). Influence of global

constraints and lens distortion on pose and appear-

ance recovery from a purely rotating camera. In 4th

IEEE Workshop on Applications of Computer Vision

(WACV), page 154 ff., Princeton, NJ, USA.

Kim, H.-S., Kim, H.-C., Lee, W.-K., and Kim, C.-H. (2000).

Stitching reliability for estimating camera focal length

in panoramic image mosaicing. In Int. Conf. on Pat-

tern Recognition, pages 1:596–599, Barcelona, Spain.

Lubin, J. (1995). A visual discrimination model for imag-

ing system design and evaluation. In Peli, E., editor,

Visual Models for Target Detection and Recognition,

pages 245–283. World Scientific, Singapore.

Mann, S. and Picard, R. (1996). Video orbits of the pro-

jective group: A new perspective on image mosaicing.

Technical Report 338, MIT Media Laboratory Percep-

tual Computing Section, Boston, USA.

Qu, G., Zhang, D., and Yan, P. (2002). Information measure

for performance of image fusion. Electronics Letters,

38(7):313–315.

Wang, Z., Bovik, A., Sheikh, H., and Simoncelli, E. (2004).

Image quality assessment: From error visibility to

structural similarity. IEEE Trans. on Image Process-

ing, 13(4):600–612.

Wang, Z. and Bovik, A. C. (2002). A universal image qual-

ity index. IEEE Signal Proc. Letters, 9(3):81–84.

Weng, J., Huang, S., and Ahuja, N. (1989). Motion and

structure from two perspective views: Algorithms, er-

ror analysis, and error estimation. IEEE Trans. on

Patt. Anal. and Mach. Intel., 11(5):451–476.

Xydeas, C. and Petrović, V. (2000). Objective image fusion performance measure. Electr. Letters, 36(4):308–309.

Zitová, B. and Flusser, J. (2003). Image registration methods: a survey. Image and Vision Computing, 21(11):977–1000.