SELF-ORGANIZING AND SELF-REPAIRING MASS MEMORIES FOR AUTOSOPHY MULTIMEDIA ARCHIVING SYSTEMS

Replacing the Data Processing Computer with Self-Learning Machines based on the Autosophy Information Theory

Klaus Holtz, Eric Holtz, Diana Kalienky

Autosophy, 602 Mason #305, San Francisco, California 94108, USA

Keywords: Autosophy, Information Theory, Multimedia Archiving, Self-Assembling Data Networks, Self-Repairing Memories, Content Addressable Memories, Artificial Intelligence.

Abstract: The programmed data processing computer may soon be eclipsed by a next generation of brain-like learning machines based on the "Autosophy" information theory. This will require a paradigm shift in memory technology, from random addressable memories to self-organizing, failure-proof memories. The computer is essentially a blind calculating machine that cannot find "meaning" as our own brains obviously can; all that can be achieved are mere programmed simulations. The problem can be traced to an outdated (Shannon) information theory, which treats all data as "quantities." The new Autosophy information theory, in contrast, treats all data as "addresses." The original research explains the functioning of self-assembling natural structures, such as chemical crystals or living trees. The same principles can also produce self-assembling data structures that grow like data crystals or data trees in electronic memories without computing or programming. The resulting brain-like systems would require failure-proof memories of virtually unlimited capacity. The memories should be self-checking, self-repairing, self-healing, clonable, both random and content addressable, with low power consumption and very small size for mobile robots. Replacing the programmed data processing "computer" with the brain-like "autosopher" promises a true paradigm shift in technology, resulting in system architectures with true "learning" and eventually true Artificial Intelligence.

1 INTRODUCTION

The programmed data processing computer is now the only known computing paradigm. It is essentially a blind calculating machine that cannot find "meaning" as our brains can. All data is regarded as "quantities" according to the Shannon information theory (Shannon, 1948). An alternative computing paradigm is now evolving based on the "Autosophy" information theory (Holtz-Langheld, 1978), in which all data is regarded as "addresses". The new brain-like machines do not require programming or human supervision of their internal functioning. The systems are essentially error-proof, with self-organizing, self-healing, and even self-replicating capabilities, and will not produce faulty information even after severe physical damage.

Autosophy theories and applications are described in many scientific publications available on the Internet. (Use the keyword "autosophy" in a search engine or go to www.autosophy.com.)

A self-learning text database was built in 1988 to verify the theoretical predictions. Autosophy Internet television was demonstrated in 2000 (Holtz, 2001). Primitive applications include lossless data compression in the V.42bis modem standard and lossless still image compression in the GIF and TIFF formats.

The new Autosophy technology would require a new memory paradigm, which is the main subject of this paper. The Random Addressable Memories used in computers are not suitable. The new mass memory systems would provide virtually unlimited, cheap, and non-volatile storage capacity, combined with very low power consumption and small size for use in mobile robots (Holtz, 2003).

Both the theoretical knowledge and the required memories are now becoming available for building brain-like systems (Holtz, 2004). This may evolve into self-learning robots and eventually into true Artificial Intelligence. It may also revolutionize all multimedia communication (Holtz, 2002).


Holtz K. and Holtz E. (2005). SELF-ORGANIZING AND SELF-REPAIRING MASS MEMORIES FOR AUTOSOPHY MULTIMEDIA ARCHIVING SYSTEMS - Replacing the Data Processing Computer with Self-Learning Machines based on the Autosophy Information Theory. In Proceedings of the Second International Conference on e-Business and Telecommunication Networks, pages 177-182. DOI: 10.5220/0001412201770182. Copyright © SciTePress

2 COMPUTER VS. AUTOSOPHER

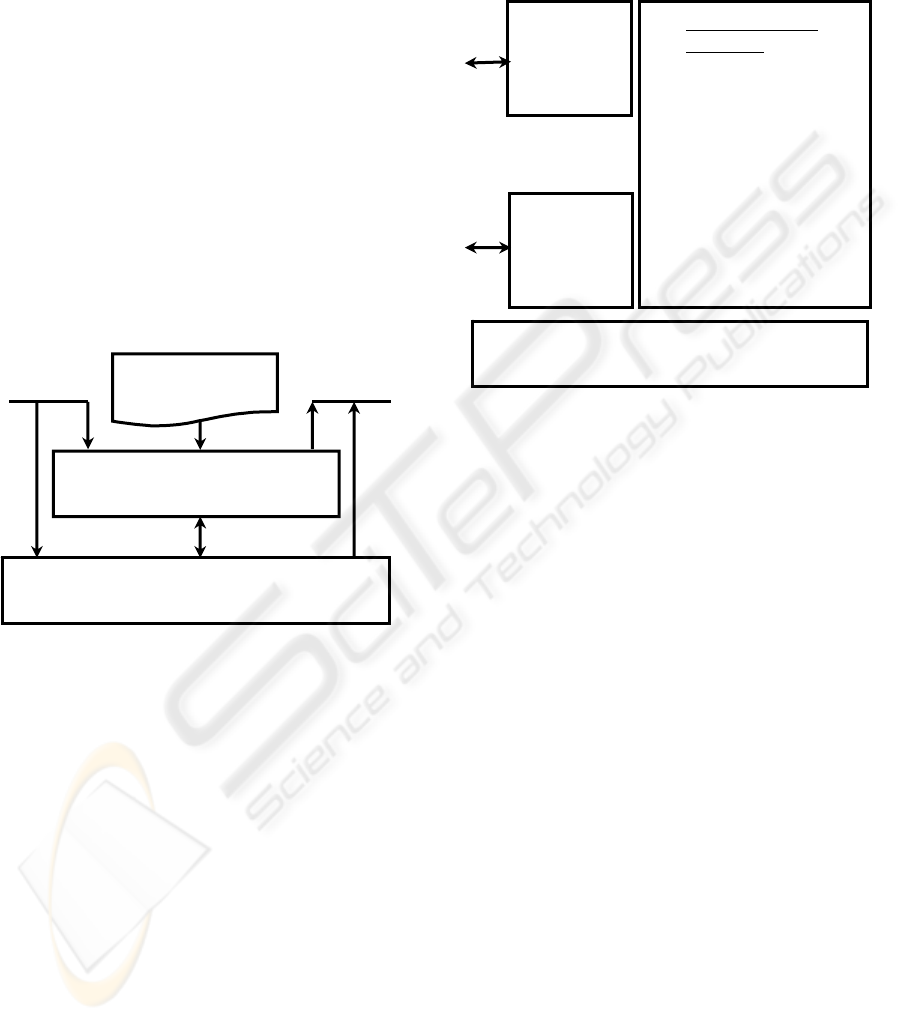

The programmed data processing computer in Fig. 1 is essentially a blind adding or calculating machine that cannot find "meaning" as our own brains obviously can. All input and output data items (ASCII characters or pixels) are regarded as quantities according to the Shannon information theory. The computer's purpose is to combine raw input data, using arithmetic according to a stored program, to obtain useful output data. The computer cannot learn: no matter how much data is processed or stored, it will not become more intelligent. All intelligence is contained in the programming, prepared by human programmers, where every operation must be defined by a complex series of instructions. No matter the speed of the computation or the complexity of the software, Artificial Intelligence in a computer will always remain a mere simulation.

Figure 1: Programmed data processing computer

Computers store data in a Random Addressable Memory (RAM). The address and the data content are both provided by the Central Processing Unit (CPU), according to the programmed instructions. Data may enter or exit the computer either via the CPU or directly via Direct Memory Access (DMA). Both the program and the input/output data may be stored in the same memory device, which allows viruses arriving over the communication channels to infest the computer. A single error in a computer memory may lead to a total computer malfunction.

The Autosophy theory evolved from research into self-assembling structures, such as chemical crystals, living trees, or human societies. These structures may start from a tiny seed containing information, such as the information stored in a DNA helix. The seed selects specific materials from a random environment to build itself into a very complex structure without human design or supervision. In 1974 Klaus Holtz discovered that the same natural laws and principles can grow self-assembling data structures in memories. These imaginary data structures grow like data crystals or data trees in electronic memories, without programming or outside supervision. The Autosophy information theory was first disclosed in 1978 (Holtz-Langheld, 1978).

Figure 2: Self-learning brain-like Autosopher

The operations in the "autosopher" shown in Fig. 2 are based on self-learning networks. The device acts like a "black box" that absorbs all multimedia information without programming. The sensor input/output data are regarded as "addresses," which define or create their own storage locations in the memory. Computer programming is replaced by "education," similar to the education of children. Simple input networks, such as the serial and the parallel networks, encode the raw sensor data for further processing in higher-level learning networks. All learning networks may share the same mass memory device, which must therefore be virtually error-proof for applications in self-learning intelligent robots.

3 SELF-LEARNING NETWORKS

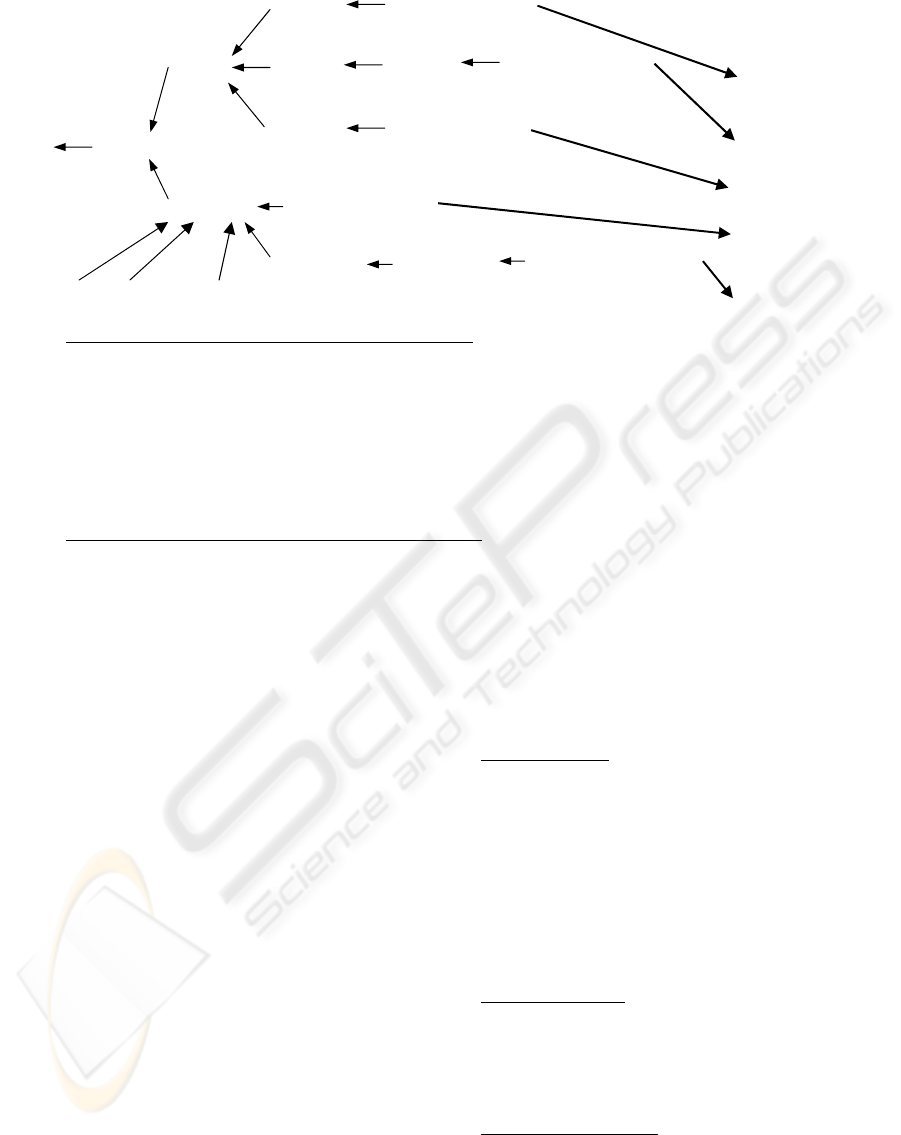

The serial network, shown in Figure 3, is an example of true mathematical "learning" according to the Autosophy information theory. It can be imagined as the growing of data trees or data crystals, where data input items define or create their own storage locations in the memory. A unit of knowledge (engram) is created by new information (GATE) related to already established knowledge (POINTER), which may then create a new engram (ADDRESS) as an extension of what is already known (Patent 4,366,551).

[Figure 1 labels: Input Quantities; Programming Instructions; Central Processing Unit (CPU): Arithmetic, Control, Register; Random Addressable Memory (RAM): Conventional Data Storage Device; Output Quantities.]

[Figure 2 labels: Sensor Addresses; Random / Content Addressable CAROM; Self-Repairing Failure-Proof DECAM; Serial Networks (Text, Sound); Parallel Networks (Images, Vision); Higher Learning Networks: Associative (Connection), Interrelational (Languages), Logical (Robotics), Primary (Reasoning), Hypertrees (Brain-like).]

ICETE 2005 - MULTIMEDIA SIGNAL PROCESSING


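Figure 3 library (ADDRESS, POINTER, GATE):

| ADDRESS | POINTER | GATE |
|---|---|---|
| 0 | Seed | |
| 1 | 0 | R |
| 2 | 1 | O |
| 3 | 2 | S |
| 4 | 3 | E |
| 5 | 2 | B |
| 6 | 5 | O |
| 7 | 6 | T |
| 8 | 2 | O |
| 9 | 8 | T |
| 10 | 1 | E |
| 11 | 10 | D |
| 12 | 10 | A |
| 13 | 12 | D |
| 14 | 13 | Y |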

The learning process can be imagined as the growing of data trees or data crystals. A stored tree network is made of separate nodes, where each ADDRESS represents an "engram" of knowledge. The library ADDRESS is the mathematical equivalent of a point in omni dimensional hyperspace. The content of each library ADDRESS is unique and can be stored only once: one cannot learn what one already knows. Because each input data string is learned only once, the storage requirements saturate: the more information a database already contains, the less additional storage space is required to store additional information.
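The saturation effect can be illustrated with a minimal Python sketch. It assumes a software library modelled as a dict that maps a (POINTER, GATE) matrix to the ADDRESS where it is stored; `learn` is a hypothetical helper, not the hardware implementation. Words that are already known create no new nodes:

```python
# Sketch only: the library is modelled as a dict mapping a (POINTER, GATE)
# matrix to its ADDRESS (an assumption, not the hardware design).
SEED = 0


def learn(library, word):
    """Learn one word and return how many new nodes (engrams) it created."""
    pointer = SEED
    created = 0
    for gate in word:
        matrix = (pointer, gate)
        if matrix not in library:
            library[matrix] = len(library) + 1  # next empty ADDRESS
            created += 1
        pointer = library[matrix]
    return created


library = {}
for batch in (["ROSE", "ROBOT"], ["ROOT", "RED"], ["ROSE", "ROBOT", "ROOT", "RED"]):
    new_nodes = sum(learn(library, word) for word in batch)
    print(batch, "->", new_nodes, "new nodes")
# ['ROSE', 'ROBOT'] -> 7 new nodes
# ['ROOT', 'RED'] -> 4 new nodes
# ['ROSE', 'ROBOT', 'ROOT', 'RED'] -> 0 new nodes
```

ROBOT needs only three new nodes because its prefix RO is already stored, and repeating known words costs no storage at all.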

There are seven known classes of self-learning "Omni Dimensional Networks," each providing a different learning mode, possibly including learning modes that are not available in our own brains. Some of these learning networks are already implemented in commercial applications, while others have been simulated or are known only in theory. New applications, such as live Internet video or advanced lossless still image compression, are now being added at an accelerating rate.


Serial networks store serial data sequences such as text, sound, or serially scanned images. The algorithm was invented in 1974 (Patent 4,366,551). A similar algorithm (LZ-78) was developed later by Jacob Ziv and Abraham Lempel (Ziv-Lempel, 1978). Most commercial applications use the LZW (Lempel Ziv Welch) code, a simplified variation published by Terry Welch (Welch, 1984). Application examples include the V.42bis data compression standard in modems and the GIF and TIFF formats used for lossless still image compression.
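For comparison, here is a minimal sketch of LZ78 parsing as it is commonly described. This is the Ziv-Lempel scheme, not the patented serial network, and the function names are illustrative. Each output pair (index, character) plays the role of a (POINTER, GATE) matrix, and each new dictionary index plays the role of an ADDRESS. One visible difference is that LZ78 parses continuously and returns to the empty phrase after every new entry, whereas the serial network of Fig. 3 returns to the Seed only at the end of each sequence.

```python
def lz78_encode(text):
    """Parse text into (index, char) pairs; index 0 is the empty phrase."""
    dictionary = {"": 0}
    phrase = ""
    pairs = []
    for ch in text:
        if phrase + ch in dictionary:
            phrase += ch  # keep extending a known phrase
        else:
            pairs.append((dictionary[phrase], ch))
            dictionary[phrase + ch] = len(dictionary)  # new entry
            phrase = ""  # restart from the empty phrase
    if phrase:
        # Input ended inside a known phrase: emit its prefix plus last char.
        pairs.append((dictionary[phrase[:-1]], phrase[-1]))
    return pairs


def lz78_decode(pairs):
    """Rebuild the text by following each index back to its phrase."""
    phrases = [""]
    out = []
    for index, ch in pairs:
        phrase = phrases[index] + ch
        phrases.append(phrase)
        out.append(phrase)
    return "".join(out)


print(lz78_encode("ABABABA"))  # [(0, 'A'), (0, 'B'), (1, 'B'), (3, 'A')]
```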

Parallel networks store images in a hyperspace funnel, yielding very high lossless image compression and fast access to archives. These networks are especially suitable for archiving and storing image and video data. Machine vision is the ultimate application.

Associative networks connect various networks into a system. They can, for example, connect questions to answers, text to images, or commands to execution sequences. A demonstration system was built in 1978 to verify operations.

[Figure 3 tree diagram: each node is drawn as POINTER GATE (ADDRESS), growing from the Seed (0) for the input ROSE ROBOT ROOT RED READY; the Tip nodes are 4 (ROSE), 7 (ROBOT), 9 (ROOT), 11 (RED) and 14 (READY).]

SERIAL NETWORK LEARNING ALGORITHM

    MATRIX = [ POINTER | GATE ]   (the MATRIX is a working register in the hardware)

    Start: Set POINTER = Seed (= 0)
    Loop:  Move the next input character into the GATE
           If End Of Sequence (a SPACE character) then output the POINTER as a Tip code; Goto Start
           Else search the library for a matching MATRIX
             If found then move the library ADDRESS where it was found into the POINTER; Goto Loop
             Else, if not found, then store the MATRIX into the next empty library ADDRESS;
               move the library ADDRESS where it was stored into the POINTER; Goto Loop

SERIAL NETWORK RETRIEVAL ALGORITHM

    MATRIX = [ POINTER | GATE ]

    Start: Move the input Tip code into the POINTER
    Loop:  Use the POINTER as a library ADDRESS to fetch the next MATRIX (a new POINTER and GATE) from the library
           Push the GATE onto a First-In-Last-Out (FILO) stack
           If the POINTER = Seed (= 0) then pull the output data from the FILO stack; Goto Start
           Else Goto Loop

Figure 3: An example of the "Serial" self-learning tree network (Patent 4,366,551)
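The two algorithms of Fig. 3 can be sketched in Python. This is an illustrative software model under stated assumptions, not the hardware of Patent 4,366,551: the library is held in two dicts (`cam` for the content search used by learning, `ram` for retrieval by ADDRESS), a SPACE ends a sequence, and empty sequences (double spaces) are skipped rather than emitting the Seed as a Tip code. `SerialNetwork` is a hypothetical name.

```python
# Sketch of the Fig. 3 serial network. Assumptions: two dicts stand in for
# the library (cam: MATRIX -> ADDRESS, ram: ADDRESS -> MATRIX), a SPACE ends
# a sequence, and empty sequences are skipped instead of emitting the Seed.
SEED = 0


class SerialNetwork:
    def __init__(self):
        self.cam = {}  # (POINTER, GATE) -> ADDRESS, used for learning
        self.ram = {}  # ADDRESS -> (POINTER, GATE), used for retrieval

    def learn(self, text):
        """Learn every space-delimited sequence; return their Tip codes."""
        tips = []
        pointer = SEED  # Start: POINTER = Seed
        for gate in text + " ":  # a final SPACE closes the last sequence
            if gate == " ":  # End Of Sequence
                if pointer != SEED:
                    tips.append(pointer)  # output the POINTER as a Tip code
                pointer = SEED
                continue
            matrix = (pointer, gate)
            address = self.cam.get(matrix)
            if address is None:  # not found: store in the next empty ADDRESS
                address = len(self.ram) + 1
                self.cam[matrix] = address
                self.ram[address] = matrix
            pointer = address  # move the ADDRESS into the POINTER
        return tips

    def retrieve(self, tip):
        """Follow POINTERs back to the Seed, then unwind the FILO stack."""
        stack = []
        pointer = tip
        while pointer != SEED:
            pointer, gate = self.ram[pointer]  # fetch the next MATRIX
            stack.append(gate)  # push the GATE
        return "".join(reversed(stack))  # pull the output from the stack


net = SerialNetwork()
tips = net.learn("ROSE ROBOT ROOT RED READY")
print(tips)  # [4, 7, 9, 11, 14]
print([net.retrieve(t) for t in tips])  # ['ROSE', 'ROBOT', 'ROOT', 'RED', 'READY']
```

Learning the Fig. 3 input reproduces the ADDRESS numbers of the figure. Keeping a separate `cam` and `ram` mirrors the paper's later argument that learning needs a content search while retrieval needs random access.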

SELF-ORGANIZING AND SELF-REPAIRING MASS MEMORIES FOR AUTOSOPHY MULTIMEDIA ARCHIVING

SYSTEMS: Replacing the Data Processing Computer with Self-Learning Machines based on the Autosophy Information

Theory


Interrelational networks provide grammatical language learning that could evolve into talking databases with speech input and output. Grammatical speech would be the ultimate method of communication between humans and machines.

Logical networks yield an advanced form of self-learning data processing with logical reasoning capabilities. They may evolve into intelligent robots.

Primary networks provide unstructured access to archives or databases through deductive reasoning and automatic indexing.

Hypertree networks, which promise true brain-like learning, are currently being researched. This ongoing research may add new types of networks and new learning modes in the future.

The learning algorithms require that the entire memory be searched to locate a matching MATRIX before a new network node is created. Rapid learning would therefore require a Content Addressable Memory (CAM), in which all memory locations are searched in parallel, in a single operation. The retrieval algorithm, in contrast, requires a normal Random Addressable Memory (RAM). The optimal solution is to store each node in two separate memory devices, one configured as a CAM, the other as a RAM. This would also provide for automatic self-repair in failure-proof memories.
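The cost difference can be sketched in Python. In this sketch a list stands in for a RAM that must be scanned word by word, and a dict stands in for a CAM; the dict is a software hash lookup, not a parallel hardware search, and the MATRIX values are hypothetical. Before a new node can be stored, a RAM-only design must compare every word just to confirm the MATRIX is absent:

```python
# Sketch only: a list stands in for a RAM that must be scanned word by word,
# and a dict stands in for a CAM (a software hash lookup, not a parallel
# hardware search). The MATRIX values are hypothetical.


def ram_search(ram, matrix):
    """Scan the RAM; return (ADDRESS or None, number of words compared)."""
    for address, stored in enumerate(ram, start=1):
        if stored == matrix:
            return address, address
    return None, len(ram)  # a miss has to compare every word


nodes = [(i // 256, i % 256) for i in range(100_000)]  # (POINTER, GATE) pairs
ram = list(nodes)  # ADDRESS n is held at index n - 1
cam = {matrix: address for address, matrix in enumerate(ram, start=1)}

new_matrix = (999, 0)  # not yet learned
print(ram_search(ram, new_matrix))  # (None, 100000): full scan before storing
print(cam.get(new_matrix))          # None: one keyed lookup
target = nodes[-1]
print(ram[cam[target] - 1] == target)  # True: the RAM copy serves retrieval
```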

When Autosopher robots finally evolve from mere information access terminals to machines that physically interact with human beings, near-absolute reliability becomes essential, even in cases of severe physical damage, because a malfunctioning robot may cause severe damage and injury to human beings. Robots would require non-volatile, Content or Random Addressable memories of enormous capacity. The memory units should be small enough to fit into mobile robots and should consume so little power that they require no cooling and conserve the limited power of mobile terminals.

The memory units should have a very rugged construction for operation in hostile environments and even in outer space. Maintenance could be performed by unskilled personnel and eventually by remote service robots. Only solid-state technology is used, without any moving parts. The outer surface may be sculptured for better heat transfer and for easier handling by human hands or robots during memory repair. The input/output bus connector may be similar to the Edison socket now used for light bulbs, so that memory modules may be screwed in or removed, like light bulbs, without any additional mounting hardware. There are two types of memory units, a "female" unit (CAM) and a "male" unit (RAM), each with the same bus sockets, either male or female, on both sides. The memory units could be stacked into larger and larger groups to provide virtually unlimited storage capacity.

4 CONTENT ADDRESSABLE READ ONLY MEMORY

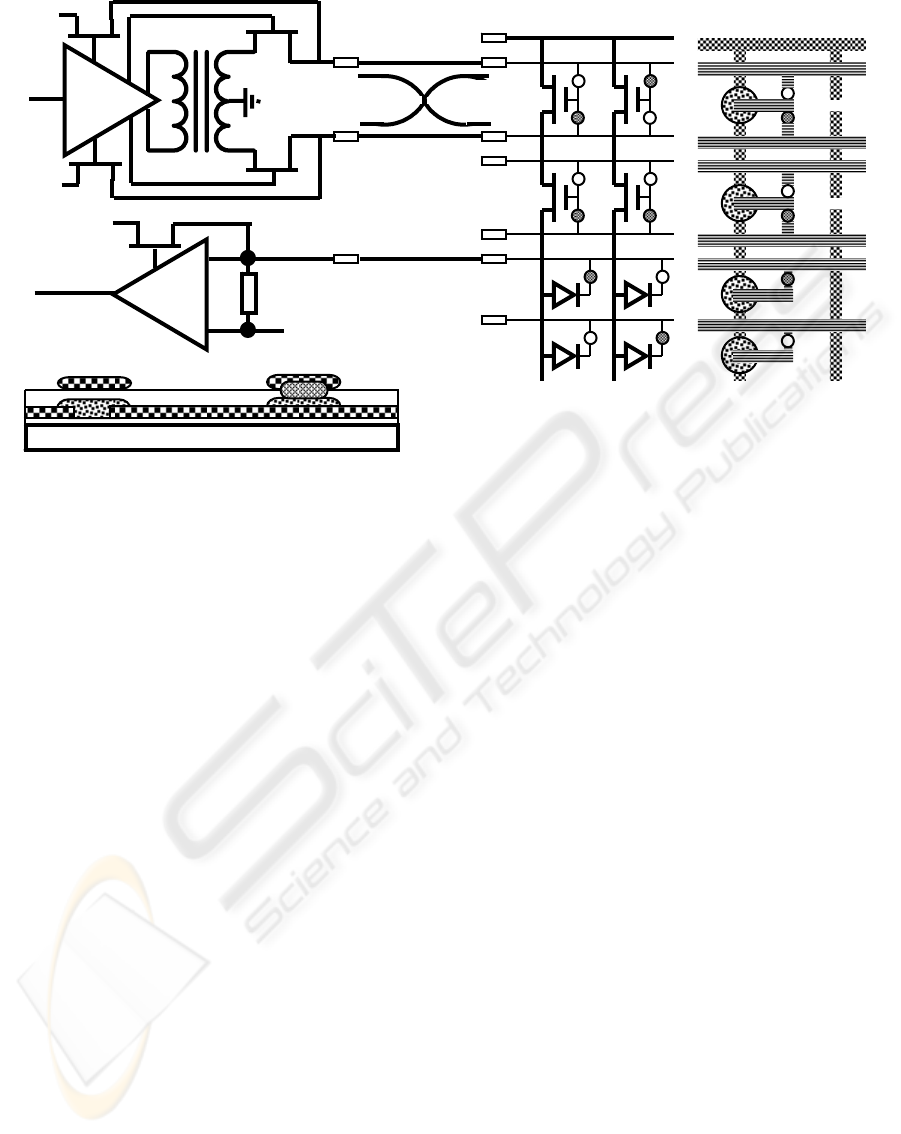

The CAROM memory, shown in Fig. 4, may operate as a Content Addressable Memory (CAM) or as a Random Addressable Memory (RAM). Both the memory address and the stored data are programmable from the outside by tiny fuses. The fuses are made either conductive or nonconductive according to the Autosophy learning algorithms, where the data determines or creates its own storage nodes. There is no need for programming or outside supervision of the internal operations.

Memory addresses are decoded in long chains of Field Effect Transistors (FETs), where each transistor is turned conductive or nonconductive as selected by a pair of fuses. Only one fuse in the pair is made conductive. A complementary input voltage (the address bit or its inverse) is applied through the conductive fuse to the FET's gate, turning it either conductive or nonconductive. If all FETs in a chain are conductive, then current flows through the FETs to a set of diodes, generating the output data via programmable output fuses. Because the learning algorithms store each node only once, only one chain of FETs can conduct at any one time. An input address will therefore produce an output data pattern as determined by the learning algorithms.
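The decoding rule can be captured in an idealised logic model. This Python sketch is an assumption-laden abstraction of Fig. 4, not a circuit simulation: each stored word has one fuse pair per address bit (modelled as a boolean saying whether the fuse to the true line or to the complement line is conductive) and a row of output fuses. A chain conducts only when every FET gate sees a high level, that is, when the input address equals the fused pattern. `Word`, `word_from` and `read` are hypothetical names.

```python
# Idealised logic model of CAROM decoding (an assumption, not a circuit
# simulation). Names are hypothetical.
from dataclasses import dataclass


def to_bits(value, width):
    """Least significant bit first."""
    return [bool((value >> i) & 1) for i in range(width)]


@dataclass
class Word:
    true_fuses: list    # per address bit: True -> fuse to line A[i] conducts,
                        # False -> fuse to the complement line NOT A[i] conducts
    output_fuses: list  # conductive output fuse -> that data bit reads 1

    def chain_conducts(self, address_bits):
        # Every FET in the chain must be switched on by the line its fuse selects.
        return all(a if t else not a
                   for a, t in zip(address_bits, self.true_fuses))


def word_from(address, data, addr_width, data_width):
    return Word(to_bits(address, addr_width), to_bits(data, data_width))


def read(words, address, addr_width):
    """Return the data of the single conducting chain, or None."""
    bits = to_bits(address, addr_width)
    conducting = [w for w in words if w.chain_conducts(bits)]
    if len(conducting) > 1:
        raise ValueError("learning should store each address only once")
    if not conducting:
        return None
    return sum(1 << i for i, fuse in enumerate(conducting[0].output_fuses) if fuse)


words = [word_from(0b0101, 0b1100, 4, 4), word_from(0b0011, 0b0110, 4, 4)]
print(read(words, 0b0101, 4))  # 12
print(read(words, 0b1111, 4))  # None: no chain conducts
```

However many words are added to `words`, at most one chain conducts for any input, which is the logical basis for the constant-current claim in the next paragraph.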

Since only one chain of FETs can conduct at any one time, the current through the memory device is constant and independent of the memory capacity. The voltage input from the driver charges the tiny capacitance of the FET gates. The input acts like a pure capacitive load, so the whole memory device behaves like a large capacitor.

The energy used to charge the capacitor can be recycled through an inductor to achieve very low energy consumption. When the stored capacitive voltage is discharged through the driver, in a charge reversal, the energy is temporarily stored in an inductor. The energy stored in the inductor then recharges the capacitive load in the opposite polarity. This is similar to an electrical pendulum, where the energy is recycled in each charge reversal. The only net energy loss, which is very low, occurs in the conductive layers and in the FETs.
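A back-of-the-envelope Python sketch shows why recycling the charge matters. It uses two textbook results rather than anything specific to the CAROM: hard-switching a capacitance C through a voltage swing ΔV via a resistance dissipates C·ΔV² per full charge and discharge cycle, while a series RLC circuit with quality factor Q = √(L/C)/R loses roughly 2π/Q of its stored energy ½CV² per oscillation (a high-Q approximation). All component values are hypothetical.

```python
import math

# Hypothetical values (not from the paper): the whole memory as a 10 nF load
# swinging between -1 V and +1 V, a 10 uH recycling inductor, and 0.1 ohm
# of total series resistance in the conductive layers and FETs.
C, V, L, R = 10e-9, 1.0, 10e-6, 0.1


def hard_switched_loss(c, v):
    """J per full cycle when a +/-v swing is hard-switched: C * (2v)**2."""
    return c * (2 * v) ** 2


def resonant_loss(c, v, l, r):
    """Approximate J per LC oscillation: 2*pi * (0.5*C*V**2) / Q, Q = sqrt(L/C)/R."""
    q = math.sqrt(l / c) / r
    return 2 * math.pi * (0.5 * c * v ** 2) / q


f0 = 1 / (2 * math.pi * math.sqrt(L * C))  # resonant ("pendulum") frequency
hard = hard_switched_loss(C, V)
resonant = resonant_loss(C, V, L, R)
print(f"f0 = {f0 / 1e3:.0f} kHz")
print(f"hard-switched: {hard * 1e9:.1f} nJ per cycle, {hard * f0 * 1e3:.2f} mW")
print(f"LC recycling:  {resonant * 1e9:.3f} nJ per cycle, {resonant * f0 * 1e3:.4f} mW")
```

The ratio of the two losses is π/(4Q), so the saving grows with the quality factor of the inductor and wiring, consistent with the text's point that only the resistive layers and FETs consume net energy.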

Very small net energy consumption will prevent heating and conserve the limited power in mobile robots. As in the human brain, power consumption will increase with memory activity, such as learning and thinking. However, power consumption is virtually independent of the memory capacity: a very large memory device may have virtually the same power consumption as a small one. The energy stored internally in the capacitor may even allow a robot to continue operating after short power failures.


The memory circuits are printed onto thin foils, which are rolled into a spool about the size of a roll of toilet paper. The spools should contain only the printed foil, as shown in the CIRCUIT FOIL LAYOUT of Fig. 4, without active components. The driver and receiver circuits should be housed in a separate bus connector unit in the center of the spool. Rolling the foil into a tight spool would provide a very rugged and compact construction. The spool is then inserted into a capsule filled with self-sealing liquid.

The memory fuses are set by the learning algorithm whenever a new node is created and stored in a "next empty" memory location. Each node may be stored anywhere in the memory device. Once a fuse has been set, from the driver or the receiver side, it will usually remain set, forming a read-only memory. All address bit fuses and output data bit fuses in a network node may be set in a single cycle. Fuse programming uses larger voltages and currents than normal memory search operations. Each column of fuses in a node may be selected by a "next empty" word selection layer on the foil.
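A minimal Python model of this write-once behaviour follows. It assumes each word stores an (ADDRESS, data) pair whose fuses are set together in one step and never changed afterwards; the class name `FuseSpool` and its methods are hypothetical. Because the ADDRESS is itself held in fuses, a node with ADDRESS 14 can occupy the first physical word of the spool:

```python
# Sketch only (hypothetical names): each word holds an (ADDRESS, data) pair
# whose fuses are set together, once, and never changed afterwards.


class FuseSpool:
    def __init__(self, n_words):
        self._words = [None] * n_words  # None means the word's fuses are unset
        self._next_empty = 0  # the "next empty" word selection

    def program(self, address, data):
        """Set all address and data fuses of one node in a single step."""
        if self._next_empty == len(self._words):
            raise MemoryError("no empty words left on the spool")
        self._words[self._next_empty] = (address, data)  # write-once tuple
        self._next_empty += 1

    def read(self, address):
        """Select a word by its fused ADDRESS, not by its physical position."""
        for word in self._words:
            if word is not None and word[0] == address:
                return word[1]
        return None


spool = FuseSpool(n_words=2)
spool.program(address=14, data=(13, "Y"))  # lands in physical word 0
spool.program(address=2, data=(1, "O"))    # lands in physical word 1
print(spool.read(14))  # (13, 'Y')
```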

Several fuse technologies are available. Conventional non-volatile memories (PROM, EPROM, EEPROM, FLASH) may change over long time periods and are not recommended. Antifuses, as used in Field Programmable Gate Arrays (FPGAs), are more stable and less sensitive to heat or radiation. Fuses made from electro-active PLZT (lead lanthanum zirconate titanate) ceramics may be best for long-term memories. Some fuse technologies may allow memory erasure and recycling of the spools.


There are three basic technologies for manufacturing the memory spools. Silicon chips mounted on a laminated foil would provide the cheapest solution at this time: the circuit shown in Fig. 4 would be printed on crystalline silicon chips for separate mounting on the foil. This may yield a price of about $1 per billion transistors. (For comparison, the Intel Pentium 4 contains fewer than 200 million transistors and sells for about $350.) The silicon chips need not be error-free because of the DECAM's self-repair capabilities.

Thin film amorphous silicon transistors and interconnecting wiring may be printed by vacuum deposition onto stainless steel foil. This roll-to-roll industrial process may result in very inexpensive memory units that can withstand great heat, radiation, and vibrations.

Printable electronic circuits are printed on plastic foils by inkjet printing processes. Special semiconductive ink can produce the wiring and organic transistors, diodes, and PLZT fuses.

5 DUAL ENTRY MEMORIES

Both the autosopher and the brain store multimedia "information" in a saturating omni dimensional hyperspace format, in which any node may be located anywhere. Repairing such a memory by hand is therefore far beyond human capability: repairing an individual hyperspace memory node is just as impossible as repairing individual neurons in the brain. Both systems act like a sealed "black box" that organizes and repairs its own memory operations.

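[Figure 4 labels: CIRCUIT FOIL LAYOUT on Stainless Steel Foil, showing Transistor, Diode and Nitride layers; Set Fuse 1, Set Fuse 2 and Set Output Fuse; supply lines +V, -V and GND; Drive with Inductor and Charge Reversal; Receive; Terminator.]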

Figure 4: CAROM Memory using Thin Film FET Semiconductor and Fuses (Patent 5,576,985)


Examples of dual redundant information storage are found in double-entry accounting and in the DNA helix. In double-entry accounting every transaction is recorded twice, as a debit and as a credit. Errors in one ledger can be corrected from the other ledger to obtain error-proof accounting. In biological DNA, information is stored in two strands wound together into a helix, where each strand contains the same information but in a complementary form.

Autosophers store information in two spools, a male (RAM) and a female (CAM), each containing the same information in a complementary format. An error in one spool is automatically repaired from the complementary spool: error checking clears defective nodes, which are then repaired in sleep mode.

Each search or retrieval memory access involves both spools. In a search access, a MATRIX is applied to the CAM, resulting in the ADDRESS where a match was found. The ADDRESS is applied to the RAM, resulting in a MATRIX, which must be identical to the original MATRIX input. In a retrieval access, an ADDRESS is applied to the RAM, resulting in a MATRIX output, which is applied to the CAM to produce an ADDRESS, which must be identical to the original ADDRESS input. An error in either spool, confirmed by a "checksum" (a Hamming code of both the MATRIX and the ADDRESS), clears the node for later repair. Defective memory nodes cleared during normal operation are repaired in "sleep" mode.
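The cross-checking of the two spools can be sketched in Python, assuming dicts for the female (CAM) and male (RAM) spools. As a labelled substitution, CRC-32 from the standard `zlib` module stands in for the Hamming code named above; unlike a Hamming code it only detects errors. The class `DualEntryMemory` is hypothetical, and on a failed check it simply deletes the damaged entry to model clearing the node for later repair.

```python
import zlib


def checksum(matrix, address):
    # Stand-in for the paper's Hamming code over MATRIX and ADDRESS:
    # CRC-32 detects corruption here but cannot correct it.
    return zlib.crc32(repr((matrix, address)).encode())


class DualEntryMemory:
    """Hypothetical model: two dicts play the female and male spools."""

    def __init__(self):
        self.cam = {}  # female spool: MATRIX -> (ADDRESS, checksum)
        self.ram = {}  # male spool:   ADDRESS -> (MATRIX, checksum)

    def store(self, matrix, address):
        code = checksum(matrix, address)
        self.cam[matrix] = (address, code)
        self.ram[address] = (matrix, code)

    def _cam_ok(self, matrix):
        entry = self.cam.get(matrix)
        return entry is not None and entry[1] == checksum(matrix, entry[0])

    def _ram_ok(self, address):
        entry = self.ram.get(address)
        return entry is not None and entry[1] == checksum(entry[0], address)

    def search(self, matrix):
        """MATRIX -> CAM -> ADDRESS -> RAM, which must give back the MATRIX."""
        if not self._cam_ok(matrix):
            self.cam.pop(matrix, None)  # missing or damaged: clear the CAM node
            return None
        address = self.cam[matrix][0]
        if not self._ram_ok(address) or self.ram[address][0] != matrix:
            self.ram.pop(address, None)  # clear the RAM node for later repair
        return address

    def retrieve(self, address):
        """ADDRESS -> RAM -> MATRIX -> CAM, which must give back the ADDRESS."""
        if not self._ram_ok(address):
            self.ram.pop(address, None)
            return None
        matrix = self.ram[address][0]
        if not self._cam_ok(matrix) or self.cam[matrix][0] != address:
            self.cam.pop(matrix, None)
        return matrix


mem = DualEntryMemory()
mem.store((0, "R"), 1)
mem.store((1, "O"), 2)
mem.ram[2] = ((1, "X"), mem.ram[2][1])  # simulate a damaged RAM word
print(mem.search((1, "O")))  # 2: the intact CAM still answers
print(2 in mem.ram)          # False: the damaged RAM node was cleared
```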

Self-healing involves applying a binary counter to the CAM as a MATRIX input, to retrieve an ADDRESS and checksum from the CAM. If the checksum code is incorrect or empty, then the counter is incremented to check the next node. Otherwise the output ADDRESS is applied to the RAM to obtain a MATRIX output, which must be identical to the counter value. If it is not, the node in the CAM is used to generate a new node in the RAM in a "next empty" memory location. Once the CAM has been scanned, the binary counter is applied to the RAM as an ADDRESS. This results in a MATRIX output, which is applied to the CAM. The ADDRESS output of the CAM must be identical to the counter ADDRESS; if it is not, the CAM node is regenerated from the RAM in the same way.
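The two-phase scan can be sketched in Python on a toy scale. The sketch assumes tiny hypothetical sizes (16 ADDRESSES and 256 GATE values) so that a counter can really enumerate every possible MATRIX, dicts standing in for the two spools, and CRC-32 (`zlib.crc32`) standing in for the Hamming checksum. Phase 2 rebuilds a CAM node from the RAM, the repair step implied by symmetry with phase 1. `learn_word` and `heal` are hypothetical helpers. Swapping the RAM for an empty dict and healing it illustrates the cloning behaviour described in the next paragraph.

```python
import zlib

# Toy sizes (hypothetical) so a counter can enumerate every MATRIX value.
N_ADDRESSES = 16
GATE_VALUES = 256


def checksum(matrix, address):
    # Stand-in for the Hamming code: CRC-32 detects, but cannot correct.
    return zlib.crc32(repr((matrix, address)).encode())


def learn_word(cam, ram, word):
    """Store a word as serial-network nodes in both spools; return its Tip."""
    pointer = 0
    for gate in word:
        matrix = (pointer, gate)
        if matrix not in cam:
            address = len(ram) + 1
            code = checksum(matrix, address)
            cam[matrix] = (address, code)
            ram[address] = (matrix, code)
        pointer = cam[matrix][0]
    return pointer


def heal(cam, ram):
    """Sleep-mode scan of both spools; return the number of nodes rebuilt."""
    rebuilt = 0
    # Phase 1: the counter value is applied to the CAM as a MATRIX.
    for value in range(N_ADDRESSES * GATE_VALUES):
        pointer, gate_code = divmod(value, GATE_VALUES)
        matrix = (pointer, chr(gate_code))
        entry = cam.get(matrix)
        if entry is None or entry[1] != checksum(matrix, entry[0]):
            continue  # empty or failed checksum: check the next node
        address, code = entry
        if ram.get(address) != (matrix, code):
            ram[address] = (matrix, code)  # regenerate the RAM node from the CAM
            rebuilt += 1
    # Phase 2: the counter value is applied to the RAM as an ADDRESS.
    for address in range(N_ADDRESSES):
        entry = ram.get(address)
        if entry is None or entry[1] != checksum(entry[0], address):
            continue
        matrix, code = entry
        if cam.get(matrix) != (address, code):
            cam[matrix] = (address, code)  # regenerate the CAM node from the RAM
            rebuilt += 1
    return rebuilt


cam, ram = {}, {}
for word in ("ROSE", "ROBOT"):
    learn_word(cam, ram, word)
original_ram = dict(ram)

blank_ram = {}  # the male spool is swapped for an empty one
print(heal(cam, blank_ram), blank_ram == original_ram)  # 7 True
```

A real spool could not enumerate every MATRIX this way at full width; the toy sizes only make the counter-driven logic visible.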

The automatic self-healing facilities can also be used for the rejuvenation and cloning of robot memories. Removing one spool and replacing it with an empty spool will cause a robot to automatically restore the information from the remaining spool into the empty spool. The removed spool may then be inserted into a second robot, together with an empty spool, to produce a robot clone with the same knowledge and "personality." Rejuvenation involves double cloning: each old spool is removed in turn and replaced with an empty spool. Old robots can thus be rejuvenated without loss of information.

6 CONCLUSIONS

Considering the enormous head start of the programmed data processing computer, replacing the computer with the self-learning autosopher will be neither quick nor easy. However, there may not really be a choice. Computer technology is approaching the limits of performance, where further progress requires more and more effort. Ever higher computation speeds, more advanced operating systems, and better programming are not the solution. Shannon's "communication," using binary digits, is not true communication, and the programmed data processing computer will not achieve true Artificial Intelligence. It may be hard to accept that our entire communications infrastructure and the programmed data processing computer are based on a false (Shannon) information theory.

The Autosophy information theory, in contrast, is based on self-learning networks that grow like data crystals or data trees in electronic memories. The intelligence of archiving systems or robots is then no longer limited by the intelligence of human programmers. Autosophy may provide an alternative computing and communication paradigm for the future, moving from human-designed and programmed machines towards self-organizing and self-repairing brain-like machines.

REFERENCES

Holtz, K., 2004. Autosophy Failure-Proof Multimedia Archiving. IS&T's 2004 Archiving Conference, San Antonio, Texas, April 2004. www.imaging.org

Holtz, K., 2003. Replacing Data Processing Computer with Self-Learning Autosopher: Impact on Communication and Computing. SCI 2003, The 7th World Multi-conference on Systemics, Cybernetics and Informatics, Orlando, FL, July 2003.

Holtz, K., 2002. The Emerging Autosophy Internet. SSGRR 2002s, L'Aquila, Italy, Aug. 2, 2002. www.ssgrr.it/en/ssgrr2002s/papers.html, Paper 140.

Holtz, K., 2001. An Autosophy Image Content-Based Television System. IS&T's 2001 PICS, Montreal, 2001.

Welch, T., 1984. A Technique for High-Performance Data Compression. IEEE Computer, June 1984.

Ziv, J., Lempel, A., 1978. Compression of Individual Sequences via Variable-Rate Coding. IEEE Transactions on Information Theory, IT-24, 1978.

Holtz, K., Langheld, E., 1978. Der selbstlernende und programmier-freie Assoziationscomputer [The self-learning and programming-free association computer]. ELEKTRONIK magazin, Germany, Dec. 1978.

Shannon, C., 1948. A Mathematical Theory of Communication. Bell Telephone B-1598, July 1948.
