Biometrics Identification Based on Visual Hand Movements Using Wavelet Transform

Sanjay Kumar, Dinesh Kant Kumar, Neil Mclachalan

School of Electrical and Computer Engineering, RMIT University, Melbourne, Australia

Abstract. This work presents a novel technique for biometric identification based on the temporal history templates (THTs) of visual hand movements. The technique uses a view-based approach to represent hand movements, together with a cumulative image-difference technique in which the time between the images of a sequence is implicitly captured in the representation of the action. This low-level representation collapses the temporal structure of the motion in the video sequence of a hand movement, while removing any static content, to generate the THT of that movement. THTs of different individuals present distinctive 2-D motion patterns, where each pixel value is a function of the temporal history of motion at that point in the sequence. Each THT is further subdivided into four sub-images, one average image and three detail images, using multiresolution wavelet transforms. The approximation (average) sub-image is used as the feature for recognition. Recognition is performed with a k-nearest neighbour (KNN) technique using the Mahalanobis distance. The accuracy of accepting an enrolled subject (AAES %) and the accuracy of rejecting an imposter (ARI %) are the indicators of identification performance. The experimental results from five different individuals indicate that the THT-based technique achieves a high identification rate when subject-specific movements are assigned to the subjects during enrolment.

1 Introduction

Biometrics-based authentication using computer vision technologies is emerging as a reliable method that can overcome some of the limitations of traditional automatic personal identification technologies. Any human physiological or behavioural trait can serve as a biometric characteristic as long as it is universal, distinctive, sufficiently invariant with respect to the matching criterion, and physically measurable [1]. All traditional biometric measures have certain limitations: DNA cannot be used in certain applications due to issues of contamination, sensitivity, cumbersomeness and privacy; ear shape has the problem of non-unique features; facial biometrics have problems with aging, disguise and variable imaging conditions; hand and finger geometry has limited applications; fingerprints, although highly distinctive, have problems with fake fingers, storage and imaging conditions; iris biometrics is difficult to capture non-intrusively and requires co-operation from the individual during enrolment and identification; and speech biometrics is limited by the mechanical variation introduced by the microphone and by its dependence on the subject's health [1]. Fingerprints, facial features, DNA and retinal features are the most common biometrics based on physiological features, and a number of publications describe methods that use them. Other known biometrics, such as keystroke and gait analysis, are based on the behaviour of the individual [1]. However, not all behavioural biometrics have been examined extensively. The hand is a dexterous part of the human body and is unique to the gesturer, but the use of hand gestures as a biometric has been given little treatment so far [2]. Hand gestures have been used extensively for developing Human Computer Interaction (HCI) and many other applications. This work presents a new biometric method based on the temporal history templates (THTs) generated from hand movements (gestures). The spatio-temporal templates of hand movements are proposed as a behavioural biometric because they are unique to the hand that performs the gesture and are very distinctive to the gesturer [2]. As a biometric, spatio-temporal templates of hand gestures have the advantages of being non-intrusive, distinctive and difficult to forge.

Kumar S., Kant Kumar D. and Mclachalan N. (2005). Biometrics Identification Based on Visual Hand Movements Using Wavelet Transform. In Proceedings of the 1st International Workshop on Biosignal Processing and Classification, pages 131-141. DOI: 10.5220/0001193201310141. Copyright © SciTePress

2 Related Work

There are two main approaches to automatic gait recognition in the literature [3]. The first is model-based gait recognition, where a mathematical model describes the subject's movement; the second applies a statistical description to the set of images. Statistical methods detect the temporal changes in gait by using optical flow techniques [4] [5]. A statistical approach to automatic gait recognition that is very similar to the technique presented here has reported very promising results [6]. Encouraging results on a small database of four subjects have also been reported for a technique that describes motion using velocity moments [7]. Related work on behavioural biometrics has distinguished people by their gait, using features extracted from video sequences of their walking patterns [8] [9]. Little and Boyd used frequency and phase features from optical flow information to recognize people from their gait [6].

This work is based on motion-based template research. Its motivation comes from the real-time interactive applications developed by Davis and Bobick [10] [11] [12] [13] [14] [15], who presented a real-time computer vision approach for representing and recognizing common human movements from low-resolution image sequences and used it to develop an application named “Virtual Aerobic Instructor” [14]. This work is similar to the work done by Bobick and Davis on the Virtual Aerobic Instructor for aerobics exercises and on the Kids Room, an interactive room where children can play with a monster in an interactive environment [14].


3 Identification Technique

The approach to person identification is based on the spatio-temporal templates of hand movements, which are used for both enrolment and identification.

3.1 Temporal History Template

This research tests the efficacy of identification based on the Temporal History Templates (THTs) of hand movements. The THT is a view-based representation of hand movement, where movement is defined as the motion of the hand over time. The technique collapses the hand motion over time to generate a single static image from the image sequence. This static image represents the whole sequence of hand movement and captures the properties of the motion (its shape and direction, and where and how it takes place in the image sequence). The technique is well suited to short-duration, non-repetitive, medium-velocity movements, which makes it suitable for real-time biometric applications [16].

3.1.1 Motion Image Estimation

For this work a simple temporal difference of frames (DOF) technique has been adopted [13]. Temporal differencing makes use of the pixel-wise difference between two or three consecutive frames in an image sequence to extract moving regions [13]. The DOF technique subtracts the pixel intensities of each frame from those of the subsequent frame in the image sequence, thereby removing static elements in the images. Based on research reported in the literature, actions and messages can be recognized from a description of the appearance of motion [16] [17] [18] [19] [20] [21], without reference to the underlying static images or a full geometric reconstruction of the moving hand [19]. It can also be argued that the static images produced using the Temporal History Template based on the DOF can represent features of temporally localized motion for identification [15] [16] [20] [21] [22]. This process can be represented mathematically as follows.

Let $I(x, y, n)$ be an image sequence, and let

$$D(x, y, n) = |I(x, y, n) - I(x, y, n-1)|$$

where $I(x, y, n)$ is the intensity of the pixel at location $(x, y)$ in the $n$-th frame and $D(x, y, n)$ is the difference of consecutive frames, representing regions of motion. $B(x, y, n)$ is the binarisation of the image difference over a threshold $\Gamma$:

$$B(x, y, n) = \begin{cases} 1 & \text{if } D(x, y, n) > \Gamma \\ 0 & \text{otherwise} \end{cases}$$
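The difference-of-frames and binarisation steps can be sketched in a few lines. This is a minimal, dependency-free illustration rather than the authors' implementation: frames are assumed to be grey-level images stored as nested lists, and the function names and the threshold value `gamma=50` are hypothetical.

```python
def frame_difference(prev_frame, frame):
    """D(x, y, n) = |I(x, y, n) - I(x, y, n-1)| for two equally sized frames."""
    return [[abs(a - b) for a, b in zip(row, prev_row)]
            for row, prev_row in zip(frame, prev_frame)]


def binarise(diff, gamma):
    """B(x, y, n) = 1 where D(x, y, n) > gamma, otherwise 0."""
    return [[1 if d > gamma else 0 for d in row] for row in diff]


# Toy 3x3 frames (made-up data): one bright pixel moves one step to the right.
frame_0 = [[0, 0, 0],
           [200, 0, 0],
           [0, 0, 0]]
frame_1 = [[0, 0, 0],
           [0, 200, 0],
           [0, 0, 0]]

motion_mask = binarise(frame_difference(frame_0, frame_1), gamma=50)
print(motion_mask)  # [[0, 0, 0], [1, 1, 0], [0, 0, 0]]
```

Both the old and the new position of the pixel are marked as motion, while the static background is removed.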

To represent where and when motion occurred in the image, we form a Temporal History Template (THT). The temporal history of the movement is inserted into the THT by multiplying each binarised difference image by a linear ramp representing time. In the resulting template $H_N$, the pixel intensity is a function of the temporal history of motion at that point. The result is a scalar-valued image where more recently moving pixels are brighter.
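The ramp-weighted accumulation described above can be sketched as follows. This is an illustrative sketch only: it assumes the binary motion masks are already available as nested lists, uses the 1-based mask index as the linear time ramp, and keeps the per-pixel maximum; the function name is hypothetical.

```python
def temporal_history_template(masks):
    """Collapse binary motion masks B(x, y, n), n = 1, 2, ..., into one image.

    Each mask is weighted by its 1-based index n (the linear time ramp) and
    the per-pixel maximum over all masks is kept, so pixels that moved more
    recently end up with larger (brighter) values.
    """
    rows, cols = len(masks[0]), len(masks[0][0])
    tht = [[0] * cols for _ in range(rows)]
    for n, mask in enumerate(masks, start=1):
        for y in range(rows):
            for x in range(cols):
                tht[y][x] = max(tht[y][x], mask[y][x] * n)
    return tht


# Three made-up 1x3 masks: motion sweeps from left to right.
masks = [
    [[1, 0, 0]],
    [[0, 1, 0]],
    [[0, 1, 1]],
]
print(temporal_history_template(masks))  # [[1, 3, 3]]
```

The leftmost pixel moved only in the first mask and stays dark, while the pixels that moved last carry the highest value.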

The THT, $H_N(x, y)$, is then:

$$H_N(x, y) = \max\left\{ \bigcup_{n=1}^{N-1} B(x, y, n) \cdot n \right\}$$

where $N$ represents the duration of the time window used to capture the motion. In the THT, more recent positions of the hand are brighter, while older positions are represented with darker values [15] [16] [20] [21] [22]. The delimiters for the start and stop of the movement are added automatically in the sequence.

Feature Extraction and a Feature Recognition Platform using Multiresolution Wavelet Transform

3.1.2 Wavelet

The applications of wavelets extend to several areas, such as signal processing, time-series analysis, meteorology, image filtering and compression, and pattern recognition. The technique is based on the use of wavelets as basis functions for representing other functions. These basis functions are well localized in both the time and frequency domains. Multi-resolution analysis is achieved by using the mother wavelet and a family of wavelets generated by translations and dilations of it. A wide basis function can examine a large region of the signal and resolve the low-frequency details accurately, while a short basis function can examine a small region of the signal to resolve the time details accurately [23] [24]. If $\Psi(x)$ represents the mother wavelet, scaling is accomplished by multiplying $x$ by some scaling factor. If the scaling factor is a power of 2, yielding $\Psi(2^m x)$ where $m$ is an integer, we get the cascaded octave band-pass filter structure. The wavelet function $\Psi$ is translated along the time axis in order to cover an entire signal. This translation is accomplished by considering all the integral shifts of $\Psi$:

$$\Psi(2^m x - n), \quad n \in \mathbb{Z}$$

Putting this together gives the wavelet decomposition of the signal in terms of the basis functions

$$\Psi_{mn}(x) = 2^{m/2}\, \Psi(2^m x - n)$$

where $c_{mn}$ are the transform coefficients. These coefficients are computed by the wavelet transform, which is the inner product of the signal $f(x)$ with the basis functions $\Psi_{mn}(x)$. For classification there is no need to compute the inverse transform, since the original signal does not have to be reconstructed. In computer vision, it is difficult to analyse the information content of an image directly from the grey-level intensity of its pixels [24]. The approximation coefficients of the wavelet transform of an image can provide denoising and scale and rotation invariance [25]. This is because the low-frequency components, which are spread out in the time domain, can be treated as a global property, while the high-frequency components, which are concentrated in the time domain, can be discarded. The multi-resolution capability of wavelets also makes it possible to examine the signal at various scales and reduces the amount of data. This paper reports the use of the wavelet coefficients of the THT to extract the features required for classifying the THT.
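For reference, the decomposition and the coefficients described in words in this section are usually written as below. This is the standard orthonormal wavelet series for a real-valued signal, given here as an assumption about the intended form rather than as an equation taken from the paper; the symbols $f$, $c_{mn}$ and $\Psi_{mn}$ follow the text.

$$f(x) = \sum_{m \in \mathbb{Z}} \sum_{n \in \mathbb{Z}} c_{mn}\, \Psi_{mn}(x), \qquad c_{mn} = \langle f, \Psi_{mn} \rangle = \int_{-\infty}^{\infty} f(x)\, \Psi_{mn}(x)\, dx$$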

3.1.3 Discrete Wavelet Transform

The classical DWT suffers a drawback, that it is unable to restore the translation in-

variance properties of the image. The idea is to restore translational invariance prop-

erties of the image by defining a slightly different, DWT, called the Stationary Wave-

let Transform, SWT. In classical DWT the computational step is that each decompo-

sition of the original image generates four sub images. In the following level, the

approximated image, which is the low pass sub image, is, decomposed. Iterative de-

composition of the approximated images forms a pyramidal wavelet transform. In the

pyramidal wavelet transform, the filtered versions of each sub image are down sam-

pled by a factor of two: this is also called as dyadic transform. The SWT algorithm is

very simple and is close to the DWT. More precisely, for level 1, the DWT for a

given image can be obtained by convolving the signal with the pair of low pass filter

(H) and a high pass filter (G) and then down sampling by 2 along both rows and col-

umns. SWT is similar to DWT and can be obtained by convolving the image with

pair of low pass filter (H) and a high pass filter (G) but without down sampling along

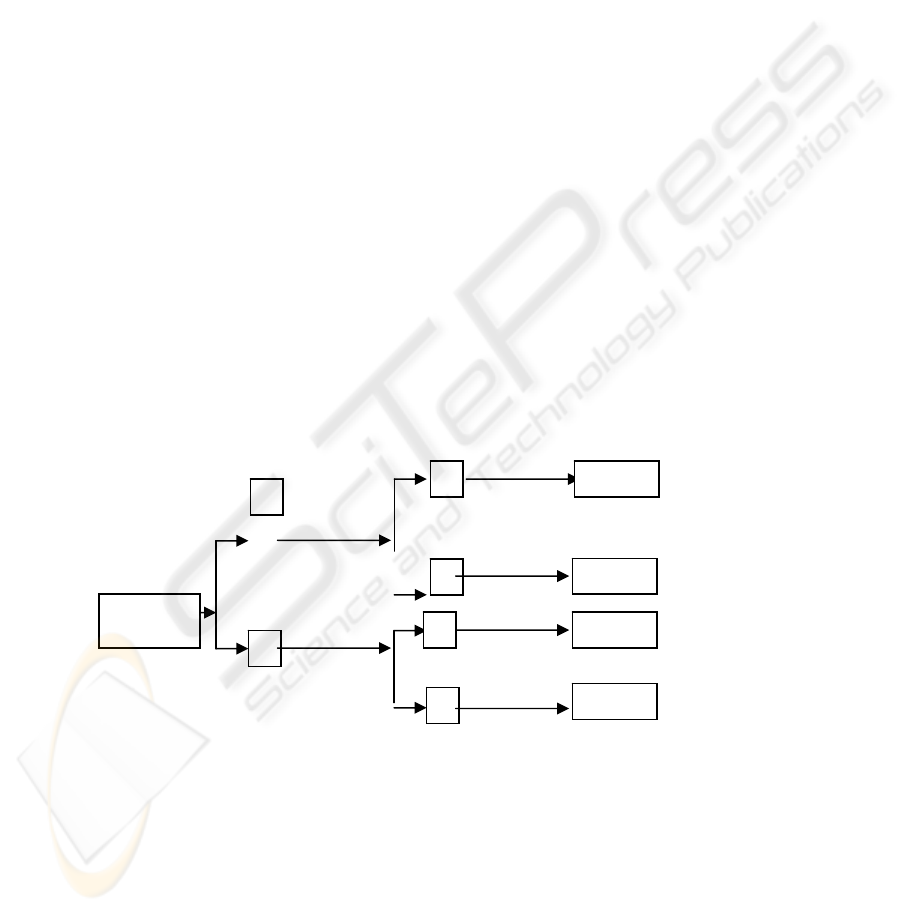

rows and columns [26]. In these experiments SWT (Figure 1) has been used resulting

in the decomposed image being of the same size after decomposition ensuring the

translational invariance.

Figure 1: Two-Dimensional Stationary Wavelet Transform of THT

• $f_{ll}$ sub-image: Both horizontal and vertical directions have low frequencies.
• $f_{lh}$ sub-image: The horizontal direction has low frequencies and the vertical one has high frequencies.
• $f_{hl}$ sub-image: The horizontal direction has high frequencies and the vertical one has low frequencies.
• $f_{hh}$ sub-image: Both horizontal and vertical directions have high frequencies.
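A level-1 undecimated decomposition into these four sub-images can be sketched as follows. This is a dependency-free illustration, not the implementation used in the experiments: it assumes the two-tap Haar (‘db1’) filter pair, circular (periodic) boundary handling and one particular sign convention for the high-pass filter, all of which are assumptions. The function names are hypothetical.

```python
import math

S = 1.0 / math.sqrt(2.0)  # magnitude of both Haar (db1) filter taps


def _filter_rows(img, high_pass):
    """Circularly filter each row (horizontal direction), no down-sampling."""
    sign = -1.0 if high_pass else 1.0
    cols = len(img[0])
    return [[S * (row[x] + sign * row[(x + 1) % cols]) for x in range(cols)]
            for row in img]


def _transpose(img):
    return [list(col) for col in zip(*img)]


def _filter_cols(img, high_pass):
    """Circularly filter each column (vertical direction), no down-sampling."""
    return _transpose(_filter_rows(_transpose(img), high_pass))


def swt2_haar_level1(img):
    """Return (f_ll, f_lh, f_hl, f_hh), each the same size as img."""
    low_h = _filter_rows(img, high_pass=False)
    high_h = _filter_rows(img, high_pass=True)
    f_ll = _filter_cols(low_h, high_pass=False)
    f_lh = _filter_cols(low_h, high_pass=True)    # horizontal low, vertical high
    f_hl = _filter_cols(high_h, high_pass=False)  # horizontal high, vertical low
    f_hh = _filter_cols(high_h, high_pass=True)
    return f_ll, f_lh, f_hl, f_hh


# Made-up 4x4 template standing in for a THT.
tht = [[0, 0, 0, 0],
       [0, 1, 2, 0],
       [0, 0, 3, 0],
       [0, 0, 0, 0]]
f_ll, f_lh, f_hl, f_hh = swt2_haar_level1(tht)
feature = [v for row in f_ll for v in row]  # f_ll flattened into one vector
print(len(f_ll), len(f_ll[0]), len(feature))  # 4 4 16
```

Because nothing is down-sampled, every sub-image keeps the size of the input, which is the property the SWT is chosen for here.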


The wavelet used in these experiments is ‘db1’, implemented following the multiresolution scheme [24]. As the THT contains grey-level data integrated over time, analysis at the multiresolution level is expected to give good classification results. The two-dimensional (2-D) SWT of a grey-scale image corresponds to multiresolution approximation expressions [23]. This work uses the 2-D SWT to propose a pattern recognition solution for hand identification. Wavelet transforms using ‘db1’ are the simplest to implement and computationally the least demanding, provide high spatial localization, and are orthonormal [23]. For these reasons, the ‘db1’ wavelet transform was chosen to extract local intensity distribution information from the THT. The 2-D SWT is applied to the THT, which results in four sub-images: an average image ($f_{ll}$) and three detail images ($f_{lh}$, $f_{hl}$, $f_{hh}$). The average image ($f_{ll}$) is concatenated into a single column vector representing a person's biometric model; the remaining three detail images ($f_{lh}$, $f_{hl}$, $f_{hh}$) are discarded.

3.2 Identification Technique

3.2.1 Mahalanobis Distance

The Mahalanobis distance is a useful way of determining the similarity of a set of values from an unknown sample to a set of values measured from a collection of known samples. It is computed by the equation below:

$$r^2 \equiv (f - k_x)' \, C^{-1} \, (f - k_x)$$

where $r$ is the Mahalanobis distance from the feature vector $f$ to the mean vector $k_x$, and $C$ is the covariance matrix for $f$.

Let $k_1, k_2, \ldots, k_n$ be the means (templates) for the $n$ classes, and $C_1, C_2, \ldots, C_n$ the corresponding covariance matrices. A feature vector $f$ is classified by measuring the Mahalanobis distance from $f$ to each of the means, and assigning $f$ to the class for which the Mahalanobis distance is minimum.
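The minimum-distance rule can be sketched without external libraries as shown below. This is an illustration under stated assumptions, not the authors' code: the class means and covariance matrices are taken as given, the helper names (`solve`, `mahalanobis_sq`, `classify`) are hypothetical, and the linear system is solved by plain Gaussian elimination instead of forming $C^{-1}$ explicitly.

```python
def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def mahalanobis_sq(f, mean, cov):
    """r^2 = (f - k)' C^-1 (f - k)."""
    d = [fi - ki for fi, ki in zip(f, mean)]
    return sum(di * yi for di, yi in zip(d, solve(cov, d)))


def classify(f, means, covs):
    """Index of the class whose template is nearest in Mahalanobis distance."""
    dists = [mahalanobis_sq(f, k, c) for k, c in zip(means, covs)]
    return min(range(len(dists)), key=dists.__getitem__)


# Two made-up 2-D classes.
means = [[0.0, 0.0], [4.0, 4.0]]
covs = [[[1.0, 0.0], [0.0, 1.0]], [[4.0, 0.0], [0.0, 4.0]]]
print(classify([1.0, 1.0], means, covs))  # 0
```

For feature vectors as long as a flattened $f_{ll}$ image, a covariance matrix estimated from a few dozen samples is generally singular; the paper does not say how this was handled, so the sketch simply raises an error in that case.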

3.3 Identification and Recognition Performance

The main goal of this research is to test identification based on the hand movements of individuals, so accuracy is taken as the criterion for performance analysis. Identification requires the subject being identified to lay claim to an identity, so that the method can decide either to accept the subject as the enrolled subject or to reject the subject. As with any security system, given that the subject is, or is not, a true instance of the enrolled subject, there are four possible outcomes [1]. The accuracy of any biometric method is generally judged by the rates of these four outcomes:

• Acceptance of Authentic Enrolled Subject (AA), or Genuine Accept Rate (GAR)
• Acceptance of Imposter Subject (IA), or False Accept Rate (FAR)
• Rejection of Authentic Subject (RA), or False Reject Rate (FRR)
• Rejection of Imposter Subject (RI), or Genuine Reject Rate (GRR)

The accuracy requirements of a biometric system depend greatly on the application. In forensic applications, such as criminal identification, the FRR (and not the FAR) is the critical design issue, because we do not want to miss a criminal even at the risk of manually examining a large number of potentially incorrect matches that the biometric system identifies. In a highly secure access-control application, where the primary objective is to prevent impostors (e.g., at airports), the FAR may be one of the most important factors. Many civilian applications have performance requirements that lie between these two limits of FAR and FRR. In high-risk applications such as bank ATM card verification, for example, a false match would mean the loss of several hundred dollars, while a high FRR might lead to the loss of a valued customer. Our main goal is to test the identification accuracy of the THT-based method for authentication, so the first and the fourth rates are used to test the efficacy of the method. Accordingly, AAES (%) and ARI (%) are computed:

$$\text{AAES (\%)} = 100 \times \frac{\text{number of times an enrolled subject is correctly identified}}{\text{total number of enrolled-subject attempts}}$$

$$\text{ARI (\%)} = 100 \times \frac{\text{number of times an imposter is correctly rejected}}{\text{total number of imposter attempts}}$$
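As a small worked example of these two rates, the sketch below computes them from lists of accept/reject decisions. The function name and the decision counts are made up for illustration and are not results from the paper.

```python
def accuracy_rates(genuine_decisions, imposter_decisions):
    """Return (AAES %, ARI %) from accept (True) / reject (False) decisions.

    genuine_decisions: one decision per attempt by the enrolled subject.
    imposter_decisions: one decision per attempt by an imposter.
    """
    aaes = 100.0 * sum(1 for d in genuine_decisions if d) / len(genuine_decisions)
    ari = 100.0 * sum(1 for d in imposter_decisions if not d) / len(imposter_decisions)
    return aaes, ari


# Hypothetical outcome: 27 of 30 genuine attempts accepted,
# 108 of 120 imposter attempts rejected.
genuine = [True] * 27 + [False] * 3
imposter = [False] * 108 + [True] * 12
print(accuracy_rates(genuine, imposter))  # (90.0, 90.0)
```

With these definitions the two error rates follow directly: FRR = 100 − AAES and FAR = 100 − ARI.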

4 Method

The method of person identification is logically divided into two separate modules: an enrolment (or training) module and a recognition (or testing) module. In the first step, video sequences of hand movements from the different individuals are captured and stored, and the THTs are computed from these sequences and stored. Both the enrolment and the recognition modules make use of a feature extraction sub-module, which converts the THTs into a set of features (the $f_{ll}$ images of the THTs) that are very distinctive to the hand that performs the movement. The enrolment module is responsible for enrolling new individuals in the system database. During the enrolment phase, the individual supplies a number of samples of his/her hand movement, and a model of the individual is built from the features extracted from these samples. During the recognition phase, the individual supplies a test sample of his/her hand movement, and a measure of similarity is computed between the features of the test hand movement and the available model to establish the identity of the individual, using the k-nearest neighbour (KNN) approach with the Mahalanobis distance. The efficacy of the technique is determined by computing the Accuracy of Accepting an Enrolled Subject, AAES (%), and the Accuracy of Rejecting an Imposter, ARI (%).

4.1 Experimental Settings

To test the efficacy of the method and the performance of identification, AAES (%) and ARI (%) were computed experimentally. The experiments were conducted to check whether the THT-based method is subject dependent or gesture dependent.

4.1.1 Experiments for Subject Dependency

To check the method with respect to the subject, every subject was assigned the same hand movement, “moving all fingers clockwise”. Each subject repeated the movement 50 times, giving a total of 5 × 50 = 250 video sequences of the common movement from 5 different individuals. The THT of each video sequence was computed, and features were computed from the ($f_{ll}$) images of the THTs. During the training of the K-NN classifier, the first 20 samples of the subject's reference samples and 4 × 30 = 120 imposter samples from the 4 other subjects were used to set the person's biometric model by setting the Mahalanobis thresholds, while the last 30 samples of ($f_{ll}$) images of THT per subject were used for testing to compute AAES (%). The other 30 samples from each of the other 4 subjects were used as the imposter samples to compute the ARI (%). A subject whose ($f_{ll}$) images of the THT representation are within this threshold is identified as the genuine subject, and otherwise as an impostor. For each subject, the classifier is trained to recognise that subject's 20 reference samples whilst at the same time recognising that the samples of the other 4 subjects (4 × 30 = 120) are not from the same subject. To facilitate this, the desired output of the Mahalanobis classifier for the target subject was set to ‘1’, whilst the desired output for the other 4 impostors was set to ‘0’. This process is repeated with each subject acting as the target and the other 4 subjects as the impostors. To check the efficacy of the approach, the Accuracy of Accepting an Enrolled Subject (AAES %) and the Accuracy of Rejecting an Imposter (ARI %) were calculated and tabulated.
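The threshold-based accept/reject protocol can be illustrated as below. The paper does not state how the Mahalanobis threshold is chosen, so the midpoint rule in `choose_threshold` is purely a hypothetical stand-in, and all distances are made-up numbers representing Mahalanobis distances to one target subject's template.

```python
def choose_threshold(genuine_dists, imposter_dists):
    """Hypothetical rule (not from the paper): midpoint between the largest
    genuine distance and the smallest imposter distance seen in training."""
    return 0.5 * (max(genuine_dists) + min(imposter_dists))


def evaluate(threshold, genuine_test, imposter_test):
    """Accept a sample when its distance is within the threshold and
    return (AAES %, ARI %) for the test distances."""
    accepted_genuine = sum(1 for d in genuine_test if d <= threshold)
    rejected_imposter = sum(1 for d in imposter_test if d > threshold)
    aaes = 100.0 * accepted_genuine / len(genuine_test)
    ari = 100.0 * rejected_imposter / len(imposter_test)
    return aaes, ari


# Training distances for one target subject (made-up values).
enrol_genuine = [1.0, 1.4, 2.0]
enrol_imposter = [4.0, 5.5, 6.0]
threshold = choose_threshold(enrol_genuine, enrol_imposter)  # 3.0

# Held-out test distances (made-up values).
test_genuine = [1.2, 2.8, 3.5, 1.9]
test_imposter = [2.5, 4.1, 5.0, 6.2]
print(evaluate(threshold, test_genuine, test_imposter))  # (75.0, 75.0)
```

Repeating this with each subject in turn as the target, as the text describes, gives one AAES and one ARI figure per subject.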

Figure 2: Hand Movement Assigned to all Subjects “All Finger Moving Clockwise”

4.1.2 Experiments for Gesture Dependency

To test the method with respect to the gesture, each subject was assigned a different, subject-specific movement, as described in Figure 2. Each subject repeated the movement 50 times, giving a total of 5 × 50 = 250 video sequences of subject-specific movements from 5 different individuals. The THT of each video sequence was computed, and features were computed from the ($f_{ll}$) images of the THTs. During the enrolment process each subject is assigned a unique identifier according to the subject-specific movement; e.g., the subject assigned the movement left has the unique identifier “LEFT”. A subject whose ($f_{ll}$) images of the THT representation are within the threshold is identified as the genuine subject, and otherwise as an impostor. The testing is repeated as explained in 4.1.1. To check the efficacy of the approach, the Accuracy of Accepting an Enrolled Subject, AAES (%), and the Accuracy of Rejecting an Imposter, ARI (%), were calculated and tabulated.

[Figure 2 panels: First Frame, Intermediate Frame, End Frame, THT; all subjects with the common hand movement “All Finger Moving Clockwise”.]

5 Results and discussion

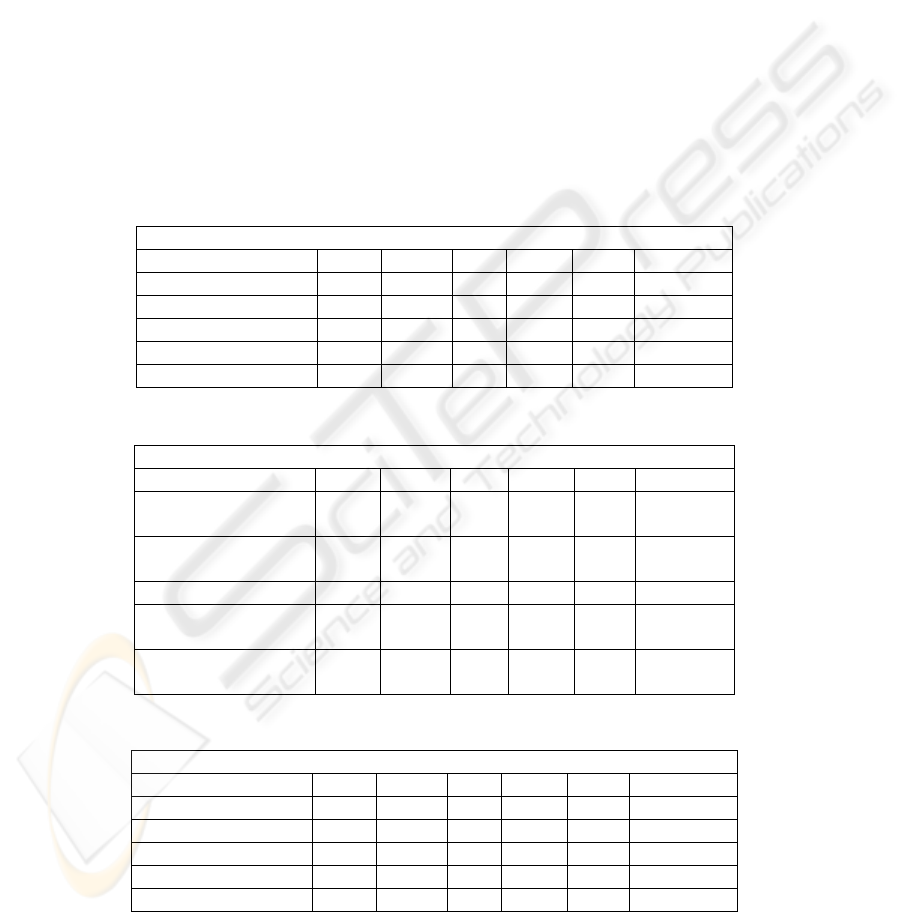

Table 1-4 describes the results of achieved AAES% and ARI % by the use of subject

specific hand movements and common hand movements, over a 5-subject population.

The test results indicate that the THT based person identification is movement de-

pendent. The row indicates the average accuracies corresponding to different sub-

jects. The test results indicate that the THT based method is dependent to the subject

specific movement. The good identification results are attributed to the (f

ll

) images of

THT as features and its better discriminating ability for identification. The use of (f

ll

)

images of THT has the advantage of computationally less expensive. Test result indi-

cates that the THT based method is gesture dependent method.

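Table 1: AAES (%) with subject-specific movements

| Subject identifier | MTTF | RIGHT | FIST | HOLD | AFPR | Average |
|---|---|---|---|---|---|---|
| MTTF | 93 | 0 | 0 | 0 | 0 | 93 % |
| RIGHT | 0 | 90 | 0 | 0 | 0 | 90 % |
| FIST | 0 | 0 | 94 | 0 | 0 | 94 % |
| HOLD | 0 | 0 | 0 | 95 | 0 | 95 % |
| AFPR | 0 | 0 | 0 | 0 | 96 | 96 % |

Table 2: ARI (%) with subject-specific movements

| Subject identifier | MTTF | RIGHT | FIST | HOLD | AFPR | Average |
|---|---|---|---|---|---|---|
| MTTF | – | 89.00 | 90.00 | 95.00 | 96.00 | 92.50 % |
| RIGHT | 89.00 | – | 92.00 | 93.00 | 92.00 | 92.33 % |
| FIST | 90.00 | 86.00 | – | 85.00 | 90.50 | 87.17 % |
| HOLD | 96.00 | 87.00 | 86.00 | – | 94.00 | 89.00 % |
| AFPR | 90.00 | 95.00 | 96.00 | 92.00 | – | 94.33 % |

Table 3: AAES (%) with common movements

| Subject identifier | MTTF | RIGHT | FIST | HOLD | AFPR | Average |
|---|---|---|---|---|---|---|
| MTTF | 62 | 0 | 0 | 0 | 0 | 62 % |
| RIGHT | 0 | 66 | 0 | 0 | 0 | 66 % |
| FIST | 0 | 0 | 59 | 0 | 0 | 59 % |
| HOLD | 0 | 0 | 0 | 58 | 0 | 58 % |
| AFPR | 0 | 0 | 0 | 0 | 69 | 69 % |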

139

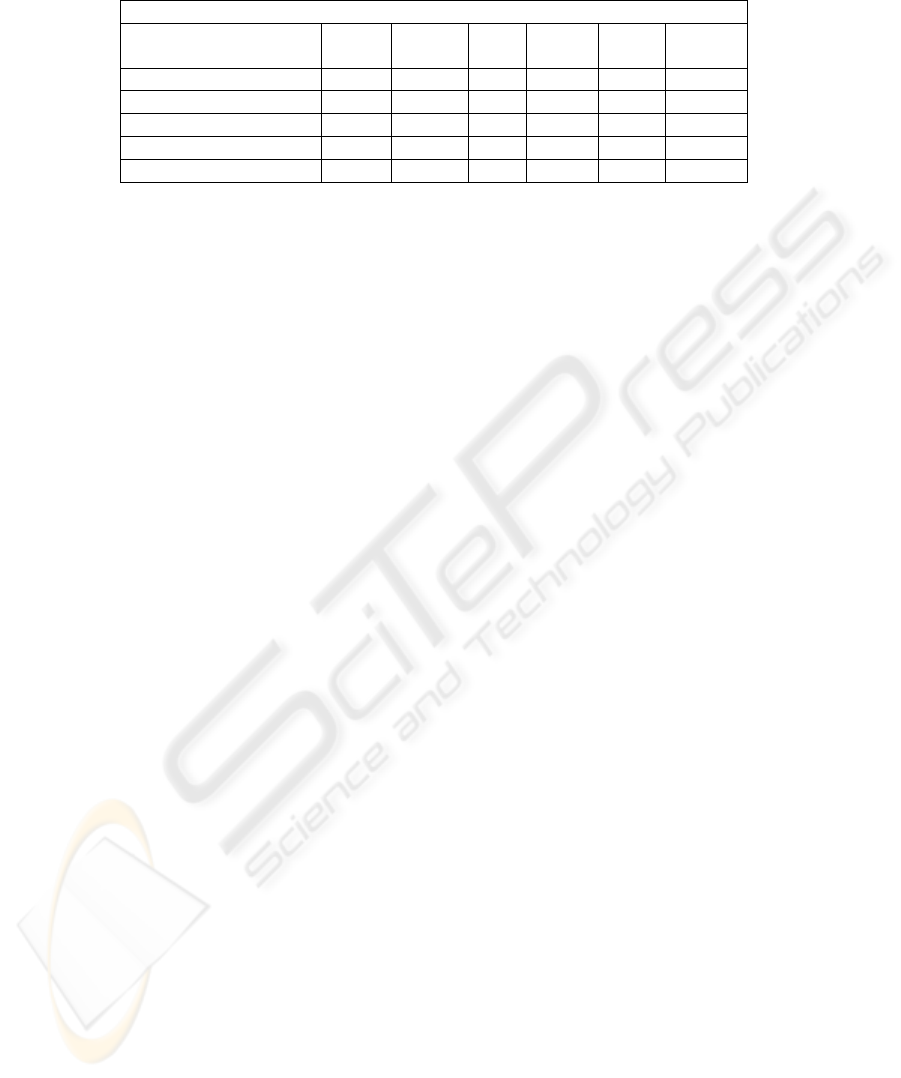

Table 4

6 Conclusion

This paper has researched a new method of person identification by the use of hand

movements as a new biometric measurement. The low level representation of the

action collapses the temporal structure of the motion from the video sequences of the

hand movements while removing any static content from the video sequences to gen-

erate temporal history templates THTs of the hand movement. THTs of different

individuals present distinctive 2-D motion patterns where each pixel describes the

function of temporal history of motion in that sequence. The scale, translation and

rotation invariant features have been used for discrimination of the THT for identifi-

cation. On the basis of the experimental results it can be concluded that the THT

based method can be used for biometric identification with proper caution and meth-

odology. On the basis of the preliminary experimental results it can be concluded

that: The THT based method can be successfully used for identification when subject

uses subject specific movements. The next step is to test the accuracy on large data-

base and its sensitivity analysis.

References

1. Prabhakar, S., Sharathpankanti. and A.K. Jain, "Biometric Recognition: Security and Pri-

vacy Concerns". IEEE Security and Privacy, 2003.

2. George Panotopoulos, D.P., "Hand Gesture Biometrics". Caltech Centre for Neuromorphic

Systems Engineering, 2001.

3. Nixon, M.S., et al., "Automatic Gait Recogntion". Biometrics: Personal Identification in

Networked society, 1999: p. 231-250.

4. Huang, P.S., C.J. Harris, and M.S. Nixon, "Human gait recognition in canonical space

using temporal templates". VISP, April 1999.

5. Huang, P.S., C.J. Harris, and M.S. Nixon., "Recognising humans by gait via parametric

canonical space". Artificial Intelligence in Engineering, 1999. 13: p. 359-366.

6. Little, J.J.a.J.E.B., "Recognising People by their Gait: The shape of the motion". Videre:

Journal of Computer Vision Research,, 1998. 1: p. 2-32.

7. Shutler, J.D., M.S. Nixon, and C.J. Harris, "Statistical Gait Recognition via Velocity Mo-

ments". Institute of Electrical Engineers, Savoy Place, London, 2000.

8. Niyuogi, S.A.a.E.H.A., "Analysis and Recognizing walking figures in XYT ". Proc. IEEE

conference on Computer Vision and Pattern Recognition, June 1994.

ARI (%) WITH COMMON MOVEMENTS

SUBJECT IDENTIFIER MTTF RIGHT FIST HOLD AFPR Aver-

age

MTTF 58 52 54 62 56.5 %

RIGHT 51 51 52 50 51 %%

FIST 62 51 56 54 53.66 %

HOLD 56 59 52 58 56.33 %

AFPR 50 65 55 63 61 %

140

9. Cunado, D., M.S. Nixon, and J.N. Carter, "Using Gait as a Biometric, via Phase-weighted Magnitude Spectra". First International Conference, AVBPA, Crans Montana, Switzerland, March 1997.

10. Davis, J., "Recognizing movement using motion histograms". MIT Media Lab Technical Report #487, March 1999.

11. Davis, J. and A. Bobick, "A robust human-silhouette extraction technique for interactive virtual environments". Proc. Modelling and Motion Capture Techniques for Virtual Environments, 1998.

12. Davis, J., "Representing and Recognizing Human Motion: From Motion Templates To Movement Categories". International Conference On Intelligent Robots and Systems, Maui, Hawaii, 2001.

13. Davis, J. and A. Bobick, "The representation and recognition of human movement using temporal templates". Proceedings of Computer Vision and Pattern Recognition, June 1997.

14. Davis, J. and A. Bobick, "Virtual PAT: a virtual personal aerobics trainer". Proc. Perceptual User Interfaces, November 1998.

15. Bobick, A.F. and J. Davis, "The recognition of Human Movements Using Temporal Templates". IEEE Pattern Analysis and Machine Intelligence, 2001. 23(3): p. 257-267.

16. Arun Sharma, Dinesh K. Kumar, Sanjay Kumar, Neil McLachalan, "Representation and Classification of Human Movement Using Temporal Templates and Statistical Measure of Similarity". Workshop On Internet Telecommunications and Signal Processing (WITSP'2002), Wollongong, Sydney, Australia, 2002.

17. Starner, T. and A. Pentland, "Visual Recognition of American Sign Language Using Hidden Markov Models". Proc. Intl Workshop on Automated Face and Gesture Recognition, Zurich, 1995.

18. Essa, I. and A. Pentland, "Coding, Analysis, Interpretation, and Recognition of Facial Expressions". IEEE Trans. Pattern Analysis and Machine Intelligence, July 1997. 19(7): p. 757-763.

19. Little, J. and J. Boyd, "Describing motion for recognition". International Symposium on Computer Vision, November 1995: p. 235-240.

20. Sanjay Kumar, Arun Sharma, Dinesh K. Kumar, Neil McLachalan, "Classification Of Visual Hand Gestures For HCI". ACIVS 2002, Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, 9-11 Sept 2002, Ghent, Belgium.

21. Sanjay Kumar, Arun Sharma, Dinesh Kant Kumar, Neil McLachlan, "Classification of Visual Hand Gestures Using Difference of Frames". Proc. of the Int. Conf. on Imaging Science and Technology (CISST'02), Las Vegas, Nevada, USA, 2002 (CSREA Press, 2002).

22. Sanjay Kumar, Dinesh K. Kumar, Arun Sharma, Neil McLachalan, "Visual Hand Gestures Classification Using Wavelets Transform". International Journal of Wavelets and Multiresolution Information Processing (IJWMP), December 2003. 1(4): p. 373-392.

23. Daubechies, I., "Orthonormal bases of compactly supported wavelets". Communications on Pure and Applied Mathematics, 1988. XLI: p. 909-996.

24. Mallat, S., "A Theory for Multiresolution Signal Decomposition: The Wavelet Representation". IEEE Trans. on Pattern Analysis and Machine Intelligence, July 1989. 11: p. 674-693.

25. Sarlashkar, A.N., M. Bodruzzaman, and M.J. Malkani, "Feature Extraction Using Wavelet Transform for neural network based image classification". Proceedings of the Thirtieth Southeastern Symposium on System Theory, Morgantown, WV, USA, 1998.

26. Chumsamrong, W., P. Thitimajshima, and Y. Rangsanseri, "Wavelet-based texture analysis for SAR image classification". Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS'99), Hamburg, Germany, 1999.