PERSON FOLLOWING BEHAVIOR GENERATED WITH JDE SCHEMA HIERARCHY∗

Roberto Calvo, José M. Cañas

Grupo de Robótica

Universidad Rey Juan Carlos

Lía García-Pérez

Escuela Politécnica Superior

Universidad Carlos III

Keywords:

Robot behavior, autonomy, person tracking and pursuit.

Abstract:

One useful capability for service robots is person following. Service robots, like other autonomous robots, are expected to exhibit a full range of different behaviors, and a control architecture is required to integrate them. This paper describes the design and implementation of the person following behavior within the JDE architecture, as two groups of concurrent schemas. The robot follows a person wearing a coloured shirt, actively searches for her when she is lost in the image, and safely avoids obstacles. Successful experiments with a real robot are also described, which validate JDE for this vision-based real-time behavior.

1 INTRODUCTION

Robots are entering homes. The Aibo robot from Sony, sold as a pet, and the Roomba autonomous vacuum cleaner from iRobot are two real examples, both best sellers. Service robots should exhibit a whole set of different behaviors and deploy them according to the situation and goals. Their control architectures are responsible for this ability.

Person following is part of a natural human-robot interface for service robots. This paper addresses the generation of such a behavior with a hierarchical control architecture based on schemas. This application has already been solved with real robots (Kleinehagenbrock et al., 2002; Fritsch et al., 2004; Schlegel et al., 1998; Sidenbladh et al., 1999). The added value of this paper is its implementation within a perception-control architecture named JDE (Cañas and Matellán, 2002), which is designed to ease the integration of other behaviors into the same system. Such a vision-based, soft real-time behavior poses a challenge and is a good test for the JDE architecture.

Our solution uses shirt color as the person's main feature. It does not perform any face recognition because, when the robot is following a person, it mainly sees her shoulder. The robot must follow the tracked person while avoiding collisions with her or with other obstacles.

∗ This work has been funded by the Spanish Ministerio de Ciencia y Tecnología, under the project DPI2004-07993-C03-01.

The second section of this paper briefly details the design and implementation of the behavior. Experiments with a real robot are shown in the third section. Finally, the conclusions of the work are presented.

2 PERSON FOLLOWING DESIGN WITH JDE-SCHEMAS

The behavior is oriented to a robot endowed with

monocular vision over a pantilt unit, which can guide

the camera at will in any orientation. The general de-

sign of this behavior includes two groups of schemas

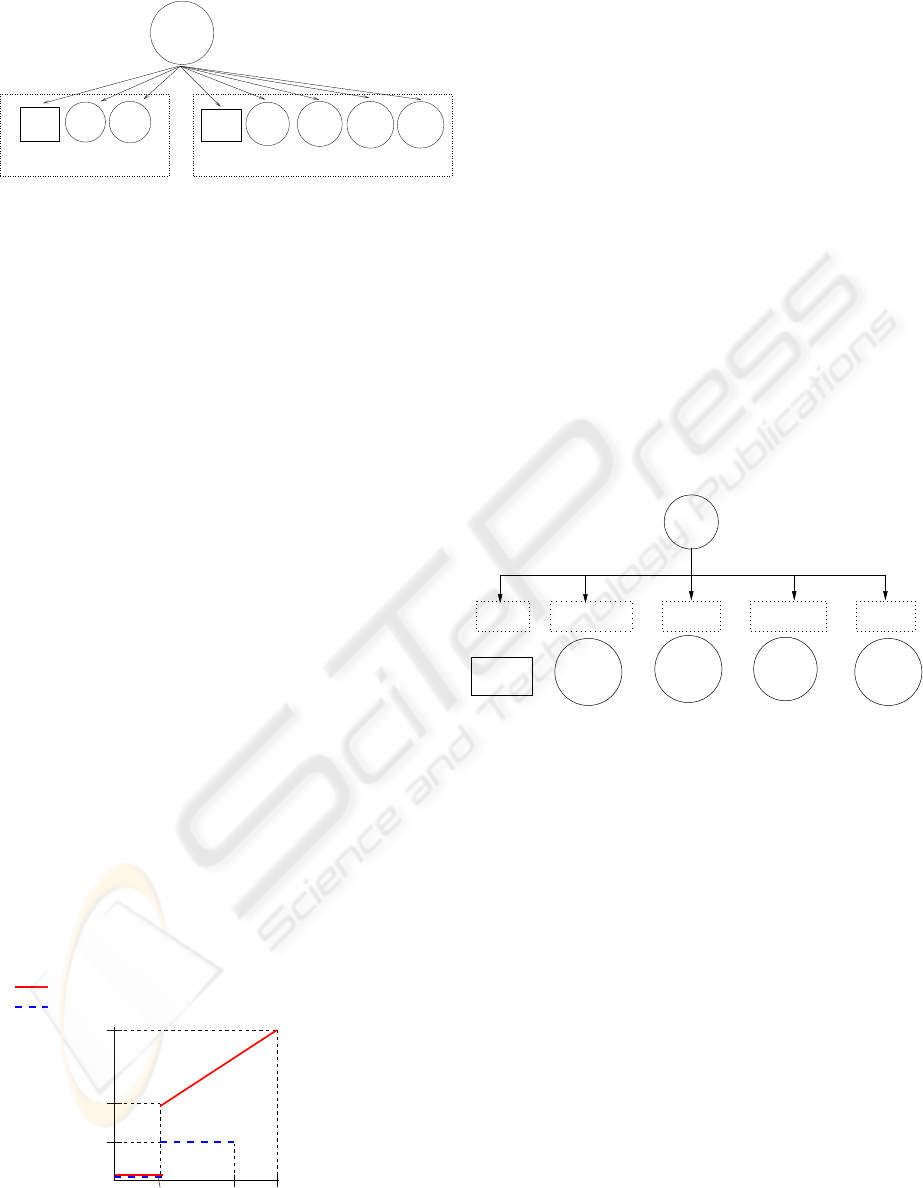

that run concurrently, as can be seen in figure 1. The

first group reactively controls the movement of the

pantilt unit that guide the camera to visually follow

the person, keeping her at the center of the image by

means of fast saccadic movements. The second group

reactively controls the robot’s base to align it with the

pantilt unit.

Visual tracking schemas

The visual tracking is performed with three schemas: color-filter, center and search. The camera data are processed by the color-filter schema, which determines the presence and position of the person in the images. If the target person appears inside the frame, then the center schema moves the pantilt to keep the person centered. If she does not appear, then the search schema moves the pantilt around to look for the lost target.

Figure 1: JDE-schemas for person following behavior (FollowPerson parent schema; pantilt control group: color filter, Center, Search; base control group: Forces, Stop, VFF, Avoid-Obstacles, Base Tracking)

Calvo R., M. Cañas J. and García-Pérez L. (2005).

PERSON FOLLOWING BEHAVIOR GENERATED WITH JDE SCHEMA HIERARCHY.

In Proceedings of the Second International Conference on Informatics in Control, Automation and Robotics - Robotics and Automation, pages 463-466

DOI: 10.5220/0001186104630466

Copyright © SciTePress

The perceptive schema color-filter detects the presence of the person in the camera images using an HSI color filter. It is always in ACTIVE state. Its inputs are the camera images. As output it builds three dynamic stimuli, which are used by center and search in their control decisions and in the Action Selection between center and search. First, a boolean variable (presence) indicates the presence or absence of the person in the frame: if the number of pixels passing the color filter exceeds a threshold, then presence is set to true. Second, the location of the person in the image (position variable) is computed as the center of mass of all the pixels passing the color filter. Third, the side variable stores whether the target appears in the left or right side of the frame.
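The three stimuli built by color-filter can be illustrated with a minimal sketch. It is not the JDE implementation: the function names (color_filter, is_green), the pixel layout, the hue and saturation bounds, and the min_pixels parameter are all assumptions made for illustration only.

```python
from typing import Callable, List, Optional, Tuple

# Assumed pixel layout: (hue in degrees, saturation 0..1, intensity 0..1).
Pixel = Tuple[float, float, float]


def color_filter(
    image: List[List[Pixel]],
    matches: Callable[[Pixel], bool],
    min_pixels: int,
) -> Tuple[bool, Optional[Tuple[float, float]], Optional[str]]:
    """Return the (presence, position, side) stimuli for one frame."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if matches(pixel):
                xs.append(x)
                ys.append(y)
    # presence is true only if the matching pixel count exceeds the threshold
    if len(xs) <= min_pixels:
        return False, None, None
    # position is the center of mass of the matching pixels
    cx = sum(xs) / len(xs)
    cy = sum(ys) / len(ys)
    # side records which half of the frame holds the target
    width = len(image[0])
    side = "left" if cx < width / 2 else "right"
    return True, (cx, cy), side


def is_green(pixel: Pixel) -> bool:
    """Hypothetical filter for a green shirt; the bounds are illustrative."""
    hue, saturation, _intensity = pixel
    return 90.0 <= hue <= 150.0 and saturation > 0.3
```

In this sketch the function returns no side when the person is absent, so a caller that needs the last known side (as search does) would have to keep the previous value itself.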

The center schema is an actuation schema that moves the pantilt in order to keep the target person close to the center of the camera images. It accepts position as input, and its outputs are the pantilt commands. The preconditions of this schema hold whenever the presence variable is true. It follows the feedback control shown in figure 2: proportional for the pan axis, constant for the tilt axis, and with a dead band to avoid jitter. For instance, if the target appears in the right side of the image, the pantilt will rotate to the right.

Figure 2: Proportional control in center schema (pan speed and tilt speed, in degrees/s, versus distance in pixels to the image center)
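The control law of the center schema (proportional pan, constant tilt, dead band) can be sketched as follows. The function name, the sign convention and every numeric value (dead band width, gain, speed limits) are assumptions for illustration; they are not the values plotted in figure 2.

```python
def center_command(dx, dy, dead_band=10.0, pan_gain=0.5,
                   max_pan=100.0, tilt_speed=20.0):
    """Map the target offset from the image center to pantilt speeds.

    dx, dy: offset in pixels (assumed positive to the right and downwards).
    Returns (pan_speed, tilt_speed) in degrees/s.
    """
    # Pan axis: proportional to the horizontal error, saturated at max_pan,
    # and zero inside the dead band to avoid jitter.
    pan = 0.0
    if abs(dx) > dead_band:
        pan = max(-max_pan, min(max_pan, pan_gain * dx))
    # Tilt axis: constant speed in the direction of the vertical error,
    # also zero inside the dead band.
    tilt = 0.0
    if abs(dy) > dead_band:
        tilt = tilt_speed if dy > 0 else -tilt_speed
    return pan, tilt
```

With these assumed values, a target 40 pixels to the right of the center yields a pan speed of 20 degrees/s to the right and no tilt movement.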

The search schema is an actuation schema that moves the pantilt horizontally to look around, searching for the lost target person. Its outputs are the pantilt movement commands, and its preconditions hold whenever the presence variable is false, that is, when the robot has lost its target person. The search begins by turning the pantilt towards the last side (side) of the image where the target person was seen. If the person appeared in the right side before she was lost, the pan will rotate to the right. When it reaches the maximum angle, it rotates in the opposite direction.
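The sweep performed by search can be sketched as a small state update. The names, the 90 degree limit and the 5 degree step are hypothetical; the paper does not give the actual pan range or speed.

```python
def initial_direction(side):
    """Start the sweep towards the side where the target was last seen."""
    return 1 if side == "right" else -1


def search_step(pan_angle, direction, max_angle=90.0, step=5.0):
    """Advance the sweep by one step.

    direction is +1 (right) or -1 (left). Returns (new_angle, new_direction);
    the direction is reversed when the pan limit is reached.
    """
    new_angle = pan_angle + direction * step
    if new_angle >= max_angle:
        return max_angle, -1
    if new_angle <= -max_angle:
        return -max_angle, 1
    return new_angle, direction
```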

Base tracking schemas

When moving the robot body, both the target person and the obstacles must be taken into account. The behavior design with JDE-schemas is displayed in figure 3: with no obstacles around, the base-tracking schema is activated; otherwise the stop-robot, avoid-obstacles or vff schema is activated, depending on the distance to the obstacles.

Figure 3: Schemas for robot base movement (diagram labels: Follow Person, Forces, Stop Robot: lost or very near target, Avoid Obstacles: near obstacle, VFF: obstacle at medium distance, Base Tracking: far obstacle)

The robot navigation is based on repulsive forces from the obstacles and attractive forces towards the target. The forces schema builds and keeps such forces updated; they are the input stimuli for the actuation schemas. It is always in ACTIVE state. The obstacles are detected by reading the measurements of the sonar sensors. The relative angle between the target person and the robot's heading is measured indirectly from the current position of the pan motor.
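A possible way to build these stimuli is sketched below. The paper does not give the force expressions, so the linear weighting of the repulsion, the unit attractive force, the sonar representation and all parameter names are assumptions.

```python
import math


def compute_forces(sonar, pan_angle_deg, max_range=3000.0, attract=1.0):
    """Build repulsive, attractive and resultant forces in the robot frame.

    sonar: list of (bearing_deg, distance_mm) readings (assumed format).
    pan_angle_deg: pan position, used as the bearing of the target.
    Returns three (x, y) vectors: repulsive, attractive, resultant.
    """
    rx = ry = 0.0
    for bearing, distance in sonar:
        if distance >= max_range:
            continue  # readings beyond max_range exert no force
        weight = (max_range - distance) / max_range  # closer means stronger
        angle = math.radians(bearing)
        rx -= weight * math.cos(angle)  # push away from the obstacle
        ry -= weight * math.sin(angle)
    target = math.radians(pan_angle_deg)
    ax, ay = attract * math.cos(target), attract * math.sin(target)
    return (rx, ry), (ax, ay), (rx + ax, ry + ay)
```

The resultant vector is the kind of compromise direction that a force-based actuation schema could follow.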

The stop-robot schema stops the robot when the target has been lost or is too close to the robot. Its outputs are speed commands to the motors of the robot base. The presence of the person in the image is read from the aforementioned presence variable. To detect that the target is near, we count the rows in the image that contain pixels similar to the color of the target person. If this count exceeds a given threshold, then the target person is already very close to the camera. This technique is robust to different person orientations.
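The row-counting test can be sketched on a binary mask of filtered pixels. The function name and both thresholds are hypothetical, and requiring a minimum number of matching pixels per row is an added assumption to reject isolated noise.

```python
def target_is_near(mask, min_pixels_per_row, max_rows):
    """Decide whether the target is very close to the camera.

    mask: list of rows, each a list of booleans (True where the pixel
    passed the color filter). A row counts when it holds at least
    min_pixels_per_row matching pixels; the target is near when the
    number of such rows exceeds max_rows.
    """
    rows = sum(1 for row in mask if sum(row) >= min_pixels_per_row)
    return rows > max_rows
```

Counting rows measures the apparent height of the shirt, which changes little when the person turns, consistent with the robustness noted above.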

When there is an obstacle too close to the robot, the avoid-obstacles schema stops the robot and rotates it until it finds free space in front.

ICINCO 2005 - ROBOTICS AND AUTOMATION


Figure 4: Security region for VFF and avoid-obstacles schemas

The inputs of avoid-obstacles are the sonar readings and its outputs are the speed commands to the base motors. Its precondition is the presence of close obstacles, those inside the security region in figure 4.

When the closest obstacle around the robot is at medium distance, the vff schema leads the robot around the obstacle while advancing towards the target person. This actuation schema uses the Virtual Force Field algorithm (Borenstein and Koren, 1991) to choose a compromise between both tendencies. Its precondition is the presence of objects inside the security region in figure 4, which is wider than that of the avoid-obstacles schema.

When the target person is detected in the images and there is no obstacle in that direction, the base-tracking schema leads the robot towards the target person. The basic input of this schema is the position of the pantilt, read from the pantilt encoders: its angular deviation indicates the direction to take to follow the target. This schema implements a proportional control similar to that of the center schema, but on the base motors, which are slower than the pantilt ones. It also has a dead band, of 20 degrees.

Combination into global behavior

The concurrent execution of the described schemas provides the pursuit behavior of the robot. They are grouped together as children of the follow-person schema, which encapsulates the desired functionality in a schema interface. This schema is ultimately responsible for the whole pursuit behavior. In our experiments its activation comes from the human operator through the graphical interface of the application.

Following the design in figure 1, follow-person awakens eight children at the beginning, separated into two independent groups of schemas. All the schemas run concurrently, some of them in CHECKING state, some in READY state and one per group in ACTIVE state. There are continuous competitions inside each group to control the pantilt and the base motors, respectively. Only one winner is allowed per group. In the visual tracking group, the search and center schemas win alternately, depending on the presence of the target person in the image. In the base tracking group, ideally there is no obstacle around and the base-tracking schema is ACTIVE most of the time. There may be control overlaps, as the preconditions of the vff and avoid-obstacles schemas may be fulfilled simultaneously. As a precaution, avoid-obstacles has priority over vff.
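The outcome of both competitions can be summarized with a simplified sketch. It is not the JDE arbitration mechanism, which works through schema states and preconditions; the function names, the radii parameters and the relative priority given to stop-robot are assumptions. Only the priority of avoid-obstacles over vff is stated in the text.

```python
def select_pantilt(presence):
    """Winner of the visual tracking group."""
    return "center" if presence else "search"


def select_base(presence, target_near, nearest_obstacle,
                close_radius, medium_radius):
    """Winner of the base group (distances in the same unit).

    close_radius and medium_radius stand for the two security regions,
    with close_radius < medium_radius.
    """
    if not presence or target_near:
        return "stop-robot"       # assumed to win over the other schemas
    if nearest_obstacle < close_radius:
        return "avoid-obstacles"  # has priority over vff when both hold
    if nearest_obstacle < medium_radius:
        return "vff"
    return "base-tracking"
```

Because the narrow security region lies inside the wide one, checking avoid-obstacles first is enough to resolve the overlap between both preconditions.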

Regarding the perception side of the behavior, the

color filtering and force computations are performed

only when follow-person is ACTIVE. This is a

simple example of an attention mechanism.

3 EXPERIMENTS

The presented hierarchy of schemas for person following has been programmed and tested under different conditions on a Pioneer robot². The target person wore a green T-shirt during the tests, and so the target color was dark green.

Figure 5: Obstacle avoidance while pursuing target

In the experiments the robot successfully followed the target along our university corridors and halls. The target person continuously moved from left to right while the robot followed her smoothly. During a typical pursuit in the corridors, the robot properly followed the target, alternating the vff and base-tracking schemas in ACTIVE state. The target was continuously tracked by the pantilt unit.

The designed avoidance strategy depends on the obstacle proximity. When possible, the robot avoids the obstacle without stopping, as can be observed in figure 5, where the robot avoids a non-target person in the corridor. If the obstacle is very close, the robot stops and turns. When clearance is achieved, the robot starts moving towards the target again. Tracking the target during the avoidance maneuver is possible because the pantilt movement is decoupled from the robot base movement.

² Some videos of the experiments are available on the web: http://gsyc.escet.urjc.es/jmplaza/research-vision.html


Figure 6: Target lost, searched and recovered

Due to occlusions caused by corners at the end of corridors or by obstacles, the robot can lose the target. An example of the robustness of the target recovery strategy can be seen in figure 6, where the target person disappeared from the camera images. The visual tracking schemas then switched from center to search, rotating the pantilt unit to the right to look for the lost green T-shirt. The robot stopped its base motors (stop-robot) while the pantilt swept horizontally, starting towards the last known target position. This search method has proved to recover the target quickly.

Figure 7: Robot keeps the distance using only vision

Maintaining a safe distance between the robot and the target is a key issue in person following, as we do not want the robot to bump into the human. As can be seen in figure 7, the designed behavior detects the size of the person in the image and brakes the robot when this size surpasses a safety threshold. There is no explicit distance measurement. The stop-robot schema is then activated, and it continuously checks its precondition. When the target moves away from the robot, the schema hierarchy reconfigures itself, activating base-tracking, and the robot starts pursuing her again.

4 CONCLUSIONS

The person following behavior has been designed and implemented inside JDE as two concurrent groups of schemas. JDE combines several abilities in a small hierarchy: target tracking using a color filter, navigation pursuing the tracked person, obstacle avoidance and maintaining a safe distance.

The robot exhibits good performance in the experiments: it follows the moving target with smooth movements, avoiding obstacles and maintaining a safe distance from the tracked person. In addition, when the target is lost the robot is able to recover the tracked person quickly.

We are working on introducing new behaviors to test the ability of JDE to integrate a larger number of them.

REFERENCES

Borenstein, J. and Koren, Y. (1991). The vector field histogram fast obstacle avoidance for mobile robots. IEEE Journal of Robotics and Automation, 7(3):278–288.

Cañas, J. M. and Matellán, V. (2002). Dynamic schema hierarchies for an autonomous robot. In Garijo, F. J., Riquelme, J. C., and Toro, M., editors, Advances in Artificial Intelligence - IBERAMIA 2002, volume 2527 of Lecture Notes in Artificial Intelligence, pages 903–912. Springer.

Fritsch, J., Kleinehagenbrock, M., Lang, S., Fink, A., and Sagerer, G. (2004). Audiovisual person tracking with mobile robot. In Groen, F., Amato, N., Bonarini, A., Yoshida, E., and Kröse, B., editors, Proceedings of the International Conference on Intelligent Autonomous Systems, pages 898–906, Amsterdam. IOS Press.

Kleinehagenbrock, M., Lang, S., Fritsch, J., Lömker, F., Fink, G., and Sagerer, G. (2002). Person tracking with a mobile robot based on multi-modal anchoring. In Proceedings of IEEE International Workshop on Human Interactive Communication (ROMAN), pages 423–429, Berlin (Germany).

Schlegel, C., Illmann, J., Jaberg, H., Schuster, M., and Wörz, R. (1998). Vision based person tracking with a mobile robot. In Carter, J. N. and Nixon, M. S., editors, Proceedings of the British Machine Vision Conference, BMVC 1998, pages 418–427, Southampton (UK). British Machine Vision Association.

Sidenbladh, H., Kragic, D., and Christensen, H. (1999). A person following behaviour for a mobile robot. In Proceedings of the 1999 IEEE Int. Conference on Robotics and Automation ICRA'99, pages 670–675, Detroit (Michigan, USA).
