AUTONOMOUS MOBILE ROBOT ASSISTED HERDING

Pinky Thakkar, Leonard Wesley

Computer Engineering Department, San Jose State University, One Washington Square, San José, California USA, 95192

Keywords: Autonomous mobile robots, Herding, Autonomous human assistance.

Abstract: In this paper, we describe work that begins to address some of the issues related to developing an autonomous mobile robotic capability to assist humans with herding animals. A novel aspect of this work is the development of a capability to convey instructions to the robot via the movements of a “toy human.” In this work, no other explicit form of communication from the human to the robot is required. Furthermore, the robot is able to detect whether the human is absent or unable to herd, and to herd the animal autonomously if required. We developed a herding framework that is based on low-stress herding techniques. The robot uses a pan-tilt-zoom camera and a laser ranging sensor to track the human and interpret the human’s movements. We conduct two sets of experiments that demonstrate the autonomous and co-operative herding behaviour of the robot. We conclude by presenting experimental results suggesting that our approach to developing a service robot with assistive herding capabilities holds promise for scaling to more complex and sophisticated tasks.

1 INTRODUCTION

Developing autonomous mobile robots that are capable of assisting humans with complex tasks within minimally instrumented environments and with minimal communication remains a challenge. Such a capability is potentially beneficial in a variety of situations, ranging from stealthy military and law enforcement activities to civilian activities such as herding domesticated livestock.

The focus of the work reported here is two-fold: to develop an autonomous mobile robot that is able to perceive and interpret the movements of a human as a means of communicating the human’s instructions and intentions to the robot, and to carry out autonomous herding either at the direction of the human or in the human’s absence. An innovation of the work described here involves developing cattle and human behaviour models and perception techniques, and using them along with a specific herding technique, called low-stress herding (Smith, 1998), to carry out assistive or autonomous herding activities.

Some researchers have attempted to communicate with assistive robots by developing speech and gesture recognition systems through which humans convey their intentions to the robot (Fischer et al., 1996; Topp et al., 2004; Yoshizaki et al., 2001). One differentiating aspect of our work is that no overt speech or gesture communication between the human and the robot is used. Rather, only the human’s motion relative to the animal and the robot is used to communicate intentions and instructions to the robot.

Although this work involves herding a single cattle-type animal, and the human is modelled by a remotely controlled toy, it represents an important incremental step toward a scaled-up approach that can handle multiple animals and real humans. Reducing the complexity of a real-world context by using a “toy human” and coloured objects allowed us to focus our investigation on the approaches, techniques, and methods that an autonomous robot might employ to interpret human movements. Such interpretations will be used to direct the robot’s goals and locative movements to carry out the desired assistive behaviour. Once significant progress is made on this aspect of our efforts, we can focus on removing the simplifying constraints while simultaneously improving our “interpretation” capability as we approach a more real-world context.

2 RELATED WORK

Vaughan et al. (2000) demonstrated an automated herding capability in both simulated and real-world contexts. They employed a potential field technique to herd a flock of ducks to a desired location; their approach used an overhead camera to track the positions of the robot and the entire flock. Butler et al. (2004) attempted to herd animals with a dynamic virtual fence that moved slowly and automatically to drive the animals to the desired location. Schultz et al. (1996) developed a system that used genetic algorithms to learn the complex task of shepherding, in which one mobile robot attempts to herd another mobile robot to a desired area. Potter et al. (2001) used herding in computer-based simulations to demonstrate the advantage of heterogeneity over homogeneity in control systems. Lien et al. (2004) used computer-based simulations to compare various shepherding behaviours in which one or more shepherds control the motion of a flock.

Thakkar P. and Wesley L. (2005). AUTONOMOUS MOBILE ROBOT ASSISTED HERDING. In Proceedings of the Second International Conference on Informatics in Control, Automation and Robotics - Robotics and Automation, pages 73-81. DOI: 10.5220/0001183300730081. Copyright © SciTePress.

Considerable research has also been done on service robots that assist humans by fetching objects and delivering them to a human. Yoshizaki et al. and Topp et al. developed speech and gesture recognition modules that a robot can use to help understand the intentions of the user. Yoshizaki et al. used the Motion Processor (Numazaki et al., 1998) to detect the user’s hand, whereas Topp et al. used vision-based gesture recognition algorithms to track a face and a hand. Fischer et al. used high-level natural language processing, augmented with a CCD camera and a 3D environmental model, to assist in screen-based monitoring.

Despite the advances of previous work, some technical gaps remain. Ultimately, truly purposeful and autonomous robot-assisted herding must be accomplished within un-instrumented environments. Furthermore, herding in outdoor natural environments can be expected to require robots to carry out tasks in contexts that present varying degrees of perception and terrain challenges. Dust, precipitation, and noise can impair perception. Terrain elevation changes and obstacles can preclude line-of-sight perception and movement along optimal paths. Carrying out assistive herding tasks when communication is limited or absent presents challenges that have not been completely addressed to date. Indeed, the work described here does not completely address these herding challenges either. However, it does begin to bridge some of the technical gaps that remain between successful previous work and a truly autonomous assistive herding capability. It does so by significantly limiting both the amount of “instrumentation” required to identify the animal and the human, and the communication between the human and the robot.

3 HERDING BACKGROUND

Humans have been domesticating animals for thousands of years. Domestication has brought prosperity to society and has been one of the cornerstones of human progress, trade, and commerce. One of the most critical aspects of domesticating animals has been the actual upkeep of the stock. Herding is a very important part of the domestication process, whether it is for point-to-point transport of livestock, grazing, commerce, or predator avoidance.

Traditionally, herding was accomplished by the brute-force approach of inducing fear in the animal, which can cause the animal significant stress. Stress reduces weight gain, meat quality, milk production, reproductive performance, and immunity to disease. In the work reported here, herding is accomplished using the low-stress herding technique, in which the flight zone of an animal is used to control and manoeuvre the animal in the desired direction. The key is that the animal is herded from the boundary of its flight zone, which causes anxiety, not fear, in the animal. Thus the animal perceives the human as its protector, not its adversary. Additional advantages of low-stress herding techniques are that they facilitate improved weight gain, feed efficiency, reproductive performance, and immunity (Blezinger; Gadzia, 1999; Grandin, 1989).

4 ANIMAL MODEL AND LOW-STRESS HERDING TECHNIQUES

In this section, we introduce and briefly describe the animal model and the low-stress herding technique employed in this effort. A glossary of the herding terms used here can be found in Section 10, “Herding Glossary.”

Small remote-controlled toy vehicles were used in place of real livestock and a human. The robot recognizes the animal (i.e., one of the toy vehicles) with the help of a very small coloured tag attached to the vehicle. In a real-world context, we anticipate using the actual colour(s), shape, and size of the livestock. In addition to colour, the animal model consists of the following properties:

1) Flight distance: The distance from the animal within which the presence of the robot or the human induces anxiety in the animal. The robot determines this value before it actually attempts to herd the animal.

ICINCO 2005 - ROBOTICS AND AUTOMATION


2) Minimum flight distance: The minimum distance the robot is required to keep from the animal.

3) Blind zone: The angular region directly behind the rear of the animal. The robot takes care not to enter it, except when it has to turn the animal, in which case it deliberately enters it.

4) Movements: We define the following three temporal movements of the animal relative to the robot:

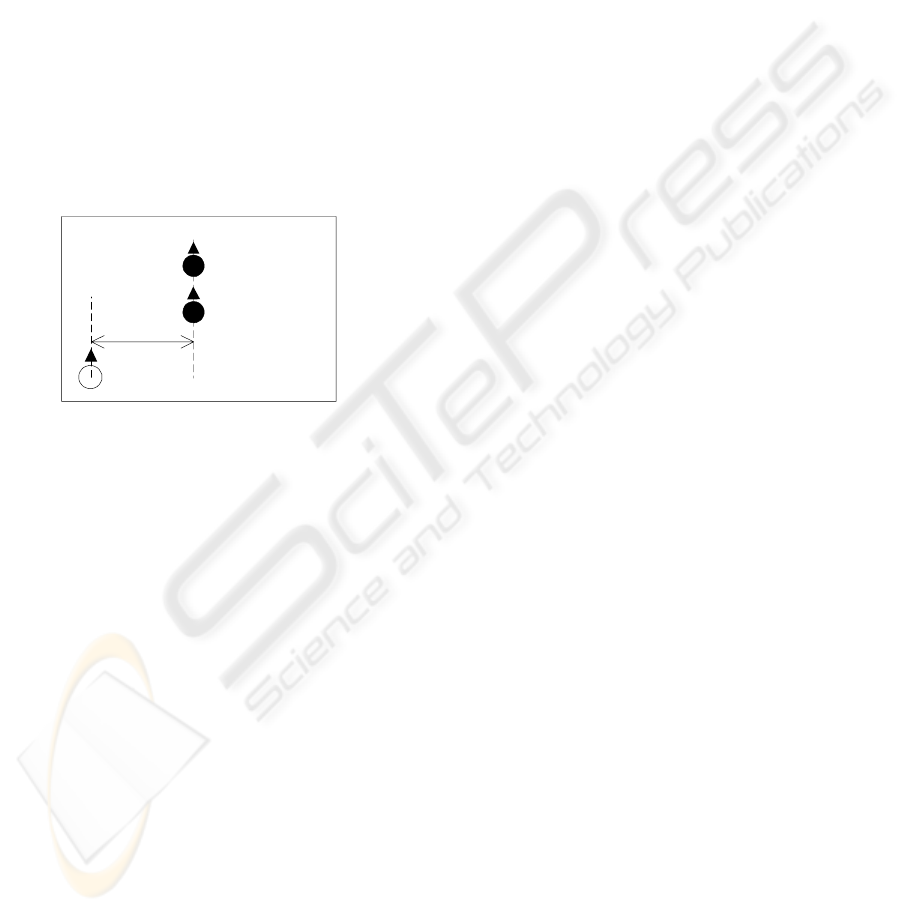

a. Moving forward: When distanceY (Figure 1) remains within a pre-specified range, the robot perceives the animal’s movement as “moving forward.”

b. Turning: When distanceY between the animal and the robot falls outside the pre-specified range, the robot perceives the movement as turning.

c. Stopped: When the animal is neither moving forward nor turning, the robot perceives that the animal has stopped moving.

Figure 1: Moving forward (labels: Robot; location of animal at times t1 and t2; distanceY)
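The three animal movements above can be sketched as a small classifier. This is a minimal sketch under stated assumptions, not the authors’ implementation: the window of distanceY samples, the numeric `y_range` standing in for the pre-specified range, and the extra displacement test for “stopped” are all hypothetical. The displacement test is needed because a stationary animal also keeps distanceY constant and would otherwise be read as moving forward.

```python
from typing import Sequence, Tuple


def classify_animal_movement(
    distance_y: Sequence[float],
    displacement: float,
    y_range: Tuple[float, float] = (0.8, 1.2),
    min_displacement: float = 0.05,
) -> str:
    """Classify the animal's movement relative to the robot.

    distance_y:   distanceY samples (metres) observed between t1 and t2.
    displacement: how far the animal itself moved between t1 and t2 (metres).
    y_range:      hypothetical stand-in for the pre-specified range.
    """
    # Assumption: an animal that has barely moved is "stopped", whatever distanceY says.
    if displacement < min_displacement:
        return "stopped"
    lo, hi = y_range
    # Moving forward: every distanceY sample stays inside the pre-specified range.
    if all(lo <= d <= hi for d in distance_y):
        return "moving_forward"
    # distanceY has left the range, which the model treats as turning.
    return "turning"


print(classify_animal_movement([1.0, 1.05, 1.02], displacement=0.3))  # moving_forward
print(classify_animal_movement([1.0, 1.4], displacement=0.3))         # turning
print(classify_animal_movement([1.0, 1.0], displacement=0.0))         # stopped
```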

In low-stress herding techniques, the animal is always herded from the edge of the flight zone. Hence, the first step toward herding is to determine the flight zone of the animal. When herding multiple animals, the flight zone is determined by a zigzag motion. When herding an individual animal, however, the flight zone is determined by making a slow diagonal approach toward the rear of the animal, as shown in Figure 2(a). When the animal moves forward, the herder has reached the edge of the flight zone. Other approaches to determining the flight zone, such as the shoulder approach, might be equally or more effective. However, because of the geometry of our animal model, we employ the diagonal approach.

To herd the animal forward, the herder turns in the direction the animal is facing, takes up the outrider’s position, and moves along with the animal (Figure 2(b)). To turn the animal, the herder employs the tail-head approach, in which the herder turns towards the rear of the animal and slowly moves towards it (Figure 2(c)). When the herder reaches the blind zone on the edge of the flight zone, the animal moves forward with its head turned to keep the herder in view; this causes the animal to turn. The herder continues to approach the animal until the animal has turned the desired amount.

To stop the animal, the herder stops following it. The animal continues its motion until the herder is outside the flight zone, at which point the animal stops and turns to look at the herder. When it finds that the herder is not moving in its direction, it relaxes and engages in some other activity, such as grazing.

5 HUMAN MODEL, DESIGN AND APPROACH OF ASSISTIVE HERDING CAPABILITY

The robot identifies the human with the help of a

colour tag that is attached to one of the toy vehicles.

In addition to the colour tag, the human model

consists of a specification of the temporal human

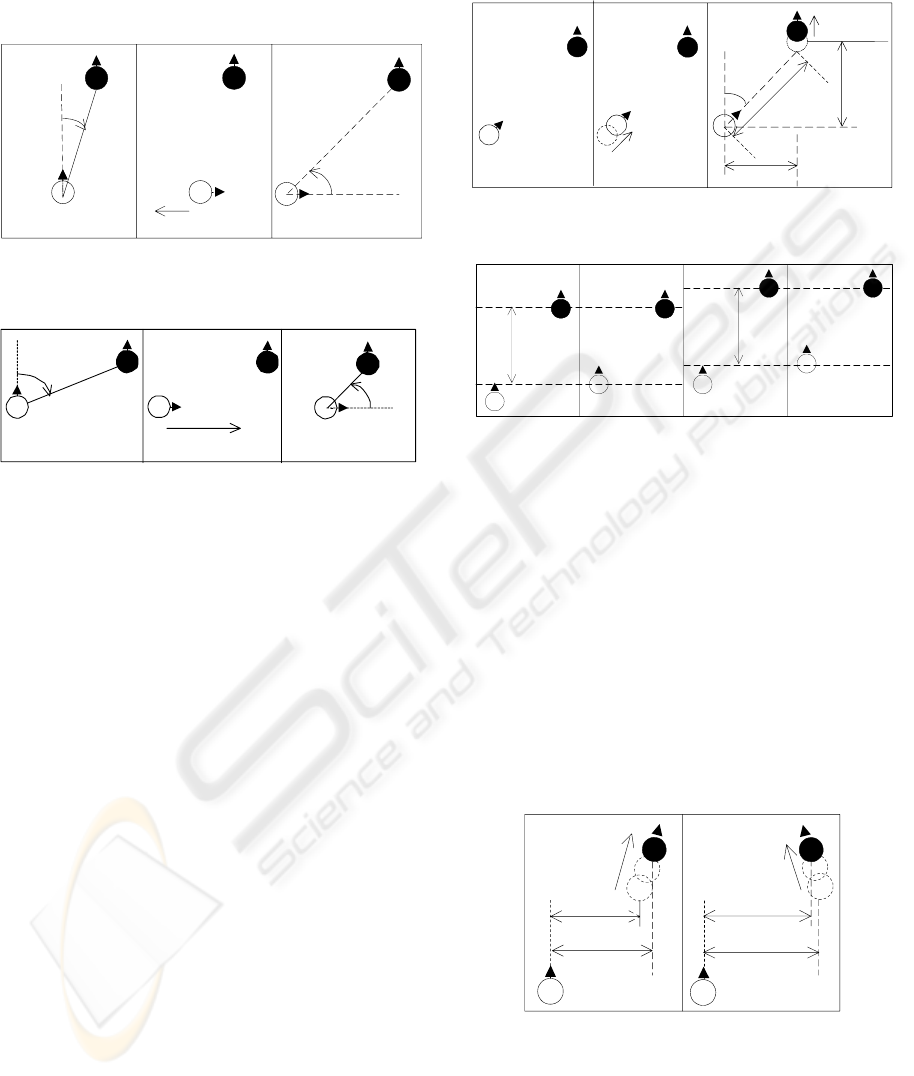

movements relative to the animal and the robot.

1) Moving towards the animal: As illustrated in Figure 3(a), when distanceY between the robot and the human decreases over time, and angle1 and angle2 are approximately equal, the robot perceives this movement of the human as moving towards the animal.

2) Moving away from the animal: As illustrated in Figure 3(b), when distanceY between the robot and the human increases over time, the robot perceives this human movement as moving away from the animal.

3) Stopped: When the human is at an angle equal to or greater than |85| degrees relative to the robot, the robot perceives this as the stopped movement.
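The three human movements above can be sketched as a classifier over two observations. This is a hypothetical sketch: it assumes angle1 and angle2 in Figure 3 are the robot-relative bearings of the human at t1 and t2, and the tolerances `angle_tol` and `dist_tol` are illustrative values, not values reported in this work. Checking the |85| degree “stopped” rule first is also an assumption.

```python
def classify_human_movement(
    d1: float,
    d2: float,
    angle1: float,
    angle2: float,
    angle_tol: float = 5.0,
    dist_tol: float = 0.05,
    stop_angle: float = 85.0,
) -> str:
    """Interpret the human's movement from observations at t1 and t2.

    d1, d2:         robot-to-human distanceY at t1 and t2 (metres).
    angle1, angle2: assumed bearings of the human relative to the robot (degrees).
    """
    # Stopped: the human is at |85| degrees or more relative to the robot.
    if abs(angle2) >= stop_angle:
        return "stopped"
    change = d2 - d1
    # Towards the animal: distanceY shrinks while the bearing stays roughly constant.
    if change < -dist_tol and abs(angle1 - angle2) <= angle_tol:
        return "toward"
    # Away from the animal: distanceY grows.
    if change > dist_tol:
        return "away"
    return "unknown"


print(classify_human_movement(2.0, 1.6, 20.0, 21.0))  # toward
print(classify_human_movement(1.6, 2.0, 20.0, -45.0))  # away
print(classify_human_movement(2.0, 2.0, 80.0, 88.0))  # stopped
```

Returning "unknown" rather than forcing a label lets the caller keep its current behaviour when the evidence is ambiguous.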

The robot and the human are always on opposite sides of the animal. When the robot perceives the human moving towards the animal, it interprets this as a command to initiate herding. Once herding initiation is successful (i.e., the animal starts to move), the robot begins to herd the animal in the forward direction.

To turn the animal, the human moves either toward or away from the animal, depending on the direction in which the animal is to be turned. If the human wants to turn the animal, the human moves towards the animal; if the human wants the robot to turn the animal, the human moves away from the animal to let the robot turn it. Hence, if the robot perceives that the human is moving towards the animal, it interprets this as a command to move out of the flight zone so that the human can turn the animal. However, if the robot perceives that the human is moving away from the animal, it interprets this as a command to turn the animal itself.


Figure 2: Low-stress herding techniques: (a) Initial motion, (b) Herding forward, (c) Turning the animal (illustrations slightly modified from Smith, 1998)

Figure 3: Human movements: (a) Human is moving towards the animal, (b) Human is moving away from the animal (labels: Animal; Robot; location of human at times t1 and t2; distanceY; angle1; angle2)

Figure 4: Initial herding position

When the robot perceives that the human has stopped moving, it interprets this as a command to stop herding.

6 IMPLEMENTING THE LOW-STRESS HERDING TECHNIQUES

Our approach to implementing low-stress herding techniques involves developing separate modules for initiating herding, moving the animal forward, turning the animal, and stopping the animal. We briefly discuss each of these in this section.

6.1 Initiate Herding

This module is broken into the following two sub-modules:

6.1.1 Initial Position

In this subroutine, the robot attempts to position itself diagonally to the rear of the animal, at an angle between |30| and |60| degrees, as shown by the shaded region in Figure 4.

The robot scans the environment to locate the animal. With the help of the laser ranging sensor, it measures the angle between the direction to the animal and the robot’s current heading. If the angle is outside the range of |30| to |60| degrees, the robot turns and moves to the initial position as follows.

If the animal is at an angle between 0 and –30 degrees, the robot turns –90 degrees and moves backwards until the animal is at an angle of 45 degrees from the robot’s forward-facing direction. For example, if the animal is at an angle of –25 degrees, as shown in Figure 5(a), the robot turns –90 degrees and moves backwards (Figure 5(b)) until the animal is at an angle of 45 degrees, as shown in Figure 5(c). Similarly, if the animal is at an angle between 0 and 30 degrees, the robot turns 90 degrees and moves backwards until the animal is at an angle of –45 degrees.

If the animal is at an angle less than –60 degrees, the robot turns –90 degrees and moves forward until the animal is at an angle of 45 degrees. For example, if the animal is at an angle of –70 degrees, as shown in Figure 6(a), the robot turns –90 degrees and moves forward (Figure 6(b)) until the animal is at an angle of 45 degrees, as shown in Figure 6(c). Similarly, if the animal is at an angle greater than 60 degrees, the robot turns 90 degrees and moves forward until the animal is at an angle of –45 degrees.

Figure 5: Moving to the initial position when the animal is at –25 degrees (panels (a), (b), (c); labels: Robot, Animal, direction of motion)

Figure 6: Moving to the initial position when the animal is at –70 degrees (panels (a), (b), (c); labels: Robot, Animal, direction of motion)
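The Initial Position rules above map the measured bearing to the animal onto one of four manoeuvres. The following is a minimal sketch of that mapping, not the robot’s control code. The names `Maneuver` and `plan_initial_position` are hypothetical, and assigning a bearing of exactly 0 degrees to the “0 to 30” case is an assumption, since the text leaves that boundary open.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Maneuver:
    turn_deg: float                    # in-place turn before translating (0 means no turn)
    direction: str                     # "forward", "backward", or "none"
    target_angle_deg: Optional[float]  # bearing to the animal at which translation stops


def plan_initial_position(animal_angle_deg: float) -> Maneuver:
    """Map the bearing to the animal onto the Initial Position manoeuvre of Section 6.1.1."""
    a = animal_angle_deg
    if 30.0 <= abs(a) <= 60.0:
        return Maneuver(0.0, "none", None)       # already inside the shaded region of Figure 4
    if -30.0 < a < 0.0:
        return Maneuver(-90.0, "backward", 45.0)
    if 0.0 <= a < 30.0:                          # assumption: exactly 0 is handled here
        return Maneuver(90.0, "backward", -45.0)
    if a < -60.0:
        return Maneuver(-90.0, "forward", 45.0)
    return Maneuver(90.0, "forward", -45.0)      # remaining case: a > 60


print(plan_initial_position(-25.0))  # the Figure 5 example
print(plan_initial_position(-70.0))  # the Figure 6 example
```

With this mapping, the starting placements in Section 8 behave as reported. The –20 and 20 degree placements of Experiments 1 and 2 fall into the two “backward” cases, and the –50 and 40 degree placements of Experiments 3 and 4 need no manoeuvre.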

6.1.2 Initiating Movement

The robot turns in the direction of the animal (Figure 7(a)) and slowly approaches it until the animal moves forward, as shown in Figure 7(b). The dotted circles in this figure indicate the forward motion of the robot toward the animal. The robot notes the flight distance and the flight angle, and calculates the flightX and flightY distances as shown in Figure 7. The dotted circle shows the location of the animal before it moved forward. The distance flightX is used to keep the robot on the edge of the flight zone. The distance flightY is used in the Herding Forward routine to herd the animal in the forward direction.
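The flightX and flightY values can be computed as the two components of the flight distance. This sketch rests on an assumption about the geometry, since Figure 7 defines the actual one. It assumes the flight angle is measured between the animal’s direction of travel and the robot-to-animal line of sight. Under that assumption, flightX is the along-track component that the robot closes while following, and flightY is the lateral offset that is later compared with distanceY. The function name `flight_components` is hypothetical.

```python
import math
from typing import Tuple


def flight_components(flight_distance: float, flight_angle_deg: float) -> Tuple[float, float]:
    """Split the measured flight distance into (flightX, flightY).

    Assumes the flight angle is measured from the animal's direction of travel.
    """
    theta = math.radians(flight_angle_deg)
    flight_x = flight_distance * math.cos(theta)  # along the animal's direction of travel
    flight_y = flight_distance * math.sin(theta)  # lateral offset between robot and animal
    return flight_x, flight_y


fx, fy = flight_components(2.0, 30.0)
print(round(fx, 3), round(fy, 3))  # 1.732 1.0
```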

6.2 Herding Forward

To move the animal forward after arriving at the initial herding position, the robot turns in the direction that the animal is facing, takes up the outrider’s position, and slowly moves along with the animal, as illustrated in Figure 8. In Figure 8(a), the robot is outside the flight zone, so it moves forward and reaches the edge of the flight zone, as shown in Figure 8(b). As a result, the animal moves forward, leaving the robot outside the flight zone, as shown in Figure 8(c). Hence, the robot again moves forward to reach the edge of the flight zone, as shown in Figure 8(d). This process is repeated until the animal has moved the desired distance.

Figure 7: Initiating movement (panels (a), (b), (c); labels: Robot, Animal, direction of motion, flight distance, flight angle, flightX, flightY)

Figure 8: Herding forward (panels (a) to (d); label: flightX)

Because the flight zone is dynamic, it may change in size during the herding process. A flight zone that becomes larger does not present a problem, as the animal will move forward before the robot has reached the edge of the flight zone. If the flight zone becomes smaller, however, the animal will not move forward when the robot reaches the edge of what it perceives or recalls as the flight zone. In this case, the robot moves forward a pre-specified distance. If the animal resumes its forward motion, the robot updates its flight zone (flightX) value; if the animal still does not move, the robot invokes the Initiating Movement routine (Section 6.1.2) to recalculate the distances, and subsequently invokes the Herding Forward routine.
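The Herding Forward loop and its handling of a shrinking flight zone can be sketched as a single decision function that is called once per control cycle. This is a hypothetical sketch: `gap` (the along-track distance from the robot to the animal), `probed`, `probe_limit`, and `tol` are illustrative names and parameters, not values from this work.

```python
from enum import Enum
from typing import Tuple


class Action(Enum):
    ADVANCE = "advance"        # close the gap to the remembered flight-zone edge
    KEEP_PACE = "keep_pace"    # at the edge and the animal is moving: follow it
    PROBE = "probe"            # edge reached but animal static: creep a pre-specified distance
    REINITIATE = "reinitiate"  # probing failed: rerun the Initiating Movement routine


def herding_forward_step(
    gap: float,
    flight_x: float,
    animal_moving: bool,
    probed: float,
    probe_limit: float,
    tol: float = 0.05,
) -> Tuple[Action, float]:
    """One decision of the Herding Forward loop; returns (action, updated flightX).

    probed: how far the robot has already crept past the remembered edge.
    """
    if gap > flight_x + tol:
        # Outside the remembered edge (Figure 8(a) and 8(c)): move forward.
        return Action.ADVANCE, flight_x
    if animal_moving:
        # If the animal only responded after probing, the flight zone shrank: store the new edge.
        return Action.KEEP_PACE, (gap if probed > 0 else flight_x)
    if probed < probe_limit:
        return Action.PROBE, flight_x
    return Action.REINITIATE, flight_x


print(herding_forward_step(1.2, 1.5, True, 0.3, 0.5))  # animal responded at a smaller gap
```

A larger flight zone needs no special case. The animal simply starts moving while the robot is still in the ADVANCE branch.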

Figure 9: Animal moving at an angle (labels: direction of motion, flightY, distanceY)

Complicating this task is the requirement that the robot recognize when the animal is not heading in the desired direction but is moving at some angle relative to it, as shown in Figure 9. The robot recognizes this behaviour by comparing the difference between distanceY and flightY with a pre-specified value.
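The comparison of distanceY with flightY can be sketched as a signed drift check. This sketch assumes that both quantities are lateral offsets between the robot and the animal. Under that assumption, the sign of the difference indicates whether the animal is angling away from or toward the robot’s side. The function name `heading_drift` and the `threshold` value are hypothetical.

```python
from typing import Optional


def heading_drift(distance_y: float, flight_y: float, threshold: float) -> Optional[str]:
    """Flag an animal moving at an angle to the desired direction (Figure 9).

    Assumes distanceY and flightY are both lateral robot-to-animal offsets.
    """
    error = distance_y - flight_y
    if error > threshold:
        return "veering_away"    # lateral gap grew: animal angling away from the robot's side
    if error < -threshold:
        return "veering_toward"  # lateral gap shrank: animal angling toward the robot's side
    return None                  # within tolerance: treat as on course


print(heading_drift(1.4, 1.0, 0.2))  # veering_away
```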

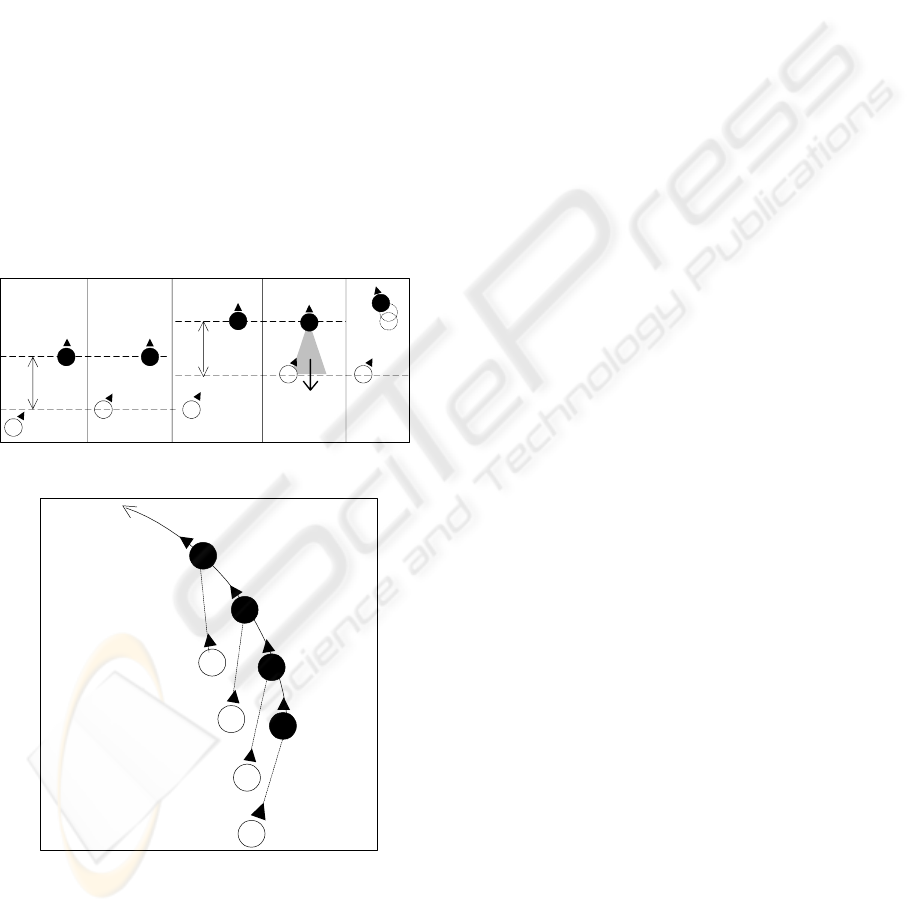

6.3 Turning the Animal

To turn the animal, the robot turns and moves towards the rear of the animal until the animal is oriented in the desired direction, as illustrated in Figure 10. In Figure 10(a), the robot is facing the animal and is outside the flight zone. The robot moves forward until it reaches the edge of the flight zone, as shown in Figure 10(b). The animal therefore moves forward and, as a result, the angle made by the animal decreases, as seen in Figure 10(c). The robot continues moving in the direction of the animal and at some point reaches the blind zone of the animal, as shown in Figure 10(d). To keep the robot in view, the animal moves forward with its head turned, and as a result the animal turns, as shown in Figure 10(e). The robot continues to move toward the animal until the animal has made the desired turn, as shown in Figure 11.

Figure 10: Steps to execute the turn routine (panels (a) to (e); labels: blind zone of the animal, flightX)

Figure 11: Turning the animal (positions of the robot and the animal at times t1 to t4; direction of motion of the animal)
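The turn routine relies on two geometric tests: whether the robot has entered the animal’s rear blind zone, and whether the animal’s heading has reached the desired direction. The sketch below implements both with angle wrapping. It assumes the robot’s bearing is measured from the animal’s heading, with 180 degrees meaning directly behind. The 30 degree default width is the upper end of the 15 to 30 degree blind spot given in the glossary, and the 10 degree tolerance is an illustrative value.

```python
def wrap_deg(angle: float) -> float:
    """Normalise an angle to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def in_blind_zone(robot_bearing_deg: float, blind_width_deg: float = 30.0) -> bool:
    """True if the robot lies in the animal's rear blind zone.

    robot_bearing_deg is measured from the animal's heading (0 = straight ahead,
    180 = directly behind).
    """
    return abs(wrap_deg(robot_bearing_deg - 180.0)) <= blind_width_deg / 2.0


def turn_complete(animal_heading_deg: float, target_heading_deg: float,
                  tol_deg: float = 10.0) -> bool:
    """The robot keeps approaching until the animal's heading is within tol of the target."""
    return abs(wrap_deg(animal_heading_deg - target_heading_deg)) <= tol_deg


print(in_blind_zone(175.0), turn_complete(355.0, 0.0))  # True True
```

Wrapping every difference keeps both tests correct across the 0/360 degree seam. For example, a heading of 355 degrees is only 5 degrees from a target of 0.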

6.4 Stopping the Animal

To stop the animal from moving, the robot removes itself from the flight zone of the animal.

7 IMPLEMENTING THE ASSISTING CAPABILITY

Our approach to implementing the assisting capability involves developing a module for perceiving human movements. Based on the value returned by this module, an appropriate herding module is invoked. Before the robot begins tracking the human, it executes the Initial Position routine to move to the initial herding position. It then scans the environment to locate and track the human.

1) Initiate Herding and Herding Forward: When the robot finds the human moving towards the animal, as shown in Figure 12(a), it stops tracking the human and executes the Initiating Movement routine, followed by the Herding Forward routine. It resumes tracking the human while herding the animal forward.

2) Turning the Animal: If the robot perceives that the human is moving towards the animal, it stops its motion to let the human turn the animal, as shown in Figure 12(b). However, if the robot finds the human moving away from the animal, it perceives this as the human asking the robot to turn the animal, and so the robot executes the Turning the Animal routine, as shown in Figure 12(c).

3) Stopping the Animal: To stop the animal, the human stops moving. When the human makes an angle greater than |85| degrees relative to the animal, the robot perceives this as the human wanting to stop the animal, and so the robot executes the Stopping the Animal routine.
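The dispatch described above can be sketched as a small transition table from (current mode, perceived human movement) to the routine to invoke. This is a hypothetical sketch, not the robot’s software. The mode names and routine strings are illustrative, and the “absent” entry encodes the autonomous case of Experiment 1, in which no human can be located. Transitions the text leaves unspecified keep the current mode. One example is resuming forward herding after a turn, which is triggered by turn completion rather than by a human movement.

```python
from enum import Enum, auto
from typing import Dict, Tuple


class Mode(Enum):
    WAITING = auto()          # at the initial herding position, tracking the human
    HERDING_FORWARD = auto()
    YIELDING = auto()         # stopped so that the human can turn the animal
    TURNING = auto()          # executing the Turning the Animal routine
    STOPPED = auto()


# (mode, perceived human movement) -> (routine to invoke, next mode)
TRANSITIONS: Dict[Tuple[Mode, str], Tuple[str, Mode]] = {
    (Mode.WAITING, "toward"): ("initiating_movement_then_herding_forward", Mode.HERDING_FORWARD),
    (Mode.WAITING, "absent"): ("initiating_movement_then_herding_forward", Mode.HERDING_FORWARD),
    (Mode.HERDING_FORWARD, "toward"): ("stop_motion", Mode.YIELDING),
    (Mode.HERDING_FORWARD, "away"): ("turning_the_animal", Mode.TURNING),
    (Mode.HERDING_FORWARD, "stopped"): ("stopping_the_animal", Mode.STOPPED),
}


def next_step(mode: Mode, human_movement: str) -> Tuple[str, Mode]:
    """Pick the routine for the perceived human movement; unlisted pairs keep going."""
    return TRANSITIONS.get((mode, human_movement), ("continue", mode))


print(next_step(Mode.HERDING_FORWARD, "away"))
```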

8 EXPERIMENTS

8.1 Robot Hardware

The robot used in this work was a Pioneer2 AT robot from ActivMedia Robotics. In addition, two remote-controlled toy cars were used to represent the animal and the human, respectively. The robot has a pan-tilt-zoom (PTZ) camera, a laser range finder (mounted at the front of the robot), and 8 rear bumper sensors.

The PTZ camera is used to capture images that are processed in order to recognize the animal and the human. It can pan through 180 degrees (–90 to 90 degrees) about the forward direction of the robot.


Figure 12: Assisting behaviour: (a) Initiate herding, (b) Turning the animal (human is attempting to turn the animal), (c) Turning the animal (robot is attempting to turn the animal), (d) Stopping the animal (angle > |85|); labels: human at times t1 and t2, direction of motion

Figure 13: Initiate herding

The laser range finder is used to help determine the angle and distance between the robot and the animal, and between the robot and the human. It is also used for object tracking. The laser can scan 180 degrees in the forward-facing direction (–90 to 90 degrees). The bumper sensors are used to prevent damage to the robot by halting it when pressed. They are additionally used to command the robot to turn the animal in some situations.
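One way to combine the two sensors is to read the laser range along the bearing at which the camera sees a coloured tag. The sketch below is written under assumptions that this work does not state. It assumes the scan is a list of ranges spread evenly over –90 to 90 degrees with index 0 at –90 degrees, and it takes the minimum over a few neighbouring beams to tolerate small camera/laser misalignment. The function name and the `window` parameter are hypothetical.

```python
from typing import Sequence


def range_at_bearing(scan: Sequence[float], bearing_deg: float,
                     fov_deg: float = 180.0, window: int = 2) -> float:
    """Return the laser range along a bearing reported by the camera's colour tracker.

    Assumes the scan covers -fov/2 .. +fov/2 evenly, with index 0 at -fov/2.
    """
    if not -fov_deg / 2 <= bearing_deg <= fov_deg / 2:
        raise ValueError("bearing outside the laser's field of view")
    step = fov_deg / (len(scan) - 1)                 # degrees per beam
    idx = round((bearing_deg + fov_deg / 2) / step)  # beam closest to the bearing
    lo, hi = max(0, idx - window), min(len(scan), idx + window + 1)
    return min(scan[lo:hi])


scan = [5.0] * 181   # hypothetical 1-degree scan with nothing nearby...
scan[135] = 1.2      # ...except an object at +45 degrees
print(range_at_bearing(scan, 45.0))  # 1.2
```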

The toy cars can be moved forward and in reverse, turned left or right, and stopped using a joystick located on a remote control box. A pink stick is mounted on top of one car to identify the animal. Similarly, an orange stick is mounted on top of the other car to identify the human. Thus, the approach developed here assumes that the animal being herded can be distinguished from the human by colour. In the future, colours will be replaced by shape, size, and texture recognition and tracking capabilities.
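Telling the pink “animal” tag from the orange “human” tag reduces to a hue test. The sketch below uses Python’s standard `colorsys` module to convert an RGB colour to HSV and then checks hue bands. The band limits and the saturation and brightness cut-offs are illustrative assumptions. Real thresholds would have to be tuned to the camera and the lighting, which this work notes can vary.

```python
import colorsys
from typing import Optional

# Hypothetical hue bands in degrees; tune for the actual camera and lighting.
HUE_BANDS = {
    "animal": (290.0, 350.0),  # pink stick
    "human": (15.0, 45.0),     # orange stick
}


def classify_tag(r: int, g: int, b: int,
                 min_saturation: float = 0.4, min_value: float = 0.3) -> Optional[str]:
    """Label an 8-bit RGB colour as the animal's or the human's tag, or None."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    if s < min_saturation or v < min_value:
        return None  # too grey or too dark to trust the hue
    hue_deg = h * 360.0
    for label, (lo, hi) in HUE_BANDS.items():
        if lo <= hue_deg <= hi:
            return label
    return None


print(classify_tag(255, 105, 180))  # animal (hot pink, hue about 330 degrees)
print(classify_tag(255, 165, 0))    # human (orange, hue about 39 degrees)
```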

Two sets of experiments were conducted to demonstrate the autonomous and assistive herding capabilities of the robot. The first set demonstrates the robot’s autonomous herding capabilities, that is, herding without a human. The second set demonstrates the robot’s ability to assist a human with herding.

8.2 Autonomous Herding Behaviour

In these experiments, the robot is initially placed at various locations behind the animal, at a distance greater than the flight distance of the animal.

Experiment 1: The robot is placed so that the angle it makes to the rear of the animal is approximately –20 degrees. The task for the robot is to herd the animal forward. While the robot is herding the animal forward, the flight zone of the animal is reduced; when this happens, the task for the robot is to re-determine the flight zone and resume herding the animal forward. The robot is then asked to turn the animal by pressing one of its bumpers (in a real-world context, a map of the terrain would be available for the robot to determine when to turn an animal toward the goal location). The task for the robot is then to turn the animal 90 degrees. Once the animal has turned, the robot resumes herding it in the forward direction.

Result: The robot scans the environment to locate the animal. Once it has located the animal, it moves to the initial herding position by turning –90 degrees and moving backwards until the animal is at an angle of 45 degrees with respect to the robot, as shown in Figure 13. The robot then scans the environment to locate the human. When it cannot locate the human, it turns to face the animal and slowly moves forward until the animal begins to move forward. The robot then turns in the direction in which the animal is facing and starts following it from the outrider’s position.

In practice, environmental conditions such as the size and orientation of shadows, lighting, and other factors can dynamically change an animal’s effective flight zone. At times, the robot can unexpectedly find itself outside or inside a flight zone due to these and other situational dynamics. It is therefore possible that, if the animal stops while being herded, this is because the flight zone has been reduced in size. Perceiving this, the robot approaches the animal until the animal starts moving forward again.

After the robot has herded the animal forward for some time, one of the robot’s bumpers is pressed to indicate that the animal should be turned. The robot then turns and moves towards the animal, as shown in Figure 14. When the animal has turned by approximately –90 degrees, the robot turns –90 degrees and begins herding the animal in the forward direction.

Figure 14: Turning the animal

8.3 Assisting Herding Behaviour

In these experiments, we test the assistive herding behaviour of the robot, in which the robot herds the animal according to the intentions of the human.

Experiment 2: The robot has to assist the human in herding the animal. The robot is placed so that the angle it makes to the rear of the animal is approximately 20 degrees.

Result: The robot scans the environment to locate the animal. When the animal is located, the robot turns 90 degrees and moves backwards until the animal is at –45 degrees with respect to the robot.

The robot then scans the environment to locate the human. When the human is located, the robot waits for the human to move in the direction of the robot. As soon as the human moves toward the animal, the robot begins to move toward the animal. From then on, the robot takes the same actions that it took in Experiment 1.

The robot starts tracking the human along with the animal. After herding for a while, the human stops moving. The robot continues to herd the animal forward until the human is at an angle of 85 degrees with respect to the robot. The robot then stops moving in order to stop herding the animal.

Experiment 3: The robot has to assist the human in herding the animal as before. In this case, however, the herder moves away from the animal to let the robot turn the animal. The robot is placed so that the angle it makes to the rear of the animal is approximately –50 degrees.

Result: The robot is already at the initial position, and therefore it scans the environment to locate and track the human. As soon as the human moves toward the animal, the robot begins to move towards the animal. From then on, the robot takes the same actions that it took in Experiment 2 and begins herding the animal forward.

After herding forward for a while, the human turns and moves at an angle of –45 degrees with respect to the robot. Seeing this, the robot invokes the turn routine as it did in Experiment 1.

Experiment 4: The robot has to assist the human in herding the animal as before. In this case, however, the herder performs the turning operation. The robot is placed so that the angle it makes to the rear of the animal is approximately 40 degrees.

Result: Since the robot is already at the initial position, it takes the same actions as described in Experiment 3 until it begins to herd the animal forward. After herding the animal forward for a while, the human turns and moves towards the animal in order to turn it. Seeing this, the robot stops its motion to allow the animal to turn.

9 CONCLUSION

We described a robotic system that is able to assist a human with herding an animal. We showed that the system is able to adjust its herding behaviour according to the intentions of the human, which are conveyed to the robot by the human’s movements. A key factor here is that the assistive herding task is carried out with minimal communication and within minimally instrumented environments. We also demonstrated the autonomous herding capability of the robot in situations where the human is absent.

We recognize that there remain complex challenges to herding real animals that we have not yet addressed, in part because we are using toy human and cattle models. However, this paper represents an initial step towards developing purposeful assisted herding behaviour by designing and developing key representations of animal and human behaviour, as well as the key herding strategies and techniques that will be useful and expanded in follow-on work involving live animals.

Our work described here is limited to herding a single cattle-type animal. In future work, we plan to develop methods for scaling our approach to handle multiple animals. The other limitation of our work is the use of colour tags to recognize the animal and the human. As part of our future study, we intend to develop more advanced object recognition algorithms that take into account several other attributes, such as shape and size.

10 HERDING GLOSSARY

Herding: Controlling and manoeuvring the animal in the desired direction.

Flight Zone: Also known as the flight distance, the flight zone is the area around the animal which, when intruded upon, causes anxiety in the animal, and the animal responds by moving away. If this area is deeply penetrated, however, the animal panics and flees. Its size is dynamic and changes depending on several factors, such as the behaviour of the herder, weather conditions, and the animal’s state of excitement. The animal’s flight zone plays a key role in low-stress herding techniques (Smith, 1998; Grandin, 1989).

Animal Vision: Cattle have wide-angle vision and can see behind themselves without turning their heads. However, they have a small blind spot of about 15-30 degrees behind their rears. The handler avoids staying in the blind-spot area for too long, as doing so can cause the animal to panic.

Outrider Position: The outrider position is “the position the herder takes on or adjacent to the boundary of the flight zone.”

ACKNOWLEDGEMENTS

Thanks to Dr. Burt Smith, Dr. Haluk Ozemek and Dr. Winncy Du for their helpful suggestions on this paper.

REFERENCES

Blezinger, S. Producers can reduce stress to cattle by improving handling methods. http://www.cattletoday.com

Butler, Z., Corke, P., Peterson, R., Rus, D., 2004. Virtual fences for controlling cows. In IEEE International Conference on Robotics and Automation, volume 5, pages 4429-4436, May 2004.

Fischer, C., Buss, M., Schmidt, G., 1996. Human-robot interface for intelligent service robot assistance. In 5th IEEE International Workshop on Robot and Human Communication, pages 177-182, 11-14 Nov. 1996.

Gadzia, K., 1999. Back to the Future: The Environmental Benefits of Herding. In The Quivira Coalition, vol. 2, no. 3, March 1999.

Grandin, T., 1989. Behavioural Principles of Livestock Handling. In American Registry of Professional Animal Scientists, pages 1-11, Dec. 1989.

Lien, J-M., Bayazit, O.B., Sowell, R.T., Rodriguez, S., Amato, N.M., 2004. Shepherding Behaviours. In IEEE International Conference on Robotics and Automation, volume 4, pages 4159-4164, April 2004.

Lien, J-M., Rodriguez, S., Malric, J-P., Amato, N., 2004. Shepherding Behaviours with Multiple Shepherds. Technical Report TR04-003, Parasol Lab, Dept. of Computer Science, Texas A&M University, Sept. 2004.

Numazaki, S., Morshita, A., Umeki, N., Ishikawa, M., Doi, M., 1998. A kinetic and 3D image input device. In CHI 98, Conference on Human Factors in Computing Systems, pages 237-238, April 1998.

Potter, M. A., Meeden, L., Schultz, A. C., 2001. Heterogeneity in the Coevolved Behaviours of Mobile Robots: The Emergence of Specialists. In Proceedings of the 7th International Conference on Artificial Intelligence, pages 1337-1343, 2001.

Schultz, A. C., Grefenstette, J. J., Adams, W., 1996. Robot-shepherd: Learning complex behaviours. In Proceedings of the International Symposium on Robotics and Automation, ASME Press, pages 763-768, May 1996.

Smith, B., 1998. Moving ‘Em: A Guide to Low Stress Animal Handling. Graziers Hui, Hawaii, 1st edition.

Topp, E.A., Kragic, D., Jensfelt, P., Christensen, H.I., 2004. An interactive interface for service robots. In ICRA '04, IEEE International Conference on Robotics and Automation, volume 4, pages 3469-3474, April 26-May 1, 2004.

Vaughan, R. T., Sumpter, N., Henderson, J., Frost, A., Cameron, S., 2000. Experiments in automatic flock control. In Robotics and Autonomous Systems, pages 109-117, 2000.

Yoshizaki, M., Kuno, Y., Nakamura, A., 2001. Human-Robot Interface based on the Mutual Assistance between Speech and Vision. In Proceedings of the 2001 Workshop on Perceptive User Interfaces, ACM International Conference Proceeding Series, pages 1-4, November 2001.

ActivMedia Robotics, http://robots.activmedia.com
