Improved Effort Estimation of Heterogeneous Ensembles using Filter Feature Selection

Mohamed Hosni¹, Ali Idri¹ and Alain Abran²

¹ Software Project Management Research Team, ENSIAS, Mohammed V University, Rabat, Morocco

² Department of Software Engineering, École de Technologie Supérieure, Montréal, Canada

Keywords:

Software Development Effort Estimation, Machine Learning, Ensemble, Feature Selection, Filter.

Abstract:

Estimating the amount of effort required to develop a new software system remains one of the main activities in software project management. Thus, providing an accurate estimate is essential to adequately manage the software lifecycle. For that purpose, many paradigms have been proposed in the literature, among them Ensemble Effort Estimation (EEE). EEE consists of predicting the effort of a new project using more than one single predictor. This paper aims at improving the prediction accuracy of heterogeneous ensembles whose members use filter feature selection. Three groups of ensembles were constructed and evaluated: ensembles without feature selection, ensembles with one filter, and ensembles with different filters. The overall results suggest that using different filters leads to more accurate heterogeneous ensembles, and that the ensembles whose members use one filter were the worst ones.

1 INTRODUCTION

Software Development Effort Estimation (SDEE) aims at providing the amount of effort needed to develop a software system. The estimates provided play a decisive role in the success of project management, since they allow software managers to adequately allocate the resources needed to build the software system. Providing an accurate effort estimate has been the subject of many studies for more than four decades, and a large number of techniques have been proposed (Azzeh et al., 2015; Hosni et al., 2017c; Hosni et al., 2017a; Zhu, 2010; Hosni and Idri, 2017).

This paper deals with ensemble techniques. Ensemble techniques have been successfully applied to solve many classification and regression tasks (Zhu, 2010; Zhou, 2012). They consist of aggregating the outputs of a set of single techniques by means of a combination rule. Ensemble techniques have also been applied in SDEE and will be referred to here as EEE (Ensemble Effort Estimation). The literature distinguishes two types of ensembles (Idri et al., 2016c): (1) Homogeneous EEE, which is divided into two subtypes: ensembles that combine at least two configurations of the same single SDEE technique, and ensembles that combine one meta-model, such as Bagging, Boosting, Negative Correlation, or Random Subspace, with one single SDEE technique; and (2) Heterogeneous (HT) EEE, which combines at least two different single SDEE techniques.

The systematic review of Idri et al. (Idri et al., 2016a) documented that, in general, ensembles outperformed their members. However, some EEE studies have shown the opposite (Hosni et al., 2017a; Kocaguneli et al., 2009). It has been observed that the accuracy of an ensemble mainly depends on two criteria: the accuracy and the diversity of its members (Idri et al., 2016a; Idri et al., 2016b). In other words, the estimates of an ensemble are influenced by the estimates of its members, which should therefore be as accurate as possible. The members should also be diverse (i.e. make different errors on the same data point), so that each ensemble member can cancel the estimation errors made by the other members. Otherwise, an ensemble that integrates non-diverse members may produce a lower estimation accuracy than its members. Although there is no generally accepted formal definition of ensemble diversity, there are some mechanisms used to generate diversity among members, such as selecting input features (Zhou, 2012), which is the mechanism investigated in this paper.
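To make the error-cancellation argument concrete, the following sketch averages two hypothetical members on three hypothetical projects (all numbers are invented for illustration and are not taken from the study's datasets). In the diverse case the members err in opposite directions and their errors cancel; in the non-diverse case both members overestimate, and the averaged ensemble ends up less accurate than its better member.

```python
def mae(actual, predicted):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def average_ensemble(*members):
    """Combine member estimates point by point with the average rule."""
    return [sum(values) / len(values) for values in zip(*members)]


actual = [100, 200, 300]  # hypothetical actual efforts

# Diverse members: their errors have opposite signs on every project.
diverse_a = [110, 190, 320]
diverse_b = [90, 210, 280]

# Non-diverse members: both overestimate every project.
similar_a = [110, 210, 320]
similar_b = [115, 205, 315]

diverse_ens = average_ensemble(diverse_a, diverse_b)
similar_ens = average_ensemble(similar_a, similar_b)

print("diverse ensemble MAE:", mae(actual, diverse_ens))
print("similar members MAE:", mae(actual, similar_a), mae(actual, similar_b))
print("similar ensemble MAE:", mae(actual, similar_ens))
```

Here the diverse ensemble reaches an MAE of 0, whereas the non-diverse ensemble (MAE 12.5) is worse than `similar_b` on its own (MAE of about 11.67).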

Within this context, we previously carried out an empirical evaluation of heterogeneous ensembles whose members were K-nearest neighbor (KNN), Multilayer Perceptron (MLP), Support Vector Regression (SVR), and Decision Trees (DTs) (Hosni et al., 2017a).

Hosni, M., Idri, A. and Abran, A.

Improved Effort Estimation of Heterogeneous Ensembles using Filter Feature Selection.

DOI: 10.5220/0006929104050412

In Proceedings of the 13th International Conference on Software Technologies (ICSOFT 2018), pages 405-412

ISBN: 978-989-758-320-9

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

That study dealt with: (1) the accuracy of the ensemble members, by tuning their parameters using the grid search optimization technique, and (2) diversity, by using two filters: Correlation based feature selection (CFS) and RReliefF. The results obtained showed that the ensembles whose members used filter feature selection were less accurate than their constituents and than the ensembles without feature selection. This paper presents an improvement to the selection process of the heterogeneous ensemble members of (Hosni et al., 2017a); in particular, we deal with the diversity criterion by selecting members that use different filters, whereas in (Hosni et al., 2017a) the ensemble members used the same filter. Moreover, three filters instead of two were used in this study: CFS, RReliefF, and Linear Correlation (LC). The ensembles of this study were compared to the ensembles of (Hosni et al., 2017a) and to ensembles without feature selection in order to evaluate the impact of using different filters for ensemble members on the accuracy of the ensembles. The ensemble members of this study were the same as in (Hosni et al., 2017a): KNN, MLP, SVR, and DTs. As for combiners, three linear rules were used: average, median, and Inverse Ranked Weighted Mean (IRWM).
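The three combiners can be sketched as follows. Average and median are standard; for IRWM, this sketch assumes the convention commonly used in the EEE literature, where the members are ranked from best to worst (e.g. by their accuracy on the training data) and, with M members, the best-ranked estimate gets weight M, the next one M-1, and so on down to 1. The function names are hypothetical.

```python
from statistics import median


def combine_average(estimates):
    """Average rule: arithmetic mean of the member estimates."""
    return sum(estimates) / len(estimates)


def combine_median(estimates):
    """Median rule: middle value of the member estimates."""
    return median(estimates)


def combine_irwm(estimates_best_first):
    """Inverse Ranked Weighted Mean (assumed convention).

    `estimates_best_first` must be ordered from the best-ranked member to the
    worst-ranked one. With M members the weights are M, M-1, ..., 1.
    """
    m = len(estimates_best_first)
    weights = range(m, 0, -1)
    weighted = sum(w * e for w, e in zip(weights, estimates_best_first))
    return weighted / sum(range(1, m + 1))


# Four hypothetical member estimates (e.g. KNN, MLP, SVR, DTs), best first.
estimates = [120.0, 100.0, 150.0, 90.0]
print(combine_average(estimates))  # 115.0
print(combine_median(estimates))   # 110.0
print(combine_irwm(estimates))     # 117.0
```

IRWM pulls the combined estimate towards the better-ranked members, whereas the median ignores how far the extreme estimates lie from the centre.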

The main contributions of this paper are: dealing with the diversity criterion of ensemble members by means of three filters, and evaluating the impact of the diversity of ensemble members on the accuracy of EEE.

The rest of this paper is structured as follows: Section 2 presents an overview of the three filters as well as the four ML techniques used in this paper. Section 3 presents the findings of some related work in SDEE dealing with feature selection techniques for heterogeneous ensembles. Section 4 presents the empirical methodology pursued throughout this study. Section 5 presents and discusses the empirical results. Section 6 presents the conclusions of this empirical study.

2 BACKGROUND

2.1 Feature Selection Techniques

Feature selection aims at eliminating redundant and irrelevant features in order to reduce complexity and improve the performance of a learner. Several feature selection techniques have been proposed in the literature, and they can be grouped into three categories (Jovic et al., 2015): filter techniques, wrapper techniques, and embedded techniques.

This paper used filter techniques since they are less costly and are performed independently of the learner. Three filters were used: Correlation based feature selection (CFS), RReliefF, and Linear Correlation (LC). CFS belongs to the multivariate feature selection family: it assesses the full feature space and selects a subset of features. The other two techniques belong to the univariate feature selection family: they assess each attribute separately and provide a ranking of the features, which raises the issue of choosing how many features to keep. Selecting log₂(N) attributes, where N is the number of features available in the dataset, is the solution proposed in the literature and was adopted in this study (Hosni et al., 2017a; Khoshgoftaar et al., 2007).
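A minimal sketch of a univariate filter of the LC kind: each feature is scored by its absolute Pearson correlation with effort, the features are ranked, and the top log₂(N) are kept. The paper does not state how a non-integer log₂(N) is rounded; rounding up is an assumption here, as is the function name `lc_filter`.

```python
import math

import numpy as np


def lc_filter(X, y, names):
    """Rank features by |Pearson r| with the target and keep ceil(log2(N)).

    X: (n_projects, N) feature matrix; y: effort vector; names: feature names.
    Rounding log2(N) up is an assumption, not taken from the paper.
    """
    n_features = X.shape[1]
    k = max(1, math.ceil(math.log2(n_features)))
    scores = []
    for j in range(n_features):
        r = np.corrcoef(X[:, j], y)[0, 1]
        # A constant column yields NaN; treat it as carrying no information.
        scores.append(0.0 if np.isnan(r) else abs(r))
    ranked = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return [names[j] for j in ranked[:k]]


y = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
X = np.column_stack([
    [5, 1, 4, 2, 3],   # weakly (negatively) correlated with y
    [1, 2, 3, 4, 5],   # perfectly correlated
    [2, 2, 3, 2, 2],   # uncorrelated
    [10, 8, 6, 4, 2],  # perfectly anti-correlated
]).astype(float)
print(lc_filter(X, y, ["a", "b", "c", "d"]))
```

With N = 4 features, two are kept ("b" and "d"); the sign of the correlation is ignored because a strong negative relationship is just as informative.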

2.2 Four ML Techniques and Their Parameter Settings

This study uses the same four ML techniques investigated in (Hosni et al., 2017a): KNN, SVR, MLP, and DTs.

It is well-known that the performance accuracy

of an estimation technique depends on its parameters

settings (Song et al., 2013; Hosni et al., 2017b; Hosni

et al., 2017c). In (Hosni et al., 2017b), we conducted

an empirical evaluation in which two optimization

techniques, Grid Search and Particle Swarm Opti-

mization, were used to set the parameters of four tech-

niques KNN, MLP, SVR, and DTs. The results ob-

tained showed that tuning the parameters by means of

optimization techniques have a positive impact on the

accuracy of these estimation techniques. Therefore,

this paper uses a grid search optimization technique to

set the parameters values of the four ML techniques.

It consists of performing a preliminary round of ex-

ecutions in a predefined range of values and there-

after selecting the optimal configuration that allows

the technique to generate the best accuracy with re-

spect to a specific measure (Hosni et al., 2017b). This

paper uses Mean Absolute Errors (MAE) as the per-

formance measure and the configuration that leads the

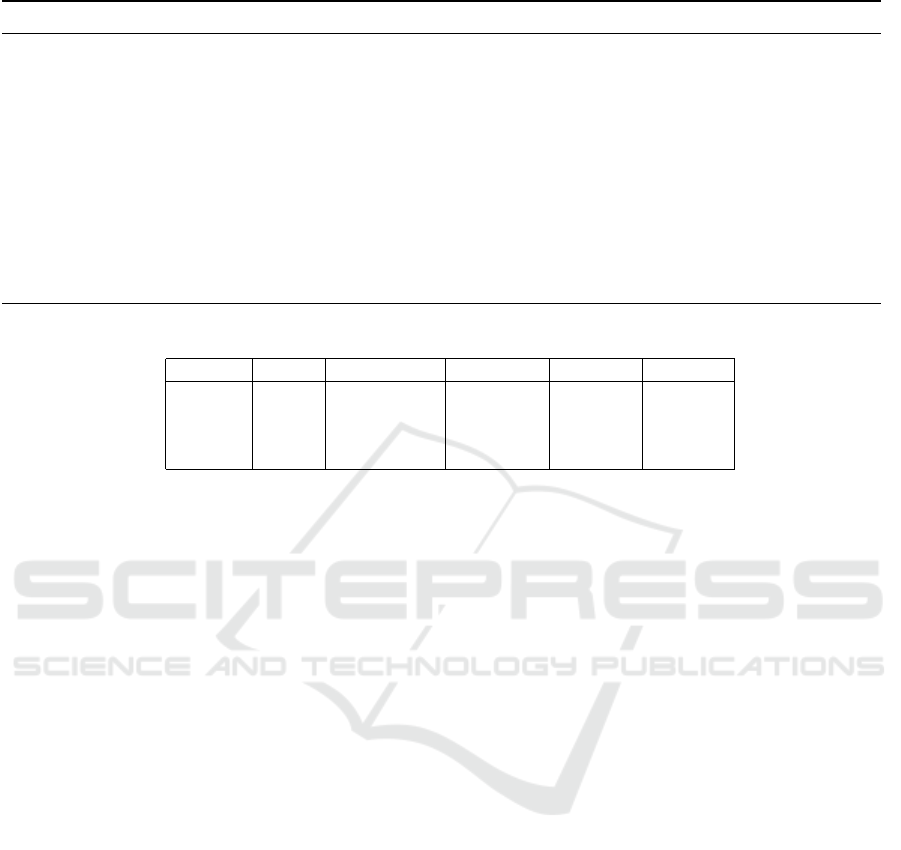

technique to generate less MAE was selected. Table 1

lists our predefined range of parameters values of the

four ML techniques.
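As an illustration of grid search with MAE as the selection criterion, the sketch below tunes only the K parameter of a plain KNN regressor over the K values of Table 1, scoring each configuration with the leave-one-out (Jackknife) MAE. Averaging the K neighbours without weights and the helper names are assumptions; the other techniques would be tuned the same way over their own parameter grids.

```python
import numpy as np


def knn_predict(X_train, y_train, x, k):
    """Unweighted mean effort of the k nearest projects (Euclidean distance)."""
    dists = np.sqrt(((X_train - x) ** 2).sum(axis=1))
    nearest = np.argsort(dists)[:k]
    return y_train[nearest].mean()


def loo_mae(X, y, k):
    """Leave-one-out MAE of KNN with a given k."""
    errors = []
    for i in range(len(y)):
        mask = np.arange(len(y)) != i
        pred = knn_predict(X[mask], y[mask], X[i], k)
        errors.append(abs(y[i] - pred))
    return float(np.mean(errors))


def grid_search_knn(X, y, k_values=(1, 2, 4, 8, 12)):
    """Evaluate every k in the grid and keep the one with the lowest MAE."""
    results = {k: loo_mae(X, y, k) for k in k_values if k < len(y)}
    best_k = min(results, key=results.get)
    return best_k, results


# Toy data: effort grows linearly with a single size feature.
X = np.arange(20, dtype=float).reshape(-1, 1)
y = 2.0 * X.ravel()
best_k, results = grid_search_knn(X, y)
print(best_k, results)
```

On this linear toy data, k = 2 wins because the two neighbours on either side of an interior project average out exactly to its effort.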

3 LITERATURE REVIEW

The aim of feature selection techniques is to select a set of features providing consistent information on the instances of the data. The selected features are then used as inputs of a technique performing a knowledge discovery task such as classification, prediction, or clustering. Within this context, many studies in SDEE have investigated the use of feature selection techniques, and the overall results have suggested that the use of feature selection improved the estimation accuracy of predictors (Hira and Gillies, 2015; Jovic et al., 2015). For instance, Idri and Cherradi (Idri and Cherradi, 2016) studied the impact of two wrappers, forward feature selection and backward feature selection, on the accuracy of the Fuzzy Analogy estimation technique: their results suggested that the two wrappers improved the accuracy of the Fuzzy Analogy technique.

Table 1: Parameter Values for Grid Search.

| Technique | Parameters with their search spaces |
|---|---|
| KNN | K = {1, 2, 4, 8, 12}; Similarity measure = {Euclidean distance} |
| SVR | Complexity = {5, 10, 50, 100, 150}; Extent to which deviations are tolerated = {0.0001, 0.001, 0.01, 0.1}; Kernel = RBF; Kernel parameter = {0.0001, 0.001, 0.01, 0.1} |
| MLP | Learning rate = {0.01, 0.02, 0.03, 0.04, 0.05}; Momentum = {0.1, 0.2, 0.3, 0.4, 0.5}; Kernel = RBF; Hidden layers = {3, 5, 9, 16} |
| DTs | Minimum instances per leaf = {1, 2, 3, 4, 5}; Minimum proportion of the data variance at a node = {0.0001, 0.001, 0.01, 0.1}; Max depth = {1, 2, 4, 6, 8} |

As for EEE, few papers have investigated the use of feature selection for ensembles. For instance, Minku and Yao (Minku and Yao, 2013) showed that the use of CFS feature selection fails to improve the accuracy of MLP homogeneous ensembles (Bagging + MLP) but improves Radial Basis Function ensembles (Bagging and Negative Correlation Learning). Hosni et al. (Hosni et al., 2017a) carried out an empirical evaluation of heterogeneous ensembles whose members were KNN, SVR, MLP, and DTs. The members used two filters: CFS and RReliefF. Each ensemble contained four members using the same filter and one of three linear rules (average, median, and IRWM). Thus, 9 heterogeneous ensembles were developed. These ensembles were assessed using Standardized Accuracy and Effect Size to check their reasonability; thereafter, the Scott-Knott statistical test was performed to check for significant differences between the ensembles. The best ensembles that share the same predictive capability were ranked based on 8 performance measures through Borda count. These experiments were performed over six datasets. The results obtained showed that the filter ensembles underperformed the ensembles without filters (Hosni et al., 2017a).

4 EMPIRICAL DESIGN

4.1 Performance Measures and Statistical Test

The first question raised when evaluating an SDEE technique is whether the technique is actually predicting or only guessing (Idri et al., 2017; Shepperd and MacDonell, 2012). Thus, the Standardized Accuracy measure (SA, Eq. (8)) was used to check the reasonability of any technique with respect to a baseline method, and the Effect Size test (Δ, Eq. (9)) was adopted to assess whether there is an improvement over the baseline method. The absolute values of Δ can be interpreted in terms of the categories proposed by Cohen (Cohen, 1992): small (≈ 0.2), medium (≈ 0.5), and large (≈ 0.8). Thereafter, a set of accuracy measures was used to assess the predictive capability of a given technique: Pred(0.25) (Eq. (3)), MAE (Eq. (4)), Mean Balanced Relative Error (MBRE, Eq. (5)), Mean Inverted Balanced Relative Error (MIBRE, Eq. (6)), and Logarithmic Standard Deviation (LSD, Eq. (7)). However, given that the mean is very sensitive to outliers, the medians of these measures were also used: median of absolute errors (MdAE), median of Balanced Relative Error (MdBRE), and median of Inverted Balanced Relative Error (MdIBRE). Note that the Pred(0.25) measure was used in this paper even though it is an MRE-based criterion: it was empirically shown in (Idri et al., 2017) that the possibility of it generating biased results is very low in SDEE datasets compared to other MRE-based criteria such as the Mean Magnitude of Relative Error (MMRE).


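$$AE_i = |e_i - \hat{e}_i| \tag{1}$$

$$MRE_i = \frac{AE_i}{e_i} \tag{2}$$

$$\mathrm{Pred}(0.25) = \frac{100}{N}\sum_{i=1}^{N}\begin{cases}1 & \text{if } MRE_i \le 0.25\\ 0 & \text{otherwise}\end{cases} \tag{3}$$

$$MAE = \frac{1}{N}\sum_{i=1}^{N} AE_i \tag{4}$$

$$MBRE = \frac{1}{N}\sum_{i=1}^{N}\frac{AE_i}{\min(e_i, \hat{e}_i)} \tag{5}$$

$$MIBRE = \frac{1}{N}\sum_{i=1}^{N}\frac{AE_i}{\max(e_i, \hat{e}_i)} \tag{6}$$

$$LSD = \sqrt{\frac{\sum_{i=1}^{N}\left(\lambda_i + \frac{s^2}{2}\right)^2}{N-1}} \tag{7}$$

$$SA = 1 - \frac{MAE_{p_i}}{MAE_{p_0}} \tag{8}$$

$$\Delta = \frac{MAE_{p_i} - MAE_{p_0}}{s_{p_0}} \tag{9}$$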

Where:

• $e_i$ and $\hat{e}_i$ are the actual and predicted effort for the $i$-th project.

• $MAE_{p_0}$ is the mean value of a large number of runs of random guessing. Random guessing is defined as follows: predict $\hat{e}_i$ for the target project $i$ by randomly sampling (with equal probability) over all the remaining $n-1$ cases, and take $\hat{e}_i = e_r$, where $r$ is drawn randomly from $1, \dots, n$ with $r \neq i$. This randomization procedure is robust since it makes no assumptions and requires no knowledge concerning a population.

• $MAE_{p_i}$ is the mean of absolute errors of prediction technique $i$.

• $s_{p_0}$ is the sample standard deviation of the random guessing strategy.

• $\lambda_i = \ln(e_i) - \ln(\hat{e}_i)$.

• $s^2$ is an estimator of the variance of the residuals $\lambda_i$.
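The measures of Eqs. (1)-(9) can be sketched in plain Python as follows. Several choices are assumptions rather than details given in the paper: s² in the LSD is taken as the sample variance of the residuals λᵢ, the random-guessing baseline is simulated with 1,000 runs and a fixed seed, and the reasonability check compares the technique's SA with the SA that the 5% quantile of the random-guessing MAEs would obtain, which is one reading of the "5% quantile of random guessing" criterion used in the construction and comparison steps. Since Eq. (9) is negative for techniques that beat the baseline, the absolute value of Δ is compared with 0.8. Function names are hypothetical.

```python
import math
import random
import statistics


def accuracy_measures(actual, predicted):
    """The 8 accuracy measures used in the paper (Eqs. (1)-(7) and medians)."""
    n = len(actual)
    ae = [abs(e - p) for e, p in zip(actual, predicted)]               # Eq. (1)
    mre = [a / e for a, e in zip(ae, actual)]                           # Eq. (2)
    bre = [a / min(e, p) for a, e, p in zip(ae, actual, predicted)]
    ibre = [a / max(e, p) for a, e, p in zip(ae, actual, predicted)]
    lam = [math.log(e) - math.log(p) for e, p in zip(actual, predicted)]
    s2 = statistics.variance(lam)  # assumed estimator of Var(lambda_i)
    return {
        "Pred(0.25)": 100.0 * sum(m <= 0.25 for m in mre) / n,         # Eq. (3)
        "MAE": sum(ae) / n,                                             # Eq. (4)
        "MdAE": statistics.median(ae),
        "MBRE": sum(bre) / n,                                           # Eq. (5)
        "MdBRE": statistics.median(bre),
        "MIBRE": sum(ibre) / n,                                         # Eq. (6)
        "MdIBRE": statistics.median(ibre),
        "LSD": math.sqrt(sum((l + s2 / 2) ** 2 for l in lam) / (n - 1)),  # Eq. (7)
    }


def random_guessing(actual, runs=1000, seed=0):
    """MAEs of random guessing: predict project i with e_r, r drawn != i."""
    rng = random.Random(seed)
    n = len(actual)
    maes = []
    for _ in range(runs):
        total = 0.0
        for i in range(n):
            r = rng.choice([j for j in range(n) if j != i])
            total += abs(actual[i] - actual[r])
        maes.append(total / n)
    return maes


def reasonability(mae_pi, random_maes):
    """SA (Eq. (8)), Delta (Eq. (9)) and the reasonability verdict."""
    mae_p0 = statistics.mean(random_maes)
    s_p0 = statistics.stdev(random_maes)
    q05 = sorted(random_maes)[int(0.05 * len(random_maes))]
    sa = 1 - mae_pi / mae_p0
    delta = (mae_pi - mae_p0) / s_p0
    sa_5 = 1 - q05 / mae_p0  # SA of the 5% quantile of random guessing
    return sa, delta, sa > sa_5 and abs(delta) > 0.8


actual = [100.0, 200.0, 300.0, 400.0]
predicted = [130.0, 200.0, 300.0, 400.0]
m = accuracy_measures(actual, predicted)
sa, delta, ok = reasonability(m["MAE"], random_guessing(actual))
print(m["MAE"], m["Pred(0.25)"], round(sa, 3), ok)
```

In the usage example only the first project misses the 25% threshold (MRE = 0.3), so Pred(0.25) is 75 and the MAE is 7.5.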

To check the significance of the differences between techniques, the Scott-Knott (SK) test was used (Scott and Knott, 1974): the SK test performs multiple comparisons and takes the type I error correction into account.
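A compact sketch of the Scott-Knott procedure, assuming a one-way layout in which every technique contributes the same number (at least two) of already normality-transformed absolute errors: the group means are sorted, the split maximising the between-group sum of squares B0 is found, and the split is kept when the statistic λ = π/(2(π-2))·B0/σ̂0² exceeds the χ² critical value with k/(π-2) degrees of freedom; the procedure then recurses on each side. To stay dependency-free, the χ² quantile uses the Wilson-Hilferty approximation, which is a substitution for an exact quantile. All names are hypothetical.

```python
import math
from statistics import NormalDist, mean


def chi2_ppf_approx(p, df):
    """Wilson-Hilferty approximation of the chi-square quantile."""
    z = NormalDist().inv_cdf(p)
    h = 2.0 / (9.0 * df)
    return df * (1.0 - h + z * math.sqrt(h)) ** 3


def scott_knott(groups, alpha=0.05):
    """Cluster named samples {name: [values]} into non-overlapping groups.

    Assumes equal sample sizes r >= 2 (one-way layout).
    Returns clusters ordered by increasing mean (lowest error first).
    """
    r = len(next(iter(groups.values())))
    k_total = len(groups)
    means = {name: mean(vals) for name, vals in groups.items()}
    # Pooled within-group variance (MSE), its degrees of freedom v,
    # and the resulting variance of a group mean.
    sse = sum((x - means[n]) ** 2 for n, vals in groups.items() for x in vals)
    v = k_total * (r - 1)
    s2_mean = (sse / v) / r

    def split(names):
        k = len(names)
        if k < 2:
            return [names]
        ys = [means[n] for n in names]
        grand = sum(ys) / k
        best_b0, best_cut = -1.0, None
        for cut in range(1, k):
            t1, t2 = sum(ys[:cut]), sum(ys[cut:])
            b0 = t1 ** 2 / cut + t2 ** 2 / (k - cut) - (t1 + t2) ** 2 / k
            if b0 > best_b0:
                best_b0, best_cut = b0, cut
        sigma2 = (sum((y - grand) ** 2 for y in ys) + v * s2_mean) / (k + v)
        if sigma2 == 0:
            return [names]
        lam = math.pi / (2 * (math.pi - 2)) * best_b0 / sigma2
        if lam > chi2_ppf_approx(1 - alpha, k / (math.pi - 2)):
            return split(names[:best_cut]) + split(names[best_cut:])
        return [names]

    return split(sorted(groups, key=means.get))


base = [1.0, 1.1, 0.9, 1.05, 0.95]
groups = {
    "A": base,
    "B": [x + 0.02 for x in base],
    "C": [x + 9.0 for x in base],
    "D": [x + 9.02 for x in base],
}
clusters = scott_knott(groups)
print(clusters)
```

The first cluster returned is the one with the lowest mean error, which corresponds to the "best cluster" retained in the construction and comparison steps.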

Concerning the evaluation method, the Jackknife method (i.e. leave-one-out cross-validation) was adopted in this paper.
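A minimal sketch of the Jackknife (leave-one-out) evaluation: each project is predicted by a model fitted on all the other projects, which yields one absolute error per project for the measures defined above. `fit_predict` is a hypothetical callback standing in for any of the four tuned techniques; the toy predictor below simply returns the mean effort of the training projects.

```python
from statistics import mean


def jackknife_predictions(X, y, fit_predict):
    """Leave-one-out: predict project i from a model trained on the others."""
    predictions = []
    for i in range(len(y)):
        X_train = [x for j, x in enumerate(X) if j != i]
        y_train = [v for j, v in enumerate(y) if j != i]
        predictions.append(fit_predict(X_train, y_train, X[i]))
    return predictions


def mean_effort_model(X_train, y_train, x_new):
    """Toy predictor: ignores the features and returns the mean effort."""
    return mean(y_train)


X = [[1.0], [2.0], [3.0], [4.0]]
y = [1.0, 2.0, 3.0, 4.0]
preds = jackknife_predictions(X, y, mean_effort_model)
mae = mean(abs(a - p) for a, p in zip(y, preds))
print(preds, mae)
```

Because the held-out project never contributes to its own prediction, every project is used exactly once for testing, which suits the small SDEE datasets used here.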

4.2 Ensembles Construction

This paper evaluates three groups of heterogeneous ensembles whose members are KNN, MLP, SVR, and DTs. These ensembles differ in the way they were constructed. The members were selected according to their accuracy values. The three groups are defined as follows:

• Ensembles whose members use different filters: ENF.

• Ensembles whose members do not use filter feature selection: E0F.

• Ensembles whose members use one filter (i.e. the ensembles of (Hosni et al., 2017a)): E1F.

Methodology to construct the ensembles: the steps followed to select the members of the ENF ensembles are described next. Note that before conducting the experiments, the three filters were applied over the six datasets to select the relevant features that feed the four ML techniques.

The steps followed to construct the ENF ensembles are as follows:

Step a.1. Build the ML techniques using the three filters over the six datasets. The parameter settings of each technique were determined using grid search with the value ranges of Table 1. The best variant of each technique with each filter (i.e. three variants per technique) was selected over each dataset.

Step a.2. Evaluate the reasonability of the three variants of each technique over each dataset in terms of SA and Δ, and select the ones achieving an SA higher than that of the 5% quantile of random guessing and showing a large improvement in terms of Effect Size (Δ > 0.8).

Step a.3. Perform the SK test based on the AEs of the variants of each technique retained in Step a.2 over each dataset. The aim of the SK test is to cluster the selected techniques and to identify the best ones (i.e. techniques that share the same predictive capability). Note that before conducting the SK test, the AEs of all selected techniques were checked for normality using the Kolmogorov-Smirnov statistical test; this pre-step is necessary since the SK test requires its inputs to be normally distributed. The Box-Cox transformation was performed in order to make the AEs follow a normal distribution.

Step a.4. Rank the members of the best cluster of each technique over each dataset by means of Borda count using 8 accuracy measures: MAE, MdAE, MIBRE, MdIBRE, MBRE, MdBRE, Pred(0.25), and LSD. The Borda voting system takes into consideration the rank provided by each accuracy criterion. The rationale behind using many accuracy measures is that prior studies in SDEE have shown that the selection of the best estimation technique depends on which accuracy indicator is used (Azzeh et al., 2015).

Step a.5. Select the best variant of each technique to be used as a base technique of the ENF ensembles. Therefore, for each dataset, three heterogeneous ensembles were defined whose members are the best variants of KNN, MLP, SVR, and DTs, each with its associated filter. Each of the three ensembles uses one of the three linear combiners: average, median, and IRWM.
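The normality pre-step of Step a.3 can be sketched with SciPy's `scipy.stats.boxcox` and `scipy.stats.kstest`. Two details are assumptions, not taken from the paper: a small positive shift is added because Box-Cox requires strictly positive inputs while an absolute error can be zero, and the normal reference distribution uses the sample mean and standard deviation of the transformed errors (estimating them from the same data makes the plain KS p-value conservative). The function name is hypothetical.

```python
import numpy as np
from scipy import stats


def normalise_errors(ae, shift=1e-6, alpha=0.05):
    """Box-Cox transform absolute errors and check normality with KS.

    Returns the transformed errors, the fitted Box-Cox lambda and whether the
    KS test fails to reject normality at level `alpha`.
    """
    ae = np.asarray(ae, dtype=float) + shift  # Box-Cox needs values > 0
    transformed, lmbda = stats.boxcox(ae)     # lambda fitted by max likelihood
    ks = stats.kstest(
        transformed, "norm", args=(transformed.mean(), transformed.std(ddof=1))
    )
    return transformed, lmbda, ks.pvalue > alpha


rng = np.random.default_rng(42)
ae = rng.lognormal(mean=3.0, sigma=1.0, size=200)  # right-skewed, like raw AEs
transformed, lmbda, looks_normal = normalise_errors(ae)
print(round(float(lmbda), 2), looks_normal)
```

For log-normally distributed errors, the fitted lambda should come out close to 0, i.e. close to a plain log transform.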

The steps followed to build the E1F and E0F ensembles are as follows:

Step b.1. Return the best variants of the four ML techniques (i.e. those having the lowest MAE) using grid search with the ranges of Table 1. This step is performed for each pair (technique, filter) over each dataset (E1F). It is also performed for each technique without feature selection (E0F).

Step b.2. Construct the E1F ensembles whose members are the best variants of the four techniques using the same filter. The three E1F ensembles (i.e. LC, CFS, and R) each use the three linear combiners: average, median, and IRWM. Construct the E0F ensembles whose members are the best variants of the four techniques without feature selection. The E0F ensembles also use the three linear combiners: average, median, and IRWM.
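The difference between ENF and E1F member selection can be sketched as follows. The Borda points in the usage example are hypothetical placeholders, not results from the paper; they are chosen only so that the selected ENF members match the Albrecht column of Table 3. Labels follow the {filter}{technique} naming used in Table 3, and the function names are hypothetical.

```python
TECHNIQUES = ("DT", "Knn", "MLP", "SVR")
FILTERS = ("CFS", "R", "LC")


def enf_members(points):
    """ENF: for each technique keep its best-ranked variant, whatever the filter.

    `points[(filter, technique)]` holds the Borda points of that variant
    (higher is better).
    """
    return [max(FILTERS, key=lambda f: points[(f, t)]) + t for t in TECHNIQUES]


def e1f_members(filter_name):
    """E1F: the four techniques all use the same filter."""
    return [filter_name + t for t in TECHNIQUES]


# Hypothetical Borda points: LC wins for DT and Knn, R wins for MLP and SVR.
points = {(f, t): 0 for f in FILTERS for t in TECHNIQUES}
points.update({("LC", "DT"): 5, ("LC", "Knn"): 4, ("R", "MLP"): 6, ("R", "SVR"): 3})

print(enf_members(points))  # ['LCDT', 'LCKnn', 'RMLP', 'RSVR']
print([e1f_members(f) for f in FILTERS])
```

An ENF ensemble can therefore mix feature subsets within one ensemble, whereas every E1F ensemble feeds the same subset to all four members.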

Comparison methodology: to compare the ensembles (i.e. ENF, E0F, and E1F), we used the same methodology as in (Hosni et al., 2017a), which consists of three steps:

Step c.1. Assess the accuracy of the ensembles with regard to SA and Effect Size, and select the ones that achieve SA values higher than that of the 5% quantile of random guessing and show a large improvement over random guessing in terms of Effect Size (Δ > 0.8).

Step c.2. Cluster the selected ensembles through the SK test in order to select the ones that have similar predictive capability.

Step c.3. Rank the ensembles of the best cluster in each dataset according to the 8 accuracy measures using Borda count.
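The Borda count used for the final rankings can be sketched as follows: for each measure the candidates are ranked, the best receives m-1 points, the next m-2, and so on, and the candidates are ordered by total points. Among the 8 measures, Pred(0.25) is the only one where higher is better. Tie handling is not described in the paper; here tied candidates simply keep their input order, which is an assumption. The usage values are hypothetical and only three measures are used for brevity.

```python
LOWER_IS_BETTER = {"MAE", "MdAE", "MBRE", "MdBRE", "MIBRE", "MdIBRE", "LSD"}


def borda_count(scores):
    """scores[measure][candidate] -> value; returns (ranking, points)."""
    candidates = list(next(iter(scores.values())))
    points = {c: 0 for c in candidates}
    m = len(candidates)
    for measure, values in scores.items():
        higher_better = measure not in LOWER_IS_BETTER  # e.g. Pred(0.25)
        ordered = sorted(candidates, key=values.get, reverse=higher_better)
        for position, candidate in enumerate(ordered):
            points[candidate] += m - 1 - position
    ranking = sorted(candidates, key=points.get, reverse=True)
    return ranking, points


scores = {
    "MAE": {"HTIR": 10.0, "ODIR": 20.0, "CFSIR": 30.0},
    "Pred(0.25)": {"HTIR": 50.0, "ODIR": 60.0, "CFSIR": 40.0},
    "MdAE": {"HTIR": 5.0, "ODIR": 6.0, "CFSIR": 7.0},
}
ranking, points = borda_count(scores)
print(ranking, points)  # ['HTIR', 'ODIR', 'CFSIR'] {'HTIR': 5, 'ODIR': 4, 'CFSIR': 0}
```

ODIR wins on Pred(0.25) alone, but HTIR's first places on the two error measures give it the highest total.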

4.3 Abbreviation Adopted

We abbreviate the names of single and ensemble techniques as follows:

• Single techniques: {Feature Technique}{Single Technique}.

• ENF: HT{Rule}.

• E0F: OD{Rule}.

• E1F: {Feature Technique}{Rule}.

where:

• Feature Technique: CFS, R, and LC denote Correlation based feature selection, RReliefF, and Linear Correlation, respectively.

• Single Technique: Knn, MLP, SVR, and DTs.

• Rule: AV, ME, and IR denote the average, median, and inverse ranked weighted mean, respectively.

Examples:

HTAV denotes the heterogeneous ensemble whose members are the four ML techniques using different feature selection techniques, with the average as combiner.

CFSIR denotes the ensemble whose members are the four ML techniques using the CFS feature selection technique, with IR as combiner.
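Applying this naming scheme to every rule and filter combination gives the full set of heterogeneous ensembles evaluated per dataset; the short sketch below (function name hypothetical) enumerates them and confirms the count of 15 used later (3 ENF + 9 E1F + 3 E0F).

```python
RULES = ("AV", "ME", "IR")
FILTERS = ("CFS", "R", "LC")


def ensemble_names():
    """Enumerate ensemble labels following the abbreviation scheme."""
    enf = ["HT" + rule for rule in RULES]                  # different filters
    e1f = [f + rule for f in FILTERS for rule in RULES]    # one filter
    e0f = ["OD" + rule for rule in RULES]                  # no feature selection
    return {"ENF": enf, "E1F": e1f, "E0F": e0f}


names = ensemble_names()
print(names["E1F"])  # ['CFSAV', 'CFSME', 'CFSIR', 'RAV', 'RME', 'RIR', 'LCAV', 'LCME', 'LCIR']
print(sum(len(group) for group in names.values()))  # 15
```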

4.4 Datasets Description

Six well-known datasets were selected to assess the accuracy of the single and ensemble techniques. These datasets are diverse in terms of size and number of features, and they were collected from different organizations around the world and from different software application domains. Five datasets, namely Albrecht, China, COCOMO81, Desharnais, and Miyazaki, were selected from the PROMISE repository, while the sixth dataset came from the ISBSG repository (Release 8).

Note that cleaning and instance selection steps were performed on the ISBSG dataset in order to select high-quality projects. This preprocessing stage resulted in a dataset of 148 projects described by nine attributes.

5 EMPIRICAL RESULTS

5.1 Feature Selection Step

Table 2 lists the features selected for each dataset. While no two feature selection methods chose the same subset of features, there is at least one common attribute selected by the three filters, with the exception of the China dataset, in which the R and LC filters chose different features. Recall that the number of features is the same for LC and R (i.e. log₂(N), where N is the number of features) since they are both univariate filter techniques. We can conclude that the use of different filters results in different subsets of features, which therefore affect the accuracy of the single and ensemble techniques. The common features between the three filter techniques are indicated in bold in Table 2.

5.2 Selection of Best Single Techniques

This subsection presents the evaluation results of the four single techniques using the three filters over the six datasets. The best variant of each single technique with each filter was selected as a candidate base technique for the ENF heterogeneous ensembles. Therefore, for each dataset, 12 best variants were selected (12 = 4 single techniques × 3 filters).

Step a.1 aims at building the four single ML techniques using the three filters. Thus, several experiments were performed by varying the parameter settings of each technique over each dataset according to Table 1. For each dataset and each single technique using a filter, we retained the variant having the lowest MAE value (i.e. the best variant). Next, Step a.2 consists of evaluating the reasonability of the best single techniques of Step a.1 over each dataset by means of SA and Effect Size, in order to select the ones that will participate in further experiments. We selected the best single techniques having an SA value higher than that of the 5% quantile of random guessing and a large improvement over random guessing (Δ > 0.8). The overall results suggest that the SA values of all single techniques are greater than that of the 5% quantile of random guessing; thus, all techniques provide reasonable predictions and were selected for further experiments. The evaluation results are not presented due to page limits and can be obtained upon request by email to the corresponding authors of this paper.

Thereafter, Step a.3 clusters the three variants of each single technique over each dataset through the SK test using the AE criterion. The purpose of this step is to select the variants that have the same predictive capability and do not differ significantly from one another. Afterwards, the variants that belong to the best cluster were selected to participate in further experiments. In fact, the SK test identified one cluster 14 times (i.e. the three variants of the technique have the same predictive capability), two clusters 9 times, and three clusters once (i.e. the KNN technique on the COCOMO81 dataset).

The selected techniques of Step a.3 were thereafter ranked using Borda count with 8 performance measures. Based on the ranking obtained through the Borda count voting system, no filter outperformed the others in all situations. For example, the LC filter was the best for DTs, since LC was ranked first in five datasets; however, the R filter was the best for SVR (ranked in the first position three times).

The best variant of each technique over each dataset was therefore selected as a member of the proposed ENF heterogeneous ensembles. Table 3 lists the ENF ensemble members for each dataset. We observe that each dataset has an ensemble with different filters (e.g. the ensemble of the Albrecht dataset uses LC and R). This suggests that the performance of a filter depends on the characteristics of each dataset (size, number of features, etc.). Hence, the members of ENF ensembles use different feature subsets, contrary to those of E1F ensembles, which can help satisfy the diversity criterion.

5.3 Ensembles Evaluation

This subsection presents the evaluation of the ENF, E1F, and E0F heterogeneous ensembles according to Steps c.1-c.3. In total, we have 15 ensembles for each dataset (15 = 3 ENF ensembles (1 ensemble × 3 combiners) + 9 E1F ensembles (3 filters × 3 combiners) + 3 E0F ensembles (1 ensemble × 3 combiners)). Step c.1 evaluates the SA and Δ values of the 15 heterogeneous ensembles over the six datasets in order to retain the ensembles that achieve SA values higher than that of the 5% quantile of random guessing and show a large improvement over random guessing (Δ > 0.8). The results obtained show that all the ensembles generate better SA values than the 5% quantile of random guessing and show large Δ values; therefore, all the ensembles were selected for the next experiments. The main findings are:

• No single ensemble achieved the highest reasonability across all datasets.

• The E0F ensembles achieved the highest SA values in four datasets: Albrecht, China, Desharnais, and Miyazaki.

• The ENF ensembles generated the highest SA values in two datasets: COCOMO81 and ISBSG.

• None of the E1F ensembles was ranked in the first position in any dataset.

• The least reasonable ensembles were the E1F ensembles.

• The IR and ME rules led the ensembles to generate better SA values.


Table 2: Feature Selection Results: Common Selected Features are in Bold for Each Dataset.

| Dataset | CFS | LC | RReliefF |
|---|---|---|---|
| Albrecht | **Output**, Inquiry, **RawFPcounts** | **Output**, file, **RawFPcounts**, AdjFP | **Output**, Inquiry, **RawFPcounts**, AdjFP |
| China | Output, Enquiry, Interface, Added, Resource, Duration | AFP, Input, File, Added | Output, Enquiry, File, Duration |
| COCOMO81 | DATA, **TIME**, STOR, TURN, VEXP, **KDSI** | DATA, **TIME**, STOR, TURN, **KDSI** | **TIME**, VIRTmajeur, PCAP, VEXP, **KDSI** |
| Desharnais | TeamExp, ManagerExp, YearEnd, Length, Adjustment, **PointsAjust** | Length, Transactions, PointsNonAdjust, **PointsAjust** | ManagerExp, Transactions, PointsNonAdjust, **PointsAjust** |
| ISBSG | VAF, MTS, UBCU, **FC** | VAF, MTS, IC, **FC** | IC, OC, EC, **FC** |
| Miyazaki | **KLOC**, SCRN, FORM, FILE, EFORM, EFILE | **KLOC**, SCRN, FILE, EFILE | **KLOC**, FORM, ESCRN, EFORM |

Table 3: Members of the ENF Heterogeneous Ensembles.

| Albrecht | China | COCOMO81 | Desharnais | ISBSG | Miyazaki |
|---|---|---|---|---|---|
| LCDT | LCDT | RDT | LCDT | LCDT | LCDT |
| LCKnn | RKnn | CFSKnn | LCKnn | CFSKnn | LCKnn |
| RMLP | RMLP | LCMLP | LCMLP | CFSMLP | CFSMLP |
| RSVR | RSVR | RSVR | CFSSVR | LCSVR | LCSVR |

Step c.2 clusters the 15 heterogeneous ensembles through the SK test in order to select the best ones, i.e. those that share similar predictive capability. The SK test identified 4 clusters in the COCOMO81 dataset, 2 clusters in the ISBSG and Miyazaki datasets, and one cluster in the three remaining datasets. We notice that the RReliefF ensembles were not selected by the SK test in two datasets: ISBSG and Miyazaki. Similarly, the CFS ensembles were not selected in the best cluster in the COCOMO81 dataset. The results of the SK test are not presented due to page limits and can be obtained upon request by email to the corresponding authors of this paper.

The results obtained from the ranking provided by Borda count are:

• The ENF ensembles, regardless of the combination rule, outperformed the E1F and E0F ensembles in 5 out of 6 datasets.

• None of the ENF ensembles was ranked in the last positions in any dataset.

• The three E0F ensembles were ranked in the first three positions in the Desharnais dataset.

• Most of the E1F ensembles were generally ranked in the last positions in all datasets.

• The IR combiner provided the most accurate ensembles in 4 out of 6 datasets, followed by ME in 2 out of 6 datasets. Note that the AV combiner did not occur in the first position in any dataset.

Note that the table presenting the final ranking is not included due to page limits and can be obtained upon request by email to the corresponding authors of this paper.

6 CONCLUSION AND FUTURE WORK

The objective of this study was to evaluate the impact of the diversity criterion on the accuracy of heterogeneous ensembles. The ensemble members were four ML techniques (KNN, MLP, SVR, and DTs), and the combination rules were three linear combiners (average, median, and IRWM). In general, there are three sources of diversity: sampling the data, training the same technique with different configurations on the same sample, and using different features as inputs of a technique.

This study investigated filter feature selection as a source of diversity among ensemble members. To do so, we improved the selection process of the ensemble members used in (Hosni et al., 2017a) by allowing the use of different filters in the same ensemble. This led the single techniques of an ensemble to use different subsets of features. To assess the impact of this strategy, we evaluated and compared the accuracy of three groups of ensembles: ENF (members of an ensemble use different filters), E1F (members of an ensemble use one filter), and E0F (members of an ensemble do not use feature selection).

The results in terms of SA suggest that all the ENF, E1F, and E0F ensembles were reasonable and generated better results than random guessing. Moreover, no single one of the 15 ensembles was ranked in the first position across all datasets. The E0F ensembles were more reasonable than the other ensembles in four datasets, and the ENF ensembles were the best in two datasets (COCOMO81 and ISBSG). However, the E1F ensembles were the least reasonable in all datasets.

In contrast, the accuracy results in terms of the 8 performance measures suggest that the ENF ensembles, in particular with the IR or ME combiners, outperformed the E1F and E0F ensembles in 5 out of 6 datasets. This implies that having ensemble members use different feature subsets can lead to more accurate estimates than when the members use the same feature subset or all the available features. In fact, the success of the ENF ensembles can mainly be attributed to their members being more diverse, i.e. generating more different estimates at the same data point, than the members of E1F or E0F. Moreover, the E0F ensembles generated slightly better estimates than the E1F ensembles. Therefore, we conclude that ensembles without feature selection were better, and easier to construct, than ensembles with one filter.

Ongoing work will investigate the impact of other feature selection techniques, including filters and wrappers, on the accuracy of homogeneous and heterogeneous ensembles.

REFERENCES

Azzeh, M., Nassif, A. B., and Minku, L. L. (2015). An empirical evaluation of ensemble adjustment methods for analogy-based effort estimation. The Journal of Systems and Software, 103:36–52.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112(1):155–159.

Hira, Z. M. and Gillies, D. F. (2015). A review of feature selection and feature extraction methods applied on microarray data. Advances in Bioinformatics, 2015(1).

Hosni, M. and Idri, A. (2017). Software effort estimation using classical analogy ensembles based on random subspace. In Proceedings of the ACM Symposium on Applied Computing, volume Part F1280.

Hosni, M., Idri, A., and Abran, A. (2017a). Investigating heterogeneous ensembles with filter feature selection for software effort estimation. In ACM International Conference Proceeding Series, volume Part F1319.

Hosni, M., Idri, A., Abran, A., and Nassif, A. B. (2017b). On the value of parameter tuning in heterogeneous ensembles effort estimation.

Hosni, M., Idri, A., Nassif, A., and Abran, A. (2017c). Heterogeneous Ensembles for Software Development Effort Estimation. In Proceedings - 2016 3rd International Conference on Soft Computing and Machine Intelligence, ISCMI 2016.

Idri, A., Abnane, I., and Abran, A. (2017). Evaluating Pred(p) and standardized accuracy criteria in software development effort estimation. Journal of Software: Evolution and Process, e1925.

Idri, A. and Cherradi, S. (2016). Improving Effort Estimation of Fuzzy Analogy using Feature Subset Selection. In Computational Intelligence (SSCI), 2016 IEEE Symposium Series on.

Idri, A., Hosni, M., and Abran, A. (2016a). Improved Estimation of Software Development Effort Using Classical and Fuzzy Analogy Ensembles. Applied Soft Computing.

Idri, A., Hosni, M., and Abran, A. (2016b). Systematic Mapping Study of Ensemble Effort Estimation. In Proceedings of the 11th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE), pages 132–139.

Idri, A., Hosni, M., and Abran, A. (2016c). Systematic Literature Review of Ensemble Effort Estimation. Journal of Systems and Software, 118:151–175.

Jovic, A., Brkic, K., and Bogunovic, N. (2015). A review of feature selection methods with applications. In 2015 38th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), pages 1200–1205.

Khoshgoftaar, T., Golawala, M., and Hulse, J. V. (2007). An Empirical Study of Learning from Imbalanced Data Using Random Forest. In 19th IEEE International Conference on Tools with Artificial Intelligence (ICTAI 2007), volume 2, pages 310–317.

Kocaguneli, E., Kultur, Y., and Bener, A. (2009). Combining Multiple Learners Induced on Multiple Datasets for Software Effort Prediction. In Proceedings of International Symposium on Software Reliability Engineering.

Minku, L. L. and Yao, X. (2013). Ensembles and locality: Insight on improving software effort estimation. Information and Software Technology, 55(8):1512–1528.

Scott, A. J. and Knott, M. (1974). A Cluster Analysis Method for Grouping Means in the Analysis of Variance. Biometrics, 30(3):507–512.

Shepperd, M. and MacDonell, S. (2012). Evaluating prediction systems in software project estimation. Information and Software Technology, 54(8):820–827.

Song, L., Minku, L. L., and Yao, X. (2013). The impact of parameter tuning on software effort estimation using learning machines. In Proceedings of the 9th International Conference on Predictive Models in Software Engineering.

Zhou, Z.-H. (2012). Ensemble Methods. CRC Press.

Zhu, D. (2010). A hybrid approach for efficient ensembles. Decision Support Systems, 48(3):480–487.

ICSOFT 2018 - 13th International Conference on Software Technologies
