Visual Inspection of Storm-Water Pipe Systems using Deep Convolutional Neural Networks

Ruwan Tennakoon, Reza Hoseinnezhad, Huu Tran and Alireza Bab-Hadiashar

School of Engineering, RMIT University, Melbourne, Australia

Keywords:

Storm-Water Pipe Inspection, Automated Infrastructure Inspection, Deep Convolutional Neural Networks.

Abstract:

Condition monitoring of storm-water pipe systems is carried out regularly using semi-automated processes. Semi-automated inspection is time consuming and expensive, and produces varying and relatively unreliable results due to operator fatigue and inexperience. This paper proposes an innovative method to automate the storm-water pipe inspection and condition assessment process, which employs a computer vision algorithm based on a deep neural network architecture to classify the defect types automatically. With the proposed method, the operator only needs to guide the robot through each pipe and no longer needs to be an expert. The results obtained on a CCTV video dataset of storm-water pipes show that deep neural network architectures trained with data augmentation and transfer learning are capable of achieving high accuracy in identifying the defect types.

1 INTRODUCTION

Condition monitoring of storm-water pipe systems is often carried out to provide an understanding of the current status of the storm-water system, which enables the prediction of future deterioration of the pipes and facilitates investment planning. This information can also be used to allocate maintenance and repair resources efficiently.

An on-site inspection with closed-circuit television (CCTV) is currently the most common and commercially available method for condition assessment of storm-water pipes. The typical inspection process can be described as follows. A certified technician guides a CCTV camera mounted on a robot that travels inside a pipe segment. The technician must visually detect the defects in the pipe segment by observing the video feed. Once a defect is detected, the technician manually rotates and zooms the camera to gain a better understanding of the defect and adds the information relating to that defect (i.e. defect type, defect parameters) to the video, together with additional information such as pipe diameter, location and inspection date. The recorded video is then used for further analysis, including discrete condition rating, deterioration modelling and planning (Tran et al., 2010).

The CCTV inspection described above is considered semi-automated. It is time consuming and expensive, and in some cases produces varying and relatively unreliable results due to operator fatigue and inexperience. In addition, training a professional technician to classify all the defect types, estimate defect parameters and conduct inspections is costly. Due to these limitations of the manual inspection process, only around ten percent of the storm-water pipe system in Melbourne, Australia can be inspected given the limited budget. Increasing the portion of inspected pipes would increase the reliability of the network and improve the resource allocation and planning processes.

In this paper, we propose an innovative method

to automate the defect de te c tion and condition asses-

sment within the pipe inspection process. With the

proposed method, the operator only needs to guide

the robot through each pipe and no longer needs to be

an expert in piping. A computer vision algorithm ba-

sed on deep-neura l network architecture is de sig ned

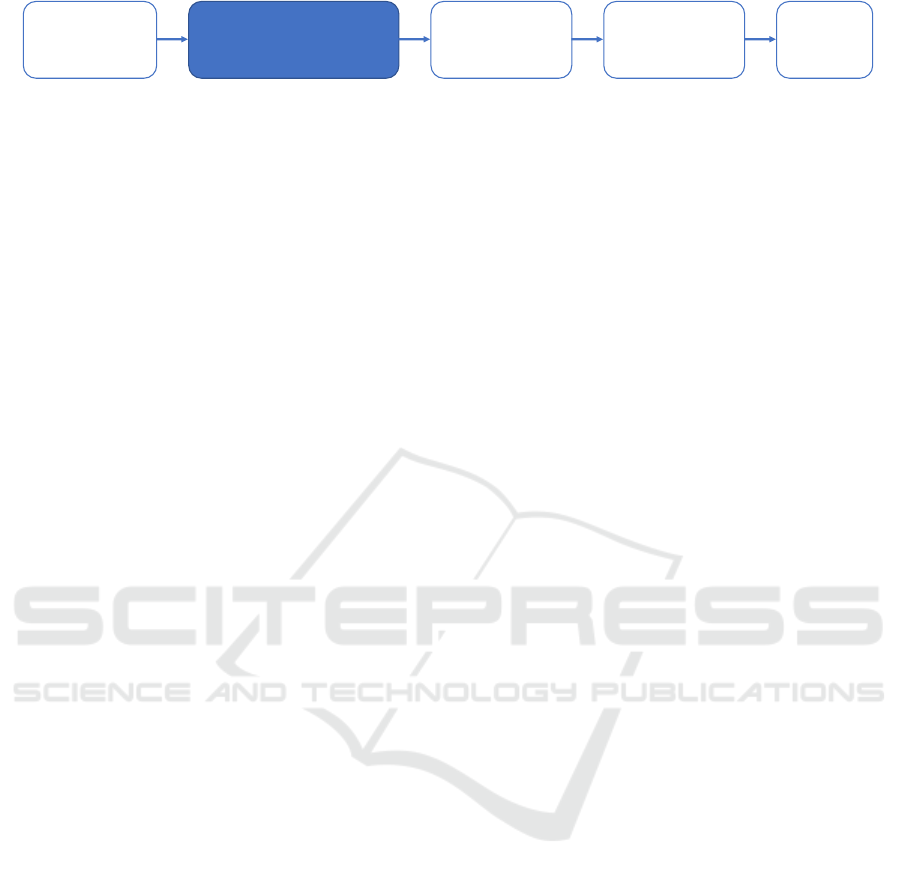

to classify the defect types automatically. The block-

diagram of the overall process is shown in Figure 1.

In the proposed system, the techn ic ia n still needs to

drive the robot through the pipe and record a clear

video of all the internal conditions of the pipe. The

video is then fed to the model and the model will go

through the video frame b y fr ame to detects the un-

derlying defects in each frame. After successfully de-

tecting a defect, the system extracts those frames with

defects and classify the defect type and extract de-

Tennakoon, R., Hoseinnezhad, R., Tran, H. and Bab-Hadiashar, A.

Visual Inspection of Storm-Water Pipe Systems using Deep Convolutional Neural Networks.

DOI: 10.5220/0006851001350140

In Proceedings of the 15th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2018) - Volume 1, pages 135-140

ISBN: 978-989-758-321-6

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

135

Record

CCTV video

Deep-learning based

Defect type detection

Extract detect

frames

Extract detect

Parameters

Analysis

Report

Figure 1: The overall block-diagram of the automated storm-water pipe inspection process.

fect parameters required for condition assessment and

further analysis. By applyin g a utomated visual in-

spection, the reliab ility of the inspection process can

be improved. In a ddition, this au tomatic system redu-

ces th e cost and time in comparison with the manual

visual inspection process.

The remainder of the paper is organized as follows: Section 2 provides a review of existing pipe inspection methods and deep neural networks. Section 3 provides a description of the overall method and Section 4 shows the results of our experiments. Finally, Section 5 concludes the paper.

2 BACKGROUND

2.1 Automated Inspection of Storm-water/Sewage Pipes

Numerous attempts have been made to automate the pipe inspection process using computer vision and machine learning techniques. Xu et al. (1998) proposed an automated method for pipe deformation analysis and crack detection that uses image processing techniques such as edge detection and binary image thresholding combined with boundary segment analysis.

Shehab and Moselhi (2005) proposed a machine learning based method for infiltration detection in pipes. They first extracted 17 features from images of pipes using a sequence of image processing operations including dilation, background subtraction, thresholding and segmentation. These features were then used in a neural network, trained using back-propagation, to predict the presence of infiltration. Yang and Su (2008) also proposed a machine learning based automatic pipe inspection framework. They extracted texture-based features from the image using techniques including the wavelet transform and computation of co-occurrence matrices. These features were used with three machine learning approaches, namely a back-propagation neural network (BPN), a radial basis network (RBN) and a support vector machine (SVM), to classify pipe defect patterns into the following categories: broken pipe, crack, fracture and open joint. By analysing these classifiers they concluded that SVM and RBN are better than BPN.

Yang and Su (2009) proposed a pipe defect detection method that utilized both supervised and unsupervised techniques. In their method, images from a CCTV camera were first converted to a set of features using a morphology-based segmentation technique. The most important features were then identified using principal component analysis and used in a radial basis network (RBN) to classify them into one of the following defect types: broken pipe, crack, fracture and open joint. Su and Yang (2014) also proposed a morphological segmentation based method for detecting defects in CCTV video of sewer pipelines. This method was only designed to identify cracks and open joints in pipelines.

Halfawy and Hengmeechai (2014) proposed a method that first extracts image regions of interest using image segmentation techniques. Next, histogram of oriented gradients features are extracted from those regions and used in an SVM classifier to predict whether the region is defective or not.

None of the above-mentioned methods is reliable enough to completely replace the current manual inspection, due to limitations in data size, data collection techniques, image processing and pattern classification approaches (Guo et al., 2009). Also, most of them cover only a few of the defect types.

2.2 Deep Convolutional Neural Networks

Since winning the ImageNet competition in 2012 (Russakovsky et al., 2015), deep learning methods have gained significant attention in the computer vision community, with many applications in image classification and segmentation.

Deep convolutional neural networks (CNNs) used in image classification comprise multiple layers of convolution operations coupled with non-linear operations. The output of the convolutional stack is fed through a classification neural network that outputs the probability of the input image belonging to each of the predefined categories (Krizhevsky et al., 2012). The parameters of the overall network are learned end-to-end using the back-propagation algorithm on labelled training data. Many CNN architectures have been proposed so far for image classification tasks, and the state-of-the-art methods include (Simonyan and Zisserman, 2014), (He et al., 2016) and (Szegedy et al., 2015).

Unlike traditional machine learning, which requires the features to be hand-crafted, CNNs learn the relevant features from data. However, end-to-end training of a deep neural network requires a large amount of labelled data, which might not be available for many applications. Several approaches have been proposed to solve this problem, including unsupervised pre-training of feature layers, data augmentation and transfer learning (using off-the-shelf pre-trained models and fine-tuning the final classification layer).

3 PROPOSED METHOD

In this section we describe our proposed method for storm-water pipe inspection. The focus of the paper is the novel defect type detection module, which is designed to detect five main types of defects found in storm-water pipes: 1) Breaking: complete separation of a pipe segment due to a radial crack; 2) Cracks: either radial or longitudinal; 3) Deposition: sediment build-up on the floor of the pipe; 4) Root intrusion: intrusion of tree roots through a gap in the pipes at a crack or at the place where two pipe segments join; and 5) Holes. Examples of each defect type are shown in Figure 2. Unlike the existing methods that use hand-crafted features combined with a learned classifier, in this paper we use a deep neural network that learns end-to-end using data alone.

3.1 CNN Architecture and Cost Functions

Given a set of labelled video frames, $X = [x_i, y_i]_{i=1}^{N}$, where $x_i$ is a video frame, $y_i$ is the corresponding class and $N$ is the total number of training instances, the intention here is to learn a parametrised function $f(x_i; \theta)$ that maps an unseen image to a corresponding class. In this paper we test two network architectures to model this function. The first network is a shallow network with only six layers: three convolution layers (Conv), a global average pooling layer (GAP) and two fully connected layers (FC). This model has only a few parameters (670,981 trainable parameters) compared to typical deep networks, and its architecture is shown in Table 1. The second model is based on the well-known ResNet-50 architecture (He et al., 2016). This network is 50 layers deep and is built from residual blocks; it was selected because it is a deep architecture that provides an appropriate balance between complexity and accuracy for image classification tasks. However, this architecture has millions of parameters (23,597,957 trainable parameters), and training the network needs a large amount of data.

Table 1: Architecture of the shallow network. Relu stands for rectified linear units.

| Layer | Type | Activation | Shape | Filters / units |
|---|---|---|---|---|
| 1 | Conv | Relu | 11x11 | 128 |
| 2 | Conv | Relu | 5x5 | 256 |
| 3 | Conv | Relu | 3x3 | 512 |
| 4 | GAP | | | |
| 5 | FC | Relu | | 128 |
| 6 | FC | Softmax | | 5 |
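To make the role of the global average pooling (GAP) layer in Table 1 concrete, the following is a minimal pure-Python sketch, not the authors' implementation. It averages each feature map to a single number, so the 512 feature maps produced by the last convolution would become a 512-element vector regardless of the frame size. The function name `global_average_pool` and the toy feature maps are illustrative assumptions.

```python
def global_average_pool(feature_maps):
    """Average every 2-D feature map down to one scalar.

    feature_maps: list of channels, each a list of rows of floats.
    Returns a list with one value per channel.
    """
    pooled = []
    for channel in feature_maps:
        total = sum(sum(row) for row in channel)
        count = sum(len(row) for row in channel)
        pooled.append(total / count)
    return pooled


# Toy example: two 2x3 feature maps (hypothetical values).
toy_maps = [
    [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]],
    [[0.0, 0.0, 0.0], [0.0, 0.0, 6.0]],
]
pooled = global_average_pool(toy_maps)
print(pooled)
```

Because the pooled vector has a fixed length, the fully connected layers that follow need far fewer weights than they would if the feature maps were flattened.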

Training the models requires a loss function that quantifies the errors made by comparing the model output with the supervision signal (ground truth labels for each image). Here we used categorical cross-entropy as the loss function, which can be written as:

$$L = -\sum_{i=1}^{N} \sum_{j=1}^{C} y_{ij} \ln \hat{y}_{ij} \qquad (1)$$

where $y_{ij}$ is the ground truth indicating whether image $i$ belongs to category $j$, $\hat{y}_{ij}$ is the predicted probability of image $i$ belonging to category $j$, $N$ is the number of instances in the dataset and $C$ is the number of detection classes, which is 5 in our application.
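As a worked illustration of the categorical cross-entropy, the sketch below evaluates it for two hypothetical frames with five classes. It uses the conventional leading minus sign, so that minimising the loss raises the predicted probability of the true class. The function name, the `eps` guard against `log(0)` and the example probabilities are assumptions made for illustration only.

```python
import math


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Summed categorical cross-entropy over N instances and C classes.

    y_true: list of one-hot rows (y_ij); y_pred: list of probability rows.
    eps guards against log(0); it is an implementation assumption.
    """
    loss = 0.0
    for truth_row, pred_row in zip(y_true, y_pred):
        for y_ij, p_ij in zip(truth_row, pred_row):
            if y_ij:  # only the true class contributes for one-hot labels
                loss -= y_ij * math.log(max(p_ij, eps))
    return loss


# Two hypothetical frames, C = 5 classes.
y_true = [[1, 0, 0, 0, 0], [0, 0, 1, 0, 0]]
y_pred = [[0.7, 0.1, 0.1, 0.05, 0.05], [0.2, 0.2, 0.5, 0.05, 0.05]]
loss = categorical_cross_entropy(y_true, y_pred)
print(round(loss, 4))
```

For these inputs the loss equals -ln(0.7) - ln(0.5): each frame contributes the negative log of the probability assigned to its true class.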

3.2 Transfer Learning

The way the model parameters are initialized has a significant effect on the final result. One way to initialize the parameters is to set them to randomly chosen values. This method of model training is called training from scratch and does not involve any prior information. Because a deep neural network contains millions of parameters, training from scratch effectively requires a large dataset. It is not economically feasible to generate such a large dataset in our application.

Another well-known method to train networks with limited data is to start from a set of parameters that was trained on a different domain and fine-tune those parameters with the limited dataset collected for the task of interest. As we have only a limited dataset, we adopted this approach and used the parameters of the ResNet-50 model that was trained on the natural image classification task of the ImageNet competition (He et al., 2016). The ImageNet challenge involves classifying natural images into 1000 different classes. Because our application involves only five classes, none of which maps to a class in the ImageNet competition, we removed the last classification layer of the network and added a new layer, which was initialized to random values.
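The effect of swapping the classification layer can be checked with a little arithmetic. The sketch below is illustrative only and assumes two things that the text does not state: that the new layer is a single fully connected layer on ResNet-50's 2048-dimensional pooled features, and that the backbone without its classifier has 23,587,712 parameters (the total reported by the Keras `ResNet50` model summary when the top layer is excluded). Under those assumptions the sum agrees with the 23,597,957 parameters quoted for this model in Section 3.1.

```python
def dense_layer_params(n_inputs, n_outputs):
    """Weights plus biases of one fully connected layer."""
    return n_inputs * n_outputs + n_outputs


FEATURE_DIM = 2048            # width of ResNet-50's pooled feature vector
BACKBONE_PARAMS = 23_587_712  # assumption: Keras ResNet50 without its top layer

imagenet_head = dense_layer_params(FEATURE_DIM, 1000)  # removed 1000-class layer
pipe_head = dense_layer_params(FEATURE_DIM, 5)         # new five-class layer
total = BACKBONE_PARAMS + pipe_head

print(imagenet_head, pipe_head, total)
```

Only the small five-class head starts from random values; every other parameter begins from the ImageNet-trained weights.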


Figure 2: Examples of defect types.

3.3 Model Training

3.3.1 Dataset Preparation

We obtained 90 videos of storm-water pipe inspections; most of the videos range from 15 to 25 minutes in duration and were recorded at 25 frames per second. The videos were first divided into training and validation splits of 80% and 20% respectively. These videos were then broken down into images, and the images containing defects were moved to separate folders according to defect type. Each image has a resolution of 720 × 576, which matches the resolution of the video. Since the images are generated from video frame by frame, it is inevitable to get many duplicated images, which would cause over-fitting of the model. A software tool called “Duplicate Photo Fixer” was used to remove all duplicated images at an 83% similarity threshold. We initially obtained 13 classes of images: cracking, breaking, hole, spalling, fracture, intrusion, root, steel reinforcement exposure, deposition, water accumulation, and angular, longitudinal and radial joint displacement. However, because the image data were insufficient for some of the defect types, we only kept the breaking, crack, deposition, hole and root defect instances.
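Splitting at the video level before extracting frames keeps frames from the same inspection out of both sets. A minimal sketch of such a split is given below. The random shuffle, the seed and the video names are assumptions, since the selection procedure is not described here. The last lines work out how many raw frames a 15 or 25 minute video yields at 25 frames per second.

```python
import random


def split_videos(video_ids, val_fraction=0.2, seed=0):
    """Shuffle video identifiers and split them into train/validation lists."""
    ids = list(video_ids)
    random.Random(seed).shuffle(ids)
    n_val = round(len(ids) * val_fraction)
    return ids[n_val:], ids[:n_val]


video_ids = [f"video_{i:02d}" for i in range(90)]  # hypothetical names
train_ids, val_ids = split_videos(video_ids)
print(len(train_ids), len(val_ids))

# Raw frame count for one video at 25 frames per second.
frames_15_min = 15 * 60 * 25
frames_25_min = 25 * 60 * 25
print(frames_15_min, frames_25_min)
```

With tens of thousands of consecutive frames per video, most frames differ very little from their neighbours, which is why a de-duplication step is needed before training.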

3.3.2 Class Balancing

The resulting dataset was not balanced, as it had a large number of instances from some classes and few instances from others. Training a model with such class imbalance would result in the model learning to predict only the dominant classes in the dataset. To overcome this issue, we balanced the classes by oversampling the instances in the less frequent classes.
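One common way to oversample is to draw additional copies of minority-class instances, with replacement, until every class matches the largest one. The sketch below illustrates that idea; the exact resampling scheme used in this work is not specified, so the function, the seed and the file names are assumptions.

```python
import random
from collections import Counter


def oversample(samples, seed=0):
    """Balance classes by resampling minority classes with replacement.

    samples: list of (item, label) pairs.
    Returns a new list in which every class has as many entries as the
    largest class in the input.
    """
    rng = random.Random(seed)
    by_label = {}
    for item, label in samples:
        by_label.setdefault(label, []).append(item)
    target = max(len(items) for items in by_label.values())
    balanced = []
    for label, items in by_label.items():
        extra = rng.choices(items, k=target - len(items))
        balanced.extend((item, label) for item in items + extra)
    rng.shuffle(balanced)
    return balanced


# Hypothetical imbalanced set: 6 deposition frames, 2 hole frames.
data = [(f"dep_{i}.png", "deposition") for i in range(6)]
data += [(f"hole_{i}.png", "hole") for i in range(2)]
balanced = oversample(data)
counts = Counter(label for _, label in balanced)
print(counts)
```

Oversampling repeats existing images rather than adding new information, so it is usually paired with augmentation to keep the repeated copies from being identical.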

3.3.3 Data Augmentation

Due to the limited number of images and the limited variation within the classes, over-fitting is a major concern. Consequently, it is a challenge to achieve high classification accuracy with the limited amount of data we have. To reduce this issue, we applied image augmentation to the dataset. New images were created by randomly zooming, shearing and horizontally flipping the originals, so that a relatively larger dataset exists to train the model and the likelihood of over-fitting is reduced.
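Two of the augmentations mentioned above, horizontal flipping and zooming, can be sketched on a toy image stored as a list of pixel rows. This is a simplified illustration rather than the pipeline used in the experiments: shearing is omitted, the zoom is a centre crop resized with nearest-neighbour sampling, and the zoom range of 1.0 to 1.2 is an assumed value.

```python
import random


def horizontal_flip(image):
    """Mirror an image (list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def zoom_in(image, factor):
    """Zoom by cropping the centre and resizing back with nearest neighbour.

    factor >= 1 enlarges the centre of the image; output size equals input size.
    """
    height, width = len(image), len(image[0])
    crop_h = max(1, round(height / factor))
    crop_w = max(1, round(width / factor))
    top = (height - crop_h) // 2
    left = (width - crop_w) // 2
    return [
        [image[top + (r * crop_h) // height][left + (c * crop_w) // width]
         for c in range(width)]
        for r in range(height)
    ]


def augment(image, rng):
    """Return a randomly flipped and zoomed copy of the image."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    return zoom_in(image, rng.uniform(1.0, 1.2))


frame = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4x4 "frame"
flipped = horizontal_flip(frame)
zoomed = zoom_in(frame, 2.0)
augmented = augment(frame, random.Random(0))
print(flipped[0], zoomed[0])
```

Each call to `augment` yields a slightly different view of the same defect while preserving the image size expected by the network.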

Once the dataset was prepared, we trained the model using ADAM optimization. The network was trained on an Nvidia Titan X GPU with 12 GB of RAM for 150 epochs. The hyper-parameters for training were: batch size 32, learning rate 0.001, $\beta_1 = 0.9$ and $\beta_2 = 0.999$.
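To show what the learning rate and the two β values control, here is a minimal sketch of a single-parameter Adam update using the hyper-parameters listed above. The stabilising constant `eps = 1e-8` and the toy quadratic objective are assumptions that are not taken from the text.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter.

    m and v are the running first and second moment estimates; t is the
    1-based step count used for bias correction.
    """
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Minimise the toy objective (theta - 3)^2, whose gradient is 2 * (theta - 3).
theta, m, v = 0.0, 0.0, 0.0
for t in range(1, 4):
    grad = 2 * (theta - 3)
    theta, m, v = adam_step(theta, grad, m, v, t)
print(theta)
```

Because the update is normalised by the running gradient magnitude, each early step moves the parameter by roughly the learning rate, whatever the scale of the gradient.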


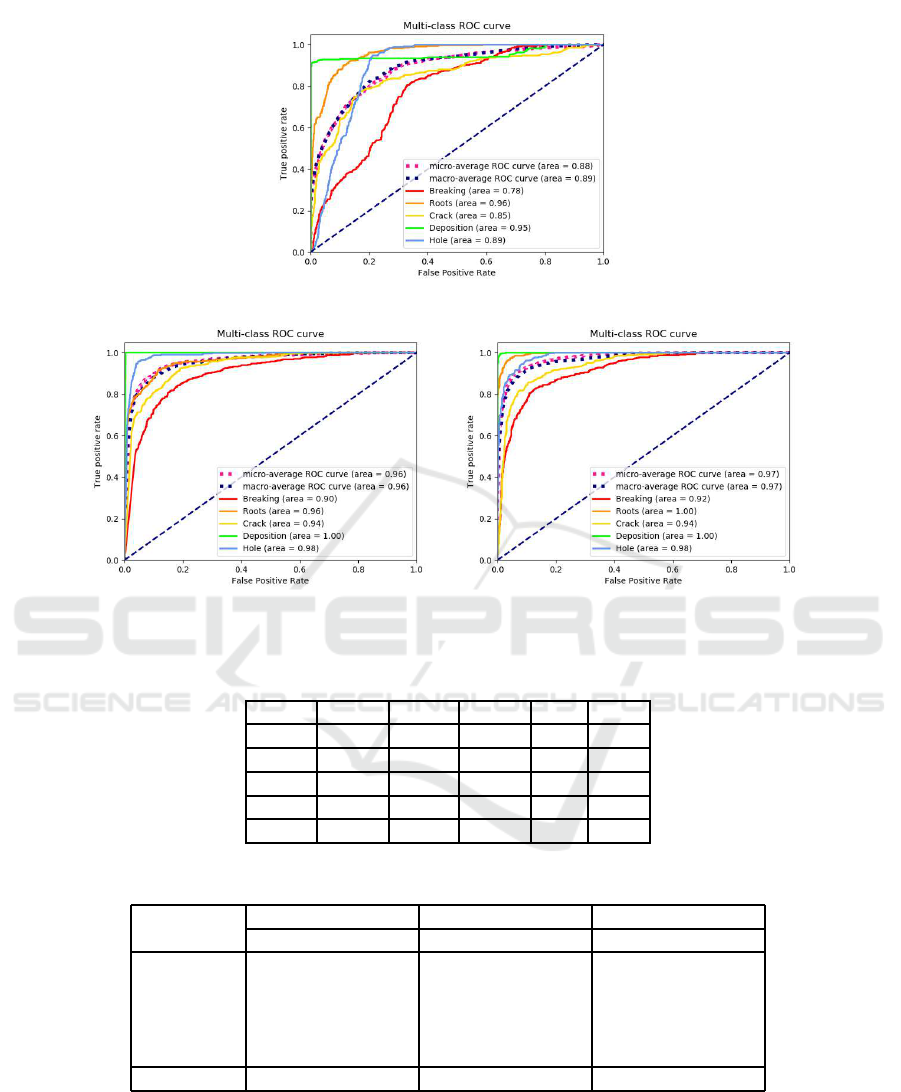

(a) S-net (b) Resnet-RND (c) Resnet-TL

Figure 3: Area under ROC curve plots for each tested network type.

Table 2: The confusion matrix on the validation set for the method Resnet-TL. Dep stands for the class deposition.

| | Break | Roots | Crack | Dep | Hole |
|---|---|---|---|---|---|
| Break | 290 | 33 | 48 | 9 | 20 |
| Roots | 7 | 383 | 1 | 3 | 6 |
| Crack | 88 | 6 | 300 | 0 | 6 |
| Dep | 1 | 0 | 2 | 397 | 0 |
| Hole | 33 | 12 | 30 | 0 | 325 |

Table 3: Per-Class Precision and Recall of each model.

| Class | S-net Precision | S-net Recall | Resnet-RND Precision | Resnet-RND Recall | Resnet-TL Precision | Resnet-TL Recall |
|---|---|---|---|---|---|---|
| Breaking | 0.45 | 0.38 | 0.65 | 0.70 | 0.69 | 0.72 |
| Roots | 0.80 | 0.70 | 0.89 | 0.74 | 0.88 | 0.96 |
| Cracks | 0.78 | 0.42 | 0.73 | 0.76 | 0.79 | 0.75 |
| Deposition | 0.71 | 0.93 | 0.95 | 1.00 | 0.97 | 0.99 |
| Hole | 0.50 | 0.72 | 0.88 | 0.88 | 0.91 | 0.81 |
| Average | 0.65 | 0.63 | 0.82 | 0.82 | 0.85 | 0.85 |

4 RESULTS

We tested the trained models on a held-out validation set created from 20% of the original inspection videos. The validation set consists of 400 images per category. The evaluation was done using the area under the receiver operating characteristic (ROC) curve. We also report the precision and recall for each category.
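For readers unfamiliar with the metric, the area under the ROC curve for one class equals the probability that a randomly chosen frame of that class receives a higher score than a randomly chosen frame of any other class. The sketch below computes it that way for a single class treated one-vs-rest. The one-vs-rest treatment and the example scores are assumptions, as the per-class evaluation procedure is not detailed here.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve for binary labels (1 = positive).

    Uses the rank interpretation: the probability that a random positive
    receives a higher score than a random negative, counting ties as half.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical one-vs-rest scores for the "roots" class on six frames.
is_roots = [1, 1, 1, 0, 0, 0]
p_roots = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(is_roots, p_roots)
print(auc)
```

In this toy case eight of the nine positive/negative pairs are ranked correctly, giving an area of 8/9. A value of 1.0 would mean every frame of the class outranks every other frame.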

The results for the shallow network (S-net), Resnet-50 with randomly initialized weights (Resnet-RND) and Resnet-50 with transfer learning (Resnet-TL) are shown in Figure 3 and Table 3.

The results show that S-net, which has far fewer parameters than Resnet, was only able to achieve an overall area under the ROC curve of 0.88. In contrast, Resnet with and without transfer learning obtained high values of 0.97 and 0.96 respectively. Resnet with transfer learning shows slightly better ROC values in classifying breaking and roots, whereas the ROC values across the other categories are similar to those without transfer learning. The results indicate that data augmentation has enabled accurate learning of a deep network with limited data in storm-water pipe inspection.

The confusion matrix on the validation set for the method Resnet-TL is shown in Table 2. The confusion matrix shows that there is some misclassification between the classes cracks and breaking. This behaviour is understandable given that these two defect types share similar physical characteristics.
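The Resnet-TL columns of Table 3 can be reproduced from the confusion matrix in Table 2. The sketch below reads each row as the true class and each column as the predicted class. That orientation is an inference rather than something stated in the caption, but it is the one under which every row sums to the 400 validation images per category and the computed values round to those reported.

```python
CLASSES = ["Break", "Roots", "Crack", "Dep", "Hole"]
# Table 2 (Resnet-TL); rows read as true classes, columns as predictions.
CONFUSION = [
    [290, 33, 48, 9, 20],
    [7, 383, 1, 3, 6],
    [88, 6, 300, 0, 6],
    [1, 0, 2, 397, 0],
    [33, 12, 30, 0, 325],
]


def per_class_metrics(matrix):
    """Return {class index: (precision, recall)} from a confusion matrix."""
    metrics = {}
    for k in range(len(matrix)):
        true_positive = matrix[k][k]
        row_total = sum(matrix[k])                 # all frames of class k
        col_total = sum(row[k] for row in matrix)  # all frames predicted as k
        metrics[k] = (true_positive / col_total, true_positive / row_total)
    return metrics


metrics = per_class_metrics(CONFUSION)
for k, name in enumerate(CLASSES):
    precision, recall = metrics[k]
    print(f"{name}: precision={precision:.2f} recall={recall:.2f}")
```

The off-diagonal entries make the crack/breaking confusion explicit: 88 crack frames were labelled as breaking and 48 breaking frames were labelled as cracks, more than any other pair of classes.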

5 CONCLUSIONS

The paper presents a new method for automated visual inspection of storm-water pipes. The main novelty of our method is the use of a deep convolutional neural network to identify the defect types. The results obtained on a held-out validation set show that the proposed deep neural network architectures trained with data augmentation and transfer learning are capable of achieving high accuracy in identifying the defect types.

In these experiments we have only used five defect types due to the limited availability of data from other categories, and we intend to increase this number in future work. Defect parameters such as the crack width are also important in decision making, and we intend to extend our work towards automated prediction of defect parameters.

ACKNOWLEDGEMENTS

The authors would like to thank Mr. Juncheng Li for his help with the implementation part of this work.

REFERENCES

Guo, W., Soibelman, L., and Garrett, J. (2009). Automated defect detection for sewer pipeline inspection and condition assessment. Automation in Construction, 18(5):587–596.

Halfawy, M. R. and Hengmeechai, J. (2014). Automated defect detection in sewer closed circuit television images using histograms of oriented gradients and support vector machine. Automation in Construction, 38:1–13.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 770–778.

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems, pages 1097–1105.

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., Berg, A. C., and Fei-Fei, L. (2015). ImageNet large scale visual recognition challenge. International Journal of Computer Vision, 115(3):211–252.

Shehab, T. and Moselhi, O. (2005). Automated detection and classification of infiltration in sewer pipes. Journal of Infrastructure Systems, 11(3):165–171.

Simonyan, K. and Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Su, T.-C. and Yang, M.-D. (2014). Application of morphological segmentation to leaking defect detection in sewer pipelines. Sensors, 14(5):8686–8704.

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., and Rabinovich, A. (2015). Going deeper with convolutions. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

Tran, H. D., Perera, B. J. C., and Ng, A. W. M. (2010). Markov and neural network models for prediction of structural deterioration of storm-water pipe assets. Journal of Infrastructure Systems, 16(2):167–171.

Xu, K., Luxmoore, A., and Davies, T. (1998). Sewer pipe deformation assessment by image analysis of video surveys. Pattern Recognition, 31(2):169–180.

Yang, M.-D. and Su, T.-C. (2008). Automated diagnosis of sewer pipe defects based on machine learning approaches. Expert Systems with Applications, 35(3):1327–1337.

Yang, M.-D. and Su, T.-C. (2009). Segmenting ideal morphologies of sewer pipe defects on CCTV images for automated diagnosis. Expert Systems with Applications, 36(2, Part 2):3562–3573.
