Bayesian Quadrature in Nonlinear Filtering

Jakub Prüher and Miroslav Šimandl

European Centre of Excellence - New Technologies for the Information Society,

Faculty of Applied Sciences, University of West Bohemia, Univerzitní 18, Pilsen, Czech Republic

Keywords:

Nonlinear Filtering, Bayesian Quadrature, Gaussian Process.

Abstract:

The paper deals with the state estimation of nonlinear stochastic discrete-time systems by means of quadrature-

based filtering algorithms. The algorithms use quadrature to approximate the moments given by integrals. The

aim is at evaluation of the integral by Bayesian quadrature. The Bayesian quadrature perceives the integral

itself as a random variable, on which inference is to be performed by conditioning on the function evaluations.

Advantage of this approach is that in addition to the value of the integral, the variance of the integral is

also obtained. In this paper, we improve estimation of covariances in quadrature-based filtering algorithms

by taking into account the integral variance. The proposed modifications are applied to the Gauss-Hermite

Kalman filter and the unscented Kalman filter algorithms. Finally, the performance of the modified filters is

compared with the unmodified versions in numerical simulations. The modified versions of the filters exhibit

significantly improved estimate credibility and a comparable root-mean-square error.

1 INTRODUCTION

Dynamic systems are widely used to model behaviour

of real processes throughout the sciences. In many

cases, it is useful to define a state of the system

and consequently work with a state-space represen-

tation of the dynamics. When the dynamics exhibits

stochasticity or can only be observed indirectly, the

problem of state estimation becomes relevant. Es-

timating a state of the dynamic system from noisy

measurements is a prevalent problem in many appli-

cation areas such as aircraft guidance, GPS navigation

(Grewal et al., 2007), weather forecast (Gillijns et al.,

2006), telecommunications (Jiang et al., 2003) and

time series analysis (Bhar, 2010). When the state es-

timator is required to produce an estimate using only

the present and past measurements, this is known as

the filtering problem.

For a discrete-time linear Gaussian systems, the

best estimator in the mean-square-error sense is the

much-celebrated Kalman filter (KF) (Kalman, 1960).

First attempts to deal with the estimation of nonlinear

dynamics can be traced to the work of (Smith et al.,

1962), which resulted in the extended Kalman filter

(EKF). The EKF algorithm uses the Taylor series ex-

pansion to approximate the nonlinearities in the sys-

tem description. A disadvantage of the Taylor series

is that it requires differentiability of the approximated

functions. This prompted further development (Nør-

gaard et al., 2000; Šimandl and Duník, 2009) result-

ing in the derivative-free filters based on the Stirling’s

interpolation formula. Other approaches that approx-

imate nonlinearities include the Fourier-Hermite KF

(Sarmavuori and Särkkä, 2012), special case of which

is the statistically linearized filter (Maybeck, 1982;

Gelb, 1974).

Instead of explicitly dealing with nonlinearities

in the system description, the unscented Kalman fil-

ter (UKF) (Julier et al., 2000) describes the densities

by a finite set of deterministically chosen σ-points,

which are then propagated through the nonlinearity.

Other filters, such as the Gauss-Hermite Kalman filter

(GHKF) (Ito and Xiong, 2000), the cubature Kalman

filter (CKF) (Arasaratnam and Haykin, 2009) and the

stochastic integration filter (Duník et al., 2013), uti-

lize numerical quadrature rules to approximate mo-

ments of the relevant densities. These filters can

be seen as representatives of a more general σ-point

methodology.

A limitation of classical integral approximations,

such as the Gauss-Hermite quadrature (GHQ), is that

they are specifically designed to perform with zero

error on a narrow class of functions (typically poly-

nomials up to a given degree (Särkkä, 2013)). It is

also possible to design rules, that have best average-

case performance on a wider range of functions at

the cost of permitting small non-zero error (Minka,

2000). In recent years, the Bayesian quadrature (BQ)

380

Prüher J. and Šimandl M..

Bayesian Quadrature in Nonlinear Filtering.

DOI: 10.5220/0005534003800387

In Proceedings of the 12th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2015), pages 380-387

ISBN: 978-989-758-122-9

Copyright

c

2015 SCITEPRESS (Science and Technology Publications, Lda.)

has become a focus of interest in probabilistic numer-

ics community (Osborne et al., 2012). The BQ treats

numerical integration as a problem of Bayesian infer-

ence and thus it is able to provide an additional in-

formation - namely, uncertainty in the computation of

the integral itself. In (Särkkä et al., 2014), the authors

work with the concept of BQ, but the algorithms de-

rived therein do not make use of the uncertainty in the

integral computations.

The goal of this paper is to augment the current

σ-point algorithms so that the uncertainty associated

with the integral approximations is also reflected in

their estimates.

The rest of the paper is organized as follows. For-

mal definition of the Gaussian filtering problem is

outlined in Section 2, followed by the exposition of

the basic idea of Bayesian quadrature in Section 3.

The main contribution, which is the design of the

Bayes-Hermite Kalman filter (BHKF), is presented in

Section 4. Finally, comparison of the BHKF with ex-

isting filters is made in Section 5.

2 PROBLEM FORMULATION

The discrete-time stochastic dynamic system is de-

scribed by the following state-space model

x

k

= f(x

k−1

) + q

k−1

, q

k−1

∼ N(0, Q), (1)

z

k

= h(x

k

) + r

k

, r

k

∼ N(0, R), (2)

with initial conditions x

0

∼ N(m

0

, P

0

), where x

k

∈ R

n

is the system state evolving according to the known

nonlinear dynamics f : R

n

→ R

n

perturbed by the

white state noise w

k−1

∈ R

n

. Measurement z

k

∈ R

p

is

a result of applying known nonlinear transformation

h : R

n

→ R

p

to the system state and white addi-

tive measurement noise r

k

∈ R

p

. The mutual inde-

pendence is assumed between the state noise w

k

, the

measurement noise r

k

and the system initial condition

x

0

for all k ≥ 1.

The filtering problem is concerned with determi-

nation of the probability density function p(x

k

| z

1:k

).

The shorthand z

1:k

stands for the sequence of mea-

surements z

1

, z

2

, . . . , z

k

. The general solution to the

filtering problem is given by the Bayesian recursive

relations in the form of density functions

p(x

k

|z

1:k

) =

p(z

k

|x

k

) p(x

k

|z

1:k−1

)

p(z

k

|z

1:k−1

)

, (3)

with predictive density p(x

k

| z

1:k−1

) given by the

Chapman-Kolmogorov equation

p(x

k

|z

1:k−1

) =

Z

p(x

k

|x

k−1

)p(x

k−1

|z

1:k−1

) dx

k−1

.

(4)

In this paper, the integral computation is assumed

to take place over the support of x

k−1

. The like-

lihood term p(z

k

| x

k

) in (3) is determined by the

measurement model (2) and the transition probability

p(x

k

|x

k−1

) in (4) by the dynamics model (1).

For tractability reasons, the Gaussian filters make

simplifying assumption, that the joint density of state

and measurement p(x

k

, z

k

| z

1:k−1

) is of the form

N

x

k|k−1

z

k|k−1

m

x

k|k−1

m

z

k|k−1

,

P

x

k|k−1

P

xz

k|k−1

P

zx

k|k−1

P

z

k|k−1

. (5)

Knowledge of the moments in (5) is fully sufficient

(Deisenroth and Ohlsson, 2011) to express the first

two moments, m

x

k|k

and P

x

k|k

, of the conditional density

p(x

k

| z

1:k

) using the conditioning formula for Gaus-

sians as

m

x

k|k

= m

x

k|k−1

+ K

k

z

k

− m

z

k|k−1

, (6)

P

x

k|k

= P

x

k|k−1

− K

k

P

z

k|k−1

K

>

k

, (7)

with the Kalman gain defined as

K

k

= P

xz

k|k−1

P

z

k|k−1

−1

.

The problem of computing the moments in (5) can

be seen, on a general level, as a computation of mo-

ments of a transformed random variable

y = g(x), (8)

where g is a nonlinear vector function. This invari-

ably entails evaluation of the integrals of the follow-

ing kind

E[y] =

Z

g(x)p(x) dx (9)

with Gaussian p(x). Since the integral is typically in-

tractable, σ-point algorithms resort to the approxima-

tions based on weighted sum of function evaluations

Z

g(x)p(x) dx ≈

N

X

i=1

w

i

g(x

(i)

). (10)

The evaluation points x

(i)

are also known as the σ-

points, hence the name.

Thus, for instance, to compute m

x

k|k−1

, P

x

k|k−1

and

P

xz

k|k−1

, the following expressions, given in the matrix

notation, are used

m

x

k|k−1

' F

>

w, (11)

P

x

k|k−1

'

˜

F

>

W

˜

F, (12)

P

xz

k|k−1

'

˜

X

>

W

˜

F, (13)

where the weights are now w =

[

w

1

, . . . , w

N

]

>

,

W = diag

(

[w

1

, . . . , w

N

]

)

and the i-th rows of F,

˜

F and

˜

X are defined as the transpose of f(x

(i)

k−1

),

f(x

(i)

k−1

) − m

x

k|k−1

and x

(i)

k−1

− m

x

k|k−1

respectively.

BayesianQuadratureinNonlinearFiltering

381

All the information a quadrature rule has about

the function behaviour is conveyed by the N func-

tion values g(x

(i)

). Conversely, this means that any

quadrature is uncertain about the true function values

in between the σ-points. The importance of quantify-

ing this uncertainty becomes particularly pronounced,

when the function is not integrated exactly due to the

inherent design limitations of the quadrature (such as

the choice of weights and σ-points). All σ-point fil-

ters thus operate with the uncertainty, which is not

accounted for in their estimates. The classical treat-

ment of the quadrature does not lend itself nicely to

the quantification of the uncertainty associated with a

given rule. On the other hand, the Bayesian quadra-

ture, which treats the integral approximation as a

problem in Bayesian inference, is perfectly suited for

this task.

The Idea of using Bayesian quadrature in the state

estimation algorithms was already treated in (Särkkä

et al., 2014). The derived filters and smoothers, how-

ever, do not fully utilize the potential of the Bayesian

quadrature. Namely, variance of the integral is not re-

flected in their estimates, which still remains a prob-

lem to this day.

3 GAUSSIAN PROCESS PRIORS

AND BAYESIAN QUADRATURE

In this section, we introduce the key concepts of

Gaussian process priors and Bayesian quadrature,

which are crucial to the derivation of the filtering al-

gorithm in Section 4.

3.1 Gaussian Process Priors

Uncertainty over functions is naturally expressed by

a stochastic process. In Bayesian quadrature, Gaus-

sian processes (GP) are used for their favourable an-

alytical properties. Gaussian process is a collection

of random variables indexed by elements of an index

set, any finite number of which has a joint Gaussian

density (Rasmussen and Williams, 2006). That is, for

any finite set of indices X

0

=

n

x

0

1

, x

0

2

, . . . , x

0

m

o

, it holds

that

( g(x

0

1

), g(x

0

2

), . . . , g(x

0

m

) )

>

∼ N(0, K), (14)

where the kernel (covariance) matrix K is made up

of pair-wise evaluations of the kernel function, thus

[

K

]

i j

= k(x

i

, x

j

). Choosing a kernel, which in prin-

ciple can be any symmetric positive definite function

of two arguments, introduces assumptions about the

underlying function we are trying to model. Bayesian

inference allows to combine the GP prior p(g) with

the data, D =

{

(

x

i

, g(x

i

)

)

, i = 1, . . . , N

}

comprising

the evaluation points X = [x

1

, . . . , x

N

] and the func-

tion evaluations y

g

=

g(x

1

), . . . , g(x

N

)

>

, to pro-

duce a GP posterior p(g | D) with moments given by

(Rasmussen and Williams, 2006)

E

g

[g(x

0

)] = m

g

(x

0

) = k

>

(x

0

)K

−1

y

g

, (15)

V

g

[g(x

0

)] = σ

2

g

(x

0

) = k(x

0

, x

0

) − k

>

(x

0

)K

−1

k(x

0

),

(16)

where k(x

0

) = [k(x

0

, x

1

), . . . , k(x

0

, x

N

)]

>

. Thus, for

any test input x

0

, we recover a Gaussian posterior pre-

dictive density over the function values g(x

0

). Fig-

ure 1 depicts predictive moments of the GP poste-

rior density. Notice, that in between the evaluations,

where the true function value is not known, the GP

model is uncertain.

x

0

g(x

0

)

g(x

0

)

g(x

i

)

m

g

(x

0

)

Figure 1: True function (dashed), GP posterior mean

(solid), observed function values (dots) and GP posterior

samples (grey). The shaded area represents GP posterior

predictive uncertainty (±2 σ

g

(x

0

)). Notice the collapse of

uncertainty around the observations.

3.2 Bayesian Quadrature

The problem of numerical quadrature pertains to the

approximate computation of the integral

E

x

[g(x)] =

Z

g(x)p(x) dx. (17)

The key distinguishing feature of the BQ is that it

"treats the problem of numerical integration as the

one of statistical inference." (O’Hagan, 1991) This is

achieved by placing a prior density over the integrated

functions themselves. Consequence of this is that the

integral itself is then a random variable as well. Con-

cretely, if GP prior density is used, then the value of

the integral of the function will also be Gaussian dis-

tributed. This follows from the fact that integral is a

linear operator acting on the GP distributed random

function g(x).

Following the line of thought of (Rasmussen and

Ghahramani, 2003) we take expectation (with respect

ICINCO2015-12thInternationalConferenceonInformaticsinControl,AutomationandRobotics

382

to p(g |D)) of the integral (17) and obtain

E

g|D

[E

x

[g(x)]] =

ZZ

g(x)p(x) dx p(g|D) dg

ZZ

g(x)p(g |D) dgp(x) dx = E

x

[E

g|D

[g(x)]]. (18)

From (18) we see, that taking the expectation of in-

tegral is the same as integrating the GP posterior

mean function, which effectively approximates the in-

tegrated function g(x). Variance of the integral is

V

g|D

[E

x

[g(x)]] =

ZZ

k(x, x

0

)p(x)p(x

0

) dx dx

0

. (19)

A popular choice of kernel function, that enables the

expressions (18) and (19) to be computed analytically

is an Exponentiated Quadratic (EQ)

k(x

i

, x

j

; θ) = α

2

exp

−

1

2

x

i

− x

j

>

Λ

−1

x

i

− x

j

!

,

(20)

where the vertical lengthscale α and the horizontal

lengthscales on diagonal of Λ = diag( [`

2

1

, . . . , `

2

n

] )

are kernel hyper-parameters, collectively denoted by

the symbol θ. By using this particular kernel the as-

sumption of smoothness (infinite differentiability) of

the integrand is introduced (Rasmussen and Williams,

2006). Given the kernel function in the form (20) and

p(x) = N(m, P), the expressions for the integral pos-

terior mean and variance reduce to

E

g|D

[E

x

[g(x)]] = l

>

K

−1

y

g

, (21)

V

g|D

[E

x

[g(x)]] = α

2

2Λ

−1

P + I

−1/2

− l

>

K

−1

l, (22)

with l = [l

1

, . . . , l

N

]

>

l

i

=

Z

k(x, x

i

) N(x | m, P) dx = α

2

Λ

−1

P + I

−1/2

× exp

−

1

2

(x

i

− m)

>

(Λ + P)

−1

(x

i

− m)

!

. (23)

Notice that we could define weights as w = l

>

K

−1

.

Then the expression (21) is just a weighted sum of

function evaluations, conforming to the general σ-

point method as described by (11). As opposed to

classical quadrature rules, that prescribe the precise

locations of σ-points, BQ makes no such restrictions.

In (Minka, 2000), the optimal placement is deter-

mined by minimizing the posterior variance of the in-

tegral (19).

In the next section, we show how the integral vari-

ance (19) can be reflected in the current nonlinear fil-

tering quadrature-based algorithms.

4 BAYES-HERMITE KALMAN

FILTER

In this section, we show how the integral variance

can be incorporated into the moment estimates of

the transformed random variable. Parallels are drawn

with existing GP-based filters and the Bayes-Hermite

Kalman filter algorithm is outlined.

4.1 Incorporating Integral Uncertainty

Uncertainty over the function values is introduced by

a GP posterior p(g | D), whose mean function (15)

acts effectively as an approximation to the determin-

istic function g. Note that the equations (15), (16) can

only be used to model single output dimension of the

vector function g. For now, we will assume a scalar

function g unless otherwise stated. To keep the nota-

tion uncluttered, conditioning on D will be omitted.

Treating the function values g(x) as random leads to

the joint density p(g, x) and thus, when computing the

moments of g(x), the expectations need to be taken

with respect to both variables. This results in the fol-

lowing approximation of the true moments

µ = E

x

[g(x)] ≈ E

g,x

[g(x)], (24)

σ

2

= V

x

[g(x)] ≈ V

g,x

[g(x)]. (25)

Using the law of iterated expectations, we get

E

g,x

[g(x)] = E

g

[E

x

[g(x)]] = E

x

[E

g

[g(x)]]. (26)

This fact was used to derive weights for the filtering

and smoothing algorithms in (Särkkä et al., 2014),

where the same weights were used in computations

of means and covariances. Our proposed approach,

however, proceeds differently in derivation of weights

used in the computation of covariance matrices.

Note that the term for variance can be written out

using the decomposition formula either as

V

g,x

[g(x)] = E

x

[V

g

[g(x)]] + V

x

[E

g

[g(x)]] (27)

or as

V

g,x

[g(x)] = E

g

[V

x

[g(x)]] + V

g

[E

x

[g(x)]] (28)

depending on which factorization of the joint density

p(g, x) is used. The terms V

g

[g(x)] and V

g

[E

x

[g(x)]]

can be identified as variance of the integrand and vari-

ance of the integral respectively. In case of determin-

istic g, both of these terms are zero.

With EQ covariance (20), the expression (26) for

the first moment of a transformed random variable

takes on the form (21). Since the variance decom-

positions in (27) and (28) are equivalent, both can be

used to achieve the same goal.

BayesianQuadratureinNonlinearFiltering

383

The form (27) was utilized in derivation of the

Gaussian process - assumed density filter (GP-ADF)

(Deisenroth et al., 2012), which relies on the solution

to the problem of prediction with GPs at uncertain in-

puts (Girard et al., 2003). So, even though these re-

sults were derived to solve a seemingly different prob-

lem, we point out, that by using the form (27), the

uncertainty of the integral (as seen in the last term

of (28)) is implicitly reflected in the resulting covari-

ance. To conserve space, we only provide a summary

of the results in (Deisenroth et al., 2009) and point

reader to the said reference for detailed derivations.

The expressions for the moments of transformed vari-

able were rewritten into a form, which assumes that a

single GP is used to model all the output dimensions

of the vector function (8)

µ = G

>

w, (29)

Σ = G

>

WG − µµ

>

+ diag

α

2

− tr

K

−1

L

, (30)

with matrix G being defined analogously to F in (11)-

(13). The weights are given as

w = K

−1

l and W = K

−1

LK

−1

, (31)

where

L =

Z

k(X, x; θ

g

) k(x, X; θ

g

) N(x|m, P) dx. (32)

The equations (29) and (30) bear certain resemblance

to the σ-point method in (11), (12); however, in this

case matrix W is not diagonal. Note, that the weights

depend on the current location of σ-points and need

to be recomputed at every time step.

4.2 BHKF Algorithm

The filtering algorithm based on the BQ can now be

constructed utilizing (29) and (30). The BHKF uses

two GPs with the EQ covariance - one for each func-

tion in the state-space model (1)-(2), which means

that the two sets of hyper-parameters are used; θ

f

and

θ

h

. In the algorithm specification below, the lower in-

dex of q and K specifies the set of hyper-parameters

used to construct these quantities.

Algorithm (Bayes-Hermite Kalman Filter). In the

following, let x

0|0

∼ N

m

0|0

, P

0|0

, i = 1, . . . , N and

k = 1, 2, . . ..

Initialization:

Choose unit σ-points ξ

(i)

. Set hyper-parameters θ

f

and θ

h

. Proceed from the initial conditions x

0|0

, for

all k, by alternating between the following prediction

and filtering steps.

Prediction:

1. Form the σ-points x

(i)

k−1

= m

x

k−1|k−1

+

q

P

x

k−1|k−1

ξ

(i)

.

2. Propagate σ-points through the dynamics model

x

(i)

k

= f

x

(i)

k−1

and form F as in (11)-(13).

3. Using x

(i)

k

and hyper-parameters θ

f

, compute

weights w

x

and W

x

according to (31) and (32).

4. Compute predictive mean m

x

k|k−1

and predictive

covariance P

x

k|k−1

m

x

k|k−1

= F

>

w

x

P

x

k|k−1

= F

>

W

x

F − m

x

k|k−1

(m

x

k|k−1

)

>

+ diag

α

2

− tr

K

−1

L

+ Q

Filtering:

1. Form the σ-points x

(i)

k

= m

x

k|k−1

+

q

P

x

k|k−1

ξ

(i)

.

2. Propagate the σ-points through the measurement

model z

(i)

k

= h

x

(i)

k

, and form H as in (11)-(13)

3. Using x

(i)

k

and hyper-parameters θ

h

, compute

weights w

z

and W

z

according to (31) and (32).

Construct W

xz

= diag

(

l

h

)

K

−1

h

.

4. Compute measurement mean, covariance and

state-measurement cross-covariance

m

z

k|k−1

= H

>

w

z

P

z

k|k−1

= H

>

W

z

H − m

z

k|k

(m

z

k|k

)

>

,

+ diag

α

2

− tr

K

−1

L

+ R

P

xz

k|k−1

= P

x

k|k−1

(P

x

k|k−1

+ Λ)

−1

˜

XW

xz

H,

where the i-th row of

˜

X is x

(i)

k

− m

x

k|k−1

5. Compute the filtered mean m

x

k|k

and filtered co-

variance P

x

k|k

m

x

k|k

= m

x

k|k−1

+ K

k

z

k

− m

z

k|k−1

,

P

x

k|k

= P

x

k|k−1

− K

k

P

z

k|k−1

K

>

k

.

with Kalman gain K

k

= P

xz

k|k−1

P

z

k|k−1

−1

.

5 NUMERICAL ILLUSTRATION

In the numerical simulations the performance of

the filters was tested on a univariate non-stationary

growth model (UNGM) (Gordon et al., 1993)

x

k

=

1

2

x

k−1

+

25x

k−1

1 + x

2

k−1

+ 8 cos(1.2 k) + q

k−1

,

(33)

z

k

=

1

20

x

2

k−1

+ r

k

, (34)

with the state noise q

k−1

∼ N(0, 10), measurement

noise r

k

∼ N(0, 1) and initial conditions x

0|0

∼

N(0, 5).

ICINCO2015-12thInternationalConferenceonInformaticsinControl,AutomationandRobotics

384

Since the BHKF does not prescribe the σ-point lo-

cations, they can be chosen at will. The GHKF based

on the r-th order Gauss-Hermite (GH) quadrature rule

uses σ-points, which are determined as the roots of

the r-th degree univariate Hermite polynomial H

r

(x).

When it is required to integrate function of a vector

argument (n > 1), a multidimensional grid of points

is formed by the Cartesian product, leading to their

exponential growth (N = r

n

). The GH weights are

computed according to (Särkkä, 2013) as

w

i

=

r!

[rH

r−1

(x

(i)

)]

2

. (35)

The Unscented Transform (UT) is also a simple

quadrature rule (Ito and Xiong, 2000), that uses N =

2n + 1 deterministically chosen σ-points,

x

(i)

= m +

√

Pξ

(i)

(36)

with unit σ-points defined as columns of the matrix

h

ξ

(0)

, ξ

(1)

, . . . , ξ

(2n+1)

i

=

h

0, cI

n

, −cI

n

i

(37)

where I

n

denotes n × n identity matrix. The corre-

sponding weights are defined by

w

0

=

κ

n + κ

, w

i

=

1

2(n + κ)

, i = 1, . . . , 2n (38)

with scaling factor c =

√

n + κ. All of the BHKFs

used the same set of hyper-parameters θ

f

= θ

h

=

[`, α]

>

= [3, 1]

>

. UKF operated with κ = 2. BHKFs

that used UT and GH σ-points of order 5, 7, and 10

were compared with their classical quadrature-based

counterparts, namely, UKF and GHKF of order 5, 7

and 10.

We performed 100 simulations, each for K = 500

time steps. Root-mean-square error (RMSE)

RMSE =

v

u

t

1

K

K

X

k=1

x

k

− m

x

k|k

2

(39)

was used to measure the overall error in the state esti-

mate m

x

k|k

across all time steps. As a metric that takes

into account the estimated state covariance, the Non-

credibility Index (NCI) (Li and Zhao, 2006) given by

NCI =

10

K

K

X

k=1

log

10

x

k

− m

x

k|k

>

P

−1

k|k

x

k

− m

x

k|k

x

k

− m

x

k|k

>

Σ

−1

k

x

k

− m

x

k|k

(40)

was used, where Σ

k

is the mean-square-error matrix.

The filter is said to be optimistic if it underestimates

the actual error, which is indicated by NCI > 0. Per-

fectly credible filter would provide NCI = 0, that is,

it would neither overestimate nor underestimate the

actual error.

Table 1: The average root-mean-square error.

BQ Classical

UT 10.544 ± 0.048 11.081 ± 0.159

GH5 10.740 ± 0.070 10.257 ± 0.133

GH7 10.306 ± 0.053 9.855 ± 0.133

GH10 10.431 ± 0.058 9.705 ± 0.120

Tables show average values of the performance

metrics across simulations with estimates of ±2 stan-

dard deviations (obtained by bootstrapping (Wasser-

man, 2006)). As evidenced by the results in Table 1,

the BQ provides superior RMSE performance only

for the case of UT σ-points. In the classical quadra-

ture case the performance improves with increasing

number of σ-points used. This trend is not observed

in the BQ case. We suspect that this is due to the

hyper-parameters θ

f

, θ

h

being fixed to the same value

regardless of the number of σ-points. These act ef-

fectively as a training set of the GP model and thus,

it would make sense to use different values of hyper-

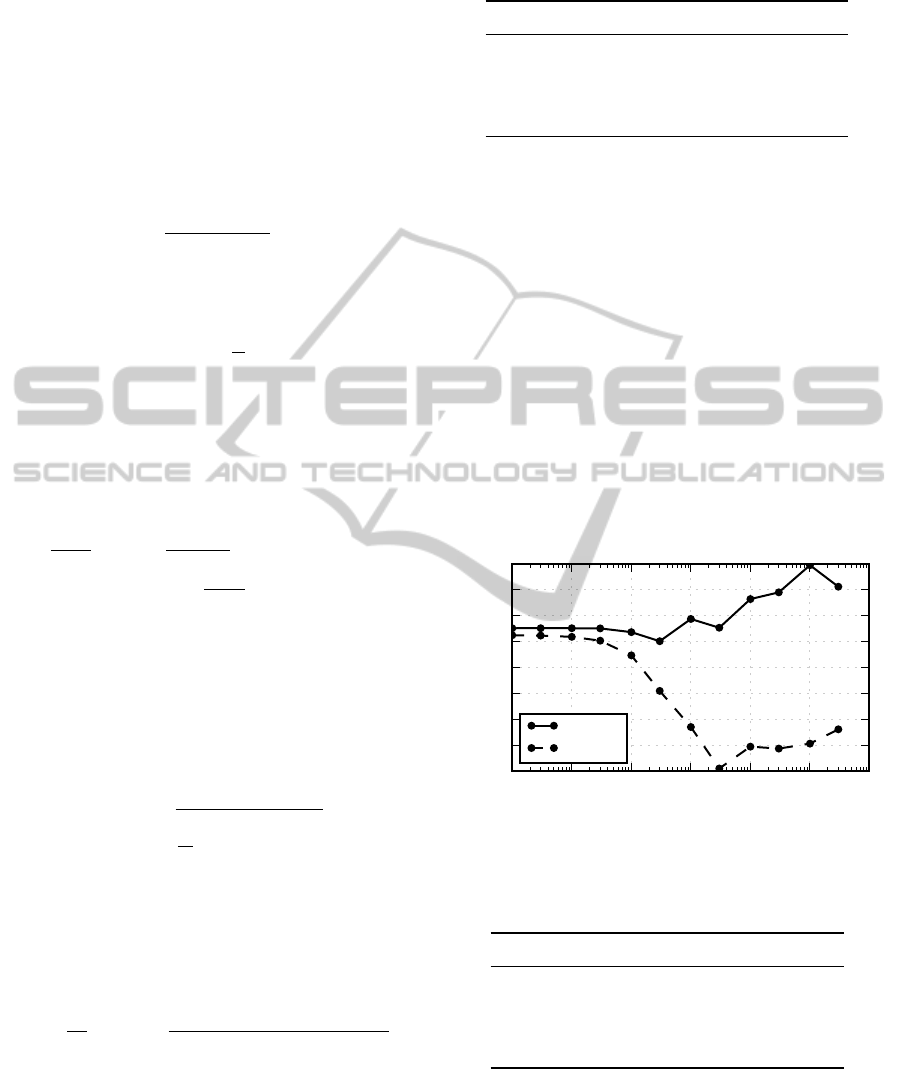

parameters with training sets of different sizes. Fig-

ure 2 illustrates the effect of changing lengthscale

on the overall performance of the BHKF with UT σ-

points. The self-assessment of the filter performance

10

−3

10

−2

10

−1

10

0

10

1

10

2

10

3

`

5

6

7

8

9

10

11

12

13

RMSE, NCI

RMSE

NCI

Figure 2: Sensitivity of BHKF performance to changes in

the lengthscale hyperparameter `. The choice ` = 3 mini-

mizes NCI at the cost of slightly higher RMSE.

Table 2: The average non-credibility index.

BQ Classical

UT 5.106 ± 0.010 12.071 ± 0.045

GH5 4.977 ± 0.013 10.228 ± 0.065

GH7 4.321 ± 0.009 9.256 ± 0.070

GH10 3.647 ± 0.010 8.042 ± 0.077

is less optimistic in the case of BQ, as indicated by

the lower NCI in the Table 2. This indicates that the

BQ based filters are more conservative in their covari-

ance estimates. This is a consequence of including

additional uncertainty (integral variance), which the

classical quadrature-based filters do not utilize.

BayesianQuadratureinNonlinearFiltering

385

6 CONCLUSIONS

In this paper, we proposed a way of utilizing uncer-

tainty associated with integral approximations in the

nonlinear quadrature-based filtering algorithms. This

was enabled by the Bayesian treatment of quadrature.

The proposed filtering algorithms were tested on a

univariate benchmarking example. The results show

that the filters utilizing additional uncertainty pro-

vided by the BQ show significant improvement in

terms of credibility of their estimates.

Proper setting of the hyper-parameters is crucially

important for achieving competitive results. The need

for a principled approach for dealing with the hyper-

parameters could prompt further research. Another

possible research direction could be concerned with

the adaptive placement of σ-points based on the pos-

terior integral variance.

ACKNOWLEDGEMENTS

This work was supported by the Czech Science Foun-

dation, project no. GACR P103-13-07058J.

REFERENCES

Arasaratnam, I. and Haykin, S. (2009). Cubature Kalman

Filters. IEEE Transactions on Automatic Control,

54(6):1254–1269.

Bhar, R. (2010). Stochastic filtering with applications in

finance. World Scientific.

Deisenroth, M. P., Huber, M. F., and Hanebeck, U. D.

(2009). Analytic moment-based Gaussian process fil-

tering. In Proceedings of the 26th Annual Interna-

tional Conference on Machine Learning - ICML ’09,

pages 1–8. ACM Press.

Deisenroth, M. P. and Ohlsson, H. (2011). A General Per-

spective on Gaussian Filtering and Smoothing: Ex-

plaining Current and Deriving New Algorithms. In

American Control Conference (ACC), 2011, pages

1807–1812. IEEE.

Deisenroth, M. P., Turner, R. D., Huber, M. F., Hanebeck,

U. D., and Rasmussen, C. E. (2012). Robust Filtering

and Smoothing with Gaussian Processes. IEEE Trans-

actions on Automatic Control, 57(7):1865–1871.

Duník, J., Straka, O., and Šimandl, M. (2013). Stochastic

Integration Filter. IEEE Transactions on Automatic

Control, 58(6):1561–1566.

Gelb, A. (1974). Applied Optimal Estimation. The MIT

Press.

Gillijns, S., Mendoza, O., Chandrasekar, J., De Moor, B.,

Bernstein, D., and Ridley, A. (2006). What is the en-

semble kalman filter and how well does it work? In

American Control Conference, 2006, page 6.

Girard, A., Rasmussen, C. E., Quiñonero Candela, J., and

Murray-Smith, R. (2003). Gaussian Process Priors

With Uncertain Inputs Application to Multiple-Step

Ahead Time Series Forecasting. In Becker, S., Thrun,

S., and Obermayer, K., editors, Advances in Neural

Information Processing Systems 15, pages 545–552.

MIT Press.

Gordon, N. J., Salmond, D. J., and Smith, A. F. M. (1993).

Novel approach to nonlinear/non-Gaussian Bayesian

state estimation. IEE Proceedings F (Radar and Sig-

nal Processing), 140(2):107–113.

Grewal, M. S., Weill, L. R., and Andrews, A. P. (2007).

Global Positioning Systems, Inertial Navigation, and

Integration. Wiley.

Ito, K. and Xiong, K. (2000). Gaussian Filters for Nonlinear

Filtering Problems. IEEE Transactions on Automatic

Control, 45(5):910–927.

Jiang, T., Sidiropoulos, N., and Giannakis, G. (2003).

Kalman filtering for power estimation in mobile com-

munications. Wireless Communications, IEEE Trans-

actions on, 2(1):151–161.

Julier, S. J., Uhlmann, J. K., and Durrant-Whyte, H. F.

(2000). A New Method for the Nonlinear Transfor-

mation of Means and Covariances in Filters and Es-

timators. IEEE Transactions on Automatic Control,

45(3):477–482.

Kalman, R. E. (1960). A New Approach to Linear Filtering

and Prediction Problems. Journal of Basic Engineer-

ing, 82(1):35–45.

Li, X. R. and Zhao, Z. (2006). Measuring Estimator’s Cred-

ibility: Noncredibility Index. In Information Fusion,

2006 9th International Conference on, pages 1–8.

Maybeck, P. S. (1982). Stochastic Models, Estimation and

Control: Volume 2. Academic Press.

Minka, T. P. (2000). Deriving Quadrature Rules from Gaus-

sian Processes. Technical report, Statistics Depart-

ment, Carnegie Mellon University, Tech. Rep.

Nørgaard, M., Poulsen, N. K., and Ravn, O. (2000). New

developments in state estimation for nonlinear sys-

tems. Automatica, 36:1627–1638.

O’Hagan, A. (1991). Bayes–Hermite quadrature. Journal

of Statistical Planning and Inference, 29(3):245–260.

Osborne, M. A., Rasmussen, C. E., Duvenaud, D. K., Gar-

nett, R., and Roberts, S. J. (2012). Active Learning

of Model Evidence Using Bayesian Quadrature. In

Advances in Neural Information Processing Systems

(NIPS), pages 46–54.

Rasmussen, C. E. and Ghahramani, Z. (2003). Bayesian

monte carlo. In S. Becker, S. T. and Obermayer, K.,

editors, Advances in Neural Information Processing

Systems 15, pages 489–496. MIT Press, Cambridge,

MA.

Rasmussen, C. E. and Williams, C. K. (2006). Gaussian

Processes for Machine Learning. The MIT Press.

Särkkä, S. (2013). Bayesian Filtering and Smoothing. Cam-

bridge University Press, New York.

Särkkä, S., Hartikainen, J., Svensson, L., and Sandblom,

F. (2014). Gaussian Process Quadratures in Nonlin-

ear Sigma-Point Filtering and Smoothing. In Informa-

ICINCO2015-12thInternationalConferenceonInformaticsinControl,AutomationandRobotics

386

tion Fusion (FUSION), 2014 17th International Con-

ference on, pages 1–8.

Sarmavuori, J. and Särkkä, S. (2012). Fourier-Hermite

Kalman Filter. IEEE Transactions on Automatic Con-

trol, 57(6):1511–1515.

Šimandl, M. and Duník, J. (2009). Derivative-free estima-

tion methods: New results and performance analysis.

Automatica, 45(7):1749–1757.

Smith, G. L., Schmidt, S. F., and McGee, L. A. (1962). Ap-

plication of statistical filter theory to the optimal esti-

mation of position and velocity on board a circumlu-

nar vehicle. Technical report, NASA Tech. Rep.

Wasserman, L. (2006). All of Nonparametric Statistics.

Springer-Verlag New York.

BayesianQuadratureinNonlinearFiltering

387