Augmented Hearing Assistance for Elderly People

From Requirements to Implementation

Pierre Barralon¹, Ivo Ramos Maia Martins², Nicole Merkle³ and Stephanie Schwarz⁴

¹ Health Division, Tecnalia Research & Innovation, P. Mikeletegi, 20009, San Sebastian, Spain

² Research and Innovation, ATOS, Calle Viriato, 28040, Madrid, Spain

³ Information Process Engineering, Forschungszentrum Informatik, Haid-und-Neu-Str., 76131, Karlsruhe, Germany

⁴ Innovation Systems Department, Austrian Institute of Technology, Giefinggasse, 1210, Vienna, Austria

Keywords: Remote Assistance, Health Management, Rules, Notifications, Medication Reminder, Voice User Interface, OpenAAL, Embedded Sensors.

Abstract: Remote assistance and health management are key aspects of the EU-funded AHEAD project. The AHEAD project aims at combining and integrating innovative sensing devices already used by elderly people in their daily life (eyeglasses and hearing aids) with information and communications technology (ICT) based modules in order to build a completely innovative remote monitoring product and related services. This paper presents and discusses the data modelling, platform and service development, system integration and architecture from a technical perspective.

1 INTRODUCTION

As people age, their hearing declines. Assistive technology systems make it possible to preserve and improve the quality of life of elderly people with hearing loss. Currently, few hearing aids offer wireless connectivity, and those that do rely on a dedicated physical device acting as a gateway between the hearing aid and the smartphone (Starkey SurfLink Mobile 2 (Starkey, 2015), Phonak ComPilot (Phonak, 2015)). The low usability of such wireless solutions limits the services that can be delivered to the hearing-impaired person. The AHEAD project (Augmented Hearing Experience and Assistance for Daily life) aims to make use of devices already accepted by elderly people in their daily life: eyeglasses and hearing aids. The combination and integration of advanced, innovative sensing with ICT (information and communications technology) based modules will result in a completely new and innovative product (and services). Both the eyeglasses and the typical hearing aid will embed a microphone. The hearing aid will not only be voice-controlled but will also become a communication device. As health management is important, the modified hearing aid will be able to measure vital signs such as heart rate, oxygen saturation and body temperature. These measurements can also be used to detect the user’s emotional state and to act against symptoms of depression. Finally, a 3D inertial sensor records general activity and risky postural behaviours. Our assistant will be wirelessly connected to a smartphone and be part of a smart living environment.

Figure 1: AHEAD first prototype.

This connection is used for compliance, for connecting the hearing aid to home automation systems, and for providing complementary services such as personal alarms (extension or replacement of the social alarm button), medication reminders, house warnings, and cooking and financial management assistance, all verbally conveyed into the wearer’s ear.

Barralon P., Ramos Maia Martins I., Merkle N. and Schwarz S.
Augmented Hearing Assistance for Elderly People - From Requirements to Implementation.
DOI: 10.5220/0005445900590066
In Proceedings of the 1st International Conference on Information and Communication Technologies for Ageing Well and e-Health (ICT4AgeingWell-2015), pages 59-66
ISBN: 978-989-758-102-1
Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

In addition, services currently being developed in other Ambient Assisted Living (AAL) projects can be added later. For example, navigation support for hearing-impaired people could be provided by the ASSISTANT project (ASSISTANT 2012-2015). For users with declining eyesight, HearMeFeelMe helps replace visual and textual information with audio information (HearMeFeelMe 2012-2015).

2 USE CASES

As a first step toward the project’s objectives, comprehensive use cases (Rashid, 2014) were defined for emergency and phone calls, medication reminders, and audiometry self-verification testing. They are described in detail below.

2.1 Emergency Call - Voice Command

An emergency call or alarm is triggered by a voice command from the user. This functionality is similar to current social alarm (push button) devices, but is triggered by voice instead:

a. The user pushes a button on the hearing device to engage a voice command and hears a sound (message) letting him/her know that the system is waiting for voice input.

b. The user says a specific “emergency” keyword. The audio message is streamed to the smartphone, where speech-to-text conversion is performed using the Google API.

c. The message is sent to openAAL to be logged and to decide whether the command is an alert. In case of an alert, openAAL returns a list of preferred phone numbers to the smartphone.

d. The AHEAD mobile app automatically calls the first number on the list (e.g. Emergency Call Center).
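The four steps above can be summarised in a short sketch. It is a hypothetical illustration, not the AHEAD app code: the Google speech-to-text result is assumed to be an already transcribed string, openAAL is replaced by an invented `PlatformStub` class, the keyword set `{"emergency", "help"}` is an assumption, and “calling” a number is represented by returning it.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumed keyword set; the use case itself only mentions an "emergency" keyword.
EMERGENCY_KEYWORDS = {"emergency", "help"}


@dataclass
class PlatformStub:
    """Hypothetical stand-in for openAAL: logs every command and answers alerts."""
    preferred_numbers: List[str]
    log: List[str] = field(default_factory=list)

    def handle_command(self, text: str) -> Optional[List[str]]:
        self.log.append(text)  # step c: the command is always logged
        words = set(text.lower().split())
        if words & EMERGENCY_KEYWORDS:  # step c: decide whether it is an alert
            return self.preferred_numbers
        return None  # not an alert: nothing to dial


def on_voice_command(transcript: str, platform: PlatformStub) -> Optional[str]:
    """Steps b-d: forward the transcript and return the number the app would dial."""
    numbers = platform.handle_command(transcript)
    if numbers:
        return numbers[0]  # step d: first number on the list
    return None


if __name__ == "__main__":
    platform = PlatformStub(preferred_numbers=["112"])
    print(on_voice_command("Emergency", platform))  # 112
```

Keeping the alert decision on the platform side, as in step c, means the phone only needs to know how to dial, while the list of numbers can be maintained centrally.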

2.2 Emergency - Vital Signs

The user wears the AHEAD system with its embedded physiological sensors, which continuously monitor vital signs. Vital parameters (e.g. heart rate) of the assisted person are monitored and evaluated, and actions are triggered depending on the measured health state:

a. Vital values from the sensor are processed every second and sent to the openAAL platform. A dedicated software component analyses the evolution of the heart rate.

b. If the values deviate from the desired range, an alert is triggered.

c. The openAAL platform sends the mobile phone an action to perform, “make phone call”, together with a list of phone numbers.

d. The mobile phone automatically dials the first number in the list (e.g. Emergency Call Center).
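Step a does not say how the evolution of the heart rate is analysed. As one plausible sketch, assuming 1 Hz samples, a moving average over a hypothetical 10-sample window and an assumed acceptable range of 40 to 120 bpm, the check and the resulting “make phone call” action could look like this (class name, thresholds and window size are illustrative only):

```python
from collections import deque
from typing import Deque, List, Optional


class HeartRateMonitor:
    """Hypothetical analysis component: smooths 1 Hz heart-rate samples with a moving average."""

    def __init__(self, numbers: List[str], low: float = 40.0, high: float = 120.0, window: int = 10):
        self.numbers = numbers           # phone numbers returned with the action (step c)
        self.low, self.high = low, high  # assumed acceptable range in bpm
        self.samples: Deque[float] = deque(maxlen=window)  # keeps only the newest samples

    def add_sample(self, bpm: float) -> Optional[dict]:
        """Add one sample; return the action for the phone if the smoothed value deviates."""
        self.samples.append(bpm)
        if len(self.samples) < self.samples.maxlen:
            return None  # wait until the window is full
        mean = sum(self.samples) / len(self.samples)
        if mean < self.low or mean > self.high:  # step b: deviation from the desired range
            return {"action": "make phone call", "numbers": self.numbers, "mean_bpm": mean}
        return None
```

Averaging avoids raising an alarm on a single noisy sample, at the cost of some detection delay.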

2.3 Emergency Call - Push Button

An emergency call or alarm is triggered manually by the user interacting with the smartphone. This functionality is similar to current social alarm (push button) devices:

a. The user pushes a dedicated button on the screen.

b. A text message is sent to openAAL to be logged, and openAAL returns the preferred phone numbers to the smartphone.

c. The AHEAD mobile app automatically calls the first number on the list.

2.4 Phone Call

A regular phone call is initiated by voice command, as with standard Bluetooth headsets:

a. The user pushes a button on the hearing device to engage a voice command and hears a sound (message) letting him/her know that the system is waiting for voice input.

b. The user says a specific keyword and a contact name (e.g. “call Peter”).

c. The call is initiated.
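Step b can be sketched as a small parser that splits the transcript into the keyword and the contact name and looks the name up in the phone book. The function name and the contacts dictionary format are hypothetical; the AHEAD app’s actual command grammar is not described in the paper.

```python
from typing import Dict, Optional


def parse_call_command(transcript: str, contacts: Dict[str, str]) -> Optional[str]:
    """Return the number for a 'call <name>' command, or None if it is not one.

    `contacts` maps lower-case contact names to phone numbers (hypothetical format).
    """
    words = transcript.strip().lower().split()
    if len(words) < 2 or words[0] != "call":
        return None  # not a phone-call command
    name = " ".join(words[1:])  # allows multi-word names such as "aunt maria"
    return contacts.get(name)  # None if the contact is unknown
```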

2.5 Medication Reminder

The AHEAD system reminds the user to take his/her medication on time:

a. Reminder information is entered into the AHEAD system by the doctor.

b. The data (frequency, time, etc.) are transferred to and stored in the openAAL platform.

c. If the reminder conditions are met, an event/message is sent to the smartphone indicating the message to transmit and the modality to use (screen popup or synthesized voice).

d. The user receives the message and should acknowledge it.

2.6 Audiometry Verification Test

This test aims at establishing audiograms that assess a user’s hearing capacity. The test stimulus is presented to the hearing-impaired user using inserts, a headset or a free field speaker:

a. The user hears a voice repeating a stimulus word in different noisy environments (varying signal-to-noise ratio).

b. The user has to identify the stimulus word and tell his/her response to the audiologist.

c. This sequence is repeated several times. Scores and graphics are later presented and discussed.
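The noise level in step a is set through the signal-to-noise ratio, SNR(dB) = 10·log10(Ps/Pn), where Ps and Pn are the mean powers of the speech and noise signals. To present a word at a target SNR, the noise can be scaled by the gain g = sqrt(Ps / (Pn·10^(SNR/10))). The sketch below applies this to sampled signals stored as lists of floats; it is an illustration under that assumption, not the AHEAD test software, and it does not cover calibrating the absolute presentation level.

```python
import math
from typing import List, Tuple


def mean_power(x: List[float]) -> float:
    """Mean squared amplitude of a sampled signal."""
    return sum(v * v for v in x) / len(x)


def snr_db(signal: List[float], noise: List[float]) -> float:
    """Signal-to-noise ratio in decibels: 10 * log10(Ps / Pn)."""
    return 10.0 * math.log10(mean_power(signal) / mean_power(noise))


def mix_at_snr(speech: List[float], noise: List[float], target_db: float) -> Tuple[List[float], List[float]]:
    """Scale `noise` so that the speech-to-noise ratio equals `target_db`, then add it.

    Returns (mixture, scaled_noise). Both inputs must have the same length.
    """
    gain = math.sqrt(mean_power(speech) / (mean_power(noise) * 10 ** (target_db / 10.0)))
    scaled = [gain * n for n in noise]
    mixture = [s + n for s, n in zip(speech, scaled)]
    return mixture, scaled
```

Repeating the presentation over a list of target SNR values produces the varying conditions described in step a.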

Based on these use cases, low-fidelity (low-fi) prototypes were developed and evaluated with elderly people in a first lab user study.

2.7 Implications from User-centred Evaluation

The goal of the first user study was to gather usability and acceptance feedback on the first low-fi prototypes, including the emergency call via button press on the smartphone and via voice command using the hearing glasses, and an early standalone prototype of the audiometry verification test. Preferences for medication intake reminder sounds were also collected. Fifteen elderly users aged between 60 and 79 years participated in this study. A mix of quantitative and qualitative measurements was applied to gain first insights into usability and interaction aspects. A Nexus 5 cellphone and the hearing glasses prototype were used to evaluate the following services. First, user preferences regarding how an emergency call is initiated were explored. Users had to initiate an emergency call via speech command (active), using specific keywords such as “emergency”, “call” or “help”. In the second task, an automatic emergency call triggered by severe vital-sign deviations was simulated (passive). The third task was to initiate an emergency call via a large red push button, presented as a clickable mockup on the smartphone screen (active). The mockups of this Graphical User Interface (GUI) based feature were perceived as easy to understand and use.

The medication intake reminder service was accessed either via the Voice User Interface (VUI), using a speech command, or by selecting the corresponding button on the smartphone. The concept of the medication intake reminder was presented to users, who were asked how they would prefer to use this service. Furthermore, users rated different feedback signals (pre-recorded voice, synthesized voice, a melody, a ringing and a beep sound) to explore their preferences, and were asked which interaction modality would be suitable for confirming medication intake to the system (e.g. speech input or swiping on the smartphone screen).

Finally, an early version of the hearing verification test was presented to participants, who were able to calibrate the volume of the hearing glasses using the available software. User feedback underlined the advantage of performing this test at home, so that users do not need to leave the house or visit a doctor. The results were converted into technical requirements and implemented, as presented in the next section.

3 SYSTEM DEVELOPMENT

The services described in the introduction are those currently implemented. More services (such as health, fitness and affective assistants) will be added in the future. Each of these services uses either sensors embedded in the hearing aid (see section 3.1) or ambient information. These low-level environmental data are transformed, enriched with metadata by a platform and stored in a triplestore. The openAAL platform was created to fulfil these tasks. It is a Context Management platform that offers different interfaces for data exchange between all components. Every service participating in the AHEAD system is connected to the platform during the whole lifecycle of the system. Depending on the components involved, there are different interfaces for communicating with the platform. The following list shows the different components participating in the AHEAD system.

• Wearable devices, such as the hearing glasses from Bruckhoff and the C-SPO1 ear sensor from cosinuss, which measure vital parameters like the heart rate. These sensors are connected via Bluetooth to a mobile phone running the AHEAD app, which transforms the data into an Extensible Markup Language (XML) message and sends it to the platform.

• AHEAD services, which provide the services that assist the end user in his/her daily life.

• The escos connector, for integrating, measuring and controlling the devices in the home of the AHEAD system end user.

Each of these interfaces and the processing of the data in between are described later in this paper. Like the services described above, the openAAL platform is not yet fully implemented; it will be extended with further functionality during the AHEAD project. The interfaces and the transformation of the data into higher-level data are currently being developed. However, a Context Management platform needs more than just the preprocessing and storage of data.

3.1 Hardware Components

As illustrated in Figure 1, the AHEAD system is composed of various interconnected devices: the openAAL platform (see section 3.2), a smartphone, a hearing instrument (either eyeglasses or a behind-the-ear system), hearing verification tools, and embedded sensors, which are presented in more detail below.

3.1.1 Mobile Phone

The smartphone used for the prototype is a Nexus 5 running the Android operating system (version 4.4.4, released in June 2014, API level 19). The Nexus 5 is powered by a 2.26 GHz quad-core Snapdragon 800 processor with 2 GB of RAM. Since Android supports Bluetooth Low Energy (BLE) from version 4.3 onwards, the smartphone can use BLE to connect and exchange data with the Cosinuss physiological sensors and the Bruckhoff audio module. In addition, the smartphone interoperates with the openAAL platform and the AHEAD services. The AHEAD mobile application has an orchestrator responsible for managing (1) background services (such as the Localization Service, SpeechToText Service, TextToSpeech Service, Cosinuss Service (to receive heart rate and accelerometer values) and OpenAAL Service) and (2) the mobile Graphical User Interface (GUI, section 3.4.1).

3.1.2 Hearing Instrument

Figure 2 shows the Bruckhoff hearing aid la belle with a RIC (receiver-in-canal) system.

Figure 2: (Left) Bruckhoff hearing aid la belle with a RIC system mounted on regular eyeglasses. (Right) Cosinuss hearing aid with embedded physiological sensors.

This means that the speaker sits in the ear canal, in front of the eardrum (tympanum). The RIC system can be used for an open fitting, without an ear mould in the ear. This is a good solution for people with normal hearing, because they have the sensation of not wearing anything in the ear, and it is also suitable for people with mild or moderate hearing loss.

3.1.3 Hearing Verification Tools

Before the start of the AHEAD project, the verification procedure was performed as follows: a laptop ran the hearing test software, and a special hardware unit (audiometer) produced the verification sounds for the hearing tests. The test stimulus was presented to the hearing-impaired person using inserts, a headset or a free field speaker. For the AHEAD project, the hearing verification hardware (the laptop and the audiometer) will be replaced with a mobile device (smartphone) and a wireless Bluetooth speaker. In this new configuration, the auditory verification test will be performed at home, without the person needing to visit a professional’s office.

3.1.4 Embedded Sensors

The embedded sensors (oxygen saturation (SpO2) and skin temperature) are integrated in the silicone cap (Figure 2, right), which is positioned in the user’s ear canal. In addition, a 3D accelerometer is located on the PCB near the microcontroller.

To measure SpO2, two different light sources are needed: a red LED and an infrared LED. The LEDs blink alternately and a photodiode detects the outgoing light. The ratio between the constant and the modulated components of the two light intensities allows SpO2 to be estimated, using the Beer-Lambert law and some simplifications.
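The estimation described above is commonly called the “ratio of ratios”: R = (AC_red/DC_red)/(AC_ir/DC_ir), where AC is the pulsatile and DC the constant component of each light intensity. The sketch below takes AC as the peak-to-peak amplitude and DC as the mean of each channel, and maps R to SpO2 with the linear approximation SpO2 ≈ 110 − 25·R often quoted in textbooks. These coefficients are an assumption; an actual sensor such as the one in the AHEAD ear cap needs its own empirical calibration.

```python
from typing import List, Tuple


def ac_dc(samples: List[float]) -> Tuple[float, float]:
    """Return (AC, DC) of one photodiode channel: peak-to-peak amplitude and mean level."""
    return max(samples) - min(samples), sum(samples) / len(samples)


def estimate_spo2(red: List[float], infrared: List[float], a: float = 110.0, b: float = 25.0) -> float:
    """Estimate SpO2 (%) from red and infrared intensities via the ratio of ratios.

    The linear mapping a - b * R uses generic textbook coefficients (an assumption);
    a real sensor needs its own empirical calibration curve.
    """
    ac_red, dc_red = ac_dc(red)
    ac_ir, dc_ir = ac_dc(infrared)
    r = (ac_red / dc_red) / (ac_ir / dc_ir)  # ratio of ratios
    return max(0.0, min(100.0, a - b * r))  # clamp to a valid percentage
```

Normalising each AC component by its own DC level cancels, to a first approximation, factors that affect both wavelengths equally, such as LED drive current and skin thickness.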

The heart rate (HR) is measured optically. Light from an LED is sent into the tissue and a photodiode measures the intensity of the outgoing light. This light is modulated by the blood pumped by the heart, so the heart rate can be detected from the resulting blood volume variation.
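The principle can be illustrated with a simple peak-counting sketch: each heartbeat produces one maximum in the photodiode signal, so the mean interval between peaks gives the heart rate. It assumes an already band-pass-filtered signal; real PPG processing also has to deal with motion artefacts, which this sketch ignores. The function names and the 180 bpm upper limit are assumptions.

```python
import math
from typing import List, Optional


def find_peaks(samples: List[float], min_distance: int) -> List[int]:
    """Indices of local maxima that are at least `min_distance` samples apart."""
    peaks: List[int] = []
    for i in range(1, len(samples) - 1):
        if samples[i] > samples[i - 1] and samples[i] >= samples[i + 1]:
            if not peaks or i - peaks[-1] >= min_distance:
                peaks.append(i)
    return peaks


def heart_rate_bpm(samples: List[float], fs: float, max_bpm: float = 180.0) -> Optional[float]:
    """Estimate heart rate from a (pre-filtered) PPG signal sampled at `fs` Hz.

    Peaks closer together than one beat at `max_bpm` are ignored.
    """
    min_distance = int(fs * 60.0 / max_bpm)
    peaks = find_peaks(samples, min_distance)
    if len(peaks) < 2:
        return None  # not enough beats to measure an interval
    mean_interval_s = (peaks[-1] - peaks[0]) / (len(peaks) - 1) / fs
    return 60.0 / mean_interval_s


if __name__ == "__main__":
    fs = 50.0
    # Synthetic 1.2 Hz pulse wave (72 bpm) standing in for a filtered PPG signal.
    ppg = [math.sin(2 * math.pi * 1.2 * i / fs) for i in range(int(10 * fs))]
    print(round(heart_rate_bpm(ppg, fs)))  # 72
```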

The skin temperature is measured by a thermistor, whose resistance changes with temperature. The resistance is measured by an analog-to-digital converter with a very high resolution (24 bits).
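Converting the converter output into a temperature takes two steps: recover the thermistor resistance, then apply a thermistor model. The sketch below assumes a ratiometric voltage divider with a 10 kΩ fixed resistor and the thermistor on the low side, and an NTC thermistor described by the common Beta model with R0 = 10 kΩ at 25 °C and Beta = 3950 K. These part values and the circuit are hypothetical; the paper does not specify them.

```python
import math

ADC_FULL_SCALE = 1 << 24  # number of codes of a 24-bit converter


def adc_to_resistance(code: int, r_fixed: float = 10_000.0) -> float:
    """Thermistor resistance from a ratiometric divider reading (thermistor on the low side).

    code / 2**24 = R_th / (R_fixed + R_th)  =>  R_th = R_fixed * code / (2**24 - code)
    """
    if not 0 < code < ADC_FULL_SCALE:
        raise ValueError("ADC code out of range (open or shorted sensor?)")
    return r_fixed * code / (ADC_FULL_SCALE - code)


def resistance_to_celsius(r: float, r0: float = 10_000.0, t0_c: float = 25.0, beta: float = 3950.0) -> float:
    """Beta-parameter NTC model: 1/T = 1/T0 + ln(R/R0)/beta, temperatures in kelvin."""
    t0_k = t0_c + 273.15
    inv_t = 1.0 / t0_k + math.log(r / r0) / beta
    return 1.0 / inv_t - 273.15
```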

In addition, an accelerometer has been integrated. Its data are processed by the smartphone, which extracts information on the user’s physical activity that will serve the fitness assistant.

3.2 System Architecture

This section deals with the requirements and the architecture of the openAAL platform and the participating services. It points out which functionality the platform has to provide, given the AHEAD services and their requirements. The openAAL platform was developed at the FZI research centre as an AAL research platform. In addition, openAAL is a distribution of the universAAL middleware; universAAL is an EU research project funded by the European Community’s Seventh Framework Programme. The distribution is developed in several releases. It is a context management middleware that essentially provides the communication infrastructure between devices and AHEAD services. Its goals are to integrate and support different hardware devices, and to collect environmental data measured by sensors and transform them into context information for the AHEAD services. It consists of several components, each executing different tasks.

The AHEAD scenarios require the platform to communicate with different kinds of devices from different manufacturers. In addition, services such as the Emergency Call need real-time communication, because every lost second can be critical to saving the life of an affected person. The modular architecture allows the platform to be extended with further features and functions. A large number of users must be able to use the services running in the AHEAD system. These services are written in different programming languages, and the participating devices use various protocols. Scalability, interoperability, real-time capability and standardization are therefore also requirements of the platform.

The environmental sensors and the user’s interactions with the wearable devices and the UIs supply a lot of valuable data. The AHEAD services use these data to provide recommendations and information appropriate to the user’s situation and context. Furthermore, the platform has to decide which service is the right one at the right moment and in the right situation. To achieve this, the context of the end user has to be pre-processed and evaluated. Considering these challenges, the platform has to accomplish three basic tasks. First, collect sensor data and check whether the data are valid. Second, pre-process and aggregate these data into common machine-readable instances representing the real-world environment of the end user. Third, estimate the needs of the end user from contextual and behavioural information.
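The first two of these tasks can be illustrated with a small sketch: discard implausible readings, then aggregate the valid ones per user and measurement type. The plausibility ranges, the `Reading` structure and the choice of a mean as the aggregate are assumptions; the third task (estimating needs) is left out because the paper does not describe how it is done.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Tuple

# Assumed plausibility ranges; the paper does not define validation limits.
VALID_RANGES: Dict[str, Tuple[float, float]] = {
    "heart_rate": (20.0, 250.0),
    "spo2": (50.0, 100.0),
    "skin_temperature": (25.0, 45.0),
}


@dataclass
class Reading:
    user_id: str
    kind: str
    value: float
    timestamp: float  # seconds since epoch


def is_valid(r: Reading) -> bool:
    """Task 1: reject unknown measurement types and physically implausible values."""
    bounds = VALID_RANGES.get(r.kind)
    return bounds is not None and bounds[0] <= r.value <= bounds[1]


def aggregate(readings: List[Reading]) -> Dict[Tuple[str, str], float]:
    """Task 2: average the valid readings per (user, measurement type)."""
    groups: Dict[Tuple[str, str], List[float]] = {}
    for r in readings:
        if is_valid(r):
            groups.setdefault((r.user_id, r.kind), []).append(r.value)
    return {key: mean(values) for key, values in groups.items()}
```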

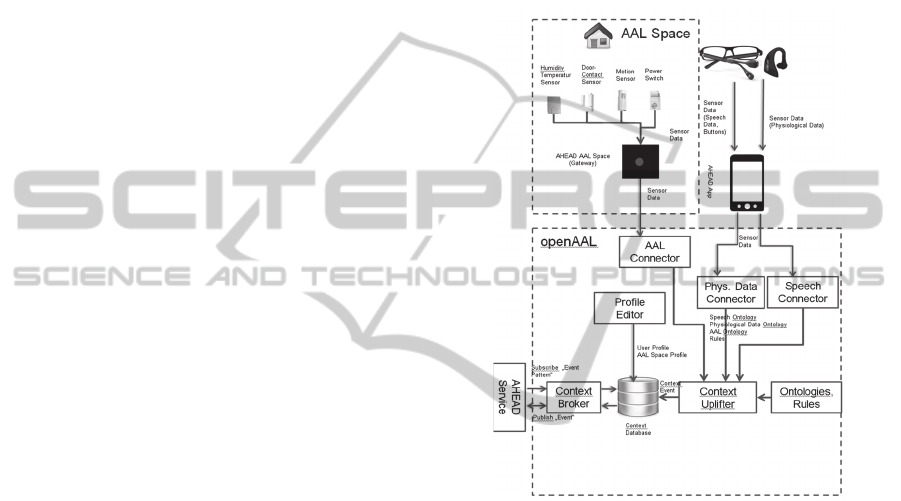

Figure 3 shows the components of the AHEAD system. One component is the AAL space containing sensors and actuators. Usually this AAL space is the sensorised environment (e.g. the home) of an end user; the terms end user and assisted person are used synonymously in this context. Besides this, there are two wearable devices: the hearing aid and its embedded physiological sensors. These devices cannot exchange data directly with the openAAL platform; instead, they communicate via Bluetooth profiles with the end user’s Android mobile phone running the AHEAD application.

Figure 3: AHEAD system components.

The AHEAD mobile application transforms the received data into predefined XML messages and transmits them to the openAAL platform. These XML messages basically contain metadata: user authorization data, the functionalities (and associated software services) the user is interested in, and the values measured by the given sensors. The mobile phone can send different messages to the openAAL platform. For example, if the user requests a service using a voice command, an XML message for “speech commands” is sent to the platform. Such an XML message contains a timestamp, login data (username, password, id, email address…) for authorizing the user at the platform, and the main part: the message node. This node has several attributes that define both the type and the content of the message. Sensor data (e.g. heart rate) are transferred from the mobile phone to openAAL with a different XML structure, “data device”.

The communication between the mobile phone and the openAAL platform is set up through a WebSocket connection. WebSocket was chosen because it allows full-duplex communication over a connection that stays open as long as needed. In addition, it allows several simultaneous connections to be managed, creating a new session every time a new client connects to the platform. The mobile phone connects as a client to the Connectors (Figure 3) and sends the transformed data. Every message type has a corresponding Connector at the platform, responsible for pre-processing and transforming the received data. The transformed data are sent to the Context Uplifter, which enriches them with metadata and transforms them into ontology instances. These ontology instances are saved in a Triple Store, which contains all data about environmental events and thereby provides a history of the contextual states. AHEAD services can request this history through appropriate queries.
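The paper describes the content of these XML messages but not their schema. The sketch below shows one way the “speech commands” message could be built, and how a platform-side dispatcher could read its type to choose a Connector. The element and attribute names (`ahead-message`, `login`, `message`, `type`, `content`, `speechCommand`) are hypothetical, and the password and e-mail fields are omitted. It uses only Python’s standard `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET


def build_speech_command(timestamp: str, username: str, user_id: str, text: str) -> str:
    """Serialize a hypothetical 'speech command' message to an XML string."""
    root = ET.Element("ahead-message")  # hypothetical root element name
    ET.SubElement(root, "timestamp").text = timestamp
    login = ET.SubElement(root, "login")  # authorization data (password etc. omitted here)
    ET.SubElement(login, "username").text = username
    ET.SubElement(login, "id").text = user_id
    # Main part: the message node, whose attributes define type and content.
    ET.SubElement(root, "message", {"type": "speechCommand", "content": text})
    return ET.tostring(root, encoding="unicode")


def message_type(xml_text: str) -> str:
    """What a platform-side dispatcher could read to pick the matching Connector."""
    node = ET.fromstring(xml_text).find("message")
    if node is None:
        raise ValueError("missing message node")
    return node.get("type", "")
```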

In addition, with every event that occurs, the platform delivers the corresponding ontology instances, after a mapping process, to the Context Broker. The Broker parses the service registry and sends the relevant information to the matching service. The precondition is that the service has registered itself with the Context Broker and implements the interfaces prescribed by the Broker. If an AHEAD service runs on a different platform and is implemented in a different programming language, remote communication takes place: the Broker and the AHEAD service exchange JavaScript Object Notation (JSON) messages.
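As a rough illustration of this registration and dispatch step, the hypothetical `ContextBroker` below keeps a registry of handlers per event type and hands each registered service a JSON payload, as in the remote case described above. It is a sketch under these assumptions, not the openAAL or universAAL API; a remote service would receive the JSON over the network rather than through an in-process callback.

```python
import json
from typing import Callable, Dict, List


class ContextBroker:
    """Hypothetical broker: services register for event types and receive JSON payloads."""

    def __init__(self) -> None:
        self.registry: Dict[str, List[Callable[[str], None]]] = {}

    def register(self, event_type: str, handler: Callable[[str], None]) -> None:
        """A service announces which kind of context event it wants to receive."""
        self.registry.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, instance: dict) -> int:
        """Send the event to every matching service; return how many received it."""
        payload = json.dumps({"type": event_type, "instance": instance})
        handlers = self.registry.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)
```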

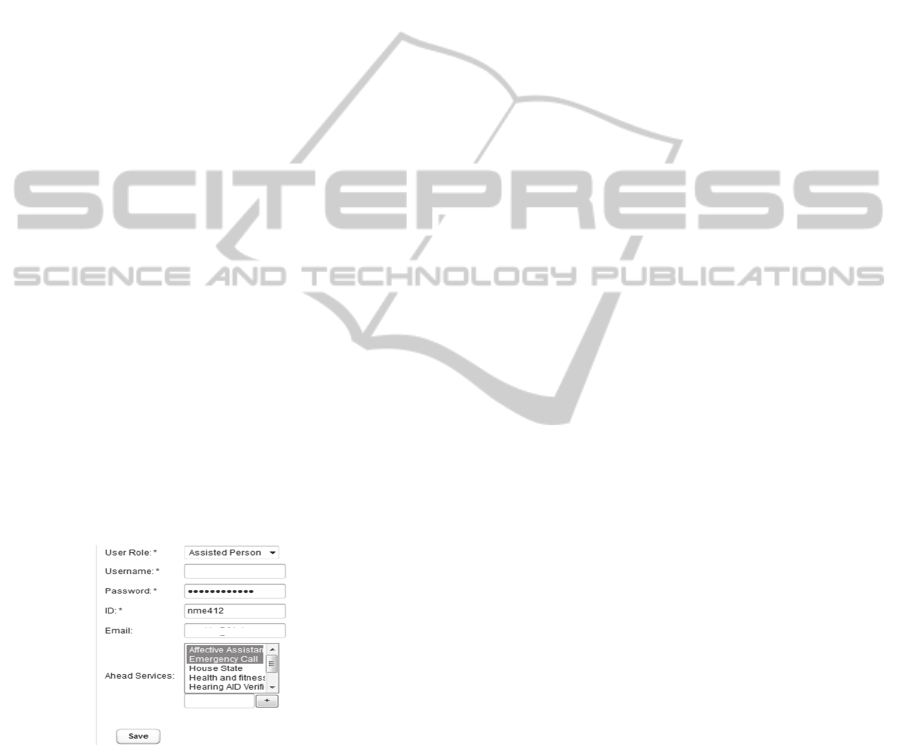

Figure 4: Web interface of the AHEAD system for adding new user profiles.

The Profile Editor is a web interface for introducing new end users to the AHEAD system; Figure 4 shows the corresponding web view. Each user profile contains the user’s authorization data and the services he or she wants to benefit from (e.g. emergency calls, medication reminders, auditory verification test, …). Once this information has been entered for a particular user, only the requested services are activated for him/her.

The components mentioned above are currently implemented. Further components for personalizing the AHEAD services to the needs of the end user will be developed and tested within the AHEAD project. It is planned to extend the context management functionality with machine learning algorithms and reasoning techniques on top of the AHEAD ontologies. The AHEAD ontology models the context of the user’s environment, the user him/herself and the participating services. It was developed to make it possible to reason about the current situation of the end user’s environment and thereby to support knowledge about the AHEAD domain. Ontologies make it possible for services and applications to “understand” each other. The precondition is that the appropriate ontology is available on the web, so that the application can retrieve it at any time. By having the AHEAD services publish derived ontologies, the knowledge of the AHEAD system can be extended. A further goal is to enable services to make semantic requests when looking for information and knowledge about the AHEAD domain.
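To make the Context Uplifter and Triple Store described earlier more concrete, here is a toy in-memory triple store with wildcard pattern queries. The `ahead:` identifiers are invented for illustration and are not taken from the AHEAD ontology; a real deployment would use an RDF triplestore queried with SPARQL.

```python
from typing import List, Optional, Set, Tuple

Triple = Tuple[str, str, str]


class TripleStore:
    """Toy in-memory store of (subject, predicate, object) triples."""

    def __init__(self) -> None:
        self.triples: Set[Triple] = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self.triples.add((subject, predicate, obj))

    def query(self, subject: Optional[str] = None, predicate: Optional[str] = None,
              obj: Optional[str] = None) -> List[Triple]:
        """Return matching triples, sorted; None acts as a wildcard (like a SPARQL variable)."""
        return sorted(
            t for t in self.triples
            if (subject is None or t[0] == subject)
            and (predicate is None or t[1] == predicate)
            and (obj is None or t[2] == obj)
        )
```

An uplifted heart-rate measurement would become several triples about one observation node, and a service asking for a user’s history would query by the user identifier.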

It is quite conceivable to introduce a learning mode into the AHEAD system, during which the system learns from the behaviour, activities, preferences and needs of the end user in order to provide the most appropriate level of assistance.

3.3 Implementation and Evaluation of the Services

This section briefly describes the results of the first Remote Integration Workshops (November 2014 and March 2015), in which we integrated three main components of the AHEAD system: the end user with his/her mobile phone, hearing aid and embedded physiological sensors; the openAAL platform; and the desktop computer of a doctor setting up medication reminders (Figure 1) through a web interface (see section 3.4.2). The purposes of the tests were to verify the authentication procedure, the flow of information between the three components, and the business logic.

3.3.1 Emergency Triggered by Vital Signs

First, we tested the system with only one user connected to the AHEAD system. The user was wearing the in-the-ear physiological sensor (Figure 2, right) connected to a smartphone. Measurements were received periodically (every 2 seconds) by the smartphone and automatically forwarded to the openAAL platform, which in turn redirected them to the “Rules and Notifications management” service. The test was made with simple rules (for instance, if Heart Rate > 60 then make an emergency phone call). The “Rules and Notifications management” service successfully sent such a notification back to the openAAL platform whenever a rule was met. Finally, openAAL properly processed this notification and redirected it to the smartphone, which automatically triggered the emergency call. Second, we tested how the whole system reacted to measurements coming from two different end users with different smartphones. Two emergency phone calls were automatically triggered, demonstrating that bidirectional communication with two end users, two smartphones and different sensors was successfully accomplished (Barralon, 2014).
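The simple rule used in this test can be expressed as data and evaluated for each incoming measurement. The sketch below is a hypothetical model of that logic; the `Rule` fields and the operator table are assumptions, not the schema of the Rules and Notifications management service. In this sketch, tagging each notification with the user identifier is what would let the platform route it back to the right smartphone in the two-user test.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Dict, List

# Comparison operators a rule may use (assumed set).
OPERATORS: Dict[str, Callable[[float, float], bool]] = {
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
}


@dataclass(frozen=True)
class Rule:
    measurement: str  # e.g. "heart_rate"
    op: str           # key into OPERATORS
    threshold: float
    action: str       # notification sent back when the rule is met


def evaluate(rules: List[Rule], user_id: str, measurement: str, value: float) -> List[Dict[str, str]]:
    """Return one notification per rule met by this measurement, tagged with the user."""
    notifications = []
    for rule in rules:
        if rule.measurement == measurement and OPERATORS[rule.op](value, rule.threshold):
            notifications.append({"user": user_id, "action": rule.action})
    return notifications
```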

3.3.2 Medication Reminders

The Medication Reminders test aimed at evaluating whether reminders were properly set and properly delivered to the correct end user (mobile phone). From a desktop computer, a tester playing the role of a doctor entered a list of reminders. This list was formatted by an AHEAD service (running outside the openAAL platform) and sent to the openAAL platform, which was in charge of redirecting each medication reminder to the correct smartphone at the predefined day and time. This scenario was executed successfully. In addition, the current implementation of the AHEAD reminder service allows specifying which modality (visual popup message, Figure 5, right, or synthesized audio message) is used to convey the message. Both modalities worked. The time between setting up the reminder and its delivery was judged to be short (less than a few seconds) by the tester.

3.4 Graphical User Interfaces

3.4.1 Smartphone

Figure 5: Main activity (left); Reminder popup (right).

Figure 5 presents two screenshots of the AHEAD mobile application. On the left, the main screen is shown with its four menus: emergency, phone call, list of warnings/reminders, and various information (e.g. weather forecast). On the right, a visual medication reminder popup is shown.

3.4.2 Rules and Notifications Management Service

The Rules and Notifications management service is a web application composed of a web service, an SQL (Structured Query Language) Server database and a web socket client. The web service is responsible for accessing and executing the stored procedures created in the database, which are used to manipulate (insert, delete or modify) data in the database. The web socket client is responsible for the bidirectional communication with the openAAL platform. The aim of this service is to allow caregivers and medical professionals to manage rules, notifications and activities to be performed (in the case of the affective assistant rules) according to a set of predefined and personalized rules (Figure 6) based on individual changes in the end users’ health status and/or their environment.

Figure 6: Personal Alarms configuration.

These notifications, in turn, will facilitate the day-to-day work of medical professionals and of formal and informal caregivers, in addition to providing support to users related to their individual health status. The system is based on a closed cycle that involves end users, medical professionals and informal caregivers. It combines the orchestration of services with an underlying efficient network-based event management solution.

Selecting a particular rule opens the “Notification Configuration” webpage (not shown). This page allows configuring the type of notification associated with the personal alarm rule (email, SMS or phone call).

There is also an Affective Assistant configuration page, which proposes the most suitable daily activity or suggests a new action (e.g. meet a friend, go for a walk, take medication, or perform an aerobic activity) based on the analysis of the vital sign parameters that determine the user’s current emotional state. If a sufficiently long-lasting low emotional state is detected, the user is advised to do an alternative activity.
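The description above leaves open how “sufficiently long-lasting” is measured. Assuming the vital-sign analysis already produces a time-ordered sequence of emotional-state labels, one simple interpretation is a run-length check. The `low` label, the three-step minimum, the selection index and the function name are hypothetical parameters, not the Affective Assistant’s actual logic.

```python
from typing import Iterable, Optional

# Example actions taken from the configuration page described above.
SUGGESTIONS = ("meet a friend", "go for a walk", "perform an aerobic activity")


def suggest_activity(states: Iterable[str], min_duration: int = 3, choice: int = 0) -> Optional[str]:
    """Return a suggestion once `min_duration` consecutive 'low' states have been seen.

    `states` is a time-ordered sequence of emotional-state labels (hypothetical format).
    """
    run = 0
    for state in states:
        run = run + 1 if state == "low" else 0  # reset on any other state
        if run >= min_duration:
            return SUGGESTIONS[choice % len(SUGGESTIONS)]
    return None
```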

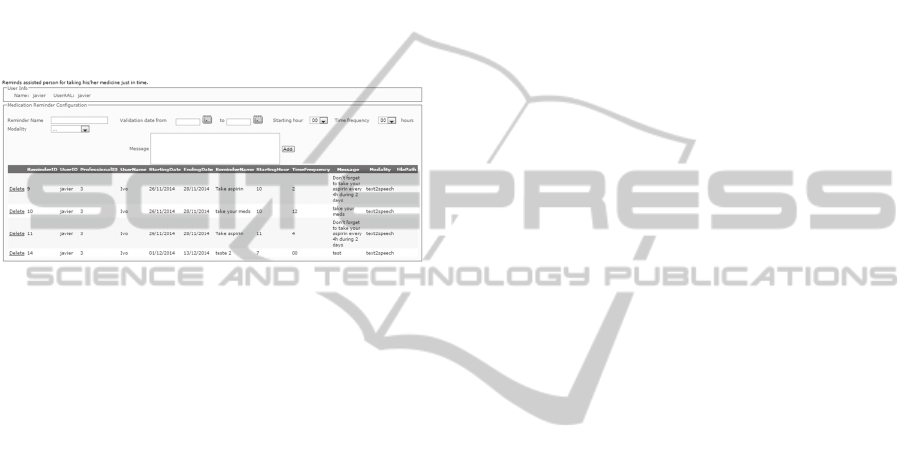

The “Medication Reminder” configuration page allows the caregiver and the medical professional to configure the medication reminders for the end user. It is also possible to configure the timing of the reminders: the first intake hour, the intake period (for instance, every four hours) and the start and end dates between which the reminder is active (Figure 7).
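The timing fields on this page (first intake hour, intake period, start and end dates) are enough to expand a reminder into concrete intake times. This sketch assumes that the period runs continuously across days and that the end date is inclusive; neither detail is stated in the paper, and the function name is hypothetical.

```python
from datetime import date, datetime, time, timedelta
from typing import List


def reminder_times(start: date, end: date, first_hour: int, period_hours: int) -> List[datetime]:
    """All intake times from `first_hour` on `start`, every `period_hours`, until the end of `end`."""
    if period_hours <= 0:
        raise ValueError("period_hours must be positive")
    current = datetime.combine(start, time(hour=first_hour))
    stop = datetime.combine(end + timedelta(days=1), time(0))  # end date is inclusive
    step = timedelta(hours=period_hours)
    times = []
    while current < stop:
        times.append(current)
        current += step
    return times
```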

Figure 7: Medication Reminders configuration.

It is also possible to view all medication reminders for all users. A “Send to OpenAAL” button updates the list in the openAAL platform. The communication takes place between the web socket server and a web socket client, using a JSON message that contains the list of reminders in XML (eXtensible Markup Language) format (Wulf, 2014).
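The nesting of an XML reminder list inside a JSON message can be sketched with the standard `json` and `xml.etree.ElementTree` modules. The field names (`type`, `payload`, the `reminder` element and its attributes) are assumptions for illustration; the actual message format is documented in the project deliverable (Wulf, 2014), not here.

```python
import json
import xml.etree.ElementTree as ET
from typing import Dict, List


def reminders_to_xml(reminders: List[Dict[str, str]]) -> str:
    """Serialize reminders as <reminders><reminder .../>...</reminders> (hypothetical schema)."""
    root = ET.Element("reminders")
    for reminder in reminders:
        ET.SubElement(root, "reminder", reminder)  # each field becomes an XML attribute
    return ET.tostring(root, encoding="unicode")


def build_update_message(reminders: List[Dict[str, str]]) -> str:
    """Hypothetical JSON envelope whose payload is the XML reminder list."""
    return json.dumps({"type": "medicationReminders", "payload": reminders_to_xml(reminders)})


def parse_update_message(message: str) -> List[Dict[str, str]]:
    """Receiver side: unwrap the JSON, then parse the embedded XML list."""
    xml_text = json.loads(message)["payload"]
    return [dict(node.attrib) for node in ET.fromstring(xml_text)]
```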

3.5 Voice User Interface

Besides the graphical interfaces presented earlier, the user interacts with the AHEAD system through voice commands (e.g. “help”, “emergency”, “call Peter”) and audio feedback, using either pre-recorded audio files or synthesized voice messages (“It’s time to take your medication”). During the second AHEAD lab trial, users expressed a preference for pre-recorded messages (recorded by themselves or by relatives) over synthesized voices.

4 CONCLUSION AND OUTLOOK

Following a user-centred design approach, the first service components and interaction modalities of the AHEAD system presented in this work have been developed: the emergency call via voice command and push button, as well as an early prototype of the Audiometry Verification Test, were evaluated with end users in a first lab study. The second study, an upcoming task-based lab evaluation involving elderly users, will aim to gather feedback on the user experience and perceived interaction quality of selected advanced multimodal AHEAD components, namely the Activity Tracking module, the Medication Reminder and the Audiometry Verification Test, which will be used in a smartphone application. On the basis of the gathered user feedback, the system components will be improved towards enhanced interaction quality for hearing-impaired older users. The remaining services are currently under development and will be the focus of a third lab evaluation study, which will finally pave the way for deploying the fully integrated AHEAD system in a long-term field trial by the end of 2015.

ACKNOWLEDGEMENTS

The research presented in this paper is conducted as

part of the AHEAD project (AAL-2012-2-167),

which is partially funded by the European Ambient

Assisted Living Joint Programme and the National

Funding Agencies from Austria, Denmark, Germany

and Spain. Iñigo Dorronsoro, Javier Camarena,

Johannes Kreuzer, Olaf Grumptmann and Jürgen

Braun have also strongly contributed to the hardware

and software developments of all components

presented in this paper.

REFERENCES

Phonak, 2015. http://www.phonak.com/com/b2c/en/products/wireless-accessories/products/compilot/hearing-aid-accessory.html.

Starkey, 2015. http://www.starkey.com/hearing-aids/surflink-wireless-accessories/surflink-mobile.

ASSISTANT, 2012-2015. http://www.aal-assistant.eu/.

HearMeFeelMe, 2012-2015. http://www.aal-europe.eu/projects/hear-me-feel-me/.

Rashid, A., Wulf, L., Garschall, M., 2014. Augmented Hearing for elderly people – User Requirements and Use Cases. 4. AAL-Workshop auf der Mensch & Computer 2014 „Senioren interaktiv unterwegs – (Kooperations-) Systeme gestalten“.

Barralon, P., Camarena, J., Dorronsoro, I., Merkle, N., Ramos, I., Kreuzer, J., Grumptmann, O., 2014. AHEAD project Deliverable D3-3 - First integrated prototype.

Wulf, L., Barralon, P., Kreuzer, J., Perez, M., Ramos, I., 2014. AHEAD project Deliverable D3-2 - Low-fi Interface Prototypes.