Multi Touch Shape Recognition for Projected Capacitive Touch Screen

I. Guarneri¹, A. Capra¹, G. M. Farinella², F. Cristaldi² and S. Battiato²

¹ STMicroelectronics, Computer Vision Group, Stradale Primosole, Catania, Italy

² Department of Mathematics and Computer Science, University of Catania, Catania, Italy

Keywords: Shape Recognition, Projected Capacitive Touch Screen, Mutual Sensing.

Abstract: Devices equipped with touch screens are now widespread. One of the most significant factors behind this success is their easy and intuitive interface, which allows friendly user-device interaction. Touch shape recognition has contributed to the realization of this type of interface. In this paper we propose a solution able to discriminate among different classes of touch shapes. We focus on the problem of recognizing typical touches performed on mid-sized devices such as tablets and phablets. The proposed solution discriminates among single finger, multiple fingers and palm, reaching high recognition accuracy while maintaining low computational complexity.

1 INTRODUCTION

In the last decade, sales of handheld devices have reached high volumes, and the spread of these devices has increased the need to improve user-device interaction through simple and intuitive interfaces. The great revolution in this field was brought about by the introduction of the touch screen. Selecting a function by touching the screen is a natural gesture that lets the user interact with the device in an easier and clearer way. Researchers aim to further improve touch screen functionality by developing new algorithms that support human-device interaction.

Some of the classic touch-based functions are zooming, image rotation and web page scrolling. However, with the wide diffusion of larger screens (from 5” to 10”), new problems must be solved in touch recognition. Because of the screen size, other conductive parts of the body can touch the screen together with the fingers. For example, a desirable feature is the recognition of the palm touching the panel: this involuntary touch usually inhibits other touch functions, such as selecting an icon or scrolling pictures. Another example is typing on the device's keyboard with the fingers while the palm simultaneously touches the screen. To use the device effectively, the touch recognition engine should be able to recognize palm touches and reject them.

Another shape that should be recognized to improve the interface interaction is the ear touch (Guarneri et al., SPIE 2013). This shape can occur on phablets (with a screen size of around 5”) and on devices with lower panel resolution. Recognizing this class could allow the elimination of the proximity sensor used to switch from touch to talk functionality.

Although shape recognition is a key topic in different application contexts (Escalera et al., 2011; Azzaro et al., 2011; Zhang et al., 2004; Daliri et al., 2008; Belongie et al., 2002; Battiato et al., 2012; Farinella et al., 2006), it has not yet been widely discussed in the context of touch screens, and few works have been proposed in the literature. Zhang et al. (2011) proposed a technique able to discriminate among different shapes acquired by touch displays. The authors exploit the integral image obtained by summing the signal data along rows and columns. The resulting x and y curves are segmented into peaks and valleys, and fingers are identified by applying a threshold to the peak values. Although the method is fast, it cannot guarantee robustness when shapes are acquired in critical conditions, such as with slightly wet fingers (Guarneri et al., ICCE 2013).

Guarneri I., Capra A., Farinella G., Cristaldi F. and Battiato S.

Multi Touch Shape Recognition for Projected Capacitive Touch Screen.

DOI: 10.5220/0004665601110117

In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 111-117

ISBN: 978-989-758-009-3

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Westerman (2008) identifies and discriminates between fingertips, thumbs, palms and cheeks. To extract all simultaneous touches, the input capacitive map is first segmented. Then a measure of the regularity of each patch is computed as the ratio of the patch’s spatial energy minus its peak energy to the patch’s total energy. The eccentricity of each patch is also computed. Shape discrimination is mainly based on these two parameters, eccentricity and regularity: thumb contacts are distinguished from fingertip contacts using the patch eccentricity, and the cheek is discriminated from the ear because the former is considered a regular shape, while the latter is considered an irregular one.
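The Python sketch below illustrates the row/column projection idea described above for Zhang et al. (2011). It is not the patented method. The function names, the simple local-maximum peak test and the `peak_threshold` value are our own illustrative assumptions.

```python
def projections(cap):
    """Sum a capacitance map (list of equal-length rows) per column and per row."""
    x_profile = [sum(column) for column in zip(*cap)]  # one value per column
    y_profile = [sum(row) for row in cap]              # one value per row
    return x_profile, y_profile


def count_peaks(profile, peak_threshold):
    """Count local maxima of a 1D profile whose value exceeds peak_threshold.

    A plateau of equal values is counted once (at its first element).
    """
    peaks = 0
    for i, value in enumerate(profile):
        left = profile[i - 1] if i > 0 else float("-inf")
        right = profile[i + 1] if i + 1 < len(profile) else float("-inf")
        if value > peak_threshold and value > left and value >= right:
            peaks += 1
    return peaks


# Two finger-like blobs lying on the same rows of a 3x7 toy map.
cap = [
    [0, 2, 0, 0, 0, 2, 0],
    [2, 9, 2, 0, 2, 9, 2],
    [0, 2, 0, 0, 0, 2, 0],
]
x_profile, y_profile = projections(cap)
print(count_peaks(x_profile, 5), count_peaks(y_profile, 5))  # 2 1
```

Because the two blobs share the same rows, they collapse into a single peak of the y profile. Only the x profile separates them.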

Guarneri et al. (SPIE 2013) proposed a shape recognition engine to discriminate typical shapes on devices equipped with touch panels smaller than 5”: finger, ear, cheek and hand held. The authors presented a technique that exploits different shape descriptors, such as the area, the degree of connection, the filling degree, and the numbers of central and peripheral untouched lines. Another work by Guarneri et al. (ICCE 2013) proposed a shape recognition method based on a Principal Component Analysis transformation coupled with a decision tree for classification. This approach mainly targets devices with panels up to 4” in size. It is a multi-touch technique focused on discriminating fingers versus palm. The authors also investigated recognition on wet panels.

This paper proposes a solution for multi-touch shape recognition built on Guarneri et al. (SPIE 2013; ICCE 2013). With respect to those previous solutions, the technique proposed here has lower computational complexity and takes into account more shape classes. Moreover, a temporal check is introduced to make the recognition engine more robust.

The paper is organized as follows: Section 2 details the proposed multi-touch recognition system. Section 3 briefly summarizes the hardware prototype used in the experiments. Section 4 reports the experimental results. Finally, Section 5 concludes the paper.

2 PROPOSED MULTI TOUCH RECOGNITION

The proposed solution takes into account the following ten classes of touches: one or more isolated fingers (up to five fingers), two or more fingers touching each other (up to five fingers), and the palm. The technique can easily be extended to more than five touching fingers. This value was chosen only because it is usually the maximum number of fingers used to perform typical gestures such as scrolling or flipping. Conversely, there is no limit on the number of isolated fingers.
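For reference, the ten classes can be enumerated programmatically. The label strings and the `touch_classes` helper below are our own hypothetical naming. They follow the class list given in Section 4.

```python
MAX_FINGERS = 5  # upper bound on the number of fingers used for the classes


def touch_classes(max_fingers=MAX_FINGERS):
    """List the touch classes in the order used in the experiments."""
    classes = ["single finger"]
    for n in range(2, max_fingers + 1):
        classes.append(f"{n} separated fingers")
        classes.append(f"{n} touching fingers")
    classes.append("palm")
    return classes


print(len(touch_classes()))  # 10
```

Raising `max_fingers` adds classes with more fingers, in line with the remark that the technique can be extended beyond five fingers.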

Three main steps are involved in the proposed pipeline: capacitance map segmentation, shape representation, and shape recognition.

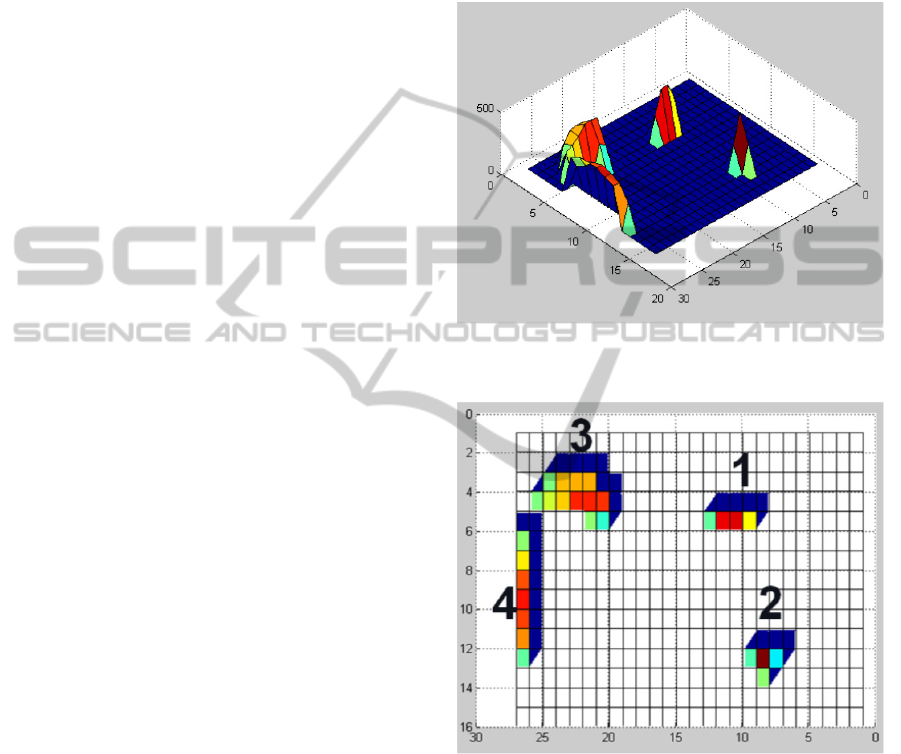

Figure 1: Capacitance map with three touches: two fingers and a palm.

Figure 2: 2D representation of the capacitance map shown in Figure 1: two fingers (patches 1 and 2) and a palm (patches 3 and 4).

2.1 Capacitance Map Segmentation

To deal with noisy spikes, a noise removal filter is applied to each acquired capacitance matrix (i.e., a 16×27 matrix containing raw data). The filtered frames are then analysed, and cells are aggregated into patches using a watershed-like algorithm (Gonzalez, 2008). During the patch aggregation step, the number of sensed capacitive nodes in each patch is computed. We use this value to perform a first selection of the patches to be classified. Unlike previous work (Guarneri et al., ICCE 2013), we do not need to normalize and rotate each single shape, thanks to the shape representation described in Section 2.2.

Figure 1 shows the 3D plot of the capacitance map for three simultaneous touches: two fingers and a palm. Note that the palm is made up of two predominant parts, which are correctly extracted as two patches by the watershed algorithm. Taking the map in Figure 1 as an example, four patches are extracted after the segmentation step: two for the fingers and two making up the palm touch. Figure 2 shows the 2D representation of the map in Figure 1 with the labels of the four extracted patches superimposed. After the segmentation step, each patch is described so that patches 1 and 2 can be recognized as fingers, whereas patches 3 and 4 are recognized as palm (not fingers).

Figure 3: Representation of the four extracted patches. The bounding box of each patch is shown, with its upper-left vertex highlighted.
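The paper does not detail the noise filter or the watershed-like aggregation. The Python sketch below makes two assumptions:

- The filter is a plain threshold, as described in Section 3.
- The watershed can be approximated with steepest-ascent hill climbing.

Under these assumptions, every node at or above the threshold climbs to its highest neighbour until it reaches a local maximum. Nodes that reach the same maximum form one patch, and patch sizes (in nodes) are counted along the way. The function name `segment`, the 8-connected neighbourhood and the tie handling are also our own assumptions.

```python
def segment(cap, threshold):
    """Label above-threshold nodes by the local maximum they climb to.

    cap: capacitance map as a list of equal-length rows.
    Returns (labels, sizes): labels is a grid of patch ids (0 = background)
    and sizes maps each patch id to its number of nodes.
    """
    rows, cols = len(cap), len(cap[0])

    def neighbours(r, c):
        # 8-connected neighbourhood, clipped at the panel border
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr or dc) and 0 <= nr < rows and 0 <= nc < cols:
                    yield nr, nc

    def climb(r, c):
        # Steepest ascent: move to the highest strictly greater neighbour
        while True:
            best = (r, c)
            for nr, nc in neighbours(r, c):
                if cap[nr][nc] > cap[best[0]][best[1]]:
                    best = (nr, nc)
            if best == (r, c):
                return best  # local maximum reached
            r, c = best

    labels = [[0] * cols for _ in range(rows)]
    peak_ids = {}
    sizes = {}
    for r in range(rows):
        for c in range(cols):
            if cap[r][c] < threshold:
                continue  # filtered out as noise / untouched node
            peak = climb(r, c)
            pid = peak_ids.setdefault(peak, len(peak_ids) + 1)
            labels[r][c] = pid
            sizes[pid] = sizes.get(pid, 0) + 1
    return labels, sizes
```

A single connected blob with two maxima, such as the two-part palm of Figure 1, is split into two patches. For example, `segment([[0, 5, 9, 5, 3, 6, 8, 0]], 2)` labels the row as `[0, 1, 1, 1, 2, 2, 2, 0]`.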

2.2 Shape Representation

Each patch can be considered as a group of nodes with one maximum, while the remaining nodes have lower values. The first feature of a patch is its area, in terms of number of nodes. Patches formed by a single node are not processed for the final recognition. This minimum area was fixed taking into account the resolution and the sensitivity of the panel used, but it can be modified for different hardware settings. The shape size, in terms of number of nodes, is used to fix the minimum finger size.

We represent a patch by two descriptors: the height and the width of the bounding box containing the patch. The bounding box is computed by comparing the x and y values of all the nodes forming the shape. This fast comparison yields two points. The first has as abscissa the minimum x-value and as ordinate the minimum y-value of all the nodes in the patch. The second has as coordinates the maximum x- and y-values of those nodes. Hence, for each patch box we compute its width, its height, its area and the coordinates of its upper-left corner (Figure 3).

The area is used as a first filter: patches that are too small are excluded from the final recognition. The width and the height are used to identify a potential finger. The upper-left corner, together with the width and the height of the bounding box, is used for the temporal check described in Section 2.4. Figure 3 shows the four boxes computed for the patches shown in Figure 2.
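The bounding-box computation reduces to minimum and maximum comparisons over the node coordinates of each patch. The Python sketch below uses these conventions, all of which are illustrative assumptions, as are the names:

- Boxes are measured in nodes, so a single node has width and height 1.
- x is the column index and y the row index.
- `MIN_AREA = 2` reflects the statement that single-node patches are discarded.

```python
from dataclasses import dataclass

MIN_AREA = 2  # single-node patches are discarded; panel-dependent


@dataclass(frozen=True)
class PatchBox:
    x: int       # column of the upper-left corner (minimum x)
    y: int       # row of the upper-left corner (minimum y)
    width: int   # number of columns spanned
    height: int  # number of rows spanned
    area: int    # number of nodes in the patch


def boxes_from_labels(labels):
    """Compute one bounding box per patch id in a label grid (0 = background)."""
    coords = {}
    for y, row in enumerate(labels):
        for x, pid in enumerate(row):
            if pid:
                coords.setdefault(pid, []).append((x, y))
    boxes = {}
    for pid, pts in coords.items():
        xs = [x for x, _ in pts]
        ys = [y for _, y in pts]
        x0, y0 = min(xs), min(ys)
        boxes[pid] = PatchBox(x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1, len(pts))
    return boxes


def candidate_boxes(labels, min_area=MIN_AREA):
    """First filtering step: keep only patches with at least min_area nodes."""
    return {pid: b for pid, b in boxes_from_labels(labels).items() if b.area >= min_area}
```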

2.3 Shape Recognition

The recognition of the different classes is performed by a decision tree (Quinlan, 1993) trained on a set of manually labeled capacitive maps. The discrimination is based on the width and height of the bounding box, which are used to discriminate fingers from other shapes. For a more effective classification, a further check verifies the adjacency between patches. It uses the same box information to determine whether a patch overlaps or is adjacent to other patches. This check compares the box contours of all patches for intersections, to verify whether a patch is completely isolated from the others or in contact with other patches. When two or more patches are adjacent or overlapping, the presence of a non-finger patch forces all the other adjacent patches into the same class.
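The adjacency check and the propagation of the non-finger label can be sketched as follows. Three choices here are our own assumptions, not the authors' implementation:

- The trained decision tree is replaced by two hypothetical size limits (`MAX_FINGER_WIDTH`, `MAX_FINGER_HEIGHT`), purely for illustration.
- Boxes that share a node or touch along an edge or corner are treated as adjacent.
- Adjacent patches are grouped with a small union-find.

```python
from dataclasses import dataclass

# Hypothetical size limits standing in for the trained decision tree.
MAX_FINGER_WIDTH = 4   # in nodes
MAX_FINGER_HEIGHT = 4  # in nodes


@dataclass(frozen=True)
class Box:
    x: int       # left column
    y: int       # top row
    width: int   # columns spanned
    height: int  # rows spanned


def is_potential_finger(box):
    """Stand-in for the decision tree: small enough boxes are potential fingers."""
    return box.width <= MAX_FINGER_WIDTH and box.height <= MAX_FINGER_HEIGHT


def adjacent_or_overlapping(a, b):
    """True if the boxes share a node or touch along an edge or a corner."""
    return (a.x <= b.x + b.width and b.x <= a.x + a.width and
            a.y <= b.y + b.height and b.y <= a.y + a.height)


def classify(boxes):
    """Return one flag per box: True = finger, False = not finger (palm)."""
    parent = list(range(len(boxes)))

    def find(i):
        # Union-find root lookup with path halving
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Group patches connected through chains of adjacent/overlapping boxes.
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if adjacent_or_overlapping(boxes[i], boxes[j]):
                parent[find(i)] = find(j)

    # One non-finger patch in a group forces the whole group to non-finger.
    group_has_palm = {}
    for i, box in enumerate(boxes):
        root = find(i)
        group_has_palm[root] = group_has_palm.get(root, False) or not is_potential_finger(box)
    return [is_potential_finger(box) and not group_has_palm[find(i)]
            for i, box in enumerate(boxes)]
```

Two examples show the behaviour:

- `classify([Box(0, 0, 8, 6), Box(8, 2, 3, 3)])` returns `[False, False]`, like the palm of Figure 4.
- Four 3×4 boxes placed side by side, as in Figure 5, are all kept as fingers.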

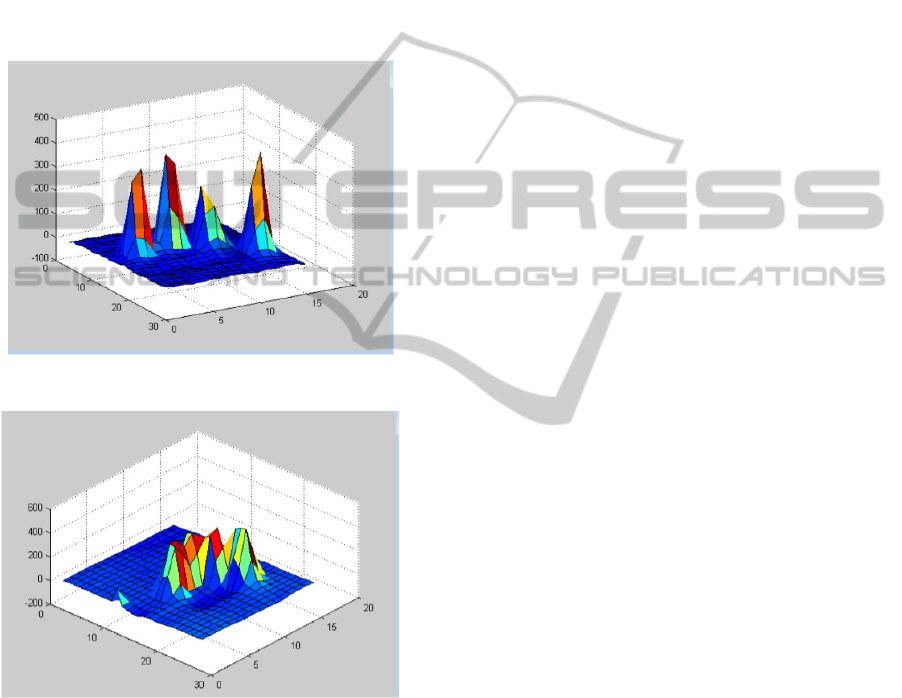

Figure 4 shows a map representing a palm touch. After segmentation, two bounding boxes are extracted. In this case one of the two boxes (the small/blue one) is a potential finger, but it overlaps a non-finger patch. The bigger/yellow box does not satisfy the finger constraints on width and height, so it is a potential non-finger (i.e., a palm). The final classification of the small patch is therefore also non-finger (i.e., a palm).

Figure 4: Map showing a palm touch. Two patches have been extracted. The patches overlap and, since one of them is a potential non-finger (the yellow/bigger one), the other patch (the blue/little one) is also classified as non-finger (i.e., a palm).

Figure 5: Map showing four fingers touching each other. The patches all overlap, and since each patch is classified as a finger (i.e., none is classified as non-finger), the final class is “4 fingers”.

Figure 6: Three toy frames representing a stylized palm touching the panel while it is being removed from the screen. Because the shape shrinks, the last two frames can be wrongly classified as a finger.

Figure 7: Prototype board equipped with a 7” screen.

Figure 5 reports an example of four fingers whose boxes touch each other. For each box, the intersection with the other patches is verified. Since all of them are potential fingers, their final classification is “4 fingers”. To reduce the false finger rate, we perform a further check on the potential finger patches, which takes into account the history (in time) of the touch shape classification. Details of this temporal check are reported in the following section.

2.4 Temporal Analysis

One of the most critical conditions for shape recognition is the moment when a palm is removed from the display. Figure 6 shows a toy example of this condition: a stylized palm being removed from the panel. From the first to the third frame, the palm changes its shape. In these transition steps, the palm touch shrinks from a large area to a small one. This induces a wrong classification of the palm, because its shape becomes more similar to a finger.

To reduce the rate of wrong classifications in this specific condition, a temporal analysis has been introduced into the classification process. Each time a patch is a potential finger, a check is performed that takes into account the touched area in previously classified frames. If the patch intersects or overlaps a patch that was classified as non-finger in previous frames, then the current potential finger patch is classified as non-finger. This improves the classification accuracy for the palm when it is removed from the screen.
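In code, the temporal rule reduces to an overlap test against the boxes labelled non-finger in previous frames. The minimal Python sketch below makes two assumptions:

- Boxes are `(x, y, width, height)` tuples in node units.
- "Intersection/overlaying" means sharing at least one node.

Both choices and the function names are hypothetical.

```python
def boxes_overlap(a, b):
    """Boxes are (x, y, width, height) in node units; True if they share a node."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def temporal_check(is_potential_finger, box, previous_non_finger_boxes):
    """Demote a potential finger that overlaps a patch labelled non-finger earlier."""
    if is_potential_finger and any(boxes_overlap(box, p) for p in previous_non_finger_boxes):
        return False
    return is_potential_finger
```

A shrinking palm, like the last frames of Figure 6, produces a finger-sized box that still lies inside the palm box of an earlier frame, so it is demoted back to non-finger.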

The temporal check can introduce an unwanted behaviour. Suppose the algorithm wrongly classifies a finger touch as non-finger (simply because it does not pass the width and height test). The temporal analysis can then propagate this error through the subsequent frames, so that many correct finger classifications are lost. To avoid this propagation, a counter has been introduced to force a reset. In this way, a shape classification that is too old cannot lead to a wrong classification.
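The paper does not say exactly what the reset counter counts. One possible reading, sketched below in Python, counts consecutive frames in which a patch was demoted to non-finger only because of the stored history. Once that run reaches a hypothetical `reset_after` limit, the history is cleared. The three-frame history length follows Section 4. `TemporalChecker`, `reset_after=5` and the box format are illustrative assumptions.

```python
from collections import deque


def boxes_overlap(a, b):
    """Boxes are (x, y, width, height) in node units; True if they share a node."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


class TemporalChecker:
    """Temporal check with a hypothetical reset counter.

    history keeps, for each of the last history_len frames, the boxes that
    ended up labelled non-finger. inherited_run counts consecutive frames in
    which some patch was demoted only because of that history.
    """

    def __init__(self, history_len=3, reset_after=5):
        self.history = deque(maxlen=history_len)
        self.reset_after = reset_after
        self.inherited_run = 0

    def process_frame(self, patches):
        """patches: list of (box, is_potential_finger); returns final finger flags."""
        if self.inherited_run >= self.reset_after:
            # Too old a label: forget it so a past error stops propagating.
            self.history.clear()
            self.inherited_run = 0
        old_boxes = [b for frame in self.history for b in frame]
        flags, demoted = [], False
        for box, finger in patches:
            if finger and any(boxes_overlap(box, old) for old in old_boxes):
                finger, demoted = False, True
            flags.append(finger)
        self.inherited_run = self.inherited_run + 1 if demoted else 0
        self.history.append([box for (box, _), f in zip(patches, flags) if not f])
        return flags
```

Only inherited non-finger labels advance the counter. A palm that keeps failing the width and height test on its own therefore never triggers the reset.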

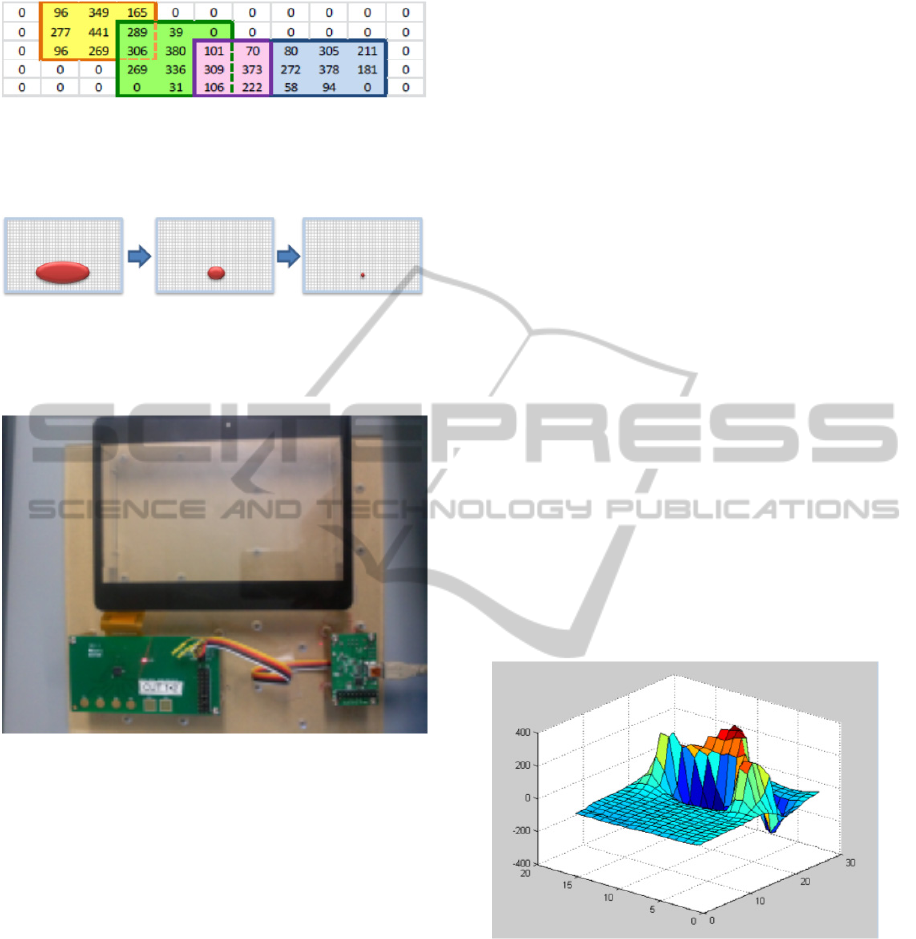

Figure 8: Capacitive map related to a palm touch.

3 HARDWARE PROTOTYPE

To acquire the capacitive maps for testing purposes, we used the prototype board shown in Figure 7. The board is equipped with a 7” capacitive screen, an STM32 processor and an STMT05 microcontroller.

The board is connected to the PC through a USB cable. The embedded software we developed can store sequences of frames; each frame is a matrix of 16×27 capacitive nodes. The maps show spike noise due to the high sensitivity of the nodes. To handle this type of noise, the capacitive values are filtered by applying a threshold at the beginning of the software pipeline. The threshold value was fixed by considering the range of capacitive node magnitudes. A threshold that is too high guarantees the elimination of noise spikes, but it can cause the loss of important touch data. Conversely, a threshold that is too low may not eliminate the noisy data.
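The threshold trade-off can be illustrated on a single row of nodes. In the Python sketch below, the node values (two noise spikes of 25 and 30, touch values from 45 to 200) and the three thresholds are invented for illustration only. They are not measurements from the prototype board.

```python
def apply_threshold(values, threshold):
    """Zero every node below threshold (simple spike-noise filter)."""
    return [v if v >= threshold else 0 for v in values]


# Illustrative row: noise spikes of 25 and 30, a touch rising from 45 to 200.
row = [0, 25, 0, 45, 120, 200, 60, 0, 30, 0]

for threshold in (20, 35, 100):
    print(threshold, apply_threshold(row, threshold))
# 20  -> both spikes survive (threshold too low)
# 35  -> spikes removed, whole touch kept
# 100 -> spikes removed, but touch nodes 45 and 60 lost (threshold too high)
```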

Figure 9: Capacitive map of four separated fingers.

Figure 10: Capacitive map of four touching fingers.

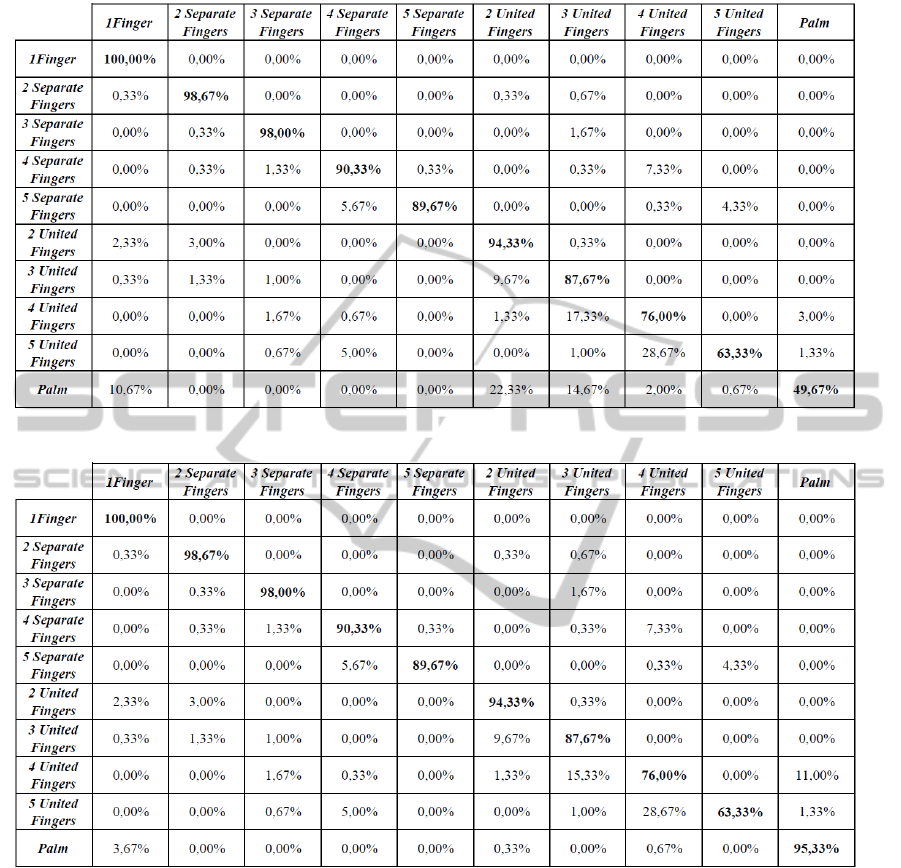

4 EXPERIMENTAL RESULTS

The proposed technique has been tested on a dataset of 3000 capacitive maps covering the following ten classes: single finger, two separated fingers, two touching fingers, three separated fingers, three touching fingers, four separated fingers, four touching fingers, five separated fingers, five touching fingers, and palm. The palm shape is strongly deformable: it changes in size and can also be split into two or more subparts. When the palm is split into multiple parts, the algorithm must be able to classify each single part as belonging to the palm shape.

Figure 8 shows an example of a palm touching the panel and producing two separate touched areas on the screen. Figure 9 shows an example of four separated fingers, whereas Figure 10 shows a capacitance map of four touching fingers. First, we tested the proposed solution without the temporal check: each frame was classified independently, without considering the classification of previous frames. The results are collected in the confusion matrix reported in Table 1. The test was then repeated with the temporal analysis enabled; the results of this second experiment are reported in Table 2.
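Tables 1 and 2 are confusion matrices. As a generic reminder of how such a matrix and its per-class accuracy are computed, here is a short Python sketch. The class names and the toy predictions are made up for illustration and are not the paper's data.

```python
from collections import Counter


def confusion_matrix(pairs, classes):
    """Rows are true classes, columns predicted classes, cells are counts."""
    counts = Counter(pairs)
    return [[counts[(t, p)] for p in classes] for t in classes]


def per_class_accuracy(matrix):
    """Diagonal count divided by row total (0.0 for an empty row)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# Toy predictions, for illustration only.
classes = ["finger", "palm"]
pairs = [("finger", "finger")] * 3 + [("palm", "finger"), ("palm", "palm")]
m = confusion_matrix(pairs, classes)
print(m, per_class_accuracy(m))  # [[3, 0], [1, 1]] [1.0, 0.5]
```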

Analyzing the results reported in the two confusion matrices (Tables 1 and 2), it can be observed that the palm class improves by around 50% when the temporal check is performed. This improvement is due to the fact that the palm changes its area size when it is removed from the screen. In these critical steps, many palm shapes are wrongly classified as fingers, and the temporal check allows many of these misclassified palm maps to be classified correctly. Thanks to its simple implementation, the temporal check has low computational complexity. It only requires storing three binary labels (palm or finger) with the classification of the current patch in the three previous frames.
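One compact way to store the three binary labels mentioned above is a 3-bit shift register per patch. The sketch below assumes that encoding (1 = palm, 0 = finger). The paper does not specify how the labels are packed, and the names `push_label` and `palm_seen_recently` are hypothetical.

```python
HISTORY_FRAMES = 3
MASK = (1 << HISTORY_FRAMES) - 1  # 0b111: keeps only the last three labels


def push_label(history, is_palm):
    """Shift in the newest label (1 = palm, 0 = finger), dropping the oldest."""
    return ((history << 1) | int(is_palm)) & MASK


def palm_seen_recently(history):
    """True if the patch was labelled palm in any of the stored frames."""
    return history != 0


h = 0
for label in (True, False, False, False):
    h = push_label(h, label)
    print(f"{h:03b}", palm_seen_recently(h))
# 001 True, 010 True, 100 True, 000 False
```

With this encoding, a palm label expires after three frames, and the whole history fits in a few bits per patch.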

5 CONCLUSIONS

In this paper we proposed a solution for multi-touch recognition on capacitive displays. The main aim is to discriminate multi-finger touches from palm touches. Representing shapes through the simple features presented allows the different classes of touches to be recognized. The proposed technique achieves good performance in terms of classification accuracy. Future work will be devoted to increasing the recognition accuracy and the number of classes to be recognized. Moreover, algorithms to recognize multi-finger gestures could be useful for applications such as zooming, rotation and flipping of pictures.


Table 1: Results obtained by the proposed approach without temporal check.

Table 2: Results obtained by the proposed approach considering temporal check.

REFERENCES

Zang, J., Guo, Y., Mo, L. (2011). Multi-Touch Detection Method for Capacitive Touch Screens. US 2011/0221701 A1.

Westerman, W. C. (2008). Multi-Touch Input Discrimination. US Patent 0158185.

Gonzalez, R. C., Woods, R. E. (2008). Digital Image Processing, Prentice Hall, 3rd edition.

Guarneri, I., Capra, A., Farinella, G. M., Battiato, S. (2013). Shape Recognition for Capacitive Touch Display. In SPIE-IS&T Annual Symposium Electronic Imaging Science and Technology - Image Processing: Machine Vision Applications VI (EI117).

Guarneri, I., Capra, A., Castorina, A., Battiato, S., Farinella, G. M. (2013). PCA Based Shape Recognition for Capacitive Touch Display. In IEEE International Conference on Consumer Electronics (ICCE).

Escalera, S., Fornés, A., Pujol, O., Lladós, J., Radeva, P. (2011). Circular Blurred Shape Model for Multiclass Symbol Recognition. IEEE Transactions on Systems, Man, and Cybernetics 41 (2), pp. 497-506.

Azzaro, G., Caccamo, M., Ferguson, J., Battiato, S., Farinella, G. M., Guarnera, G., Puglisi, G., Petriglieri, R., Licitra, G. (2011). Objective Estimation of Body Condition Score by Modeling Cow Body Shape from Digital Images. Journal of Dairy Science 94 (4), pp. 2126-2137.


Zhang, D., Lu, G. (2004). Review of Shape Representation and Description Techniques. Pattern Recognition 37 (1), pp. 1-19.

Daliri, M. R., Torre, V. (2008). Robust Symbolic Representation for Shape Recognition and Retrieval. Pattern Recognition 41 (5), pp. 1782-1798.

Belongie, S., Malik, J., Puzicha, J. (2002). Shape Matching and Object Recognition Using Shape Contexts. IEEE Transactions on Pattern Analysis and Machine Intelligence 24 (4), pp. 509-522.

Battiato, S., Farinella, G. M., Giudice, O., Puglisi, G. (2012). Aligning Bags of Shape Contexts for Blurred Shape Model Based Symbol Classification. In IAPR International Conference on Pattern Recognition.

Farinella, G. M., Impoco, G., Gallo, G., Spoto, S., Catanuto, G., Nava, M. B. (2006). Objective Outcome Evaluation of Breast Surgery. In Medical Image Computing & Computer Assisted Intervention.

Quinlan, J. R. (1993). C4.5: Programs for Machine Learning, Morgan Kaufmann Publishers.
