Video Foreground/Background Segmentation using Spatially

Distributed Model and Edge-based Shadow Cancellation

Shian-De Tsai, Jin-Jang Leou and Han-Hui Hsiao

Department of Computer Science and Information Engineering, National Chung Cheng University,

621 Chiayi, Taiwan

Keywords: Video Foreground/Background Segmentation, Spatially Distributed Model, Edge-based Shadow

Cancellation.

Abstract: Video foreground/background segmentation is to extract relevant objects (the foreground) from the

background of a video sequence, which is an important step in many computer vision applications. In this

study, the spatially distributed model is built by a splitting process using Gaussian probability distribution

functions in spatial and color spaces. Then, edge-based shadow cancellation is employed to obtain more

robust segmentation results. The proposed approach can well handle illumination variations, shadow effect,

and dynamic scenes in video sequences. Based on experimental results obtained in this study, as compared

with two comparison approaches, the proposed approach provides the better video segmentation results.

1 INTRODUCTION

Video foreground/background segmentation is to

extract relevant objects (the foreground) from the

background of a video sequence, which is the

important step in many computer vision applications.

Because a video sequence may contain illumination

variations, shadow effect, dynamic scenes, …, video

foreground/background segmentation is a

challenging task.

Existing video foreground/background segmen-

tation approaches include three categories, namely,

thresholding, background subtraction, and motion-

based. The first category of approaches is based on

thresholding pixel differences between two related

frames (two consecutive frames or the current frame

and a background frame). Because segmentation

results are sensitive to thresholding values, various

adaptive thresholding approaches were proposed

(Tsaig and Averbuch, 2002); (Kim and Hwang,

2002).

For the second category of approaches, Heikkila

and Pietikainen (2006) proposed an efficient texture-

based method for background modeling. The local

binary pattern (LBP) texture operator is employed,

which has several good properties for background +

modeling. Zhang et al. (2008) proposed a novel dy-

This work was supported in part by National Science Council,

Taiwan, Republic of China under Grants NSC 98-2221-E-194-

034-MY3 and NSC 99-2221-E-194-032-MY3.

dynamic background subtraction approach based on

the covariance matrix descriptor. The covariance

matrix integrates the pixel-level and region-level

features together and efficiently represents the

correlation between features. Wang et al. (2008)

presented three algorithms (running average, median,

mixture of Gaussian) for modeling the background

directly from the compressed video. Their approach

utilizes DCT coefficients at block level to represent

background, and adapts the background by updating

DCT coefficients. Li et al. (2004) proposed a

Bayesian framework that incorporates spectral,

spatial, and temporal features to characterize the

background appearance. A Bayes decision rule is

derived for classification based on the statistics of

principal features.

For the third category of approaches, motion-

based foreground/background segmentation can be

treated as fitting a collection of motion models to

spatiotemporal image data. Mezaris et al. (2004)

proposed a model-based foreground/background

segmentation approach including three stages: initial

segmentation of the first frame using color, motion,

and position features, a temporal tracking algorithm,

and a trajectory-based region merging procedure.

Wang et al. (2005) proposed a Bayesian network to

model interactions among the motion vector field,

the intensity segmentation field, and the video

segmentation field. The Markov random field is then

89

Tsai S., Leou J. and Hsiao H..

Video Foreground/Background Segmentation using Spatially Distributed Model and Edge-based Shadow Cancellation.

DOI: 10.5220/0004069600890095

In Proceedings of the International Conference on Signal Processing and Multimedia Applications and Wireless Information Networks and Systems

(SIGMAP-2012), pages 89-95

ISBN: 978-989-8565-25-9

Copyright

c

2012 SCITEPRESS (Science and Technology Publications, Lda.)

used to encourage the formation of continuous

regions.

2 PROPOSED APPROACH

In proposed approach, spatial and color information

are used as frame features and the camera is

assumed to be stationary. Each pixel in frame t is

described as a 5-dimensional feature vector

t

x

v

= [x, y,

Y, U, V]

T

, where (x,y) is the pixel coordinate, color is

encoded by the YUV format, and T denotes

transpose. The probability distribution function of

t

x

v

for model component j is given by:

,

)2(

)|(

),(

)())((

2

1

),(),(

),(

1

),(),(

tj

d

xx

tjtjt

tjttjtjt

e

xp

Σ

=

−Σ−−

−

π

ωθ

μμ

vv

v

(1)

where the parameters θ

(j, t)

= {ω

(j, t)

, μ

(j, t)

, Σ

(j, t)

} are

the weight, mean, and covariance matrix of model

component j in frame t, and the dimensionality d is 5.

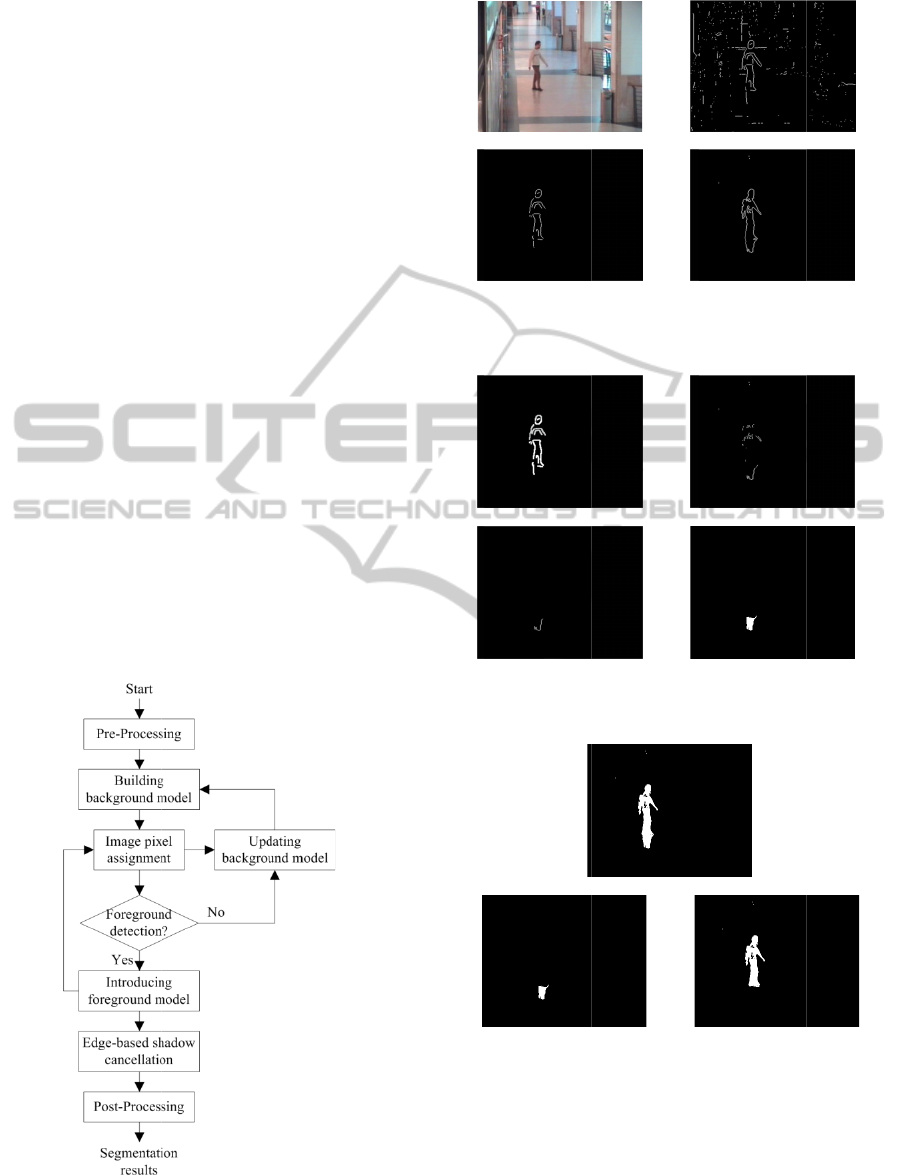

As shown in Figure 1, a spatially distributed

background model, a Gaussian foreground detection

process, and edge-based shadow cancellation are

employed in the proposed approach.

2.1 Building the Background Model

To remove noise and tune the boundaries of

foreground objects, the Gaussian smoothing filter is

applied on each video frame, which is described as:

22

2

2

2

1

(, ) .

2

xy

Gxy e

σ

πσ

+

−

=

(2)

The background model is initialized, based on the

first video frame of a video sequence. It is assumed

that no foreground objects appear in the first frame.

Then, an iterative splitting procedure, namely,

principal direction divisive partitioning (PDDP)

(Boley, 1998) is employed to initialize background

component(s).

Initially, the whole background frame is treated

as a single component, and the support map value of

each background pixel is set to 1. Then, a single

iteration of the splitting procedure divides each

existing component into two new components.

Given the background frame and the current

components, each component having the highest

spatial variance will be determined. Here, it is

assumed that the spatial and color distributions are

independent. Using the spatial covariance matrix of

each component, the eigenvalue

s

j

λ

and the

corresponding eigenvector

s

j

v

of component j with

spatial distribution s can be computed. The splitting

component

s

sp

C

is given by

}.{maxarg

s

jj

s

sp

C

λ

=

(3)

If

s

sp

s

sp

T>

λ

, where

s

sp

T

is a predefined threshold, then

the splitting component is split and a new

component is generated and reassigned as the pixels

x

v

of the splitting component which satisfy

,0)( >⋅−

s

sp

s

sp

s

vx

μ

v

(4)

where

s

sp

μ

is the spatial mean of the splitting

component

s

sp

C

. That is to place a separating plane

through the spatial mean, perpendicular to

s

sp

v

. The

parameter sets of two corresponding components are

then re-estimated based on their newly assigned

pixels, respectively, and the support map value of

each pixel is updated correspondingly. The proposed

approach applies the splitting procedure on both the

spatial and color component frames. The splitting

procedure will be iterated until the largest

eigenvalue of a splitting component is smaller than a

threshold.

Because a component may contain two or more

spatially disconnected regions, which should be split.

Here, the connected component algorithm (Haralick

and Shapiro, 1992) is employed to further split a

component containing two or more spatially

disconnected regions. The initial background model

is thus completely constructed for the background.

For a subsequent frame, each pixel

t

x

v

is assigned

to the most likely model component, i.e., each pixel

can be assigned to the component with the

maximum posterior probability C

map

defined as

))}.|({log(maxarg

jtjmap

xpC

θ

v

=

(5)

Because the spatial and color distributions are

assumed to be independent, the distribution function

in Eq. (5) can be re-expressed as the function of a 2-

D spatial Gaussian and a 3-D color Gaussian with

parameter sets

s

j

θ

and

c

j

θ

for the spatial vector

Ts

t

yxx ],[=

v

and the color vector

Tc

t

VUYx ],,[=

v

,

respectively. Hence, C

map

can be modified as

))}.|(log())|({log(maxarg

c

j

c

j

s

j

s

jjmap

xpxpC

θθ

v

v

+=

(6)

The support map value of each pixel of the new

frame is updated to respond the new assignment.

Additionally, to obtain stable assignments, a pixel

can only be assigned to a background component if

,))|((log

s

lik

s

j

s

t

Txp >

θ

v

(7)

where

s

lik

T

is a predefined threshold.

SIGMAP2012-InternationalConferenceonSignalProcessingandMultimediaApplications

90

Next, to detect new foreground object(s) in the

new frame, the “unassigned” pixels in the support

map should be detected. Here, if pixel

t

x

v

of a

component satisfies

,))|((log

unassignCt

Txp

map

≤

θ

v

(8)

where T

unassign

denotes a minimum probability

threshold, then

t

x

v

is determined as an “unassigned”

pixel.

2.2 Foreground Detection and

Introducing Foreground Model

Initially, all the initial model components of a frame

are labeled as the background. A foreground

component is detected when some region of pixels

having a low probability under the mixture model.

Such a region appears in the support map as a region

having high density of “unassigned” pixels. The

support map is divided into nonoverlapping blocks

of size 16×16 pixels. For a block, if the number of

“unassigned” pixels exceeds a threshold T

d

, it is

detected as a “foreground” block. Initially, a single

foreground component is built for all unassigned

pixels in these “foreground” blocks. Then, similar to

the background model, the splitting procedure is

recursively applied to build the foreground model.

After pixel assignment and foreground detection,

the parameters of both background and foreground

components of a frame are re-estimated. Given the

parameters of the previous frame

,

)1,( −tj

θ

the new

parameters

),( tj

θ

of the current frame can be

computed (using an adaptive learning rate) as

,)-(1

)1,(),(),( −

+=

tjjCmapjjtj

αα

θθθ

(9)

where α

j

is a vector of learning rates updated by a

variable factor

,

w

j

α

which is proportional to the area

fraction of each foreground/background component

within a frame.

2.3 Edge-based Shadow Cancellation

After foreground detection, the initial foreground

mask FM

t

of frame t usually contains both moving

objects and some shadows. A shadow often appears

in an area where the pixel (gray-level) values change

“gradually” from the background to the shadow

region. Here, the Canny edge detector (Canny, 1986)

is used to generate a binary edge map CE

t

of frame t,

where “1” denotes the edge and “0” denotes

otherwise.

Using the binary edge maps of 5 successive

frames, the integrated Canny edge map ICE

t

is

defined as

⎪

⎩

⎪

⎨

⎧

===

=

=

. otherwise, edge, moving

,1)()()( if edge, static

,0)( if edge,not

)(

5-3-

xCExCExCE

xCE

xICE

ttt

t

t

vvv

v

v

(10)

where

x

v

denotes a pixel.

Based on ICE

t

and FM

t

of frame t, the moving

Canny edge MCE

t

can be defined as

},

edge,Canny moving)(|{

t

tt

FMx

xICExMCE

∈

==

v

v

v

(11)

whereas the edges of the foreground mask EFM

t

can

be defined as

}.) ,NG(

, ,|{

truexx

FMxFMxxEFM

ttt

=

′

∉

′

∈=

vv

v

v

v

(12)

where NG(

x

v

, x

′

v

) is a logic function that returns true

when

x

v

and

x

′

v

are 4-connected neighbors. Note that

MCE

t

provides important information for seed point

selection in region growing (as an illustrated

example shown in Figure 2). Because some gaps

exist in moving Canny edges MCE

t

, in this study,

morphologic dilation with a 3×3 structure element is

applied on MCE

t

, resulting in DMCE

t

.

Because region growing is used to detect shadow

regions, some seed points are required. The shadow

region edges SRE

t

in FM

t

may be employed as seed

points, which can be defined as

}, ,-min

,|{

tsre

tt

MCExTxx

EFMxxSRE

∈

′

>

′

∈=

vvv

v

v

(13)

where T

sre

is a threshold confining the searching

neighbor and ||•|| denotes the Chebyshev distance.

Additionally, SRE

t

also contains some sporadic

pixels (the edges of moving objects). To remove

sporadic pixels, the connected component algorithm

(Haralick and Shapiro, 1992) is also used to connect

initial seed points. Each connected region with its

number of pixels more than a threshold T

seed

is

included in the final shadow region edges

.

final

t

SRE

The pixels in

final

t

SRE

serve as seed points of the

region growing algorithm (Adam and Bischof, 1994)

used for shadow detection, which are expanded pixel

by pixel in FM

t

, resulting in the detected shadow

.

shadow

t

FM

Note that the pixels in DMCE

t

should not

be included in

shadow

t

FM

(as an illustrated example

shown in Figure 3).

Based on FM

t

and

,

s

hadow

t

FM

the initial moving

object MO

t

(obtained as

shadow

ttt

FMFMMO −=

)

VideoForeground/BackgroundSegmentationusingSpatiallyDistributedModelandEdge-basedShadowCancellation

91

contains some noisy pixels and holes, which will be

processed by a post-processing procedure. In the

post-processing procedure, to remove noisy pixels

and holes, morphologic erosion with a 3×3 structure

element is first applied on MO

t

, then the connected

component algorithm (Haralick and Shapiro, 1992)

is used to remove small connected regions with

threshold T

sre

, and finally morphologic dilation with

a 3×3 structure element is applied to obtain the final

foreground/background segmentation results (as an

illustrated example shown in Figure 4).

3 EXPERIMENTAL RESULTS

In this study, 12 test video sequences and the

corresponding ground truth hand segmentations are

employed. They are “Office,” “Outdoor,” “Browse1,”

“LightSwitch,” “NightCar,” “IntelligenRoom,”

“ParkingLot,” “OneLeaveShopReenter,”

“WavingTree1,” “WavingTree2,” “Raining,” and

“Boat.” Here, sequences 1-3 contain some gradual

illumination variations, whereas sequence 4 contains

great illumination variations. Sequences 5-8 contain

both gradual illumination variations and shadow

effect. Finally, sequences 9-12 contain some

dynamic scenes, such as waving tree, raining, and

moving water. To evaluate the effectiveness of the

proposed approach, two comparison methods,

namely, self-organizing background subtraction

(SOBS) (Maddalena and Petrosino, 2008) and

spatially distributed model (SDM) (Dickinson et al.,

2009) are implemented in this study. The parameter

values and thresholds used in the proposed approach

are listed in Table 1, which are empirically

determined in this study.

To evaluate the performance of the three

comparison approaches, the Jaccard coefficient J

c

by

Rosin and Ioannidism (2003) and the total error

(E

tot

) by Toyama et al. (1999) are employed. A pixel

being classified as “foreground” by both the

approach and the ground truth is denoted as “true

positive” (TP). If it is classified as “foreground” by

only the approach, it is denoted as “false positive”

(FP). If it is classified as “foreground” by only the

ground truth, it is denoted as “false negative” (FN).

If TP, FP, and FN denote the numbers of “true

positive,” “false positive,” and “false negative”

pixels in a video sequence, respectively, then

,

)( FNFPTP

TP

J

c

++

=

(14)

.FNFPE

tot

+=

(15)

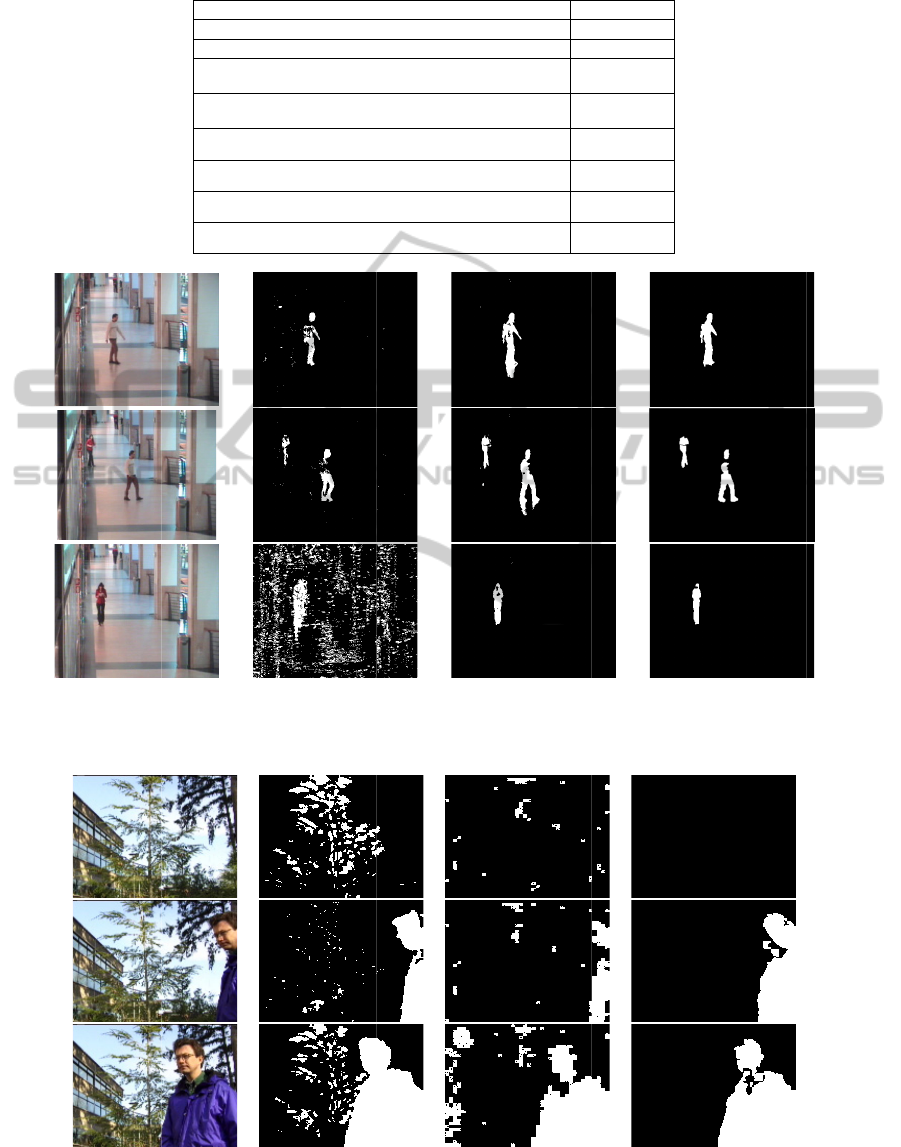

In Figure 5, as compared with two comparison

approaches, the proposed approach can handle video

sequences containing shadow effect and gradual

illumination variations, whereas in Figure 6, as

compared with two comparison approaches, the

proposed approach can handle video sequences

containing dynamic scenes, such as waving tree,

raining, and moving water.

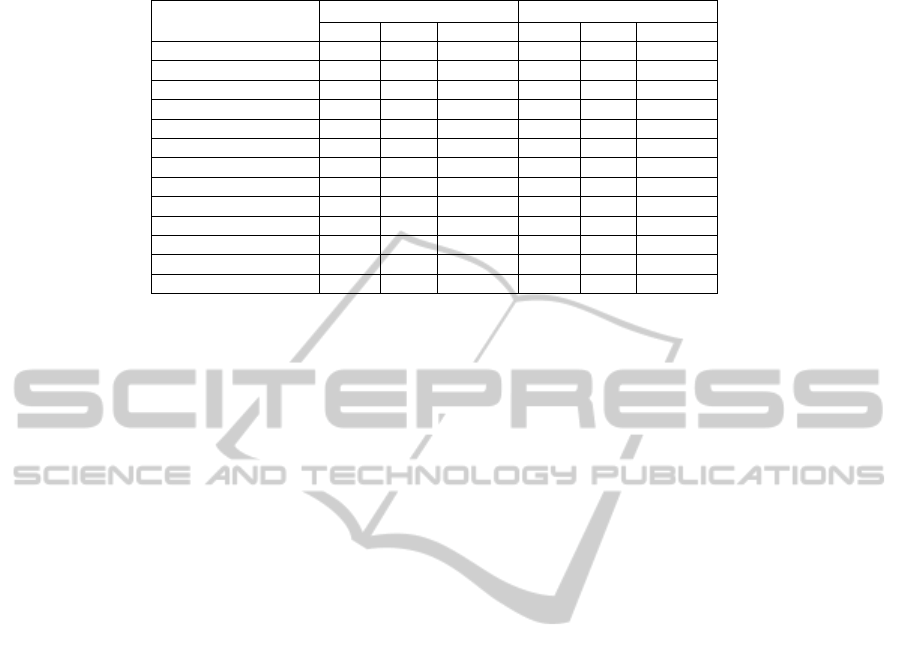

Additionally, in terms of two performance

indexes, namely, Jaccard coefficients and total errors

listed in Table 2, the performance of the proposed

approach is better than those of two comparison

approaches.

4 CONCLUDING REMARKS

In this study, a video foreground/background

segmentation approach using spatially distributed

model and edge-based shadow cancellation is

proposed to deal with video sequences containing

illumination variations, shadow effect, and dynamic

scenes. Based on the experimental results obtained

in this study, as compared with two comparison

methods, the proposed approach provides the better

video segmentation results.

REFERENCES

Adam, R. and Bischof, L., 1994. Seeded region growing.

IEEE Trans. on Pattern Analysis and Machine

Intelligence, 16(6), 641-647.

Boley, D., 1998. Principle direction devisive partitioning.

Data Mining and Knowledge Discovery, 2(4), 325-344.

Canny, J. F., 1986. A computational approach to edge

detection. IEEE Trans. on Pattern Analysis and

Machine Intelligence, 8(11), 679-698.

Dickinson, P., Hunter, A., and Appiah, K., 2009. A

spatially distributed model for foreground

segmentation. Image and Vision Computing, 27(9),

1326-1335.

Haralick, R. M. and Shapiro, L. G., 1992. Reading, MA:

Addision-Wesley. Computer and Robot Vision, 28-48.

Heikkila, M., Pietikainen, M., and Member, S., 2006. A

texture-based method for modeling the background

and detecting moving objects. IEEE Trans. on Pattern

Analysis and Machine Intelligence, 28(4), 657-662.

Kim, C. and Hwang, J. N., 2002. Fast and automatic video

object segmentation and tracking for content-based

applications. IEEE Trans. on Circuits and Systems for

Video Technol., 12(2), 122-129.

Li, L. et al., 2004. Statistical modeling of complex

backgrounds for foreground object detection. IEEE

Trans. on Image Process., 13(11), 1459-1472.

Maddalena, L. and Petrosino, A., 2008. A self-organizing

approach to background subtraction for visual

SIGMAP2012-InternationalConferenceonSignalProcessingandMultimediaApplications

92

surve

Proc

e

Mezaris,

Vide

o

temp

o

IEEE

Tech

n

Rosin, P.

imag

e

R

eco

g

Toyama,

1999.

b

ack

g

on C

o

Tsaig,

Y

segm

e

regio

n

Syste

m

Wang,

W

back

g

comp

Syste

m

Wang, Y

.

b

ase

d

Proc

e

Zhang,

S

dyna

m

Conf.

APPE

Figure 1

segment

a

illance applic

a

e

ss., 17(7), 116

8

V., Kompatsi

a

o

object se

g

o

ral tracking a

n

E

Trans. on

C

n

ol., 14(6), 782

and Ioannidis

m

e

thresholdin

g

g

nition Letters,

K., Krumm,

J

Wallflower:

g

round mainte

n

o

mputer Vision

,

Y

. and Av

e

e

ntation of mo

v

n

labeling appr

o

m

s for Video T

e

W

., Yang, J.,

g

round and s

e

p

ressed video.

m

s for Video T

e

.

et al., 2005.

S

d

on graphical

e

ss., 14(7), 937

-

S

. et al., 2008

.

m

ic backgroun

d

on Pattern Re

c

NDIX

: The propos

e

a

tion approach.

a

tions. IEEE

8

-1177.

a

ris, I., and Str

i

g

mentation

u

n

d trajectory-

ba

C

ircuits and

S

-795.

m

, E., 2003.

E

g

for change

24(14), 2345-

2

J

., Brumitt, B

principles

n

ance. in Proc.

,

255-261.

e

rbuch, A.,

v

ing objects in

o

ach. IEEE Tr

a

e

chnol., 12(7),

5

and Gao, W

e

gmenting mo

v

IEEE Trans.

e

chnol., 18(5),

6

S

patiotemporal

models. IEE

E

-

947.

.

A covarianc

e

d

subtraction. i

n

c

ognition, 1-4.

e

d video for

e

Trans. on I

m

i

ntzis, M. G.,

2

u

sing Bayes-

b

a

sed region me

r

S

ystems for

V

E

valuation of g

l

detection. P

a

2

356.

., and Meyers

and practice

of IEEE Int.

C

2002. Auto

m

video sequen

c

a

ns. on Circuit

s

5

97-612.

., 2008. Mod

e

v

ing o

b

jects

on Circuits

6

70-681.

video segment

a

E

Trans. on I

m

e

-

b

ased metho

d

n

Proc. of IEE

E

e

ground/backgr

o

m

age

2

004.

b

ased

r

ging.

V

ideo

lobal

a

ttern

, B.,

of

C

onf.

m

atic

c

es: a

s

and

eling

from

and

ation

m

age

d

for

E

Int.

ound

Fig

u

EF

M

F

Fi

g

(a) Frame

4

(c) MCE

4

6

u

re 2: (a) Fra

m

M

46

.

(a) DMCE

4

(c)

f

in

a

SRE

46

F

igure 3: An il

l

(b)

s

ha

d

FM

46

g

ure 4: An illu

s

4

6

m

e 46, (b) IC

E

4

6

a

l

l

ustrated exam

p

(a) FM

46

d

ow

trated example

(b) ICE

46

(d) EFM

46

CE

46

, (c) MCE

4

(b) SRE

46

(d)

s

had

o

FM

46

p

le of region g

r

(c) MO

46

e

of shadow ca

n

4

6

, and (d)

o

w

r

owing.

n

cellation.

VideoForeground/BackgroundSegmentationusingSpatiallyDistributedModelandEdge-basedShadowCancellation

93

Figure 5

:

segment

a

Figure 6:

results b

y

(a)

:

Experimenta

l

a

tion results by

(a

)

Experimental

y

SOBS, SDM,

Table 1: Para

m

Max back

g

Max fore

g

s

sp

T

for b

a

c

sp

T

for b

a

s

lik

T

for b

d

T

for u

n

sre

T

for s

e

seed

T

for

l

results of se

q

SOBS, SDM,

a

)

results of seq

u

and the propos

m

eter values a

n

Parameters

a

g

round compone

n

g

round compone

n

a

ckground split (

s

a

ckground split (

c

a

ckground spati

a

n

assigned pixel d

e

e

arching neighb

o

minimum seed p

(b)

q

uence “OneL

e

a

nd the propose

(b)

u

ence “Wavin

g

e

d approach, r

e

n

d thresholds u

s

a

nd thresholds

n

ts

n

ts

spatial)

c

olor)

a

l likelihoo

d

e

nsity

or

p

oin

t

eaveShopReen

t

e

d approach, re

s

g

Tree1.” (a) O

r

e

spectively.

s

ed in the prop

o

(c)

t

er.” (a) Origi

n

s

pectively.

(c)

r

iginal video

fr

o

sed approach.

Values

300

250

800

50

2.4×10

-8

0.8

2

30

n

al video fra

m

r

ames 10, 19,

(d)

m

es 47, 61, 2

0

(d)

21; (b)-(d) se

g

0

1; (b)-(d)

g

mentation

SIGMAP2012-InternationalConferenceonSignalProcessingandMultimediaApplications

94

Table 2: Jaccard coefficients and total errors of 12 test sequences by SOBS, SDM, and the proposed approach (Proposed).

Sequence

Jaccard coefficient Total errors (

×

10

3

)

SOBS SDM Proposed SOBS SDM Proposed

Office 0.43 0.41 0.47 13.4 15.7 13.6

Outdoor 0.41 0.46 0.43 18.6 15.0 15.2

Browse1 0.47 0.43 0.54 17.3 18.6 14.3

LightSwitch 0.28 0.23 0.41 21.6 25.5 13.5

NightCar 0.34 0.39 0.41 15.4 13.2 11.2

IntelligenRoom 0.68 0.71 0.78 10.3 8.6 5.5

ParkingLot 0.56 0.47 0.54 15.4 18.6 16.7

OneLeaveShopReenter 0.32 0.41 0.48 16.8 12.3 8.5

WavingTree1 0.23 0.52 0.68 18.3 10.4 7.3

WavingTree2 0.23 0.54 0.62 19.7 11.9 9.8

Raining 0.34 0.42 0.47 18.6 15.4 11.9

Boat 0.29 0.43 0.53 15.5 14.3 11.3

Average

0.38 0.45

0.53

16.7 14.9

11.6

VideoForeground/BackgroundSegmentationusingSpatiallyDistributedModelandEdge-basedShadowCancellation

95