Two-view Epipole-based Guidance Control for Autonomous Unmanned Aerial Vehicles

W. Achicanoy¹, C. Sagüés², G. López-Nicolás² and C. Rodríguez¹

¹ Departamento de Ingeniería Mecánica, Universidad de los Andes, Bogotá, Colombia

² Departamento de Informática e Ingeniería de Sistemas, Instituto de Investigación en Ingeniería de Aragón, Universidad de Zaragoza, Maria de Luna 1, E-50018, Zaragoza, Spain

Keywords:

UAV Guidance, Epipolar Geometry, Nonlinear Engagement, Input-output Linearization, State Feedback.

Abstract:

A visual control based on epipolar geometry is proposed to guide an autonomous unmanned aerial vehicle (UAV) to a target position. The interest of this contribution resides in a new controller that allows purely vision-based guidance, reducing the dependence on the accuracy of the system’s state estimation from sensors such as Inertial Measurement Units (IMU) and Global Positioning Systems (GPS). A current view and a target view are defined by a camera on board the vehicle and a camera located at the target’s position, respectively. The epipolar coordinates from these views are used to design a nonlinear control based on input-output linearization of the nonlinear engagement rule that relates the cameras’ positions in time. An integrator is included to force the outputs (epipolar coordinates) to follow an equilibrium point, and a state feedback control law is proposed to stabilize the outcome of the linearized input-output mapping. Simulation experiments for guidance of a small autonomous UAV with a classical three-loop autopilot are presented.

1 INTRODUCTION

Unmanned Aerial Vehicles (UAVs) are used in civilian and military applications for tasks such as surveillance and cargo delivery. Over the last decade, UAVs have proved to be efficient in several missions around the world, motivating the aircraft industry to improve their internal systems and capabilities. For example, a vision system could enable UAVs to track a large number of targets (Schneiderman, 2012). The UAVs’ performance commonly depends on aerial and ground operation centres and human assistance, but autonomous operation is necessary for stand-alone missions, or when the communication links fail and the vehicle needs to switch to a safe flight mode. The accuracy of a UAV’s flight depends on the capabilities of its on-board sensors and on the robustness of its control. The navigation and guidance systems usually use Inertial Measurement Units (IMU) and Global Positioning Systems (GPS) to obtain the vehicle’s own position and attitude; on the other hand, they use cameras, spectrometers, and radars to retrieve the target’s features, position and attitude.

Cameras have been used in a wide range of applications for control of aerial, terrestrial, and aquatic vehicles, and vision-based control has become a special branch of research. Most of the research on vision-based control for autonomous UAVs has used the cameras’ information, together with inertial and global positioning sensors, as inputs for filters or estimators. For example, a vision-based guidance based on trajectory optimization is proposed in (Watanabe et al., 2006), where the vision data feed an extended Kalman filter (EKF) that estimates the target’s position and velocity relative to the vehicle. They include a cost function that minimizes the acceleration effort the vehicle demands to accomplish three independent missions: target interception, obstacle avoidance, and formation flight. In (Ma et al., 2007) a guidance law for a small UAV is developed based on an adaptive filter that calculates the target’s velocity over the image plane. The filter only uses the tracking information of the moving target, and a control law regulates the vehicle’s yaw rate at a constant altitude (no depth information is required).

From another point of view, visual-based control methods based on epipolar geometry and homography have been used for mobile robot navigation. (Mariottini et al., 2004) and (López-Nicolás et al., 2008) have developed two-view visual control for nonholonomic robots by means of nonlinear control and tracking of epipole signals. In (López-Nicolás et al., 2010) both epipolar geometry and the homography matrix are used to design separate controls that are switched using information on the degeneracy of the fundamental matrix. Epipole-based control is used at the initial stage of the navigation, whereas homography-based control is used at the end (short baseline). Homography has been used for UAV navigation in (Hu et al., 2007b), where a multi-view visual-based control, which uses quaternions and the homography between pre-recorded satellite images and the vehicle’s actual images, is proposed to track a desired trajectory over the earth. Given that the estimated position is up to scale and the depth is unknown, open problems in this approach are clarified in (Hu et al., 2007a). In (Kaiser et al., 2010) a multi-view visual-based estimator based on homography and GPS measurements is designed; using this information an autopilot commands the vehicle’s control surfaces. This estimator deals with two main challenges: the continuous tracking of features entering and leaving the vehicle’s camera Field of View (FoV), and GPS failures.

Achicanoy W., Sagüés C., López-Nicolás G. and Rodríguez C. Two-view Epipole-based Guidance Control for Autonomous Unmanned Aerial Vehicles. DOI: 10.5220/0004032402420248. In Proceedings of the 9th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2012), pages 242-248. ISBN: 978-989-8565-22-8. Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

Given that epipolar geometry has reported successful outcomes in mobile robots, in this paper we present a new controller based on epipolar geometry for the guidance of an autonomous UAV with a single on-board camera. The vehicle motion is assumed to be planar. Two views, the current view (vehicle’s camera) and the target view (static camera at the target’s position), are used to compute the epipoles and to steer the vehicle to a desired position. As in (Mariottini et al., 2004) and (López-Nicolás et al., 2008), input-output linearization (Khalil, 2002) is used, but on the cameras’ nonlinear engagement rule. The linearized output is stabilized by an appropriate state feedback control law, and the outputs (epipolar coordinates) are forced to follow an equilibrium point by means of an integrator, which eliminates the steady-state error and improves the robustness of the closed-loop system. Both epipolar coordinates in the current and target views are independently chosen as outputs. To deal with the complexity of the aerial vehicle model, a classical three-loop autopilot (Zarchan, 2007) is used to command the vehicle. The controller reduces the complexity of the on-board electronics, and the fact that only epipolar estimation is required makes it suitable for simple guidance applications. The paper is organized as follows: in section 2 the planar epipolar geometry is shown; in section 3 a state space representation of the nonlinear planar engagement rule is presented; in section 4 the control strategy is developed; and in section 5 results of the simulation experiments are analyzed.


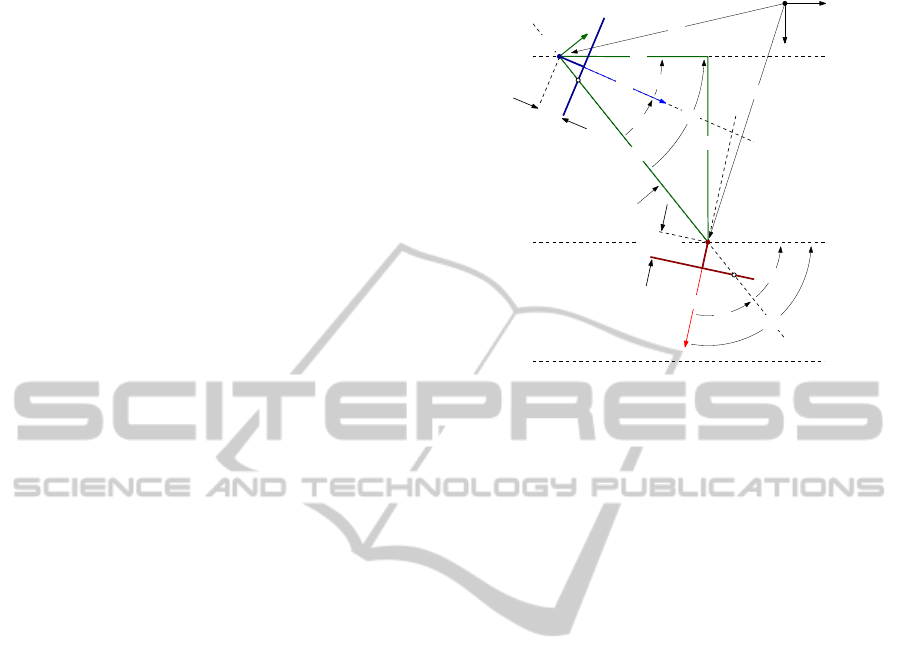

Figure 1: Planar two-view epipolar geometry (plane xz).

2 PLANAR EPIPOLAR GEOMETRY

Figure 1 shows the planar two-view epipolar geometry, relative to the inertial reference frame A, for the autonomous UAV guidance problem. The first view is defined by a current camera $C_c$ on board the vehicle, which is aligned with a current velocity vector $v_c = [u_c^A, w_c^A]^T$ and is located at a current position $p_c = [x_c^A, z_c^A]^T$, while the second view is an image previously defined by a target camera $C_t$, which is aligned with a target velocity $v_t = [u_t^A, w_t^A]^T$ at a position $p_t = [x_t^A, z_t^A]^T$. The epipoles $e_c \in \mathbb{R}^2$ (current) and $e_t \in \mathbb{R}^2$ (target) are subject to the constraints $F e_c = 0$ and $F^T e_t = 0$, where $F \in \mathbb{R}^{3 \times 3}$ is the fundamental matrix. $F$ is estimated from a set of feature correspondences using the RANSAC algorithm (Hartley and Zisserman, 2004). These epipoles can be written as $e_c = [e_{c,w}, e_{c,h}]^T$ and $e_t = [e_{t,w}, e_{t,h}]^T$, where $(e_{c,w}, e_{t,w})$ and $(e_{c,h}, e_{t,h})$ are the coordinates (in pixels) along the image’s width and height, respectively. For planar guidance design, only the coordinates $(e_{c,h}, e_{t,h})$ are useful, and it follows that

$$e_{c,h} = f_c \tan(\lambda - \gamma_c) \qquad (1a)$$

$$e_{t,h} = -f_t \tan(\gamma_t - \lambda), \qquad (1b)$$

where $f_c$ and $f_t$ are the focal lengths for the current camera and the target camera, respectively; $\lambda$ is the Line of Sight (LoS) angle, measured between the LoS and the $a_x$-axis; and $\gamma_c$ and $\gamma_t$ are the flight path angles for the current camera and the target camera, respectively. These angles are measured between the velocity vectors ($v_c$ and $v_t$) and the $a_x$-axis. By definition the baseline is equal to the LoS.
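As an illustration of Eqs. (1a) and (1b), the following Python sketch computes the two height coordinates from the planar geometry, and also recovers epipoles as null vectors of a fundamental matrix. It is only a sketch under stated assumptions: the function names are hypothetical, the epipoles returned by `epipoles_from_F` are homogeneous 3-vectors, and `F_demo` is an arbitrary rank-2 matrix used as a stand-in for an estimated $F$, not a matrix from the paper. The numeric example uses the focal length and the first camera configuration reported in the simulation section.

```python
import math
import numpy as np

def epipolar_heights(p_c, p_t, gamma_c, gamma_t, f_c=240.0, f_t=240.0):
    """Eqs. (1a)-(1b): height coordinates (pixels) of the current and target epipoles."""
    x = p_t[0] - p_c[0]
    z = p_t[1] - p_c[1]
    lam = math.atan2(z, x)                    # LoS angle measured from the a_x axis
    e_ch = f_c * math.tan(lam - gamma_c)      # Eq. (1a)
    e_th = -f_t * math.tan(gamma_t - lam)     # Eq. (1b)
    return e_ch, e_th

def epipoles_from_F(F):
    """Unit null vectors of F and F^T (homogeneous): F e_c = 0 and F^T e_t = 0."""
    U, _, Vt = np.linalg.svd(F)
    return Vt[-1], U[:, -1]

# Camera at the origin flying at 45 deg, target at (2000, 2000) m with gamma_t = 90 deg.
e_ch, e_th = epipolar_heights((0.0, 0.0), (2000.0, 2000.0),
                              math.radians(45.0), math.radians(90.0))

# Hypothetical rank-2 matrix standing in for an estimated F (not from the paper).
F_demo = np.array([[0.0, -3.0, 2.0],
                   [3.0, 0.0, -1.0],
                   [-2.0, 1.0, 0.0]])
e_c_hom, e_t_hom = epipoles_from_F(F_demo)
```

For this configuration the LoS angle equals the flight path angle of the current camera, so `e_ch` is approximately 0 and `e_th` is approximately -240 pixel.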


3 PLANAR ENGAGEMENT RULE

The planar engagement rule describes the evolution of the LoS angle $\lambda$ and the LoS rate of change $\dot{\lambda}$ according to a lateral acceleration command $n_c$. If $n_c$ is proportional to $\dot{\lambda}$, then the current camera $C_c$ (vehicle) can be steered to the target camera $C_t$ (target). This is the intuitive Proportional Navigation (PN) (Yanushevsky, 2007). From Fig. 1, $\lambda = \arctan(z/x)$, with $x = x_t^A - x_c^A$ and $z = z_t^A - z_c^A$, and the LoS rate of change is

$$\dot{\lambda} = \frac{\dot{z}x - z\dot{x}}{r^2}, \qquad (2)$$

where $r = \sqrt{x^2 + z^2}$ is the magnitude of the instantaneous separation (range) between $C_c$ and $C_t$. If (2) is differentiated again, taking into account that $\cos\lambda = x/r$ and $\sin\lambda = z/r$, it follows that

$$\ddot{\lambda} = \frac{\ddot{z}\cos\lambda - \ddot{x}\sin\lambda}{r} - \frac{2\dot{r}\dot{z}\cos\lambda - 2\dot{r}\dot{x}\sin\lambda}{r^2}. \qquad (3)$$
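The LoS rate of Eq. (2) and the second derivative obtained by differentiating it can be checked numerically. The Python sketch below compares both closed-form expressions with central finite differences. The relative motion in `rel_state` (constant relative acceleration with made-up numbers) is a hypothetical test trajectory chosen only for this check; it is not a flight condition from the paper.

```python
import math

def los_angle(x, z):
    """LoS angle lambda = arctan(z/x); atan2 keeps the correct quadrant."""
    return math.atan2(z, x)

def los_rate(x, z, xd, zd):
    """Eq. (2): lambda_dot = (z_dot * x - z * x_dot) / r^2."""
    return (zd * x - z * xd) / (x * x + z * z)

def los_accel(x, z, xd, zd, xdd, zdd):
    """Second derivative of lambda, obtained by differentiating Eq. (2)."""
    r = math.hypot(x, z)
    rd = (x * xd + z * zd) / r            # range rate r_dot
    c, s = x / r, z / r                   # cos(lambda), sin(lambda)
    return (zdd * c - xdd * s) / r - 2.0 * rd * (zd * c - xd * s) / r ** 2

def rel_state(t):
    """Hypothetical relative motion (x, z, x_dot, z_dot, x_ddot, z_ddot)."""
    x0, z0, xd0, zd0, xdd, zdd = 2000.0, 2000.0, -150.0, -120.0, 3.0, -5.0
    x = x0 + xd0 * t + 0.5 * xdd * t * t
    z = z0 + zd0 * t + 0.5 * zdd * t * t
    return x, z, xd0 + xdd * t, zd0 + zdd * t, xdd, zdd

t, h = 1.0, 1e-4
state = rel_state(t)

# Central differences of the angle and of the rate around time t.
fd_rate = (los_angle(*rel_state(t + h)[:2]) - los_angle(*rel_state(t - h)[:2])) / (2 * h)
fd_accel = (los_rate(*rel_state(t + h)[:4]) - los_rate(*rel_state(t - h)[:4])) / (2 * h)

rate_error = abs(fd_rate - los_rate(*state[:4]))
accel_error = abs(fd_accel - los_accel(*state))
```

Both `rate_error` and `accel_error` should be at the level of the finite-difference error, which gives a quick sanity check before the expressions are used in a simulation.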

Given that $C_t$ is static, the acceleration components of $C_c$, in terms of the lateral acceleration magnitude $n_c$, are $\ddot{x} = -n_c\sin\lambda$ and $\ddot{z} = n_c\cos\lambda$; then, (3) can be simplified as

$$\ddot{\lambda} = \omega\sin(\lambda - \phi) + n_c/r, \qquad (4)$$

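where $\omega = 2(v_{cl}/r)^2$ and $\phi = \arctan(\dot{z}/\dot{x})$. $v_{cl} = -\dot{r}$ is commonly known as the closing velocity. Finally, the planar engagement can be presented as the continuous nonlinear system

$$\dot{\eta} = f(\eta, u) = \begin{bmatrix} \eta_2 \\ \omega\sin(\eta_1 - \phi) + u/r \end{bmatrix}, \qquad (5)$$

where $\eta = [\eta_1, \eta_2]^T = [\lambda, \dot{\lambda}]^T \in \mathbb{R}^2$ is the state vector, $u = n_c \in \mathbb{R}$ is the input, and $f(\eta, u): \mathbb{R}^2 \times \mathbb{R} \to \mathbb{R}^2$ is a vector field.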
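The vector field of Eq. (5) translates directly into code. In the following Python sketch the function name and its argument list are assumptions made for illustration: the range $r$, the range rate $\dot{r}$ and the angle $\phi$ are passed in as known quantities, whereas in a simulation they would come from the relative kinematics.

```python
import math

def engagement_rhs(eta, u, r, r_dot, phi):
    """Right-hand side of Eq. (5); returns [eta1_dot, eta2_dot]."""
    eta1, eta2 = eta
    v_cl = -r_dot                          # closing velocity
    omega = 2.0 * (v_cl / r) ** 2
    return [eta2, omega * math.sin(eta1 - phi) + u / r]

# Hypothetical operating point: LoS at 45 deg, 1 km range, closing at 200 m/s,
# lateral acceleration command of 9.81 m/s^2.
eta_dot = engagement_rhs((math.pi / 4, 0.01), u=9.81, r=1000.0,
                         r_dot=-200.0, phi=math.pi / 4)
```

With $\phi = \lambda$ the sine term vanishes, so in this example the LoS acceleration reduces to $u/r$.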

4 EPIPOLE-BASED GUIDANCE CONTROL

In this section, a guidance control based on input-output linearization of the engagement system (5) is presented. The output is based on the epipolar coordinates’ measurements from the two images. The control law has the form of state feedback with integral action. Both the coordinate $e_{c,h}$, defined by Eq. (1a), and the coordinate $e_{t,h}$, defined by Eq. (1b), can be independently used as outputs, i.e., $y = e_{c,h}$ or $y = e_{t,h}$. If $y$ is differentiated until the input $u$ becomes explicit, it results that the relative degree of the system (5) is 2, for all $\eta \in \mathbb{R}^2$. We also obtain that either

$$u = -r\omega\sin(\eta_1 - \phi) - 2r(\eta_2 - \dot{\gamma}_c)^2\tan(\eta_1 - \gamma_c) + r\ddot{\gamma}_c + \left[\frac{r\cos^2(\eta_1 - \gamma_c)}{f_c}\right] v \qquad (6)$$

for $y = e_{c,h}$, or

$$u = -r\omega\sin(\eta_1 - \phi) + 2r\eta_2^2\tan(\gamma_t - \eta_1) + \left[\frac{r\cos^2(\gamma_t - \eta_1)}{f_t}\right] v, \qquad (7)$$

for $y = e_{t,h}$, lead the nonlinear output to the linearized mapping

$$\dot{\xi}_1 = \xi_2, \qquad \dot{\xi}_2 = v, \qquad \dot{\sigma} = e, \qquad (8)$$

where $\xi_1 = y$, $\xi_2 = \dot{y}$, $v$ is the new control, and $e = \xi_1 - \xi_1^r = y - y^r$ is the output error. An integral action was included in (8), forcing the output $y$ to follow a reference $\xi_1^r = y^r$. It is easily verified that the augmented system (8) with realization

$$A_a = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 0 \\ 1 & 0 & 0 \end{bmatrix}, \qquad B_a = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \qquad C_a = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix} \qquad (9)$$

has a controllable pair $(A_a, B_a)$, and a state feedback control

$$v = -K[\xi, \sigma]^T, \qquad (10)$$

where $K = [k_1, k_2, k_3]$, can stabilize it. $K$ is chosen such that the matrix $(A_a - B_a K)$ is Hurwitz (pole placement) (Khalil, 2002) and local stability is guaranteed.
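The linearizing property of the control laws (6) and (7) can be checked numerically: substituting $u$ into the engagement dynamics (5) and differentiating the outputs (1a) and (1b) twice should return $\ddot{y} = v$. The Python sketch below does this at one operating point. The function names are hypothetical, the numbers are arbitrary test values rather than flight data, and $\gamma_t$ is taken as constant (static target camera).

```python
import math

def control_current(eta, v, r, omega, phi, g_c, g_c_dot, g_c_ddot, f_c):
    """Eq. (6): linearizing input for the output y = e_{c,h}."""
    eta1, eta2 = eta
    a = eta1 - g_c
    return (-r * omega * math.sin(eta1 - phi)
            - 2.0 * r * (eta2 - g_c_dot) ** 2 * math.tan(a)
            + r * g_c_ddot
            + r * math.cos(a) ** 2 / f_c * v)

def control_target(eta, v, r, omega, phi, g_t, f_t):
    """Eq. (7): linearizing input for the output y = e_{t,h}."""
    eta1, eta2 = eta
    a = g_t - eta1
    return (-r * omega * math.sin(eta1 - phi)
            + 2.0 * r * eta2 ** 2 * math.tan(a)
            + r * math.cos(a) ** 2 / f_t * v)

def y_ddot_current(eta, u, r, omega, phi, g_c, g_c_dot, g_c_ddot, f_c):
    """Second derivative of y = f_c * tan(lambda - gamma_c) along Eq. (5)."""
    eta1, eta2 = eta
    a = eta1 - g_c
    lam_ddot = omega * math.sin(eta1 - phi) + u / r
    return f_c / math.cos(a) ** 2 * (
        (lam_ddot - g_c_ddot) + 2.0 * math.tan(a) * (eta2 - g_c_dot) ** 2)

def y_ddot_target(eta, u, r, omega, phi, g_t, f_t):
    """Second derivative of y = -f_t * tan(gamma_t - lambda) along Eq. (5)."""
    eta1, eta2 = eta
    a = g_t - eta1
    lam_ddot = omega * math.sin(eta1 - phi) + u / r
    return f_t / math.cos(a) ** 2 * (lam_ddot - 2.0 * math.tan(a) * eta2 ** 2)

# Arbitrary test point (hypothetical values).
eta, v_new = (0.7, 0.02), -3.0
r, omega, phi, f = 1500.0, 0.03, 0.6, 240.0

u_t = control_target(eta, v_new, r, omega, phi, math.pi / 2, f)
res_t = y_ddot_target(eta, u_t, r, omega, phi, math.pi / 2, f) - v_new

u_c = control_current(eta, v_new, r, omega, phi, 0.5, 0.005, 0.001, f)
res_c = y_ddot_current(eta, u_c, r, omega, phi, 0.5, 0.005, 0.001, f) - v_new
```

Both residuals `res_t` and `res_c` should vanish up to rounding, which is the statement that the input-output map from $v$ to $y$ is a double integrator.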

5 SIMULATION EXPERIMENTS

AND ANALYSIS

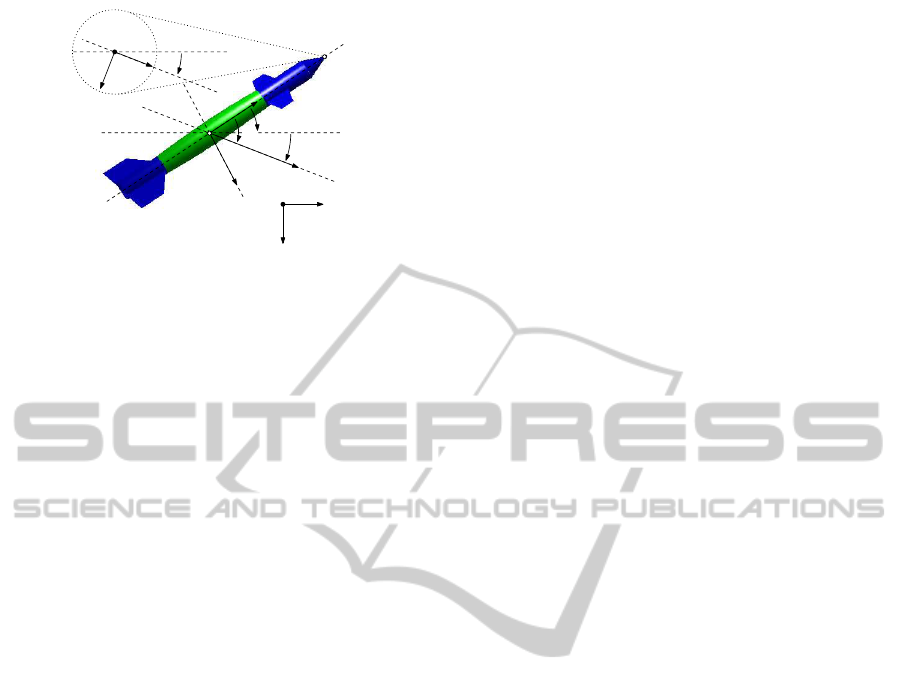

Figure 2 shows the model of an UAV, without propul-

sion and with a camera on-board, used to evaluate

the guidance control. For simplicity, a wing-body-tail

configuration with a horizontal control surface (wing)

and a stabilizing fixed surface (tail) was selected. Fast

control is guaranteed by means of the wing’s deflec-

tion angle δ, even though nonlinearities and rear sta-

bility interferences might appear (Chin, 1961). Given

that the downrange is limited, the earth is assumed to

be flat. Three reference frames are defined: (i) The

ICINCO2012-9thInternationalConferenceonInformaticsinControl,AutomationandRobotics

244

a

x

A

a

z

b

x

b

z

α

θ

v

c

x

c

z

B

C

γ

γ

cg

Vehicle

Camera

Figure 2: Autonomous UAV. The reference frames in the

vertical plane xz are: A (inertial), B (vehicle-fixed), and C

(camera-fixed).

inertial frame, named A, located at an initial altitude

h

0

; (ii) the vehicle-fixed frame, named B, aligned with

the principal axes; and (iii) the camera-fixed frame,

named C, aligned with the vehicle’s velocity vector

v or aero-stabilized. The camera’s principal axis is

parallel to the c

x

-axis and it is in the apex of the nose.

The rotation and translation equations can be found in

(Siouris, 2004). The estimation of the aerodynamic

coefficients was computed using the Vortex Lattice

Method (VLM) (Melin, 2000). For simplicity, the

drag, lift and pitch coefficients depend only on the

scheduling variables h (altitude) and M (Mach num-

ber) and are stored in look-up tables.

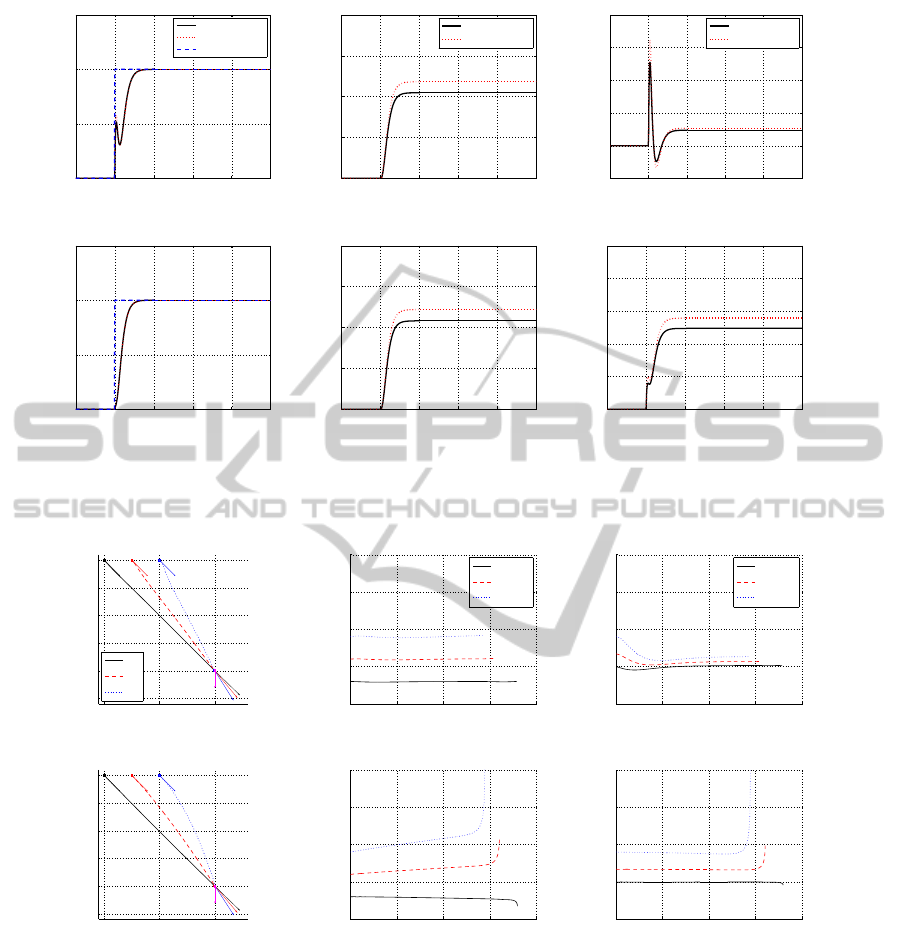

A classical three-loop autopilot (gyro and acceleration feedback), which translates acceleration commands $u$ into wing deflections $\delta$, is also used. A complete description of the autopilot and the design equations can be found in (Zarchan, 2007). Fig. 3 shows the autopilot outputs for the step acceleration command $u = u_s(t - 1)$ G ($u_s$ is the unit step function), for the flight conditions $h = 0$ m (black continuous lines), $h = 1000$ m (red dotted lines), $M = 0.3$, and $M = 0.9$. The actuator’s dynamics are neglected and ideal sensors are assumed. The design parameters are $\omega_{CR} = 50$ rad/s (crossover frequency measured at the gain margin), $\zeta = 0.7$ (total acceleration damping), and $\tau = 0.2$ s (total acceleration time constant). Fig. 3(a) and Fig. 3(d) show the correspondence between the desired acceleration $n$ and the autopilot’s response for these parameters. It is noted that the vehicle’s high manoeuvrability is reached at low altitude $h$ and high Mach number $M$, where the angle of attack $\alpha$ and the deflection angle $\delta$ stay at low values (Fig. 3(e) and Fig. 3(f), respectively). For $M \le 0.3$ the autopilot demands high values of $\alpha$ and $\delta$ (Fig. 3(b) and Fig. 3(c), respectively), and the vehicle’s aerodynamic capabilities are exceeded. This flight condition can lead the system to instability. In order to deal with the change of the vehicle’s parameters according to the flight conditions, a look-up table (with linear interpolation) is implemented to select the autopilot gains according to the values of $h$ and $M$.
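A gain schedule of this kind can be sketched as a two-dimensional look-up table with linear interpolation along each scheduling variable. The Python example below is a minimal illustration, not the authors’ implementation: the grids, the table entries and the function name `scheduled_gain` are placeholders invented for the example, and clamping the inputs to the edges of the grid is an assumption.

```python
import numpy as np

# Hypothetical scheduling grid and gain table (placeholder numbers, not from the paper).
H_GRID = np.array([0.0, 1000.0, 2000.0, 3000.0])   # altitude h, m
M_GRID = np.array([0.3, 0.6, 0.9])                 # Mach number M
GAIN_TABLE = np.array([[4.0, 2.0, 1.0],
                       [4.4, 2.2, 1.1],
                       [4.8, 2.4, 1.2],
                       [5.2, 2.6, 1.3]])           # rows follow H_GRID, columns follow M_GRID

def scheduled_gain(h, M, h_grid=H_GRID, m_grid=M_GRID, table=GAIN_TABLE):
    """Bilinear interpolation of one autopilot gain; inputs are clamped to the grid."""
    h = float(np.clip(h, h_grid[0], h_grid[-1]))
    M = float(np.clip(M, m_grid[0], m_grid[-1]))
    # Interpolate along Mach for every altitude row, then along altitude.
    along_m = np.array([np.interp(M, m_grid, row) for row in table])
    return float(np.interp(h, h_grid, along_m))

gain = scheduled_gain(500.0, 0.45)
```

One such table would be needed for each autopilot gain; the same pattern applies to the aerodynamic coefficient tables scheduled on $h$ and $M$.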

On the other hand, it is assumed that the cameras $C_c$ and $C_t$ are identical, ideal (skew is zero), and with intrinsic parameters defined as in (Hartley and Zisserman, 2004). The image resolution is 480 × 640 pixels, the focal length is $f = f_c = f_t = 240$ pixels, and the epipoles’ measurements are assumed to be known only from geometric relationships. For the simulations, the frame A is fixed at the initial altitude $h_0 = 2500$ m, the vehicle’s initial velocity is $v_0 = 200\,b_x$ m/s, and the initial attitude angle $\theta$ is equal to the attitude angle $\gamma_c$ of the current camera $C_c$, i.e., the initial angle of attack is $\alpha_0 = 0$ deg. The state feedback control gain $K$ is calculated with Ackermann’s formula.
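For the augmented realization (9), Ackermann’s formula can be written in a few lines. The following Python sketch is an assumption-laden illustration: the helper names are hypothetical and the desired poles $-1$, $-2$, $-3$ are chosen only for the example, since the closed-loop poles used by the authors are not stated. For this particular pair $(A_a, B_a)$ the formula reduces to $K = [a_1, a_2, a_0]$ for a desired characteristic polynomial $s^3 + a_2 s^2 + a_1 s + a_0$.

```python
import numpy as np

A_a = np.array([[0.0, 1.0, 0.0],
                [0.0, 0.0, 0.0],
                [1.0, 0.0, 0.0]])
B_a = np.array([[0.0], [1.0], [0.0]])

def controllability_matrix(A, B):
    """[B, AB, ..., A^(n-1) B] stacked column-wise."""
    n = A.shape[0]
    return np.hstack([np.linalg.matrix_power(A, k) @ B for k in range(n)])

def ackermann(A, B, poles):
    """K = [0 ... 0 1] inv(Co) p(A), with p the desired characteristic polynomial."""
    n = A.shape[0]
    coeffs = np.real(np.poly(poles))       # [1, a_{n-1}, ..., a_0]
    p_A = sum(c * np.linalg.matrix_power(A, n - i) for i, c in enumerate(coeffs))
    last_row = np.zeros((1, n))
    last_row[0, -1] = 1.0
    return last_row @ np.linalg.inv(controllability_matrix(A, B)) @ p_A

rank = np.linalg.matrix_rank(controllability_matrix(A_a, B_a))

# Hypothetical pole choice for illustration only.
K_demo = ackermann(A_a, B_a, [-1.0, -2.0, -3.0])
poles_demo = np.sort(np.real(np.linalg.eigvals(A_a - B_a @ K_demo)))

# Gain K = [8, 2, 8] reported for the reference-tracking simulation.
K_paper = np.array([[8.0, 2.0, 8.0]])
poles_paper = np.linalg.eigvals(A_a - B_a @ K_paper)
```

With the gain $K = [8, 2, 8]$ the closed-loop characteristic polynomial is $s^3 + 2s^2 + 8s + 8$, whose roots all have negative real parts, so $(A_a - B_a K)$ is Hurwitz.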

Initially, it is shown that the control with unforced

output y, for both control laws (6) and (7) can be con-

sidered as PN-based control. This is explained by

means of the differentiation of the Eq. (1b), that re-

sults in a directly proportional relationship between

the rate of change of the target epipolar coordinate

˙e

t,h

and the LoS rate of change

˙

λ. As in PN, the feed-

back of ˙e

t,h

multiplied by an appropriate constant can

stabilize the linearized output (8), which means that

the LoS angle λ is constant and the control based on

y = e

t,h

can drive the vehicle to the target position

(parallel navigation). An exact estimation of v

cl

is

not necessary. Furthermore, if the vehicle’s path an-

gle γ

c

is replaced by a constant value, then, the differ-

entiation of the Eq. (1a) results in a directly propor-

tional relationship between ˙e

c,h

and

˙

λ, and the control

based on y = e

c,h

is able to drive the vehicle to the

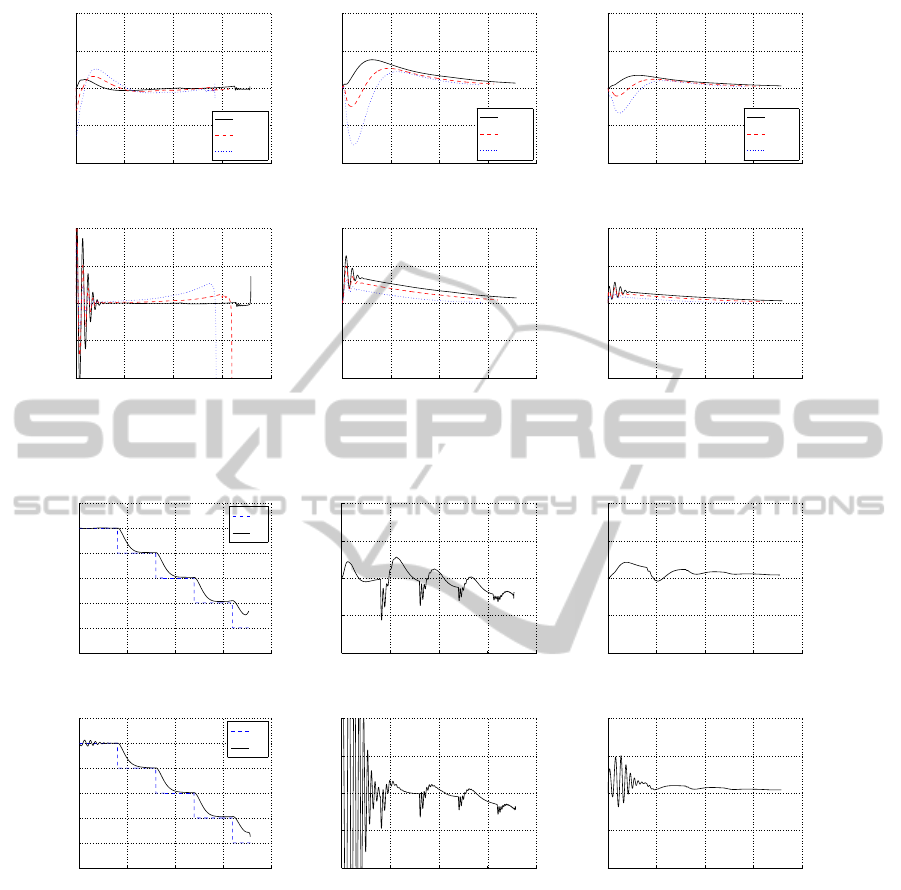

target position too. Fig. 4 shows the outputs of both

control laws for three different initial current cameras

C

c,1

at p

c,1

= [0, 0]

T

m, C

c,2

at p

c,2

= [500,0]

T

m, and

C

c,3

at p

c,3

= [1000, 0]

T

m. The initial attitude an-

gle is θ

0

= 45 deg for all the cameras. Fig. 4(a) and

Fig. 4(d) show that all cameras converge to the target

camera C

t

at p

t

= [2000,2000]

T

m (γ

t

= 90 deg) for

y = e

t,h

and y = e

c,h

, respectively. The gain K is the

same for both control laws and differences between

them are mainly noted in the shape of the trajecto-

ries. Fig. 4(b) and Fig. 4(f) show that the epipolar

coordinates e

t,h

and e

c,h

reach equilibrium points for

both control laws. Fig. 4(c) and Fig. 4(e) show the

free evolution of e

c,h

and e

t,h

for both control laws.

The shape of the vehicle’s trajectory is defined by the

shape of the output epipolar coordinates. Fig. 5(a)

and Fig. 5(d) show the control action u (command

acceleration required for the three cameras C

c,1

, C

c,2

,

and C

c,3

) for both control laws. At the initial stage

of the guidance, a maximum acceleration effort is re-

quired for both control laws. However, the control

Two-viewEpipole-basedGuidanceControlforAutonomousUnmannedAerialVehicles

245

0 1 2 3 4 5

0

0.5

1

1.5

Time: t s

Lateral acceleration: n G

n (h = 0 m)

n (h = 1000 m)

u

(a) n (M = 0.3)

0 1 2 3 4 5

0

10

20

30

40

Time: t s

Angle of attack: α deg

α (h = 0 m)

α (h = 1000 m)

(b) α (M = 0.3)

0 1 2 3 4 5

−20

0

20

40

60

80

Time: t s

Deflection angle: δ deg

δ (h = 0 m)

δ (h = 1000 m)

(c) δ (M = 0.3)

0 1 2 3 4 5

0

0.5

1

1.5

Time: t s

Lateral acceleration: n G

(d) n (M = 0.9)

0 1 2 3 4 5

0

0.5

1

1.5

2

Time: t s

Angle of attack: α deg

(e) α (M = 0.9)

0 1 2 3 4 5

0

0.2

0.4

0.6

0.8

1

Time: t s

Deflection angle: δ deg

(f) δ (M = 0.9)

Figure 3: Autopilot response to step acceleration u = u

s

(t −1) G (blue dashed lines) at altitudes h = 0 m (black continuous

lines) and h = 1000 m (red dotted lines). The first and second rows show the outputs for the Mach numbers M = 0.3 and

M = 0.9, respectively.

0 1000 2000

0

500

1000

1500

2000

2500

Downrange: x m

Crossrange: z m

C

c,1

C

c,2

C

c,3

(a) Trajectories (y = e

t,h

)

0 5 10 15 20

−300

−200

−100

0

100

Time: t s

Target coordinate: e

t,h

pixel

e

t,h

(C

c,1

)

e

t,h

(C

c,2

)

e

t,h

(C

c,3

)

(b) e

t,h

(y = e

t,h

)

0 5 10 15 20

−100

0

100

200

300

Time: t s

Current coordinate: e

c,h

pixel

e

c,h

(C

c,1

)

e

c,h

(C

c,2

)

e

c,h

(C

c,3

)

(c) e

c,h

(y = e

t,h

)

0 1000 2000

0

500

1000

1500

2000

2500

Downrange: x m

Crossrange: z m

(d) Trajectories (y = e

c,h

)

0 5 10 15 20

−300

−200

−100

0

100

Time: t s

Target coordinate: e

t,h

pixel

(e) e

t,h

(y = e

c,h

)

0 5 10 15 20

−100

0

100

200

300

Time: t s

Current coordinate: e

c,h

pixel

(f) e

c,h

(y = e

c,h

)

Figure 4: Outputs of the guidance based on both output y = e

t,h

and output y = e

c,h

, for the three current cameras C

c,1

, C

c,2

,

and C

c,3

, positioned at different space locations but with the same initial attitude angle θ

0

= 45 deg. The first and second rows

show the outputs for y = e

t,h

and y = e

c,h

, respectively.

law based on the coordinate e

c,h

requires more accel-

eration than the control law based on the coordinate

e

t,h

. Control based on e

t,h

does not develop high am-

plitude oscillations at the initial stage of the guidance,

but it needs high values of α and δ. Fig. 5(b) and Fig.

5(e) show the angle of attack α, and Fig. 5(c) and

Fig. 5(f) show the deflection angle δ, for both control

laws. After the initial stage of the guidance, the val-

ues of the angles α and δ required for the control law

based on y = e

c,h

are smaller than the values required

for the control law based on y = e

t,h

. The camera C

c,3

requires the highest values of u, α and δ, due to its

initial condition; it is ahead of the other cameras and

needs more turning effort.
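The proportional-navigation reading of the unforced control rests on the derivative of Eq. (1b). For a static target camera ($\gamma_t$ constant) it gives $\dot{e}_{t,h} = f_t \sec^2(\gamma_t - \lambda)\,\dot{\lambda}$, so the epipolar rate is the LoS rate scaled by a positive, state-dependent factor. The Python sketch below checks this relation by finite differences; the function names and the LoS angle and rate used are hypothetical test values.

```python
import math

def e_th(lam, gamma_t, f_t):
    """Eq. (1b): target epipolar coordinate."""
    return -f_t * math.tan(gamma_t - lam)

def e_th_rate(lam, lam_dot, gamma_t, f_t):
    """Time derivative of Eq. (1b) for constant gamma_t."""
    return f_t / math.cos(gamma_t - lam) ** 2 * lam_dot

f_t, gamma_t = 240.0, math.radians(90.0)
lam0, lam_dot, h = math.radians(45.0), 0.01, 1e-5   # hypothetical LoS angle, rate, time step

# Central difference of e_th along lambda(t) = lam0 + lam_dot * t.
fd = (e_th(lam0 + lam_dot * h, gamma_t, f_t)
      - e_th(lam0 - lam_dot * h, gamma_t, f_t)) / (2 * h)
analytic = e_th_rate(lam0, lam_dot, gamma_t, f_t)
```

Driving $\dot{e}_{t,h}$ to zero therefore drives $\dot{\lambda}$ to zero whenever $\cos(\gamma_t - \lambda) \neq 0$, which is the parallel-navigation condition mentioned above.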

Figure 5: Acceleration $u$, angle of attack $\alpha$, and deflection angle $\delta$ for the set of current cameras $C_{c,1}$, $C_{c,2}$, and $C_{c,3}$. The first and the second rows correspond to the controls based on $y = e_{t,h}$ and $y = e_{c,h}$, respectively. Panels: (a) lateral acceleration $u$ (G), (b) angle of attack $\alpha$ (deg) and (c) deflection angle $\delta$ (deg) for $y = e_{t,h}$; (d) $u$, (e) $\alpha$ and (f) $\delta$ for $y = e_{c,h}$; all plotted against time $t$ (s).

Figure 6: Outputs for the reference signals $e^r_{t,h} = e^r_{c,h} = -10k$, for $kT \le t < (k + 1)T$, $k = 0, 1, 2, \ldots$, $T = 4$ s, and $t \ge 0$; acceleration $u$; and deflection angle $\delta$. The target camera $C_t$ is located at $p_t = [2000, 2000]^T$ m and aligned to the current camera $C_c$ ($\gamma_t = 45$ deg). The first and second rows correspond to the controls based on $y = e_{t,h}$ and $y = e_{c,h}$, respectively. Panels: (a) target coordinate $e_{t,h}$ and reference $e^r_{t,h}$ (pixel), (b) lateral acceleration $u$ (G) and (c) deflection angle $\delta$ (deg) for $y = e_{t,h}$; (d) current coordinate $e_{c,h}$ and reference $e^r_{c,h}$ (pixel), (e) $u$ and (f) $\delta$ for $y = e_{c,h}$; all plotted against time $t$ (s).

Next, the outputs $y = e_{t,h}$ and $y = e_{c,h}$ are forced to follow an equilibrium reference. Fig. 6(a) and Fig. 6(b) show the outputs of the control laws based on $y = e_{t,h}$ and $y = e_{c,h}$ for the reference signals $e^r_{t,h} = e^r_{c,h} = -10k$ pixel, for $kT \le t < (k + 1)T$, $k = 0, 1, 2, \ldots$, $T = 4$ s, and $t \ge 0$. The initial current camera $C_c$ is located at $p_c = [0, 0]^T$ m with initial attitude angle $\theta_0 = 45$ deg. The target camera $C_t$ is fixed at $p_t = [2000, 2000]^T$ m and aligned to $C_c$ with attitude angle $\gamma_t = 45$ deg. The gain $K$ has the same value for both control laws. It is noted that the epipolar coordinates follow the equilibrium references for both control laws. As in the previous simulation, it can be noticed that, at the initial stage of the guidance, the control law based on $y = e_{c,h}$ requires more acceleration effort than the other. After this stage, small values of $\alpha$ (Fig. 6(b) and Fig. 6(e)) and $\delta$ (Fig. 6(c) and Fig. 6(f)) are required for the control law based on $y = e_{c,h}$. As both outputs can follow the reference, the control laws can guide the vehicle to the target position with a reference LoS angle $\lambda$. The angle $\lambda$ is directly related to the epipolar references, and a limited control of attitude can be achieved by both control laws. In addition, a reduction of the autopilot’s gain margin (stability) is noted, and the total damping and the time response are affected (see the settling time in Fig. 6(a) and Fig. 6(b)). For this simulation the autopilot’s design parameters and the control law gain $K$ were: $\omega_{CR} = 10$ rad/s, $\zeta = 0.7$, $\tau = 0.2$ s, and $K = [8, 2, 8]$. Moreover, the initial stage of the guidance is the worst flight condition that the autopilot has to deal with. For high altitude $h$ and low Mach number $M$, the autopilot requires the highest values of $\alpha$ and $\delta$. This initial condition affects the control law based on $y = e_{c,h}$ more than the control based on $y = e_{t,h}$. One way to improve the initial response of the autopilot is to raise the value of the vehicle’s initial velocity, but it will always be constrained.
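The staircase reference of this experiment and the effect of the integral action can be reproduced on the ideal linearized mapping alone. The Python sketch below integrates Eq. (8) closed with the state feedback (10) and the reported gain $K = [8, 2, 8]$ using a forward-Euler scheme. It is a simplified illustration under explicit assumptions: the function names, the step size and the zero initial state are hypothetical, and the vehicle, the autopilot and the nonlinear engagement are left out, so it does not reproduce the curves of Fig. 6.

```python
import math

K = (8.0, 2.0, 8.0)   # gain reported in the text for this simulation

def epipolar_reference(t, step=-10.0, period=4.0):
    """Reference -10*k pixel for k*T <= t < (k+1)*T, with T = 4 s and t >= 0."""
    if t < 0:
        raise ValueError("the reference is defined for t >= 0")
    return step * math.floor(t / period)

def simulate_linearized_loop(reference, t_end, dt=1e-3, gains=K):
    """Euler integration of Eq. (8) closed with Eq. (10); returns final (xi1, xi2, sigma)."""
    k1, k2, k3 = gains
    xi1 = xi2 = sigma = 0.0
    for i in range(int(round(t_end / dt))):
        e = xi1 - reference(i * dt)                  # output error
        v = -(k1 * xi1 + k2 * xi2 + k3 * sigma)      # Eq. (10)
        xi1, xi2, sigma = xi1 + dt * xi2, xi2 + dt * v, sigma + dt * e
    return xi1, xi2, sigma

# Constant reference held long enough for the transient to die out.
xi1_end, xi2_end, sigma_end = simulate_linearized_loop(lambda t: -10.0, t_end=40.0)

# Staircase reference used in the experiment, evaluated over 20 s.
xi1_stair, _, _ = simulate_linearized_loop(epipolar_reference, t_end=20.0)
```

For a constant reference the integrator state settles where $\dot{\sigma} = e = 0$, so the output converges to the reference without steady-state error; the integrator state itself converges to $-k_1 y^r / k_3$.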

6 CONCLUSIONS

An epipole-based control law for guiding an autonomous UAV has been presented. Only epipolar measurements from two views were used to drive the vehicle to a static camera position. Stabilization of a nonlinear engagement rule by an input-output nonlinear control strategy was developed and two different alternatives for guidance, one based on the current epipolar coordinate, and the other based on the target epipolar coordinate, were studied. A state feedback control law with integral action guaranteed that the epipolar coordinates follow a reference equilibrium signal. A model of a non-propelled UAV, which includes a classical three-loop autopilot, was used to simulate the control strategies. The tracking of reference signals and stability analysis will be studied as future developments.

ACKNOWLEDGEMENTS

This work was supported by Universidad de los Andes / Industria Militar de Colombia (INDUMIL), Universidad de Zaragoza / Ministerio de Ciencia e Innovación / Unión Europea DPI2009-08126, and Universidad de Nariño.

REFERENCES

Chin, S. (1961). Missile configuration design. McGraw-Hill, 1st edition.

Hartley, R. I. and Zisserman, A. (2004). Multiple View Geometry in Computer Vision. Cambridge University Press, ISBN: 0521540518, 2nd edition.

Hu, G., Gans, N., Mehta, S., and Dixon, W. (2007a). Daisy chaining based visual servo control part II: Extensions, applications and open problems. In IEEE International Conference on Control Applications, 2007, pages 729-734.

Hu, G., Mehta, S., Gans, N., and Dixon, W. (2007b). Daisy chaining based visual servo control part I: Adaptive quaternion-based tracking control. In IEEE International Conference on Control Applications, 2007, pages 1474-1479.

Kaiser, M. K., Gans, N. R., and Dixon, W. E. (2010). Vision-based estimation for guidance, navigation, and control of an aerial vehicle. Aerospace and Electronic Systems, IEEE Transactions on, 46(3):1064-1077.

Khalil, H. K. (2002). Nonlinear Systems. Prentice Hall, ISBN: 0130673897, 3rd edition.

López-Nicolás, G., Guerrero, J., and Sagüés, C. (2010). Visual control of vehicles using two-view geometry. Mechatronics, 20(2):315-325.

López-Nicolás, G., Sagüés, C., Guerrero, J., Kragic, D., and Jensfelt, P. (2008). Switching visual control based on epipoles for mobile robots. Robotics and Autonomous Systems, 56(7):592-603.

Ma, L., Cao, C., Hovakimyan, N., and Woolsey, C. (2007). Development of a vision-based guidance law for tracking a moving target. In AIAA Guidance, Navigation and Control Conference and Exhibit. AIAA.

Mariottini, G., Prattichizzo, D., and Oriolo, G. (2004). Epipole-based visual servoing for nonholonomic mobile robots. In IEEE International Conference on Robotics and Automation, 2004, volume 1, pages 497-503.

Melin, T. (2000). A vortex lattice matlab implementation for linear aerodynamic wing applications. Master’s thesis, Royal Institute of Technology (KTH).

Schneiderman, R. (2012). Unmanned drones are flying high in the military/aerospace sector [special reports]. Signal Processing Magazine, IEEE, 29(1):8-11.

Siouris, G. M. (2004). Missile Guidance and Control Systems. Springer New York, ISBN: 9780387007267, 1st edition.

Watanabe, Y., Johnson, E., and Calise, A. (2006). Vision-based guidance design from sensor trajectory optimization. In AIAA Guidance, Navigation and Control Conference and Exhibit. AIAA.

Yanushevsky, R. (2007). Modern Missile Guidance. CRC Press, ISBN: 1420062263, 1st edition.

Zarchan, P. (2007). Tactical and Strategic Missile Guidance. American Institute of Aeronautics and Astronautics Inc., ISBN: 9781563478741, 5th edition.
