AGENT-HUMAN INTERACTIONS IN THE CONTINUOUS

DOUBLE AUCTION, REDUX

Using the OpEx Lab-in-a-Box to explore ZIP and GDX

Marco De Luca and Dave Cliff

Department of Computer Science, University of Bristol, Merchant Venturers Building

Woodland Road, Bristol BS8 1UB, U.K.

Keywords: Algorithmic trading, Continuous double auction, Experimental economics, Trading agents.

Abstract: In 2001, a team of researchers at IBM published a paper in IJCAI which reported on the first experiments

that systematically studied the interactions of human traders and software-agent traders in electronic

marketplaces running the continuous double auction (CDA) mechanism. IBM found that two software-agent

strategies, known as GD and ZIP, consistently outperformed human traders. IBM's results received

international press coverage, probably because the CDA is the mechanism that is used in the main electronic

trading systems that make up the global financial markets. In 2002, Tesauro & Bredin published details of

an extension to GD, which they named GDX, for which they wrote: "We suggest that this algorithm may

offer the best performance of any published CDA bidding strategy". To the best of our knowledge, GDX

has never been tested against human traders under experimental conditions. In this paper, we report on the

first such test: we present detailed analysis of the results from our own replications of IBM's human vs. ZIP

experiments and from our world-first experiments that test humans vs. GDX. Our overall findings are that,

both when competing against ZIP in pure agent vs. agent experiments and when competing against human

traders, GDX's performance is significantly better than the performance of ZIP.

1 INTRODUCTION

At the 2001 International Joint Conference on

Artificial Intelligence (IJCAI-01), a team of IBM

researchers presented a paper (Das, Hanson, Kephart

& Tesauro, 2001) that generated press coverage

around the world (e.g. Graham-Rowe, 2001). Das et

al.’s paper was the first to apply the laboratory

methods of experimental economics (e.g. Kagel &

Roth, 1997) to the systematic comparative

evaluation of adaptive autonomous software-agent

“robot” trader strategies, in controlled experiments

that pitted the robot traders against human traders in

a continuous double auction (CDA) mechanism. The

IBM team explored their own robot strategy, a

modified form of the Gjerstad-Dickhaut algorithm

(Gjerstad & Dickhaut, 1998) which we will refer to

as EGD (Extended GD), and a version of the Zero-

Intelligence Plus (ZIP) algorithm developed by Cliff

at Hewlett-Packard Labs (Cliff & Bruten, 1997). Das

et al. reported on results from six experiments

involving a number of human subjects being pitted

against a similar number of a particular type of

trading-agent: EGD in four experiments, and ZIP in

the remaining two. The results from all six of these

experiments were conclusive: the average efficiency

of the robot traders, i.e. their ability to enact

profitable transactions, was consistently higher than

that of the human traders, and this was true for both

the trading strategies. The IBM paper concluded

with the following memorable passage:

“[…] the successful demonstration of machine

superiority in the CDA and other common

auctions could have a much more direct and

powerful financial impact—one that might be

measured in billions of dollars annually”

Somewhat curiously, in the decade since that

paper was first published, as far as we can determine

no-one has yet reported on a replication of those

results. We speculate here that this is because, back

in 2001, to set up an experimental economics

laboratory such as that used by the IBM team

required a considerable investment. However, as the

real cost of personal computers (PCs) and data-

networking hardware has fallen dramatically in the


De Luca M. and Cliff D..

AGENT-HUMAN INTERACTIONS IN THE CONTINUOUS DOUBLE AUCTION, REDUX - Using the OpEx Lab-in-a-Box to explore ZIP and GDX.

DOI: 10.5220/0003293903510358

In Proceedings of the 3rd International Conference on Agents and Artificial Intelligence (ICAART-2011), pages 351-358

ISBN: 978-989-8425-41-6

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

past ten years, we observed that it is now possible to

re-create the necessary laboratory apparatus using

low-cost “netbook” PCs for a total cost of only a few

thousand dollars. With that motivation, we have

designed and implemented an experimental

economics laboratory network trading system, where

“trader terminal” netbooks communicate with a

central “exchange” server, with the potential for

multiple instruments to be traded simultaneously in

varying quantities, and with every quote in the

marketplace, and details of all transactions, written

to a database as a single “consolidated tape” record

of the trading events (to sub-second timestamp

accuracy) over the course of a trading experiment.

This trading system, which is called “OpEx” (from

Open Exchange) will be open-sourced under a

creative commons license in the near future (De

Luca, forthcoming 2011). In this paper, we report on

the use of OpEx to replicate IBM’s IJCAI-01 results

from testing human traders against ZIP and the most

recent evolution in the “GD” class of algorithmic

traders: GDX (Tesauro & Bredin, 2002). To the best

of our knowledge, these are the first results from

testing GDX against humans. We find that our

results agree with IBM in the respect that the GDX

and ZIP robot traders consistently out-perform the

human traders, but our results differ from IBM’s in

that we find that GDX outperforms ZIP, while in the

IBM study ZIP slightly outperforms EGD on

average. Our results are also in line with those

achieved by Tesauro & Bredin: in pure robot vs.

robot competitions, GDX outperforms ZIP and

proves to be a major improvement on the original

GD algorithm.

2 BACKGROUND

Today, the vast majority of financial products are

traded electronically: following exact rules, buyers

and sellers, collectively known as traders, interact in

a common virtual “marketplace” to trade those

products. The numerous organisations that are in

place to allow electronic trading of financial

securities are known as exchanges, or sometimes

markets. The set of rules that define the exchange

process between traders on a market forms its

market mechanism, of which the continuous double

auction (CDA) is the most used due to its high

efficiency:

“Markets organised under double-auction trading

rules appear to generate competitive outcomes

more quickly and reliably than markets organised

under any alternative set of trading rules.” (Davis

& Holt, 1993)

In a CDA, traders can make bids and accept

offers asynchronously at any time during the trading

day (that is, the fixed-duration trading period during

which trading is allowed). All the offers are usually

publicly visible by all market participants, and a

trade is made whenever the outstanding bid is

greater than or equal to the outstanding ask.

Although it is made up of simple rules, the

nonlinearities of the CDA are too complex to be

analysed by traditional mathematical methods such
as game theory: as a result, researchers have turned
to empirical approaches.

In his Nobel-prize-winning work, Vernon Smith

(1962) ran several experiments with human traders,

and demonstrated that markets governed by the

CDA can reach close-to-optimal efficiency. He also
showed that transaction prices converge to the

market’s theoretical competitive equilibrium price,

where the supply and demand curves intersect.

Furthermore, he found that if the supply and demand

of markets suddenly changed, the transaction prices

would rapidly converge to the new equilibrium

price. In many of his experiments, Smith studied the

dynamics of CDA-based markets by assigning one

unit to sell (buy) at no less (more) than a specific

price to each of the traders. The price of the unit,

known as limit price, represents the maximum

amount of money l a buyer can spend to buy the

unit, or the minimum value c for which a seller can

sell the unit. As a consequence, buyers make a profit

l-p if they buy at a price p that is less than their limit

price, whereas sellers make a profit p-c if they sell

for a price p higher than their limit price. The limit

prices are private, each trader knowing only her

limit. The traders interact by quoting the price at

which they are willing to trade their units. In Smith’s

early experiments this happened by speaking the

number out loud, thus the public quotes in a CDA

are often referred to as shouts. A random player is

selected every turn to make a shout, and the game

finishes after a fixed number of turns. Following the

rules of the CDA, a trade occurs when the

outstanding bid is greater than or equal to the

outstanding ask. Smith measured the performance of

a trader in terms of allocative efficiency, which is the

total profit earned by the trader divided by the

maximum theoretical profit of that trader, expressed

as a percentage. The maximum theoretical profit of a

trader is the profit that trader could have made if all
the market participants had traded their units

at the theoretical competitive market equilibrium

price. A further measure of the performance of a


market is the profit dispersion: this is defined as the

cross-sectional root mean squared difference

between the actual profits and the maximum

theoretical profits of individual traders. Formally, if
$a_i$ is the actual profit earned by trader $i$, and $p_i$ is the
theoretical equilibrium profit for that trader, then for
a group of $n$ traders the profit dispersion is given by:

$$\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(a_i - p_i\right)^2} \tag{1}$$
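The performance measures defined above lend themselves to a short worked example. The following Python sketch computes per-trader profit, allocative efficiency and profit dispersion. The function names and the two-trader market (limit prices 120 and 80, equilibrium price 100, a single trade at 104) are hypothetical illustrations, not data from our experiments.

```python
from math import sqrt


def buyer_profit(limit_price, trade_price):
    """Profit l - p for a buyer with limit price l who buys at price p."""
    return limit_price - trade_price


def seller_profit(limit_price, trade_price):
    """Profit p - c for a seller with limit price c who sells at price p."""
    return trade_price - limit_price


def allocative_efficiency(actual_profit, equilibrium_profit):
    """Actual profit over maximum theoretical profit, as a percentage."""
    return 100.0 * actual_profit / equilibrium_profit


def profit_dispersion(actual_profits, equilibrium_profits):
    """Root mean squared difference between actual and equilibrium profits."""
    n = len(actual_profits)
    squared = sum((a - p) ** 2 for a, p in zip(actual_profits, equilibrium_profits))
    return sqrt(squared / n)


# Hypothetical market: one buyer (limit 120) and one seller (limit 80).
# The theoretical equilibrium price is assumed to be 100; they trade at 104.
actual = [buyer_profit(120, 104), seller_profit(80, 104)]
equilibrium = [buyer_profit(120, 100), seller_profit(80, 100)]

buyer_eff = allocative_efficiency(actual[0], equilibrium[0])
seller_eff = allocative_efficiency(actual[1], equilibrium[1])
dispersion = profit_dispersion(actual, equilibrium)
```

In this toy market the seller's efficiency exceeds 100% because the trade price is above the equilibrium price, and the buyer's efficiency falls below 100% by the same amount of profit.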

3 OPEN EXCHANGE

We ran our experiments on Open Exchange (OpEx),

an experimental algorithmic trading platform

developed by De Luca (forthcoming 2011). OpEx

was designed to resemble closely the structure and

the behaviour of modern commercial financial-

market electronic trading systems, and to be generic

enough to support experimental economics

simulations of arbitrary complexity. Figure 1

illustrates the interaction between the core

components in a simple configuration. The
connections between the components on the left-hand
side show the flow of order data. Orders
represent the agents' instructions to buy or sell a
specific quantity of a given product at a particular
price condition.

Figure 1: An instance of Open Exchange. The solid lines and the dotted lines represent the flow of order data (the requests and the replies, respectively). The sparsely dotted lines indicate the market data flow, from the Exchange back to the order generators through the Market Data Bus.

Human traders enter their orders in

the Trading GUI, a graphical application that allows

users to view the market order book (i.e. the

descending-ordered list of currently outstanding

bids, and the ascending-ordered list of currently

outstanding offers), their “blotter” (personal history

of orders and trades), and their assignments. Agents,

on the other hand, produce orders automatically,

without the need for human intervention, on the basis

of the market conditions that they observe. Once

generated, orders are sent to the Order Manager,

which routes them to the appropriate order processor

(in this example, the single Exchange) depending on

the destination specified by the sender. Once

received by the Exchange, orders are processed

according to the specific order matching logic

implemented (the order matching logic that we will

cover in detail here is the price-time priority

matching logic, which constitutes the foundation of

the CDA) and order completion data is passed back

to the Order Manager, which in turn dispatches it to

the appropriate sender. It is worth noting that order

data are private, as only the originator of an order

receives the order completion data relative to that

specific order, which will let him/her know its

progress. Conversely, market data are published on

the Market Data Bus and can be seen by every

market participant.
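To make the price-time priority rule concrete, the following is a minimal Python sketch of an order book for one-unit orders. It is an illustration only and not the OpEx implementation: the class name `OrderBook`, the method `submit`, and the convention that a trade executes at the price of the resting (earlier) order are all assumptions made for this example.

```python
import heapq
import itertools


class OrderBook:
    """Toy price-time priority book for one-unit limit orders (hypothetical)."""

    def __init__(self):
        self._bids = []  # heap entries: (-price, arrival number, trader)
        self._asks = []  # heap entries: (price, arrival number, trader)
        self._arrival = itertools.count()

    def submit(self, trader, side, price):
        """Submit an order; return (buyer, seller, price) if it trades, else None."""
        arrival = next(self._arrival)
        if side == "buy":
            # Trade if the bid is greater than or equal to the best ask.
            if self._asks and self._asks[0][0] <= price:
                ask_price, _, seller = heapq.heappop(self._asks)
                return (trader, seller, ask_price)
            heapq.heappush(self._bids, (-price, arrival, trader))
        elif side == "sell":
            # Trade if the best bid is greater than or equal to the ask.
            if self._bids and -self._bids[0][0] >= price:
                neg_bid, _, buyer = heapq.heappop(self._bids)
                return (buyer, trader, -neg_bid)
            heapq.heappush(self._asks, (price, arrival, trader))
        else:
            raise ValueError("side must be 'buy' or 'sell'")
        return None


book = OrderBook()
book.submit("B1", "buy", 95)    # rests in the book
book.submit("B2", "buy", 95)    # same price, later arrival
book.submit("S1", "sell", 100)  # rests: the best bid is only 95
first_trade = book.submit("S2", "sell", 94)   # crosses the best bid
second_trade = book.submit("B3", "buy", 101)  # crosses the best ask
```

Here `first_trade` pairs the incoming seller with B1 rather than B2: both bids are at the same price, so the earlier arrival has priority.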

4 AGENTS

In the Open Exchange framework, automated

trading agents are implemented as individual plug-

ins running on an instance of the Agent Host. In line

with the distributed architecture of OpEx, there can

be multiple instances of the Agent Host, each one

running a particular set of Agents. Every Agent

implements one specific algorithm and has its own

configuration settings, loaded at start-up. One

instance of the Agent Host is capable of running

multiple instances of the same Agent, so that more

than one automated trader following a specific

strategy can participate in the market

simultaneously. The behaviour of an OpEx Agent

consists of cyclically listening to stimuli and reacting

to them sequentially by performing one or more

actions. Agents are idle as they wait for the next

stimulus, whereas they perform calculations and

they can send a signal to the market when they are

active. Each stimulus represents a precise event (e.g.

“the activation timer has expired”, “an order has

been sent”, or “there has been a trade”) and it is

produced by a specific source asynchronously.

Unprocessed stimuli are conveyed to a common

collector, and then the resulting queue, sorted

chronologically, is processed sequentially. Our

choice of timing mechanism is consistent with the

previous IBM work (Das et al., 2001), where similar

timing rules were used to regulate the activity of the

Agents. However, while the results presented in


(Das et al., 2001) are from experiments run using

two different timer periods (“fast”, 1 second; and

“slow”, 5 seconds) for the different algorithms, in our

work reported here we used the same timing across

all the experiments in order to simplify the

comparison of the performances of the different

trading agents. In particular, our Agents' primary

timer period is set to 1 second, equivalent to the

“Fast” configuration used in (Das et al., 2001). On

the other hand, OpEx schedules the activity of the

Agents in a much more basic way when running in

“Discrete Event Simulator” (DES) mode. DES

simulations are turn-based (300 turns in one trading

day), and at each turn only one Agent is chosen at

random among the active Agents, each of which has

the same probability of being selected.
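The turn-based scheduling used in DES mode can be sketched in a few lines of Python. This is a hedged illustration of the rule described above (one Agent drawn uniformly at random per turn, 300 turns per trading day), not the OpEx scheduler itself. The function name `run_des_day` and the callbacks `is_active` and `act` are hypothetical.

```python
import random


def run_des_day(agent_ids, is_active, act, turns=300, seed=None):
    """Run one turn-based trading day and return the list of activated agents.

    agent_ids: identifiers of all agents in the market
    is_active: callable returning True if an agent may still act
    act:       callable invoked as act(agent_id, turn) for the chosen agent
    """
    rng = random.Random(seed)
    activations = []
    for turn in range(turns):
        active = [a for a in agent_ids if is_active(a)]
        if not active:
            break  # nobody is left to act, so the day ends early
        chosen = rng.choice(active)  # uniform choice: equal selection probability
        act(chosen, turn)
        activations.append(chosen)
    return activations


# Usage with three hypothetical agents that are always active.
agents = ["ZIP-1", "ZIP-2", "GDX-1"]
day = run_des_day(agents, is_active=lambda a: True, act=lambda a, t: None, seed=1)
```

Because only one agent acts per turn and the turn does not end until `act` returns, each agent has as much computation time as it needs, in contrast with the timer-driven real-time mode.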

4.1 ZIP

In 1996, Cliff invented the Zero-Intelligence Plus

(ZIP) algorithm to investigate the minimum level of

intelligence required to achieve convergence to

market equilibrium price (Cliff & Bruten, 1997). ZIP

has been used in several subsequent studies, e.g.

(Tesauro & Das, 2001) and (Das et al., 2001), as a

benchmark for evaluation of strategy efficiency, and

it was subsequently extended to ZIP60 by Cliff

(2009). Each ZIP trader agent maintains a real-

valued profit margin and employs simple heuristic

mechanisms to adjust their margin using market

data. In this context, the profit margin represents the

difference between the agent’s limit price and the

shout price, which is the price that the agent sends to

the market to buy or sell the commodity. By

observing market events, ZIP buyers (sellers)

increase their profit margin, and therefore make

cheaper bids (more expensive offers), when a trade

at a lower (higher) price than their current shout

price occurs. Conversely, ZIP buyers (sellers) that observe an
accepted offer (bid) at a price higher (lower) than the
one they have put on the market move towards that
price by lowering their profit margin, that is, bidding
(offering) a higher (lower) price. The same applies
to buyers (sellers) that witness a rejected bid (offer)
at a higher (lower) price than the one they are advertising.

The profit-margin adaptation rule used in the ZIP

algorithm to dynamically respond to the market

conditions is based on the Widrow-Hoff “delta rule”

with an additional noise-smoothing “momentum”

term. The profit margin of the ZIP traders is adjusted

by a small random quantity, proportional to the

learning rate of the individual agent. Consistently

with (Preist & Van Tol, 1998) and (Das et al., 2001),

we altered the original ZIP implementation to fit in

the OpEx infrastructure by introducing an “inactivity

timer”. The timer triggers a procedure that adjusts

the shout price of the agents moving it towards the

best price on the opposite side of the order book. As

a result, the piece of information “nothing is

happening in the market” is used by the agents as an

additional pricing heuristic.
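The margin-adaptation step can be illustrated with a short Python sketch. It assumes the multiplicative convention in which the shout price equals the limit price times (1 + margin), applies a Widrow-Hoff update with a momentum term towards a randomly perturbed target price, and clamps the margin so that the agent never shouts a loss-making price. The class `ZipTrader`, the helper `target_price`, the parameter values and the perturbation ranges are all illustrative assumptions, and the inactivity timer is omitted.

```python
import random
from dataclasses import dataclass


@dataclass
class ZipTrader:
    limit_price: float
    is_buyer: bool
    margin: float                 # <= 0 for buyers, >= 0 for sellers
    learning_rate: float = 0.3    # hypothetical value
    momentum: float = 0.5         # hypothetical value
    last_change: float = 0.0      # smoothed price change from earlier updates

    def shout_price(self):
        return self.limit_price * (1.0 + self.margin)

    def update(self, target):
        """Move the shout price towards `target` (delta rule with momentum)."""
        delta = self.learning_rate * (target - self.shout_price())
        self.last_change = (self.momentum * self.last_change
                            + (1.0 - self.momentum) * delta)
        new_price = self.shout_price() + self.last_change
        margin = new_price / self.limit_price - 1.0
        # Clamp so that buyers never bid above, and sellers never ask below, the limit.
        self.margin = min(margin, 0.0) if self.is_buyer else max(margin, 0.0)


def target_price(observed_price, raise_price, rng):
    """Perturb the observed price slightly upwards or downwards (assumed ranges)."""
    if raise_price:
        return rng.uniform(1.0, 1.05) * observed_price + rng.uniform(0.0, 0.05)
    return rng.uniform(0.95, 1.0) * observed_price + rng.uniform(-0.05, 0.0)


# A seller asking 120 observes market activity around 110 and lowers its ask.
seller = ZipTrader(limit_price=100.0, is_buyer=False, margin=0.2)
seller.update(110.0)
```

The momentum term means that a single observation moves the price only part of the way towards the target, which damps the effect of noisy market events.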

4.2 GD/GDX

In 1998 Gjerstad & Dickhaut introduced a bidding

algorithm, now widely referred to as GD, centred on

“belief” functions that agents form on the basis of

observed market data. GD agents collect the orders

(rejected shouts) and trades (accepted shouts)
that occurred during the last M trades, and store them in a

history H. When a GD agent prices an order, from

the history H it builds the belief function f(p), which

represents the probability that an order at price p

will result in a trade. For example, the belief

function for a GD buyer is:

$$f(p) = \frac{TBL(p) + AL(p)}{TBL(p) + AL(p) + RBG(p)} \tag{2}$$

Here, TBL(p) represents the number of accepted

bids found in H at price ≤ p, AL(p) is the number of

asks in H with price ≤ p, and RBG(p) is the number

of rejected bids in H at price ≥ p. Note that f(p)

depends on H, and therefore it can potentially

change every time a market participant sends an

order to the market. Because f(p) is defined only for

some values of p, the function is interpolated to

provide values over the domain of all the valid

prices. Finally, the price p that maximises the

product of the interpolated f(p) and the profit

function of the agent (equal to l - p for buyers and p -

l for sellers) is chosen as the order price. The

original GD algorithm was modified by Tesauro &

Bredin (2002), who christened their version “GDX”.

GDX substantially differs from GD in that it makes

use of Dynamic Programming (DP) to price orders.

The pricing function takes into account both the

effect of trading the current unit immediately, and

the effect of trading it in the future, discounting the

latter by a parameter γ. As a result, GDX agents do

not just maximise the immediate profit, but instead

optimise the pricing process in order to achieve

overall higher returns over the entire trading period.
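The buyer's belief function and the two pricing rules can be sketched in Python as follows. The sketch makes several simplifying assumptions that are not part of the description above: the belief is evaluated only on a small grid of candidate prices instead of being interpolated, the history is a hypothetical toy example, and the dynamic-programming recursion in `gdx_value` is one plausible form consistent with the description (trade now, or keep the unit for a later opportunity discounted by γ), not a transcription of Tesauro & Bredin's formulation.

```python
def buyer_belief(price, accepted_bids, asks, rejected_bids):
    """f(p) = (TBL(p) + AL(p)) / (TBL(p) + AL(p) + RBG(p)) for a GD buyer."""
    tbl = sum(1 for b in accepted_bids if b <= price)
    al = sum(1 for a in asks if a <= price)
    rbg = sum(1 for b in rejected_bids if b >= price)
    total = tbl + al + rbg
    return (tbl + al) / total if total else 0.0


def gd_buyer_price(limit_price, candidate_prices, belief):
    """GD rule: choose the price maximising belief(p) * (l - p)."""
    return max(candidate_prices, key=lambda p: belief(p) * (limit_price - p))


def gdx_value(units, steps, limit_prices, candidate_prices, belief, gamma):
    """Assumed DP table V[m][n]: expected discounted profit for a buyer
    with m units still to trade and n bidding opportunities left."""
    V = [[0.0] * (steps + 1) for _ in range(units + 1)]
    for m in range(1, units + 1):
        limit = limit_prices[units - m]  # units are traded in sequence
        for n in range(1, steps + 1):
            V[m][n] = max(
                belief(p) * (limit - p + gamma * V[m - 1][n - 1])
                + (1.0 - belief(p)) * gamma * V[m][n - 1]
                for p in candidate_prices
            )
    return V


# Hypothetical history H and candidate price grid.
accepted_bids = [100, 105]
asks = [98, 110]
rejected_bids = [90, 95]
prices = [90, 95, 98, 100, 105]


def belief(p):
    return buyer_belief(p, accepted_bids, asks, rejected_bids)


best_price = gd_buyer_price(120, prices, belief)
myopic = gdx_value(1, 3, [120], prices, belief, gamma=0.0)
patient = gdx_value(1, 3, [120], prices, belief, gamma=0.9)
```

With γ = 0 the recursion ignores all future opportunities and reduces to the GD rule, which mirrors the way GDX degenerates to GD in the experiments reported later.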

5 EXPERIMENTAL SETUP

Figure 2: Trade price time series for a humans-vs.-GDX experiment. The vertical lines represent the start of a new round. The 10 rounds of 3 minutes each were divided into 5 phases, each with its own set of limit prices. The theoretical equilibrium price for each phase is indicated by the horizontal dashed lines. Trades between two humans are marked with open squares, between two agents with open circles, and between an agent and a human with solid circles. Mean efficiency per phase (vertical bars) and per round are shown for Agent Buyers (AB), Agent Sellers (AS), Human Buyers (HB) and Human Sellers (HS).

All of our human vs. robot experiments involved 6
human traders and 6 robot traders, both equally split
into 3 buyers and 3 sellers, a structure used in the
original IBM experiments. Before each experiment,

the human subjects were briefed about the rules, and

were given some time to familiarise themselves with the Sales

Trading GUI (briefing and tutorial typically took

less than 30 minutes). The subjects had no previous

professional experience in electronic trading, and

they were only allowed to participate in one

experiment. We motivated all 6 participants by

giving each of them a token worth £20, plus a bonus

of £40 and £20 to the first and the second best

trader, respectively. An experiment consisted of 10

consecutive “rounds”, each 3 minutes long. At the

beginning of a round, each of the 12 players

received a fresh supply of 13 units to buy or to sell

during that round, according to their role. At the end

of the round the unused units were discarded,

without any profit or loss for the traders. Players had

to trade their units sequentially, and the sequence of

their limit prices was arranged in an arithmetic

progression. Only 3 “generator” sequences were

actually used to produce the prices for all the

players: a human and his/her robot counterpart had
the same limit prices; and buyers and sellers shared
the same values except for the order, which was
increasing for sellers and decreasing for buyers. The

progressions had the same slope, and they were

chosen so that each player had approximately the

same maximum theoretical surplus in a given round.

In line with (Das et al., 2001), we introduced market

shocks by periodically altering the limit prices,
adding a constant to them or subtracting it from them
every 2 rounds. Thus, a 30-minute simulation
consisted of 5 consecutive trading phases, each at a
different equilibrium price.
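The construction of the limit-price schedule can be illustrated with a small Python sketch. The starting price, step and shock offsets below are hypothetical values chosen for the example. Only the structure (13 units per round in arithmetic progression, decreasing for buyers and increasing for sellers, with a constant shift applied every 2 rounds) follows the description above.

```python
def limit_prices(first_price, step, units=13, is_buyer=True):
    """Arithmetic progression of limit prices for one trader and one round.

    Assumes step > 0. Sellers receive the values in increasing order,
    buyers receive the same values in decreasing order.
    """
    ascending = [first_price + step * k for k in range(units)]
    return ascending[::-1] if is_buyer else ascending


def shocked_schedule(base_prices, offsets, rounds_per_phase=2):
    """Return one list of limit prices per round; offsets[k] shifts phase k."""
    schedule = []
    for offset in offsets:
        for _ in range(rounds_per_phase):
            schedule.append([p + offset for p in base_prices])
    return schedule


# Hypothetical generator sequence shared by a buyer and a seller.
buyer_base = limit_prices(100, 5, is_buyer=True)
seller_base = limit_prices(100, 5, is_buyer=False)

# Five phases of two rounds each, giving the 10 rounds of one experiment.
seller_rounds = shocked_schedule(seller_base, offsets=[0, 20, -10, 30, 0])
```

Shifting every limit price by the same constant moves the supply and demand curves together, so the equilibrium price moves by that constant while each trader's maximum theoretical surplus is unchanged.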

6 EXPERIMENTAL RESULTS

6.1 Agents vs. Humans

The results of the four agent-human experiments,
summarised in Table 1, present several significant
findings, all of which are in line with (Das et al.,
2001).

Table 1: Summary of the four agent-human experiments. For each experiment, the table displays: the strategy employed by all six agents; the percentage of trades made between two Agents, an Agent and a Human, and two Humans; the average efficiency of Agents and Humans; the percentage difference between Agents surplus and Humans surplus; the market efficiency and the profit dispersion. The mean maximum theoretical profit per trader per simulation is 2107. Lower profit dispersion and higher mean efficiency values are better.

| Experiment ID | Strategy | Trades A-A | Trades A-H | Trades H-H | Eff(A) | Eff(H) | Δ Profit (A-H) | Market Eff | Market Profit Disp |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| UoB01 | ZIP | 35% | 35% | 30% | 1.010 | 0.965 | 5% | 0.987 | 536 |
| UoB04 | ZIP | 39% | 30% | 32% | 1.037 | 0.931 | 11% | 0.984 | 468 |
| UoB05 | GDX | 36% | 40% | 24% | 1.055 | 0.789 | 36% | 0.923 | 707 |
| UoB06 | GDX | 33% | 44% | 22% | 1.074 | 0.809 | 35% | 0.943 | 704 |

First, the agents as a group consistently

outperformed the humans in all four experiments:

the total surplus extracted from the market by the

agents was on average ~22% more than the total

surplus extracted by their human counterparts. Also,
the efficiency achieved by the agents is consistently
above 100%, which implies that the agents earned more
than their theoretical equilibrium profit by exploiting human flaws.

Second, there was a substantial interaction

between agents and humans: on average, ~37% of

the trades happened between an agent and a human,

which confirms that the humans as a group were

well integrated in the mixed humans-agents market.

Third, we found that for each experiment, either

all the buyers (but one) did better than all the sellers,

or vice versa. Because this pattern was found neither

in the numerous robot vs. robot experiments we ran

under identical conditions, nor in the many human

vs. human trials documented in (Smith, 1962), we

speculate that this asymmetry is due to the

heterogeneous nature of our market.

Finally, our analysis shows that although GDX

agents as a group achieve higher values of allocative

efficiency than ZIP agents when competing against

humans, both the overall market efficiency and the

profit dispersion values are better for ZIP.
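The averages quoted above can be checked directly against the rows of Table 1. The following Python sketch transcribes the relevant columns and recomputes the means. The field names and the helper `mean_of` are hypothetical, introduced only for this check.

```python
from statistics import mean

# Selected columns transcribed from Table 1 (percentages as whole numbers).
TABLE_1 = [
    {"id": "UoB01", "strategy": "ZIP", "a_h": 35, "eff_a": 1.010,
     "delta": 5, "market_eff": 0.987, "disp": 536},
    {"id": "UoB04", "strategy": "ZIP", "a_h": 30, "eff_a": 1.037,
     "delta": 11, "market_eff": 0.984, "disp": 468},
    {"id": "UoB05", "strategy": "GDX", "a_h": 40, "eff_a": 1.055,
     "delta": 36, "market_eff": 0.923, "disp": 707},
    {"id": "UoB06", "strategy": "GDX", "a_h": 44, "eff_a": 1.074,
     "delta": 35, "market_eff": 0.943, "disp": 704},
]


def mean_of(field, strategy=None):
    """Mean of one column, optionally restricted to a single strategy."""
    rows = [r for r in TABLE_1 if strategy is None or r["strategy"] == strategy]
    return mean(r[field] for r in rows)


mean_delta = mean_of("delta")        # mean surplus difference, in percent
mean_a_h = mean_of("a_h")            # mean share of agent-human trades, in percent
agent_eff = {s: mean_of("eff_a", s) for s in ("ZIP", "GDX")}
market_eff = {s: mean_of("market_eff", s) for s in ("ZIP", "GDX")}
dispersion = {s: mean_of("disp", s) for s in ("ZIP", "GDX")}
```

The means come out at 21.75% and 37.25%, matching the ~22% and ~37% reported in the text. The per-strategy means show GDX ahead on agent efficiency, and ZIP ahead on market efficiency and (lower) profit dispersion.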

6.1.1 GDX Agents vs. Humans

The trade price time series of the human vs. GDX

experiment UoB06 is shown in Figure 2. We will

refer to this specific experiment, although the

observations we made on UoB05 are very similar.

The dashed vertical lines separate the 10 trading

periods, whereas the dashed horizontal lines mark

the theoretical equilibrium price $p^*$. The time series
exhibits a recurring pattern of convergence towards
a price that is often somewhat lower than $p^*$. Most of
the trades were made at lower prices than $p^*$, since

buyers closed deals at reasonably lower prices than

their limit prices, and therefore kept a higher profit
margin than their seller counterparties. This is

confirmed by the fact that the five best traders in

terms of mean allocative efficiency are buyers, for

both the human vs. GDX experiments.

A more detailed analysis of the efficiency per

trading period reveals that the discrepancy between

buyers and sellers is accentuated by a rise in
the equilibrium price (e.g. between trading periods 6
and 7), and attenuated by a drop (e.g. between
trading periods 2 and 3, and 8 and 9). We explain
this by looking at the first few trades made in the
trading period following the market shock: their
prices tend to remain close to the previous value of
$p^*$, resulting in better opportunities for buyers after
a rise of $p^*$ and for sellers after a drop.
This suggests that the GDX strategy

requires a few samples before it can adapt to the new

market condition.

6.1.2 ZIP Agents vs. Humans

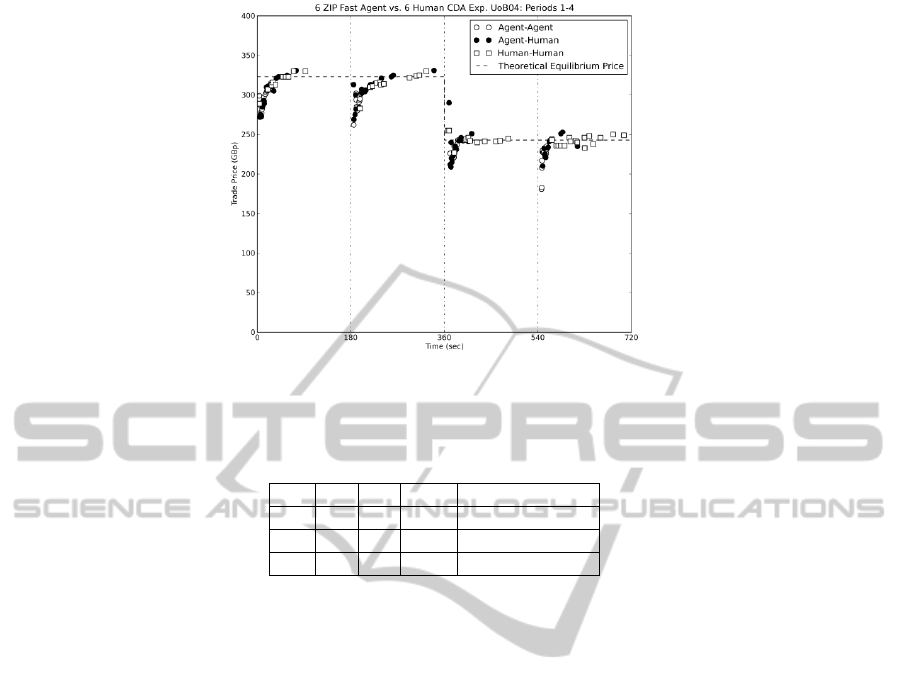

Figure 3 illustrates the first four trading periods of

experiment UoB04, which are quite representative

of the two human vs. ZIP experiments we ran. By

visual inspection, it can be verified that human-ZIP

markets display better capabilities of tracking the

equilibrium price, as convergence to $p^*$ is more

pronounced than in human-GDX markets. It is clear

that the patterns displayed by this time series are

quite different from those in Figure 2. It can be

noted that, qualitatively, the shape of the time series

is reasonably consistent across the trading periods,

and that the curve presents a higher price excursion

in a shorter time than GDX before converging to $p^*$.

We ran a detailed quantitative analysis of the time

series to confirm this, and found that the mean trade

time relative to the trading period is ~45 seconds for

ZIP-humans and ~69 seconds for GDX-humans

markets. Moreover, by isolating the trades between
two agents (A-A), between two humans (H-H), and
between a human and an agent (A-H), we found that
the mean trade time of the three types of trades is
consistently higher in GDX than in ZIP. Also, the
mean trade time of A-A trades is the smallest and
that of H-H trades is the largest consistently across
trading periods in the experiments involving ZIP,
while this relationship does not hold for some
trading periods of experiments UoB05 and UoB06.

Figure 3: The first four trading periods of experiment UoB04.

Table 2: Summary of three sets of robot vs. robot experiments between GDX & ZIP agents. For each set of experiments, the table presents: the type of experiment, the value of the discount parameter γ, the number of experiments won by the two agents, and the mean number of rounds per experiments won by GDX (±1 s.d.).

| Type | γ | Experiments won by ZIP | Experiments won by GDX | GDX rounds won |
| --- | --- | --- | --- | --- |
| DES | 0.0 | 46 | 1011 | 8.567 (± 1.817) |
| DES | 0.9 | 14 | 985 | 9.094 (± 1.273) |
| RT | 0.9 | 316 | 654 | 5.736 (± 1.518) |

6.2 Robots vs. Robots

In order to further benchmark ZIP and GDX, we ran

three sets of experiments between the two agents, in

a pure robot vs. robot market. The results are

outlined in Table 2.

Qualitatively in line with (Tesauro & Bredin,

2002), GDX clearly outperforms ZIP in discrete

event simulations, both when run in optimal mode (γ

= 0.9) and when degenerated to GD (γ = 0); in

particular, the performance of GDX improves

slightly for γ = 0.9. However, the win-lose ratio

changes radically when the experiment is run in

Real-Time (RT) mode, that is using the same set-up

described for human vs. robot markets. This is also

confirmed by the values of the mean number of

rounds won by GDX.
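The change in the win-lose ratio can be made explicit by converting the counts in Table 2 into win shares. The following Python sketch does so under the assumption that the two win columns account for every experiment in a set. The field names and the helper `gdx_win_share` are hypothetical.

```python
# Rows transcribed from Table 2.
TABLE_2 = [
    {"type": "DES", "gamma": 0.0, "zip_wins": 46, "gdx_wins": 1011},
    {"type": "DES", "gamma": 0.9, "zip_wins": 14, "gdx_wins": 985},
    {"type": "RT", "gamma": 0.9, "zip_wins": 316, "gdx_wins": 654},
]


def gdx_win_share(row):
    """Fraction of experiments in a set that were won by GDX."""
    return row["gdx_wins"] / (row["gdx_wins"] + row["zip_wins"])


shares = [round(gdx_win_share(row), 3) for row in TABLE_2]
```

Under that assumption GDX wins roughly 96% and 99% of the two DES sets but only about 67% of the RT set, which is the radical change referred to above.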

We speculate that the difference between the

DES and the RT results is mostly due to the very

nature of the two simulators: DES simulations are

essentially single-threaded, and the agent selected

for the current move has a virtually unlimited time to

perform its calculation before ending the move.

Conversely, each agent is represented by (at least)

one thread in a RT simulation: agents are woken up

asynchronously, therefore two or more of them may

happen to operate “simultaneously” (compatibly

with the software and hardware scheduling policies

in force on the system running the simulation). This

discrepancy is particularly relevant when comparing

GDX and ZIP because the calculations performed by

the latter are much more lightweight than those

performed by the former: while the GDX strategy

may fare overwhelmingly better than ZIP if it is

given all the required time to execute the pricing

calculations, the difference between the performance

of the two is dramatically reduced when time is

critical, and the fastest agent to hit a price makes

more profit.

7 DISCUSSION & CONCLUSIONS

We were pleased to employ our low-cost, portable

experimental economics laboratory to, for the first

time ever, pit humans against what is known to be

the most evolved version of the “GD” class of

algorithms. The results we obtained are, to the best
of our knowledge, unique, and they present several

noteworthy characteristics.

The application of Dynamic Programming

techniques indeed proves its validity in terms of

overall efficiency achieved by the agents as a group,

against both human and automated rivals. The

advantage of GDX over its predecessor is also

confirmed by comparing our results to those realised

in the IBM study, which present consistently lower

values of mean efficiency of the trading agents.

On the other hand, human-ZIP markets certainly

display better overall performance, in terms of

market efficiency and profit dispersion. This

suggests that ZIP agents would be better companions

for human traders in a CDA-regulated market where

the objective is to maximise the whole profit

extracted, whereas GDX would be a better choice in

a scenario where humans and agents are pitted

against each other as two separate teams, each one

trying to exploit their rivals’ weaknesses to

maximise their own profit.

Moreover, we note here that several features of

the market dynamics observed in our experiments

deserve further investigation: the curved price

trajectories and their convergence to the theoretical

equilibrium price; the distinct separation between

buyers and sellers in terms of overall performance;

and the effect of timing constraints on the

algorithmic traders.

Ultimately, it would be interesting to test our
algorithmic traders in two additional scenarios,
subject to the time and cost constraints of
running the experiments. In the first, the period of
the agents' interventions would be forced to be comparable
to the estimated reaction time of the human traders:
this would reveal to what extent the superiority of
the agents is bound to their speed. In the second,
professional traders would be used instead
of amateurs, which would show whether solid
trading skills in the global financial markets make
any difference in a competition against automated
traders.

ACKNOWLEDGEMENTS

The authors are very thankful to the UK Engineering

and Physical Sciences Research Council (EPSRC:

http://www.epsrc.ac.uk) for funding the equipment

used for this research, and to the many students and

staff at University of Bristol who took part in the

experiments. Both authors are members of, and

receive financial support from, the UK Large-Scale

Complex IT Systems (LSCITS) Initiative. Further

details at http://www.lscits.org.

REFERENCES

Cliff, D., 2009. ZIP60: Further explorations in the

evolutionary design of online auction market

mechanisms. IEEE Transactions on Evolutionary

Computation, 13(1):3-18.

Cliff, D., Bruten, J., 1997. Minimal Intelligence Agents

for Bargaining Behaviours in Market-Based

Environments. Technical report, HPL-97-91, Hewlett

Packard Labs.

Das, R., Hanson, J. E., Kephart, J. O., Tesauro, G.,
2001. Agent-Human Interactions in the Continuous
Double Auction. In: Proc. 17th International Joint
Conference on Artificial Intelligence, pp. 1169-1176.

Davis, D. D., Holt, C. A., 1993. Experimental Economics.
Princeton University Press.

De Luca, M. Forthcoming 2011. OpEx: An Open
Exchange Experimental Economics Laboratory in a
Box. Technical Report in preparation.

Gjerstad, S., Dickhaut, J., 1998. Price Formation in Double
Auctions. In: Games and Economic Behavior, vol.
22, pp. 1-29.

Graham-Rowe, D., 2001. Bots knock the socks off city
slickers. New Scientist, 11 August 2001.

Kagel, J., Roth, A., 1997. The Handbook of Experimental
Economics. Princeton University Press.

Preist, C., Van Tol, M., 1998. Adaptive agents in a
persistent shout double auction. In: Proc. of the First
International Conference on Information and
Computation Economies, pp. 11-18, ACM Press.

Tesauro, G., Das, R., 2001. High-performance bidding
agents for the continuous double auction. In: Proc.
Third ACM Conference on Electronic Commerce, pp.
206-209.

Tesauro, G., Bredin, J. L., 2002. Strategic Sequential
Bidding in Auctions using Dynamic Programming. In:
Proc. 1st International Joint Conf. on Autonomous
Agents and Multiagent Systems, pp. 591-598.

Smith, V., 1962. An Experimental Study of Competitive
Market Behavior. In: Journal of Political Economy,
vol. 70, pp. 111-137.
