LASER BASED TELEROBOTIC CONTROL FOR ASSISTING

PERSONS WITH DISABILITIES PERFORM ACTIVITIES OF

DAILY LIVING

Karan Khokar, Redwan Alqasemi and Rajiv Dubey

Department of Mechanical Engineering, University of South Florida, 4202 E. Fowler Ave., Tampa, Florida, U.S.A.

Keywords: Telerobotics, Rehabilitation Robotics, Human-Machine Interaction.

Abstract: In this paper we demonstrate an innovative use of range information from a laser sensor mounted on the end-effector of a remote robot manipulator to assist persons with disabilities in carrying out ADL (Activities of Daily Living) tasks in unstructured environments. Laser range data is used to determine goals and to identify targets and via points, enabling autonomous execution of trajectories. The human operator performs minimal teleoperation and is primarily involved in higher level decision making. We hypothesize that laser based assistance improves task performance in terms of time and accuracy and also reduces the cognitive load on the user executing the task. Tests on ten healthy human subjects executing a remote manipulation task confirm the hypothesis.

1 INTRODUCTION

Robotic devices have been used to enable

physically disabled individuals to execute ADL

tasks (Bolmsjo, Neveryd and Eftring, 1995).

However, teleoperation of a remote manipulator puts

a lot of cognitive load on the operator (Bolmsjo et

al., 1995). Our previous work at the Rehabilitation

Robotics Laboratory at University of South Florida

has focused on augmenting the performance of

motion-impaired users in job-related tasks using

scaled teleoperation and haptics (Pernalete, Yu,

Dubey and Moreno, 2002) and assistance based on

real-time environmental information and user

intention (Yu, Alqasemi, Dubey and Pernalete, 2005). In this work, laser data is used to determine goal points and to identify targets and via points in unstructured environments. This enables autonomous execution of certain sub-tasks under human supervisory control, thus providing assistance to the human. The human remains in the loop to make high level decisions, such as pointing the laser to critical points in the remote environment by teleoperating the arm.

Hasegawa, Suehiro and Takase (1991) made use

of the laser range information to compute and record

3D co-ordinates of points on objects in the

environment, to enable autonomous task execution.

Takahashi and Yashige (2000) presented a simple

and easy to use laser based robot positioning system

to assist the elderly in doing daily pick-and-place

activities. Nguyen, Anderson, Trevor, Jain, Xu and

Kemp (2008) made use of an arrangement consisting

of a laser pointer, a monochrome camera, a color

filter and a stereo camera pair to estimate the 3D co-

ordinates of a point in the environment and thereby

fetch objects in the environment designated with the

laser pointer.

2 LASER ASSISTED CONTROL

We used a PUMA 560 as the remote manipulator and a Phantom Omni haptic device as the master. By means of teleoperation, the human user points the laser to critical points in the environment. These critical points could be goal points, objects or planar surfaces of interest. The laser sensor is mounted on the end-effector of the PUMA and has the same orientation as the end-effector. The laser beam direction is thus always parallel to the z axis of the end-effector.

2.1 Laser Assisted Target Position

Determination and Autonomous

Trajectory Execution

The user points the laser to a specific target and locks the target by ceasing to teleoperate. When the user then issues a command by pressing a keyboard key, the system generates a linear trajectory from the current PUMA end-effector location to the target point determined by the laser, and executes it.

Khokar K., Alqasemi R. and Dubey R. (2010). LASER BASED TELEROBOTIC CONTROL FOR ASSISTING PERSONS WITH DISABILITIES PERFORM ACTIVITIES OF DAILY LIVING. In Proceedings of the 7th International Conference on Informatics in Control, Automation and Robotics, pages 446-449. DOI: 10.5220/0003004804460449. Copyright © SciTePress

For generating a linear trajectory, the initial and final transformation matrices are required. The initial point transformation matrix is determined from the PUMA forward kinematics, and that for the final point is determined using the laser range data and Equation (1) (see Fig. 1).

$${}^{B}_{O}T = {}^{B}_{E}T \, {}^{E}_{L}T \, {}^{L}_{O}T \qquad (1)$$
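As a rough illustration of how Equation (1) could be evaluated, the sketch below chains 4x4 homogeneous transforms in plain Python. The reading of the frame labels (B as robot base, E as end-effector, L as laser, O as the target point), the laser mounting offset and all numeric values are assumptions made for illustration only. The one property taken from the text is that the laser beam is parallel to the end-effector z axis, so the target is placed at the measured range along the laser z axis.

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    """Homogeneous transform with identity rotation and the given offset."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def target_in_base(T_BE, T_EL, laser_range):
    """Chain base->end-effector, end-effector->laser and laser->target."""
    # The target lies on the beam, i.e. along the laser z axis at the measured range.
    T_LO = translation(0.0, 0.0, laser_range)
    return matmul4(matmul4(T_BE, T_EL), T_LO)


# Hypothetical pose: end-effector z axis pointing straight down in the base frame
# (a 180 degree rotation about x), as would come from forward kinematics.
T_BE = [[1.0, 0.0, 0.0, 0.40],
        [0.0, -1.0, 0.0, 0.10],
        [0.0, 0.0, -1.0, 0.50],
        [0.0, 0.0, 0.0, 1.0]]
T_EL = translation(0.0, 0.03, 0.0)   # assumed laser mounting offset (metres)
T_BO = target_in_base(T_BE, T_EL, laser_range=0.30)
target_position = [T_BO[i][3] for i in range(3)]
print(target_position)
```

The translation column of the resulting matrix gives the target position in the base frame, which would serve as the end point of the linear trajectory.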

2.2 Laser Assisted Autonomous

Surface Alignment

For certain ADL tasks determination of orientation

of the planar surface associated with the target is

necessary to orient the end-effector in such a way

that it is aligned with the perpendicular to the

surface. This alignment has been implemented as an

autonomous function using laser data and it helps

the user to manipulate a target from a convenient

angle in teleoperation.

Figure 1: Autonomous trajectory generation concept.

The user points the laser to three distinct points on the planar surface to record their co-ordinates. When the user activates the autonomous alignment algorithm, the transformation matrix required for end-effector alignment is computed online and the end-effector aligns with the surface. The z-axis of the rotation part of the transformation matrix is the unit vector along the normal to the surface, which is computed from the cross product of the vectors connecting the three points P1, P2 and P3. The x- and y-axes are then obtained using the right-hand rule.
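The alignment computation can be sketched as follows in plain Python. This is a minimal illustration, not our implementation: the choice of the x-axis along the vector from P1 to P2 is an assumption (the text only states that x and y follow from the right-hand rule), and the sign of the normal depends on the order in which the three points are recorded, so a real system may need to flip it so that the gripper faces the surface.

```python
import math


def sub(a, b):
    """Component-wise difference a - b of two 3D points."""
    return [a[i] - b[i] for i in range(3)]


def cross(a, b):
    """Cross product of two 3D vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def norm(v):
    return math.sqrt(sum(c * c for c in v))


def normalize(v):
    n = norm(v)
    return [c / n for c in v]


def surface_rotation(p1, p2, p3):
    """Return a 3x3 rotation matrix (rows) whose columns are the x, y, z axes,
    with z along the normal of the plane through p1, p2 and p3."""
    v1 = sub(p2, p1)
    v2 = sub(p3, p1)
    n = cross(v1, v2)
    if norm(n) < 1e-9:
        raise ValueError("the three points are collinear or coincident")
    z = normalize(n)
    x = normalize(v1)      # assumption: x-axis along P1 -> P2, which lies in the plane
    y = cross(z, x)        # right-hand rule completes the frame
    return [[x[i], y[i], z[i]] for i in range(3)]


# Hypothetical points recorded on a tilted surface.
R = surface_rotation((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 1.0))
print(R)
```

The collinearity check reflects the fact that three points only define a plane when they do not lie on a single line.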

3 APPLICATION OF LASER

BASED CONCEPT IN TASK

EXECUTION

Fig. 2 shows the sequence of steps in executing a pick and place task using laser assistance. The user starts by locating the destination point, pointing the laser in teleoperation and commanding the system to record the co-ordinates of that point (Fig. 2(a)). Next, the user identifies three arbitrary points on the planar surface with the laser (Fig. 2(b)). After this, the user locates the target object with the laser and commands the execution of the autonomous trajectory (Fig. 2(c)). When the arm stops near the target (Fig. 2(d)), the user commands autonomous alignment with the planar surface (Fig. 2(e)). With the arm now in a convenient configuration for grasping, the user makes fine adjustments in teleoperation to position the gripper exactly over the target and grasps it.

Next the user commands the system to autonomously execute a path from the current location to the destination point while avoiding the obstacle. The path is executed in three linear segments: the first from the current PUMA location to a point vertically above it, so that the arm is clear of the tallest obstacle (Fig. 2(e) and 2(f)); the second in the horizontal plane from this point to a point vertically above the destination (Fig. 2(f) and 2(g)); and the third to a point just above the destination (Fig. 2(g) and 2(h)). The initial and final transformation matrices for each segment are computed by the system from forward kinematics, the height of the tallest obstacle and the destination point co-ordinates. The height of the tallest obstacle is determined by pointing the laser to the top of the obstacle and recording the co-ordinates.
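The three-segment path described above can be sketched as follows. This is a position-only illustration under stated assumptions: the base frame z axis is taken as vertical, the end-effector orientation is held fixed, and the `clearance` and `hover` margins as well as all numeric values are hypothetical parameters that do not come from our experiments.

```python
def three_segment_waypoints(current, destination, obstacle_top_z,
                            clearance=0.05, hover=0.02):
    """Return the four corner points of the lift / traverse / descend path."""
    # Height at which the horizontal segment clears the tallest obstacle.
    safe_z = max(obstacle_top_z + clearance, current[2], destination[2] + hover)
    lift = (current[0], current[1], safe_z)            # vertically above the start
    over = (destination[0], destination[1], safe_z)    # vertically above the destination
    above_dest = (destination[0], destination[1], destination[2] + hover)
    return [tuple(current), lift, over, above_dest]


def interpolate(p, q, steps):
    """Evenly spaced points on the straight line from p to q, end points included."""
    return [tuple(p[i] + (q[i] - p[i]) * k / steps for i in range(3))
            for k in range(steps + 1)]


def sample_path(waypoints, steps_per_segment=10):
    """Concatenate the linear segments without repeating the shared corner points."""
    path = [waypoints[0]]
    for p, q in zip(waypoints, waypoints[1:]):
        path.extend(interpolate(p, q, steps_per_segment)[1:])
    return path


# Hypothetical co-ordinates in metres, as if recorded with the laser.
current = (0.40, 0.10, 0.10)
destination = (0.10, -0.30, 0.00)
waypoints = three_segment_waypoints(current, destination, obstacle_top_z=0.25)
path = sample_path(waypoints)
print(len(path), path[-1])
```

Each sampled point would then be converted to a full transformation matrix and passed to the inverse kinematics of the arm.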

After the arm has traversed the path, the user makes fine movements in teleoperation to precisely locate the target over the destination and places the target. Thus the human acts only as a supervisor and is involved in minimal teleoperation. The trajectories are generated online and executed autonomously. Moreover, the interface, which consists of pointing with the laser and pressing keyboard keys, is simple to use. In this way we aim to relieve the human of much of the cognitive load in task execution. The destination point, target and surface could be located anywhere in the environment, and thus the method can be used for manipulation in unstructured environments.

Figure 2: Laser based features in pick and place task execution: (a) Pointing to destination point for path planning (b) Pointing to platform points for planar surface orientation determination (c) Pointing to target (cup) for autonomous trajectory generation (d) End of autonomous trajectory (e) Autonomous end-effector alignment (f) Autonomous path – end of first segment (g) Autonomous path – end of second segment (h) Autonomous path – end of third segment.

4 TESTS AND RESULTS

The test bed consists of PUMA and Omni

manipulators (Fig. 3). A SICK DT60 laser range

finder and a Logitech MT Orbit CCD Camera were

mounted on the PUMA end effector.

For testing, 10 healthy subjects were asked to perform a pick and place task three times in each of two modes: the unassisted teleoperation mode and the laser assisted mode. For each run, the time taken to complete the task, the end-effector Cartesian co-ordinates and the user experience were recorded. Before starting the tests, the subjects were given sufficient time to acclimatize to the system. In general, each subject was given 5 to 6 practice trials before testing.

Figure 3: Test bed of PUMA and Omni manipulators.

The experimental set up is shown in Fig. 4. The

cup is the target which is to be picked up from the

location shown, and placed on the orange sticky

shown to the left. The folder simulates an obstacle.

The task here is to command the arm from a ‘ready’

position towards the target, grasp the target and

place it over the destination point while avoiding the

obstacle.

Figure 4: Experimental set-up for pick and place task.

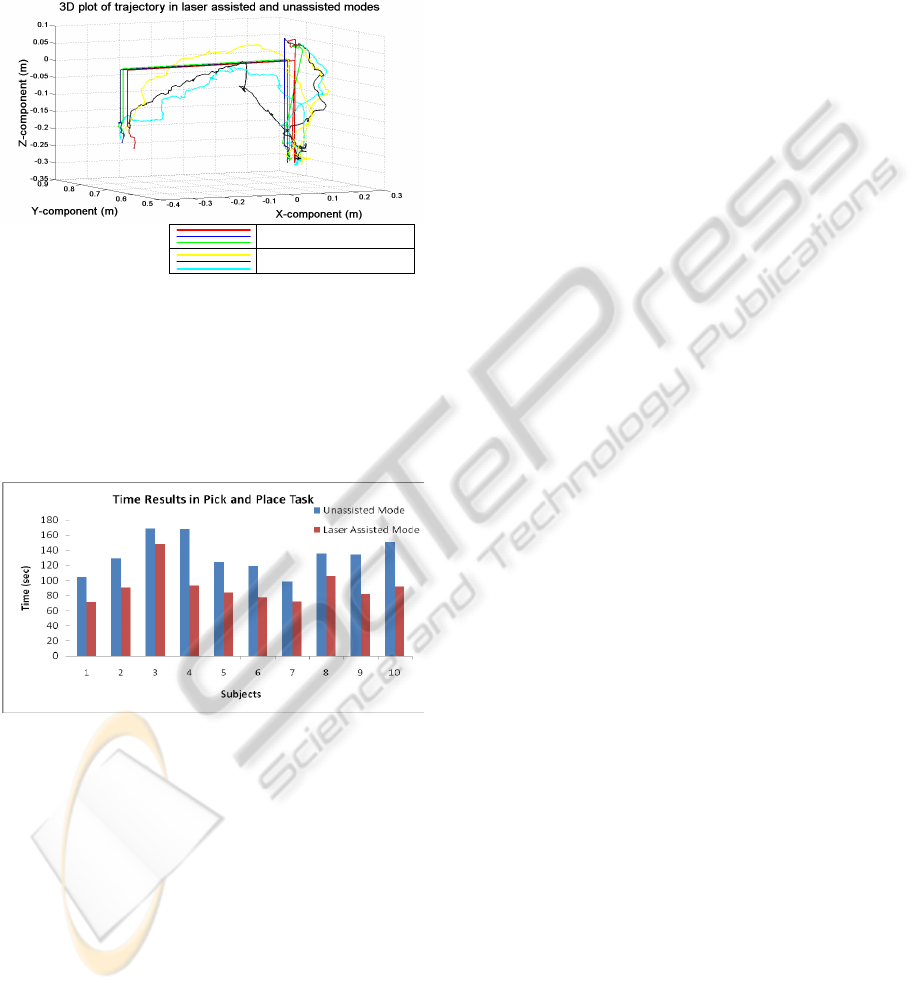

The task performance is compared between the two modes in terms of accuracy and time. Accuracy is presented in the form of the path traversed by the end-effector in the two modes. The plot for only one subject is shown in Fig. 5. We observe that the motion generated by the laser assisted mode was continuous and accurate. On the other hand, the motion in the unassisted mode was discontinuous and the arm often deviated from the path. The subjects had tremendous difficulties in teleoperating the PUMA without any assistance. The loops around the pick-up point are due to subjects repeatedly trying to orient the gripper properly so that it is in a convenient configuration for grasping. Orienting the arm properly was one of the most challenging activities the subjects faced.

Figure 5: Accuracy in path traversal for laser assisted and unassisted modes. Panels: Laser Assisted Mode; Unassisted Mode.

The average time per subject per mode was

computed and is presented in the form of plots in

Fig. 6. We observe that each subject spent more time

to complete the task in the unassisted mode than in

the laser assisted mode.

Figure 6: Time plots in executing pick and place task.

The user experience in executing the task in the two modes was also noted. The users preferred the laser assisted mode because generating task plans by picking points with the laser was easier for them, and the robot then executed the task. When teleoperating without assistance, they experienced a high mental load.

5 CONCLUSIONS

Our hypothesis that the laser assisted telerobotic

control methodology of task execution would

improve the task performance and reduce the mental

load on the users has been validated by tests on

healthy subjects on a pick and place task. The task

performance in terms of time and accuracy improved

and the subjects were overwhelmingly in favour of

using the laser assisted mode in executing the task as

they experienced less cognitive load. With the

results obtained we believe that this telerobotic

system would make it possible for persons with

disabilities to execute ADL tasks with much greater

ease. Next we intend to test the method on persons

with disabilities and for a variety of ADLs.

REFERENCES

Bolmsjo, G., Neveryd, H. and Eftring, H. (1995). Robotics in Rehabilitation. IEEE Transactions on Rehabilitation Engineering. 3(1), 77-83.

Hasegawa, T., Suehiro, T. and Takase, K. (1991). A Robot System for Unstructured Environments Based on an Environment Model and Manipulation Skills. IEEE International Conference on Robotics and Automation (Vol. 1, pp. 916-923).

Nguyen, H., Anderson, C., Trevor, A., Jain, A., Xu, Z. and

Kemp, C. (2008). El-E: An Assistive Robot that

Fetches Objects from Flat Surfaces. The Robotic

Helpers Workshop at HRI.

Pernalete, N., Yu, W., Dubey, R. and Moreno, W. (2002).

Development of a Robotic Haptic Interface to Assist

the Performance of Vocational Tasks by People with

Disability. IEEE International Conference on Robotics

and Automation. (Vol. 2, pp. 1269-1274).

Takahashi, Y. and Yashige, M. (2000). Robotic

manipulator operated by human interface with

positioning control using laser pointer. IEEE 26th

Annual Conference of the Industrial Electronics

Society (Vol. 1, pp. 608-613).

Tarn, T., Xi, N. and Guo, C. (1996). Task-Oriented

Human and Machine Co-Operation in Telerobotic

Systems. Annual Reviews in Control. (Vol. 20, pp.

173-178).

Yu, W., Alqasemi, R., Dubey, R. and Pernalete, N.

(2005). Telemanipulation Assistance based on Motion

Intention Recognition. IEEE International Conference

on Robotics and Automation. (pp. 1121-1126).
