TOWARDS PLUGGABLE USER INTERFACES FOR PEOPLE WITH COGNITIVE DISABILITIES∗

Jochen Frey, Christian Schulz, Robert Neßelrath, Verena Stein and Jan Alexandersson

Deutsches Forschungszentrum für Künstliche Intelligenz GmbH, Stuhlsatzenhausweg 3, 66123 Saarbrücken, Germany

Keywords:

Intelligent user interfaces, Ambient assisted living, Multimodal dialogue systems, Pluggable user interfaces, Task-based user interfaces.

Abstract:

We present two user interfaces, a multimodal dialogue system and a task-based calendar, which assist people with mild cognitive disabilities affecting their concentration and memory. A new middleware based upon a new open industrial standard, ISO/IEC 24752 Universal Remote Console (URC), allows access to any networked service or appliance, including home entertainment and household devices, via abstract user interfaces. This architecture promotes the concept of pluggable user interfaces, that is, abstract user interfaces that are rendered on a concrete controller.

1 INTRODUCTION

In a modern home environment, handling complex media devices, e. g., TV, radio or DVD player, but also household devices, e. g., washing machine, fridge, freezer or oven, is only possible through proprietary user interfaces, which are typically remote controls or built-in control panels. In a considerable number of cases, even mainstream users have problems executing seemingly moderate tasks, such as recording a movie. In most cases, the in-house solution offers little support in terms of meaningful and user-friendly user interface design. The task of designing intuitive user interfaces becomes even more important if the target group includes people with cognitive disabilities. Whether new technologies remain beyond their grasp depends crucially on how much or how little attention has been paid to user interface design.

A possible solution to this problem is to create one single user interface, customized for the target group, that spans all appliances at home in a homogeneous way. Such a user interface should be intuitive and should not overwhelm users, e. g., with unnecessary features. The target users focused on in this work need support for recurring tasks via home automation.

∗ This work has been funded by the European Commission under the grant FP6-033502 (i2home). The opinions herein are those of the authors and not necessarily those of the funding agency.

These requirements, among others, are addressed by the EU-funded project i2home (www.i2home.org), where the newly published ISO standard Universal Remote Console (URC) (Zimmermann and Vanderheiden, 2007) serves as the basis of a middleware, the Universal Control Hub (UCH), for interacting with a smart home. The UCH exposes an abstract description of each appliance or service (target) in the form of a "contract" (socket description). All targets are thus exposed in a coherent way, which enables a user interface designer to focus on the interaction concepts.
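A socket description can be pictured as a typed contract that every controller reads in the same way. The following Python sketch is only an illustration under our own assumptions: the classes `Variable`, `Command` and `SocketDescription`, the method `accepts` and the example TV socket are hypothetical and do not reproduce the ISO/IEC 24752 schema, which defines socket descriptions as XML documents.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Variable:
    """One piece of abstract target state, e.g. a TV's power or channel."""
    name: str
    kind: type
    allowed: List[Any] = field(default_factory=list)  # empty list: any value of `kind`


@dataclass
class Command:
    """An abstract action the target offers, independent of any concrete button."""
    name: str


@dataclass
class SocketDescription:
    """Hypothetical in-memory stand-in for a URC socket description ("contract")."""
    target: str
    variables: Dict[str, Variable]
    commands: Dict[str, Command]

    def accepts(self, name: str, value: Any) -> bool:
        """Check whether a controller may set `name` to `value` under this contract."""
        var = self.variables.get(name)
        if var is None or not isinstance(value, var.kind):
            return False
        return not var.allowed or value in var.allowed


# A hypothetical TV target as any controller would see it through the hub.
tv_socket = SocketDescription(
    target="tv",
    variables={
        "power": Variable("power", bool),
        "channel": Variable("channel", int, list(range(1, 100))),
    },
    commands={"next_channel": Command("next_channel")},
)
```

Because every controller validates against the same contract, a touch screen and a speech interface cannot disagree about which channels exist or which commands a target offers.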

2 USER-CENTRED DESIGN

The i2home project brings together technical and user partners from different European countries and aims to open up access technologies for home appliances to persons with special needs. A major aspect of this is the design of standardized access strategies that are also applicable to domains beyond scenarios linked to the home environment. One outcome of the project is an accessible platform for abstract user interfaces that can be plugged (see section 3) and rendered independently.

By pursuing a user-centered design (UCD) ap-

proach, e. g., ISO 13407, the interaction is completely

based on real user needs meaning that different user

types are the driving force for the development of

technology. A key ingredient at this point is Cooper’s

persona approach (Cooper, 1999) describing differ-

ent users in various stages of the development pro-

428

Frey J., Schulz C., Neßelrath R., Stein V. and Alexandersson J. (2010).

TOWARDS PLUGGABLE USER INTERFACES FOR PEOPLE WITH COGNITIVE DISABILITIES.

In Proceedings of the Third International Conference on Health Informatics, pages 428-431

DOI: 10.5220/0002731404280431

Copyright

c

SciTePress

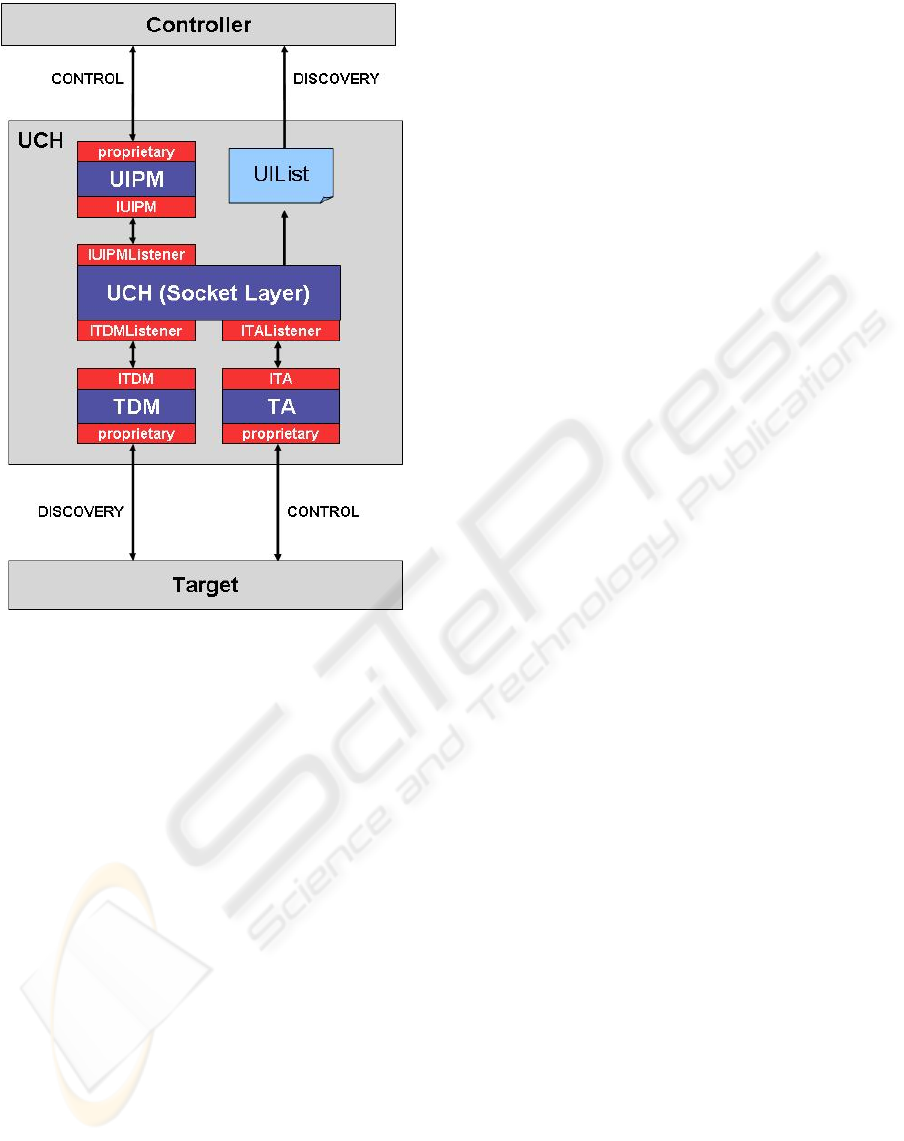

Figure 1: The Universal Control Hub architecture.

cess. On the basis of this approach several general

descriptions, personae and scenarios have been con-

structed bearing the typical characteristics of inter-

viewed participants (Buiza et al., 2007). User inter-

faces implemented for these personae are evaluated

and the results and experiences are fed into the re-

quirements phase again. This cycle has been executed

three times.

In this paper, we focus on 1) a multimodal dialogue system and 2) a task-based calendar, both designed jointly by the technical partner German Research Center for Artificial Intelligence (DFKI GmbH) and the user partner Swedish Institute of Assistive Technology (SIAT). Our target users are represented by the persona Emma, 28 years old, who lives with her boyfriend and, due to a car accident, suffers from concentration and memory problems. Emma is the synthesis of approximately 20 persons. Using her UI, she can interact with a number of targets in a coherent manner. Among the most prominent ones are consumer electronics, i. e., DVB-T in combination with Windows Media Center, kitchen appliances and a calendar/reminder. The selection of targets is derived from scenarios implementing realistic daily activities in Emma's life.

3 ARCHITECTURE: THE UNIVERSAL REMOTE CONSOLE

The i2home architecture is built upon a new series of industry standards (ISO/IEC 24752 Universal Remote Console and ANSI/CEA-2018 Task Model Description) for interfacing networked appliances by means of a Universal Remote Console (URC) (Zimmermann and Vanderheiden, 2007) and for adding task-based interaction support to the UIs, see (Rich, 2009). Its implementation is a middleware called the Universal Control Hub (UCH), which supports current prominent communication standards and allows for controlling multiple devices at the same time, see (Zimmermann and Vanderheiden, 2007). This enables scenarios such as leaving home: as a person leaves the house and locks the door, running appliances such as the TV, hood and oven should be turned off, the heating should, depending on the situation, be lowered, and the alarm system should be activated.
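The leaving-home scenario amounts to one trigger fanning out to several targets. A minimal sketch, assuming a hypothetical `Target` class with a plain key/value state and a hypothetical `leave_home` function; the variable names (`power`, `setpoint`, `armed`) and the 17 °C setback temperature are illustrative assumptions, not part of the UCH.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Target:
    """Hypothetical stand-in for a networked appliance reachable through the hub."""
    name: str
    state: Dict[str, object] = field(default_factory=dict)

    def set(self, variable: str, value: object) -> None:
        self.state[variable] = value


def leave_home(targets: Dict[str, Target], lower_heating: bool,
               setback_celsius: float = 17.0) -> None:
    """Hypothetical 'leaving home' routine, run when the front door is locked."""
    # Turn off running appliances that should not stay on in an empty home.
    for name in ("tv", "hood", "oven"):
        if name in targets:
            targets[name].set("power", False)
    # Lowering the heating depends on the situation (e.g. a short errand vs. a trip).
    if lower_heating and "heating" in targets:
        targets["heating"].set("setpoint", setback_celsius)
    # Finally, arm the alarm system.
    if "alarm" in targets:
        targets["alarm"].set("armed", True)
```

The point of the sketch is that the routine talks to every appliance through the same abstract interface, which is what controlling multiple devices at once through one hub makes possible.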

The UCH architecture is built around a hub, the UCH itself, a gateway that implements the URC standard and manages the communication among the following components: a Controller, that is, any device for rendering the abstract user interface, e. g., a TV, a touch screen or the smartphone presented in this paper; a Target, which is any networked device or service intended to be controlled or monitored, e. g., kitchen appliances, home entertainment or security devices; and, finally, a Resource Server, a global service for sharing user interfaces and other resources necessary for interacting with the targets.
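The mediating role of the hub can be sketched in a few lines. In this hypothetical Python model, `Hub` keeps each target's state, forwards a controller's `request` to the target and pushes the resulting change to every attached controller, while `ResourceServer` hands out UI resources such as labels. All names are our own; the real UCH supports several communication standards and implements the full URC standard, which this sketch does not attempt.

```python
from typing import Callable, Dict, List

# A controller is modelled as a callback that is told about state changes.
Listener = Callable[[str, str, object], None]


class ResourceServer:
    """Hypothetical global store of UI resources (e.g. labels) per target."""

    def __init__(self) -> None:
        self._resources: Dict[str, Dict[str, str]] = {}

    def publish(self, target: str, resources: Dict[str, str]) -> None:
        self._resources[target] = dict(resources)

    def fetch(self, target: str) -> Dict[str, str]:
        # Unknown targets simply have no shared resources yet.
        return dict(self._resources.get(target, {}))


class Hub:
    """Minimal gateway between controllers and targets (not the real UCH)."""

    def __init__(self) -> None:
        self.targets: Dict[str, Dict[str, object]] = {}
        self.controllers: List[Listener] = []

    def register_target(self, name: str, initial_state: Dict[str, object]) -> None:
        self.targets[name] = dict(initial_state)

    def attach(self, controller: Listener) -> None:
        self.controllers.append(controller)

    def detach(self, controller: Listener) -> None:
        self.controllers.remove(controller)

    def request(self, target: str, variable: str, value: object) -> None:
        """Apply a controller's request to a target and notify all controllers."""
        state = self.targets[target]
        if variable not in state:
            raise KeyError(f"{target} has no variable {variable!r}")
        state[variable] = value
        for notify in list(self.controllers):
            notify(target, variable, value)
```

In this model, a touch-screen controller and a smartphone controller attached to the same `Hub` would both see a temperature change made from either one.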

The benefit of this approach is that we can deploy consistent and, in particular, accessible user interfaces that are tailored to individual users.

Pluggable User Interfaces

The URC framework provides an abstract definition of the user interface layer between the back-end devices and the front-end user interfaces (see Figure 1), called the user interface socket. The socket describes the input/output behavior of the appliance on an abstract level. One or more sockets are then rendered on some controller, thus giving the abstract user interface a concrete implementation or, in other words, plugging the socket (Vanderheiden and Zimmermann, 2005).

Clearly, this architecture offers a flexible way of

connecting different user interfaces with any user in-

terface socket. Multiple controllers can be attached,

exchanged and detached at runtime.
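Plugging a socket means that the same abstract state is given different concrete forms. In this hypothetical sketch, a socket is simply a dictionary of variable values, `render_touch` and `render_speech` stand in for a touch-screen and a speech controller, and `plug` renders the socket on whatever controllers are attached at the moment; none of these names come from the URC specification.

```python
from typing import Callable, Dict, List

# Hypothetical abstract socket: variable names mapped to their current values.
Socket = Dict[str, object]
Renderer = Callable[[Socket, Dict[str, str]], object]


def render_touch(socket: Socket, labels: Dict[str, str]) -> List[str]:
    """Render each socket variable as a large touch button (label and value)."""
    return [f"[ {labels.get(name, name)}: {value} ]" for name, value in socket.items()]


def render_speech(socket: Socket, labels: Dict[str, str]) -> str:
    """Render the same socket as one spoken status sentence."""
    parts = [f"{labels.get(name, name)} is {value}" for name, value in socket.items()]
    return ", ".join(parts) + "."


def plug(socket: Socket, labels: Dict[str, str],
         renderers: Dict[str, Renderer]) -> Dict[str, object]:
    """Render one socket on every currently attached controller."""
    return {name: render(socket, labels) for name, render in renderers.items()}
```

Because controllers are just entries in the `renderers` mapping, attaching or detaching one at runtime amounts to adding or removing a key, a much simplified picture of the exchangeability described above.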


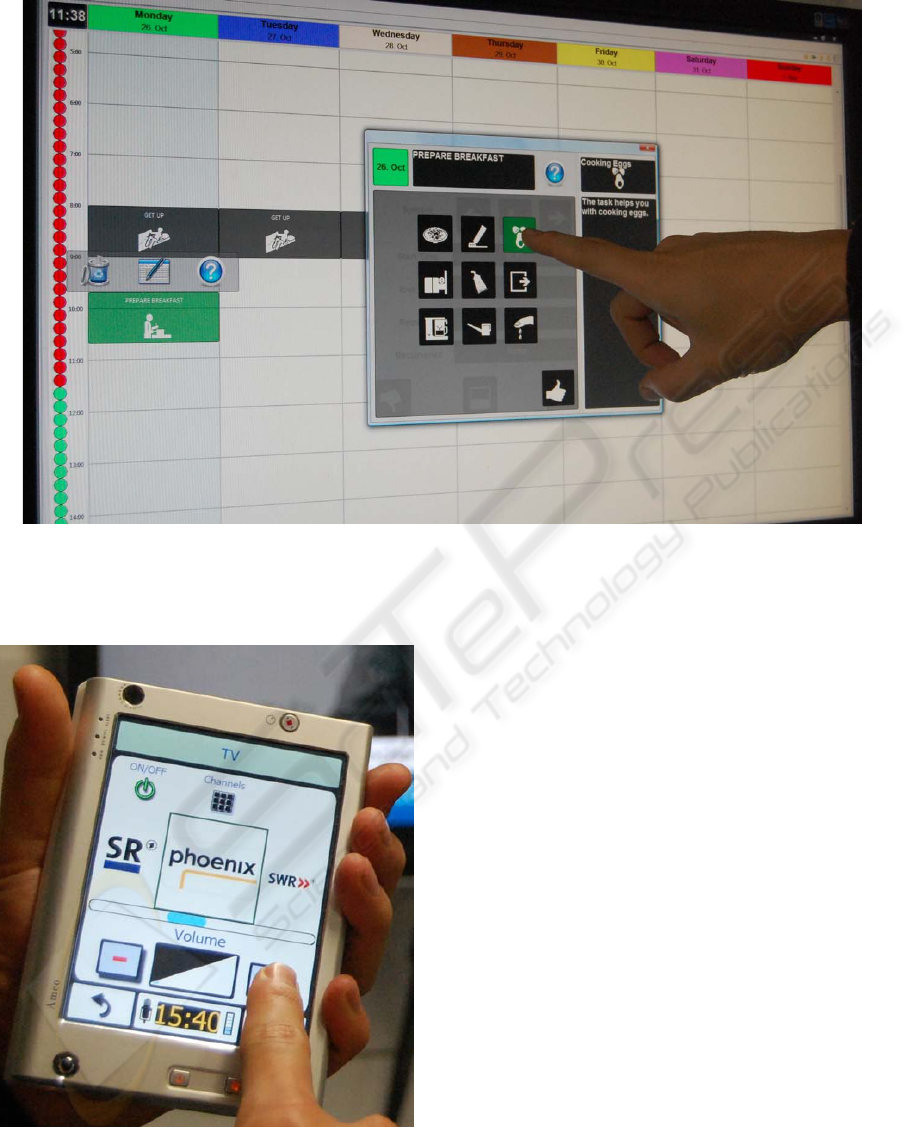

Figure 3: Picture of a test user interacting with i2home's task-based calendar. The user interface follows special design guidelines for people with cognitive disabilities. For example, buttons and tasks are represented using a combination of text and pictograms², weekdays have fixed colors and the vertical timeline on the left displays the current time of day.

Figure 2: The i2home multimodal UI for smartphones showing interaction with a TV.

4 TWO CONCRETE USER INTERFACES

The configuration of the i2home system considered here contains a wide range of appliances and services: Google Calendar; TV (Microsoft Windows Media Center); Siemens' serve@Home kitchen (hood, oven, fridge, freezer, dishwasher and air conditioner); SweetHeart blood pressure meter; and SmartLab Genie blood sugar meter. For these appliances, we show two UIs that have been implemented for the persona Emma (see section 2) and have been evaluated with real users.

A Mobile Multimodal Dialogue System. Figure 2 depicts a multimodal user interface for interaction with graphics, speech and pointing gestures. The interface is implemented using the Ontology Dialogue Platform (ODP) (Schehl et al., 2008). It follows a client-server architecture: the client part of the UI runs on an HTC 7500 Advantage with Windows Mobile 5.0, and the server runs on a standard PC.

HEALTHINF 2010 - International Conference on Health Informatics

A Task-based Calendar. A common challenge when creating intelligent user interfaces is the complexity of modern appliances. An approach for creating task-based user interfaces is described in (Rich, 2009); its main idea is that computer-controlled devices should actively help users to operate them and thereby reduce the complexity the users have to cope with. This approach has been adopted in i2home by integrating a task model engine with the UCH. Figure 3 shows the i2home calendar interface, which, in contrast to a standard calendar application, is completely based on the notion of task models.
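To illustrate what a task model can look like, here is a minimal Python sketch of a hierarchical task model in the spirit of ANSI/CEA-2018: a task is decomposed into subtasks until primitive steps remain, and each step is either explained to the user or performed automatically. The `Task` class, the `guide` function and the pizza example are hypothetical; the standard itself specifies an XML task model description with considerably richer features (e.g. preconditions, parameters and grounding scripts), none of which are modelled here.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class Task:
    """A node in a hypothetical hierarchical task model."""
    name: str
    subtasks: List["Task"] = field(default_factory=list)
    instruction: Optional[str] = None  # what the user is told for a primitive step
    automated: bool = False  # True if the system can perform the step itself

    def primitive_steps(self) -> Iterator["Task"]:
        """Depth-first walk that yields the leaf steps in execution order."""
        if not self.subtasks:
            yield self
            return
        for sub in self.subtasks:
            yield from sub.primitive_steps()


def guide(task: Task) -> List[str]:
    """Turn a task model into step-by-step guidance for the user."""
    lines = []
    for step in task.primitive_steps():
        actor = "System" if step.automated else "You"
        lines.append(f"{actor}: {step.instruction or step.name}")
    return lines


# Hypothetical example: heating a frozen pizza with a networked oven.
meal = Task("Heat a frozen pizza", [
    Task("Preheat oven", instruction="Preheating the oven to 220 degrees.", automated=True),
    Task("Put pizza in oven", instruction="Put the pizza on the middle rack."),
    Task("Finish", [
        Task("Start timer", instruction="Timer set for 12 minutes.", automated=True),
        Task("Take pizza out", instruction="Take the pizza out with oven gloves."),
    ]),
])
```

Splitting a task into small, explicit steps is what lets the device take over parts of the work and present the rest one step at a time, which matters for users with concentration and memory problems.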

In addition to traditional calendar features, the i2home task-based calendar enables users to schedule predefined tasks. Users are thus not only reminded of a task: the calendar automatically triggers the task and assists users by giving instructions or by automating the necessary steps, for arbitrary tasks that may or may not involve the appliances connected to the system.
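The difference from a plain reminder can be sketched as follows: when a scheduled task becomes due, the calendar not only issues a reminder but also walks through the task's steps, giving instructions for manual steps and handing automatable steps to the system. The classes `Step` and `ScheduledTask`, the `tick` function (assumed to be called periodically) and the action strings are hypothetical illustrations, not the i2home implementation.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Step:
    instruction: str
    automated: bool = False  # True: the system performs it via a connected appliance


@dataclass
class ScheduledTask:
    title: str
    start: datetime
    steps: List[Step]
    triggered: bool = False


def tick(calendar: List[ScheduledTask], now: datetime) -> List[str]:
    """Trigger every task whose start time has passed; return the resulting actions."""
    actions: List[str] = []
    for entry in sorted(calendar, key=lambda e: e.start):
        if entry.triggered or entry.start > now:
            continue
        entry.triggered = True  # each task is triggered only once
        actions.append(f"Reminder: {entry.title}")
        for step in entry.steps:
            prefix = "AUTO" if step.automated else "SAY"
            actions.append(f"{prefix}: {step.instruction}")
    return actions
```

Keeping the trigger logic separate from the steps means the same scheduling code serves tasks that touch appliances and tasks that only guide the user.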

DFKI and SIAT have developed several task-based scenarios for people with mild cognitive disabilities. Examples of tasks in this domain include preparing a meal, watching a DVD or handling daily activities like morning routines, medication or blood sugar measurement.

5 CONCLUSIONS AND FUTURE WORK

We have presented two different pluggable user interfaces that provide consistent access to the digital home environment for people with cognitive disabilities. They are rendered on the basis of the new open industrial standard ISO/IEC 24752, the Universal Remote Console (URC). URC offers a flexible middleware platform that supports several communication standards and allows for interaction with any networked target device. The presented task-based calendar combines the notion of task models with commonly known calendar concepts and therefore helps users with special needs to manage their daily activities. Based on the results of the third system evaluation, the next steps will include the continued refinement and optimization of the interaction concepts.

REFERENCES

Buiza, C., Dubielzig, M., Franc, J., Görlich, J., Kunnari, J., Klima, M., Langr, O., Macik, M., Maly, I., Rylen, A., Sporka, A. J., and Urdaneta, E. (2007). User requirements report. i2home Deliverable D1.1.

Cooper, A. (1999). The Inmates are Running the Asylum: Why High-Tech Products Drive Us Crazy and How to Restore the Sanity. SAMS, Indianapolis, Ind., 1st printing.

Rich, C. (2009). Building task-based user interfaces with ANSI/CEA-2018. Computer, 42(8):20–27.

Schehl, J., Pfalzgraf, A., Pfleger, N., and Steigner, J. (2008). The babbleTunes system: talk to your iPod! In ICMI '08: Proceedings of the 10th International Conference on Multimodal Interfaces, pages 77–80, New York, NY, USA. ACM.

Vanderheiden, G. and Zimmermann, G. (2005). Use of user interface sockets to create naturally evolving intelligent environments. In Proceedings of the 11th International Conference on Human-Computer Interaction (HCI 2005), Caesars Palace, Las Vegas, Nevada, USA.

Zimmermann, G. and Vanderheiden, G. (2007). The universal control hub: An open platform for remote user interfaces in the digital home. In Jacko, J. A., editor, Human-Computer Interaction, volume 4551 of LNCS, pages 1040–1049. Springer.
