VIDEO EVENT CLASSIFICATION AND DETECTION USING 2D TRAJECTORIES

Alexandre Hervieu, Patrick Bouthemy

INRIA, Centre Rennes - Bretagne Atlantique, Campus Universitaire de Beaulieu, 35042 Rennes Cedex, France

Jean-Pierre Le Cadre

INRIA, Centre Rennes - Bretagne Atlantique / CNRS, Campus Universitaire de Beaulieu, 35042 Rennes Cedex, France

Keywords:

Image sequence analysis, Image motion analysis, Hidden Markov models, Pattern recognition.

Abstract:

This paper describes an original statistical trajectory-based approach which can address several issues related

to dynamic video content understanding: unsupervised clustering of events, recognition of events correspond-

ing to learnt classes of dynamic video contents, and detection of unexpected events. Appropriate local differ-

ential features combining curvature and motion magnitude are robustly computed on the trajectories. They are

invariant to image translation, in-the-plane rotation and scale transformation. The temporal causality of these

features is then captured by hidden Markov models whose states are properly quantized values, and similarity

between trajectories is expressed by exploiting the HMM framework. We report experiments on two sets of

data, a first one composed of typical classes of synthetic (noised) trajectories (such as parabola or clothoid),

and a second one formed with trajectories computed in sports videos. We have also favorably compared

our method to other ones, including feature histogram comparison, use of the longest common subsequence

(LCSS) distance and SVM-based classification.

1 INTRODUCTION

Content-based exploitation of video footage is of con-

tinuously increasing interest in numerous applica-

tions, e.g., for retrieving video sequences in huge TV

archives, creating automatic video summarization of

sports TV programs (Kokaram et al., 2006), or detect-

ing specific actions or activities in video-surveillance

(Boiman and Irani, 2005; Hu et al., 2007). This requires
narrowing the well-known semantic gap between
computed low-level features and high-level concepts.

Considering 2D trajectories is attractive since they

form computable image features which capture elab-

orate spatio-temporal information on the viewed ac-

tions. Methods for tracking moving objects in an im-

age sequence are now available to get reliable enough

2D trajectories in various situations. These trajecto-

ries are given as a set of consecutive positions in the

image plane (x, y) over time. If they are embedded in

an appropriate modeling framework, high-level infor-

mation on the dynamic scene can then be reachable.

We aim at designing a general trajectory classifi-

cation method. It should take into account both the

trajectory shape (geometrical information related to

the type of motion and to variations in the motion di-

rection) and the speed change of the moving object

on its trajectory (dynamics-related information). Un-

less required by a specific application, it should not

be affected by the location of the trajectory in the im-

age plane (invariance to translation), by its direction

in the image plane (invariance to rotation), by the dis-

tance of the viewed action to the camera (invariance

to scale). It should also be robust, since local
differential features computed on the extracted trajectories
are prone to noise corruption. It should not
rely on strong a priori information on the scene structure,
the camera set-up, or the 3D object motions.

In this paper we tackle three important tasks re-

lated to dynamic video content understanding within

the same trajectory-based framework. The first one

is clustering trajectories extracted from videos. An

unsupervised solution is developed. The second problem considered is recognizing (or retrieving) events in videos. Semantic classes of dynamic video contents are first learnt from a set of representative training trajectories. The third task is detecting unexpected events by comparing the test trajectory to representative trajectories of known classes of events.

Hervieu A., Bouthemy P. and Le Cadre J. (2008). VIDEO EVENT CLASSIFICATION AND DETECTION USING 2D TRAJECTORIES. In Proceedings of the Third International Conference on Computer Vision Theory and Applications, pages 158-166. DOI: 10.5220/0001073001580166. Copyright © SciTePress

The remainder of the paper is organized as fol-

lows. In Section 2, we outline related work on

trajectory-based video content analysis. In Section 3,

we introduce the local differential features consid-

ered to represent 2D trajectories. We show that they

are invariant to 2D translation, 2D rotation and scale

transformation, and we also describe their computa-

tion. Section 4 presents our HMM-based framework

to model trajectories. It can be viewed as a (statistical)

quantization of the local features while accounting for

their temporal evolution. We also describe the HMM-

based similarity measure used to compare or to clas-

sify trajectories. Section 5 deals with the detection of

unexpected events. Section 6 introduces other classi-

fication methods which will intervene in the compara-

tive experimental evaluation of the proposed method.

In Section 7, we present the two data sets used to

test and compare the methods. The first one is com-

posed of typical classes of synthetic (noised) trajec-

tories (such as parabola or clothoid), and the second

one includes trajectories computed in sports videos.

Results are then reported and discussed. Concluding

remarks are given in Section 8.

2 RELATED WORK

Trajectory analysis can help recognize events, actions, or interactions between people and objects. Early methods considered point coordinates and local orientations on image trajectories as input features (Bashir et al., 2007; Buzan et al., 2004; Chan et al., 2004; Porikli, 2004). Using these features amounts to expressing strict spatial similarity between trajectories.

Other methods use velocities as features to compare

2D trajectories (Porikli, 2004; Hu et al., 2007; Wang

et al., 2006), but visual velocity still depends on the

distance of the viewed action to the camera.

Different methods have been developed to com-

pare and cluster trajectories in order to analyze the

content of video sequences. Buzan et al. (Buzan et al.,

2004) resorted to the Longest Common Subsequence

(LCSS) distance (Vlachos et al., 2002), to classify tra-

jectories computed in an image sequence acquired by

a single stationary camera for video surveillance. It is

based on a hierarchical unsupervised clustering of tra-

jectories where trajectory features are vectors of 2D

coordinates of the trajectory points. Wang et al. introduced a novel similarity measure based on a modified Hausdorff distance and a comparison confidence measure. They compare the distributions of the spatial coordinates of the trajectory points, and also use other attributes, such as velocity and object size (Wang et al., 2006). Bashir et al. presented a trajectory-based real-time indexing method (Bashir et al., 2007), using PCA and spectral clustering. A system that learns

patterns of activity from trajectories, and hierarchi-

cally classifies sequences using a codebook was de-

veloped by Stauffer and Grimson (Stauffer and Grim-

son). Li et al. considered statistical distributions of

trajectory orientations exploited in a clustering algo-

rithm (Li et al., 2006). Recent work has explored

modeling frameworks such as DPN (Dynamic Proba-

bilistic Network) and HMM (Hidden Markov Model)

to express the temporal information (causality) em-

bedded in video trajectories and the semantic mean-

ing that they convey. Hongeng et al. (Hu et al., 2007)

described a complex event recognition method based

on the definition of scenarios and on the use of Semi-

Markov Chain (SMC). Chan et al. (Chan et al., 2006)

coped with fragmented tracks that occur when using

mean-shift tracking. They attempted to jointly solve

the problem of linking these “tracklets” and recogniz-

ing complex events using DBN (Dynamic Bayes Net).

They also proposed a method for detecting rare events

by representing motions and space-time relations be-

tween objects using HMMs (Chan et al., 2004). A

recognition method for group activities was defined

by Gong and Xiang (Gong and Xiang, 2003) relying

on DPN to model and detect actions involving multi-

ple objects. DPN are specially used to model the tem-

poral relationships among different temporal events

in the scene. Porikli defined distances to handle tra-

jectories, especially a HMM-based distance (Porikli,

2004). The methods based on HMMs, SMCs or DPNs

developed so far are unable to treat short trajectories

(see subsection 4.1). Let us also stress that all the

aforementioned methods exploit features invariant to

translation or scale transformation only.

The approach we have designed is different from

those proposed so far in several points. First, we in-

troduce local differential trajectory features which are

able to jointly capture information on the trajectory

shape and on the object speed. Besides, they are invariant to translation, rotation and scale transformations. We have also developed a procedure to compute them which is efficient and robust to noise. Second, the temporal evolution of these features over the trajectory curve is explicitly accounted for by an original and effective HMM scheme. Indeed, the HMM states are given by properly quantizing the real feature values. Our HMM method is also able to process trajectories of any size (especially short trajectories). Moreover, we have adopted an HMM distance which can be exploited both for clustering and recognizing dynamic video contents and for detecting unexpected events. All these elements make the overall framework we have defined general and flexible.

3 INVARIANT LOCAL

TRAJECTORY FEATURES

A feature that represents both trajectory shape and

object acceleration (more specifically, we mean ve-

locity magnitude change) is required to capture the

full intrinsic properties of a video trajectory. As

stressed in the introduction, it should also be invariant

to 2D translation, 2D rotation and scale transforma-

tion, which will be helpful in most video applications.

3.1 Trajectory Kernel Smoothing

A trajectory $T_k$ is defined by a set of $n_k$ points $\{(x_1, y_1), \ldots, (x_{n_k}, y_{n_k})\}$ corresponding to the successive image positions of the tracked object in the image sequence (video shot). The term “object” must be understood in a broad sense, i.e., interest point, gravity center of a segmented region, window center, etc. To reliably compute the local differential trajectory features, we need a continuous representation of the curve formed by the trajectory. To this end, we perform a kernel approximation of $T_k$ defined by

$$u_t = \frac{\sum_{j=1}^{n_k} e^{-\left(\frac{t-j}{h}\right)^2} x_j}{\sum_{j=1}^{n_k} e^{-\left(\frac{t-j}{h}\right)^2}}, \qquad v_t = \frac{\sum_{j=1}^{n_k} e^{-\left(\frac{t-j}{h}\right)^2} y_j}{\sum_{j=1}^{n_k} e^{-\left(\frac{t-j}{h}\right)^2}}, \tag{1}$$

where $(x_t, y_t)$ designates the coordinates of the tracked object at $t$ and $(u_t, v_t)$ its smoothed representation. $h$ is a smoothing parameter to be set according to the observed noise magnitude. Explicit expressions can then be derived for the first- and second-order temporal derivatives of the trajectory positions: respectively, $\dot{u}_t$, $\dot{v}_t$, $\ddot{u}_t$ and $\ddot{v}_t$.
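As an illustration, the smoothing of Eq. (1) can be sketched in a few lines of Python. This is only a minimal sketch: the function name `kernel_smooth` and the choice of evaluating the smoothed curve at the integer instants $t = 1, \ldots, n_k$ are assumptions made for the example, not part of the method description.

```python
import math


def kernel_smooth(points, h):
    """Gaussian-kernel smoothing of a trajectory, following Eq. (1).

    points: list of (x_j, y_j); the j-th point (1-based) is taken at time j.
    h: smoothing parameter.
    Returns the smoothed positions (u_t, v_t) for t = 1..n_k
    (evaluating at integer instants is an assumption of this sketch).
    """
    n = len(points)
    smoothed = []
    for t in range(1, n + 1):
        # Kernel weights exp(-((t - j) / h)^2) for j = 1..n_k.
        weights = [math.exp(-((t - j) / h) ** 2) for j in range(1, n + 1)]
        total = sum(weights)
        u = sum(w * x for w, (x, _) in zip(weights, points)) / total
        v = sum(w * y for w, (_, y) in zip(weights, points)) / total
        smoothed.append((u, v))
    return smoothed
```

A larger `h` averages over more neighbouring positions, which is why it should grow with the observed noise magnitude.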

3.2 Derivation of the Trajectory

Features

Let us first consider the local orientation of the curve given by $\gamma_t = \arctan\left(\frac{\dot{v}_t}{\dot{u}_t}\right)$. By construction, it is invariant to 2D translation and scale transformation. To add invariance to 2D rotation, let us now take the temporal derivative of $\gamma_t$, $\dot{\gamma}_t$, and let us analyze this quantity. We have

$$\frac{d(\tan\gamma_t)}{dt} = \frac{1}{\cos^2\gamma_t}\,\dot{\gamma}_t.$$

On the other hand:

$$\frac{d(\tan\gamma_t)}{dt} = \frac{\ddot{v}_t\dot{u}_t - \ddot{u}_t\dot{v}_t}{\dot{u}_t^2}.$$

Then

$$\dot{\gamma}_t = \cos^2\gamma_t \left(\frac{\ddot{v}_t\dot{u}_t - \ddot{u}_t\dot{v}_t}{\dot{u}_t^2}\right).$$

Also

$$\cos^2\gamma_t = (1 + \tan^2\gamma_t)^{-1} = \frac{\dot{u}_t^2}{\dot{u}_t^2 + \dot{v}_t^2}.$$

Hence

$$\dot{\gamma}_t = \frac{\ddot{v}_t\dot{u}_t - \ddot{u}_t\dot{v}_t}{\dot{u}_t^2 + \dot{v}_t^2} = \kappa_t \cdot \|w_t\| \tag{2}$$

where $\kappa_t = \frac{\ddot{v}_t\dot{u}_t - \ddot{u}_t\dot{v}_t}{(\dot{u}_t^2 + \dot{v}_t^2)^{3/2}}$ is the local curvature of the trajectory and $\|w_t\| = (\dot{u}_t^2 + \dot{v}_t^2)^{1/2}$ the local velocity magnitude at point $(u_t, v_t)$. The numerator of $\dot{\gamma}_t$ is the determinant of matrix $\begin{pmatrix} \dot{u}_t & \ddot{u}_t \\ \dot{v}_t & \ddot{v}_t \end{pmatrix}$ and the denominator $\dot{u}_t^2 + \dot{v}_t^2 = \|w_t\|^2$ is the squared velocity magnitude. Then, $\dot{\gamma}_t$ is also rotation invariant. We have also demonstrated that this local feature well captures both the trajectory shape and the object speed since it is the product of the local curvature and the instantaneous velocity magnitude.
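The invariance properties of Eq. (2) can be checked numerically on a simple case. The sketch below evaluates $\dot{\gamma}_t$ on a circle of radius $r$ traversed at angular speed $\omega$, for which the curvature is $1/r$ and the speed is $r\omega$, so the feature should equal $\omega$ whatever the radius (scale) and the rotation angle. The helper `circle_derivatives` is hypothetical and only serves this example.

```python
import math


def gamma_dot(du, dv, ddu, ddv):
    """Feature of Eq. (2): (ddv * du - ddu * dv) / (du^2 + dv^2)."""
    return (ddv * du - ddu * dv) / (du * du + dv * dv)


def circle_derivatives(radius, omega, t, angle=0.0):
    """Derivatives of (r cos(phase), r sin(phase)), phase = omega * t + angle.

    Hypothetical test helper: rotating a circle centred at the origin by
    `angle` is the same as shifting its phase by `angle`.
    """
    phase = omega * t + angle
    du = -radius * omega * math.sin(phase)
    dv = radius * omega * math.cos(phase)
    ddu = -radius * omega ** 2 * math.cos(phase)
    ddv = -radius * omega ** 2 * math.sin(phase)
    return du, dv, ddu, ddv


# Same angular speed, different scale and rotation: the feature is unchanged.
reference = gamma_dot(*circle_derivatives(1.0, 0.5, 0.3))
transformed = gamma_dot(*circle_derivatives(7.0, 0.5, 0.3, angle=1.1))
```

Both values equal the angular speed up to rounding, which is the product of curvature and velocity magnitude stated in Eq. (2).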

4 TRAJECTORY MODELING

AND SIMILARITY

4.1 Design of the Hidden Markov Model

We resort to a hidden Markov model (HMM) to build the statistical framework we need since HMM naturally expresses temporal causality. The feature vector representing a trajectory $T_k$ extracted in a video shot is the vector containing the $n_k$ successive values of $\dot{\gamma}(t)$:

$$V_k = (\dot{\gamma}_1, \dot{\gamma}_2, \ldots, \dot{\gamma}_{n_k-1}, \dot{\gamma}_{n_k}).$$

We also exploit the HMM framework in a somewhat original way since the HMM states are given by properly quantized values of $\dot{\gamma}(t)$. To determine the HMM state values, we first study the distribution of $\dot{\gamma}(t)$ on representative trajectories. We define an interval $[-S, S]$ containing a given percentage $P_v$ of computed $\dot{\gamma}$ values in order to discard “outliers” and to control the number $N$ of state values. Hence, a quantization is performed on $[-S, S]$ into a fixed number $N$ of bins (whatever the value of $S$, in order to be able to compare trajectories using the estimated HMMs).

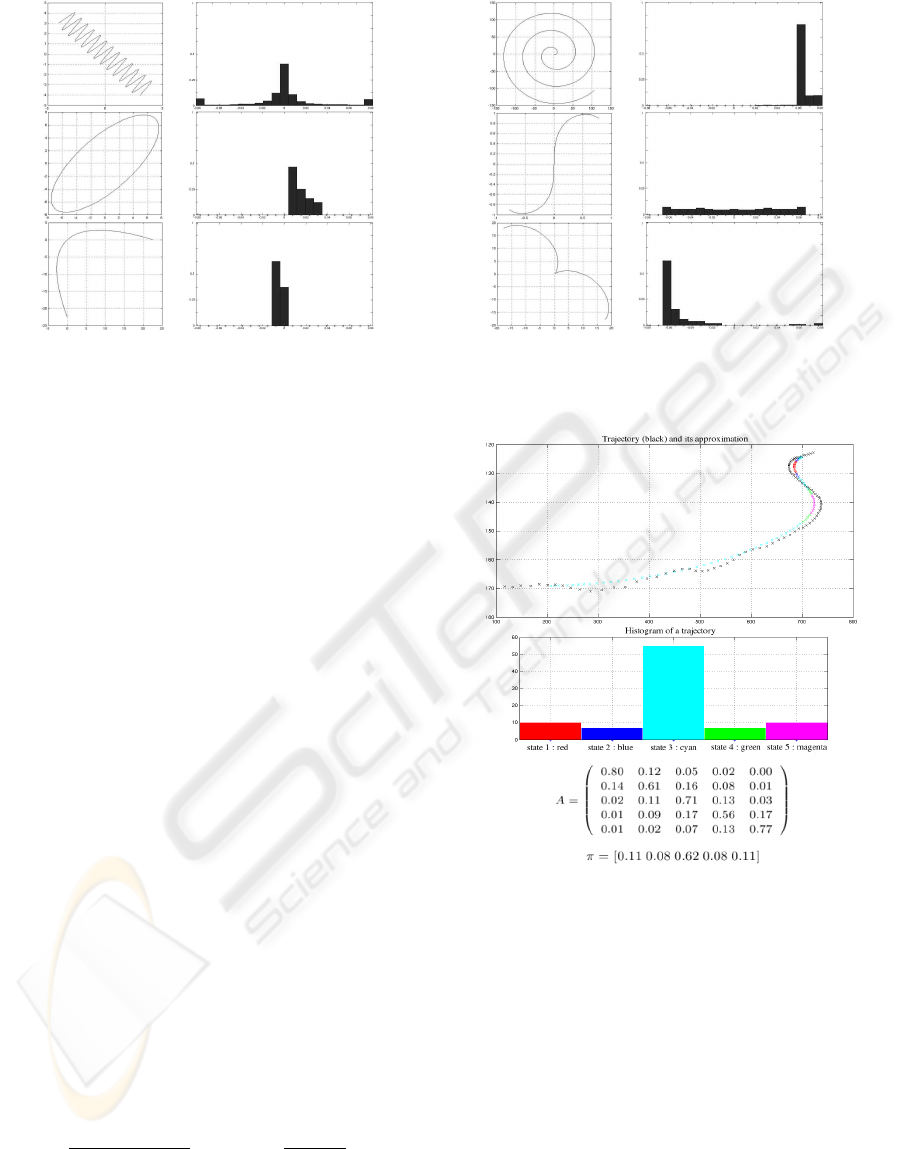

This is illustrated in Fig.1 where synthetic trajecto-

ries of six different classes are drawn and their corre-

sponding histograms are plotted restricted to [−S, S].

In contrast, in the HMM framework introduced in (Porikli, 2004) to model trajectories and their temporal evolution, the number of states remains difficult to set (it relies on a validity score that requires a balancing factor to be fixed), and the trajectory size should be much larger than the number of Gaussian mixture components (used for the conditional observation distribution) times the number of states, whereas our method is developed to handle trajectories of any size.
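The quantization step described above can be sketched as follows. This is a minimal sketch under stated assumptions: $S$ is taken as the smallest value such that the requested fraction of the $|\dot{\gamma}|$ values falls in $[-S, S]$, the bins are of equal width, and the function names are hypothetical.

```python
import math


def choose_half_range(values, coverage):
    """Smallest S such that at least `coverage` of the values lie in [-S, S].

    Assumption of this sketch: S is read off the sorted absolute values.
    """
    magnitudes = sorted(abs(v) for v in values)
    index = max(0, math.ceil(coverage * len(magnitudes)) - 1)
    return magnitudes[index]


def bin_centers(half_range, n_bins):
    """Centers of the n_bins equal-width bins covering [-S, S]."""
    width = 2.0 * half_range / n_bins
    return [-half_range + (i + 0.5) * width for i in range(n_bins)]


def normalized_histogram(values, half_range, n_bins):
    """Histogram of the values inside [-S, S], normalized to sum to 1.

    Values outside the interval are treated as outliers and ignored.
    """
    width = 2.0 * half_range / n_bins
    counts = [0] * n_bins
    for v in values:
        if -half_range <= v <= half_range:
            # The upper bound S itself is assigned to the last bin.
            i = min(int((v + half_range) / width), n_bins - 1)
            counts[i] += 1
    total = sum(counts)
    return [c / total for c in counts]
```

Because the number of bins is fixed independently of $S$, two trajectories analyzed with the same $N$ yield models of the same dimension, which is what makes them comparable.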

VISAPP 2008 - International Conference on Computer Vision Theory and Applications


Figure 1: Six samples of synthetic trajectories (a sinusoid, an ellipse, a parabola, a spiral, a clothoid and a cycloid) and their associated normalized histograms plotted in $[-S, S]$, with $h = 3$, $P_v = 90\%$, and $N = 21$ (see text).

The HMM which models the trajectory $T_k$ is now characterized by:

- the state transition matrix $A = \{a_{ij}\}$ with $a_{ij} = P[q_{t+1} = S_j \mid q_t = S_i]$, $1 \le i, j \le N$, where $q_t$ is the state variable at instant $t$ and $S_i$ is its value (i.e., the $i$th bin of the quantized histogram);

- the initial state distribution $\pi = \{\pi_i\}$, with $\pi_i = P[q_1 = S_i]$, $1 \le i \le N$;

- the conditional observation probabilities $B = \{b_i(\dot{\gamma}_t)\}$, where $b_i(\dot{\gamma}_t) = P[\dot{\gamma}_t \mid q_t = S_i]$, since the computed $\dot{\gamma}_t$ are the observed values.

The conditional observation probability is defined as a Gaussian distribution of mean $\mu_i$ (i.e., the median value of the histogram bin $S_i$). Its standard deviation $\sigma$ does not depend on the state and is specified so that the interval $[\mu_i - \sigma, \mu_i + \sigma]$ corresponds to the bin width. This conditional observation model can reasonably account for measurement uncertainty. It also avoids zero values when estimating matrix $A$ in the training stage, which would otherwise arise from a lack of measurements (especially in the case of short trajectories). Without it, some coefficients of matrix $A$ would be zero and infinite distances would be found between trajectories.

To estimate $A$ and $\pi$, we have adapted the least-squares technique proposed in (Ford and Moore, 1998) where the HMM is assimilated to a count process. If $H_t^{(i)} = P(\dot{\gamma}_t \mid q_t = i)$ (corresponding to a weight for the count process), empirical estimates of $A$ and $\pi$, for a trajectory $k$ of size $n_k$, are given by

$$a_{ij} = \frac{\sum_{t=1}^{n_k-1} H_t^{(i)} H_{t+1}^{(j)}}{\sum_{t=1}^{n_k-1} H_t^{(i)}} \quad \text{and} \quad \pi_i = \frac{\sum_{t=1}^{n_k} H_t^{(i)}}{n_k}. \tag{3}$$
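The estimates of Eq. (3) can be sketched as below. Two points are assumptions of this sketch rather than statements of the method: the Gaussian weights $H_t^{(i)}$ are normalized over the states at each instant (so that each observation contributes a unit mass and the rows of $A$ sum to one), and the observations are assumed to lie inside $[-S, S]$. The function names are hypothetical.

```python
import math


def state_weights(observations, centers, sigma):
    """Gaussian weights H_t^(i) of each observation for each state.

    Assumption of this sketch: the weights are normalized over the states
    at every instant t.
    """
    weights = []
    for obs in observations:
        row = [math.exp(-0.5 * ((obs - mu) / sigma) ** 2) for mu in centers]
        total = sum(row)
        weights.append([w / total for w in row])
    return weights


def estimate_hmm(observations, centers, sigma):
    """Empirical estimates of A and pi in the spirit of Eq. (3)."""
    H = state_weights(observations, centers, sigma)
    n, n_states = len(H), len(centers)
    A = []
    for i in range(n_states):
        # Denominator: total weight of state i over t = 1..n_k - 1.
        denom = sum(H[t][i] for t in range(n - 1))
        A.append([
            sum(H[t][i] * H[t + 1][j] for t in range(n - 1)) / denom
            for j in range(n_states)
        ])
    pi = [sum(H[t][i] for t in range(n)) / n for i in range(n_states)]
    return A, pi
```

Since the Gaussian weights are strictly positive, every coefficient of $A$ is non-zero even for a short trajectory, which is the property the conditional observation model is meant to provide.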

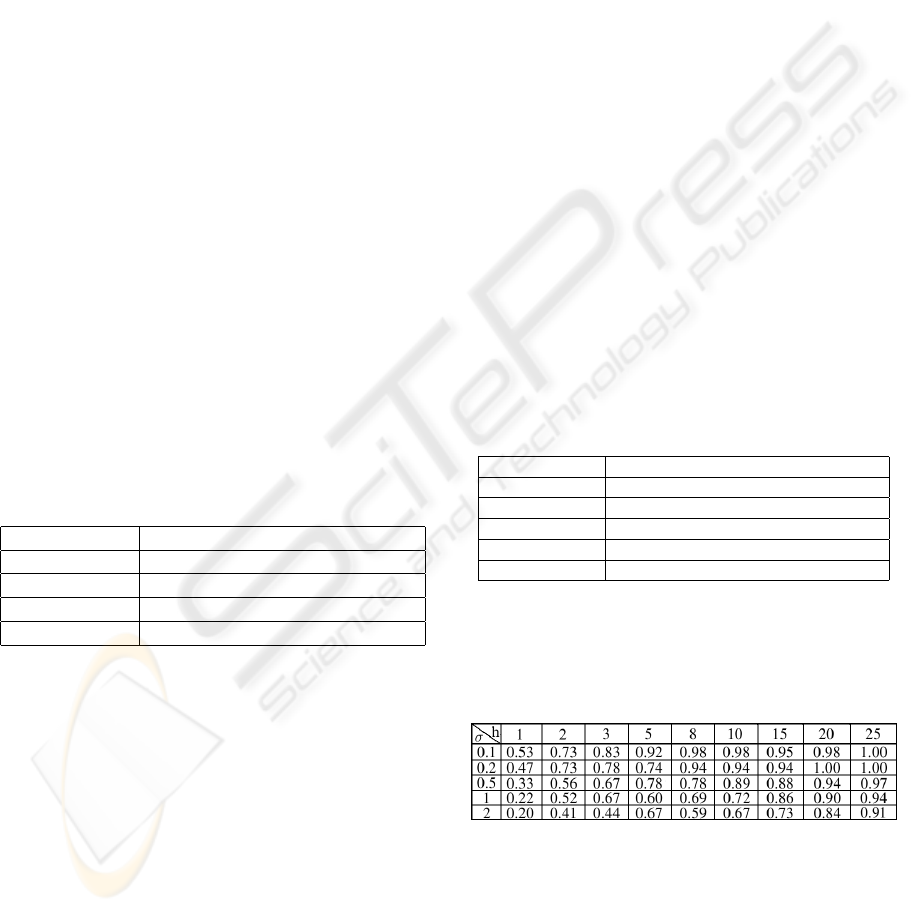

As an illustration, Fig. 2 shows an example of a real trajectory, its smoothed counterpart, and the estimated values of the $A$ and $\pi$ coefficients for its associated HMM.

Figure 2: Upper part: Plots of a real trajectory (extracted

from a Formula One race video shot), its smoothed coun-

terpart obtained with h = 8. Colors of the curve points

stand for the different state values and correspond to the

histogram bin colors. Middle part: histogram of the state

values (with N = 5). Lower part: estimated transition ma-

trix A and initial state distribution π.

4.2 Similarity Measure

To compare two trajectories, we have to define a similarity measure. To this end, we exploit the HMM framework we have built. We adopt the distance between HMMs proposed by Rabiner (Rabiner, 1989). It can also be used to classify the trajectories since it is defined at the trajectory model level. Given two HMMs represented by their parameter sets $\lambda_1$ and $\lambda_2$ ($\lambda_i = (A_i, B_i, \pi_i)$, $i = 1, 2$), the distance $D$ is defined by

$$D(\lambda_1, \lambda_2) = \frac{1}{T}\left[\log P(O^{(2)} \mid \lambda_2) - \log P(O^{(2)} \mid \lambda_1)\right] \tag{4}$$

where $O^{(j)} = \{\dot{\gamma}_1, \dot{\gamma}_2, \ldots, \dot{\gamma}_{n_j}\}$ is the sequence of measures used to train the model $\lambda_j$ and $P(O^{(j)} \mid \lambda_i)$ expresses the probability of observing $O^{(j)}$ with model $\lambda_i$ (computed with the Viterbi algorithm). To be used as a similarity measure, a symmetrized version is required:

$$D_s(\lambda_1, \lambda_2) = \frac{1}{2}\left[D(\lambda_1, \lambda_2) + D(\lambda_2, \lambda_1)\right]. \tag{5}$$
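Eqs. (4) and (5) can be sketched as follows, with the probabilities evaluated by a log-domain Viterbi recursion. The sketch makes several assumptions: a model is stored as a tuple `(A, pi, centers, sigma)` (a hypothetical layout), the observation densities are the Gaussians of Section 4.1, $T$ is taken as the length of the observation sequence, and all coefficients of $A$ and $\pi$ are strictly positive.

```python
import math


def log_gauss(x, mu, sigma):
    """Log-density of a Gaussian of mean mu and standard deviation sigma."""
    return (-0.5 * ((x - mu) / sigma) ** 2
            - math.log(sigma * math.sqrt(2.0 * math.pi)))


def viterbi_log_score(obs, model):
    """Log-probability of the best state path for `obs` (Viterbi).

    model = (A, pi, centers, sigma); this layout is an assumption.
    """
    A, pi, centers, sigma = model
    n_states = len(centers)
    delta = [math.log(pi[i]) + log_gauss(obs[0], centers[i], sigma)
             for i in range(n_states)]
    for o in obs[1:]:
        delta = [
            max(delta[i] + math.log(A[i][j]) for i in range(n_states))
            + log_gauss(o, centers[j], sigma)
            for j in range(n_states)
        ]
    return max(delta)


def hmm_distance(model_1, model_2, obs_2):
    """Eq. (4): (1/T) [log P(O2 | lambda_2) - log P(O2 | lambda_1)]."""
    T = len(obs_2)  # assumption: T is the length of O^(2)
    return (viterbi_log_score(obs_2, model_2)
            - viterbi_log_score(obs_2, model_1)) / T


def symmetric_distance(model_1, obs_1, model_2, obs_2):
    """Eq. (5): mean of the two directed distances."""
    return 0.5 * (hmm_distance(model_1, model_2, obs_2)
                  + hmm_distance(model_2, model_1, obs_1))
```

With this definition, the distance of a model to itself is zero, and a zero coefficient in $A$ would produce an infinite log term, which is why strictly positive estimates matter.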

5 VIDEO UNDERSTANDING

TASKS

5.1 Unsupervised Clustering of

Trajectories

We first describe how we address the unsupervised clustering task. Given a set of video shots (obtained by an automatic temporal video segmentation technique), we try to cluster the extracted trajectories in a sensible way to come up with relevant classes of dynamic video content. We first represent each trajectory by an HMM whose parameters are estimated as explained in Section 4. We then perform a classical binary ascendant hierarchical classification using the trajectory similarity measure introduced in the previous section. The distance between two groups of trajectories $G_i$ and $G_j$ is defined using an average link method, i.e., calculating the mean of the distances between all pairs of trajectories:

$$D_{\text{average link}}(G_i, G_j) = \frac{\sum_{T_k \in G_i,\, T_l \in G_j} D_s(T_k, T_l)}{\# G_i \, \# G_j}. \tag{6}$$

When performing a binary ascendant hierarchical classification, the system needs to know when to stop the merging iterations. If the process is stopped too late, trajectories will be grouped in too few, heterogeneous classes. Conversely, if the process is terminated too early, classes will be too fragmented and will not correspond to relevant semantic classes. Two alternatives have been tested. The first is to stop merging groups when a predefined number $C$ of classes has been reached, which means that the user has some knowledge of the diversity of the dynamic video contents. The second is to fix a threshold $\tau$ and to continue merging until the current minimum inter-class distance exceeds $\tau$.
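The clustering procedure and its two stopping criteria can be sketched as follows. This is a minimal sketch, assuming that the symmetric distances $D_s$ between all pairs of trajectories have already been computed and stored in a matrix `dist`; the function names and the brute-force search for the closest pair are choices made for the example.

```python
def average_link(group_a, group_b, dist):
    """Eq. (6): mean pairwise distance between two groups of indices."""
    total = sum(dist[k][l] for k in group_a for l in group_b)
    return total / (len(group_a) * len(group_b))


def agglomerate(dist, n_classes=None, threshold=None):
    """Binary ascendant hierarchical clustering with average link.

    Merging stops when `n_classes` groups remain, or when the smallest
    inter-group distance exceeds `threshold`, depending on which
    criterion is supplied.
    """
    groups = [[k] for k in range(len(dist))]
    while len(groups) > 1:
        if n_classes is not None and len(groups) <= n_classes:
            break
        # Find the closest pair of groups.
        best = None
        for a in range(len(groups)):
            for b in range(a + 1, len(groups)):
                d = average_link(groups[a], groups[b], dist)
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        if threshold is not None and d > threshold:
            break
        groups[a] = groups[a] + groups[b]
        del groups[b]
    return groups
```

For instance, `agglomerate(dist, n_classes=6)` corresponds to the first criterion with $C = 6$, and `agglomerate(dist, threshold=tau)` to the second one.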

5.2 Recognition of Learnt Classes of

Dynamic Video Content

Let us consider the problem of recognizing events, or

equivalently, of retrieving instances of known classes

of events in videos. It can be achieved in a super-

vised way (classes are learnt from training examples)

or in an unsupervised way using the clustering stage

described above. Each class is modeled by a set

of HMMs corresponding to representative trajectories

(those used in the training step, or those belonging to

the corresponding cluster supplied by the initial clus-

tering step applied on a subset of the video sequence

base) which we will call the initial members in the se-

quel. Recognition is then performed by assigning the

processed trajectory to the nearest class. As afore-

mentioned, the distance to a class is defined using the

average link method (see subsection 5.1).

5.3 Detection of Unexpected Events

Detecting unexpected (or equivalently, rare or abnormal) events is of interest in many applications. We tackle this issue with the same HMM-based framework. First, we consider a set of predefined (or learnt) classes represented again by the estimated HMMs of the initial class members. We compute for each class $C_i$ its centroid $G_i$ in the $\lambda$-parameter space from the estimated parameters $\lambda_i^l$ of the HMMs of the initial members $T_i^l$ of class $C_i$. Then we evaluate the distances of all the initial class members $T_i^l$ to the centroid $G_i$ using relation (5), and we denote $R_i$ the maximum distance value. Let $\sigma_i$ designate the standard deviation of these distance values. We decide that a test trajectory $T_k$ corresponds to an unexpected event if, for every class $C_i$, $D_{\text{average link}}(T_k, C_i) > R_i + \sigma_i$, where

$$D_{\text{average link}}(T_k, C_i) = \frac{\sum_{T_l \in C_i} D_s(T_k, T_l)}{\# C_i}.$$
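The decision rule above can be sketched as follows, assuming the distances have already been computed with relation (5). The dictionary layout, the function names, and the use of the population standard deviation for $\sigma_i$ are assumptions of this sketch, since the text does not specify them.

```python
import statistics


def detection_threshold(member_to_centroid):
    """R_i + sigma_i from the member-to-centroid distances of one class.

    Assumption: sigma_i is the population standard deviation.
    """
    return max(member_to_centroid) + statistics.pstdev(member_to_centroid)


def is_unexpected(test_to_members, member_to_centroid):
    """True if the test trajectory is beyond R_i + sigma_i for every class.

    Both arguments are dicts keyed by class label:
    test_to_members[c]    distances D_s between the test trajectory and
                          each initial member of class c;
    member_to_centroid[c] distances between each initial member of class c
                          and the class centroid.
    """
    for label, distances in test_to_members.items():
        average = sum(distances) / len(distances)  # average-link distance
        if average <= detection_threshold(member_to_centroid[label]):
            return False
    return True
```

A trajectory is thus flagged only when no known class is close enough to account for it.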

6 OTHER METHODS FOR

COMPARISON PURPOSE

6.1 Bhattacharyya Distance between

Histograms

To assess the importance of introducing temporal causality, i.e., transitions between states, we have implemented a Bhattacharyya distance-based classification method. The Bhattacharyya distance $D_b$ between two (normalized) histograms $h_i$ and $h_j$ of features $\dot{\gamma}_t$, respectively corresponding to two trajectories $T_i$ and $T_j$, is defined by

$$D_b(h_i, h_j) = \sqrt{1 - \sqrt{\sum_{q=1}^{N} h_i^q h_j^q}} \tag{7}$$

where $h_i^q$ is the histogram value of bin $q$ for trajectory $T_i$. Similarly to the HMM-based method, we assign the test trajectory $T_k$ to the nearest class and the distance to a class is defined using an average link method (see subsection 5.1).

6.2 SVM Classification Method

An efficient tool to perform supervised classification of patterns is the SVM method (Burges, 1998). As input for the SVM method, we take the HMM parameters corresponding to the trajectories. An SVM method needs patterns to be represented by vectors. Hence, for each trajectory $T_k$, a vector $X_k$ containing the HMM parameters $\lambda_k$ of the trajectory is created. Let us give an example with only $N = 3$ state values. We have

$$A_k = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{pmatrix}, \qquad \pi_k = [a_1 \; a_2 \; a_3],$$

and

$$X_k = [a_{11} \; a_{12} \; a_{13} \; a_{21} \; a_{22} \; a_{23} \; a_{31} \; a_{32} \; a_{33} \; a_1 \; a_2 \; a_3]$$

is the vector representing the trajectory $T_k$. An SVM classification technique with a Gaussian RBF (radial basis function) kernel is used. The reported results are obtained using the “one against all” classification scheme.
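The construction of $X_k$ and the kernel evaluated on such vectors can be sketched as below. The SVM training itself is not shown, and the kernel width parameter `gamma` as well as the function names are hypothetical, since the text does not give them.

```python
import math


def hmm_feature_vector(A, pi):
    """Row-major flattening of A followed by pi, as in the N = 3 example."""
    return [a for row in A for a in row] + list(pi)


def rbf_kernel(x, y, gamma):
    """Gaussian RBF kernel exp(-gamma * ||x - y||^2).

    `gamma` is a hypothetical width parameter of this sketch.
    """
    squared = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return math.exp(-gamma * squared)
```

For $N$ state values the vector has $N^2 + N$ components, i.e., 12 components in the $N = 3$ example.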

7 EXPERIMENTS

7.1 Synthetic Trajectories

First, a set of typical trajectories has been generated to

settle experiments with ground truth and easily speci-

fiable data. More specifically, 8 classes (sinusoid,

parabola, hyperbola, ellipse, cycloid, spiral, straight

line, and clothoid) have been considered and 8 dif-

ferent trajectories (of different sizes, including short

trajectories) have been simulated for each class, with

different parametrizations of the curves, and for sev-

eral geometric transformations (rotation, scaling), as

illustrated in Fig.1. Noised versions have been gen-

erated with different noise levels. Thus, we can eval-

uate the performance of the methods with respect to

trajectory shape and length variations within a class,

invariance to transformations and robustness to noise.

7.2 Video Trajectories

Real trajectories have been extracted from a Formula

One race TV program and from Alpine skiing TV

programs, both filmed with several cameras. The tra-

jectories are computed with the tracking method de-

scribed in (Perez et al., 2002). The background mo-

tion due to camera panning, tilting and zooming is

canceled. Trajectory shapes supplied by this method are thus close to the real 3D trajectories of Formula One cars (up to a homography, since the 3D motion is almost planar) and of skiers. Examples are plotted in Fig. 3.

Figure 3: Images from video shots acquired by two differ-

ent cameras at two different places on the circuit. The com-

puted trajectories are overprinted on the images.

Figure 4: Plots of the 6 classes of dynamic content (tra-

jectories) for a Formula 1 race video, each box contains a

different class. A class of trajectories is composed of tra-

jectories extracted from shots acquired by the same camera.

The different classes correspond to different cameras placed

throughout the circuit at different strategic turns.

7.3 Results on Unsupervised Clustering

We have applied the proposed unsupervised trajectory clustering scheme based on the distance between HMMs to trajectories extracted from a Formula One race TV program (Fig. 3). In that real example, the different classes correspond to views supplied by six different cameras placed throughout the circuit at different strategic turns. Indeed, a given type of dynamic content is attached to a given view, and it can then be characterized by a specific trajectory shape and car speed which essentially depend on the turn configuration at the considered location of the circuit, whatever the passing car. Hence, ground truth is available while a real video (TV program) is processed. The clustering relying on the ascendant hierarchical binary classification (AHBC) technique supplies very good results with the two aforementioned stopping criteria. Due to page limitation, we only report results obtained with the first stopping criterion. Table 1 contains results obtained with our HMM-based method and with the same AHBC technique but using the Bhattacharyya distance and the LCSS distance instead. Two cases were considered: 4 and 6 classes, the four classes being a subset of the six. Our method outperforms the Bhattacharyya distance-based method (with approximately the same computation time), and the LCSS method while requiring a much lower computation time (at least five times faster). This experiment also demonstrates that our unsupervised classification method is able to form meaningful clusters since the latter are very close to the ground truth groups presented in Fig. 4.

Table 1: Results of unsupervised classification by an ascendant hierarchical binary classification (AHBC) technique, using the proposed HMM-based distance, the LCSS distance and the Bhattacharyya distance for Formula One car trajectories. Two cases were considered: 4 and 6 classes, the four classes being a subset of the six (precisely the four classes on the left in Fig. 4). Percentages correspond to rates of good classification for the extracted classes (the ground truth being known). As stopping criterion, the number of classes to create is provided to the AHBC technique.

Percentage of correct clustering

| Method | 4 classes | 6 classes |
|---|---|---|
| HMM | 100 | 92.2 |
| Bhattacharyya | 58.4 | 53.9 |
| LCSS | 84.4 | 72.3 |

7.4 Results on Supervised Recognition

We now report results regarding the recognition task. We have compared our HMM-based method with the SVM classification method described in subsection 6.2 and the histogram comparison technique based on the Bhattacharyya distance outlined in subsection 6.1. To evaluate performance, we have adopted leave-one-out cross-validation. Table 2 contains the best classification results for the real sets of video trajectories (4 and 6 classes presented in Fig. 4). Table 3 shows the performance of our HMM method for different levels of noise on the synthetic trajectories using different values of $h$.

Tests performed on the sets of synthetic trajecto-

ries gave very promising results, hence a perfect clas-

sification was performed for most parameter config-

urations (i.e., for N, h and P

v

) with the SVM and

HMM methods, where the technique based on the

Bhattacharyya distance fail to efficiently classify syn-

thetic trajectories (highlighting the importance of the

temporal causalities modeled with HMM). The tech-

nique based on the Longest Common Subsequence

distance (LCSS) (Buzan et al., 2004) gave good re-

sults but not perfect, and with a higher computation

time (more than five times longer than with HMM

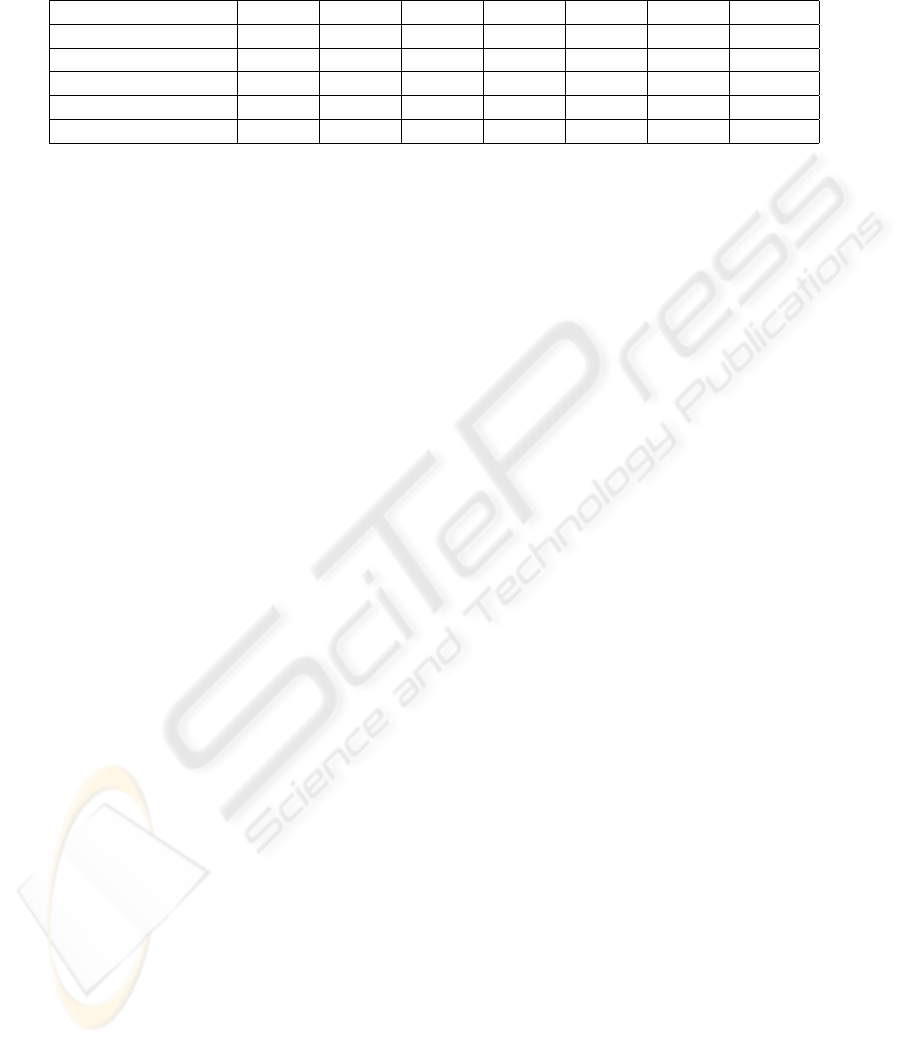

based method). Recognition results with noised data

(Table 3) shows that the parameter h helps handling

efficiently noised data, by smoothing the processed

trajectories.

For the evaluation on real videos (Fig. 4), the same type of results has been obtained: very satisfying classification results for most parameter configurations with the SVM and HMM methods, and less accurate classification results with the techniques using the Bhattacharyya distance and the LCSS distance (Table 2). Besides, our HMM method is much more flexible than the SVM classification stage (e.g., adding a new class only requires learning the parameters of that class).

Table 2: Comparison of the best recognition percentages for the trajectories extracted from real video, using the leave-one-out cross validation technique.

Percentage of correct classification

| Method | 4 classes | 6 classes |
|---|---|---|
| HMM | 100 | 99.0 |
| SVM | 100 | 96.1 |
| Bhattacharyya | 100 | 93.1 |
| LCSS | 97.1 | 91.2 |

Table 3: Classification results for the synthetic trajectories,

with a HMM-based method, using the leave-one-out cross

validation technique, for different values of h and σ (σ is

the standard deviation of the added noise).

7.5 Results on the Detection of

Unexpected Events

We have conducted experiments on several real

videos for the detection of different types of unex-

pected events. For the Formula One race video, we

were able to detect incidents such as cars driving off

VISAPP 2008 - International Conference on Computer Vision Theory and Applications

164

the track (revealed by an abnormal trajectory shape) or intervention of the safety car (revealed by a markedly different speed while the global trajectory shape remains unchanged). For the skiing competition, the objective was to detect falls of skiers. Fig.5 and Fig.6 respectively show three Formula One video sequences and two Alpine skiing race video sequences. Fig.6 also presents trajectories corresponding to a class (printed in blue) and to two unexpected events (printed in magenta and blue). In each case, the first one belongs to a regular event class while the others are examples of unexpected events. The criterion described in subsection 5.3 allowed us to correctly detect the unexpected events in all the processed examples. In Table 5 we supply the maximum intra-class distance R_i and the distance between the trajectory detected as unexpected event and the six considered classes C_i (presented in Fig.4), for several cases. Table 4 presents results corresponding to skiers' trajectories, showing the difference between the maximum intra-class distance and the distance between unexpected events and this regular class. These results show that our HMM-based framework can be straightforwardly and successfully exploited for detecting unexpected events in videos.
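As an illustration, the detection status reported in Table 5 can be reproduced with a few lines of code. The sketch below assumes that a trajectory is declared unexpected when its distance to every learnt class exceeds that class's threshold R_i + σ_i; this reading is inferred from the table layout and is not a restatement of the criterion of subsection 5.3. The numerical values are copied from Table 5, while the function and variable names are hypothetical.

```python
# Thresholds R_i + sigma_i for classes 1..6, copied from Table 5.
THRESHOLDS = [0.0753, 0.1310, 0.1180, 0.0632, 0.0225, 0.0330]

# Distances between each unexpected-event trajectory and classes 1..6 (Table 5).
DISTANCES = {
    "Accident 1": [0.2084, 0.1427, 0.1713, 0.1306, 0.0296, 0.1992],
    "Veering off the track": [0.1603, 0.1991, 0.3017, 0.2068, 0.0484, 0.1556],
    "Safety car": [0.2958, 0.5200, 0.6425, 0.3088, 0.2595, 0.2788],
    "Accident 2": [0.2474, 0.3978, 0.5141, 0.2068, 0.0716, 0.2127],
}


def is_unexpected(distances, thresholds):
    """Assumed rule: unexpected if farther than the threshold from every class."""
    if len(distances) != len(thresholds):
        raise ValueError("one distance per class is required")
    return all(d > t for d, t in zip(distances, thresholds))


def detection_margin(distances, thresholds):
    """Smallest amount by which a distance exceeds its class threshold
    (negative when at least one class accepts the trajectory)."""
    return min(d - t for d, t in zip(distances, thresholds))


status = {name: is_unexpected(d, THRESHOLDS) for name, d in DISTANCES.items()}
margins = {name: detection_margin(d, THRESHOLDS) for name, d in DISTANCES.items()}
```

Under this assumed rule all four events are flagged. The margin shows how close each decision is: 'Accident 1' exceeds the threshold of class 5 by only 0.0071, so it is the least clear-cut of the four cases.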

Figure 5: Images from Formula One race video shots acquired by the same camera. Top row: images displaying instances of a regular class. Bottom rows: examples of unexpected events (safety car, car driving off the track). The trajectories are overprinted on the images.

8 CONCLUSIONS

We have proposed a trajectory-based HMM framework for video content understanding. We have shown that it is general and flexible enough to solve three tasks: unsupervised clustering of events, recognition of events corresponding to learnt classes of dynamic video contents, and detection of unexpected events. We have introduced appropriate local trajectory features invariant to translation, rotation and scale transformations, and we can reliably compute them in the presence of noise. We have conducted an extensive set of comparative experiments both on synthetic examples and real videos (sports TV programs) with classification ground truth. We have shown that our method supplies accurate results and offers better performance and usability than other approaches such as SVM classification, histogram comparison or LCSS distance. Extensions of this work will investigate the hierarchical modeling of space-time groups of trajectories in association or in interaction to represent activities in videos.

Figure 6: Images from Alpine skiing competition video shots acquired by the same camera. Trajectories are overprinted on the images. Top row: example of regular class. Bottom row: example of unexpected event (fall of a skier).

Table 4: Detection threshold is supplied in the second row for the class of ski trajectories used in the detection task of unexpected events. The following rows contain the distances between the unexpected event trajectories and the regular class. Last column shows the detection status.

                              Class 1   Status
R_1 + σ_1                     0.1434
Accident                      0.4412    detected
Skier veering off the piste   0.3307    detected

REFERENCES

F. I. Bashir, A. A. Khokhar, and D. Schonfeld. Real-time motion trajectory-based indexing and retrieval of video sequences. IEEE Trans. on Multimedia, 9(1):58–65, 2007.

O. Boiman and M. Irani. Detecting irregularities in images and in video. IEEE Int. Conf. on Computer Vision, ICCV’05, Beijing, Vol.1, pages 462–469, Oct. 2005.

C. Burges. A tutorial on support vector machines for pattern recognition. Data Mining and Knowledge Discovery, 2:121–167, 1998.


Table 5: Detection thresholds are supplied in the second row for the (learnt) classes C_i used in the detection task of unexpected events. The following rows contain the distances between the unexpected events trajectories and the regular classes. The events ’Accident 1’, ’Veering off the track’ and ’Safety car’ were shot by the camera corresponding to class 1, whereas ’Accident 2’ corresponds to class 2. Last column shows the detection status.

                        Class 1   Class 2   Class 3   Class 4   Class 5   Class 6   Status
R_i + σ_i               0.0753    0.1310    0.1180    0.0632    0.0225    0.0330
Accident 1              0.2084    0.1427    0.1713    0.1306    0.0296    0.1992    detected
Veering off the track   0.1603    0.1991    0.3017    0.2068    0.0484    0.1556    detected
Safety car              0.2958    0.5200    0.6425    0.3088    0.2595    0.2788    detected
Accident 2              0.2474    0.3978    0.5141    0.2068    0.0716    0.2127    detected

D. Buzan, S. Sclaroff, and G. Kollios. Extraction and clustering of motion trajectories in video. In Proc. of the 17th Int. Conf. on Pattern Recognition, ICPR’04, pages 521–524, Cambridge, UK, Aug. 2004.

M. T. Chan, A. Hoogs, J. Schmiederer, and M. Peterson. Detecting rare events in video using semantic primitives with HMM. In Proc. of the 17th Int. Conf. on Pattern Recognition, ICPR’04, pages 150–154, Cambridge, UK, Aug. 2004.

M. T. Chan, A. Hoogs, R. Bhotika, and A. Perera. Joint recognition of complex events and track matching. In Proc. of the IEEE Conf. on Comp. Vis. and Patt. Rec., CVPR’06, pages 694–699, New York, June 2006.

J. Ford and J. Moore. Adaptive estimation of HMM transition probabilities. IEEE Trans. on Signal Processing, 46(5):1374–1385, 1998.

P. Perez, C. Hue, J. Vermaak, and M. Gangnet. Color-based probabilistic tracking. In Proc. Europ. Conf. Computer Vision, ECCV’02, Copenhagen, June 2002.

S. Gong and T. Xiang. Recognition of group activities using dynamic probabilistic networks. In Proc. of the IEEE Int. Conf. on Computer Vision, ICCV’03, pages 742–749, Nice, Oct. 2003.

S. Hongeng, R. Nevatia, and F. Bremond. Video-based event recognition: Activity representation and probabilistic recognition methods. Computer Vision and Image Understanding, 96(2):129–162, 2003.

W. Hu, D. Xie, Z. Fu, W. Zheng, and S. Maybank. Semantic-based surveillance video retrieval. IEEE Trans. on Image Proc., 16(4):1168–1181, April 2007.

A. Kokaram, N. Rea, R. Dahyot, M. Tekalp, P. Bouthemy, P. Gros, and I. Sezan. Browsing sports video (Trends in sports-related indexing and retrieval work). IEEE Signal Processing Magazine, 23(2):47–58, March 2006.

C. Li and G. Biswas. Temporal pattern generation using hidden Markov model based unsupervised classification. Advances in Intelligent Data Analysis, Lecture Notes in Computer Science, 1642:245–256, 1999.

X. Li, W. Hu, and W. Hu. A coarse-to-fine strategy for vehicle motion trajectory clustering. In Proc. of the 18th Int. Conf. on Pattern Recognition, ICPR’06, pages 591–594, Hong Kong, Aug. 2006.

F. Porikli. Trajectory distance metric using hidden Markov model based representation. In PETS Workshop, Prague, May 2004.

L. Rabiner. A tutorial on hidden Markov models and selected applications in speech recognition. Proc. IEEE, 77(2):257–285, 1989.

C. Stauffer and W. E. L. Grimson. Learning patterns of activity using real-time tracking. IEEE Trans. Pattern Analysis and Machine Intelligence, 22(8):747–757, Aug. 2000.

M. Vlachos, G. Kollios, and D. Gunopulos. Discovering similar multidimensional trajectories. In Proc. of the 18th International Conference on Data Engineering, San Jose, Feb. 2002.

X. Wang, K. Tieu, and E. Grimson. Learning semantic scene models by trajectory analysis. In Proc. Europ. Conf. Computer Vision, ECCV’06, Graz, May 2006.
