A Scalarized Augmented Lagrangian Algorithm (SCAL) for Multi-objective Optimization Constrained Problems

Lino Costa¹,², Isabel Espírito Santo¹,² and Pedro Oliveira³,⁴

¹ Algoritmi R&D Center, University of Minho, Portugal

² Department of Production and Systems Engineering, University of Minho, Portugal

³ Instituto de Ciências Biomédicas Abel Salazar, Universidade do Porto, Portugal

⁴ ISPUP-EPIUnit, Universidade do Porto, Rua das Taipas, nº 135, 4050-600 Porto, Portugal

Keywords: Multi-objective Constrained Optimization, Augmented Weighted Tchebycheff, Pattern Search, Augmented Lagrangian.

Abstract: In this paper, a methodology to solve constrained multi-objective problems is presented, using an Augmented Lagrangian technique to deal with the constraints and the Augmented Weighted Tchebycheff method to tackle the multi-objective problem and find the Pareto Frontier. We present the algorithm, as well as some preliminary results that seem very promising when compared to previous state-of-the-art work. As far as we know, the idea of incorporating an Augmented Lagrangian in multi-objective optimization is rarely used, so the obtained results encourage further work in this line of investigation, namely the tuning of the Augmented Lagrangian parameters and the testing of other algorithms to solve the subproblems or to handle the multi-objective problems. It is also our intention to investigate the solution of problems with three or more objectives.

1 INTRODUCTION

A multi-objective optimization (MO) problem with $m$ objectives and $n$ decision variables can, without loss of generality, be mathematically formulated as follows:

$$
\begin{aligned}
\text{minimize:} \quad & f(x) = (f_1(x), f_2(x), \ldots, f_m(x))^T \\
\text{subject to:} \quad & c_i(x) = 0, \quad i = 1, \ldots, q \\
& g_j(x) \geq 0, \quad j = 1, \ldots, p \\
& x \in \Omega
\end{aligned}
\tag{1}
$$

where $x$ is the decision vector, $\Omega \subseteq \mathbb{R}^n$ is the feasible decision space, $f(x)$ is the objective vector defined in the objective space $\mathbb{R}^m$, $c(x) = 0$ are the equality constraints and $g(x) \geq 0$ are the inequality constraints.

When several objectives are optimized at the same time, the search space becomes partially ordered. In such a scenario, solutions are compared on the basis of Pareto dominance. For two solutions $a$ and $b$ from $\Omega$, $a$ is said to dominate $b$ (denoted by $a \prec b$) if:

$$
\forall i \in \{1, \ldots, m\} : f_i(a) \leq f_i(b) \;\wedge\; \exists j \in \{1, \ldots, m\} : f_j(a) < f_j(b). \tag{2}
$$
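As an illustration only (it is not part of the original implementation), the dominance relation of Eq. (2) can be sketched in Python as follows. The function name `dominates` is hypothetical, and minimization of all objectives is assumed, as in problem (1).

```python
from typing import Sequence


def dominates(fa: Sequence[float], fb: Sequence[float]) -> bool:
    """Return True if objective vector fa Pareto-dominates fb (minimization)."""
    if len(fa) != len(fb):
        raise ValueError("objective vectors must have the same length")
    # fa must be no worse than fb in every objective ...
    no_worse = all(a <= b for a, b in zip(fa, fb))
    # ... and strictly better in at least one objective.
    strictly_better = any(a < b for a, b in zip(fa, fb))
    return no_worse and strictly_better
```

Note that two identical objective vectors do not dominate each other, and neither do two vectors that each win in a different objective.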

Since solutions are compared against different objectives, there is no longer a single optimal solution but a set of optimal solutions, generally known as the Pareto optimal set. This set contains equally important solutions representing different trade-offs between the given objectives and can be defined as:

$$
PS = \{x \in \Omega \mid \nexists\, y \in \Omega : y \prec x\}. \tag{3}
$$

Approximating the Pareto optimal set is the main goal in multi-objective optimization.
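For a finite sample of objective vectors, definition (3) reduces to a simple filter that keeps the points not dominated by any other point in the sample. The following is a minimal sketch under that assumption; the name `nondominated` is hypothetical and the quadratic-time loop is chosen for clarity rather than efficiency.

```python
from typing import List, Sequence


def nondominated(points: Sequence[Sequence[float]]) -> List[int]:
    """Indices of the points that no other point dominates (minimization)."""

    def dom(a: Sequence[float], b: Sequence[float]) -> bool:
        # a dominates b: no worse everywhere and strictly better somewhere.
        return (all(x <= y for x, y in zip(a, b))
                and any(x < y for x, y in zip(a, b)))

    # A point never dominates itself, so it need not be excluded explicitly.
    return [k for k, p in enumerate(points)
            if not any(dom(q, p) for q in points)]
```

Duplicated points are all retained, since equal objective vectors do not dominate one another.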

Constraint handling in multi-objective optimization is still a very challenging research endeavor. Constraints are present in every real-world problem and, so far, no single approach to handling them has proved superior. The inclusion of constraints, in particular equality constraints, further complicates multi-objective optimization, since it can greatly transform the Pareto Frontier and thus make its approximation much more difficult.

In this paper, a Scalarized Augmented Lagrangian Algorithm (SCAL) for constrained multi-objective optimization problems is presented and tested on several constrained problems.

Costa, L., Santo, I. and Oliveira, P.

A Scalarized Augmented Lagrangian Algorithm (SCAL) for Multi-objective Optimization Constrained Problems.

DOI: 10.5220/0006720603350340

In Proceedings of the 7th International Conference on Operations Research and Enterprise Systems (ICORES 2018), pages 335-340

ISBN: 978-989-758-285-1

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


2 AUGMENTED WEIGHTED TCHEBYCHEFF METHODS

The main goal of MO is to obtain the set of Pareto-optimal solutions that correspond to different trade-offs between objectives. In this study, a scalarization method is used to obtain an approximation to the Pareto-optimal set (Miettinen, 1999).

The Augmented Weighted Tchebycheff method, proposed by Steuer and Choo (Steuer and Choo, 1983), has the advantage that it can converge to non-extreme final solutions and may also be applicable to nonlinear and nonconvex multi-objective optimization problems. The Augmented Weighted Tchebycheff method can be formulated as:

$$
\min_{x} \; \max_{i = 1, \ldots, m} \left[ w_i \, |f_i(x) - z_i^\star| \right] + \rho \sum_{i=1}^{m} |f_i(x) - z_i^\star| \tag{4}
$$

where $w_i$ are the weighting coefficients for objective $i$, $z_i^\star$ are the components of a reference point, and $\rho$ is a small positive value (Dächert et al., 2012). The solutions of the optimization problem defined by Eq. (4) are Pareto-optimal solutions. Different combinations of weights allow computing approximations to the Pareto-optimal set using a single-objective optimization method. An approximation to the ideal vector can be used as the reference point, $z^\star = (z_1^\star, \ldots, z_m^\star)^T = (\min f_1, \ldots, \min f_m)^T$.
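To make the scalarization concrete, the following minimal sketch evaluates the function in Eq. (4) for a given objective vector and generates evenly spaced bi-objective weight vectors. The names `augmented_tchebycheff` and `weight_grid`, the default value of `rho`, and the uniform spacing of the weights are illustrative assumptions; the paper does not specify how the weights are chosen.

```python
from typing import List, Sequence


def augmented_tchebycheff(f: Sequence[float], w: Sequence[float],
                          z_star: Sequence[float], rho: float = 1e-3) -> float:
    """Augmented weighted Tchebycheff value of the objective vector f.

    rho = 1e-3 is a hypothetical default for the 'small positive value'.
    """
    dev = [abs(fi - zi) for fi, zi in zip(f, z_star)]
    # Weighted max-norm term plus the small augmentation term.
    return max(wi * d for wi, d in zip(w, dev)) + rho * sum(dev)


def weight_grid(n_weights: int) -> List[List[float]]:
    """Evenly spaced weight vectors (w1, 1 - w1) for two objectives."""
    if n_weights < 2:
        raise ValueError("at least two weight vectors are required")
    return [[k / (n_weights - 1), 1.0 - k / (n_weights - 1)]
            for k in range(n_weights)]
```

Minimizing `augmented_tchebycheff` once for each vector returned by `weight_grid` would yield one candidate point of the Pareto-front approximation per weight vector.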

3 AUGMENTED LAGRANGIAN TECHNIQUE USING THE HOOKE AND JEEVES PATTERN SEARCH METHOD

The Augmented Lagrangian technique presented herein solves a sequence of simple subproblems whose objective function penalizes all or some of the constraint violations. This objective function is an Augmented Lagrangian that depends on a penalty parameter and on the multiplier vectors, and is based on the ideas presented in (Bertsekas, 1999; Conn et al., 1991; Lewis and Torczon, 2002):

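$$
\Phi(x; \lambda, \delta, \mu) = f(x) + \lambda^T c(x) + \frac{1}{2\mu} \|c(x)\|^2 + \frac{\mu}{2} \left( \left\| \left[ \delta + \frac{g(x)}{\mu} \right]_+ \right\|^2 - \|\delta\|^2 \right) \tag{5}
$$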

where $\mu$ is a positive penalty parameter, and $\lambda = (\lambda_1, \ldots, \lambda_q)^T$ and $\delta = (\delta_1, \ldots, \delta_p)^T$ are the Lagrange multiplier vectors associated with the equality and inequality constraints, respectively. Function $\Phi$ aims to penalize solutions that violate the equality and inequality constraints, not including the simple bounds $l \leq x \leq u$. To force feasibility, the inner iterative process must return an approximate solution that satisfies the bound constraints. While using the Hooke and Jeeves version of the pattern search (Hooke and Jeeves, 1961), any computed solution $x$ that does not satisfy the bounds is projected onto the set $\Omega$ component by component (for all $i = 1, \ldots, n$) as follows:

$$
x_i = \begin{cases}
l_i & \text{if } x_i < l_i \\
x_i & \text{if } l_i \leq x_i \leq u_i \\
u_i & \text{if } x_i > u_i
\end{cases} \tag{6}
$$
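The projection in Eq. (6) amounts to clipping each component to its bounds. A minimal sketch, with the hypothetical name `project_onto_bounds`, is:

```python
from typing import List, Sequence


def project_onto_bounds(x: Sequence[float], lower: Sequence[float],
                        upper: Sequence[float]) -> List[float]:
    """Clip each component x_i to the interval [l_i, u_i], as in Eq. (6)."""
    return [min(max(xi, li), ui) for xi, li, ui in zip(x, lower, upper)]
```

Components that already satisfy their bounds are returned unchanged.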

The corresponding subproblem is then formulated as:

$$
\underset{x \in \Omega}{\text{minimize}} \;\; \Phi(x; \lambda^j, \delta^j, \mu^j) \tag{7}
$$

where, for each set of fixed $\lambda^j$, $\delta^j$ and $\mu^j$, the solution of subproblem (7) provides an approximation $x^j$ to the problem formulated in Eq. (4), and the index $j$ is the iteration counter of the outer iterative process. We refer to (Bertsekas, 1999) for details. In practice, common safeguarded schemes keep the sequence of penalty parameters away from zero so that solving subproblem (7) remains an easy task.
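As a sketch of how the objective of subproblem (7) could be evaluated, the function below implements Eq. (5) for user-supplied callables. It assumes, as the bracket $[\cdot]_+$ in Eq. (5) and the violation term in Eq. (8) suggest, that an inequality constraint counts as violated when $g_i(x) > 0$. The name `augmented_lagrangian` and the callable-based interface are hypothetical; `f` stands for the scalarized objective of Eq. (4).

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]


def augmented_lagrangian(x: Vector,
                         f: Callable[[Vector], float],
                         c: Callable[[Vector], List[float]],
                         g: Callable[[Vector], List[float]],
                         lam: Vector, delta: Vector, mu: float) -> float:
    """Evaluate Phi(x; lam, delta, mu) of Eq. (5).

    Assumption: inequality i is treated as violated when g(x)[i] > 0.
    """
    if mu <= 0.0:
        raise ValueError("the penalty parameter mu must be positive")
    cx, gx = c(x), g(x)
    # Equality part: lam^T c(x) + ||c(x)||^2 / (2 mu).
    eq_term = (sum(l * ci for l, ci in zip(lam, cx))
               + sum(ci * ci for ci in cx) / (2.0 * mu))
    # Inequality part: (mu / 2) * (||[delta + g(x)/mu]_+||^2 - ||delta||^2).
    shifted = [max(0.0, d + gi / mu) for d, gi in zip(delta, gx)]
    ineq_term = 0.5 * mu * (sum(s * s for s in shifted)
                            - sum(d * d for d in delta))
    return f(x) + eq_term + ineq_term
```

With zero inequality multipliers, a point that strictly satisfies an inequality under this convention contributes nothing to the inequality term, so only violated constraints are penalized.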

To evaluate the equality and inequality constraint violation, and the complementarity, the following error function is used:

$$
E(x, \delta) = \max \left\{ \frac{\|c(x)\|_\infty}{1 + \|x\|}, \; \frac{\|[g(x)]_+\|_\infty}{1 + \|\delta\|}, \; \frac{\max_i \delta_i |g_i(x)|}{1 + \|\delta\|} \right\}. \tag{8}
$$
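The error function (8) can be sketched as below. The unsubscripted norms $\|x\|$ and $\|\delta\|$ are assumed here to be Euclidean, which the text does not state, and the name `error_measure` is hypothetical. The constraint values `cx` and `gx` are passed in already evaluated at `x`.

```python
import math
from typing import Sequence


def error_measure(x: Sequence[float], cx: Sequence[float],
                  gx: Sequence[float], delta: Sequence[float]) -> float:
    """Scaled feasibility and complementarity error E(x, delta) of Eq. (8).

    Assumption: ||x|| and ||delta|| are Euclidean norms.
    """
    norm_x = math.sqrt(sum(v * v for v in x))
    norm_d = math.sqrt(sum(v * v for v in delta))
    # Largest equality violation, scaled by 1 + ||x||.
    eq_viol = max((abs(ci) for ci in cx), default=0.0) / (1.0 + norm_x)
    # Largest positive part of g(x), scaled by 1 + ||delta||.
    ineq_viol = max((max(0.0, gi) for gi in gx), default=0.0) / (1.0 + norm_d)
    # Largest complementarity product delta_i * |g_i(x)|.
    compl = max((d * abs(gi) for d, gi in zip(delta, gx)),
                default=0.0) / (1.0 + norm_d)
    return max(eq_viol, ineq_viol, compl)
```

The `default=0.0` arguments let the same function handle problems without equality or without inequality constraints.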

The Lagrange multipliers $\lambda^j$ and $\delta^j$ are estimated in this iterative process using the first-order updating formulae

$$
\bar{\lambda}_i^{j+1} = \lambda_i^j + \frac{c_i(x^j)}{\mu^j}, \quad i = 1, \ldots, q \tag{9}
$$

and

$$
\bar{\delta}_i^{j+1} = \max \left\{ 0, \; \delta_i^j + \frac{g_i(x^j)}{\mu^j} \right\}, \quad i = 1, \ldots, p \tag{10}
$$

where, for all $j \in \mathbb{N}$, with $i = 1, \ldots, q$ for the equality multipliers and $i = 1, \ldots, p$ for the inequality multipliers, $\lambda_i^{j+1}$ is the projection of $\bar{\lambda}_i^{j+1}$ on the interval $[\lambda_{\min}, \lambda_{\max}]$ and $\delta_i^{j+1}$ is the projection of $\bar{\delta}_i^{j+1}$ on the interval $[0, \delta_{\max}]$, where $-\infty < \lambda_{\min} \leq \lambda_{\max} < \infty$ and $0 \leq \delta_{\max} < \infty$. After the new approximation $x^j$ has been computed, the Lagrange multiplier vector $\delta$ associated with the inequality constraints is updated, in all iterations, since $\delta^{j+1}$ is required in the error function (8) to measure constraint violation and complementarity. We note that the Lagrange multipliers $\lambda_i$, $i = 1, \ldots, q$, are updated only when feasibility and complementarity are at a satisfactory level, herein defined by the condition

$$
E(x^j, \delta^{j+1}) \leq \eta^j \tag{11}
$$

ICORES 2018 - 7th International Conference on Operations Research and Enterprise Systems


CF1

-0.1

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

(a) CF1

CF2

0.05

0.1

0.15

0.2

0.25

0.3

0.35

0.4

0.45

0.5

0.55

(b) CF2

CF3

0.2

0.3

0.4

0.5

0.6

0.7

0.8

(c) CF3

CF4

0

0.5

1

1.5

2

2.5

3

(d) CF4

CF5

0.1

0.2

0.3

0.4

0.5

0.6

(e) CF5

CF6

0.03

0.035

0.04

0.045

0.05

0.055

(f) CF6

CF7

0.1

0.2

0.3

0.4

0.5

0.6

(g) CF7

BNH

1.35

1.4

1.45

1.5

1.55

1.6

1.65

(h) BHN

CONSTR

-1

0

1

2

3

4

5

6

(i) CONSTR

OSY

66

68

70

72

74

76

(j) OSY

SRN

5

10

15

20

(k) SRN

TNK

-0.2

-0.1

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

(l) TNK

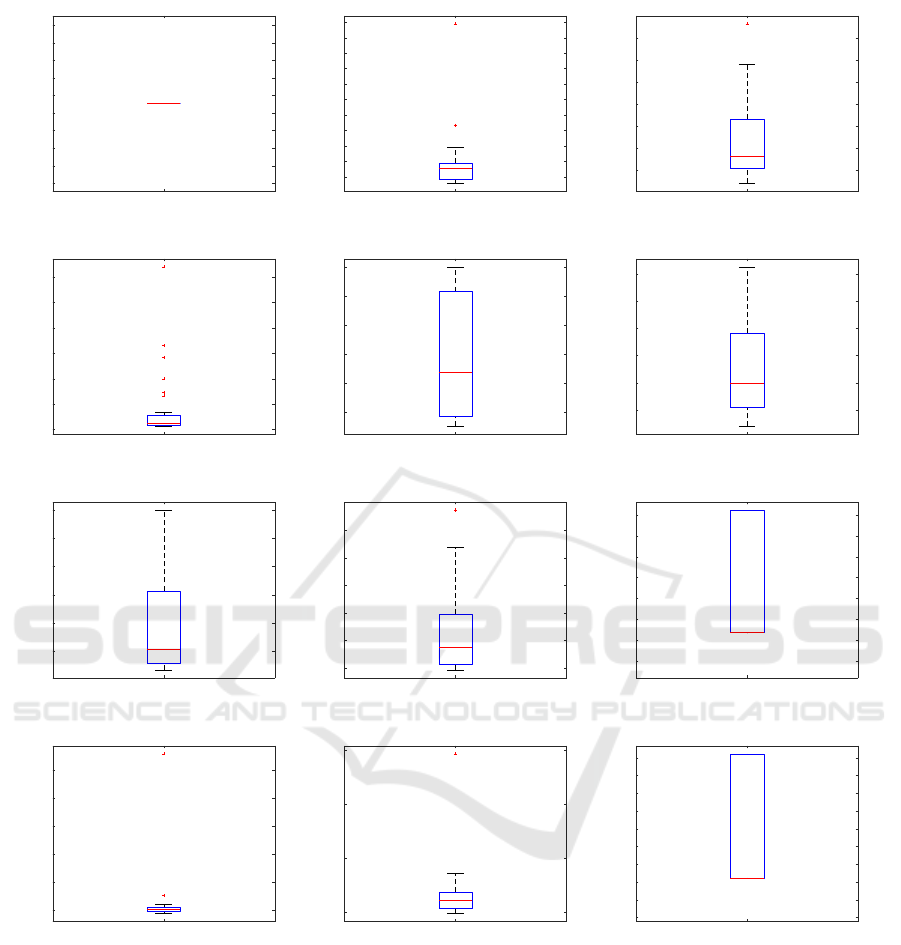

Figure 1: Boxplots of IGD on test problems.

for a positive tolerance $\eta^j$. It is required that $\{\eta^j\}$ defines a decreasing sequence of positive values converging to zero as $j \to \infty$. This is easily achieved by $\eta^{j+1} = \pi \eta^j$ for $0 < \pi < 1$.
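The safeguarded first-order updates (9) and (10) can be sketched as two small functions, kept separate because the inequality multipliers are updated at every outer iteration while the equality multipliers are updated only when condition (11) holds. The function names and the default safeguard bounds (`1e12`) are hypothetical; the paper does not report the values of $\lambda_{\min}$, $\lambda_{\max}$ and $\delta_{\max}$.

```python
from typing import List, Sequence


def _clip(value: float, low: float, high: float) -> float:
    """Project a scalar onto the interval [low, high]."""
    return min(max(value, low), high)


def update_delta(delta: Sequence[float], gx: Sequence[float], mu: float,
                 delta_max: float = 1e12) -> List[float]:
    """Eq. (10) followed by projection onto [0, delta_max]."""
    return [_clip(max(0.0, d + gi / mu), 0.0, delta_max)
            for d, gi in zip(delta, gx)]


def update_lambda(lam: Sequence[float], cx: Sequence[float], mu: float,
                  lam_min: float = -1e12, lam_max: float = 1e12) -> List[float]:
    """Eq. (9) followed by projection onto [lam_min, lam_max]."""
    return [_clip(l + ci / mu, lam_min, lam_max)
            for l, ci in zip(lam, cx)]
```

Here `cx` and `gx` denote the constraint values $c(x^j)$ and $g(x^j)$ at the current approximation, and `mu` is the current penalty parameter $\mu^j$.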

We consider that an iteration $j$ failed to provide an approximation $x^j$ with an appropriate level of feasibility and complementarity if condition (11) does not hold. In this case, the penalty parameter is decreased using $\mu^{j+1} = \gamma \mu^j$, where $0 < \gamma < 1$. When condition (11) holds, the iteration is considered satisfactory: the iterate $x^j$ is feasible and the complementarity condition is satisfied within the tolerance $\eta^j$ and, consequently, the algorithm maintains the penalty parameter value. We remark that when (11) fails to hold infinitely many times, the sequence of penalty parameters tends to zero. To define an algorithm where the sequence $\{\mu^j\}$ does not reach zero, the following update is used instead:

$$
\mu^{j+1} = \max\{\mu_{\min}, \gamma \mu^j\}, \tag{12}
$$


0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

f

1

(x)

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

f

2

(x)

(a) CF1

0 0.5 1 1.5 2 2.5

f

1

(x)

0

0.2

0.4

0.6

0.8

1

1.2

f

2

(x)

CF2

(b) CF2

0 0.2 0.4 0.6 0.8 1 1.2

f

1

(x)

0

0.5

1

1.5

2

2.5

f

2

(x)

CF3

(c) CF3

0 1 2 3 4 5 6 7 8

f

1

(x)

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

1.1

f

2

(x)

CF4

(d) CF4

0 0.2 0.4 0.6 0.8 1 1.2 1.4 1.6 1.8 2

f

1

(x)

0

0.5

1

1.5

2

2.5

f

2

(x)

CF5

(e) CF5

0 0.2 0.4 0.6 0.8 1 1.2

f

1

(x)

0

0.5

1

1.5

2

2.5

f

2

(x)

CF6

(f) CF6

0 0.2 0.4 0.6 0.8 1 1.2

f

1

(x)

0

0.2

0.4

0.6

0.8

1

1.2

1.4

1.6

1.8

f

2

(x)

CF7

(g) CF7

0 20 40 60 80 100 120 140

f

1

(x)

0

5

10

15

20

25

30

35

40

45

50

f

2

(x)

BNH

(h) BNH

0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

f

1

(x)

1

2

3

4

5

6

7

8

9

f

2

(x)

CONSTR

(i) CONSTR

-300 -250 -200 -150 -100 -50 0

f

1

(x)

0

10

20

30

40

50

60

70

80

f

2

(x)

OSY

(j) OSY

0 50 100 150 200 250

f

1

(x)

-250

-200

-150

-100

-50

0

50

f

2

(x)

SRN

(k) SRN

0 0.2 0.4 0.6 0.8 1 1.2

f

1

(x)

0

0.2

0.4

0.6

0.8

1

1.2

f

2

(x)

TNK

(l) TNK

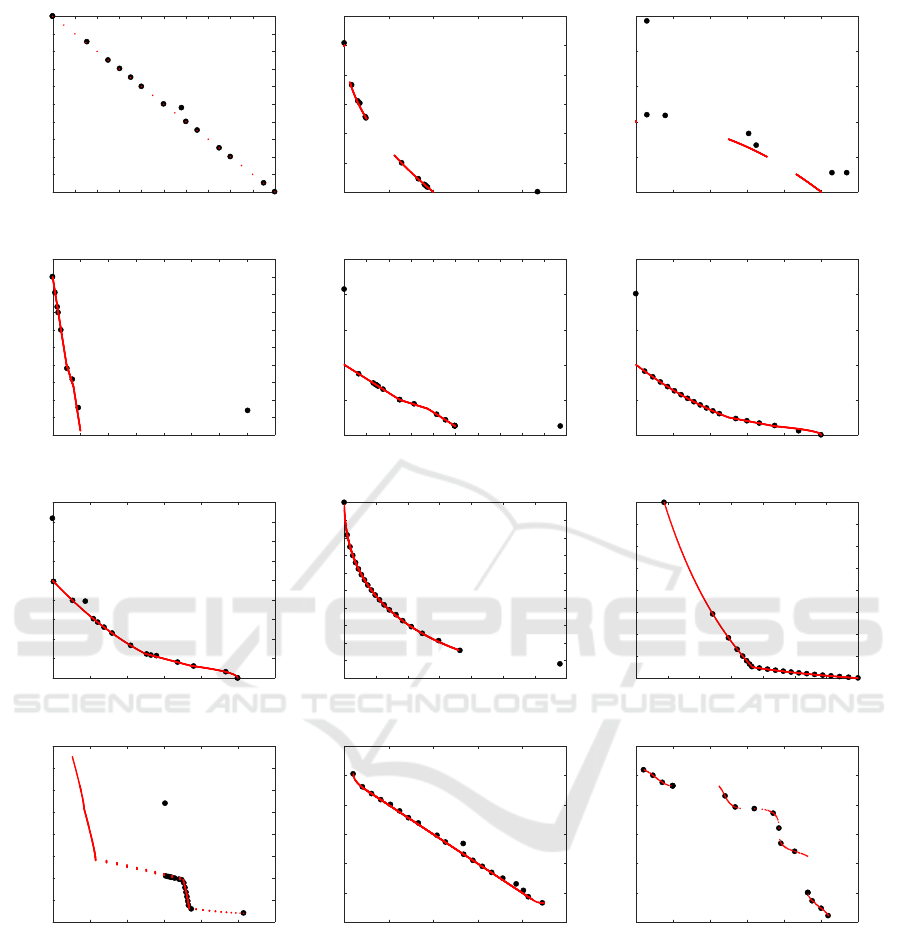

Figure 2: Illustrations of the Pareto fronts and an example run on test problems.

where $\mu_{\min}$ is a sufficiently small positive real value.
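The outer-iteration logic built on condition (11) and update (12) can be summarized in a short sketch. The name `update_penalty_and_tolerance` and the default values of `gamma`, `pi` and `mu_min` are hypothetical, since the parameter values are not reported here. The sketch also assumes that the tolerance $\eta^j$ is reduced at every outer iteration; the text gives only the recurrence $\eta^{j+1} = \pi \eta^j$.

```python
from typing import Tuple


def update_penalty_and_tolerance(error: float, mu: float, eta: float,
                                 gamma: float = 0.5, pi: float = 0.5,
                                 mu_min: float = 1e-12
                                 ) -> Tuple[bool, float, float]:
    """Return (satisfactory, next mu, next eta) for one outer iteration.

    error is E(x^j, delta^{j+1}) from Eq. (8); gamma, pi and mu_min are
    illustrative values, not those used in the reported experiments.
    """
    satisfactory = error <= eta          # condition (11)
    if satisfactory:
        new_mu = mu                      # keep the penalty parameter
    else:
        new_mu = max(mu_min, gamma * mu) # safeguarded decrease, Eq. (12)
    new_eta = pi * eta                   # assumed to shrink every iteration
    return satisfactory, new_mu, new_eta
```

The returned flag can be used to decide whether the equality multipliers are updated in the same iteration.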

In our algorithm, $\Delta^k s^k$ is computed by the Hooke and Jeeves (HJ) search method (Hooke and Jeeves, 1961). This algorithm differs from the traditional coordinate search since it performs two types of moves: the exploratory move and the pattern move. An exploratory move is a coordinate search (a search along the coordinate axes) around a selected approximation, using a step length $\Delta^k$. A pattern move is a promising direction that is defined by $z^k - z^{k-1}$ when the previous iteration was successful and $z^k$ was accepted as the new approximation. A new trial approximation is then defined as $z^k + (z^k - z^{k-1})$ and an exploratory move is then carried out around this trial point. If this search is successful, the new approximation is accepted as $z^{k+1}$. We refer to (Hooke and Jeeves, 1961; Lewis and Torczon, 1999) for details. This HJ iterative procedure terminates, providing a new approximation $x^j$ to problem (4), $x^j \leftarrow z^{k+1}$, when the following stopping condition is satisfied,


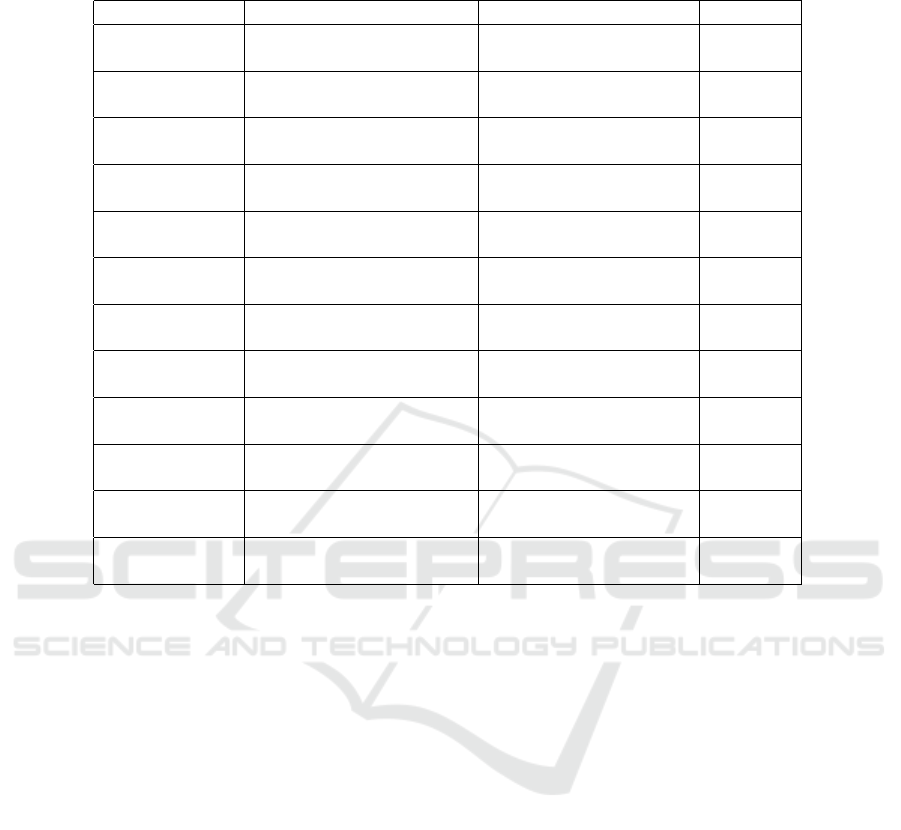

Table 1: Performance of MOEA/D-IEpsilon (SBX), MOEA/D-IEpsilon (DE), and SCAL on the CF1-CF7, BNH, CONSTR, OSY, SRN and TNK problems in terms of the mean and standard deviation (std) of IGD.

| Problem | Statistic | MOEA/D-IEpsilon (SBX) | MOEA/D-IEpsilon (DE) | SCAL |
|---|---|---|---|---|
| CF1 | mean | 6.06E-03 | 5.13E-03 | 3.54E-01 |
| | std | 1.36E-03 | 9.43E-04 | 1.13E-16 |
| CF2 | mean | 1.04E-01 | 1.41E-02 | 9.49E-02 |
| | std | 4.62E-02 | 2.15E-02 | 9.46E-02 |
| CF3 | mean | 3.15E-01 | 2.33E-01 | 3.30E-01 |
| | std | 1.25E-01 | 1.47E-01 | 1.79E-01 |
| CF4 | mean | 1.18E-01 | 2.73E-02 | 3.97E-01 |
| | std | 3.12E-02 | 9.17E-03 | 6.65E-01 |
| CF5 | mean | 2.91E-01 | 2.49E-01 | 2.94E-01 |
| | std | 1.33E-01 | 1.09E-01 | 2.00E-01 |
| CF6 | mean | 1.34E-01 | 6.46E-02 | 3.73E-02 |
| | std | 7.21E-02 | 3.59E-02 | 8.14E-03 |
| CF7 | mean | 2.48E-01 | 2.08E-01 | 1.96E-01 |
| | std | 8.81E-02 | 8.50E-02 | 1.77E-01 |
| BNH | mean | 3.91E-01 | 4.59E-01 | 1.41E+00 |
| | std | 1.17E-01 | 1.11E-01 | 7.81E-02 |
| CONSTR | mean | 8.33E-03 | 1.28E-02 | 2.10E+00 |
| | std | 9.96E-04 | 1.29E-03 | 2.75E+00 |
| OSY | mean | 3.86E+00 | 4.31E+00 | 6.51E+00 |
| | std | 5.60E-01 | 8.67E-01 | 2.19E+00 |
| SRN | mean | 3.54E-01 | 3.56E-01 | 6.62E+00 |
| | std | 5.89E-03 | 8.26E-03 | 2.63E+00 |
| TNK | mean | 2.21E-03 | 1.82E-03 | 3.00E-01 |
| | std | 9.08E-05 | 4.47E-05 | 3.36E-01 |

$\Delta^k \leq \varepsilon^j$. However, if this condition cannot be satisfied in $k_{\max}$ iterations, then the procedure is stopped with the last available approximation.

A more detailed explanation can be found in (Costa et al., 2012).
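The inner solver described in this section can be sketched as a bound-constrained Hooke and Jeeves search. This is a simplified illustration, not the implementation used in the experiments: the name `hooke_jeeves`, the step-halving factor `shrink`, and the default values of `step`, `eps` and `k_max` are assumptions. Trial points are projected onto the bounds as in Eq. (6), and the search stops when the step length falls below `eps` or after `k_max` iterations.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]


def hooke_jeeves(phi: Callable[[Vector], float], x0: Sequence[float],
                 lower: Sequence[float], upper: Sequence[float],
                 step: float = 1.0, eps: float = 1e-6,
                 k_max: int = 10_000, shrink: float = 0.5
                 ) -> Tuple[Vector, float]:
    """Minimize phi over the box [lower, upper] by exploratory/pattern moves."""

    def project(x: Sequence[float]) -> Vector:
        return [min(max(xi, li), ui) for xi, li, ui in zip(x, lower, upper)]

    def explore(base: Vector, f_base: float, delta: float
                ) -> Tuple[Vector, float]:
        # Coordinate search: try +delta then -delta along each axis.
        x, fx = list(base), f_base
        for i in range(len(x)):
            for sign in (1.0, -1.0):
                trial = list(x)
                trial[i] += sign * delta
                trial = project(trial)
                f_trial = phi(trial)
                if f_trial < fx:
                    x, fx = trial, f_trial
                    break
        return x, fx

    z = project(x0)
    fz = phi(z)
    prev: Optional[Vector] = None   # previous accepted point, if any
    delta = step
    for _ in range(k_max):
        if delta <= eps:            # stopping condition on the step length
            break
        if prev is None:
            base, f_base = z, fz
        else:
            # Pattern move: trial point z + (z - prev), projected onto bounds.
            base = project([2.0 * a - b for a, b in zip(z, prev)])
            f_base = phi(base)
        cand, f_cand = explore(base, f_base, delta)
        if f_cand < fz:
            prev, z, fz = z, cand, f_cand   # successful iteration
        elif prev is not None:
            prev = None                     # pattern move failed; retry at z
        else:
            delta *= shrink                 # exploratory move failed
    return z, fz
```

In an Augmented Lagrangian setting, `phi` would be the function $\Phi(\cdot; \lambda^j, \delta^j, \mu^j)$ with the multipliers and penalty parameter held fixed, and the returned point would play the role of $x^j$.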

4 RESULTS AND DISCUSSION

In order to test the Augmented Lagrangian in constraint handling, several multi-objective test problems are used: CF1-CF7 (Zhang et al., 2009), BNH (Binh and Korn, 1997), CONSTR (Deb, 2001), OSY (Osyczka and Kundu, 1995), SRN (Srinivas and Deb, 1994), and TNK (Tanaka et al., 1995). Furthermore, the results of this new approach are compared with those of the MOEA/D-IEpsilon algorithm, using simulated binary crossover (SBX) and differential evolution crossover (DE) operators, since this was the best of all the algorithms tested in (Fan et al., 2017). The comparison is based on the Inverted Generational Distance (IGD) measure, which evaluates algorithm performance in terms of convergence to the Pareto front as well as the diversity of the approximation along the frontier. For this purpose, it is mandatory to know the exact definition of the Pareto Frontier. These are preliminary results and no thorough study has been made of the parameters of the Augmented Lagrangian. Figure 1 presents the box plots of 30 executions for each problem, and Figure 2 shows the Pareto Frontier (line) for the different problems together with the approximation (dots in the graphs) resulting from one of the executions. It can be observed that the algorithm closely approximates the Pareto Frontier, exhibiting some extreme points which have a large impact on the IGD measure. Table 1 presents the results of the new approach and the published results of the MOEA/D-IEpsilon algorithm (Fan et al., 2017). Although the new algorithm attains the lowest mean IGD in only two of the problems (CF6 and CF7), these results seem promising given that the Augmented Lagrangian parameters have not yet been investigated in depth.
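For reference, a minimal sketch of the IGD computation is given below, assuming the common definition in which IGD is the average Euclidean distance from each point of a sampled reference Pareto front to its nearest point in the approximation. The name `igd` is hypothetical, and the sampling of the reference front is left to the caller.

```python
import math
from typing import Sequence


def igd(reference_front: Sequence[Sequence[float]],
        approximation: Sequence[Sequence[float]]) -> float:
    """Mean distance from each reference point to its nearest approximation point."""
    if not reference_front or not approximation:
        raise ValueError("both point sets must be non-empty")
    total = 0.0
    for r in reference_front:
        # Distance from reference point r to the closest obtained point.
        total += min(math.dist(r, a) for a in approximation)
    return total / len(reference_front)
```

Under this definition, lower values are better, and a single reference point that is far from every obtained solution can raise the value considerably, which is one way isolated regions of the front can have a large impact on the measure.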

5 CONCLUSIONS

In this work, a new approach for dealing with constraints in multi-objective optimization problems is proposed, based on the Augmented Lagrangian coupled with the Augmented Weighted Tchebycheff method. Although the reported results are preliminary, they are very encouraging. Therefore, future work will address the study of the parameters of the Augmented Lagrangian, the use of an achievement scalarizing function, and the testing of the algorithm on problems with three objectives.

ACKNOWLEDGEMENTS

This work has been supported by the Portuguese Foundation for Science and Technology (FCT) in the scope of the project UID/CEC/00319/2013 (ALGORITMI R&D Center).

REFERENCES

Bertsekas, D. P. (1999). Nonlinear Programming. Athena Scientific, Belmont, 2nd edition.

Binh, T. T. and Korn, U. (1997). MOBES: A multiobjective evolution strategy for constrained optimization problems. In Proceedings of the Third International Conference on Genetic Algorithms, pages 176–182.

Conn, A. R., Gould, N. I. M., and Toint, P. L. (1991). A globally convergent augmented Lagrangian algorithm for optimization with general constraints and simple bounds. SIAM Journal on Numerical Analysis, 28(2):545–572.

Costa, L., Santo, I. A. E., and Fernandes, E. M. (2012). A hybrid genetic pattern search augmented Lagrangian method for constrained global optimization. Applied Mathematics and Computation, 218(18):9415–9426.

Dächert, K., Gorski, J., and Klamroth, K. (2012). An augmented weighted Tchebycheff method with adaptively chosen parameters for discrete bicriteria optimization problems. Computers & Operations Research, 39(12):2929–2943.

Deb, K. (2001). Multi-Objective Optimization using Evolutionary Algorithms. Wiley-Interscience Series in Systems and Optimization. John Wiley & Sons.

Fan, Z., Fang, Y., Li, W., Lu, J., Cai, X., and Wei, C. (2017). A comparative study of constrained multi-objective evolutionary algorithms on constrained multi-objective optimization problems. In 2017 IEEE Congress on Evolutionary Computation (CEC), pages 209–216.

Hooke, R. and Jeeves, T. A. (1961). Direct search solution of numerical and statistical problems. Journal of the Association for Computing Machinery, 8:212–229.

Lewis, R. and Torczon, V. (1999). Pattern search algorithms for bound constrained minimization. SIAM Journal on Optimization, 9(4):1082–1099.

Lewis, R. M. and Torczon, V. (2002). A globally convergent augmented Lagrangian pattern search algorithm for optimization with general constraints and simple bounds. SIAM Journal on Optimization, 12(4):1075–1089.

Miettinen, K. (1999). Nonlinear Multiobjective Optimization. Kluwer Academic Publishers, Boston.

Osyczka, A. and Kundu, S. (1995). A new method to solve generalized multicriteria optimization problems using the simple genetic algorithm. Structural Optimization, 10(2):94–99.

Srinivas, N. and Deb, K. (1994). Multiobjective optimization using nondominated sorting in genetic algorithms. Evolutionary Computation, 2(3):221–248.

Steuer, R. E. and Choo, E.-U. (1983). An interactive weighted Tchebycheff procedure for multiple objective programming. Mathematical Programming, 26(3):326–344.

Tanaka, M., Watanabe, H., Furukawa, Y., and Tanino, T. (1995). GA-based decision support system for multicriteria optimization. In 1995 IEEE International Conference on Systems, Man and Cybernetics. Intelligent Systems for the 21st Century, volume 2, pages 1556–1561.

Zhang, Q., Zhou, A., Zhao, S., Suganthan, P. N., Liu, W., and Tiwari, S. (2009). Multiobjective optimization test instances for the CEC 2009 special session and competition. Technical Report CES-487, University of Essex, UK.
