Background-Invariant Robust Hand Detection based on Probabilistic One-Class Color Segmentation and Skeleton Matching

Andrey Kopylov, Oleg Seredin, Olesia Kushnir, Inessa Gracheva and Aleksandr Larin

Institute of Applied Mathematics and Computer Science, Tula State University, Tula, Russian Federation

Keywords:

Hand Detection, One-class Classification, Pixel Color Classifier, Support Vector Data Description, Structure Transferring Filter, Skeleton Matching.

Abstract:

In this paper we present a new method of hand detection against a cluttered background for video stream processing. First, skin segmentation is performed by a one-class pixel color classifier trained on just a fragment of the face image, without any background training sample. A modified version of the one-class classifier is proposed: for each pixel it returns the grade (probability) of belonging to the skin category instead of the usual binary decision. To adjust the output of the one-class classifier, a structure-transferring filter built on the probabilistic gamma-normal model is applied. It uses additional information about the structure of the image and coordinates local decisions to achieve more robust segmentation results. To make the final decision on whether an image fragment shows a human hand, a method of binary image matching based on skeletonization is employed. An experimental study of the segmentation and detection quality of the proposed method shows promising results.

1 INTRODUCTION

Accurate and robust hand detection in a video stream is a necessary and crucial stage in building easy-to-use human-computer interaction systems as an alternative to touch-based devices in surgery, robot control, bio-identification, etc. Despite noticeable progress in this field, several challenges remain, for instance background clutter, illumination changes and hand shape flexibility. Tackling them is especially important for hand detection systems working in real-life environments.

Three main approaches to this problem can be distinguished: 1) background subtraction techniques (Benezeth et al., 2008; Piccardi, 2004; Shiravandi et al., 2013); 2) skin-color segmentation (Jones and Rehg, 2002; Kakumanu et al., 2007; Phung et al., 2005; Vezhnevets et al., 2003; Wimmer and Munchen, 2005) followed by hand detection, possibly with additional information about hand shape (Junqiu and Yagi, 2008); 3) the use of depth information (Suarez and Murphy, 2012).

Background subtraction techniques require the background to remain unchanged within the chosen model. This serious drawback significantly limits their applicability to video stream processing.

Segmentation methods used in the second approach are based on a parametric representation of the region of a color space (RGB, HSV, YCbCr) that corresponds to skin color. In particular, simple thresholding (Francke et al., 2007; Kakumanu et al., 2007; Vezhnevets et al., 2003), PCA-based ellipsoid descriptions (Hikal and Kountchev, 2011; Wimmer and Munchen, 2005) and Gaussian mixture models (Hassanpour et al., 2008; Jones and Rehg, 2002) can be applied. However, in a real environment the geometry of the skin-color region inside the color space can change dramatically. In addition, skin color characteristics vary from person to person, which makes adaptive color models necessary. Geometric features of the hand shape are often used to improve the quality of segmentation. The contour of the hand can be obtained by applying edge detection operators. Combining shape, texture and color features may give better performance (Junqiu and Yagi, 2008). Several methods focus on morphological features of the hand, such as fingertips (Oka et al., 2002). One common cue for fingertip detection is curvature (Argyros and Lourakis, 2006); another is template matching. Images of fingertips (Crowley et al., 1995) or fingers (Rehg and Kanade, 1995) can be used as templates.

Kopylov, A., Seredin, O., Kushnir, O., Gracheva, I. and Larin, A.

Background-Invariant Robust Hand Detection based on Probabilistic One-Class Color Segmentation and Skeleton Matching.

DOI: 10.5220/0006649805030510

In Proceedings of the 7th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2018), pages 503-510

ISBN: 978-989-758-276-9

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


However, template matching has some inherent drawbacks: 1) it is computationally expensive; 2) it cannot cope with scaling or rotation of the target hand; 3) hand shape can vary dramatically because of its 22 degrees of freedom, so choosing the right template can be difficult. A comprehensive review of the above-mentioned techniques is given in (Zhu et al., 2013).

Taking additional information about scene depth into account partly overcomes these disadvantages (Suarez and Murphy, 2012). Nevertheless, this approach assumes that no other objects are placed between the hand and the camera, and it requires additional equipment.

We propose to use a fragment of the human face in the current video frame for adaptive adjustment of the skin-color model to compensate for illumination changes, since human faces can be easily detected by the Viola-Jones method (Jones and Viola, 2003). A similar idea is described in (Francke et al., 2007), but a simple single Gaussian model is used there to describe skin color. In contrast, we apply a modified version of the one-class pixel classifier (Larin et al., 2014), which does not need any training data for background modeling. The advantage of the one-class classifier over statistical thresholding is that the region of interest in color space is described by a more complex geometrical shape than just a cuboid or an ellipsoid, by means of the Support Vector Data Description (SVDD) method (Tax and Duin, 2004). This helps to minimize the misclassification of skin-colored pixels. The classifier can be re-trained in near real time on almost every frame of the video stream.

The proposed segmentation method involves a further modification of the one-class classifier. We use a Bayesian approach based on the gamma-normal probabilistic model (Gracheva et al., 2015; Gracheva and Kopylov, 2017). Such a model lets us define and correct probabilistic relations between the rough classification results of individual elements of a frame, based on the structure of the source image.

Thus, color segmentation selects a set of candidates (binary image fragments) for the subsequent decision on whether they belong to the class of hands, that is, for hand detection.

It is worth noting that one of the most popular approaches to hand detection today is the method of Viola and Jones (Bowden, 2004; Fang et al., 2007; Jones and Viola, 2003). In general, this method is used for real-time detection of different kinds of objects in images. For example, it is implemented in the OpenCV computer vision library for human face detection. However, applying the Viola-Jones method to the hand detection problem requires a large, high-quality training set. Given the number of degrees of freedom of the human hand, generating a proper training dataset becomes a rather demanding task. In (Bowden, 2004) the dataset includes 5013 images of hands, divided into a training set of 2504 images and a test set of 2509 images.

Our solution to the hand detection problem includes a shape skeleton matching method based on pair-wise alignment of skeleton primitive chains (Kushnir and Seredin, 2015). The main advantage of the method is its invariance to scaling and rotation of the target hand. The skeleton of a segmented binary object is described by a primitive chain, which is then compared with the skeleton primitive chain of a typical hand shape. If the dissimilarity measure is less than a predefined threshold, the object is classified as a hand. For a more robust classification result, several typical hand shapes can be used.

Overall result of our hand detection method will

be the binary mask corresponded to the hand region

on the current video frame, coupled with the skeleton

description of the hand shape. It allows solving ges-

ture recognition or bio-identification tasks afterwards.

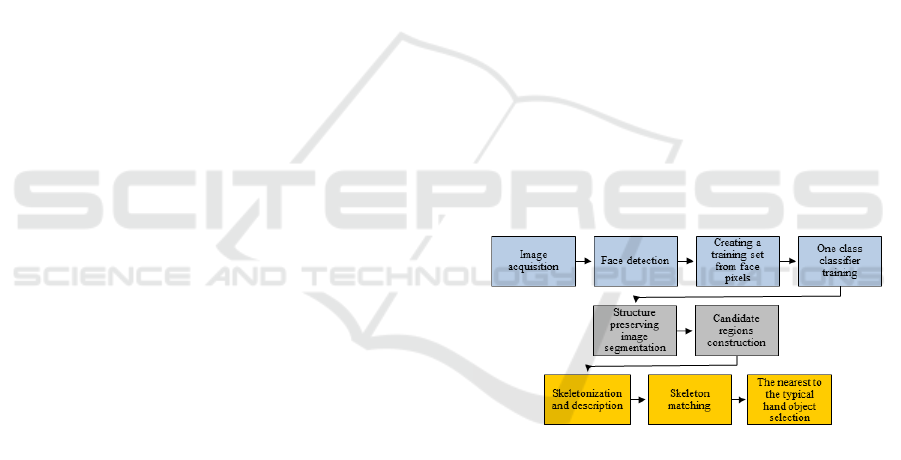

The general flow-chart of the proposed hand detection

method is shown in Figure 1.

Figure 1: General flow-chart of the proposed hand detection

method.

The paper is organized as follows. Section 2 describes the parametric representation of skin color by a one-class classifier. The probabilistic method of skin segmentation based on this parametric representation is introduced in Section 3. Section 4 describes the approach to hand detection based on shape skeleton matching. Finally, Section 5 is devoted to the experimental results and quality evaluation.


2 PARAMETRIC REPRESENTATION OF SKIN IN COLOR SPACE USING ONE-CLASS CLASSIFIER

The approach to segmentation based on skin color representation requires a model of the pixel distribution in an appropriate color space. In a real-life environment, the individual characteristics of a person's skin and illumination changes can completely alter the set of colors taken by skin pixels.

To overcome these obstacles we use a modification of the one-class pixel classifier (Larin et al., 2014), which does not use a background model. In contrast to a static threshold decision, the one-class classifier can describe a more complex surface than a cuboid, cylinder or ellipsoid (Fritsch et al., 2002; Hsieh et al., 2012; Kim et al., 2005; Sabeti and Wu, 2007). This advantage comes from the kernel trick in SVDD (Tax and Duin, 2004) and helps to minimize the misclassification of skin-colored pixels. The second benefit is the ability to re-train the classifier in real time: we can adjust the color model in every single frame.

As a training set for the one-class color classifier we use the set of pixels in a rectangle located in the central part of the face (see Figure 2(a)). Face detection is a well-developed technique (Degtyarev and Seredin, 2010), so this stage is performed in a rather simple way: we use the OpenCV implementation of the Viola-Jones method. The parameters of the training region inside the face rectangle are defined as in (Degtyarev and Seredin, 2010). Specifically, the shift from the top of the face rectangle equals 0.49 of the face rectangle height, and the height and width of the training fragment equal 0.11 and 0.6 of the height and width of the face rectangle, respectively. This area lies between the eyes and the nose tip; it is less deformable and free of beard, mustache, glasses, headdress and make-up.
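The geometry of the training fragment can be sketched as follows. This is a minimal illustration, not the authors' code: the `Rect` type and the function name are hypothetical, and the horizontal centering of the fragment inside the face rectangle is an assumption, since the text specifies only the vertical shift and the relative size.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x: int  # left edge, pixels
    y: int  # top edge, pixels
    w: int  # width, pixels
    h: int  # height, pixels


def skin_training_region(face: Rect) -> Rect:
    """Return the skin-sample rectangle inside a detected face rectangle."""
    top_shift = 0.49   # shift from the top, fraction of face height (from the text)
    rel_height = 0.11  # fragment height, fraction of face height (from the text)
    rel_width = 0.6    # fragment width, fraction of face width (from the text)

    w = round(face.w * rel_width)
    h = round(face.h * rel_height)
    # Assumption: the fragment is centred horizontally in the face rectangle.
    x = face.x + (face.w - w) // 2
    y = face.y + round(face.h * top_shift)
    return Rect(x, y, w, h)


# Example: a 200 x 200 face rectangle whose top-left corner is at (100, 50).
region = skin_training_region(Rect(x=100, y=50, w=200, h=200))
```

The pixels inside `region` would then form the training cloud for the one-class classifier.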

This idea allows on-line estimation of skin color changes at every new frame, but requires a face to be present in the scene (and reliable face detection). Otherwise, we can use a previously trained model.

The training set for the one-class classifier is a cloud of points in color space. The flexibility of the describing surface in color space is defined by the parameter of the Gaussian kernel in SVDD (see Figure 2(b)). After training, a fast decision about the similarity between each pixel of an image and the training fragment can be made by checking whether the pixel falls inside the hypersphere in the Hilbert space.

Figure 2: Parametric representation of skin: (a) training region; (b) training set of pixels (dark points) shown in RGB color space and the describing decision surface (light gray) built by the one-class classifier.

3 PROBABILISTIC IMAGE SEGMENTATION BASED ON PARAMETRIC REPRESENTATION OF SKIN

The parametric representation of skin by itself cannot provide robust and accurate segmentation (see Figure 3), since it is based only on pixel properties in color space and takes into account neither spatial relations between neighboring pixels nor the structure of homogeneous regions in the image.

Figure 3: Results of color segmentation via one-class pixel color classifier. The segmentation inside the face bounding box was omitted.

Well-known segmentation methods (e.g. those described in (Bali and Singh, 2015)) unfortunately need too much processing time for online hand detection. Instead, we propose to use a new class of filters, called structure-transferring filters, which has recently appeared in the literature (He et al., 2013; Zhang et al., 2014). The main idea of structure-transferring filters is to extract the structure from a so-called guide image and make the filtering result of the source image consistent with this structure. The Joint Bilateral Filter (Petschnigg et al., 2004) and the Guided Filter (He et al., 2013) currently occupy a leading position among filters of this class. Their main disadvantage is the presence of artifacts, which appear visually as halos around object edges. Such artifacts are characteristic of all filters with a finite impulse response and are explained by the Gibbs effect. Recent attempts to overcome this drawback (Zhang et al., 2014) using weighted global average parameters of the corresponding model increase computational complexity beyond real-time limits.

In this paper we use an alternative Bayesian approach, described in (Gracheva et al., 2015; Gracheva and Kopylov, 2017) and based on a special Markov random field model called the gamma-normal model (Krasotkina et al., 2010). It makes it possible to take into account the structure extracted from the “guide” image by setting appropriate probabilistic relationships between elements of the sought-for filtering result.

Let $Y = (y_t, t \in T)$ be an initial data array defined on a subset of the two-dimensional discrete space $T = \{t = (t_1, t_2) : t_1 = 1, \ldots, N_1,\ t_2 = 1, \ldots, N_2\}$, and let $X = (x_t, t \in T)$, defined on the same argument set, play the role of the desired processing result. We consider $Y$ and $X$ as the observed and hidden components of a two-component random field $(X, Y)$. The probabilistic properties of the two-component random field $(X, Y)$ are completely determined by the joint conditional probability density $\Phi(Y \mid X, \delta)$ of the original data $Y = (y_t, t \in T)$ with respect to the secondary data $X = (x_t, t \in T)$, and by the a priori joint distribution $\Psi(X \mid \Lambda, \delta)$ of the hidden component $X = (x_t, t \in T)$.

Let the joint conditional probability density $\Phi(Y \mid X, \delta)$ have the form of a Gaussian distribution:

$$\Phi(Y \mid X, \delta) = \frac{1}{\delta^{(N_1 N_2)/2} (2\pi)^{(N_1 N_2)/2}} \exp\left(-\frac{1}{2\delta} \sum_{t \in T} (y_t - x_t)^2\right), \tag{1}$$

where $\delta$ is the variance of the observation noise, which is assumed to be unknown.

The a priori joint distribution $\Psi(X \mid \Lambda, \delta)$ of the hidden component $X = (x_t, t \in T)$ is also assumed to be Gaussian, but the variance $r_t$ of the hidden variables is assumed to differ at different points $t \in T$ of the hidden field $X$. It is convenient to make $r_t$, $t \in T$, proportional to the variance of the observation noise: $r_t = \lambda_t \delta$. The proportionality coefficients $\Lambda = (\lambda_t, t \in T)$ serve as a means to flexibly define the structure of probabilistic relationships between elements of the hidden field $X = (x_t, t \in T)$.

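Under this assumption, we come to the improper a priori density:

$$\Psi(X \mid \Lambda, \delta) \propto \frac{1}{\left(\prod_{t \in T} \delta\lambda_t\right)^{1/2} (2\pi)^{(N_1 N_2)/2}} \exp\left(-\frac{1}{2} \sum_{t', t'' \in V} \frac{1}{\delta\lambda_{t'}} (x_{t'} - x_{t''})^2\right), \tag{2}$$

where $V$ is the neighborhood graph of the image elements, which has the form of a lattice.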

Finally, we assume the inverse coefficients $1/\lambda_t$ to be a priori independent and identically gamma-distributed on the positive half-axis $\lambda_t \ge 0$:

$$G(\Lambda \mid \delta, \eta, \mu) = \exp\left(-\frac{1}{2\delta\mu} \sum_{t \in T} \left(\eta \frac{1}{\lambda_t} + \frac{1}{\eta} \ln \lambda_t\right)\right), \tag{3}$$

where $\eta$ and $\mu$ determine, respectively, the basic average value and the variability of the factors.

If $\mu \to 0$, then $1/\lambda_t$ is almost completely concentrated around the mathematical expectation $1/\eta$, and as $\mu \to \infty$, $1/\lambda_t$ tends to an almost uniform distribution.

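The joint a posteriori distribution of the hidden fields $X$ and $\Lambda$ is completely defined by (1), (2) and (3):

$$P(X, \Lambda \mid Y, \delta, \eta, \mu) = \frac{\Psi(X \mid \Lambda, \delta)\, G(\Lambda \mid \delta, \eta, \mu)\, \Phi(Y \mid X, \delta)}{\iint \Psi(X' \mid \Lambda', \delta)\, G(\Lambda' \mid \delta, \eta, \mu)\, \Phi(Y \mid X', \delta)\, dX'\, d\Lambda'}. \tag{4}$$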

It is easy to see that the maximum a posteriori probability (MAP) estimate leads to the minimization of the following objective function:

$$J(X, \Lambda \mid Y, \eta, \mu) = \sum_{t \in T} (y_t - x_t)^2 + \sum_{t', t'' \in V} \left\{ \frac{1}{\lambda_{t'}} \left[ (x_{t'} - x_{t''})^2 + \eta/\mu \right] + (1 + 1/\mu) \ln \lambda_{t'} \right\}. \tag{5}$$

If the field of coefficients $\Lambda$ is fixed, the optimal MAP estimate of $X$ can be obtained by solving the following simple quadratic optimization problem:

$$\hat{X} = \arg\min_{X} \left[ \sum_{t \in T} (y_t - x_t)^2 + \sum_{t', t'' \in V} \frac{1}{\lambda_{t'}} (x_{t'} - x_{t''})^2 \right],$$

using the extremely fast procedure based on tree-serial dynamic programming (Mottl and Blinov, 1998).
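To make the quadratic problem concrete, the sketch below solves its one-dimensional analogue, where the neighborhood graph is a simple chain instead of a lattice. It is not the tree-serial dynamic programming procedure of (Mottl and Blinov, 1998); it is the standard tridiagonal (Thomas) elimination, which plays the same role on a chain and also runs in linear time. The function name and the per-edge indexing of `lam` are assumptions made for illustration.

```python
def smooth_chain(y, lam):
    """Minimise sum (y[t]-x[t])^2 + sum (1/lam[t]) * (x[t]-x[t+1])^2 on a chain.

    y   : list of n observations
    lam : list of n-1 positive coefficients, lam[t] belongs to edge (t, t+1)
    """
    n = len(y)
    if n == 1:
        return [float(y[0])]
    w = [1.0 / l for l in lam]  # edge weights 1/lambda
    # Normal equations are tridiagonal:
    #   -w[t-1]*x[t-1] + diag[t]*x[t] - w[t]*x[t+1] = y[t]
    diag = [1.0 + (w[t - 1] if t > 0 else 0.0) + (w[t] if t < n - 1 else 0.0)
            for t in range(n)]
    rhs = [float(v) for v in y]
    # Forward elimination (Thomas algorithm).
    for t in range(1, n):
        m = -w[t - 1] / diag[t - 1]
        diag[t] -= m * (-w[t - 1])
        rhs[t] -= m * rhs[t - 1]
    # Back substitution.
    x = [0.0] * n
    x[-1] = rhs[-1] / diag[-1]
    for t in range(n - 2, -1, -1):
        x[t] = (rhs[t] + w[t] * x[t + 1]) / diag[t]
    return x


# Small lambda inside flat regions (strong smoothing), large lambda across the step.
noisy = [0.1, -0.1, 0.0, 1.1, 0.9, 1.0]
smoothed = smooth_chain(noisy, [1e-6, 1e-6, 1e6, 1e-6, 1e-6])
```

In this example the noise inside each flat region is averaged out while the step between the third and fourth samples is preserved, which is the behavior the coefficients $\lambda$ are meant to control.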

If $X = (x_t, t \in T)$ is fixed, $X = X^g$, criterion (5) gives the following expression for the optimal $\Lambda$ with fixed structural parameters $\eta$ and $\mu$:

$$\hat{\lambda}_{t'}(X^g, \eta, \mu) = \eta\, \frac{(1/\eta)(x^g_{t'} - x^g_{t''})^2 + 1/\mu}{1 + 1/\mu}, \quad t', t'' \in V.$$
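The closed form can be checked with a short derivation. It is a sketch under one assumption: in criterion (5) the constant $\eta/\mu$ is read as grouped with the squared difference under the factor $1/\lambda_{t'}$, so that each edge contributes the term $f(\lambda)$ below.

```latex
\begin{aligned}
f(\lambda) &= \frac{1}{\lambda}\left[\Delta^2 + \frac{\eta}{\mu}\right]
            + \left(1 + \frac{1}{\mu}\right)\ln\lambda,
\qquad \Delta = x^g_{t'} - x^g_{t''}, \\
f'(\lambda) &= -\frac{1}{\lambda^2}\left[\Delta^2 + \frac{\eta}{\mu}\right]
             + \frac{1}{\lambda}\left(1 + \frac{1}{\mu}\right) = 0
\;\Longrightarrow\;
\hat{\lambda} = \frac{\Delta^2 + \eta/\mu}{1 + 1/\mu}
              = \eta\,\frac{(1/\eta)\Delta^2 + 1/\mu}{1 + 1/\mu}, \\
f''(\hat{\lambda}) &= \frac{1 + 1/\mu}{\hat{\lambda}^2} > 0 .
\end{aligned}
```

The positive second derivative confirms that the stationary point is a minimum. For a flat guide ($\Delta = 0$) the coefficient reduces to $\eta/(\mu + 1)$, and it grows with the squared difference across an edge.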

The additional guide image can serve here as $X^g$, so that its structure is captured by $\hat{\Lambda} = (\hat{\lambda}_t, t \in T)$. As mentioned above, the field $\Lambda = (\lambda_t, t \in T)$ serves as a measure of the local variability of the hidden field $X = (x_t, t \in T)$. As can be seen from criterion (5), $\hat{\lambda}_{t'}$ determines, through the weight $1/\hat{\lambda}_{t'}$, the penalty for a difference between the values of the two corresponding neighboring variables $x_{t'}$ and $x_{t''}$, $(t', t'') \in V$. Thus $\Lambda = (\lambda_t, t \in T)$, estimated with the help of an additional guide image, can be used to transfer the structure of local relations between elements of the guide image to the processing result.
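As an illustration of how the guide image sets the coefficients, the sketch below evaluates the closed-form expression for $\hat{\lambda}$ on a one-dimensional guide signal. The function name, the 1-D setting and the parameter values are assumptions chosen for the example; the paper works on a two-dimensional lattice.

```python
def lambda_hat(guide, eta, mu):
    """Per-edge coefficients for a 1-D guide signal, using the closed form from the text."""
    coeffs = []
    for a, b in zip(guide, guide[1:]):
        diff_sq = (a - b) ** 2
        coeffs.append(eta * (diff_sq / eta + 1.0 / mu) / (1.0 + 1.0 / mu))
    return coeffs


# A guide with one step edge between the third and fourth samples.
guide = [0.0, 0.0, 0.0, 2.0, 2.0]
lam = lambda_hat(guide, eta=1.0, mu=1.0)
```

Edges inside flat regions of the guide get a small coefficient (strong smoothing of the result), while the edge across the step gets a large one, so the discontinuity is carried over to the filtered output.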


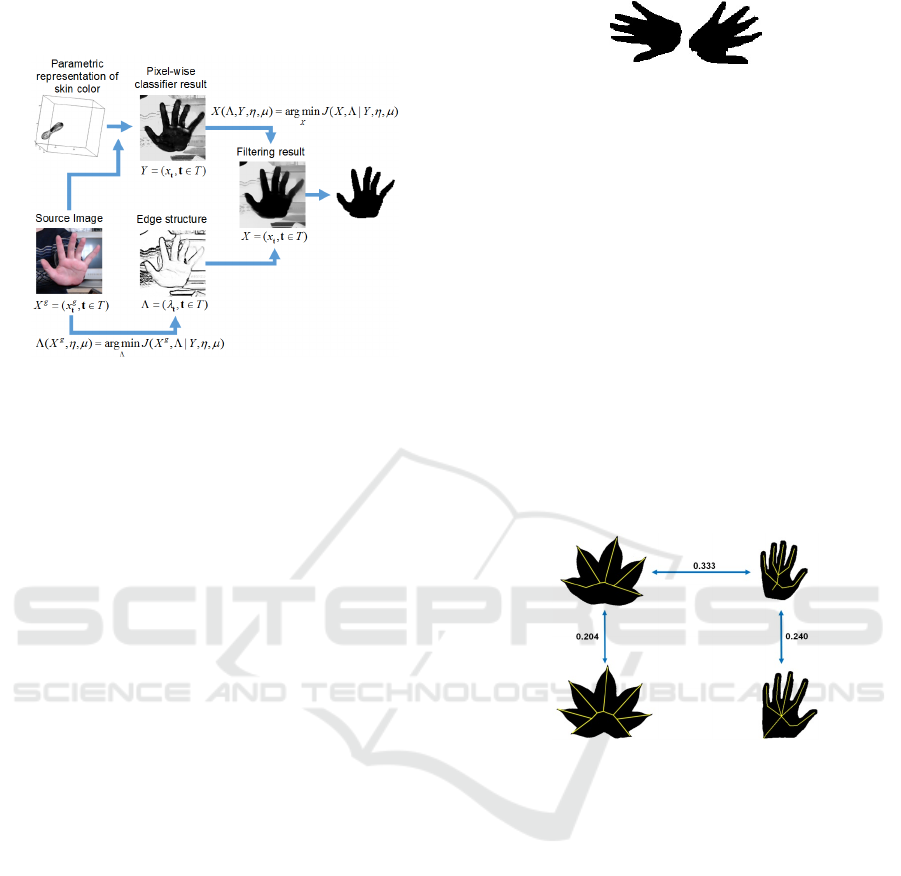

The general scheme of segmentation is shown in Figure 4.

Figure 4: General scheme of probabilistic color segmentation.

The proposed procedure has approximately the same computation time as the Fast Guided Filter (He et al., 2013) and has linear computational complexity with respect to the number of pixels in the source image.

For the image segmentation task, the initial image plays the role of the “guide” image $X^g$, and the rough output of the one-class classifier plays the role of the initial data $Y$. In addition, the concept of SVDD makes it possible to obtain a fuzzy classification instead of a binary one (see Figure 3). We use the distance from the center of the hypersphere in the Hilbert space to the object as the grade of membership in the class of interest. Fuzzy classification lets us obtain a more accurate decision with the help of the structure-transferring filter described above.
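The feature-space distance used as a membership grade can be written with kernel evaluations only. The sketch below uses the standard SVDD expression for the squared distance to the center $\sum_i \alpha_i \phi(x_i)$ with a Gaussian kernel. The support vectors, the uniform weights `alphas` and the kernel width `sigma` are illustrative assumptions (in practice they come from training), and the mapping of the distance to a grade in [0, 1] is hypothetical, since the text does not specify one.

```python
import math


def gaussian_kernel(a, b, sigma):
    """Gaussian (RBF) kernel between two color vectors."""
    sq = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-sq / (2.0 * sigma ** 2))


def svdd_sq_distance(pixel, support_vectors, alphas, sigma):
    """Squared feature-space distance from a pixel to the SVDD centre."""
    cross = sum(a * gaussian_kernel(pixel, sv, sigma)
                for a, sv in zip(alphas, support_vectors))
    center_norm = sum(ai * aj * gaussian_kernel(si, sj, sigma)
                      for ai, si in zip(alphas, support_vectors)
                      for aj, sj in zip(alphas, support_vectors))
    # k(z, z) = 1 for the Gaussian kernel.
    return 1.0 - 2.0 * cross + center_norm


def skin_grade(pixel, support_vectors, alphas, sigma):
    """Hypothetical grade in [0, 1]: larger means closer to the centre."""
    center_norm = sum(ai * aj * gaussian_kernel(si, sj, sigma)
                      for ai, si in zip(alphas, support_vectors)
                      for aj, sj in zip(alphas, support_vectors))
    d2 = svdd_sq_distance(pixel, support_vectors, alphas, sigma)
    return max(0.0, min(1.0, 1.0 - d2 / (1.0 + center_norm)))


# Illustrative values only: three skin-like RGB samples with equal weights.
svs = [(200, 150, 130), (210, 160, 140), (190, 140, 120)]
alphas = [1.0 / 3.0] * 3
near = skin_grade((200, 150, 130), svs, alphas, sigma=20.0)
far = skin_grade((20, 200, 30), svs, alphas, sigma=20.0)
```

A per-pixel map of such grades would serve as the initial data $Y$ for the structure-transferring filter.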

4 HAND DETECTION BY THE SKELETON MATCHING

Regions of the binary image that can potentially be regarded as hands according to their empirical features (geometrical characteristics, a size proportional to the face size, and the fill degree, computed as the ratio of the number of black pixels to the size of the region) are passed to the skeleton matching procedure described in (Kushnir and Seredin, 2015). This procedure compares the skeleton of a candidate binary region with the skeleton of a typical hand shape. Since the procedure is not invariant under reflection, we need to choose two typical hand shapes, one for the left and one for the right hand (see Figure 5).

Figure 5: Shapes of the most typical left and right hands.

The skeletons of the typical left and right hands need to be calculated only once, before detection starts. Then the skeleton of a candidate region is calculated. Each skeleton is transformed into a form suitable for matching (the so-called “base skeleton”) by pruning and approximation procedures (see Figure 6). The matching procedure performs the following three steps:

1. each skeleton is coded by a sequence of primitives (primitive chain); each primitive contains information about topological characteristics of the corresponding skeleton edge (length, inner angle and radial function),

2. two primitive chains (belonging to one of the typical hands and to the candidate region, respectively) are aligned by a dynamic programming procedure,

3. the dissimilarity measure of the primitive chains and, consequently, of the corresponding skeletons is calculated based on their optimal pair-wise alignment.
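Steps 2 and 3 can be sketched as a global alignment by dynamic programming. This is a generic edit-distance-style alignment, not the procedure of (Kushnir and Seredin, 2015): the primitive is reduced to a hypothetical `(length, angle)` pair, the substitution and gap costs are invented for illustration, and details such as the radial function or the choice of the starting primitive are left out.

```python
def primitive_cost(p, q):
    """Hypothetical substitution cost between two primitives (length, angle)."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def gap_cost(p):
    """Hypothetical cost of leaving a primitive unmatched."""
    return abs(p[0]) + abs(p[1])


def chain_dissimilarity(chain_a, chain_b):
    """Cost of the optimal global alignment of two primitive chains."""
    n, m = len(chain_a), len(chain_b)
    # d[i][j] = minimal cost of aligning the first i primitives of chain_a
    # with the first j primitives of chain_b.
    d = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        d[i][0] = d[i - 1][0] + gap_cost(chain_a[i - 1])
    for j in range(1, m + 1):
        d[0][j] = d[0][j - 1] + gap_cost(chain_b[j - 1])
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d[i][j] = min(
                d[i - 1][j - 1] + primitive_cost(chain_a[i - 1], chain_b[j - 1]),
                d[i - 1][j] + gap_cost(chain_a[i - 1]),
                d[i][j - 1] + gap_cost(chain_b[j - 1]),
            )
    return d[n][m]


# Illustrative chains: lengths assumed normalised, angles in radians.
typical = [(1.0, 0.5), (0.8, 1.2), (1.1, 0.7)]
candidate = [(1.0, 0.5), (1.1, 0.7)]
score = chain_dissimilarity(typical, candidate)
```

With costs of this kind the result is non-negative and symmetric, and it is zero when a chain is compared with itself, which matches the role of a dissimilarity measure described above.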

Figure 6: Examples of two classes of shapes, their base skeletons and pair-wise dissimilarity measures.

Thus, the matching procedure yields a distance function of two arguments that returns a non-negative dissimilarity measure between shapes. It is expected that the classification decision (left hand, right hand or non-hand) can be made by a simple threshold rule applied to the dissimilarity between the candidate region and the image of a typical hand. Therefore, it is important to choose typical images relevant to the application. In our preliminary studies, the procedure demonstrated a good processing time, about 3-5 ms per matching on an ordinary PC.
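A threshold rule of this kind might look as follows. The function name, the tie-breaking between left and right hands and the threshold value are assumptions for illustration; the text only states that a candidate is accepted as a hand when its dissimilarity to a typical hand is below a predefined threshold.

```python
def classify_candidate(dist_left, dist_right, threshold):
    """Label a candidate region from its distances to the two typical hands."""
    if min(dist_left, dist_right) >= threshold:
        return "non-hand"
    # Assumption: among accepted candidates, the closer typical hand wins.
    return "left hand" if dist_left <= dist_right else "right hand"


label = classify_candidate(dist_left=0.3, dist_right=0.9, threshold=0.5)
```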

5 EXPERIMENTAL STUDY

For the experimental study of the proposed method we collected a database of images taken under varying illumination conditions and in different interiors. There is only one person with clearly visible hand(s) in each image. The hand and face bounding boxes do not intersect (see examples in Figure 7). The total number of images in the database is 549. For each image, the ground-truth hand location(s) were determined by an expert and marked with four points, the corners of the corresponding bounding box. The database is available at http://lda.tsu.tula.ru/papers/TulaSU HandsDetDB.zip.

Figure 7: Examples of images used in experimental study.

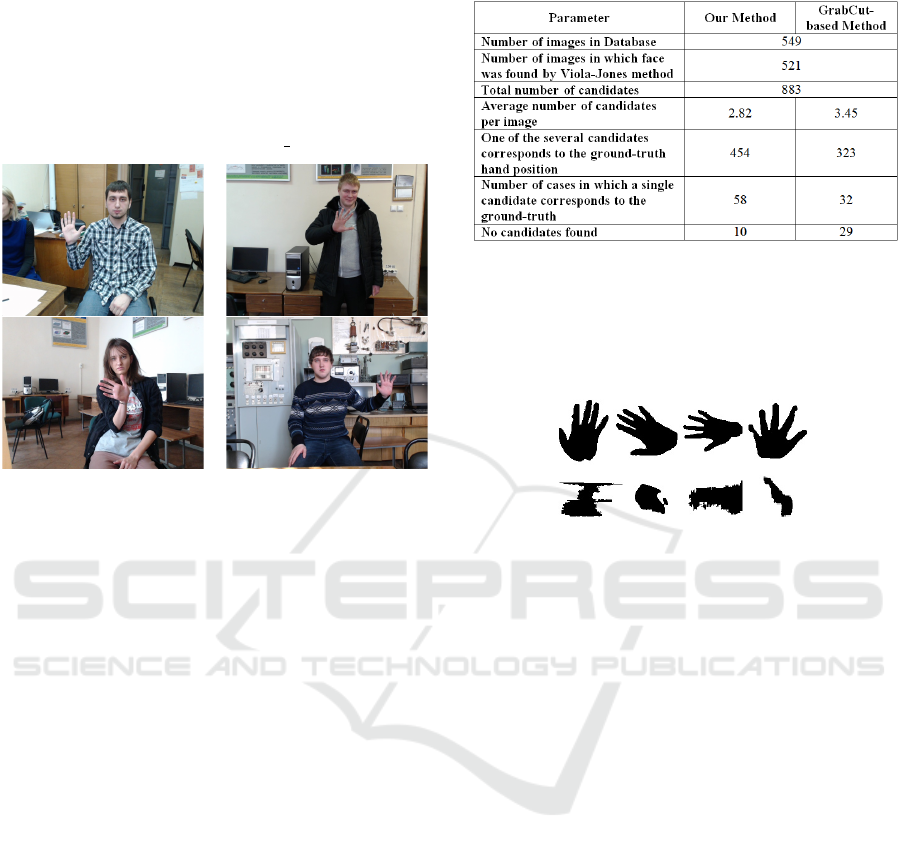

At the first stage of the experimental study we evaluated the segmentation quality of the algorithms proposed in Sections 2 and 3. The main goal was to find out whether the segmentation method is capable of detecting candidates for further comparison with the most typical hand shapes. As shown in Table 1, for most of the images in which a face was detected (we used the parameter NeighborFaces=9 in the OpenCV implementation of the Viola-Jones algorithm), our segmentation method found an image fragment corresponding to the ground-truth hand position; in 58 cases only one candidate corresponds to the hand position. A candidate fragment was considered to correspond to the ground-truth fragment if the intersection of their bounding boxes was greater than 50%.
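The correspondence test can be sketched as a bounding-box overlap check. The text does not say what the 50% is measured against, so the sketch assumes intersection over union; the box format `(x_min, y_min, x_max, y_max)` and the function names are also assumptions.

```python
def box_area(box):
    """Area of a box given as (x_min, y_min, x_max, y_max); zero if degenerate."""
    return max(0, box[2] - box[0]) * max(0, box[3] - box[1])


def overlap_ratio(candidate, truth):
    """Intersection over union of two axis-aligned boxes (assumed criterion)."""
    inter = (max(candidate[0], truth[0]), max(candidate[1], truth[1]),
             min(candidate[2], truth[2]), min(candidate[3], truth[3]))
    inter_area = box_area(inter)
    union_area = box_area(candidate) + box_area(truth) - inter_area
    return inter_area / union_area if union_area > 0 else 0.0


def matches_ground_truth(candidate, truth, min_overlap=0.5):
    """True if the candidate overlaps the ground-truth box by more than min_overlap."""
    return overlap_ratio(candidate, truth) > min_overlap


hit = matches_ground_truth((0, 0, 10, 10), (2, 0, 12, 10))
miss = matches_ground_truth((0, 0, 10, 10), (5, 0, 15, 10))
```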

We compared our segmentation method with the well-known GrabCut method (Boykov and Kolmogorov, 2004), which is based on graph cuts and MRF optimization. To make the comparison fairer, we replaced the Gaussian mixture models used in that paper for background and foreground representation with our one-class classifier.

The average computation time per image for the segmentation algorithms running in the MATLAB environment was 1.3 s for the graph-cut-based optimization method and 0.3 s for our method based on the gamma-normal probabilistic model.

Examples of candidate fragments are shown in Figure 8. All candidate objects were divided by an expert into three categories: “left hands” (173 instances), “right hands” (117) and “non-hands” (593).

Table 1: Segmentation quality.

In addition, the two most typical hands (left and right) were chosen (see Figure 5). We estimated the quality of the skeleton matching algorithm (Section 4) by analyzing the distances between the candidate objects obtained by the segmentation procedure and these two most typical hands.

Figure 8: Examples of candidate objects that are similar to a human hand (top) and that are not (bottom).

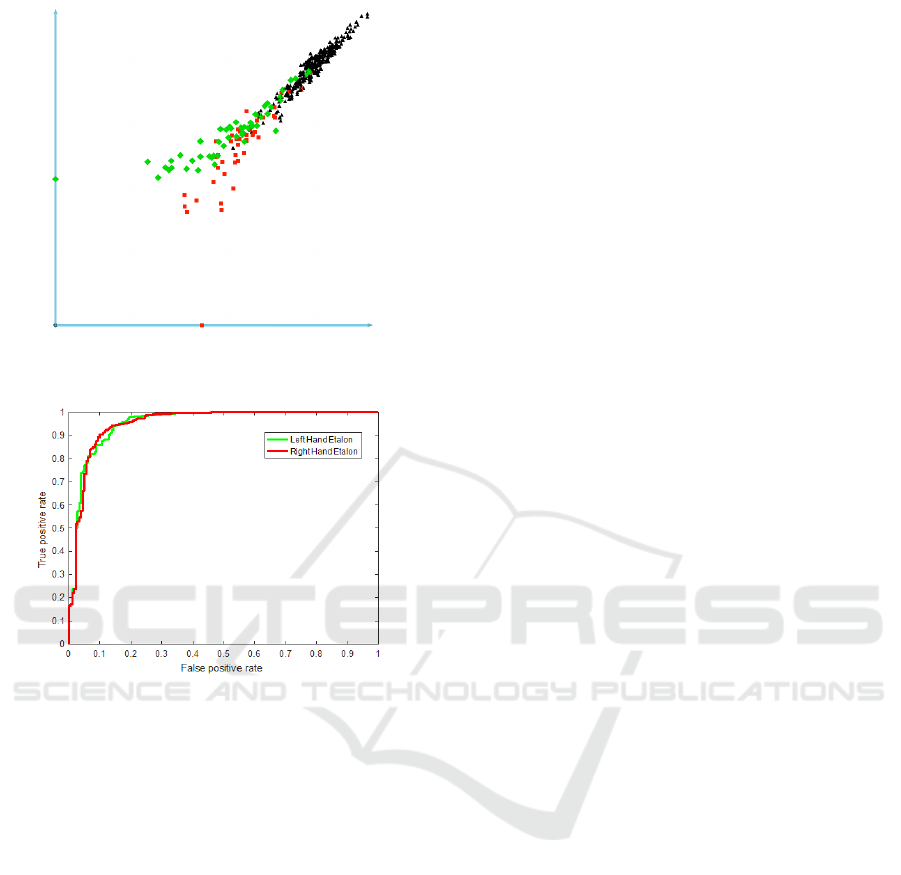

Figure 9 shows a projection of the whole distance matrix (883 x 883) onto a two-dimensional space. The first feature is the distance to the most typical left hand (horizontal axis) and the second is the distance to the most typical right hand (vertical axis). The “left hands” are shown as green rhombuses, the “right hands” as red squares and the “non-hands” as black triangles. The chart shows that the compactness hypothesis holds for the three classes. This allows us to build a hand detection system using a simple threshold rule based on the distance to the most typical objects.

The quality of separation of hands from non-hands is shown in the form of ROC curves in Figure 10. These curves plot the true positive rate against the false positive rate as the threshold on the distance to the chosen typical object increases. The AUC (area under the curve) is 0.9535 for left hands (green curve) and 0.9531 for right hands (red curve).
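For a threshold rule on distances, the AUC can be computed without drawing the curve, as the fraction of (hand, non-hand) pairs in which the hand lies closer to the typical shape, with ties counted as one half. The sketch below uses this standard rank-based equivalence; the function name and the distance values are made up for illustration and are not the experimental data.

```python
def roc_auc(hand_distances, non_hand_distances):
    """AUC of the rule 'distance < threshold => hand', via pairwise comparison."""
    wins = 0.0
    for h in hand_distances:
        for n in non_hand_distances:
            if h < n:
                wins += 1.0   # hand correctly ranked closer than non-hand
            elif h == n:
                wins += 0.5   # ties count as half
    return wins / (len(hand_distances) * len(non_hand_distances))


# Illustrative distances to a typical hand shape.
auc = roc_auc([0.1, 0.2, 0.4], [0.3, 0.5, 0.6, 0.7])
```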

Figure 9: The distances to the most typical left hand (horizontal axis) and right hand (vertical axis).

Figure 10: ROC curves built on the distance function from the most typical left (green) and right (red) hand.

6 CONCLUSION

Hand detection is a rather complex problem and requires a combination of different methods and algorithms for a reliable solution. The proposed method consists of three major stages: face detection and one-class classifier training, segmentation of skin-colored regions, and comparison of hand-candidate shapes with the most typical hand objects.

The main advantage of the method is its invariance to illumination conditions, individual skin color, hand rotation and scale. Additionally, no image background model is used, which makes our method robust in complex or varying scenes. Our method allows fast recalculation of the skin color model using information from just a small fragment of the face. The new method of probabilistic segmentation is based on the one-class color classifier and the structure-transferring filter built on the probabilistic gamma-normal model. It reduces the number of candidates for the shape matching procedure. The rotation- and scale-invariant matching algorithm based on the alignment of skeleton primitive chains runs very fast and gives reliable classification results.

We have paid special attention to the low computation time of the constituent algorithms. Therefore, our method could be implemented in real time and applied to hand detection in video streams. However, temporal inter-frame relations are not taken into account so far; this could be a subject for future work. The experimental study demonstrates the robustness and precision of the proposed hand detection method.

ACKNOWLEDGEMENTS

This work is supported by the Russian Fund for Basic Research, grants 16-57-52042, 16-07-01039. The results of the research project are published with the financial support of Tula State University within the framework of the scientific project No 2017-20PUBL.

REFERENCES

Argyros, A. A. and Lourakis, M. I. (2006). Vision-based interpretation of hand gestures for remote control of a computer mouse. Computer Vision in Human-Computer Interaction, 3979 LNCS:40–51.

Bali, A. and Singh, S. N. (2015). A review on the strategies and techniques of image segmentation. 2015 Fifth International Conference on Advanced Computing and Communication Technologies, pages 113–120.

Benezeth, Y., Jodoin, P., Emile, B., Laurent, H., and Rosenberger, C. (2008). Review and evaluation of commonly-implemented background subtraction algorithms. 19th ICPR, pages 1–4.

Bowden, R. (2004). A boosted classifier tree for hand shape detection. Sixth IEEE International Conference on Automatic Face and Gesture Recognition, 2004. Proceedings, pages 889–894.

Boykov, Y. and Kolmogorov, V. (2004). An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision. IEEE Transactions on Pattern Analysis and Machine Intelligence, 26(9):1124–1137.

Crowley, J., Berard, F., and Coutaz, J. (1995). Finger tracking as an input device for augmented reality. International Workshop on ..., (June):1–8.

Degtyarev, N. and Seredin, O. (2010). Comparative testing of face detection algorithms. International Conference on Image and Signal Processing, 6134 LNCS:200–209.

Fang, Y., Wang, K., Cheng, J., and Lu, H. (2007). A real-time hand gesture recognition method. Multimedia and Expo, 2007 IEEE International Conference on, pages 995–998.

Francke, H., Ruiz-del Solar, J., and Verschae, R. (2007). Real-time hand gesture detection and recognition using boosted classifiers and active learning. Advances in Image and Video Technology, pages 533–547.

Fritsch, J., Lang, S., Kleinehagenbrock, M., Fink, G. A., and Sagerer, G. (2002). Improving adaptive skin color segmentation by incorporating results from face detection. IEEE International Workshop on Robot and Human Interactive Communication, pages 337–343.

Gracheva, I. and Kopylov, A. (2017). Image processing algorithms with structure transferring properties on the basis of gamma-normal model. Communications in Computer and Information Science, 661:257–268.

Gracheva, I., Kopylov, A., and Krasotkina, O. (2015). Fast global image denoising algorithm on the basis of non-stationary gamma-normal statistical model. Communications in Computer and Information Science, 542:71–82.

Hassanpour, R., Shahbahrami, A., and Wong, S. (2008). Adaptive Gaussian mixture model for skin color segmentation. World Academy of Science, Engineering and Technology, 31(July):1–6.

He, K., Sun, J., and Tang, X. (2013). Guided image filtering. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(6):1397–1409.

Hikal, N. A. and Kountchev, R. (2011). Skin color segmentation using adaptive PCA and modified elliptic boundary model. Advanced Computer Science and Information System (ICACSIS), 2011 International Conference, pages 407–412.

Hsieh, C. C., Liou, D. H., and Lai, W. R. (2012). Enhanced face-based adaptive skin color model. Journal of Applied Science and Engineering, 15(2):167–176.

Jones, M. and Viola, P. (2003). Fast multi-view face detection. Mitsubishi Electric Research Lab TR2000396, (July).

Jones, M. J. and Rehg, J. M. (2002). Statistical color models with application to skin detection. International Journal of Computer Vision, 46(1):81–96.

Junqiu, W. and Yagi, Y. (2008). Integrating color and shape-texture features for adaptive real-time object tracking. IEEE Transactions on Image Processing, 2(17):235–240.

Kakumanu, P., Makrogiannis, S., and Bourbakis, N. (2007). A survey of skin-color modeling and detection methods. Pattern Recognition, 40(3):1106–1122.

Kim, K., Chalidabhongse, T. H., Harwood, D., and Davis, L. (2005). Real-time foreground-background segmentation using codebook model. Real-Time Imaging, 11(3):172–185.

Krasotkina, O., Kopylov, A., Mottl, V., and Markov, M. (2010). Bayesian estimation of time-varying regression with changing time-volatility for detection of hidden events in non-stationary signals. 7th IASTED International Conference on Signal Processing, Pattern Recognition and Applications, pages 8–15.

Kushnir, O. and Seredin, O. (2015). Shape matching based on skeletonization and alignment of primitive chains. Communications in Computer and Information Science, 542:123–136.

Larin, A., Seredin, O., Kopylov, A., Kuo, S. Y., Huang, S. C., and Chen, B. H. (2014). Parametric representation of objects in color space using one-class classifiers. International Workshop on Machine Learning and Data Mining in Pattern Recognition. Springer, Cham., pages 300–314.

Mottl, V. and Blinov, A. (1998). Optimization techniques on pixel neighborhood graphs for image processing. Graph-Based Representations in Pattern Recognition, 12(Computing. Supplement, 0344-8029):135–145.

Oka, K., Sato, Y., and Koike, H. (2002). Real-time fingertip tracking and gesture recognition. IEEE Computer Graphics and Applications, 22(6):64–71.

Petschnigg, G., Szeliski, R., Agrawala, M., Cohen, M., Hoppe, H., and Toyama, K. (2004). Digital photography with flash and no-flash image pairs. ACM Transactions on Graphics, 23(3):664.

Phung, S., Bouzerdoum, A., and Chai, D. (2005). Skin segmentation using color pixel classification: analysis and comparison. IEEE Transactions on Pattern Analysis and Machine Intelligence, 27(1):148–154.

Piccardi, M. (2004). Background subtraction techniques: a review. 2004 IEEE International Conference on Systems, Man and Cybernetics (IEEE Cat. No.04CH37583), 4:3099–3104.

Rehg, J. and Kanade, T. (1995). Model-based tracking of self-occluding articulated objects. Proceedings of IEEE International Conference on Computer Vision, pages 612–617.

Sabeti, L. and Wu, Q. M. J. (2007). High-speed skin color segmentation for real-time human tracking. 2007 IEEE International Conference on Systems, Man and Cybernetics, pages 2378–2382.

Shiravandi, S., Rahmati, M., and Mahmoudi, F. (2013). Hand gestures recognition using dynamic Bayesian networks. 2013 3rd Joint Conference of AI and Robotics and 5th RoboCup Iran Open International Symposium, pages 1–6.

Suarez, J. and Murphy, R. R. (2012). Hand gesture recognition with depth images: A review. Ro-Man, 2012 IEEE, pages 411–417.

Tax, D. M. J. and Duin, R. P. W. (2004). Support vector data description. Machine Learning, 54(1):45–66.

Vezhnevets, V., Sazonov, V., and Andreeva, A. (2003). A survey on pixel-based skin color detection techniques. Proceedings of GraphiCon 2003, 85(0896-6273 SB - IM):85–92.

Wimmer, F. and Munchen (2005). Adaptive skin color classificator. Proc. of the first ICGST International Conference on Graphics Vision and Image Processing GVIP-05, (December):324–327.

Zhang, J., Cao, Y., and Wang, Z. (2014). A new image filtering method: Nonlocal image guided averaging. 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), (2012):2479–2483.

Zhu, Y., Yang, Z., and Yuan, B. (2013). Vision based hand gesture recognition. Service Sciences (ICSS), 2013 International Conference on, 3(1):260–265.
