Cylindric Clock Model to Represent Spatio-temporal Trajectories

Joanna Isabelle Olszewska

School of Engineering and Computing, University of West Scotland, U.K.

Keywords:

Spatial and Temporal Reasoning, Reasoning about Motion and Change, Ontologies of Time and Space-time, Temporal Information Extraction, Spatio-temporal Knowledge Representation Systems.

Abstract:

To automatically understand agents’ environment and its changes, the study of spatio-temporal relations between the objects evolving in the observed scene is of prime importance. In particular, the temporal aspect is crucial to analyze the scene’s objects of interest and their trajectories, e.g. to follow their movements, understand their behaviours, etc. In this paper, we propose to conceptualize qualitative spatio-temporal relations in terms of the clock model and extend it to a new spatio-temporal model that we call the cylindric clock model, in order to effectively perform automated reasoning about the scene and its objects of interest and to improve the modeling of dynamic scenes compared to state-of-the-art approaches, as demonstrated in our experiments. Hence, the new formalisation of the qualitative spatio-temporal relations provides an efficient method for both knowledge representation and information processing of spatio-temporal motion data.

1 INTRODUCTION

The study of spatio-temporal information, such as the computation and analysis of scene objects’ trajectories, has proven to be a major challenge for real-world applications involving the reliable and automatic understanding of a sensed environment where agents evolve, whatever their level of autonomy. Hence, a wide range of tasks, from traffic monitoring (Yue and Revesz, 2012), video summarization (Cooharojananone et al., 2010), action prediction (Young and Hawes, 2014), and activity recognition (Sun et al., 2010; Zhang et al., 2013) to robot path planning (Dash et al., 2012) and UAV navigation aid (Kalantar et al., 2017), requires efficient models to represent spatio-temporal trajectories.

In particular, joint navigation in commander/robot teams (Summers-Stay et al., 2014; Olszewska, 2017a) needs both reliable, quantitative spatio-temporal data and efficient, qualitative spatio-temporal models in order to generate objects’ paths.

In the literature, objects’ trajectories are usually computed by quantitative methods that first identify objects’ motion with computer-vision techniques such as optical flow (Min and Kasturi, 2004), and then build the related trajectories by applying statistical models such as clustering (Zheng et al., 2005), local principal component analysis (Beleznai and Schreiber, 2010), or Bayesian networks (Zhang et al., 2013). Despite the effectiveness of these approaches, their grounded representation allows neither natural language processing nor automated reasoning about the trajectories.

On the other hand, some qualitative knowledge-based methods (Ligozat, 2012) have been developed to analyze the qualitative motion of objects, e.g. using interval temporal relations (Gagne and Trudel, 1996). Other state-of-the-art approaches focus on checking the consistency of an object’s path, based on simple spatial relations such as left, right, behind, and in front of (Kohler et al., 2004), cardinal directions (Brehar et al., 2011), or qualitative temporal interval models (Belouaer et al., 2012). However, these existing qualitative temporal models do not provide a fully integrated spatio-temporal model and rely only on limited, qualitative spatial concepts.

Hence, in this work, we aim to integrate the notion of time within qualitative spatial relations that are meaningful in natural language, such as the clock model (Olszewska, 2015), to build a complete and coherent spatio-temporal model, which we call the cylindric clock model, for both quantitative and qualitative analysis of scene objects’ kinematics.

Our cylindric clock model thus leads, on one hand,

to the cylindrical representation of the motion and

paths of the objects of interest belonging to the sensed

scene, rather than the traditional box representation

(Beleznai et al., 2006). Therefore, our approach al-

lows a new and user-friendly representation of static

Olszewska, J.

Cylindric Clock Model to Represent Spatio-temporal Trajectories.

DOI: 10.5220/0006649605590564

In Proceedings of the 10th International Conference on Agents and Artificial Intelligence (ICAART 2018) - Volume 2, pages 559-564

ISBN: 978-989-758-275-2

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

559

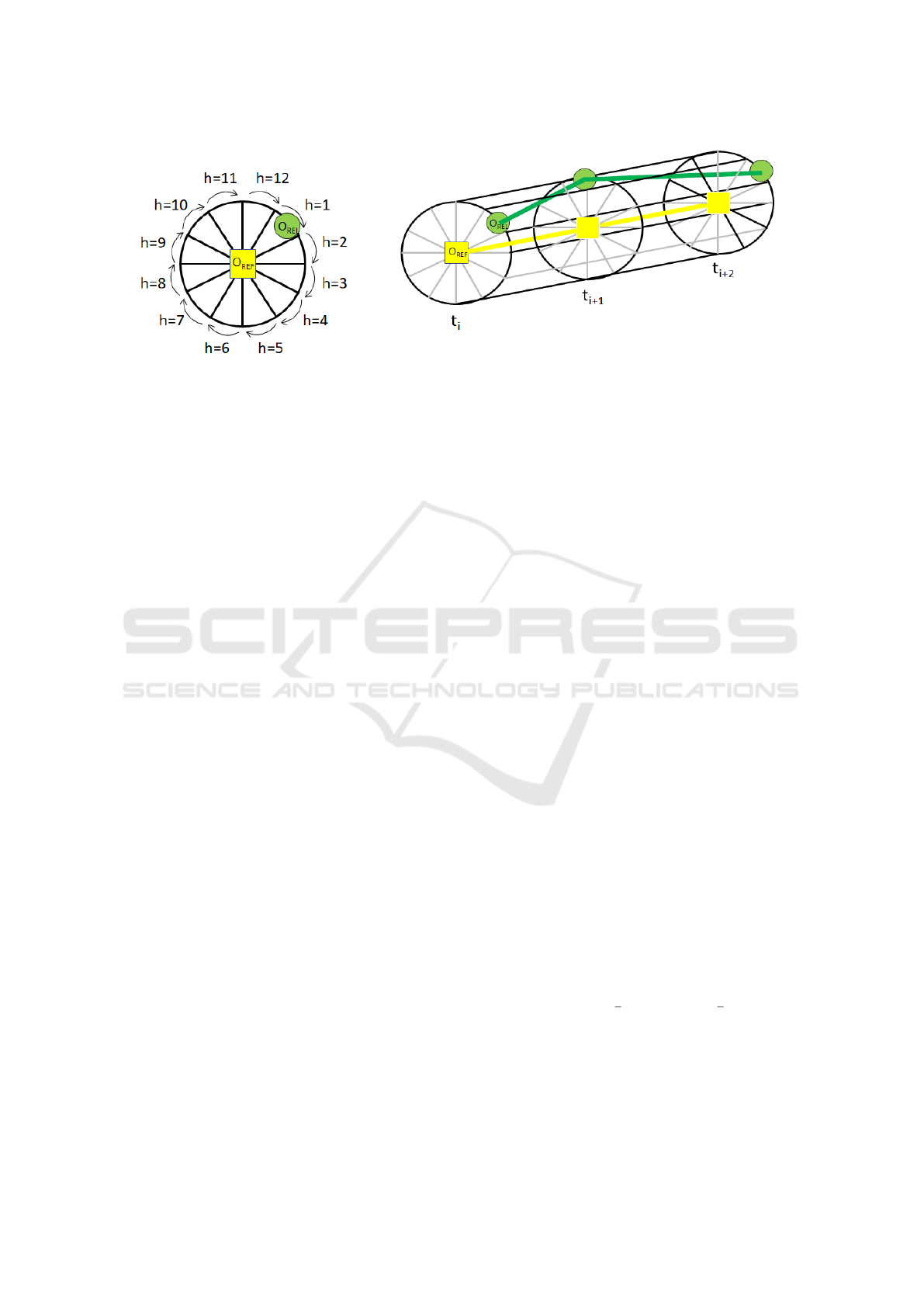

(a) (b)

Figure 1: Overview of (a) the spatial clock model; (b) the spatio-temporal cylindric clock model.

and dynamic objects within the observed scene. On

the other hand, our model conveys both quantitative

and qualitative spatio-temporal knowledge about the

scene objects as well as provides additional spatio-

temporal information, such as the semantic elucida-

tion of the relations between objects’ positions and

their change in time.

Thus, in the cylindric clock model, objects’ trajectories are represented in a three-dimensional (2D+1) space, with the (r, θ) spatial dimensions and the t time dimension, unlike two-dimensional representations with (x, t) coordinates (Bennett et al., 2008; Zhang et al., 2013).

As in (Barber and Moreno, 1997), we represent continuous change in the spatio-temporal space with discrete time. However, our temporal segmentation of the space-time scene provides a novel temporal model consisting of cylindrical segments, rather than the state-of-the-art linear time intervals (Allen, 1983; Halpern and Shoham, 1991), branching temporal structures (Bolotov and Dixon, 2000), or time points (Kowalski and Sergot, 1986; Dean and McDermott, 1987).

We validated our cylindric clock model by applying it to camera-acquired spatio-temporal data. It is worth noting that the model can process spatio-temporal data obtained by any sensor recording the scene and the motion of the objects of interest.

The main contribution of this work is the representation of an object’s trajectory by means of qualitative spatio-temporal relations and, in particular, its formalization as a cylindric clock model. Furthermore, this model leads to a new conceptualization of time in terms of cylindrical segments.

The paper is structured as follows. In Section 2, we present our qualitative spatio-temporal model, called the cylindric clock model. This method has been successfully tested on real-world datasets, as reported and discussed in Section 3. Conclusions are drawn in Section 4.

2 PROPOSED APPROACH

The proposed cylindric clock model is a 2D+1 spatio-temporal model, where the 2D space of the scene is divided into 12 parts (h) as per the spatial clock model (Olszewska, 2015), mapping the clock face as illustrated in Fig. 1(a), and where the time dimension t is discretized into temporal instants (i.e. $t_i$, $t_{i+1}$, etc.) represented as cylindrical segments (see Fig. 1(b)).

Hence, we formalise the cylindric clock model $C$ as follows:

$$C = C_{t_i} \vee \dots \vee C_{t_j}, \tag{1}$$

where $t_i$ and $t_j$ are the temporal instants when the observation of the scene starts and stops, respectively, with $t_i < t_j$, and where $C_{t_i}$ is a spatial relation between two objects of interest, namely the reference object $O_{REF}$ and the relative sought object $O_{REL}$, present in the 2D view of the scene at the instant $t_i$:

$$C_{t_i} = R(O_{REF}, O_{REL})_{t_i}, \tag{2}$$

with $R$ a 2D, directional, clock-based spatial relation such as $hCK$, with $h \in \{1, 2, \dots, 12\}$ and $h \in \mathbb{N}$.

For example, in Fig. 1, the clock-modeled relation between $O_{REF}$ and $O_{REL}$ at the instant $t_i$, in the $t_i$ 2D view, has the semantic meaning of the natural-language expression ‘is at 1 o’clock’ and is represented by the $hCK$ relation with $h = 1$, as follows:

$$hCK_{t_i} = \left\{ (r_{t_i}, \theta_{t_i}) \;\middle|\; \frac{\pi}{6} + 2k\pi < \theta_{t_i} \le \frac{\pi}{3} + 2k\pi,\; k \in \mathbb{N},\; h = 1 \right\}, \tag{3}$$

where (r

t

i

, θ

t

i

) are the polar coordinates in the 2D

view of the observed scene at the instant t

i

, where r

t

i

is the radius or distance d

t

i

between O

REF

and O

REL

in the t

i

2D view, and with θ

t

i

the polar angle between

ICAART 2018 - 10th International Conference on Agents and Artificial Intelligence

560

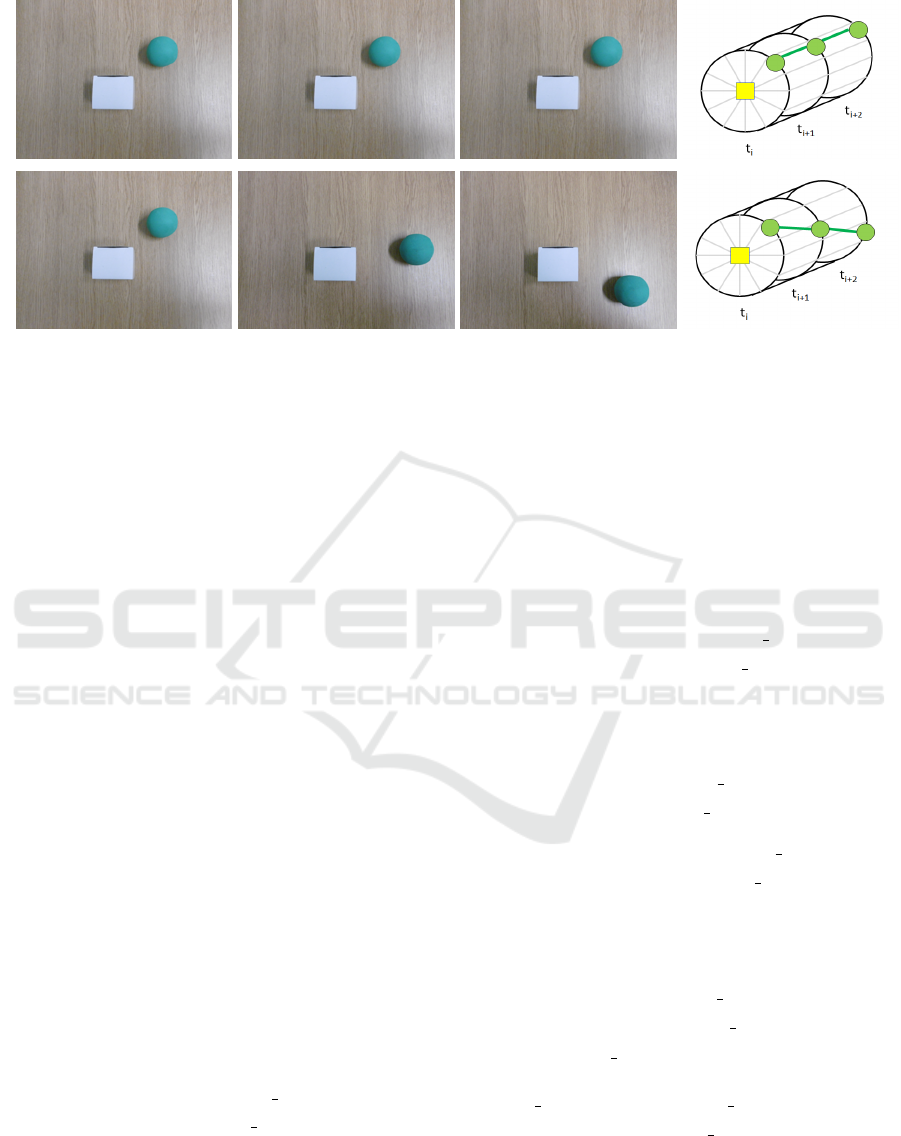

(a) (b) (c) (d)

Figure 2: Results samples for an observed scene with O

REF

(static white box) and O

REL

(green ball). Columns: (a)-(c) frames

of the scene captured at instants t

i

, t

i+1

, and t

i+2

, respectively; and (d) the resulting cylindrical temporal segments and O

REL

path represented by means of the spatio-temporal cylindric clock model. Rows: (1st) static O

REL

; (2nd) dynamic O

REL

.

the horizontal axis and the line determined by O

REF

and O

REL

in the t

i

2D view.

The trajectory $P$ of the object of interest can thus be defined as:

$$P = \{R_{t_i}, \dots, R_{t_j}\}, \quad i, j \in \mathbb{N},\; i < j, \tag{4}$$

for the object of interest’s path from instant $t_i$ to $t_j$ within the observed scene, taking into account the definitions in Eqs. 2-3.
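To make Eqs. 2-4 concrete, the following Python sketch computes the polar coordinates $(r_{t_i}, \theta_{t_i})$ of $O_{REL}$ relative to $O_{REF}$, assigns a clock relation hCK, and collects one relation per instant into the trajectory $P$. It is an illustration under stated assumptions, not the implementation used in the experiments: the paper only gives the angular sector for $h = 1$ (Eq. 3), so the general rule $\pi/2 - (h+1)\pi/6 < \theta \le \pi/2 - h\pi/6$ (mod $2\pi$) is our extrapolation, chosen because it reduces to Eq. 3 for $h = 1$ and places 3CK ‘right below’, as in the ground-truth descriptions of Section 3. Positions are assumed to be given in a y-up Cartesian frame, and the names `clock_relation` and `build_trajectory` are hypothetical.

```python
import math
from typing import List, NamedTuple, Tuple

Point = Tuple[float, float]  # (x, y) in a y-up Cartesian frame (assumption)


class ClockRelation(NamedTuple):
    r: float      # distance d_{t_i} between O_REF and O_REL
    theta: float  # polar angle from the horizontal axis, in (-pi, pi]
    h: int        # clock sector 1..12, i.e. the relation hCK


def clock_relation(ref: Point, rel: Point) -> ClockRelation:
    """Map O_REL's position relative to O_REF to (r, theta, hCK).

    Hypothetical generalisation of Eq. 3: hCK holds when
    pi/2 - (h+1)*pi/6 < theta <= pi/2 - h*pi/6 (mod 2*pi),
    which gives exactly pi/6 < theta <= pi/3 for h = 1.
    """
    dx, dy = rel[0] - ref[0], rel[1] - ref[1]
    r = math.hypot(dx, dy)
    theta = math.atan2(dy, dx)
    # Angle measured clockwise from the 12 o'clock direction, in [0, 2*pi).
    phi = (math.pi / 2 - theta) % (2 * math.pi)
    # Index of the 30-degree sector; min() guards against rounding at 2*pi.
    sector = min(int(phi // (math.pi / 6)), 11)
    h = 12 if sector == 0 else sector
    return ClockRelation(r, theta, h)


def build_trajectory(ref: Point, rel_positions: List[Point]) -> List[ClockRelation]:
    """Trajectory P = {R_{t_i}, ..., R_{t_j}} (Eq. 4): one relation per instant."""
    return [clock_relation(ref, p) for p in rel_positions]


if __name__ == "__main__":
    ref = (0.0, 0.0)  # static O_REF, e.g. the white box
    frames = [(1.0, 1.0), (1.0, 1.0), (1.0, -0.2)]  # O_REL at t_i, t_i+1, t_i+2
    print([rel.h for rel in build_trajectory(ref, frames)])  # [1, 1, 3]
```

Image coordinates usually have the y axis pointing downwards, so pixel positions would need their y component negated before being passed to this sketch.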

Unlike (Meyer and Bouthemy, 1993), we do not assume the continuity of the object’s velocity. Therefore, in our model, the $O_{REL}$ objects can be static, dynamic, or both, assuming $O_{REF}$ is always static.

If the $O_{REL}$ object is static within a period of observation of the scene $[k, p]$, with $k \in \mathbb{N}$, $k < p$ and $p \neq 0$, or if the $O_{REL}$ object stops moving for a period of time between instants $k$ and $p$, then we can express the static path $P_s \subseteq P$ as follows:

$$P_s \equiv \{\exists k, p,\; R_{t_k} = R_{t_{k+1}} = \dots = R_{t_p}\}; \tag{5}$$

otherwise, the $O_{REL}$ object is dynamic, in which case we can formalize the dynamic path as $P_d \subseteq P$, with:

$$P_d \equiv \{\exists l,\; R_{t_l} \neq R_{t_{l+1}},\; l \in \mathbb{N}_0\}. \tag{6}$$

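Furthermore, we define the hasMoved ontological concept using Description Logics (DL) (Baader et al., 2010), as follows:

$$\begin{aligned} \mathit{hasMoved} &\sqsubseteq \mathit{Temporal\ Relation} \\ &\sqsubseteq \mathit{Spatial\ Relation} \sqcap \exists O_{REF} \sqcap \exists O_{REL} \sqcap \neg \mathit{isStatic}, \end{aligned} \tag{7}$$

where isStatic is defined in terms of Eqs. 5-6.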
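Eqs. 5-7 can be read operationally on the sequence of clock labels of a trajectory. The sketch below, with hypothetical helper names, returns the index pairs $(k, p)$ of maximal runs where the relation stays unchanged (static sub-paths $P_s$), the indices $l$ where $R_{t_l} \neq R_{t_{l+1}}$ (dynamic part $P_d$), and a `has_moved` predicate that negates the static condition, mirroring the ¬isStatic term of Eq. 7. Comparing only the label h, rather than the full $(r, \theta)$ pair, is a simplifying assumption, and the DL ontology and its reasoner are not modelled here.

```python
from typing import List, Tuple


def static_segments(labels: List[int]) -> List[Tuple[int, int]]:
    """Return (k, p) index pairs, k < p, where R_{t_k} = ... = R_{t_p} (Eq. 5)."""
    segments = []
    start = 0
    for idx in range(1, len(labels) + 1):
        # Close the current run at the end of the list or when the label changes.
        if idx == len(labels) or labels[idx] != labels[start]:
            if idx - 1 > start:  # at least two instants, so k < p
                segments.append((start, idx - 1))
            start = idx
    return segments


def change_points(labels: List[int]) -> List[int]:
    """Return every index l with R_{t_l} != R_{t_{l+1}} (Eq. 6)."""
    return [i for i in range(len(labels) - 1) if labels[i] != labels[i + 1]]


def has_moved(labels: List[int]) -> bool:
    """hasMoved holds when the path is not static, i.e. some change exists (Eq. 7)."""
    return bool(change_points(labels))


if __name__ == "__main__":
    path = [1, 1, 1, 3, 3, 4]  # clock labels h at t_0 ... t_5
    print(static_segments(path))  # [(0, 2), (3, 4)]
    print(change_points(path))    # [2, 4]
    print(has_moved(path))        # True
```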

As the appearance and/or disappearance of objects of interest in the scene (Olszewska, 2017b) is an important issue for the continuity of the global trajectory of the objects (Meyer and Bouthemy, 1993), we introduce the hasAppeared and hasDisappeared ontological notions in our model with temporal DL (Artale and Franconi, 1999), as follows:

$$\begin{aligned} \mathit{hasAppeared}(@t_i) &\sqsubseteq \mathit{Temporal\ Relation} \\ &\sqsubseteq \mathit{Spatial\ Relation} \sqcap \exists O_{REF} \sqcap \exists O_{REL} \sqcap (t_{i-1})(t_i)(t_{i-1} < t_i) \\ &\quad \cdot \left(\neg \mathit{has\ hCK}@t_{i-1} \sqcap \mathit{has\ hCK}@t_i\right), \end{aligned} \tag{8}$$

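$$\begin{aligned} \mathit{hasDisappeared}(@t_i) &\sqsubseteq \mathit{Temporal\ Relation} \\ &\sqsubseteq \mathit{Spatial\ Relation} \sqcap \exists O_{REF} \sqcap \exists O_{REL} \sqcap (t_{i-1})(t_i)(t_{i-1} < t_i) \\ &\quad \cdot \left(\mathit{has\ hCK}@t_{i-1} \sqcap \neg \mathit{has\ hCK}@t_i\right), \end{aligned} \tag{9}$$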

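where the $\mathit{has\ hCK}(@t_i)$ ontological concept is introduced as follows:

$$\begin{aligned} \mathit{has\ hCK}(@t_i) &\sqsubseteq \mathit{Temporal\ Relation} \\ &\sqsubseteq \mathit{Spatial\ Relation} \sqcap \exists O_{REF} \sqcap \exists O_{REL} \sqcap (t_i) \\ &\quad \cdot \exists (1CK \sqcup \dots \sqcup 12CK)@t_i. \end{aligned} \tag{10}$$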


It is worth noting that in both cases, i.e. when an object of interest appears in or disappears from a scene, the result is a dynamic trajectory as defined by Eq. 6 of our cylindric clock model.
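The temporal conditions of Eqs. 8-10 can be checked in the same spirit on consecutive instants $t_{i-1} < t_i$. In this sketch, which is an assumption for illustration rather than the STVO encoding, an instant at which $O_{REL}$ is not observed is stored as `None`, so `has_hck` holds exactly when one of 1CK, ..., 12CK holds (Eq. 10); the function names are hypothetical.

```python
from typing import List, Optional

Label = Optional[int]  # clock label h in 1..12, or None when O_REL is not observed


def has_hck(labels: List[Label], i: int) -> bool:
    """Eq. 10: some relation among 1CK ... 12CK holds at t_i."""
    return labels[i] is not None


def has_appeared(labels: List[Label], i: int) -> bool:
    """Eq. 8: no hCK at t_{i-1}, some hCK at t_i."""
    return i > 0 and not has_hck(labels, i - 1) and has_hck(labels, i)


def has_disappeared(labels: List[Label], i: int) -> bool:
    """Eq. 9: some hCK at t_{i-1}, no hCK at t_i."""
    return i > 0 and has_hck(labels, i - 1) and not has_hck(labels, i)


if __name__ == "__main__":
    obs: List[Label] = [None, 2, 2, 3, None]  # O_REL enters at t_1, leaves at t_4
    print([i for i in range(len(obs)) if has_appeared(obs, i)])     # [1]
    print([i for i in range(len(obs)) if has_disappeared(obs, i)])  # [4]
```

Because `None` differs from every clock label, each appearance or disappearance also produces a change $R_{t_l} \neq R_{t_{l+1}}$, which is consistent with the remark that both events lead to dynamic trajectories.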

3 EXPERIMENTS AND DISCUSSION

To evaluate the performance of our spatio-temporal model, the relations introduced in Section 2 have been implemented within the STVO ontology (Olszewska, 2011), using the Protégé software and the FaCT++ automated reasoner.

Both development and experiments have been run on a computer with an Intel(R) Pentium(R) CPU N3540, 2.16 GHz, 4 GB RAM, and a 64-bit OS.

In our experiments on reasoning about real-world scenes with our 2D+1 relations, we merged two datasets: one from the work of (Olszewska, 2015) for static objects’ paths, and one with mixed static and dynamic trajectories of objects of interest. Samples of the datasets and the corresponding results are presented in Fig. 2.

In particular, the global dataset is composed of top views of camera-acquired scenes with two to five objects of interest evolving in a real-world environment, leading to 1214 possible spatio-temporal relations between two different objects, one static ($O_{REF}$) and one static and/or dynamic ($O_{REL}$). Furthermore, this entire dataset has a ground-truth file attached to it, where the semantic spatio-temporal relations have been identified by three humans for cross-validation purposes and described with the corresponding poll-winning result. As an example, the spatial description corresponding to Fig. 2(a) is ‘the green ball ($O_{REL}$) is 1CK (right above) the white box ($O_{REF}$)’, while the one related to Fig. 2(c) (2nd row) is ‘the green ball ($O_{REL}$) has moved to 3CK (right below) the white box ($O_{REF}$)’.
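The poll-winning ground truth described above amounts to a majority vote among the three annotators for each relation. The sketch below is an assumption about how such a vote can be computed; the function name is hypothetical, and since the paper does not state how a three-way disagreement was resolved, the sketch returns `None` in that case to flag it for manual review.

```python
from collections import Counter
from typing import List, Optional


def poll_winner(annotations: List[str]) -> Optional[str]:
    """Return the label chosen by a strict majority of annotators, else None."""
    if not annotations:
        return None
    label, votes = Counter(annotations).most_common(1)[0]
    return label if votes > len(annotations) / 2 else None


if __name__ == "__main__":
    print(poll_winner(["1CK", "1CK", "2CK"]))  # 1CK
    print(poll_winner(["1CK", "2CK", "3CK"]))  # None
```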

Table 1: Performance of our proposed approach for object move detection (experiment 1) and trajectory building (experiment 2), compared with the state-of-the-art methods (*) (Beleznai et al., 2006) and (**) (Belouaer et al., 2012), respectively. The presented rates are mean average values.

| | state-of-the-art | our approach (cylindric clock model) |
|---|---|---|
| experiment 1 (detection rate) | 93% (*) | 99.4% |
| experiment 2 (trajectory accuracy) | 97% (**) | 98.2% |

The first experiment consists of detecting $O_{REL}$ objects evolving in the recorded scenes, as illustrated in Fig. 2. For this purpose, we compared our algorithm’s performance in terms of standard detection rate with a traditional computer-vision-based method (Beleznai et al., 2006).

We can observe in Table 1 that our approach outperforms the state-of-the-art quantitative technique. Indeed, our average detection rate over the entire dataset is 99.4%, and it covers the detection of both static and dynamic objects of interest. Moreover, our system can perform automated reasoning about the scene’s objects of interest.

In the second experiment, we applied our method to the dataset images in order to consistently build the trajectories of the detected objects.

Examples of the results obtained when building objects’ trajectories within the spatio-temporal space are presented in Fig. 2 for both static and dynamic objects of interest.

We observe that a static object follows a linear trajectory within our model, whereas dynamic objects’ trajectories are of a helical type.
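This observation follows from the geometry of the model: placing each relation $(r_{t_i}, \theta_{t_i})$ at height $t_i$ maps it to the point $(r \cos\theta, r \sin\theta, t)$. A static $O_{REL}$ keeps the same $(x, y)$ at every instant, so its points lie on a vertical line, whereas an $O_{REL}$ moving around $O_{REF}$ at a constant distance traces a helix. The Python sketch below checks this on synthetic data; unit time steps, the circular motion, and the helper names are our assumptions, not data from the experiments.

```python
import math
from typing import List, Tuple


def to_cartesian(path: List[Tuple[float, float]]) -> List[Tuple[float, float, float]]:
    """Map (r, theta) at instants t = 0, 1, 2, ... to (x, y, t) points."""
    return [(r * math.cos(th), r * math.sin(th), float(t)) for t, (r, th) in enumerate(path)]


def is_vertical_line(points: List[Tuple[float, float, float]], tol: float = 1e-9) -> bool:
    """True when all points share the same (x, y), i.e. a static path."""
    x0, y0, _ = points[0]
    return all(abs(x - x0) < tol and abs(y - y0) < tol for x, y, _ in points)


if __name__ == "__main__":
    static = [(2.0, math.pi / 4)] * 4  # O_REL keeps the same relation
    # Constant distance, one clock sector clockwise per instant: a helix.
    helical = [(2.0, math.pi / 4 - k * math.pi / 6) for k in range(4)]
    print(is_vertical_line(to_cartesian(static)))   # True
    print(is_vertical_line(to_cartesian(helical)))  # False
```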

For experiment 2, we compared our approach’s performance in terms of the accuracy of the built trajectory with that of the qualitative method of (Belouaer et al., 2012), which uses well-established time intervals.

As reported in Table 1, our overall mean average accuracy rate across the dataset for the different scenes and objects of interest is 98.2%, outperforming the results obtained with existing qualitative temporal models (Belouaer et al., 2012), while our cylindric clock model provides the object’s path within a few milliseconds.

Hence, in both experiments, our model achieves excellent scores that outperform the state-of-the-art ones in accuracy, while remaining computationally efficient. Moreover, our cylindric clock model provides a meaningful natural-language interpretation of the spatio-temporal relations between the objects of interest and allows both numeric and semantic descriptions of their trajectories. Hence, our system could be used in real-world applications for the navigation of autonomous systems and/or multiple conversational agents.

4 CONCLUSIONS

In this work, we proposed new qualitative spatio-temporal relations leading to the cylindric clock model, which allows the 2D+1 representation of the trajectories of objects evolving in an observed scene, where time is conceptualized in terms of cylindrical segments. Experiments in a real-world context demonstrate the effectiveness and usefulness of our approach compared to state-of-the-art techniques. Thus, our model could be applied to intelligent multi-agent systems and/or to navigation and guidance aid for autonomous systems.

REFERENCES

Allen, J. (1983). Maintaining knowledge about temporal intervals. Communications of the ACM, 26(11):832–843.

Artale, A. and Franconi, E. (1999). Introducing temporal description logics. In Proceedings of the IEEE International Workshop on Temporal Representation and Reasoning (TIME), pages 2–5.

Baader, F., Calvanese, D., McGuinness, D. L., Nardi, D., and Patel-Schneider, P. F. (2010). The Description Logic Handbook: Theory, Implementation and Applications. Cambridge University Press, 2nd edition.

Barber, F. and Moreno, S. (1997). Representation of continuous change with discrete time. In Proceedings of the IEEE International Symposium on Temporal Representation and Reasoning (TIME), pages 175–179.

Beleznai, C., Fruhstuck, B., and Bischof, H. (2006). Multiple object tracking using local PCA. In Proceedings of the IEEE International Conference on Pattern Recognition (ICPR), pages 79–82.

Beleznai, C. and Schreiber, D. (2010). Multiple object tracking by hierarchical association of spatio-temporal data. In Proceedings of the IEEE International Conference on Image Processing (ICIP), pages 41–44.

Belouaer, L., Bouzid, M., and Mouhoub, M. (2012). Dynamic path consistency for spatial reasoning. In Proceedings of the IEEE International Conference on Tools with Artificial Intelligence (ICTAI), pages 1004–1009.

Bennett, B., Magee, D., Cohn, A., and Hogg, D. (2008). Enhanced tracking and recognition of moving objects by reasoning about spatio-temporal continuity. Image and Vision Computing, 26(1):67–81.

Bolotov, A. and Dixon, C. (2000). Resolution for branching time temporal logics: Applying the temporal resolution rule. In Proceedings of the IEEE International Symposium on Temporal Representation and Reasoning (TIME), pages 163–172.

Brehar, R., Fortuna, C., Bota, S., Mladenic, D., and Nedevschi, S. (2011). Spatio-temporal reasoning for traffic scene understanding. In Proceedings of the IEEE International Conference on Intelligent Computer Communication and Processing, pages 377–384.

Cooharojananone, N., Kasamwattanarote, S., Satoh, S., and Lipikorn, R. (2010). Real-time trajectory search in video summarization using direct distance transform. In Proceedings of the IEEE International Conference on Signal Processing, pages 932–935.

Dash, T., Mishra, G., and Nayak, T. (2012). A novel approach for intelligent robot path planning. In Proceedings of the AIRES Conference, pages 388–391.

Dean, T. and McDermott, D. (1987). Temporal data base management. Artificial Intelligence, 32(1):1–55.

Gagne, D. and Trudel, A. (1996). A topological transition based logic for the qualitative motion of objects. In Proceedings of the IEEE International Symposium on Temporal Representation and Reasoning (TIME), pages 176–181.

Halpern, J. and Shoham, Y. (1991). A propositional modal logic of time intervals. Journal of the ACM, 38(4):935–962.

Kalantar, B., Mansor, S. B., Halin, A. A., Shafri, H. Z. M., and Zang, M. (2017). Multiple moving object detection from UAV videos using trajectories of matched regional adjacency graphs. IEEE Transactions on Geoscience and Remote Sensing, 55(9):5198–5213.

Kohler, C., Ottlik, A., Nagel, H.-H., and Nebel, B. (2004). Qualitative reasoning feeding back into quantitative model-based tracking. In Proceedings of the European Conference on Artificial Intelligence (ECAI), pages 1041–1042.

Kowalski, R. and Sergot, M. (1986). A logic-based calculus of events. New Generation Computing, 4(1):67–95.

Ligozat, G. (2012). Qualitative Spatial and Temporal Reasoning. John Wiley & Sons.

Meyer, F. and Bouthemy, P. (1993). Exploiting the temporal coherence of motion for linking partial spatiotemporal trajectories. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), pages 746–747.

Min, J. and Kasturi, R. (2004). Extraction and temporal segmentation of multiple motion trajectories in human motion. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition Workshop (CVPR).

Olszewska, J. (2015). 3D Spatial reasoning using the clock model. In Research and Development in Intelligent Systems, volume 32, pages 147–154. Springer.

Olszewska, J. (2017a). Clock-model-assisted agent’s spatial navigation. In Proceedings of the International Conference on Agents and Artificial Intelligence (ICAART’17), pages 687–692.

Olszewska, J. (2017b). Detecting hidden objects using efficient spatio-temporal knowledge representation. In Revised Selected Papers of the International Conference on Agents and Artificial Intelligence. Lecture Notes in Computer Science, volume 10162, pages 302–313.

Olszewska, J. I. (2011). Spatio-temporal visual ontology. In Proceedings of the BMVA-EACL Workshop on Vision and Language.

Summers-Stay, D., Cassidy, T., and Voss, C. R. (2014). Joint navigation in commander/robot teams: Dialog and task performance when vision is bandwidth-limited. In Proceedings of the ACL International Conference on Computational Linguistics Workshop (COLING), pages 9–16.


Sun, J., Mu, Y., Yan, S., and Cheong, L.-F. (2010). Activity recognition using dense long-duration trajectories. In Proceedings of the IEEE International Conference on Multimedia and Expo, pages 322–327.

Young, J. and Hawes, N. (2014). Effects of training data variation and temporal representation in a QSR-based action prediction system. In Proceedings of the AAAI International Conference on Artificial Intelligence (AAAI).

Yue, H. and Revesz, P. (2012). TVICS: An efficient traffic video information converting system. In Proceedings of the IEEE International Symposium on Temporal Representation and Reasoning (TIME), pages 141–148.

Zhang, Y., Zhang, Y., Swears, E., Larios, N., Wang, Z., and Ji, Q. (2013). Modeling temporal interactions with interval temporal Bayesian networks for complex activity recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(10):2468–2483.

Zheng, J.-B., Feng, D. D., and Zhao, R.-C. (2005). Trajectory matching and classification of video moving objects. In Proceedings of the IEEE Workshop on Multimedia Signal Processing, pages 1–4.
