Virtual Exploration: Seated versus Standing

Noah Coomer, Joshua Ladd and Betsy Williams

Department of Mathematics and Computer Science, Rhodes College, 2000 N Parkway, Memphis, TN, U.S.A.

Keywords:

Virtual Environments, Spatial Updating, Locomotion.

Abstract:

Virtual environments are often explored standing up. The purpose of this work is to understand whether standing exploration has an advantage over seated exploration. We present an experiment that directly compares subjects’ spatial awareness when locomoting with a joystick while physically standing versus sitting. In both conditions, virtual rotations matched the physical rotations of the subject, and the joystick was used only for translations through the virtual environment. In the seated condition, users sat in an armless swivel office chair. Our results indicated no difference between the two conditions, sitting and standing. This null result is nonetheless interesting and might encourage more virtual environment developers to let their users sit in a comfortable swivel chair. As an additional finding, we observed significant differences in performance between males and females and between gamers and non-gamers.

1 INTRODUCTION

With the introduction of low-cost, commodity-level immersive virtual systems (like the Oculus Rift, HTC Vive, and GearVR), the use of virtual reality (VR) has become more widespread. With these systems, users wear a head-mounted display (HMD) to view a 3D computer-generated world. Exciting new research involving this equipment has been published, such as analyzing the effectiveness of directional signage (Huang et al., 2016), virtually visiting the historic Selimiye Mosque in Turkey (Kersten et al., 2017), allowing doctors to visualize 3D MRI slices (Duncan et al., 2017), and treating spider phobia (Miloff et al., 2016). When playing video games, HMDs have been shown to produce stronger, more immersive experiences than desktops and televisions (Pallavicini et al., 2017). Given the relatively low cost of these immersive virtual systems and their potential to produce more meaningful experiences, VR might have a large impact on learning, social interaction, medical care, worker training, entertainment, and so on. However, much is still unknown about how humans learn in a virtual environment (VE) and how this learning differs from learning in the real world.

Humans reason about space in a VE in a way that is functionally similar to the real world (Williams et al., 2007). That is, they update their spatial knowledge, or spatial orientation, as their relationship to objects in the environment changes, much as they do in the real world. However, humans are more disoriented in VEs (Darken and Peterson, 2014; Kelly et al., 2013). Thus, navigation, the most common way people interact with a VE (Bowman et al., 2004), can leave people disoriented. Exploring a VE by physically walking seems to result in the best spatial awareness (Darken and Peterson, 2014), but the size of the space that can be explored with a tracking system is limited without additional interventions such as teleporting. Much work has examined how best to explore a VE larger than the tracked space while maintaining spatial awareness (Kitson et al., 2017; Riecke et al., 2010; Suma et al., 2012; Wilson et al., 2016; Zielasko et al., 2016).

Much of the recent navigation work has focused on engaging the user in physical movement, as it seems to result in better spatial awareness of the VE than using a joystick does (Wilson et al., 2016; Waller and Hodgson, 2013). In contrast, Riecke et al. (2010) found that when the joystick was combined with physical rotations, performance was similar to that of actual walking in terms of search efficiency and time.

Many of the locomotion methods proposed by researchers (Kitson et al., 2017; Suma et al., 2012; Wilson et al., 2016; Zielasko et al., 2016) and used in industry (such as a tracked system with teleporting (Bozgeyikli et al., 2016)) require the user to stand up to explore the VE. Standing can be tiring for VR users, especially while wearing a headset that can get warm. Sedentary behaviors such as sitting in front of a television or computer and riding in an automobile typically fall in the energy-expenditure range of 1.0 to 1.5 METs (multiples of the resting metabolic rate) (Ainsworth et al., 2000). Because seated activities involve low energy expenditure, seated VR might feel more natural and could encourage the widespread adoption of VR for a variety of applications, especially entertainment.

Coomer, N., Ladd, J. and Williams, B.

Virtual Exploration: Seated versus Standing.

DOI: 10.5220/0006624502640272

In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2018) - Volume 1: GRAPP, pages 264-272

ISBN: 978-989-758-287-5

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Figure 1: This picture shows the two conditions, standing and seated exploration in a VE.

In this work, we examine what happens to spatial orientation when subjects explore a VE with a joystick while seated as compared to standing. Subjects used the joystick for translating through the VE, while rotations matched their physical rotations in the real world. Thus, similar to Riecke et al. (2010), subjects in our experiment translated in the direction their head was facing by pushing the joystick forward. The major difference between the two conditions was that in one condition the subjects were standing and in the other they were seated on a swivel chair. In both conditions, eye height matched the physical eye height of the participant. We could have studied distance perception or compared different locomotion interfaces for seated versus standing use. However, we were interested in what happens when people move and navigate through environments while seated, so we ran an experiment that examines spatial awareness as subjects move through a VE. Most video games involve some sort of haptic device that allows the user to move around in the environment, and joystick translations plus physical rotations can easily be achieved while either sitting or standing.

In the real world, there is some evidence of differences between seated and standing observations. Carello et al. (1989) conducted four real-world experiments and found different effects for reaching when people stood versus sat. While sitting, subjects tended to overestimate the reach of their arm when imagining picking up a target object placed at different distances in front of them. While standing upright, participants underestimated their perceived reachability. In VEs, not much is known about seated versus standing observations. Much work has studied the similarities and differences in distance estimation between real and virtual environments (Loomis and Knapp, 2003; Willemsen and Gooch, 2002; Li et al., 2016). These works all find that subjects underestimate distances in VEs.

2 BACKGROUND

Haptic devices, such as joysticks or keyboards, have been used to explore environments for many years (Ruddle et al., 1999; Bowman et al., 1999; Waller et al., 1998). However, studies have consistently shown that physically walking, rather than using a haptic device, produces significantly better spatial orientation (Ruddle and Lessels, 2006; Lathrop and Kaiser, 2002). Suma et al. (2007) showed that using position and orientation tracking with an HMD is significantly better than a system that combines orientation tracking with a haptic device for translations. However, Riecke et al. (2010) found that joystick translations combined with physical rotations led to better performance than joystick-only navigation and yielded performance almost comparable to actual walking in terms of search efficiency and time. Thus, in our experiment, the users’ orientation in the VE matched their physical head orientation.

Nybakke et al. (2012) performed a within-subjects experiment similar to that of Riecke et al. (2010), in which subjects searched the contents of 16 randomly positioned and oriented boxes to find 8 hidden targets using four locomotion modes: real walking, joystick translation with real rotation while standing, joystick translation while sitting, and a motorized wheelchair. They found a marginal effect of condition on the number of revisits to the same hidden target, but pairwise comparisons did not reveal differences. They also found that the distance traveled was smaller in the walking condition and that trials took less time to complete when users physically walked. The spatial orientation task in our experiment is similar to that of Williams et al. (2006), who found a significant advantage of walking over joystick plus physical rotation exploration. We suggest that the task used by Nybakke et al. (2012) and Riecke et al. (2010) may not be difficult enough to reveal differences, which could explain why Riecke et al. found results that are somewhat inconsistent with the literature. Waller et al. (2003) found that people who received the motion cues from riding in a car were no better at learning the spatial layout


of the environment than people who were provided with only the visual optical flow of the same experience. However, in both of these conditions, the participants passively experienced the motion and visual stimuli and did not actively control the process. Waller and Greenauer (2007) presented a study intended to contrast the effects of visual, proprioceptive, and inertial information on acquiring spatial knowledge in a large-scale environment. Using a variety of measures, such as pointing, distance estimation, and map drawing, they observed very few differences among groups of participants who had access to various combinations of visual, proprioceptive, and inertial information. Proprioceptive information did produce a small effect: pointing accuracy was better for people who had proprioceptive cues.

In our everyday lives, we constantly change our viewing perspective by sitting, standing, and so on, yet the perceived size of objects remains the same. As Wraga (1999) points out, this may be because of familiar size or previous knowledge about size and shape (Gogel, 1977; Rock, 1975). Gibson (1950) showed that the ground plane provides crucial information about an object’s location in an environment. Gibson gave two reasons why the ground plane plays such a crucial role in our understanding of space: first, humans rely on ground surfaces for locomotion, and second, ground planes are universal whereas ceilings are mostly human-made. Thus, the ground or horizon is important for perceiving the distance and size of objects. More specifically, the angle of declination from the horizon line to an object is an important source of information. The angle of declination is defined as the vertical viewing angle from a target object to the horizon. In nature, the horizon is always at the observer’s eye level. Therefore, the closer an object on the ground appears to the horizon (that is, the smaller its angle of declination), the farther away it appears to the observer. In the experiment presented in this work, users explore a 50 m by 50 m outdoor environment with a visible ground plane and horizon.

Other studies have shown the importance of eye height. Wraga (1999) compared seated, standing, and ground-level prone observations and found that seated and standing observations are similar, but prone observations are significantly less accurate. Warren (1984) found that people judged whether they could sit on a surface according to whether the surface height exceeded 88% of their leg length. Moreover, people choose to climb or sit on a surface according to the relationship between the surface’s height and their eye height (Mark, 1987). Prior work has shown that manipulating eye height can produce a predictable effect on distances judged with verbal estimates and thus could be a promising way to reduce or even eliminate distance underestimation consistently across different measures (Leyrer et al., 2015). Additionally, prior work found that eye height manipulation affects not only perceived distances but also judgments of single room dimensions, suggesting a change in perceived scale rather than a cognitive correction in distance judgments (Leyrer et al., 2011).
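The angle-of-declination cue discussed above can be made concrete with a little trigonometry. The sketch below is illustrative only: it assumes a flat ground plane and a target resting on the ground, and the seated and standing eye heights (1.2 m and 1.6 m) are hypothetical values, not measurements from this study. Under those assumptions, an observer with eye height h sees a ground point at horizontal distance d at a declination θ = atan(h / d), so d = h / tan θ.

```python
import math

# Hypothetical eye heights for illustration only (not measured in the study).
SEATED_EYE_HEIGHT_M = 1.2
STANDING_EYE_HEIGHT_M = 1.6


def declination_deg(eye_height_m: float, distance_m: float) -> float:
    """Angle below the horizon (degrees) of a point on flat ground at distance_m."""
    return math.degrees(math.atan2(eye_height_m, distance_m))


def distance_from_declination(eye_height_m: float, angle_deg: float) -> float:
    """Ground distance implied by a declination angle, assuming flat ground."""
    return eye_height_m / math.tan(math.radians(angle_deg))


if __name__ == "__main__":
    for d in (2.0, 8.0, 25.0):
        seated = declination_deg(SEATED_EYE_HEIGHT_M, d)
        standing = declination_deg(STANDING_EYE_HEIGHT_M, d)
        print(f"{d:5.1f} m: seated {seated:5.2f} deg, standing {standing:5.2f} deg")
```

For a fixed distance, the lower seated eye height yields a smaller declination angle, so the two conditions present the same layout with different angular cues.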

3 EXPERIMENTAL EVALUATION

We compare seated joystick and standing joystick exploration in this experiment. We tested 24 subjects (12 female, 12 male) on their spatial orientation in the environment using a within-subjects design. After a user finished both navigation methods, we asked them a series of questions. In both conditions, pushing the joystick all the way forward resulted in translating through the VE at a rate of 1 m/s, which is approximately normal walking speed. Participants moved with the joystick in the yaw direction of their gaze in both conditions. Eye height in the VE matched the physical eye height of the participant; thus, seated exploration was at a lower eye height than standing exploration. In both conditions, the subjects memorized a set of objects, locomoted to various positions, and then turned to face these remembered target objects.
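A minimal sketch of the locomotion mapping described above: the joystick sets speed along the yaw (horizontal) direction of the head, with full forward deflection giving 1 m/s, while rotation comes only from physical head turns. The coordinate convention (x and z on the ground, yaw 0° along +z and increasing clockwise when viewed from above), the deflection range of [-1, 1], and the names `GroundPosition` and `step` are assumptions for illustration, not details of the study's Unity implementation.

```python
import math
from dataclasses import dataclass

MAX_SPEED_M_PER_S = 1.0  # full forward deflection -> 1 m/s, as stated in the text


@dataclass(frozen=True)
class GroundPosition:
    x: float  # metres along a hypothetical ground axis
    z: float  # metres along the perpendicular ground axis


def step(pos: GroundPosition, head_yaw_deg: float, stick_forward: float,
         dt_s: float) -> GroundPosition:
    """Advance pos along the head's yaw direction; head pitch and roll are ignored."""
    deflection = max(-1.0, min(1.0, stick_forward))  # assumed deflection range [-1, 1]
    yaw = math.radians(head_yaw_deg)  # assumed: 0 deg faces +z, clockwise from above
    distance = MAX_SPEED_M_PER_S * deflection * dt_s
    return GroundPosition(pos.x + distance * math.sin(yaw),
                          pos.z + distance * math.cos(yaw))


if __name__ == "__main__":
    pos = GroundPosition(0.0, 0.0)
    for _ in range(180):  # 2 s of full forward deflection at a 90 Hz update rate
        pos = step(pos, head_yaw_deg=90.0, stick_forward=1.0, dt_s=1 / 90)
    print(f"x = {pos.x:.3f} m, z = {pos.z:.3f} m")  # about 2 m along +x
```

Because only the head's yaw enters the update, looking up or down does not change the travel direction, which matches translating "in the yaw-direction of gaze."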

3.1 Materials

The experiment was conducted in a 3 m by 3 m room that contained only the head-mounted display. The immersive VE was viewed through an HTC Vive head-mounted display (HMD), which has a resolution of 1080x1200 per eye, a diagonal field of view of 110°, a weight of 0.47 kg, and a refresh rate of 90 Hz. Head orientation was updated using the Vive's orientation tracking. The joystick used in this experiment was a Logitech Extreme 3D Pro. Graphics were rendered using Unity. The swivel desk chair used in the experiment was a HON Volt Series 5701 Task Chair.

The immersive VE used in the experiment was a circular grassy plane (50 m diameter) with a generic dome backdrop depicting the sky, as seen in Figure 2. For each condition, the user memorized the positions of 6 objects placed around the environment on columns (Figure 2). The heights of the columns varied so that the tops of the objects were always at the same level. After the user had memorized the locations of the objects, the tests began. The six target objects were arranged in a particular configuration such that the configurations in the two conditions differed only by a rotation about the center axis. In this manner, the correct yaw-angle responses were preserved across all conditions. The random order of trials and the different objects concealed the fact that the arrangement was the same throughout the experiment. Objects were similar in size and height and were placed on pillars. We used a red cylinder and a red floating sphere to indicate the position and orientation, respectively, of the testing location for a particular trial. The red cylinder marked the position the subject needed to occupy for that particular turn-to-face trial, and the correct orientation was facing the red sphere while at the red cylinder. In this manner, the cylinder and sphere were used to control the exact testing locations and orientations of the subjects.

GRAPP 2018 - International Conference on Computer Graphics Theory and Applications

Figure 2: A view of the virtual environment.
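A rotated copy of the configuration preserves the correct responses because a rigid rotation about the center changes every bearing by the same amount, so the angle between facing the sphere and facing the target is unchanged. The sketch below checks this numerically for one hypothetical testing location, sphere, and target. The coordinates, the 137° rotation, the helper names, and the treatment of the floating sphere as a point on the ground plane are illustrative assumptions, not the study's actual layout.

```python
import math

Point = tuple[float, float]  # (x, z) on the ground plane, metres


def rotate_about_center(p: Point, angle_deg: float) -> Point:
    """Rotate a ground-plane point about the environment center (0, 0)."""
    a = math.radians(angle_deg)
    x, z = p
    return (x * math.cos(a) - z * math.sin(a), x * math.sin(a) + z * math.cos(a))


def bearing_deg(frm: Point, to: Point) -> float:
    """Direction from frm to to, in degrees (0 deg along +z)."""
    return math.degrees(math.atan2(to[0] - frm[0], to[1] - frm[1]))


def correct_turn_deg(location: Point, sphere: Point, target: Point) -> float:
    """Signed turn in [-180, 180) from facing the sphere to facing the target."""
    d = bearing_deg(location, target) - bearing_deg(location, sphere)
    return (d + 180.0) % 360.0 - 180.0


if __name__ == "__main__":
    # Hypothetical layout: a testing location, its sphere, and one target.
    location, sphere, target = (2.0, -4.0), (2.0, -2.0), (-5.0, 1.0)
    original = correct_turn_deg(location, sphere, target)
    moved = [rotate_about_center(p, 137.0) for p in (location, sphere, target)]
    print(f"{original:.4f} deg vs {correct_turn_deg(*moved):.4f} deg")
```

The same property holds for any rotation angle, which is why the conditions could share one set of correct answers while looking different to the participant.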

In both conditions, the target objects and testing locations were located within a radius of 8 m from the center of the plane. Subjects were instructed to remain relatively close to the center, and the experimenter told participants to move back toward the center of the environment if they ventured too far from it. In this manner, the explored space was roughly the same in both conditions.

3.2 Procedure

Each of the 24 participants explored each of the environments under the two different translational locomotion conditions, seated joystick and standing joystick. Half of the subjects performed the seated condition first and half performed the standing condition first. Order was also counterbalanced for gender. The experimental procedure was verbally explained to the subjects using physical props before they saw the VEs. Before seeing the target objects in each condition, the participant was shown two objects on pillars that did not appear in our test set. Participants performed several practice trials in this environment so that they were familiar with the setup and the experimental design. After the subject understood the task and the condition, the practice target objects disappeared. The participant then practiced the locomotion condition by moving to various targets in the environment for 2 minutes. Once participants were comfortable with this, they were asked to memorize the set of target objects. During the learning phase, subjects learned the positions of the six target objects while freely moving around the VE according to the condition they were in. After about five minutes of study, the experimenter tested the subject by having them close their eyes and point to randomly selected targets. This learning and testing procedure was repeated until the subject felt confident that the configuration had been learned and the experimenter agreed.
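The counterbalancing described above (within each gender, half of the participants start seated and half start standing) can be expressed as a small assignment routine. This is a hypothetical sketch: the paper does not say how participants were allocated to orders, so the random shuffle, the seed, and the participant IDs are assumptions.

```python
import random


def assign_orders(participants: list[tuple[str, str]],
                  seed: int = 0) -> dict[str, tuple[str, str]]:
    """Map participant ID -> condition order, balanced within each gender.

    participants is a list of (participant_id, gender) pairs.
    """
    rng = random.Random(seed)  # fixed seed makes the assignment reproducible
    orders: dict[str, tuple[str, str]] = {}
    for gender in sorted({g for _, g in participants}):
        ids = [pid for pid, g in participants if g == gender]
        rng.shuffle(ids)
        half = len(ids) // 2  # with an odd count, the extra person starts standing
        for i, pid in enumerate(ids):
            orders[pid] = ("seated", "standing") if i < half else ("standing", "seated")
    return orders


if __name__ == "__main__":
    # Hypothetical IDs: 12 female and 12 male participants, as in the study.
    people = [(f"P{i:02d}", "F" if i <= 12 else "M") for i in range(1, 25)]
    orders = assign_orders(people)
    for gender in ("F", "M"):
        seated_first = sum(1 for pid, g in people
                           if g == gender and orders[pid][0] == "seated")
        print(gender, "seated first:", seated_first)
```

With 12 participants per gender, each order is used by exactly 6 females and 6 males, so order and gender are not confounded.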

Participants’ spatial knowledge was tested from six different locations. A given testing position and orientation was indicated to the subject by the appearance of a red cylinder and a red sphere in the environment. Participants were instructed to locomote to the cylinder until it turned green and then turn to face the floating sphere until it turned green. When both the cylinder and sphere were green, the participant was in the appropriate position. At that point, the objects were hidden so that the participant saw only the cylinder and the sphere on a black background. When the participant was told which object to turn to face, the cylinder and sphere disappeared and the participant briefly saw the name of the target object. Specifically, the subjects were told to “turn to face the <target name>”. Once the participant indicated that they had turned to face the object, the angle turned, the angle of correct response, and the latency associated with turning to face the object were recorded. The cylinder and sphere then reappeared, and the participant turned back to face the sphere until it turned green again. Then, the subject was instructed to face another target object. At each location, the subject completed three trials by turning to face three different target objects in the environment, making 18 trials per condition. After completing three trials at a particular testing location, the participant was asked to face the sphere until it turned green before the environment and objects were displayed again, so that the participant would not receive any feedback. After the environment and objects were shown again, the cylinder and sphere were moved to the next testing location. Subjects were encouraged to reorient themselves after completing a testing location.

To compare the angles of correct response across conditions, the same trials were used in each condition. The testing locations and target locations were analogous in both conditions, and targets varied randomly across the environments. The trials were designed so that the disparity was evenly distributed in the range of 20–180°. That is, the correct angle of response for each trial was evenly distributed in the range of 20–180°. Also, the testing locations were positioned so that subjects would never turn to face a target object closer than 0.8 m.
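The trial constraints above (18 trials per condition, correct-response angles evenly spread over 20–180°, no target closer than 0.8 m) can be captured in a short check. This sketch assumes "evenly distributed" means equal spacing and treats the correct angles as unsigned magnitudes; both are interpretations, and the helper names are hypothetical.

```python
N_LOCATIONS = 6
TARGETS_PER_LOCATION = 3
N_TRIALS = N_LOCATIONS * TARGETS_PER_LOCATION  # 18 trials per condition
MIN_TARGET_DISTANCE_M = 0.8


def evenly_spaced_angles(lo: float = 20.0, hi: float = 180.0,
                         n: int = N_TRIALS) -> list[float]:
    """n correct-response magnitudes spaced evenly from lo to hi (inclusive)."""
    # Multiply before dividing so the last value lands exactly on hi.
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]


def satisfies_constraints(trials: list[tuple[float, float]]) -> bool:
    """trials: (correct_angle_deg, target_distance_m) pairs for one condition."""
    return (len(trials) == N_TRIALS
            and all(20.0 <= abs(a) <= 180.0 for a, _ in trials)
            and all(d >= MIN_TARGET_DISTANCE_M for _, d in trials))


if __name__ == "__main__":
    angles = evenly_spaced_angles()
    print([round(a, 1) for a in angles])
    # Hypothetical target distances, all at least 0.8 m.
    print(satisfies_constraints([(a, 3.0) for a in angles]))
```

Equal spacing gives a step of 160/17, roughly 9.4°, between consecutive correct angles.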


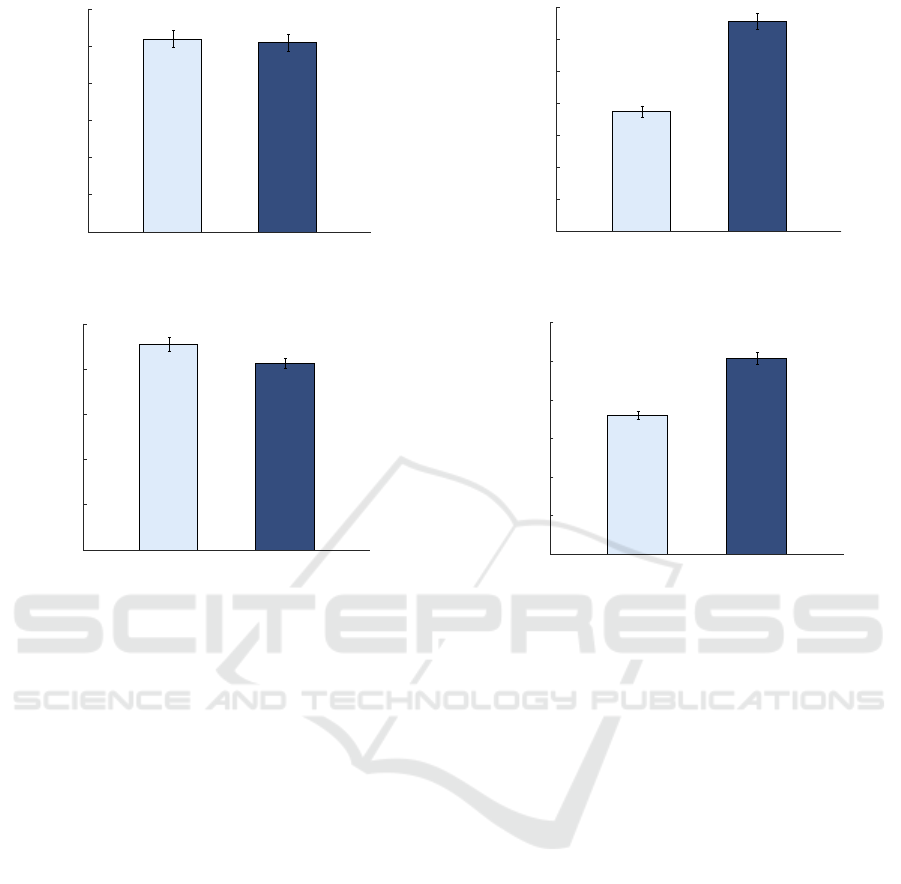

[Plot: Turning Error (degrees), Seated vs. Standing]

Figure 3: Mean turning error for the seated and standing conditions. Error bars show the standard error of the mean.

[Plot: Latency (seconds), Seated vs. Standing]

Figure 4: Mean latency for the seated and standing conditions. Error bars indicate the standard error of the mean.

To assess the difficulty of updating orientation relative to objects in the VE, latencies and errors were recorded. Latencies were measured from the time the target was identified until subjects said they had completed their turning movement and were facing the target. Unsigned turning errors were measured as the absolute value of the difference between the angle turned from the initial facing direction (toward the sphere) and the correct angle of response. The subjects indicated verbally to the experimenter that they were facing the target, and their time and orientation were then recorded. The time was recorded by computer, and the rotational position was recorded using the orientation sensor on the HTC Vive HMD. Subjects were encouraged to respond as rapidly as possible while maintaining accuracy.
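The unsigned turning error needs care with angle wrap-around: a response 5° past the ±180° boundary should count as a small error, not a large one. A minimal sketch, assuming yaw angles in degrees from the HMD and a signed correct turn; the function names are hypothetical, and the paper does not describe its computation at this level of detail.

```python
def wrap180(angle_deg: float) -> float:
    """Wrap an angle to the interval [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def unsigned_turning_error(initial_yaw_deg: float, final_yaw_deg: float,
                           correct_turn_deg: float) -> float:
    """|angle turned - correct angle|, both measured from the sphere direction."""
    turned = wrap180(final_yaw_deg - initial_yaw_deg)  # signed turn actually made
    return abs(wrap180(turned - correct_turn_deg))


if __name__ == "__main__":
    # Facing the sphere at 350 deg, ending at 95 deg: a 105 deg turn to the right.
    print(unsigned_turning_error(350.0, 95.0, 110.0))  # 5.0
```

This sketch scores only the final heading, so a 270° turn to the right counts the same as a 90° turn to the left; errors therefore range from 0° to 180°.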

After the experiment, subjects were asked a few questions. They rated both conditions on a scale of 1 to 5 according to how well they thought they did. They were also asked to choose which method they liked better, seated or standing exploration. They also indicated whether they regularly play first-person video games and whether they had ever experienced an immersive VE.

[Plot: Turning Error (degrees), Male vs. Female]

Figure 5: Mean turning error collapsed by gender. Error bars indicate the standard error of the mean.

[Plot: Latency (seconds), Male vs. Female]

Figure 6: Mean latency collapsed by gender. Error bars indicate the standard error of the mean.

3.3 Results

Figures 3 and 4 show the subjects’ mean turning errors and latencies by locomotion condition in the VE. Nine of our 24 subjects regularly play video games, and 9 of the 24 had previously experienced a VE. Only a few subjects were both gamers and had prior experience in an immersive VE. Subjects were asked to report how well they did on a 5-point Likert scale, with 1 indicating that they felt their performance was bad and 5 indicating that they felt it was good. For the seated condition, the average rating across participants was 2.875; for the standing condition it was slightly lower, at 2.79. Eleven subjects preferred the seated condition, while 13 preferred the standing condition.

We compared mean turning error between the conditions using a repeated measures ANOVA and did not find any significant difference between the two conditions. Thus, subjects were similarly accurate when turning to face the locations of target objects in both conditions. A similar analysis of latency found a marginal difference between the seated and standing conditions, F(1, 23) = 3.621, p = 0.07. We also ran a repeated measures ANOVA on turning errors with order as a between-subjects factor and did not find


[Plot: Turning Error (degrees), Non-Gamer vs. Gamer]

Figure 7: Mean turning error collapsed by video game experience. Error bars indicate the standard error of the mean.

[Plot: Latency (seconds), Non-Gamer vs. Gamer]

Figure 8: Mean latency collapsed by video game experience. Error bars indicate the standard error of the mean.

an effect of condition, order, or an interaction of condition and order. An analysis of latency with order as a between-subjects factor also did not reveal any effects. Thus, subjects did not seem to become more accurate or faster over the course of the experiment.
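For a single within-subjects factor with two levels (seated versus standing), the repeated measures ANOVA F statistic equals the square of the paired-samples t statistic, with df = (1, n − 1); with n = 24 this gives the F(1, 23) form reported above. The sketch below computes it from per-participant condition means using only the Python standard library. It does not handle the between-subjects factors (order, gender, gamer status) used in the other analyses, which need a mixed-design ANOVA, and the example values are made up.

```python
import math
import statistics


def rm_anova_two_conditions(a: list[float], b: list[float]) -> tuple[float, int, int]:
    """F, df_effect, df_error for a one-factor, two-level within-subjects ANOVA.

    a[i] and b[i] are participant i's means in the two conditions.
    With two levels, F equals the square of the paired t statistic.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need paired data from at least two participants")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    sd = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    if sd == 0:
        raise ValueError("differences have zero variance; F is undefined")
    t = statistics.fmean(diffs) / (sd / math.sqrt(n))
    return t * t, 1, n - 1


if __name__ == "__main__":
    # Hypothetical per-participant mean latencies (s), not data from this study.
    seated = [3.1, 2.8, 4.0, 3.5, 2.9, 3.7]
    standing = [3.4, 3.0, 4.1, 3.9, 3.2, 3.6]
    f, df1, df2 = rm_anova_two_conditions(seated, standing)
    print(f"F({df1}, {df2}) = {f:.3f}")
```

A p-value can then be read from the F(1, n − 1) distribution, for example with SciPy's `scipy.stats.f.sf(f, df1, df2)`.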

We analyzed mean turning error between the conditions using a repeated measures ANOVA with gender as a between-subjects factor. The analysis did not reveal an effect of condition or a two-way interaction between condition and gender for turning errors. However, we found a significant effect of gender on turning errors, F(1, 22) = 11.72, p = .002. A similar analysis of latency yielded similar results, with only a significant effect of gender, F(1, 22) = 7.26, p = .013. Figures 5 and 6 show the mean turning errors and latencies by gender. The differences between these two groups are considerable: males responded faster and more accurately than females.

Within our pool of 24 subjects, we had 9 gamers

and 15 non–gamers. Gamers identified themselves as

those who self reported that they regularly play video

games. We ran a repeated measures ANOVA on turn-

ing error and latency with gamers and non-gamers as

a between group factor. There were no significant ef-

fects of condition or the interaction of condition and

gamer for turning error and latency. However, there

was an effect of gamer for turning errors (F(1, 22) =

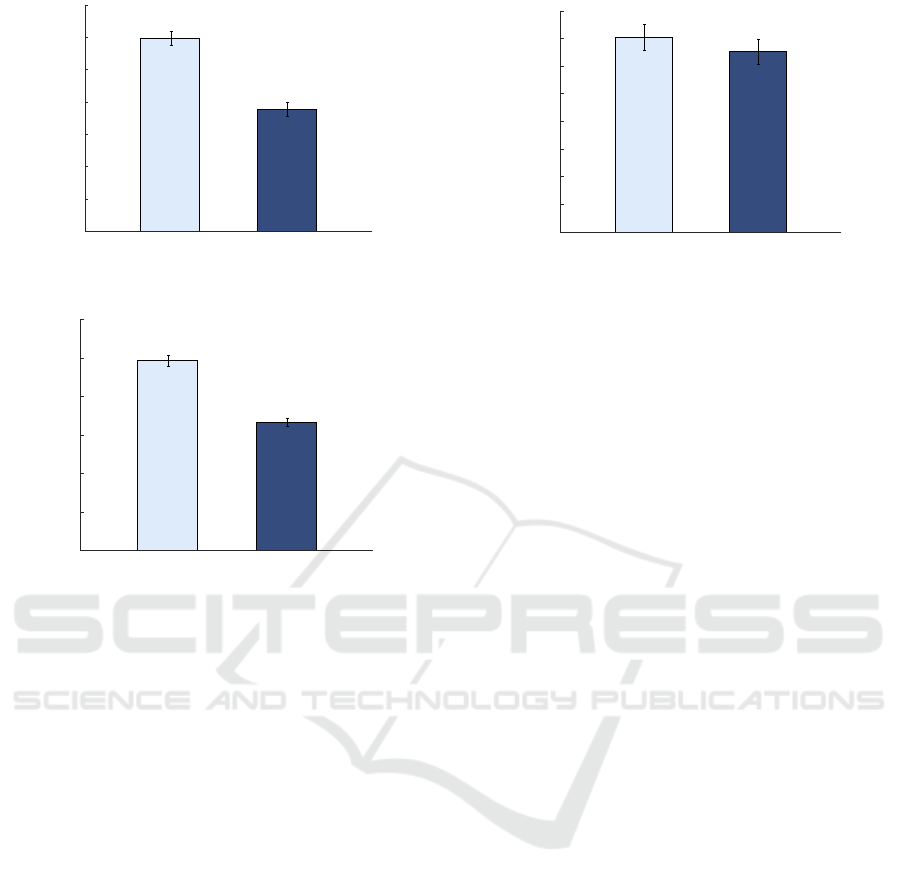

Seated Standing

Turning Error - Degrees

0

5

10

15

20

25

30

35

40

Figure 9: Median turning error for the seated and standing

conditions. Error bars show the standard error of the mean.

4.67, p = 0.04) and for latency (F(1, 22) = 28.77, p =

0.009). Turning errors and latency collapsed across

gamers versus non-games can be seen in Figures 7

and 8, respectively.Video gamers made fewer errors

than non–gamers and responded more quickly. We

did not find any effects as to whether or not someone

has had prior experience exploring an immersive VE.

Figure 9 shows the subjects’ median turning errors by locomotion condition. The corresponding median latency figure was omitted because it is similar to Figure 4. Although the trends are similar to those in Figure 3, the turning errors are lower. The difference between the median and mean data was mainly due to large outliers. We reran all of the statistical analyses outlined above using median turning errors and median latencies and found similar results. The only difference was that the repeated measures ANOVA for latency did not reveal any significant difference between the two conditions (seated and standing).
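The effect of outliers noted above is easy to see on a single participant's trials: one large error inflates the mean but barely moves the median. A minimal sketch, assuming errors are summarized per participant before the group analysis (the paper does not spell out this step); the nine trial errors are hypothetical.

```python
import statistics


def per_participant_summary(trial_errors: dict[str, list[float]],
                            use_median: bool) -> dict[str, float]:
    """Collapse each participant's trial errors to one mean or median value."""
    summarize = statistics.median if use_median else statistics.fmean
    return {pid: summarize(errors) for pid, errors in trial_errors.items()}


if __name__ == "__main__":
    # Hypothetical unsigned errors (degrees) for one participant's 9 trials,
    # including one large outlier such as a response toward the wrong side.
    errors = {"P01": [5.0, 8.0, 12.0, 7.0, 10.0, 6.0, 9.0, 11.0, 175.0]}
    print(per_participant_summary(errors, use_median=False))  # {'P01': 27.0}
    print(per_participant_summary(errors, use_median=True))   # {'P01': 9.0}
```

Here a single 175° response triples the mean while the median stays close to the typical trial.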

4 DISCUSSION

We presented a within-subjects experiment with 24 participants to examine whether seated or standing exploration has any effect on navigation through a VE. Before running this experiment, we hypothesized that subjects would perform better when standing to explore the VE. Standing seemed more natural to us, and eye height changes have been shown to affect distance judgments in VR (Leyrer et al., 2011; Leyrer et al., 2015). In our study, eye height always matched the physical eye height of the participant. The experiment presented here revealed that subjects update their spatial awareness while navigating through a VE with a joystick similarly whether they are seated or standing. It is important to note that in both conditions users were able to freely rotate in the VE using their own


physical rotations. In the seated condition, users sat in a swivel office chair. We believe these results are encouraging and that more techniques should be developed that allow users to fully explore a VE while sitting down.

Our turning errors are sizable, but they are consistent with similar experiments (Wilson et al., 2016). Other works have presented virtual exploration techniques that are not limited in size by the bounds of the tracking system and that outperform the joystick (Kitson et al., 2017; Suma et al., 2012; Wilson et al., 2016; Zielasko et al., 2016). It will be interesting to see whether these techniques can be adapted for seated exploration and whether they also outperform the joystick there. This work lays the groundwork for that future direction. One idea for moving this work forward is to manipulate rotations while seated. Turning around in a swivel chair does require some effort, so it might be possible to scale the rotational gain of turning so that less physical turning is required. Kuhl et al. (2008) showed that people can recalibrate to a rotational gain. That is, people can adapt to amplified visual rotation and calibrate their physical turning accordingly.
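The rotational-gain idea above could be prototyped by amplifying each frame's change in physical yaw before applying it to the virtual camera. This is a hypothetical sketch of future work, not something evaluated in this study; the class name and the gain of 1.5 are illustrative assumptions. With a gain g, a full virtual turn needs 360/g degrees of physical turning (240° for g = 1.5).

```python
def wrap180(angle_deg: float) -> float:
    """Wrap an angle to [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


class AmplifiedYaw:
    """Map tracked physical yaw to virtual yaw with a constant rotational gain.

    The gain is applied to frame-to-frame yaw changes, so wrapping at 0/360 deg is
    handled correctly as long as the head turns less than 180 deg between updates.
    """

    def __init__(self, gain: float, initial_yaw_deg: float) -> None:
        self.gain = gain
        self._last_physical = initial_yaw_deg
        self.virtual_yaw_deg = initial_yaw_deg % 360.0

    def update(self, physical_yaw_deg: float) -> float:
        delta = wrap180(physical_yaw_deg - self._last_physical)
        self._last_physical = physical_yaw_deg
        self.virtual_yaw_deg = (self.virtual_yaw_deg + self.gain * delta) % 360.0
        return self.virtual_yaw_deg


if __name__ == "__main__":
    yaw = AmplifiedYaw(gain=1.5, initial_yaw_deg=0.0)  # hypothetical gain
    for physical in range(10, 250, 10):  # 240 deg of physical turning
        yaw.update(float(physical))
    print(f"virtual yaw after 240 deg physical: {yaw.virtual_yaw_deg:.1f} deg")
```

Applying the gain to increments rather than to the absolute tracker yaw avoids a jump in the virtual view each time the tracked heading wraps past 0°/360°.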

After the experiment, we asked subjects to comment on their experiences. Some subjects felt strongly about sitting down to explore the VE, while others felt strongly about standing up. The preference for one method over the other was almost evenly split, with 11 subjects preferring seated exploration and 13 preferring standing. One subject commented that “standing made me feel like I got lost. I liked the swivel aspect of sitting, it also felt more like a video game.” Another subject said they preferred sitting because “sitting felt like [they were] in a vehicle.” Two subjects specifically commented that the swivel aspect of sitting was positive. Another subject explained that “standing felt much more realistic than sitting, felt like I had better knowledge of the objects around me.” The results indicate no clear winner between the two conditions in terms of latencies, turning errors, and user preference. Thus, we believe seated virtual exploration is a good option that merits further study.

We found large gender differences in this experiment. Mean turning error for females was almost double that of males. Moreover, males were around a second and a half faster than females. There was only one female game player in our dataset, which could explain some of these large differences. Many studies over the last few decades have reported males outperforming females in spatial orientation tasks (Masters and Sanders, 1993; Coluccia and Louse, 2004; Kitson et al., 2016; Nori et al., 2015). Studies suggest that females are more likely to adopt an egocentric strategy, whereas males are more likely to adopt an allocentric strategy when exploring a VE (Kitson et al., 2016; Chen et al., 2009). These gender differences suggest that navigational support interfaces may need to be designed with gender differences in mind.

Finally, we found a between-subjects effect of gamers versus non-gamers. Previous studies have shown that gamers perform spatial tasks better in VR (Murias et al., 2016). These studies suggest that people who play video games regularly have better navigation and topographical orientation skills because they consistently practice these skills while playing for entertainment. As VR games become more and more prevalent, it will be important for researchers to understand the effects of video game exposure on performance in navigation tasks in VEs.

ACKNOWLEDGEMENTS

This material is based upon work supported by the National Science Foundation under grant 1351212. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the sponsors.

REFERENCES

Ainsworth, B., Haskell, W., Whitt, M., Irwin, M., Swartz,

A., et al. (2000). Compendium of physical activities:

an update of activity codes and MET intensities. Med.

and Sci. in Sports and Exer., 32(9):498–504.

Bowman, D., Davis, E., Hodges, L., and Badre, A. (1999).

Maintaining spatial orientation during travel in an

IVE. Pres., 8(6):618–631.

Bowman, D., Kruijff, E., LaViola, J., and Poupyrev, I.

(2004). 3D User Interfaces: Theory and Practice.

Addison-Wesley.

Bozgeyikli, E., Raij, A., Katkoori, S., and Dubey, R. (2016).

Point & teleport locomotion technique for virtual re-

ality. In ACM CHI PLAY, pages 205–216.

Carello, C., Grosofsky, A., Reichel, F. D., Solomon, H. Y.,

and Turvey, M. T. (1989). Visually perceiving what is

reachable. Ecological Psychology, 1(1):27–54.

Chen, C.-H., Chang, W.-C., and Chang, W.-T. (2009). Gen-

der differences in relation to wayfinding strategies,

navigational support design, and wayfinding task dif-

ficulty. J. Envir. Psych., 29(2):220–226.

Coluccia, E. and Louse, G. (2004). Gender differences

in spatial orientation: A review. J. Envir. Psych.,

24(3):329–340.

GRAPP 2018 - International Conference on Computer Graphics Theory and Applications


Darken, R. P. and Peterson, B. (2014). Spatial orientation,

wayfinding, and representation.

Duncan, D., Newman, B., Saslow, A., Wanserski, E., Ard,

T., Essex, R., and Toga, A. (2017). VRAIN: VR as-

sisted intervention for neuroimaging. In IEEE VR,

pages 467–468.

Gibson, J. (1950). The perception of the visual world.

Houghton Mifflin, Boston.

Gogel, W. (1977). Stability and constancy in visual percep-

tion: Mechanisms and processes, chapter The metric

of visual space, pages 129–182. Wiley, New York.

Huang, H., Lin, N.-C., Barrett, L., Springer, D., Wang, H.-

C., Pomplun, M., and Yu, L.-F. (2016). Analyzing

visual attention via VE. In ACM SIGGRAPH ASIA,

pages 8:1–8:2.

Kelly, J., Donaldson, L., Sjolund, L., and Freiberg, J.

(2013). More than just perception–action recalibra-

tion: Walking through a VE causes rescaling of per-

ceived space. Attn., Perc., & Psych., 75:1473–1485.

Kersten, T., Büyüksalih, G., Tschirschwitz, F., Kan, T., Deggim, S., Kaya, Y., and Baskaraca, A. (2017). The Selimiye Mosque of Edirne, Turkey – an immersive and interactive VR experience using HTC Vive. Intern. Ar. of the Photo., Rem. Sens. & Spat. Info. Sci., 42.

Kitson, A., Hashemian, A. M., Stepanova, E. R., Kruijff,

E., and Riecke, B. E. (2017). Lean into it: Explor-

ing leaning-based motion cueing interfaces for virtual

reality movement. In IEEE VR, pages 215–216.

Kitson, A., Sproll, D., and Riecke, B. E. (2016). Influence

of ethnicity, gender and answering mode on a virtual

point-to-origin task. Front. in Behav. Neuro., 10.

Kuhl, S. A., Creem-Regehr, S. H., and Thompson, W. B.

(2008). Recalibration of rotational locomotion in im-

mersive virtual environments. ACM TAP, 5(3):17.

Lathrop, W. B. and Kaiser, M. K. (2002). Perceived orienta-

tion in physical and VEs: Changes in perceived orien-

tation as a function of idiothetic information available.

Pres., 11(1):19–32.

Leyrer, M., Linkenauger, S., Bülthoff, H., Kloos, U., and Mohler, B. (2011). The influence of eye height and avatars on egocentric distance estimates in immersive virtual environments. In ACM APGV, pages 67–74.

Leyrer, M., Linkenauger, S., Bülthoff, H., and Mohler, B. (2015). Eye height manipulations: A possible solution to reduce underestimation of egocentric distances in head-mounted displays. ACM TAP, 12(1):1.

Li, B., Nordman, A., Walker, J., and Kuhl, S. A. (2016). The effects of artificially reduced field of view and peripheral frame stimulation on distance judgments in HMDs. In ACM SAP, pages 53–56.

Loomis, J. M. and Knapp, J. M. (2003). Virtual and Adaptive Environments, chapter Visual perception of egocentric distance in real and virtual environments, pages 21–46. Erlbaum, Mahwah, NJ.

Mark, L. (1987). Eyeheight-scaled information about affordances: A study of sitting and stair climbing. J. Exp. Psych: Hum. Perc. Perf., 13:361–370.

Masters, M. S. and Sanders, B. (1993). Is the gender dif-

ference in mental rotation disappearing? Behavior

Genetics, 23(4):337–341.

Miloff, A., Lindner, P., Hamilton, W., Reuterskiöld, L., Andersson, G., and Carlbring, P. (2016). Single-session gamified VR exposure therapy for spider phobia vs. traditional exposure therapy. Trials, 17(1):60.

Murias, K., Kwok, K., Castillejo, A. G., Liu, I., and Iaria, G.

(2016). The effects of video game use on performance

in a virtual navigation task. Comp. in Hum. Behav.,

58:398–406.

Nori, R., Piccardi, L., Migliori, M., Guidazzoli, A., Frasca,

F., De Luca, D., and Giusberti, F. (2015). The VR

walking Corsi test. Comp. in Hum. Behav., 48:72–77.

Nybakke, A., Ramakrishnan, R., and Interrante, V. (2012).

From virtual to actual mobility: Assessing the benefits

of active locomotion through an immersive VE using

a motorized wheelchair. In IEEE 3DUI, pages 27–30.

Pallavicini, F., Ferrari, A., Zini, A., Garcea, G., Zanacchi,

A., Barone, G., and Mantovani, F. (2017). What dis-

tinguishes a traditional gaming experience from one

in VR? An exploratory study. In Inter. Conf. on App.

Hum. Fact. and Ergo., pages 225–231.

Riecke, B. E., Bodenheimer, B., McNamara, T. P.,

Williams, B., Peng, P., and Feuereissen, D. (2010). Do

we need to walk for effective VR navigation? Physical rotations alone may suffice. In 7th Inter. Conf. on

Spat. Cogn., pages 234–247.

Rock, I. (1975). An introduction to perception. MacMillan.

Ruddle, R. and Lessels, S. (2006). For efficient navigational

search, humans require full physical movement, but

not a rich visual scene. Psych. Sci., 17(6):460–465.

Ruddle, R., Payne, S., and Jones, D. (1999). Navi-

gating large-scale VEs: What differences occur be-

tween helmet-mounted and desk-top displays? Pres.,

8(2):157–168.

Suma, E., Babu, S., and Hodges, L. (2007). Comparison of travel techniques in a complex, multi-level 3D environment. In IEEE 3DUI, pages 149–155.

Suma, E., Bruder, G., Steinicke, F., Krum, D., and Bolas, M.

(2012). A taxonomy for deploying redirection tech-

niques in immersive VE. In IEEE VR, pages 43–46.

Waller, D. and Greenauer, N. (2007). The role of body-

based sensory information in the acquisition of endur-

ing spatial representations. Psych. Res., 71:322–332.

Waller, D. and Hodgson, E. (2013). Sensory contributions

to spatial knowledge of real and virtual environments.

In Human Walking in VEs, pages 3–26. Springer.

Waller, D., Hunt, E., and Knapp, D. (1998). The transfer

of spatial knowledge in VE training. Pres., 7(2):129–

143.

Waller, D., Loomis, J. M., and Steck, S. D. (2003). Inertial

cues do not enhance knowledge of environmental layout. Psychonomic Bulletin & Review, 10(4):987–993.

Warren, W. H. (1984). Perceiving affordances: Visual guid-

ance of stair climbing. J. Exp. Psych: Hum. Perc.

Perf., 10:683–703.

Willemsen, P. and Gooch, A. A. (2002). Perceived ego-

centric distances in real, image-based and traditional

virtual environments. In IEEE VR, pages 79–86.

Williams, B., Narasimham, G., McNamara, T. P., Carr, T. H., Rieser, J. J., and Bodenheimer, B. (2006). Updating orientation in large VEs using scaled translational gain. In ACM APGV, pages 21–28.

Williams, B., Narasimham, G., Westerman, Rieser, J., and

Bodenheimer, B. (2007). Funct. similarities in spatial

representations between real and VEs. TAP, 4(2).

Wilson, P., Kalescky, W., MacLaughlin, A., and Williams,

B. (2016). VR locomotion: walking > walking in

place > arm swinging. In ACM VRCAI, pages 243–

249.

Wraga, M. (1999). Using eye height in different postures

to scale the heights of objects. J. Exp. Psych: Hum.

Perc. Perf., 25:518–530.

Zielasko, D., Horn, S., Freitag, S., Weyers, B., and Kuhlen,

T. W. (2016). Evaluation of hands-free HMD-based

navigation techniques for immersive data analysis. In

IEEE 3DUI, pages 113–119.
