Studying Natural Human-computer Interaction in Immersive Virtual Reality: A Comparison between Actions in the Peripersonal and in the Near-action Space

Chiara Bassano, Fabio Solari and Manuela Chessa

Dept. of Informatics, Bioengineering, Robotics, and Systems Engineering, University of Genoa, Genoa, Italy

Keywords:

Virtual Reality, Natural Human-computer Interaction, Peripersonal Space Interaction, Near-action Space Interaction, Virtual Grasping, Head Mounted Displays, Oculus Rift, Leap Motion.

Abstract:

Interacting in immersive virtual reality is a challenging and open issue in human-computer interaction. Here, we describe a system to evaluate the performance of a low-cost setup that does not require wearable devices to manipulate virtual objects. In particular, we consider the Leap Motion device and assess its performance in two situations: reaching and grasping in the peripersonal space, and in the near-action space, i.e. when a user stands and can move his or her arms to reach objects on a desk. We show that these two situations are similar in terms of user performance, which indicates that such a device could be used in a wide range of reaching tasks in immersive virtual reality.

1 INTRODUCTION

In the last decade, virtual reality (VR) has enjoyed widespread success, thanks to the release of several head-mounted displays (HMDs), such as the Oculus Rift or the GearVR (by OculusVR), the HTC Vive, the Project Morpheus (by Sony) or the Google Cardboard. These new technologies can be considered low cost if compared to the ones previously available, for example expensive motion capture systems or room-filling technologies, such as the CAVE. In the past, because of the high cost of the hardware required, VR was mainly used for military applications, cinema and multimedia production. Nowadays, instead, it has become very popular in the entertainment world and has caught the attention of researchers who are studying its application in many other fields: serious games and edutainment, cognitive and physical rehabilitation, support for surgeons in diagnosis, operation planning and minimally invasive surgery, modeling and product design, assembly and prototyping processes, and cultural heritage applications.

The work presented in this paper is a preliminary study, mainly focused on analyzing interaction modalities within immersive virtual reality. In many cases, controllers or joysticks are used to interact with virtual environments. Although this solution is effective, the actions performed do not resemble their real counterparts: people do not push buttons to grab objects in their daily life. Non-wearable devices could therefore be an alternative solution. In particular, the Leap Motion, a hand-tracking device that allows users to interact with virtual objects without wearing specific tools and to see a virtual representation of their hands, seems promising. A recent study (Sportillo et al., 2017) compared a controller-based interface with a realistic interface, composed of a steering wheel and the Leap Motion, in a driving simulation application. Participants' reaction time was lower in the first case, but better control over the vehicle and higher stability were measured in the second case. Moreover, Khundam (2015) and Zhang et al. (2017) implemented walk-through algorithms for navigating the virtual environment based on hand-gesture detection. Performance was task-dependent, but participants preferred the Leap Motion to traditional gamepads and joysticks, stating that it is more intuitive, easier to learn and use, causes less fatigue and motion sickness, and induces more immersion. Despite its tracking issues, researchers are very interested in applying the Leap Motion for different purposes: simulation of experiments (Wozniak et al., 2016) or oral and maxillofacial surgery (Pulijala et al., 2017); gaming, from puzzles (Cheng et al., 2015) to first-person shooters (Chastine et al., 2013); model crafting (Park et al., 2017); and visualization of complex datasets (Reddivari et al., 2017; Cordeil et al., 2017). In this paper, we aim to assess the stability, efficiency and naturalness of a Leap Motion-based interface during the interaction with virtual objects in the peripersonal and near-action space. We define the peripersonal space as the area reachable by a user sitting in front of the game area, and the near-action space as the area in which the user can reach objects while standing in front of the workspace and performing a few body movements (not walking).

Bassano, C., Solari, F. and Chessa, M.

Studying Natural Human-computer Interaction in Immersive Virtual Reality: A Comparison between Actions in the Peripersonal and in the Near-action Space.

DOI: 10.5220/0006622701080115

In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2018) - Volume 2: HUCAPP, pages 108-115

ISBN: 978-989-758-288-2

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORKS

The success of virtual reality and HMDs is linked to the fact that these low-cost technologies are still able to provide a truly immersive experience (Chessa et al., 2016) and an intuitive human-computer interface.

Headsets like the Oculus Rift and the HTC Vive also provide simple tracking systems, able to localize and track the HMD in a limited area. Moreover, these commercial systems offer better performance, higher resolution and lower weight than expensive specialized hardware (Young et al., 2014). On the other hand, they tend to cause simulator sickness. It is also important to take into account that, while the HTC Vive is conceived as a room-scale device, the Oculus Rift is mainly suggested for seated or standing scenarios (Cuervo, 2017). A recent study compared the two HMDs in a pick-and-place task and pointed out that the HTC Vive tracking system is more reliable and stable than the Oculus one, while there is no difference in terms of precision (Sužnjević et al., 2017).

In order to guarantee a natural interaction, it is also fundamental to consider the reliability and precision of the Leap Motion device. Its stated accuracy is 1/100th of a millimeter in a range from 25 to 600 mm above the device. However, it has been demonstrated that in static scenarios (hands not moving) the measurement error is between 0.2 mm and 0.5 mm, depending on the plane and on the hand-device distance, while in dynamic situations (moving hands), independently of the plane, an average accuracy of 1.2 mm could be obtained (Weichert et al., 2013). To our knowledge, no study on the Leap Motion accuracy has yet been conducted with the device mounted on an HMD. For our purpose, however, we can consider the hand tracker reliable enough.

Finally, an issue faced by everyone trying to recreate a natural hand-based interaction with virtual environments is hand-object interpenetration, due to the absence of real physical constraints between the virtual hand and the virtual object. Different grasping approaches have been investigated: using a 3D model which simply follows the real hand and can interpenetrate objects; a see-through method, similar to the previous one, but showing the actual position of the fingers inside the objects; using a hand model constrained to avoid interpenetration; using two hands, a visual one not interpenetrating objects and a ghost hand directly following the real one; using different kinds of feedback during collision, such as visual (object or fingers changing color), haptic (pressure, vibration) or auditory feedback.

Many studies have pointed out that, even if people prefer interpenetration to be prevented, this method does not actually improve performance, while any kind of feedback seems to be a promising solution (Prachyabrued and Borst, 2012; Prachyabrued and Borst, 2014; Just et al., 2016).

3 MATERIALS AND METHODS

3.1 Hardware Components

The experimental setup is composed of a head-mounted display, the Oculus Rift DK2¹, with its own positional tracking camera, and a computer able to support VR. For the interaction with virtual objects, we used the Leap Motion² mounted on the HMD.

The Oculus Rift DK2 has a 2160 × 1200 low-persistence OLED display with a 90-Hz refresh rate and a vertical and horizontal field of view (FOV) of at most 100 degrees (Figure 1b). This headset contains a gyroscope, an accelerometer and a magnetometer, which make it possible to compute the user's head rotation in three-dimensional space and consequently to synchronize his or her perspective in the virtual environment in real time.

Moreover, the Oculus Rift provides a 6-degree-of-freedom position tracking system: the Oculus Sensor device is an infrared camera able to detect and track the array of infrared micro-LEDs on the HMD surface. In this way, it is possible to obtain a precise estimate of the position of the user's head in 3D space and to track it. The tracking area, however, is defined by the limited field of view of the camera (Figure 2).

Another important element of our setup is the Leap Motion. It is composed of two cameras and three infrared LEDs that make it possible to detect hands inside a hemispheric area of approximately 1 meter above the device. Originally conceived for desktop applications, the Leap Motion has recently been adapted for VR applications: a special mount that places the device at the centre of the front of the HMD (Figure 1a) is supplied, and an integration software for the development of VR content, the Orion Beta, can be freely downloaded from the web site. In our case, we used version 3.2.0.

¹ https://www.oculus.com/

² https://www.leapmotion.com/

Figure 1: (a) Leap Motion device mounted on the Oculus Rift DK2; (b) FOV of the Oculus Rift and of the Leap Motion device.

Figure 2: Field of view of the positional tracking camera of the Oculus Rift.

Finally, the computer we used meets the minimum requirements for both the Oculus Rift and the Leap Motion. It is an Alienware Aurora R5 with a 4 GHz Intel Core i7-6700K processor, 16 GB of DDR4 RAM and an Nvidia GeForce GTX 1080 graphics card (8 GB GDDR5X RAM).

3.2 Software Components

The VR environment was created using Unity 3D version 5.6.1f1. Unity 3D is one of the most widely used game engines for the creation of games and of 3D and 2D content. In order to integrate the Oculus Rift and the Leap Motion with Unity, we used the dedicated integration packages, respectively the Oculus Utilities and the Leap Motion Unity Asset Core and Modules, available on the official sites of the two devices.

3.3 Subjects

Twenty-one people, 12 males and 9 females, aged between 24 and 52 (mean 30.8 ± 6.7 years), took part in the experimental session. All the participants were volunteers and received no reward. The majority of them had already tried the Leap Motion and VR devices and applications in the past, but only 4 of them had already tried the Oculus Rift.

3.4 Interaction Task

As our main purpose is to investigate the operability limits deriving from the combination of the Leap Motion and the Oculus Rift when interacting with virtual objects, the task is very simple, so that performance depends mainly on the interaction rather than on the difficulty of the task itself.

Participants wear the Oculus Rift and act in a virtual room. Right in front of them there is a virtual desk, corresponding to the real desk in position and dimensions, while behind them a virtual bookcase, corresponding to the real one, delimits the game area (Figure 3). The position of these elements is defined in the reference system of the tracking camera, which is considered the origin of our virtual environment. The camera, in fact, is the only fixed point in our setup that exists in both the real and the virtual world, and the position and rotation of the headset in 3D space are expressed in the reference system of the camera. When the Oculus Rift is outside the boundaries of the camera FOV, lags occur in the rendering of the scene. When this happens, red lines representing the camera tracking cone are displayed, so that the player can understand the reason for the problem and come back to the tracked area.
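The out-of-bounds warning described above amounts to testing whether the headset position, expressed in the camera reference system, lies inside the camera's viewing volume. The following is a minimal sketch of such a test, not the authors' implementation: it assumes a camera at the origin looking along the +z axis, and the function name, the FOV angles and the near and far distances are all hypothetical placeholders.

```python
import math

# Hypothetical placeholder values; the paper does not report the camera's
# tracking angles or range.
H_FOV_DEG = 70.0   # horizontal field of view of the tracking camera
V_FOV_DEG = 50.0   # vertical field of view of the tracking camera
NEAR_M = 0.4       # closest tracked distance along the optical axis
FAR_M = 2.5        # farthest tracked distance along the optical axis


def inside_tracking_volume(position, h_fov_deg=H_FOV_DEG, v_fov_deg=V_FOV_DEG,
                           near=NEAR_M, far=FAR_M):
    """Return True if `position` (x, y, z), in the camera frame, is tracked.

    The camera sits at the origin and looks along +z, so the lateral limits
    grow linearly with the distance z from the camera.
    """
    x, y, z = position
    if not (near <= z <= far):
        return False
    max_x = z * math.tan(math.radians(h_fov_deg) / 2.0)
    max_y = z * math.tan(math.radians(v_fov_deg) / 2.0)
    return abs(x) <= max_x and abs(y) <= max_y


def should_show_boundary_lines(headset_position):
    """Show the red cone lines only when the headset leaves the tracked volume."""
    return not inside_tracking_volume(headset_position)
```

With a check of this kind evaluated every frame, the boundary lines would appear as soon as the headset drifts sideways or steps too far back, which matches the behavior described in the text.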

On the desk there are 12 objects, different in color and shape, and players are asked to grab them, one at a time and using a single hand, and place them in the corresponding hole in the black support behind.

HUCAPP 2018 - International Conference on Human Computer Interaction Theory and Applications


A desktop application, showing the scene from a fixed point of view, allows an external operator, the supervisor, to see what the player is doing and to help him in three ways: deleting some objects in order to make the scene clearer; repositioning objects accidentally thrown out of the game area; selecting a hole. The operator can also reset the scene and start and stop the timer for the acquisitions.

Figure 3: Example of the two different setups, with the main user immersed in virtual reality and an external operator supervising him: (a) peripersonal space setup; (b) near-action space setup.

In a pilot study involving a task of grabbing and dragging objects in the near-action space, participants complained about the instability of the hand tracking, which caused inadequate performance. We therefore decided to write our own grasp function instead of using the one provided in the Leap Motion Interaction Module. Moreover, we created two slightly different versions of the game, one with the interactable virtual objects in the peripersonal space and one with the objects in the near-action space. In the first case, the game area corresponds approximately to the real desk, 110 cm long and 60 cm wide, and the volunteer sits on a chair; in the second case, the game area occupies part of the real desk and the space between the desk and the bookcase, for a total surface 156 cm long and 140 cm wide, and the participant has to stand (Figure 3).

The two setups were created in order to understand whether the low performance previously obtained in the pilot study was due to the algorithm used to interact with virtual objects or to Leap Motion tracking issues in near-action space interaction. Concerning the grasp function, every time a hand collides with an object, the object turns grey (Figure 4b) and the distance between the palm and the center of the object is calculated. We then check the hand's Grab Angle, which is a precalculated value: if it is greater than an experimentally defined threshold, the object becomes purple (Figure 4c) and its new position is calculated so that the distance between hand and object remains constant; if the Grab Angle is smaller than the threshold, no changes are applied to the object. The most reliable way to grasp an object is to approach it with the hand open and close the hand until the object changes color. To release the object, the user has to open his hand and move it away from the item.

Figure 4: Grasp function: (a) the user looks at the scene; (b) the user reaches an object; (c) the user grabs an object.

In order to ensure greater stability, we also decided not to implement realistic physics. For example, when an object is not colliding with the hand, its position and rotation are locked. On the one hand, this results in objects floating in the air; on the other hand, it is the simplest way to overcome one of the most common mistakes users make while using the Leap Motion: grabbing an object and looking around for the right hole. In fact, the rotation of the head causes the hand to leave the Leap Motion FOV (Figure 1b), which can potentially lead to an unstable behavior of the grabbed object.
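The grasp rule and the locking behavior described above can be summarized as a small per-frame update. The sketch below is an illustration in Python under stated assumptions, not the authors' Unity code: the class and function names, the resting color and the threshold value are hypothetical (the paper only says the threshold was defined experimentally), and the collision flag and grab angle are passed in rather than read from a real device.

```python
from dataclasses import dataclass

# Placeholder threshold in radians; the paper does not report the actual value.
GRAB_ANGLE_THRESHOLD = 2.0


@dataclass
class VirtualObject:
    position: tuple                  # (x, y, z) of the object center
    base_color: str = "white"        # hypothetical resting color
    color: str = "white"
    grabbed: bool = False
    offset: tuple = (0.0, 0.0, 0.0)  # object center minus palm position


def update(obj, palm, grab_angle, colliding):
    """Advance the grasp rule by one frame for one object and one hand."""
    if not colliding:
        # No hand contact: the pose stays locked, so the object does not fall.
        obj.grabbed = False
        obj.color = obj.base_color
        return obj

    if not obj.grabbed:
        # Hand in contact but not yet grabbing: store the palm-to-center offset.
        obj.offset = tuple(c - p for c, p in zip(obj.position, palm))

    if grab_angle > GRAB_ANGLE_THRESHOLD:
        # Closed hand: the object follows the palm at a constant offset.
        obj.grabbed = True
        obj.color = "purple"
        obj.position = tuple(p + o for p, o in zip(palm, obj.offset))
    else:
        # Open hand in contact: highlight only, position unchanged.
        obj.grabbed = False
        obj.color = "grey"
    return obj
```

Storing the offset only while the object is not yet grabbed is one way to keep the hand-object distance constant afterwards; recomputing it on every frame would leave the object in place instead of moving it with the palm.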


3.5 Experimental Parameters

The evaluation of performance is divided into three parts: (i) objective parameters recorded during the game execution; (ii) the Simulator Sickness Questionnaire; (iii) the user's subjective opinion.

3.5.1 Objective Parameters

During the task execution, for each participant, we measured the total time required to complete the task and the average time required to position each single object. As the task is very simple, we expect these values to reflect only the stability and efficiency of the human-computer interaction modality used. Moreover, we counted the number of errors, in terms of repositioned or deleted pieces, selection actions and reset actions. If the system is stable, participants will not need any external aid.
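As an illustration of how these objective parameters could be derived from a trial log, the sketch below assumes a hypothetical log made of a start timestamp, the timestamps at which each object was correctly placed, and a list of supervisor actions. The function name, the log layout and the action labels are assumptions made for the example, not the format used in the study.

```python
from collections import Counter

# Supervisor actions named in the text; the string labels are hypothetical.
HELP_KINDS = ("reposition", "delete", "select", "reset")


def summarize_trial(start_time, placement_times, help_events):
    """Compute completion time, per-object times and help counts for one trial.

    start_time      -- timestamp (s) at which the supervisor started the timer
    placement_times -- increasing timestamps (s), one per correctly placed object
    help_events     -- list of labels from HELP_KINDS, one per supervisor action
    """
    if not placement_times:
        raise ValueError("at least one placement is required")
    total_time = placement_times[-1] - start_time
    previous = [start_time] + list(placement_times[:-1])
    partial_times = [t - p for t, p in zip(placement_times, previous)]
    counts = Counter(help_events)
    return {
        "total_time": total_time,
        "partial_times": partial_times,
        "mean_time_per_object": sum(partial_times) / len(partial_times),
        "help_counts": {kind: counts.get(kind, 0) for kind in HELP_KINDS},
    }
```

Keeping the per-object (partial) times alongside the total makes it possible to see whether a long trial is due to one difficult object or to uniformly slow interaction.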

3.5.2 Simulator Sickness Questionnaire

The Simulator Sickness Questionnaire (SSQ) (Kennedy et al., 1993) is the standard test commonly used when working with virtual reality. It makes it possible to analyze the participants' status before and after each exposure to VR in order to estimate whether the experience inside a virtual environment had any physical effects. It considers 16 different aspects: general discomfort, fatigue, headache, eye strain, blurred vision, dizziness with eyes open or closed, difficulty focusing or concentrating, fullness of head, vertigo, sweating, salivation increase, nausea, stomach awareness, burping. These symptoms indicate a state of malaise due to poor rendering quality of the virtual environment, to a too slow integration of signals coming from different sensors, or to bad tracking leading to a delay between the visual information coming from the HMD and the information coming from other sensory channels (vestibular, proprioceptive). The user has to rate these items on a scale ranging from 0 to 4, where 0 is none and 4 is severe.
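To make the structure of the questionnaire concrete, the sketch below stores one rating per item, checks that it lies in the 0 to 4 range used here, and compares two administrations item by item. It is only an assumption-laden illustration: the item labels paraphrase the list above, the function names are hypothetical, and the unweighted sum is a simplification rather than the weighted scoring of Kennedy et al. (1993) or the analysis used in the study.

```python
# The 16 items listed in the text (labels are paraphrased for use as keys).
SSQ_ITEMS = (
    "general discomfort", "fatigue", "headache", "eye strain", "blurred vision",
    "dizziness (eyes open)", "dizziness (eyes closed)", "difficulty focusing",
    "difficulty concentrating", "fullness of head", "vertigo", "sweating",
    "salivation increase", "nausea", "stomach awareness", "burping",
)

MIN_RATING, MAX_RATING = 0, 4  # 0 is none, 4 is severe


def validate(ratings):
    """Raise ValueError unless `ratings` has exactly one valid rating per item."""
    if set(ratings) != set(SSQ_ITEMS):
        raise ValueError("ratings must contain exactly the 16 SSQ items")
    for item, value in ratings.items():
        if not (MIN_RATING <= value <= MAX_RATING):
            raise ValueError(f"rating for {item!r} is out of range: {value}")


def raw_score(ratings):
    """Unweighted sum of the 16 ratings (a simplification, see the lead-in)."""
    validate(ratings)
    return sum(ratings.values())


def change(pre, post):
    """Per-item difference between two administrations (post minus pre)."""
    validate(pre)
    validate(post)
    return {item: post[item] - pre[item] for item in SSQ_ITEMS}
```

Comparing administrations item by item, rather than only through a total, shows which symptoms changed after an exposure even when the overall score stays flat.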

3.5.3 Subjective Opinion

In order to evaluate the user's experience, we wrote a custom questionnaire, taking into account different aspects related to the interaction of the user in VR. First of all, it is important to understand the user's degree of confidence in moving in a virtual environment while unable to see the real obstacles surrounding him or her (the real desk and bookcase). Then, we are interested in investigating to what extent the movements performed are perceived as natural and intuitive or, conversely, to what extent the user has to adapt to the system in order to interact with virtual objects. Also, the obligation to use only one hand could be perceived as a constraint, even if in general people unconsciously use only their dominant hand to perform a task; for this reason, we asked for the users' feedback on this point. Moreover, we want to understand whether our grasp function is efficient and stable enough for people to complete the task easily. Finally, it is important to know whether the visual feedback provides helpful information.

We asked participants to evaluate their experience in the two different setups and to state a preference. The questionnaire, shown below, is composed of one open question and eight 5-point Likert scale questions:

Q1) Did you prefer the peripersonal space or the near-action space trial? Why?

Q2) Did you feel safe to freely move your hands in the peripersonal space trial?

Q3) Did you feel safe to freely move your hands in the near-action space trial?

Q4) Was the interaction natural in the peripersonal space trial?

Q5) Was the interaction natural in the near-action space trial?

Q6) Level of frustration in the peripersonal space trial

Q7) Level of frustration in the near-action space trial

Q8) In the task, you could use just one hand; did this limit you?

Q9) Did the change in color of reached/grabbed objects help you?

3.6 Experimental Procedure

The experiment was composed of two trials: the peripersonal space setup and the near-action space setup. The order of execution was arbitrarily chosen by the experimenter: to reduce statistical variability, half of the participants carried out the first task in the peripersonal space setup and half in the near-action space setup. Before entering the main scene and starting the experiment, each participant could explore and act in a demo scene, in order to become familiar with the virtual scene and the interaction modality and to find the boundaries of the game area.

First, participants were asked to fill in the SSQ, in order to obtain an evaluation of their physical status before the exposure to VR.

Then, they had to perform the task in one of the two modalities. When all 12 objects were correctly positioned, the trial ended.

After this, the subjects had to fill in a second SSQ, which was used to evaluate their status both after the first exposure and before the second exposure to VR. The second trial was executed in the complementary modality to the one used during the previous task. The position of the objects differed between the two VR scenes, to avoid learning effects.

Finally, the users had to fill in two last questionnaires: another SSQ and the subjective questionnaire.

4 RESULTS

4.1 Objective Parameters

The objective parameters defined to evaluate performance were analyzed on four levels: first, we made a general comparison between the results obtained in the first and second trial, without taking the setup into account; then, we compared the performance in the two different setups; after this, we made a "crossed" comparison considering the order of execution and the setup; finally, we considered the personal preference and the setup.

In each analysis, the statistical significance of the differences between datasets was assessed with a t-test.

Dividing the data according to the order of execution and comparing the mean total completion times (Table 1), a consistent improvement in performance between the first trial (mean 89.7 ± 26.4 s) and the second trial (mean 73.3 ± 16.8 s) emerges. This is the only statistically significant result and can be explained by the learning curve of the participants. These results are confirmed by the analysis of the completion time in the crossed comparison between the order of execution and the setup (Table 3).

Slightly better performance in terms of total completion time was found in the near-action space setup with respect to the peripersonal space setup, but this result is not statistically significant (Table 2).
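The paper does not state which variant of the t-test was applied, and the raw completion times are not reported. As a rough check that relies only on the summary values given in the text and in Tables 1 and 2, the sketch below computes an unpaired Welch t statistic and its approximate degrees of freedom, assuming 21 measurements per group. Since each participant performed both trials, a paired test on the raw data would be the more natural choice and would generally give a different value, so this is an approximation and not a reproduction of the authors' analysis; the function names are hypothetical.

```python
import math


def welch_t(mean_a, std_a, n_a, mean_b, std_b, n_b):
    """Welch's t statistic for two groups described by mean, std and size."""
    standard_error = math.sqrt(std_a ** 2 / n_a + std_b ** 2 / n_b)
    return (mean_a - mean_b) / standard_error


def welch_df(std_a, n_a, std_b, n_b):
    """Welch-Satterthwaite approximation of the degrees of freedom."""
    var_a = std_a ** 2 / n_a
    var_b = std_b ** 2 / n_b
    return (var_a + var_b) ** 2 / (var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1))


N = 21  # number of participants, each contributing one time per condition

# First vs second trial (values from Table 1).
t_order = welch_t(89.7, 26.4, N, 73.3, 16.8, N)
df_order = welch_df(26.4, N, 16.8, N)

# Peripersonal vs near-action setup (values from Table 2).
t_setup = welch_t(82.0, 25.7, N, 81.1, 21.4, N)

print(f"order of execution: t = {t_order:.2f}, df = {df_order:.1f}")
print(f"setup:              t = {t_setup:.2f}")
```

Under these assumptions the statistic for the order of execution is about 2.4, while the one for the setup comparison is close to 0.12, which is in line with the text reporting a significant difference only between the first and the second trial.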

Table 1: Mean and standard deviation of total completion time over all participants in the first and second trial (* means a statistically significant difference, p < 0.02).

| First vs Second trial | First | Second |
|---|---|---|
| Mean [s] | 89.7* | 73.3* |
| Std [s] | 26.4* | 16.8* |

Table 2: Mean and standard deviation of total completion time over all participants in the peripersonal space and near-action space setup.

| Peripersonal vs Near-Action Space | Peripersonal | Near-Action |
|---|---|---|
| Mean [s] | 82 | 81.1 |
| Std [s] | 25.7 | 21.4 |

Table 3: Mean and standard deviation of total completion time over all participants considering the order of execution and the setup (P = Peripersonal, NA = Near-Action).

| Order and Setup Crossed Comparison | 1st P | 2nd P | 1st NA | 2nd NA |
|---|---|---|---|---|
| Mean [s] | 94.8 | 67.9 | 84.1 | 78.3 |
| Std [s] | 27.5 | 14 | 25.5 | 17.8 |

There is no correlation between preference and performance: even though those who preferred the near-action space setup show better results in the near-action trial, this is not true for those who preferred the peripersonal space setup (Table 4). In any case, the high variability suggests that more acquisitions are required. Data on partial times confirm the previous results. In all the experiments, the only kind of help that the supervisor needed to provide was the repositioning of objects, and the mean of this parameter was smaller than 0.5 in all four analyses. Even if this result is not statistically significant, it suggests that our system is stable and reliable. The absence of statistically significant differences between the sets of data may indicate that the two setups can be considered equivalent.

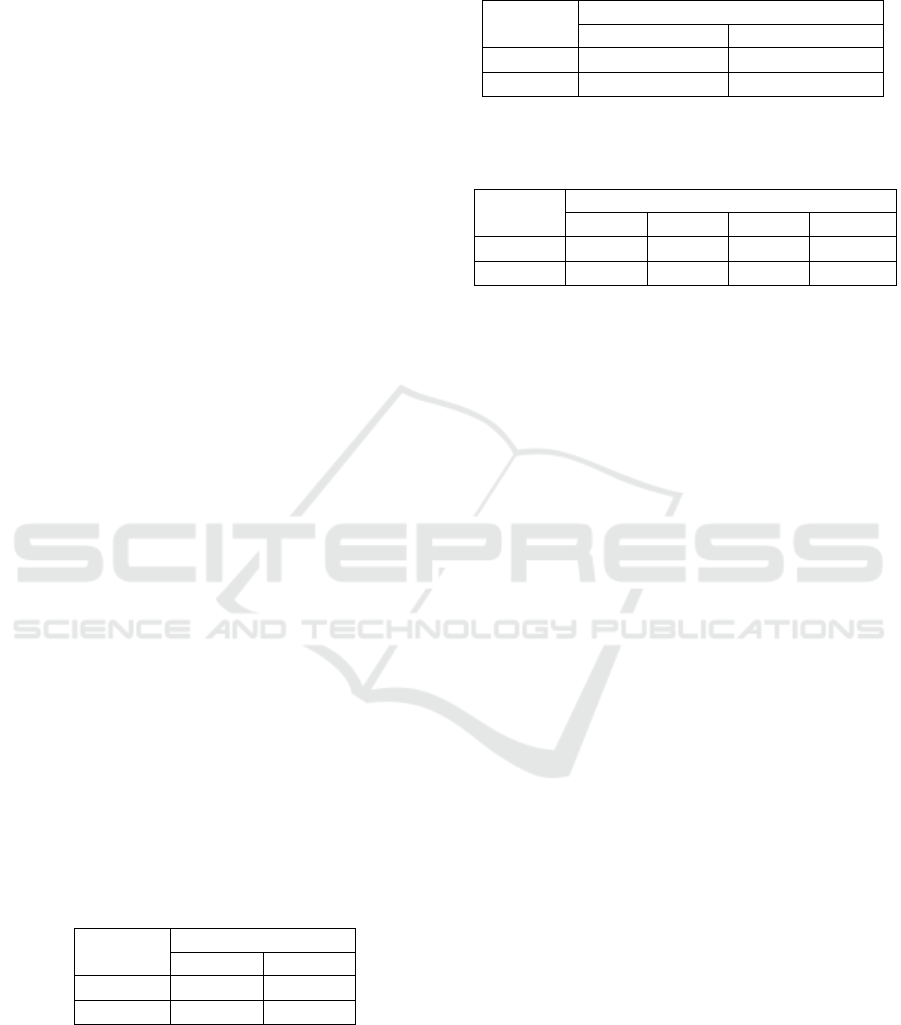

4.2 Simulator Sickness Questionnaire

The SSQ analysis highlights no change in the physical status of participants, as there are no significant differences between the data acquired before and after each task execution (see Fig. 5). Three people, however, reported a strange behavior of the system: in the peripersonal space setup, when rotating their head while looking at the table, they perceived an oscillatory movement accompanied by a sort of zooming effect. This did not happen when they were staring at their hands while rotating the head. This phenomenon, however, neither interfered with their performance nor caused sickness.

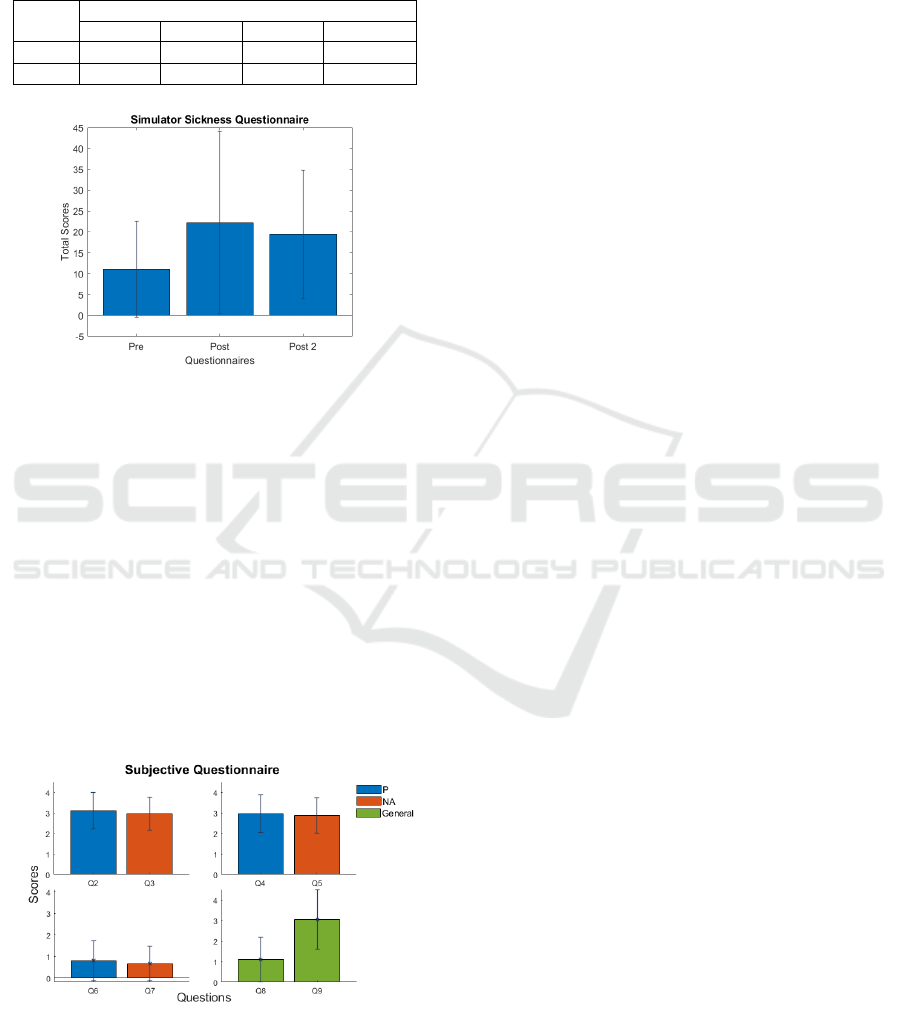

4.3 Subjective Opinion

The subjective questionnaire allows us to know par-

ticipants’ opinions about their experience in VR and

their interaction with virtual objects (see Fig. 6).

Table 4: Mean and standard deviation of total completion time (s) over all participants considering the preference and the setup (P-P = preference peripersonal - peripersonal setup, P-NA = preference peripersonal - near-action setup, NA-P = preference near-action - peripersonal setup, NA-NA = preference near-action - near-action setup).

| Preference and Setup Crossed Comparison | P-P | P-NA | NA-P | NA-NA |
|---|---|---|---|---|
| Mean | 97.6 | 93.5 | 73.9 | 77.8 |
| Std | 36.1 | 24.6 | 16.1 | 20.6 |

Figure 5: Mean and standard deviation of the Simulator Sickness Questionnaires submitted before the first trial (Pre), between the first and the second trial (Post) and after the second trial (Post 2).

Figure 6: Mean and standard deviation of the answers given to the eight 5-point questions in the subjective opinion questionnaire. P = peripersonal space setup, NA = near-action space setup, General = questions not referred to a specific setup.

Four of the volunteers had no preference and were satisfied with both setups. Eleven stated that they preferred the near-action space setup, for different reasons: some said that it was more comfortable, as it allowed more freedom of movement and more interaction with the scene and the virtual objects, and that the task was consequently more fun and more engaging; others reported that it was more realistic regarding distances. Six participants judged the peripersonal space setup the best one, as they felt safer, more at ease and free to move, and complained that in the other setup they felt constrained by the wires and the limited game area. Because of the FOV of the Oculus camera, in fact, the game area is small. Moreover, at the boundaries, the Oculus tracking system frequently lost the signal from the HMD; this caused a lag in the rendering of the scene, which was perceived as annoying and confusing. The same problem occurred when users rotated their head too much or looked backward, as there are no infrared LEDs on the back of the headset. All these factors influenced the ratings given to questions Q2, Q3, Q4 and Q5, which can be considered fair to good. On the one hand, in the peripersonal space setup, due to the characteristics of the setup itself, people felt their movements were limited, but the tracking was stable; on the other hand, in the near-action space setup, they felt freer to move in a larger space but were limited by the tracking system. As for the naturalness of the interaction, the absence of physics was perceived as weird and contributed to lower ratings.

The restriction on using both hands together to grab objects had a minimal influence (Q8), also because people in general tend to instinctively use just their dominant hand to move items, even in the real world. Regarding the interaction with virtual objects and the ability to complete the task (Q6, Q7), participants gave very good ratings, which suggests that our grasp function was well implemented. Finally, the visual feedback (Q9) was appreciated, especially by those who had stability problems with the Leap Motion tracking, probably caused by too fast movements or by interference between the sensors of the different devices.

5 CONCLUSIONS

Our work aimed to define a room-scale setup to be used when working with the Oculus Rift and the Leap Motion mounted on it. For this purpose, we have to take into account the limited field of view of the tracking camera, which makes the game area smaller than the one guaranteed by other tracking systems commonly used for VR. We were nevertheless interested in finding the actual limits of the two devices, in assessing to what extent people feel constrained by them, and in understanding whether it is possible to create an engaging exergame even in a restricted space. From the feedback received, we can state that people in general prefer having more freedom of movement, and the mere fact of standing satisfied them. The experience is even more pleasant and engaging when the player is far from the boundaries of the game area, where tracking is less stable: participants frequently had to find alternative strategies in order to reach certain objects while remaining within the limits of the camera FOV, for example stretching their left arm (for right-handed people). These situations, in which the user is forced to adapt, reduce the naturalness of the interaction and the sense of presence. Moreover, we wanted to evaluate whether our grasp function could allow a natural and efficient interaction with virtual objects. Many participants also reported that the possibility of seeing a virtual representation of their hands and using them to interact with the surrounding environment made them feel more confident, as it enhanced their ability to estimate distances. Further investigations on this topic are nevertheless required. One of the main aspects that people pointed out was the absence of physics rules, so it would be interesting to investigate how to add gravity or collisions between objects without losing stability. Finally, we would like to refine the parameter controlling the grasp action and adapt it to the shape of different objects. This could also potentially solve the object-hand interpenetration problem while still maintaining good performance.
