Towards Risk-aware Access Control Framework for Healthcare Information Sharing

Mohamed Abomhara, Geir M. Køien, Vladimir A. Oleshchuk and Mohamed Hamid

Department of Information and Communication Technology, University of Agder, Grimstad, Norway

Keywords:

Access Control, Healthcare Information Sharing, Electronic Health Records, Security, Privacy, Risk Assessment, Risk Management.

Abstract:

Access control models play an important role in the response to insider threats such as misuse and unauthorized disclosure of electronic health records (EHRs). In our previous work in the area of access control, we proposed a work-based access control (WBAC) model that strikes a balance between collaboration and safeguarding sensitive patient information. In this study, we propose a framework for risk assessment that extends the WBAC model by incorporating a risk assessment process and the trust the system has in its users. Our framework determines the risk associated with access requests (user’s trust level and requested object’s security level) and weighs that risk against the risk appetite and risk threshold of situational conditions. Specifically, an access request is permitted if the risk threshold outweighs the risk of granting access to the information; otherwise it is denied.

1 INTRODUCTION

Electronic health records (EHRs) are an essential information source and a communication channel for creating a shared view of the patient among healthcare providers (healthcare professionals and healthcare organizations) (Chao, 2016; Reitz et al., 2012). However, since EHR systems gather health information and enable access to it, secure control over information flow is a key aspect of EHRs where sensitive information is shared among a group of people within or across organizations (Shoniregun et al., 2010). Insider abuse or misuse of health information may cause significant damage to the patient’s privacy and to the healthcare provider alike (Probst et al., 2010). On the one hand, healthcare providers are authorized to access their patients’ health information during treatment; on the other hand, there is always a security risk associated with each access to health information, which might lead to information leakage (Salim et al., 2011; Rostad et al., 2007).

Access control is the most popular approach for actively mitigating insider threats (Baracaldo and Joshi, 2013; Probst et al., 2010). Role-based access control (RBAC) (Ferraiolo et al., 2001) and attribute-based access control (ABAC) (Hu et al., 2014) are popular access control models and are widely employed in the medical industry (Ferraiolo et al., 2001; Salim et al., 2011). These access control models are characterized by a considerable imbalance between security and efficiency, especially when applied in distributed environments (Salim et al., 2010). One of the main problems with these models is the difficulty of enforcing the “need-to-know” principle (Ferraiolo et al., 2001) and the “minimum necessary” standard for using and disclosing patients’ records for treatment (Agris, 2014). There are two reasons for this. First, medical records contain a wide range of information, and it is infeasible for the policy author (e.g., the administrator) to foresee what health information a healthcare provider may need in various situations (Salim et al., 2011). Second, healthcare providers cannot decide what information is really necessary in a patient’s treatment case. Thus, healthcare providers get unlimited access to patient health records, which leads to unaccountable risk to those records.

In our previous work (Abomhara and Køien, 2016; Abomhara et al., 2017), an enhanced access control model was proposed to strike a balance between collaboration and safeguarding sensitive patient information. The enhanced model introduced the team role concept and modified the user-role assignment model from RBAC and ABAC. In our access control model, the level of fine-grained control of access to objects that can be authorized to healthcare

Abomhara, M., Køien, G., Oleshchuk, V. and Hamid, M.

Towards Risk-aware Access Control Framework for Healthcare Information Sharing.

DOI: 10.5220/0006608103120321

In Proceedings of the 4th International Conference on Information Systems Security and Privacy (ICISSP 2018), pages 312-321

ISBN: 978-989-758-282-0

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

providers is managed and controlled based on the job required to meet the “minimum necessary” standard. In this study, we propose a framework for risk assessment that estimates the risk users may present to objects by computing the risk of each user access request and the object accessed by the user. Access decisions are made by determining the risk associated with access requests (user’s trust level and requested object’s security level) and weighing that risk against the risk appetite and risk threshold of situational conditions. Specifically, an access request is permitted if the risk threshold outweighs the risk of granting access to the information; otherwise it is denied.

The remainder of this paper is organized as follows. Section 2 presents related work. In section 3, we briefly describe the WBAC model. Section 4 introduces the risk-aware framework. In section 5, we present a usage scenario and proof of concept of the proposed framework. A discussion, conclusions and future work are provided in section 6.

2 RELATED WORK

To determine whether user access risk levels are on par with user trust levels and object security levels, Sandhu (1993) proposed lattice-based access control models, where a user is only allowed to access an object if the security level of the subject is higher than or equal to the security level of the object.

Cheng et al. (2007) proposed a quantified risk-adaptive access control based on fuzzy multi-level security. They illustrated the concept of their approach by showing how the rationale of the Bell-LaPadula model (Bell and LaPadula, 1975) and the Multi-Level Security (MLS) access control model could be used to develop a risk-adaptive access control model. Chari et al. (2012) used a fuzzy logic risk inferencing system to determine permission sensitivity levels and user access risk levels, and to infer the risk of a particular role by looking at the aggregate role sensitivity and aggregate user access risk level.

Ma (2012) presented a formal approach to risk assessment for RBAC systems. The basic idea of this approach is to assign a security level to each user, calculate the security level of each role and then calculate the risk value of the role-user assignment relation. Bijon et al. (2013) discussed the difference between traditional constraint-based risk mitigation and recent quantified risk-aware approaches in RBAC, and also proposed a framework for a quantified approach to risk-aware role-based access control.

Baracaldo and Joshi (2013) proposed a framework that extends the RBAC model. Their framework adapts to suspicious changes in users’ behavior by removing privileges when users’ trust falls below a certain threshold. Moreover, Shaikh et al. (2011) proposed a dynamic user trust calculation model based on the past behavior of users with particular objects. The past behavior is evaluated based on the history of reward and penalty points assigned to the user after the completion of every transaction: if the reward points increase, the user trust level increases, and vice versa. In their model, old and recent history (reward and penalty points) carry equal weight (Shaikh et al., 2012), and as a consequence the model may be unable to detect small changes in a user’s recent behavior in a timely manner. This model was extended in (Shaikh et al., 2012) using an exponentially weighted moving average approach. However, in the extended model, the exponentially weighted average of past behavior was obtained recursively. Also, the authors did not enforce giving recent events a higher weight than old events. In this case, we could assume that an insider would behave according to the rules to increase his/her reward points and reach a trust level that allows him/her to violate system rules.

Motivated by the shortcomings of the related work, in our risk-aware framework we calculate the risk associated with an access request using the object security level (section 4.2) and a trust level derived from past user behavior. The past behavior of users is dynamically calculated based on reward and penalty points assigned to users. In our model, the reward and penalty history is weighted by the age of each event using a forgetting factor, which allows recent events to be weighted higher than old ones (section 4.3).

3 WORK BASED ACCESS CONTROL MODEL (WBAC)

WBAC enforces a three-layer access control that ap-

plies RBAC, a secondary RBAC and ABAC. The sec-

ondary RBAC layer, with extra team roles extracted

from the team work requirements, is added to man-

age the complexity of cooperative engagements in

the healthcare domain (Abomhara and Køien, 2016).

Role and team role are used in conjunction to deal

with access control in dynamic collaborative envi-

ronments. Policies related to collaboration and team

work are encapsulated within this coordinating layer

to ensure that the attribute layer is not overly burdened

(Abomhara et al., 2017).


3.1 WBAC Core Components

The core WBAC model includes the following components:

• $USR$ is a set of users.
• $OBJ$ is a set of objects: $OBJ = OBJ_A \cup OBJ_B$, where $OBJ_A$ is a set of private objects, $OBJ_B$ is a set of protected objects and $OBJ_A \cap OBJ_B = \emptyset$.
• $OPR$ is a set of operations.
• $PER$ is a set of permissions; $PER \subseteq OBJ \times OPR$.
• $R$ is a set of roles.
• $TR$ is a set of team roles: $TR = \{tr_t, tr_a, tr_m\}$, where $tr_t$ is the thought role, $tr_a$ is the action role and $tr_m$ is the management role (described earlier in (Abomhara and Køien, 2016)).
• $T$ is a set of teams, where $T \subseteq 2^{USR}$.
• $W$ is a set of collaborative works: $W = (t, obj, state)$, where $t \subseteq T$, $obj \subseteq OBJ$ and $state \in \{Active, Inactive\}$.
• $\text{USR-R-A} \subseteq USR \times R$: user to role assignment relation.
• $\text{USR-T-A} \subseteq USR \times T$: user to team assignment relation.
• $\text{TM-TR-A} \subseteq USR \times T \times TR$: team member to team role assignment relation.
• $\text{PER-R-A} \subseteq PER \times R$: permission to role assignment relation.
• $\text{PER-TR-A} \subseteq PER \times TR$: permission to team role assignment relation.
• $\text{T-W-A} \subseteq T \times W$: team to work assignment relation.
• $PS$: a policy set, which consists of one or more policies.
• $P$: a policy, which is composed of one or more rules.
• $rule$: a rule in a policy.

Definition 1. Access state (Γ) is a set of all WBAC

model assignments which contains all the information

necessary to make access control decisions.

In order to specify the access control, we define

the following functions:

• $\text{authorized-usr}_{role}(r) = \{usr \in USR \mid (usr, r) \in \text{USR-R-A}\}$: the set of all users assigned to role $r$.
• $\text{team}_{member}(t) = \{usr \in USR \mid (usr, t) \in \text{USR-T-A}\}$: the set of team members in team $t$.
• $\text{authorized-usr}_{tr}(usr, t) = \{tr \in TR \mid (usr, t, tr) \in \text{TM-TR-A}\}$: the set of team roles held by user $usr$ in team $t$.
• $\text{team-role}_{member}(tr) = \{usr \in USR \mid \exists t \in T : tr \in \text{authorized-usr}_{tr}(usr, t)\}$: the set of all users assigned to team role $tr$ in some team $t$.
• $\text{assigned-role}_{per}(r) = \{per \in PER \mid (per, r) \in \text{PER-R-A}\}$: the set of permissions held by role $r$.
• $\text{assigned-team-role}_{per}(tr) = \{per \in PER \mid (per, tr) \in \text{PER-TR-A}\}$: the set of permissions held by team role $tr$.
• $\text{assigned-team-work}(w) = \{t \in T \mid (t, w) \in \text{T-W-A}\}$: the set of teams assigned to work $w$.
• $\text{can-access}(usr, per)$: user $usr$ has permission $per$.
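As an illustration of the functions above, the following minimal Python sketch stores each assignment relation as a set of tuples and derives the functions by set comprehension. The user, role, team and permission identifiers (`u1`, `r1`, `t1`, and so on) are hypothetical toy data, not taken from the paper.

```python
# Assignment relations as sets of tuples (hypothetical toy data).
USR_R_A = {("u1", "r1"), ("u2", "r1"), ("u2", "r2")}      # USR x R
USR_T_A = {("u1", "t1"), ("u2", "t1")}                    # USR x T
TM_TR_A = {("u1", "t1", "tr_a"), ("u2", "t1", "tr_t")}    # USR x T x TR
PER_R_A = {(("obj_b", "read"), "r1")}                     # PER x R, per = (obj, opr)


def authorized_usr_role(r):
    """All users assigned to role r."""
    return {usr for (usr, role) in USR_R_A if role == r}


def team_member(t):
    """All members of team t."""
    return {usr for (usr, team) in USR_T_A if team == t}


def authorized_usr_tr(usr, t):
    """Team roles held by user usr in team t."""
    return {tr for (u, team, tr) in TM_TR_A if u == usr and team == t}


def team_role_member(tr):
    """All users holding team role tr in some team."""
    return {u for (u, _team, role) in TM_TR_A if role == tr}


def assigned_role_per(r):
    """Permissions held by role r."""
    return {per for (per, role) in PER_R_A if role == r}
```

Keeping the relations as plain sets makes each function a direct transcription of its set-builder definition.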

Definition 2. An access policy is a security statement of what is, and what is not, allowed in the organization. Formally: $\text{can-access}(usr, per) \iff rule_1 \wedge \ldots \wedge rule_n$, where $usr \in USR$, $per \in PER$ and $per = (obj, opr)$.

It can be interpreted as: user ($usr$) can perform an operation ($opr$) on object ($obj$) if and only if all conditions ($rule_i$) hold.

Definition 3. A rule is a fundamental component of a policy. A rule consists of a condition (ruleCon) and an effect (ruleEff) that can be either a permission or a denial associated with the successful evaluation of the rule. Formally: $rule = (ruleId, ruleCon, target, ruleEff, CombiningAlg)$.

A rule condition is a Boolean function over subject, object and operation that refines the applicability of the rule beyond the predicates implied by its target. The correct evaluation of a condition returns the effect of the rule (permit or deny), while incorrect evaluation results in an error (Indeterminate) or the discovery that the condition does not apply to the request (Not Applicable). $target = (usr, obj, opr)$ is a set of attributes and their values for matching the user, object and operation, to check whether the given rule is applicable to the request, and $CombiningAlg$ is the policy combining algorithm (e.g., deny-overrides, permit-overrides and/or first-applicable algorithms (Li et al., 2009)).

Example 1. Consider a policy with one rule ($rule_1$) ensuring that the primary doctor has clearance to read a medical file. Formally: $rule = (Rule_1, \{isPrimaryDoctor\}, \{\{usr\}, \{Records\}, \{opr\}\}, \{permit\}, \{CombiningAlg\})$, where “isPrimaryDoctor” is the rule condition. In this case, only healthcare providers who hold the role “isPrimaryDoctor” are permitted to access the object Records.


Definition 4. A policy set (PS) is a container of one

or more policies. PS may contain other policy sets,

policies or both.

Examples of defined access control policies are as follows:

Policy 1 (Role separation of duty (SoD) constraints). User to role assignments should respect SoD, where the number of roles from an SoD set assigned to the same user cannot exceed N. $RSoD \subseteq (2^R \times \mathbb{N})$ is a collection of pairs $(rs, n)$, where $rs$ is a role set, $m \subseteq rs$ and $n$ is an integer $\geq 2$, with the property that no user is assigned to $n$ or more roles from the set $rs$ in each $(rs, n) \in RSoD$. Formally:

$$\forall (rs, n) \in RSoD,\ \forall m \subseteq rs : |m| \geq n \Rightarrow \bigcap_{r \in m} \text{authorized-usr}_{role}(r) = \emptyset.$$
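The role SoD constraint of Policy 1 can be checked with a short Python sketch. It relies on the observation that the intersection condition holds exactly when no single user holds $n$ or more roles from $rs$. The function name `rsod_offenders` and the sample data are hypothetical.

```python
def rsod_offenders(usr_r_a, rsod):
    """Return users that violate some (rs, n) pair in RSoD.

    usr_r_a: set of (usr, role) pairs (the USR-R-A relation).
    rsod:    iterable of (rs, n) pairs, rs a set of roles, n >= 2.
    """
    offenders = set()
    users = {usr for (usr, _role) in usr_r_a}
    for rs, n in rsod:
        for usr in users:
            # Roles from the conflicting set rs that this user holds.
            held = {role for (u, role) in usr_r_a if u == usr and role in rs}
            if len(held) >= n:
                offenders.add(usr)
    return offenders


# Hypothetical example: r1 and r2 are mutually exclusive (n = 2).
assignments = {("u1", "r1"), ("u1", "r2"), ("u2", "r1")}
constraints = [({"r1", "r2"}, 2)]
violations = rsod_offenders(assignments, constraints)
```

An empty result means the assignment relation satisfies every pair in RSoD.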

Policy 2 (Team role separation of duty (SoD) constraints). A user in one team must be assigned to exactly one team role. Formally: $\forall t \in T, \forall usr \in USR : usr \in \text{team}_{member}(t) \Rightarrow |\text{authorized-usr}_{tr}(usr, t)| = 1$.

Policy 3 (Role cardinality constraints). User to role assignments must respect the cardinality constraints, where the number of authorized users for any role does not exceed the authorization cardinality ($card_{role}(r)$) of that role. Formally: $\forall r \in R : |\text{authorized-usr}_{role}(r)| \leq card_{role}(r)$.

Policy 4 (Team cardinality constraints). The number of authorized users for any team ($t$) does not exceed the cardinality ($card_{team}(t)$) of that team. Formally: $\forall t \in T : |\text{team}_{member}(t)| \leq card_{team}(t)$.

Policy 5 (Team role cardinality constraints). The number of authorized users for any team role does not exceed the cardinality ($card_{team\text{-}role}(tr)$) of that role. Formally: $\forall tr \in TR : |\text{team-role}_{member}(tr)| \leq card_{team\text{-}role}(tr)$.
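Policies 2 and 3 can be sketched as simple checks over the assignment relations; Policies 4 and 5 follow the same counting pattern as Policy 3. The function names and the choice to treat a role with no declared cardinality as unlimited are assumptions made for this illustration.

```python
def team_role_sod_ok(usr_t_a, tm_tr_a):
    """Policy 2: every team member holds exactly one team role in that team."""
    for usr, t in usr_t_a:
        roles = {tr for (u, team, tr) in tm_tr_a if u == usr and team == t}
        if len(roles) != 1:
            return False
    return True


def role_cardinality_ok(usr_r_a, card_role):
    """Policy 3: no role has more authorized users than its cardinality.

    Roles missing from card_role are treated as unlimited (an assumption).
    """
    counts = {}
    for _usr, r in usr_r_a:
        counts[r] = counts.get(r, 0) + 1
    return all(n <= card_role.get(r, float("inf")) for r, n in counts.items())
```

For example, with two users assigned to a role whose cardinality is 1, `role_cardinality_ok` returns `False`.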

Policy 6 (Access). Object “Records” can be accessed only by users with associated roles. Formally: $\forall usr \in USR, \forall r \in R, \forall per \in PER : usr \in \text{authorized-usr}_{role}(r) \wedge per \in \text{assigned-role}_{per}(r) \Rightarrow \text{can-access}(usr, per)$, where $per = (Records, opr)$.

Definition 5. An access query ($Q$) is a request by a user to perform an operation on an object. Formally: $Q = (usr_{rq}, obj_{rq}, opr_{rq})$, where $usr_{rq} \in USR$, $obj_{rq} \in OBJ$ and $opr_{rq} \in OPR$.

A response to access query Q is an access re-

sponse RS = {D} which is a decision D to the access

query against the defined policy and rules (definitions

2 and 3) in policy set PS.

Definition 6. The access control decision function ($df$) evaluates the conditions defined by the rules in the access policy as true or false (Algorithm 1) and returns a decision from $D = \{permit, deny, indeterminate\}$ according to the rules in the policy. Formally: $df : Q \times PS \rightarrow D$.

Algorithm 1 evaluates the access request against the rules defined in the policy. It takes a rule and Q as input, and the result of the evaluation is the rule decision. The algorithm begins by checking the rule applicability against the request (line 4). This is done by comparing the request Q against the rule target. The target contains a set of attributes and their values for matching the user, object and operation, to check whether the given rule is applicable to the request. If the target matches, the rule condition is evaluated (line 6); otherwise, the discovery that the rule does not apply to the request, “Indeterminate” (line 15), is returned to the policy df. Incorrect evaluation results in an error, “Indeterminate” (line 7), while correct evaluation of a condition returns the effect of the rule (lines 8 to 11), which can be either a permission or a denial of request Q. If there exist both a ruleEff = permit and a ruleEff = deny, the decision is taken according to the policy combining algorithm (CombiningAlg). For example, if CombiningAlg = Deny-Overrides, decisions are combined in such a way that if any ruleEff is a deny, then the decision is deny.

Algorithm 1: Rule evaluation algorithm (RuleEva(rule, Q)).

Input: rule, target and Q
Output: Rule decision

 1: rule = {{ruleId}, {ruleCon}, {target}, {ruleEff}, {CombiningAlg}}
 2: target = {{usr}, {obj}, {opr}}
 3: Q = {usr_rq, obj_rq, opr_rq}
 4: if ((usr_rq ∩ usr) ≠ ∅) ∧ ((obj_rq ∩ obj) ≠ ∅) ∧ ((opr_rq ∩ opr) ≠ ∅) then    ▷ Target is applicable
 5:     for (i ∈ rule) do
 6:         if (evaluation of i.ruleCon fails) then
 7:             RuleDecision = Indeterminate
 8:         else if (i.ruleEff = Deny) then
 9:             RuleDecision = Deny
10:         else
11:             RuleDecision = Permit
12:         end if
13:     end for
14: else
15:     RuleDecision = Indeterminate    ▷ Target does not match the request
16: end if
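The rule evaluation described by Algorithm 1 can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the `Rule` class, the treatment of a condition that evaluates to false as Indeterminate, and the fallback to Permit in `deny_overrides` when no rule denies are assumptions introduced here.

```python
from dataclasses import dataclass
from typing import Callable

PERMIT, DENY, INDETERMINATE = "Permit", "Deny", "Indeterminate"


@dataclass
class Rule:
    rule_id: str
    condition: Callable[[str, str, str], bool]  # ruleCon over (usr, obj, opr)
    target: tuple                               # (set of usr, set of obj, set of opr)
    effect: str                                 # ruleEff: PERMIT or DENY


def rule_eva(rule, q):
    """Evaluate one rule against a query q = (usr_rq, obj_rq, opr_rq)."""
    usr, obj, opr = q
    t_usr, t_obj, t_opr = rule.target
    if not (usr in t_usr and obj in t_obj and opr in t_opr):
        return INDETERMINATE      # target does not match the request
    try:
        holds = rule.condition(usr, obj, opr)
    except Exception:
        return INDETERMINATE      # the condition could not be evaluated
    if not holds:
        return INDETERMINATE      # assumption: a false condition yields no effect
    return rule.effect


def deny_overrides(decisions):
    """Combine rule decisions: any Deny wins, then any Permit."""
    if DENY in decisions:
        return DENY
    if PERMIT in decisions:
        return PERMIT
    return INDETERMINATE
```

Returning the decision as soon as it is known keeps a Deny effect from being overwritten by a later assignment.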


Risk threshold

0

0.5

1

Permit

Permit with risk

mitigation

strategy

Risk

Object Security Level

0 10050

Risk appetite

Deny

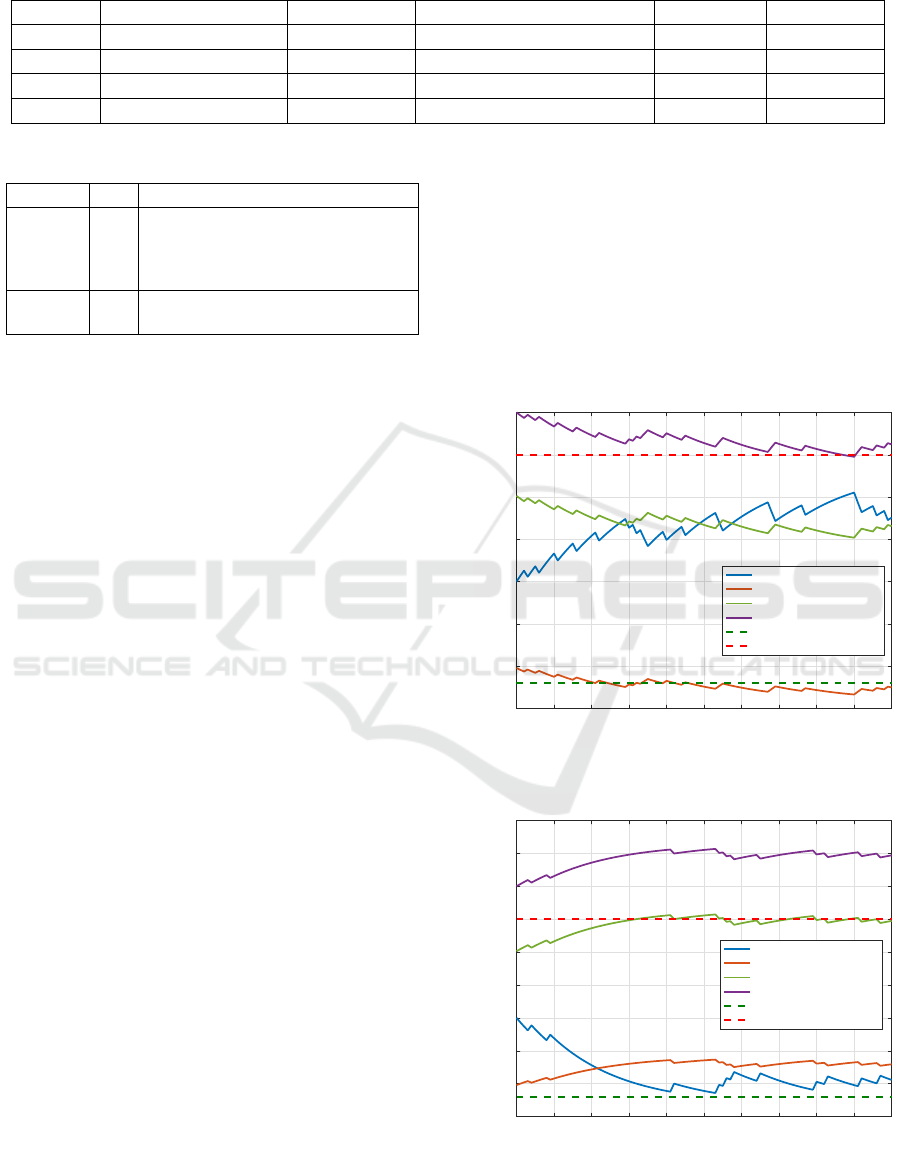

Figure 1: WBAC risk scale.

4 RISK AWARE FRAMEWORK

In this section, we present our risk framework. First, we present the risk appetite and risk threshold, then object security labels and the user trust level, followed by the user trust calculation and the risk value calculation.

4.1 Risk Appetite and Thresholds

In the decision function (definition 6), the static deci-

sion (permit, deny, indeterminate) would be replaced

by a dynamic access decision based on the risk value.

Figure 1 presents the risk scale of our model, where

the risk curve is divided into three bands.

The first band (risk appetite boundary) is associated with a permit decision because there is no risk or the risk is very low, and an entity (e.g., an organization) is willing to take the risk in anticipation of a reward.

The second band (risk threshold boundary) is assigned a permit decision with risk mitigation plans (e.g., risk acceptance (Rittenberg and Martens, 2012), risk transfer and risk avoidance (Stoneburner et al., 2002)). Between the risk appetite boundary and the risk threshold boundary lies the risk tolerance, which is the amount of risk that an entity will accept or is willing to withstand. The risk threshold is measured along the level of uncertainty or level of impact at which an entity may have a specific interest. Below the risk threshold, the entity will accept or withstand the risk; above it, the entity will not tolerate the risk. The risk threshold varies from one entity to another depending on the risk level an entity is willing to take.

The third band is associated with the deny decision because the risk is too high. Even in this case, it is not desirable to prevent a healthcare provider from accessing an object, as this could cause greater damage to the patient than the risk of accessing the patient’s records. This is due to the requirement that “nothing must interfere with the delivery of care” (Salim et al., 2011; Rostad et al., 2007). However, the object owner or system administrator (e.g., security administrator) must be notified of the risk, and the access request will require evaluating the patient’s (object owner’s) consent. In this way, the problem of low risk detectability can be examined, and the object owner or system administrator may carry out an investigation and discover the facts of the access.

When the risk value is greater than the risk appetite and the risk threshold, the healthcare provider’s access request to the object will not be denied completely, but mitigation plans can be put in place to reduce the risk. An example of a mitigation plan is an additional evaluation layer such as a purpose-based access control policy to solve conflicting analysis (Wang et al., 2010). The purpose of information access could be associated with the access request to specify the intention of the access request.

4.2 Object Security Labels

Every object in our model is associated with a security label which represents the level of object protection. Based on the level of sensitivity, the object can be classified in numerous ways. For example, one of the following labels may be assigned to the object: top secret, secret, confidential or unclassified (Stewart et al., 2015).

Definition 7. The object security label is denoted by $\text{security-level}(obj)$ and is defined in the interval $[0, 1]$, where 0 means the required level of protection is very low and 1 means the required level of protection is very high.

Object security level is assigned to an object by the object owner. It is understood that healthcare providers need varying degrees of patient information to perform their job duties (Salim et al., 2011). Thus, object classification requires considerable effort to set security levels for patient data as well as to define what access privileges could lead to potential insider threats and compromise patient data. However, we believe that assigning a security level to a medical record based on the sensitivity of the information is doable. Here, we assume that the object owner decides on the object’s security level based on its sensitivity. For example, psychotherapy notes (i.e., notes recorded by a healthcare provider who is a mental health professional (US Department of Health and Human Services et al., 2014)) could have a higher security level compared to patient personal information such as phone number and address. In our access control model (section 3), and according to our object classification, such an object (psychotherapy notes) will be accessible to the treatment


team only if the treatment team decides that such information is necessary for patient treatment.

4.3 User Trust Level

User trust level has been defined by Mayer et al. (1995) as a function of the trustee’s behavior, which includes its ability, benevolence and integrity, and of the trustor’s propensity to trust. In this study, we assume that each user (healthcare provider) is associated with a trust level that represents the level of user clearance granted by the organization (e.g., hospital or clinic) that owns the data.

Definition 8. The trust for a user (usr) towards an

object (ob j) is denoted by trust-level(usr) and is de-

fined in the interval [0, 1], where 1 means the user is

fully trusted and 0 means the user is totally untrusted.

User trust level may be computed either statically

or dynamically. Static computation refers to user at-

tributes. For example, the trustworthiness level of car-

diologists in the cardiology department may be higher

than that of the nurses who work in the same depart-

ment. Dynamic computation refers to users’ access

history (Cheng et al., 2007), behavior (Shaikh et al.,

2012; Shaikh et al., 2011; Baracaldo and Joshi, 2013)

and value of user reputation (Bijon et al., 2013).

As mentioned earlier (section 2), Shaikh et al. (Shaikh et al., 2011; Shaikh et al., 2012) proposed a dynamic user trust calculation model based on rewards and penalty points. The authors assumed that, on the one hand, if the access request to an object is successfully redeemed, an obligation server will assign reward points to the user with respect to the object. On the other hand, if the access request is not successfully redeemed due to any problem, the obligation server will assign penalty points.

In this study, we modify the proposed method of calculating rewards and penalty histories by assigning different weight values based on the age of each occurrence. That is, the older the action that led to a specific reward/penalty, the smaller the weight given to that reward/penalty. This is done by incorporating a forgetting factor (Hayes, 2009) which decays exponentially with the time lag between the instant of calculating the trust level and the occurrence of the reward/penalty action.

4.4 User Trust Calculation

For each user, we calculate a trust level value $\text{trust-level}(usr)$ with respect to an object. The calculation is based on the user’s previous trust level value $TL_{value}$ and the rewards $RE$ and penalty $PE$ points with regard to the time $\tau$, as in equation 1. In the case of a new user (a user with no $TL_{value}$), or if neither penalties nor rewards are available, the user trust is assigned the lower trust level $TL_{low}$.

$$\text{trust-level}(usr) = TL_{value} + \left( \frac{\sum_{i=1}^{n} RE(i) \cdot \lambda_{RE}^{\tau_i - \tau_0} - \sum_{i=1}^{n} PE(i) \cdot \lambda_{PE}^{\tau_i - \tau_0}}{n} \right) \qquad (1)$$

where $i$ is an event index, $\lambda_{RE} \leq 1$ is the rewards forgetting factor, $\lambda_{PE} \leq 1$ is the penalty forgetting factor, $\tau_i$ is the time of occurrence of event $i$, $\tau_0$ is the current time of calculating the user trust level, and $n$ is the number of events.
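The trust calculation can be sketched in Python as below. Several choices here are assumptions made for illustration only: each event is a tuple `(time, reward, penalty)`; the exponent of the forgetting factor is taken as the non-negative time lag between the current time and the event, so that with a factor of at most 1 older events receive smaller weights, as section 4.3 describes; the result is clamped to $[0, 1]$ to respect Definition 8; and the default values of `lam_re`, `lam_pe` and `tl_low` are hypothetical.

```python
def trust_level(tl_value, events, now, lam_re=0.9, lam_pe=0.9, tl_low=0.1):
    """Sketch of equation 1 for a single user.

    tl_value: previous trust level, or None for a new user.
    events:   list of (time, reward_points, penalty_points) tuples.
    now:      current time (same unit as the event times).
    """
    if tl_value is None or not events:
        return tl_low  # new user, or no reward/penalty history available
    n = len(events)
    # Weight each event by the forgetting factor raised to its age.
    re_sum = sum(re * lam_re ** (now - t) for (t, re, _pe) in events)
    pe_sum = sum(pe * lam_pe ** (now - t) for (t, _re, pe) in events)
    value = tl_value + (re_sum - pe_sum) / n
    return min(1.0, max(0.0, value))  # assumption: keep the result in [0, 1]


# Hypothetical history: an old reward and a recent penalty.
history = [(0, 0.2, 0.0), (2, 0.0, 0.1)]
updated = trust_level(0.5, history, now=2, lam_re=0.5, lam_pe=0.5)
```

In this example the reward of 0.2 is two time units old and is weighted by 0.25, while the recent penalty of 0.1 keeps its full weight, so the trust level drops slightly from 0.5 to 0.475.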

4.5 Risk Value Calculation

Risk is defined as the probability that a hazardous situation (threat) will occur and the impact of a successful violation. When designing a system, it is necessary to understand the risk exposure level and ensure it is within the risk appetite. The National Institute of Standards and Technology (NIST) (Stoneburner et al., 2002) calculates risk based on threat probability and impact. In this paper, we determine the threat probability based on the number of rewards and penalty points, and the impact is defined based on the object security label (section 4.2). For example, if the object security label is high (e.g., secret), then the risk threshold is high and the impact will be high (this depends on the user trust level).

Before explaining how we calculate the risk value, we first give the auxiliary predicates needed for our risk assessment. The following functions are defined:

• $\text{risk-appetite}(opr, obj)$: The risk appetite value is used to determine the amount or volume of risk that an organization or individual will accept and is defined in the interval $[0, 1]$.

• risk-threshold(opr, ob j): The risk threshold value

is used to determine whether the risk is acceptable

to an organization and is defined in the interval

[0, 1] which

Given rewards and penalty points, we calculate the risk associated with an access request Q based on the user trust level ($\text{trust-level}(usr)$) and the object security level ($\text{security-level}(obj)$) as shown in equation 2.

$$rv(usr, obj) = sigm(\text{security-level}(obj) - \text{trust-level}(usr)), \quad \text{where } sigm(x) = \frac{1}{1 + e^{-x}}. \qquad (2)$$

We believe there are many ways to calculate the

risk value. Here, we chose the sigmoid function


(equation 2) as an activation function to weigh the risk sum of the object security level and user trust level and present the output as a sigmoid curve. The sigmoid function is a real-valued, differentiable function that maps binary events to real-valued (probabilistic) curves. It has a non-negative or non-positive first derivative, which makes calculating and learning the weights of a risk easier. It is also a very popular function in machine learning, artificial neural networks (Basheer and Hajmeer, 2000) and risk management modeling (Cheng et al., 2007), because it satisfies a property relating its derivative to itself that makes it computationally easy to work with.
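As a worked illustration of equation 2, the sketch below computes the risk value for a few inputs. The function names `sigm` and `rv` follow the notation of the equation; the trust level of 0.5 is a hypothetical input.

```python
import math


def sigm(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def rv(security_level, trust):
    """Risk value of equation 2; both inputs are expected in [0, 1]."""
    return sigm(security_level - trust)


equal = rv(0.5, 0.5)      # security level equals trust level
sensitive = rv(0.8, 0.5)  # object more sensitive than the user is trusted
lowest = rv(0.0, 1.0)     # fully trusted user, least sensitive object
highest = rv(1.0, 0.0)    # untrusted user, most sensitive object
```

When the security level equals the trust level the risk value is exactly 0.5. Because both inputs lie in $[0, 1]$, their difference lies in $[-1, 1]$, so the risk value ranges from $sigm(-1) \approx 0.269$ to $sigm(1) \approx 0.731$.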

4.6 Risk-aware Access Decision

Mechanism

After calculating the trust level of the user and the risk value, the risk parameter is added to the decision function (definition 6). A threat is said to exist if a $usr \in USR$ can access an $obj \in OBJ$ such that $rv(usr, obj) \geq \text{risk-appetite}(opr, obj)$.

Definition 9. The risk-aware access decision mechanism is defined as follows: if the condition defined in the rules is evaluated as true and the risk value $rv(usr, obj)$ is less than $\text{risk-appetite}(opr, obj)$, the $usr$ is permitted; otherwise, denied. Formally:

$$rdf : Q \times PS \times rv(usr, obj) \rightarrow D$$

$$rdf = \begin{cases} \text{Permit} & \text{if Case1,} \\ \text{Mitigated} & \text{if Case2,} \\ \text{Deny} & \text{if Case3,} \\ \text{Indeterminate} & \text{otherwise,} \end{cases}$$

where $PS$ and $rv(usr, obj)$ are the policy set (definition 4) and risk value data sets, respectively, related to object $obj$, and

• Case1 $= \exists\, rule\ [rule.ruleEff == permit] \wedge rv(usr, obj) \leq \text{risk-appetite}(opr, obj)$.
• Case2 $= \exists\, rule\ [rule.ruleEff == permit] \wedge \text{risk-appetite}(opr, obj) < rv(usr, obj) < \text{risk-threshold}(opr, obj)$.
• Case3 $= \exists\, rule\ [rule.ruleEff == deny] \vee rv(usr, obj) \geq \text{risk-threshold}(opr, obj)$.

If the risk value given by rv(usr, obj) falls between the first and second bands (Figure 1) and there exist rules (Algorithm 1) that permit the request, then user (usr) is permitted to perform operation (opr) on object (obj) with risk value (rv(usr, obj)) and risk mitigation plans; otherwise, the access request is denied unless it is approved by the object owner or system administrator. In the deny case, we also assume that rules in the access policy may deny the access. Indeterminate means that no rules are applicable to the request.
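The decision mechanism can be sketched as a small Python function over the rule decisions and the risk value. This is an illustrative sketch under assumptions: the Deny case is checked first (in the spirit of deny-overrides), the risk appetite is assumed not to exceed the risk threshold, and the appetite and threshold values used in the example (0.4 and 0.6) are hypothetical.

```python
def rdf(rule_decisions, risk_value, risk_appetite, risk_threshold):
    """Risk-aware decision over rule effects and a risk value.

    rule_decisions: iterable of "Permit" / "Deny" effects of applicable rules.
    Assumes risk_appetite <= risk_threshold.
    """
    decisions = set(rule_decisions)
    # Deny case: some rule denies, or the risk reaches the threshold.
    if "Deny" in decisions or risk_value >= risk_threshold:
        return "Deny"
    if "Permit" in decisions:
        if risk_value <= risk_appetite:
            return "Permit"      # first band: risk within the appetite
        return "Mitigated"       # second band: permit with mitigation plans
    return "Indeterminate"       # no applicable rule


low = rdf(["Permit"], 0.3, risk_appetite=0.4, risk_threshold=0.6)
middle = rdf(["Permit"], 0.5, risk_appetite=0.4, risk_threshold=0.6)
high = rdf(["Permit"], 0.7, risk_appetite=0.4, risk_threshold=0.6)
```

With these hypothetical bounds, a risk value of 0.3 is permitted, 0.5 is permitted with mitigation, and 0.7 is denied.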

5 RISK ASSESSMENT IN ACCESS DECISIONS

In this section, first, we consider a usage scenario and

then we give examples of how the access decision is

made according to user trust level and risk value.

5.1 Usage Scenario

Consider the following case study (adapted from (Zhang and Liu, 2010)). Four healthcare providers (Dean, Bob, Cara and Alex) are working on a case (treatment of a patient, Alice). In our model, we assume Dean is assigned the primary doctor role and serves as the group manager. He is responsible for initiating the work (Alice’s treatment case) and choosing the practitioners (team of doctors) who may be required to attend Alice’s consultation and treatment. Dean assigns users (Bob, Cara and Alex) to the team. The members join the team and are assigned team roles based on the required job function.

We assume that Dean decides who should access

what based on the required job. Table 1 presents the

policy data used as input for our proof of concept.

As shown in Table 1, we assume that the objects (Alice’s health records) are classified into $obj_{a(Alice)} = \{File_1, \ldots, File_n\}$, where $File_i$ is private information such as Alice’s personal data and other information not related to the current case (e.g., sexually transmitted diseases (STD)), and $obj_{b(Alice)} = \{File_1, \ldots, File_n\}$, where $File_i$ is information related to Alice’s current case, such as her old medical records. The security level of each object is assigned as shown in Table 2.

Bob, Cara and Alex are healthcare professionals who join Alice’s treatment team and are assigned team roles. We assume that if all conditions (e.g., separation of duty (SoD)) are true, then roles $R$ and team roles $TR$ are assigned to users and the access state $\Gamma$ (Definition 1) is updated as shown in Example 2.

Example 2. Access State ($\gamma_1$): Consider Alice’s case; the initial state, denoted by $\gamma_1$, is the formal model assignment presented in Table 1, as follows:

$USR = \{Dean, Bob, Cara, Alex\}$.

$R = \{\text{Primary-doctor}, \text{General-practitioner}, \text{Gastroenterologist}, \text{Medical-coordinator}\}$.

$TR = \{tr_a, tr_t, tr_m\}$.

$T = \{t_1\}$.

ICISSP 2018 - 4th International Conference on Information Systems Security and Privacy


Table 1: Tabular structure of policy data.

| Subject | Job Function | Team Role | Object Type | Action | Permission |
|---|---|---|---|---|---|
| Dean | Primary Doctor | Role | $OBJ_{A(Alice)}$ and $OBJ_{B(Alice)}$ | Read/write | Permit |
| Bob | General practitioner | Action | $OBJ_{A(Alice)}$ and $OBJ_{B(Alice)}$ | Read | Permit |
| Cara | Gastroenterologist | Thought | $OBJ_{B(Alice)}$ | Read | Permit |
| Alex | Medical coordinator | Management | $OBJ_{B(Alice)}$ | Read | Permit |

Table 2: Assumptions of object security levels.

| Object | SL | Description |
|---|---|---|
| $obj_a$ | 0.8 | Alice’s personal information (e.g., name, phone number, STD) and other EHRs that are not related to Alice’s current case |
| $obj_b$ | 0.5 | Alice’s medical records that are related to Alice’s current case |

$W = \{w_1\}$.

$OBJ = \{obj_a, obj_b\}$.

$OPR = \{Read, Write\}$.

$PER = \{(read, obj_a), (write, obj_a), (read, obj_b), (write, obj_b)\}$.

where,

$\text{USR-R-A} = \{(Dean, \text{Primary-doctor})\}$.

$\text{PER-R-A} = \{(\text{Primary-doctor}, (read, obj_a)), (\text{Primary-doctor}, (write, obj_a)), (\text{Primary-doctor}, (read, obj_b)), (\text{Primary-doctor}, (write, obj_b))\}$.

$\text{USR-T-A} = \{(Alex, t_1), (Cara, t_1), (Bob, t_1)\}$.

$\text{TM-TR-A} = \{(Bob, t_1, tr_a), (Cara, t_1, tr_t), (Alex, t_1, tr_m)\}$.

$\text{PER-TR-A} = \{(tr_a, (read, obj_a)), (tr_a, (write, obj_a)), (tr_a, (read, obj_b)), (tr_t, (read, obj_b)), (tr_m, (read, obj_b))\}$.

$\text{T-W-A}(t_1, w_1) = \{(w_1, t_1)\}$.

$\text{risk-appetite}(usr, obj) = 0.18$ (assumption).

$\text{risk-threshold}(usr, obj) = 0.45$ (assumption).
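To make the assignment relations of $\gamma_1$ concrete, the sketch below encodes them as plain Python sets and derives the permissions a user holds through a role or a team role. It is a simplification under stated assumptions: the helper names `permissions_of` and `is_authorized` are hypothetical, and the constraints (e.g., SoD and cardinality), the work $w_1$ and the risk check are omitted, so it is not the paper's access decision function.

```python
# Relations of access state gamma_1 (Example 2) as Python sets.
USR_R_A = {("Dean", "Primary-doctor")}

PER_R_A = {
    ("Primary-doctor", ("read", "obj_a")),
    ("Primary-doctor", ("write", "obj_a")),
    ("Primary-doctor", ("read", "obj_b")),
    ("Primary-doctor", ("write", "obj_b")),
}

TM_TR_A = {
    ("Bob", "t1", "tr_a"),
    ("Cara", "t1", "tr_t"),
    ("Alex", "t1", "tr_m"),
}

PER_TR_A = {
    ("tr_a", ("read", "obj_a")),
    ("tr_a", ("write", "obj_a")),
    ("tr_a", ("read", "obj_b")),
    ("tr_t", ("read", "obj_b")),
    ("tr_m", ("read", "obj_b")),
}


def permissions_of(user):
    """Permissions reachable through the user's roles and team roles."""
    roles = {r for (u, r) in USR_R_A if u == user}
    team_roles = {tr for (u, _team, tr) in TM_TR_A if u == user}
    via_roles = {p for (r, p) in PER_R_A if r in roles}
    via_team_roles = {p for (tr, p) in PER_TR_A if tr in team_roles}
    return via_roles | via_team_roles


def is_authorized(user, obj, opr):
    """True if (opr, obj) is among the user's permissions in gamma_1."""
    return (opr, obj) in permissions_of(user)


if __name__ == "__main__":
    for user in ("Dean", "Bob", "Cara", "Alex"):
        print(user, sorted(permissions_of(user)))
```

Keeping each relation as a set of tuples mirrors the set notation of the example, so a relation can be checked against the text entry by entry.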

5.2 Proof of Concept

In this proof of concept, we consider the access state $\gamma_1$, which is a particular model assignment to a given WBAC system. An access state $\gamma \in \Gamma$ contains all the information necessary to make access control decisions for a given time.

Example 3 (User permission with risk assessment). Let us assume that Cara requests read access to a file in $obj_{b(Alice)}$, and let $q = (Cara, obj_b, read)$, where $q$ is the access request (Definition 5).

Table 2 shows that $\text{security-level}(obj_b) = 0.5$, and access state $\gamma_1$ (Example 2) shows that Cara is a member of team $t_1$ and is assigned to team role $tr_t$. According to $\gamma_1$, the system does not violate any constraints (e.g., SoD and cardinality constraints) and, based on the access decision function $df$ (Definition 6), Cara can access and perform the read operation on a file in $obj_{b(Alice)}$, as she is a member of a team and holds a team role (policy 6).

Considering the user trust level (Figure 2) as the basic criterion for conducting risk assessment, we can see how the user’s trust level and the risk values increase or decrease with changes in user behavior. For Cara’s request, it can be concluded that permitting Cara to read $obj_b$ has low risk (Figure 2(a)) compared with her trust level, which was calculated according to her history of rewards and penalty points.

Figure 2: Risk exposure using our model. (a) Trust level and risk value in case of 20% misbehaving user. (b) Trust level and risk value in case of 90% misbehaving user. [Both panels plot trust level / risk value against time index (0 to 100); curves: user TL, risk value when SL = 0.2, SL = 0.5 and SL = 0.8, risk appetite, risk threshold.]

On the one hand, in Figure 2(a), we assumed

that Cara behaves according to the rules 80% of the time and violates the system rules and policy only about 20% of the time (i.e., a 20% misbehaving user). Figure 2(a) indicates that the risk value $rv(Cara, obj_{b(Alice)})$ is greater than $\text{risk-appetite}(usr, obj)$ and less than $\text{risk-threshold}(usr, obj)$. Therefore, user Cara is permitted to perform operation read on object $obj_{b(Alice)}$ with a risk value of $\simeq 0.4$ (function 2). This happens when $\text{trust-level}(Cara) \approx 0.3$.

On the other hand, Figure 2(b) shows the case where Cara is a 90% misbehaving user. As shown in Figure 2(b), Cara poses a high risk to $obj_{b(Alice)}$: since $\text{trust-level}(Cara) \approx 0.2$ is less than $\text{security-level}(obj) = 0.5$, the risk value is $rv(usr, obj) \simeq 0.45 \ge \text{risk-threshold}(usr, obj)$. According to the principle presented in (Cheng et al., 2007) and Figure 2(b), the threat always increases as the difference between the object security level and the user trust level increases, and vice versa.
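As a worked comparison of the two scenarios, the approximate values reported above can be placed side by side. This only illustrates the monotonic relation between the gap $SL - TL$ and the risk value; it does not reproduce function 2, and $SL$ and $TL$ are used here as shorthand for security-level($obj_b$) and trust-level(Cara).

```latex
\begin{align*}
\text{Figure 2(a):}\quad & SL - TL \approx 0.5 - 0.3 = 0.2, & rv &\simeq 0.40 < 0.45 = \text{risk-threshold},\\
\text{Figure 2(b):}\quad & SL - TL \approx 0.5 - 0.2 = 0.3, & rv &\simeq 0.45 \ge 0.45 = \text{risk-threshold}.
\end{align*}
```

The larger gap in Figure 2(b) coincides with the larger risk value, which is the behaviour the principle describes.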

As mentioned earlier (Section 4.1), we cannot prevent a healthcare provider from accessing an object during patient treatment. However, in our access control model (Section 3), the risk that Cara poses to the object has been mitigated. Upon conducting risk assessment of Alice’s object, it is noted that Cara poses a threat to this file. Therefore, the problem of low risk detectability is addressed, and Alice or the system administrator is made aware of the access (during Alice’s treatment).

6 DISCUSSION AND CONCLUSIONS

6.1 Discussion

In our access control model, a realistic way of handling collaboration risk is to minimize the discrepancy between the granted and the required access. This is done by utilizing resource classification in organizing shared resources and team roles. Dean could retrieve all resources that he thinks team members do not need for Alice’s treatment. Dean must also receive Alice’s consent (explicitly or implicitly) regarding treatment team formation and which objects can be shared with the team according to the “need-to-know” principle (Ferraiolo et al., 2001) and the “minimum necessary” standard (Agris, 2014). This would be done to satisfy the requirement of “selective relevancy”, where Alice would be able to withhold information that remains confidential.

In reality, patients cannot decide what information is needed to complete their treatment; therefore, doctors must choose the required information themselves and the patient has to agree (Salim et al., 2011; Rostad et al., 2007). In any case, the patient is aware of the threat posed by the healthcare provider to the objects and can refuse to share objects with the healthcare provider. Although the patient may refuse to share, refusing to share objects with healthcare providers poses a greater risk than sharing them. Thus, in our access control model, the extent of collaborative access is elevated and then audited: the insider takes on increased responsibility by pre-justifying the extended request, and an authorizing entity (patient or primary doctor) grants the access, possibly for an access period specified ahead of time, which would be handled by the work component. This would make an insider threat more detectable. In addition, the activity of a healthcare provider who joined the team and was granted such extended access can be logged and audited for intrusion detection if it contradicts the pre-justified request. In a case where the risk is substantial and unacceptable ($rv(Cara, obj_b) \ge \text{risk-threshold}(usr, obj_b)$), the policy must be amended to allow such additional constraints.

6.2 Conclusions

The motivation behind creating a risk assessment framework for our enhanced access control model is to help improve system security in terms of protecting healthcare information from insider threats, such as patient data disclosure and/or unauthorized access or modification by insiders. First, we briefly described our access control model (WBAC). Second, we presented the concepts of user trust level and object security level. We also introduced the idea of assigning reward and penalty points to users and showed how these points are used to calculate the user trust level. The main goal of our risk assessment framework is to evaluate the risks associated with access requests so that an access decision can be made based on the risk value.

In the future, we plan to extend the model by considering other factors such as the cost and impact of permissions and the risk associated with role/team role assignments, as well as to directly compare our model with existing models. We also plan to evaluate the validity of the scheme to provide solutions to improve healthcare quality, provide access to a high-quality healthcare system and support close cooperation between healthcare professionals and care providers from different organizations.


REFERENCES

Abomhara, M. and Køien, G. M. (2016). Towards an access control model for collaborative healthcare systems. In HEALTHINF’16, 9th International Conference on Health Informatics, volume 5, pages 213–222.

Abomhara, M., Yang, H., Køien, G. M., and Lazreg, M. B. (2017). Work-based access control model for cooperative healthcare environments: Formal specification and verification. Journal of Healthcare Informatics Research, pages 1–33.

Agris, J. L. (2014). Extending the minimum necessary standard to uses and disclosures for treatment: Currents in contemporary bioethics. The Journal of Law, Medicine & Ethics, 42(2):263–267.

Baracaldo, N. and Joshi, J. (2013). An adaptive risk management and access control framework to mitigate insider threats. Computers & Security, 39:237–254.

Basheer, I. and Hajmeer, M. (2000). Artificial neural networks: fundamentals, computing, design, and application. Journal of Microbiological Methods, 43(1):3–31.

Bell, D. E. and LaPadula, L. J. (1975). Computer security model: Unified exposition and Multics interpretation. MITRE Corp., Bedford, MA, Tech. Rep. ESD-TR-75-306, June.

Bijon, K. Z., Krishnan, R., and Sandhu, R. (2013). A framework for risk-aware role based access control. In Communications and Network Security (CNS), 2013 IEEE Conference on, pages 462–469. IEEE.

Chao, C.-A. (2016). The impact of electronic health records on collaborative work routines: A narrative network analysis. International Journal of Medical Informatics, 94:100–111.

Cheng, P.-C., Rohatgi, P., Keser, C., Karger, P. A., Wagner, G. M., and Reninger, A. S. (2007). Fuzzy multi-level security: An experiment on quantified risk-adaptive access control. In 2007 IEEE Symposium on Security and Privacy (SP’07), pages 222–230. IEEE.

Ferraiolo, D. F., Sandhu, R., Gavrila, S., Kuhn, D. R., and Chandramouli, R. (2001). Proposed NIST standard for role-based access control. ACM Transactions on Information and System Security (TISSEC), 4(3):224–274.

Hayes, M. H. (2009). Statistical digital signal processing and modeling. John Wiley & Sons.

Hu, V. C., Ferraiolo, D., Kuhn, R., Schnitzer, A., Sandlin, K., Miller, R., and Scarfone, K. (2014). Guide to attribute based access control (ABAC) definition and considerations. NIST Special Publication 800-162.

Li, N., Wang, Q., Qardaji, W., Bertino, E., Rao, P., Lobo, J., and Lin, D. (2009). Access control policy combining: theory meets practice. In Proceedings of the 14th ACM Symposium on Access Control Models and Technologies, pages 135–144. ACM.

Probst, C. W., Hunker, J., Gollmann, D., and Bishop, M. (2010). Insider Threats in Cyber Security, volume 49. Springer Science & Business Media.

Reitz, R., Common, K., Fifield, P., and Stiasny, E. (2012). Collaboration in the presence of an electronic health record. Families, Systems, & Health, 30(1):72.

Rittenberg, L. and Martens, F. (2012). Enterprise risk management: understanding and communicating risk appetite. COSO, January.

Rostad, L., Nytro, O., Tondel, I., and Meland, P. H. (2007). Access control and integration of health care systems: An experience report and future challenges. In Availability, Reliability and Security, 2007. ARES 2007. The Second International Conference on, pages 871–878. IEEE.

Salim, F., Reid, J., and Dawson, E. (2010). Authorization models for secure information sharing: A survey and research agenda. The ISC International Journal of Information Security, 2(2):69–87.

Salim, F., Reid, J., Dawson, E., and Dulleck, U. (2011). An approach to access control under uncertainty. In Availability, Reliability and Security (ARES), 2011 Sixth International Conference on, pages 1–8. IEEE.

Shaikh, R. A., Adi, K., and Logrippo, L. (2012). Dynamic risk-based decision methods for access control systems. Computers & Security, 31(4):447–464.

Shaikh, R. A., Adi, K., Logrippo, L., and Mankovski, S. (2011). Risk-based decision method for access control systems. In Privacy, Security and Trust (PST), 2011 Ninth Annual International Conference on, pages 189–192. IEEE.

Shoniregun, C. A., Dube, K., and Mtenzi, F. (2010). Electronic healthcare information security, volume 53. Springer Science & Business Media.

Stewart, J. M., Chapple, M., and Gibson, D. (2015). CISSP (ISC)2: Certified Information Systems Security Professional Official Study Guide. John Wiley & Sons, seventh edition.

Stoneburner, G., Goguen, A. Y., and Feringa, A. (2002). Special publication 800-30: risk management guide for information technology systems. National Institute of Standards & Technology.

US Department of Health and Human Services et al. (2014). HIPAA privacy rule and sharing information related to mental health.

Wang, H., Sun, L., and Varadharajan, V. (2010). Purpose-based access control policies and conflicting analysis. In Security and Privacy–Silver Linings in the Cloud, pages 217–228. Springer.

Zhang, R. and Liu, L. (2010). Security models and requirements for healthcare application clouds. In Cloud Computing (CLOUD), 2010 IEEE 3rd International Conference on, pages 268–275. IEEE.
