TEATIME: A Formal Model of Action Tendencies in Conversational Agents

Alya Yacoubi (1, 2) and Nicolas Sabouret (1)

(1) LIMSI, CNRS, Univ. Paris-Sud, Université Paris-Saclay, Bât 508, rue John von Neumann, Campus Universitaire, 91400 Orsay, France

(2) DAVI les humaniseurs, 19 Rue Godefroy, 92800 Puteaux, France

Keywords:

Conversational Agents, Computational Model of Affects, Formal Model, Dialogue System.

Abstract:

This paper presents a formal model of socio-affective behaviour in a conversational agent based on the Action Tendency theory. This theory defines emotions as tendencies to perform an action, which allows us to implement a strong connection between emotions and speech acts during an agent-human interaction. Our model presents an agent architecture with beliefs, desires, ideals and capacities. It relies on 6 appraisal variables to select different emotional strategies depending on the context of the dialogue. It also supports the regulation of emotions according to social rules. We implemented this model in an agent architecture and give an example of dialogue with a virtual insurance expert in the context of customer relationship management.

1 INTRODUCTION

Computer scientists are increasingly interested in adding an affective component to human-agent interaction systems. Indeed, it has been shown that machines which express affective states enhance the user's satisfaction and commitment in the interaction (Prendinger and Ishizuka, 2005). Moreover, affects play a fundamental role in the decision-making process and in rational behaviour (Coppin, 2009). They act as heuristics that ease action selection in context (Frijda, 1986). This role of affects is crucial for conversational agents, which have to combine rational answers with social behaviours. For instance, when the user expresses disappointment about a task done by the agent, a credible agent should be inclined to apologize rather than pursue the task-oriented interaction. However, combining task-oriented answers with socio-affective behaviour in a dialogue is still challenging.

In order to achieve such a combination, one must better understand what emotions are and how they intervene in the decision process. The notion of "emotions" was initially used to refer to the tendency to fight or to yell when one is angry with others, the tendency to run away when fear is triggered (McDougall, 1908) or the tendency to approach others (positive emotions). In contemporary theories (Lazarus, 1991), emotions are often defined as a combination of several components: 1) the appraisal component, which evaluates changes in the world in terms of goals; 2) the experience component, which consists in labelling the change of feeling toward the stimulus with a common-sense word (fear, sadness, etc.); and 3) the behavioural component, which conveys physical actions, facial expression and/or vocal output. Frijda (Frijda, 2010) also stressed 4) the motivational component, which aims at changing the relation between the self and the stimulus, and 5) the somatic component, which prepares the organism for action.

The role of the motivational component, often referred to as action tendency in some theories, is to give a direction to one's future actions. According to Frijda, this general direction overrides all other possible goals. Moreover, this component plays an important role in behaviour regulation. Thus, the coping strategy, i.e. the agent's adaptation to the stimulus at the source of the emotion, cannot be chosen without this frame for possible actions. For this reason, we claim that implementing a proper decision mechanism in a conversational agent requires incorporating the motivational component.

In this paper, we present a formal model of affect based on the action tendency theory, which connects the appraisal process and the agent's verbal behaviour by modelling the motivational component. The agent interprets the user's utterances, generates action tendencies and decides whether to perform an emotional reaction or to continue with the task-oriented dialogue.

Yacoubi, A. and Sabouret, N.

TEATIME: A Formal Model of Action Tendencies in Conversational Agents.

DOI: 10.5220/0006595701430153

In Proceedings of the 10th International Conference on Agents and Artificial Intelligence (ICAART 2018) - Volume 2, pages 143-153

ISBN: 978-989-758-275-2

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In the following section, we discuss existing computational models of emotions and their relation to the motivational component of emotions. The third section explains the syntax and semantics of our logical framework. The fourth section defines the appraisal and action selection process using this logic. In the fifth section, we illustrate the implementation of our model with a short interaction sample.

2 RELATED WORK

2.1 Action Tendency Theory

The Action Tendency theory is part of the appraisal theories of emotions. The term appraisal refers to the continuous process of evaluating the stimuli encountered according to their relevance to well-being (Lazarus, 1991; Frijda, 1986). In other words, it describes the mechanism by which an individual evaluates a situation and later adapts to it. This adaptation is often referred to as coping.

Several appraisal theorists argue that the evaluation process often occurs automatically, unconsciously and/or rapidly (Arnold, 1960; Frijda and Zeelenberg, 2001; Lazarus, 1991; Jenkins and Oatley, 1996). This is why computer scientists are interested in simulating this process in virtual agents, so as to improve the spontaneity and credibility of their reactions.

In all appraisal theories, the appraisal process results from the assignment of a value to a set of appraisal variables following the perception of a stimulus, for example: "is the stimulus good for me?". Depending on the model, the output of this appraisal process can be either an emotional label (fear, joy, etc.) for so-called categorical models such as OCC (Ortony et al., 1990), or a vector for so-called dimensional models such as PAD (Mehrabian, 1996).

However, it is important to understand that emotions cannot be reduced to a label or a vector: these only describe the state of the individual as it reacts to an affective stimulus and adapts to the new situation. Frijda (Frijda, 1986) explains this aspect by saying that one of the goals of the appraisal process is to prepare the individual for a reaction. In this view, emotions can be seen as a heuristic mechanism to select behaviours, which is why Frijda refers to them as action tendencies. In this model, the output of the appraisal process is not a label or a vector but an action tendency (AT), defined as the will to establish, modify or maintain a particular relationship between the person and a stimulus (Frijda, 2010; Frijda and Mesquita, 1998). This AT can then be turned into an emotion label, as in categorical models (Roseman, 2011). It is important to note that action tendencies are not necessarily a preparation for physical action (Coombes et al., 2009). They may also be mental actions such as disengagement, disinterest, nostalgia, and so on.

Frijda also introduced another notion in his model: activation. It had been defined in works prior to Frijda (Duffy, 1962) as a state of energy mobilization. Frijda used this term to refer to the state of readiness for action (somatic or motor) of a given organism. An action tendency is said to be activated when it benefits from an energy concentration to reach the desired final state; in other words, the action tendency can be turned into a concrete behaviour or plan. Conversely, an action tendency is said to be inhibited when it lacks energy concentration. The activation of the physical behaviour resulting from an action tendency depends on several factors, most of which are social rules (Frijda, 1986). The action of insulting, for instance, is a behaviour resulting from a state of anger. In some social groups, insulting is not acceptable, so the tendency to insult is inhibited. The way the individual adapts to the situation, taking into account social norms and its own capacity to deal with affects, is the coping strategy.

The theory of action tendency highlights the strong connection between emotions and actions and provides hints on how to integrate this connection into the affective component of human-agent interaction systems such as conversational agents. In our work, we propose to enhance a simple task-oriented conversational agent with a computational model of action tendency so as to produce more spontaneous and relevant reactions. The action tendencies are expressed through speech acts since we are in the context of dialogue, but our model can be used for more general purposes.

2.2 Affective Models in Literature

A great number of affective models for conversational agents have been proposed during the last two decades. Most of them are founded on one or more psychological theories so as to produce realistic behaviours for virtual agents. For example, the ALMA model for controlling the non-verbal behaviour of a virtual agent (Gebhard, 2005) is based on the PAD theory (Mehrabian, 1996). It computes the emotion of a virtual agent at each time step of the simulation based on external stimuli. In a different context, (Adam et al., 2009) propose a modal logic implementation of the OCC theory (Ortony et al., 1990) to compute emotions, reason about them and build believable agents. However, these models of emotions do not cover the behavioural component: they do not explain how the agent should behave in reaction to the computed affects.

A few cognitive architectures have been proposed that implement both the appraisal component and the behavioural component. The best known are EMA (Gratch and Marsella, 2004), based on Lazarus' theory (Lazarus, 1991), and FAtiMA (Dias and Paiva, 2005), based on the OCC theory. Both models rely on a specific list of variables to implement the appraisal and experience components, and both support the description of coping behaviours. However, in these models, emotion labelling (which corresponds to the experience component of emotions) separates the appraisal process from behaviour selection. By contrast, according to (Frijda, 1986), these processes cannot be separated: the behaviour is part of the experience itself.

Similarly, (Dastani and Lorini, 2012) proposed a formal model for both appraisal and coping. Action selection is defined through inference rules in the model itself: emotions directly affect the beliefs, desires and intentions of the agent. For instance, when an agent faces a fearful event, it reduces its intention toward the action that produced this situation. However, this work has three limitations. First, it relies on emotion intensities, which are difficult to compute and justify from a psychological point of view, as explained by (Campano et al., 2013). Second, it only considers a limited subset of the OCC emotion categories for appraisal, and the coping process only considers two negative emotions. Last, it does not consider the cause of the emotion, which is very important in social interaction since it impacts the action tendency, as shown by (Roseman, 2011).

Other approaches consider the social dimension of the interaction. For example, the PsychSim cognitive architecture (Pynadath and Marsella, 2005) proposes an action selection mechanism based on a formal decision-theoretic approach: the agent selects actions based on beliefs and goals and can adopt social attitudes using reverse appraisal and theory of mind. However, the architecture does not consider affects in the action selection process. Our goal in the TEATIME model is to define how affects intervene in action selection.

The model proposed by (Courgeon et al., 2009) in the MARC architecture provides a direct connection between appraisal and action tendencies. This model is based on Scherer's theory (Scherer, 2005) and connects the appraisal variables to action units for the facial animation of the virtual character. It focuses only on non-verbal behaviour and does not consider the decision-making process (action selection or dialogue). For this reason, it is complementary to our approach: we focus on building a connection between appraisal and action tendencies for dialogue act selection in a conversational agent.

The formal model proposed by Steunebrink and Dastani (Steunebrink et al., 2009) also aims at taking action tendencies into consideration in the decision-making process. The appraisal process is based on the OCC theory of emotions and the proposed coping mechanism is inspired by Frijda's theory of action tendencies. However, this model is not compliant with the theories of emotions in social science, because it makes a clear separation between the appraisal process and the coping processes. Action tendencies are simply coping strategies for social emotions (such as pity, resentment and gratitude) or re-evaluations of the situation that revise the agent's desires.

The problem with such an approach is that, when it comes to dialogue, actions cannot be separated from emotion expression. For instance, insulting the interlocutor is a dialogue act that conveys anger. For this reason, one cannot separate emotion appraisal from action performance. Action tendencies are the theoretical bricks that connect the two and, to our knowledge, no complete computational model of action tendencies has been proposed in the literature.

Our goal in this paper is to propose a model of emotions in which action tendencies (i.e. the motivational component in Frijda's theory) directly connect the evaluation process with the action selection mechanism.

3 THE TEATIME LOGICS

The TEATIME model aims at offering a formal representation, using modal logic, of the affective process from appraisal to action selection in the context of dialogical interaction. TEATIME stands for Talking Experts with an Action TendencIes MEchanism. It is part of a more general virtual agent architecture that combines knowledge representation, dialogue management and agent animation. This architecture is used to build assistant agents in different industrial applications such as customer relationship management and online help.

The interaction relies on a strict turn-taking ap-

proach (as opposed to continuous interaction). The

user utterances are interpreted as atomic stimuli for

the decision process. The agent evaluates the stimuli

with respect to a set of appraisal variables, depend-

ing on its beliefs, desires, ideals and capabilities. This

may generate an action tendency, following the the-

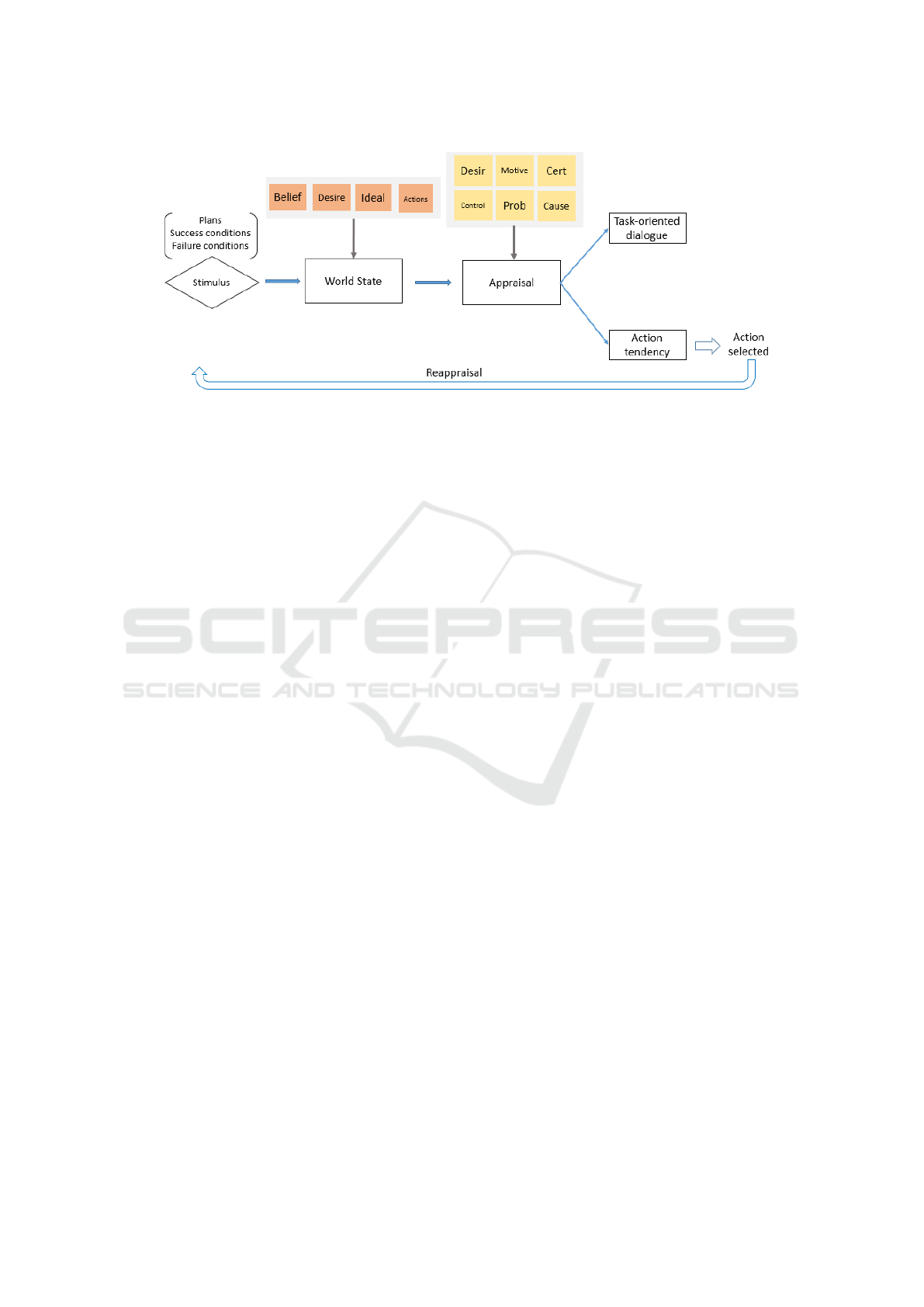

ory proposed by (Roseman, 2011). This model is il-

lustrated on Figure 1. The appraisal process evaluates

the stimulus’s impact on the agent’s goals. If it has a

direct or indirect impact, the action tendency mecha-

nism is used to select the answer. Otherwise, the agent

follows classical task-based interaction rules (that will

not be presented in this paper).

This section presents the syntax and semantics of

the TEATIME logical model.

3.1 Syntax

3.1.1 Agents, Facts and Actions

Let $AGT = \{i, j, \dots\}$ be a finite set of agents, $F = \{\varphi_1, \varphi_2, \dots\}$ a finite set of atomic facts and $ACT = \{a, b, \dots\}$ a finite set of physical actions.

For example, in the context of interaction with a tourist assistant, $AGT = \{agt, user\}$, and we can consider the facts $chvr$ (City Has Vegan Restaurant) and $vrb$ (Vegan Restaurant Booked) and the action $bvr$ (Book Vegan Restaurant): $F = \{chvr, vrb, \dots\}$ and $ACT = \{bvr, \dots\}$.

For a given agent $i \in AGT$, we denote by $ACT_i \subseteq ACT$ the set of actions that $i$ can perform.

We also define two predicates, Ask and Inform, as follows:

$$\forall i, j \in AGT,\ \forall a \in ACT,\ \forall \varphi \in F:\quad \mathit{Ask}_{i,j}\,a \in F,\quad \mathit{Inform}_{i,j}\,\varphi \in F$$

$\mathit{Ask}_{i,j}\,a$ describes the fact that agent $i$ asks agent $j$ to do action $a$. $\mathit{Inform}_{i,j}\,\varphi$ denotes the fact that agent $i$ informs agent $j$ that $\varphi$ is true.
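As an illustration only, the vocabulary of the tourist-assistant example could be encoded in Python as follows. This is a sketch under our own assumptions, not the authors' implementation: facts are plain strings, `Ask` and `Inform` are hypothetical frozen dataclasses that are added to the fact set (mirroring $\mathit{Ask}_{i,j}\,a \in F$), and the per-agent split `ACT_BY_AGENT`, standing for $ACT_i$, is invented for the example.

```python
from dataclasses import dataclass

# Hypothetical encoding of the tourist-assistant example (not the authors' code).
AGT = {"agt", "user"}                            # agents
F = {"chvr", "vrb"}                              # atomic facts
ACT = {"bvr"}                                    # physical actions
ACT_BY_AGENT = {"agt": {"bvr"}, "user": set()}   # ACT_i, assumed split


@dataclass(frozen=True)
class Ask:
    """Ask_{i,j} a: agent `speaker` asks agent `hearer` to perform `action`."""
    speaker: str
    hearer: str
    action: str


@dataclass(frozen=True)
class Inform:
    """Inform_{i,j} phi: agent `speaker` tells agent `hearer` that `fact` is true."""
    speaker: str
    hearer: str
    fact: str


def add_speech_act(facts, act):
    """Return a new fact set extended with a well-typed speech act."""
    if act.speaker not in AGT or act.hearer not in AGT:
        raise ValueError(f"unknown agent in {act!r}")
    if isinstance(act, Ask) and act.action not in ACT:
        raise ValueError(f"unknown action in {act!r}")
    if isinstance(act, Inform) and act.fact not in F:
        raise ValueError(f"unknown fact in {act!r}")
    return facts | {act}


# The user asks the agent to book a vegan restaurant.
facts = add_speech_act(F, Ask("user", "agt", "bvr"))
```

Frozen dataclasses are hashable and compare by value, which is what lets speech acts sit in the same set as the atomic facts.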

3.1.2 Beliefs, Desires and Ideals

Following a BDI-based approach, we define a set of modalities for expressing propositions about facts. For a given agent $i \in AGT$ and a given fact $\varphi \in F$, $\mathit{Bel}_i\,\varphi$ describes a belief of agent $i$ and should be read "agent $i$ believes that $\varphi$ is true"; $\mathit{Des}_i\,\varphi$ describes an appetitive desire that the agent aims at reaching and should be read "agent $i$ desires $\varphi$". $\mathit{Des}_i\,\neg\varphi$ describes an aversive desire (i.e. a situation that the agent aims at avoiding). $\mathit{Ideal}_i\,\neg\varphi$ should be read "agent $i$ has $\neg\varphi$ as an ideal" or simply "$\varphi$ does not conform with the ideals of agent $i$".

3.1.3 Action Execution and Conditions

We also define classical predicates for action execution. For any agent $i \in AGT$ and any action $a \in ACT_i$, $\mathit{Done}(a, i)$ represents the fact that agent $i$ has performed action $a$, and $\mathit{Exec}(a, i)$ represents the fact that agent $i$ can perform action $a$.

To compute the impact of a stimulus $\varphi$, it is necessary to know the list of actions that made the fact true. We denote by $PL_\varphi \subset ACT \times AGT$ the set of actions that explain the fact $\varphi$ (the computation of this set is not part of this paper). For every pair $(a, i) \in PL_\varphi$, the following formula holds:

$$\models \varphi \Rightarrow \mathit{Done}(a, i)$$

In the above example, the pair $(bvr, agt)$ explains the fact $vrb$: $vrb \Rightarrow \mathit{Done}(bvr, agt)$.

Also note that facts can depend on each other. For instance, in the above example, $vrb$ depends on the fact $chvr$. This is represented in the logic by the formula $vrb \Rightarrow chvr$. Likewise, if the vegan restaurant is closed ($vrc$), it cannot be booked, which is represented by $vrc \Rightarrow \neg vrb$. Such inferences are used in the appraisal process.
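The explaining sets and the dependencies between facts can be represented with very simple structures. In this minimal Python sketch (our assumption, not the TEATIME engine), literals are strings with a `~` prefix for negation, $PL_\varphi$ is given as a precomputed dictionary since its computation is outside the scope of the paper, and the hypothetical helper `consequences` only chains rules forward: it does not implement full logical entailment (no contraposition, for example).

```python
# Explaining actions PL_phi: fact -> set of (action, agent) pairs (assumed precomputed).
PL = {"vrb": {("bvr", "agt")}}

# Dependencies between facts as implication rules (antecedent, consequent).
RULES = {
    ("vrb", "chvr"),   # vrb => chvr: a booking requires a vegan restaurant in the city
    ("vrc", "~vrb"),   # vrc => ~vrb: a closed restaurant cannot be booked
}


def consequences(literal, rules):
    """Return every literal reachable from `literal` by chaining rules forward."""
    seen, frontier = {literal}, [literal]
    while frontier:
        current = frontier.pop()
        for antecedent, consequent in rules:
            if antecedent == current and consequent not in seen:
                seen.add(consequent)
                frontier.append(consequent)
    return seen


def entailed_done(fact, pl):
    """Done(a, i) literals entailed by `fact`, one per pair (a, i) in PL_fact."""
    return {f"Done({a}, {i})" for a, i in pl.get(fact, set())}
```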

3.1.4 Action Tendencies and Emotional Strategies

Action tendency is defined by (Frijda, 1986) as a state of readiness to perform one or more actions aimed at establishing, modifying or maintaining a particular relationship between the appraising person and the stimulus. As explained by (Roseman, 2011), action tendencies are part of emotional strategies, which correspond to high-level goals that a person wants to achieve regarding a stimulus: these emotional strategies are the primary result of the appraisal, while action tendencies participate in the resolution of the strategy in a specific context.

For example, imagine that Bob insults Anna. This stimulus will be appraised into a general emotional strategy to Move Against the party responsible for the situation (here Bob). This concept of "Move Against Bob" is an emotional strategy that Anna is tempted to reach by following an action tendency. Several speech acts can correspond to this strategy (e.g. Anna might insult Bob back, yell at him or criticize him).

Each emotional strategy, coupled with the cause of the event, corresponds to a set of actions: "Move Against Someone" could be expressed by yelling, fighting, hurting, etc. "Move Against Self" could be expressed by withdrawing, punishing oneself, submitting, etc. "Move Away from Circumstances" could be shown by leaving the conversation. Some examples were presented in (Roseman, 2011), and a more complete survey of action tendencies in the literature can be found in (Bossuyt, 2012).

Figure 1: Overview of the TEATIME architecture.

The TEATIME model implements these two aspects of the motivational component of emotions. We consider the 8 emotional strategies proposed by Roseman:

• Prepare To Move Toward It (PMT)

• Move Toward It (MT)

• Stop Moving Away From It (SMA)

• Prepare To Move Away From It (PMA)

• Move Against It (MA)

• Move It Away (MIA)

• Stop Moving Toward It (SMT)

• Move Away From It (MAF)

Each strategy answers a different kind of appraisal. However, at a given time of the interaction, these strategies are not mutually exclusive: several emotional responses can be generated for a single situation. This requires the agent to combine the strategies.

Also note that these strategies are independent of the application domain. The target of the emotional strategy, referred to as "It" in the definitions, is important: it directly impacts the list of possible action tendencies. For example, Move Toward the interlocutor can be done by approaching her or connecting with her, whereas Move Toward oneself consists in exhibiting pride. Following Roseman's proposal, we consider three possible targets in our model: $self$ represents the appraising agent, $other$ the interlocutor in the dialogue and $circ$ any external cause. Action tendencies refer to sets of possible actions in answer to a given emotional strategy and target.

More formally, let $ES$ be the set of 8 emotional strategies and $IT = \{self, other, circ\}$ the set of possible targets. For any agent $i \in AGT$, action $a \in ACT_i$, emotional strategy $e \in ES$ and target $t \in IT$, the proposition $\mathit{Tend}(i, a, e, t)$ represents the action tendency to perform action $a$ to achieve strategy $e$ toward target $t$.

In the example above, the tendency to yell could

be activated in Anna toward Bob:

|= Tend(anna, yell, MA, other)
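The Tend relation can be stored as a set of tuples and queried by agent, strategy and target. In the Python sketch below, the enum `ES` lists Roseman's eight strategies given above, while the `TEND` facts and the helper `tendencies` are hypothetical, with action names borrowed from the examples in this section.

```python
from enum import Enum


class ES(Enum):
    """Roseman's eight emotional strategies."""
    PMT = "Prepare To Move Toward It"
    MT = "Move Toward It"
    SMA = "Stop Moving Away From It"
    PMA = "Prepare To Move Away From It"
    MA = "Move Against It"
    MIA = "Move It Away"
    SMT = "Stop Moving Toward It"
    MAF = "Move Away From It"


IT = {"self", "other", "circ"}  # possible targets

# Tend(i, a, e, t) facts; illustrative action names taken from the examples above.
TEND = {
    ("anna", "yell", ES.MA, "other"),
    ("anna", "insult", ES.MA, "other"),
    ("anna", "criticize", ES.MA, "other"),
    ("anna", "withdraw", ES.MA, "self"),
    ("anna", "leave_conversation", ES.MAF, "circ"),
}


def tendencies(agent, strategy, target, tend=TEND):
    """Return the actions a such that Tend(agent, a, strategy, target) holds."""
    if target not in IT:
        raise ValueError(f"unknown target: {target}")
    return {a for (i, a, e, t) in tend if (i, e, t) == (agent, strategy, target)}
```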

The first role of the TEATIME component in the conversational agent is to select the relevant emotional strategy based on appraisal variables, which are presented in the following section. The second role is to select actions based on the action tendencies and the agent's ideals; this is presented in Section 4.

3.1.5 Appraisal Variables

There is no consensus in appraisal theories about the number and meaning of the appraisal variables needed to generate an emotional response. In our model, we chose to use Roseman's list of appraisal variables. We define 6 appraisal variables: causality, desirability, controllability, motive, nature of the problem and certainty.

Formally, we define 6 predicates. Let $i \in AGT$, $\varphi \in F$, $t \in IT$, $v \in \{-, 0, +\}$ and $nat \in \{intri, instru\}$:

$\mathit{Cause}(i, \varphi, t)$ represents the fact that agent $i$ considers that $t$ (self, other or circ) is the cause of stimulus $\varphi$.

$\mathit{Desir}(i, \varphi, v)$ states that agent $i$ considers $\varphi$ desirable (+) or undesirable (−), or is indifferent to $\varphi$ (0).


$\mathit{Control}(i, \varphi)$ represents the fact that agent $i$ has control over an undesirable stimulus $\varphi$, i.e. it is capable of executing an action toward the cause of the stimulus.

$\mathit{Motive}(i, \varphi, v)$ represents the nature of the desire impacted by the stimulus (appetitive or aversive).

$\mathit{Cert}(i, \varphi)$ expresses the fact that the stimulus $\varphi$ is certain.

$\mathit{Prob}(i, \varphi, nat)$ expresses the nature of the problem for an undesirable stimulus (intrinsic or instrumental).

Section 4 explains how these predicates are computed

at runtime, depending on beliefs and desires.

3.1.6 TEATIME Valid Formulae

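A proposition $p$ in the TEATIME logic is defined using the following Backus-Naur form, in which $\varphi \in F$, $i \in AGT$, $a \in ACT^*$, $e \in ES$, $t \in IT$, $v \in \{-, 0, +\}$ and $nat \in \{intri, instru\}$:

$$prop ::= \varphi \mid \mathit{Exec}(a, i) \mid \mathit{Done}(a, i) \mid \mathit{Tend}(i, a, e, t) \mid \mathit{Cause}(i, \varphi, t) \mid \mathit{Desir}(i, \varphi, v) \mid \mathit{Control}(i, \varphi) \mid \mathit{Motive}(i, \varphi, v) \mid \mathit{Prob}(i, \varphi, nat) \mid \mathit{Cert}(i, \varphi)$$

and a formula $f$ is defined as:

$$f ::= prop \mid \neg f \mid f \wedge f' \mid \mathit{Bel}_i\,\varphi \mid \mathit{Des}_i\,\neg\varphi \mid \mathit{Des}_i\,\varphi \mid \mathit{Ideal}_i\,\neg\varphi$$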

3.2 Semantics

The TEATIME semantics is based on possible worlds (Kripke semantics). Let us consider the universe $\Omega = \{AGT, F, ACT^*, ES\}$ and $\mathcal{M}_\Omega = \{\Omega, W, V, B, D, I\}$ with:

• $W$: the non-empty set of possible worlds,

• $V: W \to 2^{Prop}$: the valuation function for all propositions (facts and predicates Exec, Done, Tend, Cause, Desir, Control, Motive, Prob and Cert),

• $B, D, I$: accessibility functions from $AGT \times W$ to $2^W$, which associate with each agent $i$ and world $w \in W$ the set of possible worlds accessible through the agent's beliefs $\mathit{Bel}_i(w)$, desires $\mathit{Des}_i(w)$ and ideals $\mathit{Ideal}_i(w)$, respectively.

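For a formula $f$, a model $M \in \mathcal{M}$ and a world $w \in W$, $M, w \models f$ reads "$f$ is true at $(M, w)$". The rules defining the truth conditions of formulas are:

• $M, w \models p$ iff $p \in V(w)$

• $M, w \models \neg f$ iff $M, w \not\models f$

• $M, w \models f \wedge f'$ iff $M, w \models f$ and $M, w \models f'$

• $M, w \models \mathit{Bel}_i\,\varphi$ iff $\forall v \in B(i, w),\ M, v \models \varphi$

• $M, w \models \mathit{Des}_i\,\varphi$ iff $\forall v \in D(i, w),\ M, v \models \varphi$

• $M, w \models \mathit{Ideal}_i\,\neg\varphi$ iff $\forall v \in I(i, w),\ M, v \models \neg\varphi$

with $p$ a proposition, $i$ an agent and $f$ a formula.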
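These truth conditions can be turned into a small model checker over a finite model. The Python sketch below is ours: formulas are encoded as nested tuples (an assumed encoding), the accessibility functions $B$, $D$ and $I$ are stored in one dictionary keyed by modality, agent and world, and for simplicity the modal operators accept any sub-formula, whereas the grammar of Section 3.1.6 only applies them to facts and negated facts.

```python
from dataclasses import dataclass, field


@dataclass
class Model:
    """A finite Kripke model: valuation V and accessibility functions B, D, I."""
    valuation: dict                             # world -> set of true propositions
    access: dict = field(default_factory=dict)  # (modality, agent, world) -> set of worlds


def sat(m, w, f):
    """Decide M, w |= f for formulas encoded as nested tuples."""
    kind = f[0]
    if kind == "prop":                    # M, w |= p iff p in V(w)
        return f[1] in m.valuation[w]
    if kind == "not":
        return not sat(m, w, f[1])
    if kind == "and":
        return sat(m, w, f[1]) and sat(m, w, f[2])
    if kind in ("bel", "des", "ideal"):   # true in every accessible world
        _, agent, sub = f
        return all(sat(m, v, sub) for v in m.access.get((kind, agent, w), set()))
    raise ValueError(f"unknown formula: {f!r}")


M = Model(
    valuation={"w0": set(), "w1": {"chvr"}, "w2": {"chvr", "vrb"}},
    access={
        ("bel", "agt", "w0"): {"w1", "w2"},
        ("des", "agt", "w0"): {"w2"},
        ("ideal", "agt", "w0"): {"w1", "w2"},
    },
)
```

As usual in Kripke semantics, a modality is vacuously true when no world is accessible: in this example both $\mathit{Des}_{agt}\,vrb$ and $\mathit{Des}_{agt}\,\neg vrb$ hold at `w1`. The consistency rule $\mathit{Des}_i\,\varphi \Rightarrow \neg\mathit{Des}_i\,\neg\varphi$ therefore only follows if every $D(i, w)$ is non-empty, which this sketch does not enforce.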

In addition, a set of inference rules has to be defined in the TEATIME engine. For instance, a fact cannot be simultaneously an appetitive and an aversive desire:

$$\models \mathit{Des}_i\,\varphi \Rightarrow \neg\mathit{Des}_i\,\neg\varphi$$

The next section only presents the rules that define the emotional process.

4 DYNAMIC APPRAISAL AND ACTION SELECTION

The execution of the TEATIME model is composed of an appraisal phase followed by an emotional response phase, as explained in the previous section. The first phase selects the emotional strategy based on appraisal variables. The second phase selects the agent's response to the stimulus.

4.1 Computation of Appraisal Variables

This subsection presents the computation of the appraisal variables. It explains how we have implemented the principles from (Roseman, 2011) using modal logic.

There is no consensus in the literature about the number and definition of appraisal variables. In our model, we had to simplify some definitions of appraisal variables in order to build a computational model that is easy to understand and implement.

We consider only two participants in the interaction: $i \in AGT$ is an agent and $i' \in AGT$ its interlocutor. In the following, $M \in \mathcal{M}$ is the current model and $\varphi \in F$ is the fact to be appraised.

4.1.1 Causality

Causality is a complex topic, and understanding the causes and consequences of actions and facts requires complex models (Pearl, 2009). We chose to simplify the definition of causality in the TEATIME model in order to facilitate the implementation. In our model, indirect contributions to a fact are not taken into account and all direct contributions to an action achievement have the same value. We thus assume that all actions are equally important and voluntarily achieved.


The causality of $\varphi$ is evaluated according to the set of actions $PL_\varphi$ that explains this fact and the agents that performed these actions. We want $\mathit{Cause}(i, \varphi, self)$ to be true when agent $i$ has done at least one action in $PL_\varphi$. Similarly, we want $\mathit{Cause}(i, \varphi, other)$ to be true when at least one action is done by the agent's interlocutor. If neither of them performed any of the actions in $PL_\varphi$, or if there is no such action ($\varphi$ is true a priori), the agent must consider that the fact is caused by "the circumstances", and $\mathit{Cause}(i, \varphi, circ)$ shall be true.

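Concretely, we have the following rules in the TEATIME inference engine:

• $M \models \mathit{Cause}(i, \varphi, circ)$ iff $\forall (a, j) \in PL_\varphi$ such that $M \models \mathit{Done}(a, j)$: $j \neq i$ and $j \neq i'$

• $M \models \mathit{Cause}(i, \varphi, self)$ iff $\exists (a, i) \in PL_\varphi$ such that $M \models \mathit{Done}(a, i)$

• $M \models \mathit{Cause}(i, \varphi, other)$ iff $\exists (a, i') \in PL_\varphi$ such that $M \models \mathit{Done}(a, i')$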

Note that a single stimulus can have multiple causes. In this case, up to three action tendencies can be generated.
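The three causality rules translate into a short function. In this Python sketch, `pl_phi` stands for $PL_\varphi$ and `done` for the pairs $(a, j)$ such that $M \models \mathit{Done}(a, j)$; both are assumed to be given, and the function name `causes` is ours. It returns a set of targets because a stimulus can have several causes.

```python
def causes(pl_phi, done, agent, interlocutor):
    """Return the targets t such that Cause(agent, phi, t) holds.

    pl_phi: set of (action, performer) pairs explaining phi (PL_phi).
    done:   set of (action, performer) pairs for which Done(a, j) holds in M.
    """
    performed = {(a, j) for (a, j) in pl_phi if (a, j) in done}
    targets = set()
    if any(j == agent for _, j in performed):
        targets.add("self")
    if any(j == interlocutor for _, j in performed):
        targets.add("other")
    # Vacuously true when PL_phi is empty or none of its actions was performed.
    if all(j not in (agent, interlocutor) for _, j in performed):
        targets.add("circ")
    return targets
```

Like the formal rule, the `circ` test is vacuously satisfied when no action of $PL_\varphi$ was performed, which covers facts that are true a priori.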

4.1.2 Desirability

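In the TEATIME model, the stimulus $\varphi$ is desirable in three situations: if it is an appetitive desire of the agent, if it contributes to an appetitive desire of the agent, or if it prevents an aversive desire of the agent.

Formally, $M \models \mathit{Desir}(i, \varphi, +)$ iff

• $M \models \mathit{Des}_i\,\varphi$, or

• $\exists \psi,\ M \models \mathit{Des}_i\,\psi \wedge (\psi \Rightarrow \varphi)$, or

• $\exists \psi,\ M \models \mathit{Des}_i\,\neg\psi \wedge (\varphi \Rightarrow \neg\psi)$.

Similarly, $M \models \mathit{Desir}(i, \varphi, -)$ iff $M \models \mathit{Des}_i\,\neg\varphi$, or $\exists \psi,\ M \models \mathit{Des}_i\,\neg\psi \wedge (\psi \Rightarrow \varphi)$, or $\exists \psi,\ M \models \mathit{Des}_i\,\psi \wedge (\varphi \Rightarrow \neg\psi)$: $\varphi$ is undesirable if it is related to an aversive desire or if it prevents an appetitive desire.

In all other situations, we cannot determine the desirability of $\varphi$: $M \models \mathit{Desir}(i, \varphi, 0)$.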
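A possible reading of these conditions in Python, under our own assumptions: desires are given as two sets (appetitive facts $\varphi$ with $\mathit{Des}_i\,\varphi$ and aversive facts $\varphi$ with $\mathit{Des}_i\,\neg\varphi$), literals use a `~` prefix for negation, and $\psi \Rightarrow \varphi$ is approximated by forward chaining over explicit implication rules (the hypothetical helper `implies`). When both the positive and the negative conditions hold, the model does not say which value wins; this sketch arbitrarily returns `+`.

```python
def implies(rules, premise, conclusion):
    """True if `conclusion` is reachable from `premise` by chaining the rules."""
    seen, frontier = {premise}, [premise]
    while frontier:
        current = frontier.pop()
        if current == conclusion:
            return True
        for antecedent, consequent in rules:
            if antecedent == current and consequent not in seen:
                seen.add(consequent)
                frontier.append(consequent)
    return False


def desirability(phi, appetitive, aversive, rules):
    """Return '+', '-' or '0' for Desir(i, phi, v)."""
    if (phi in appetitive
            or any(implies(rules, psi, phi) for psi in appetitive)
            or any(implies(rules, phi, "~" + psi) for psi in aversive)):
        return "+"
    if (phi in aversive
            or any(implies(rules, psi, phi) for psi in aversive)
            or any(implies(rules, phi, "~" + psi) for psi in appetitive)):
        return "-"
    return "0"


RULES = {("vrb", "chvr"), ("vrc", "~vrb")}   # vrb => chvr, vrc => ~vrb
```

With the appetitive desire $vrb$, this yields $\mathit{Desir}(i, chvr, +)$ (the fact contributes to the desire) and $\mathit{Desir}(i, vrc, -)$ (the fact prevents it).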

4.1.3 Motive

The motivational variable (represented by the predicate Motive in our model) is strongly related to desirability, as explained by (Roseman, 2011). Indeed, it specifies the nature of the desire that the stimulus $\varphi$ has impacted: it is positive if the stimulus impacted an appetitive desire and negative if it impacted an aversive desire. Formally, $M \models \mathit{Motive}(i, \varphi, +)$ iff

• $M \models \neg\mathit{Desir}(i, \varphi, 0)$, and

• $M \models \mathit{Des}_i\,\varphi$, or $\exists \psi,\ M \models \mathit{Des}_i\,\psi \wedge (\psi \Rightarrow \varphi)$, or $\exists \psi,\ M \models \mathit{Des}_i\,\psi \wedge (\varphi \Rightarrow \neg\psi)$.

For example, if the agent has the appetitive desire to book a vegan restaurant but receives the stimulus "the restaurant is closed", it will appraise $\mathit{Desir}(i, vrc, -)$ but $\mathit{Motive}(i, vrc, +)$.

Similarly, $\mathit{Motive}(i, \varphi, -)$ is true when the fact (desirable or undesirable) is related to aversive desires. Note that Motive has no value if the desirability of the stimulus is evaluated to 0.
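The motive can be computed with the same ingredients. In this hypothetical Python sketch, a fact is "related" to a set of desires when it is one of them, is implied by one of them, or implies the negation of one of them, which is the disjunction in the definition above (with $\Rightarrow$ again approximated by forward chaining). Any fact related to a desire in this way already has a non-zero desirability, so the condition $\neg\mathit{Desir}(i, \varphi, 0)$ is implied rather than re-checked. Giving precedence to the appetitive case when a fact is related to both kinds of desire is our own choice.

```python
def implies(rules, premise, conclusion):
    """True if `conclusion` is reachable from `premise` by chaining the rules."""
    seen, frontier = {premise}, [premise]
    while frontier:
        current = frontier.pop()
        if current == conclusion:
            return True
        for antecedent, consequent in rules:
            if antecedent == current and consequent not in seen:
                seen.add(consequent)
                frontier.append(consequent)
    return False


def related(phi, goals, rules):
    """phi is a goal, follows from a goal, or prevents a goal."""
    return any(implies(rules, g, phi) or implies(rules, phi, "~" + g) for g in goals)


def motive(phi, appetitive, aversive, rules):
    """Return '+', '-' or None (no value when desirability is 0)."""
    if related(phi, appetitive, rules):
        return "+"
    if related(phi, aversive, rules):
        return "-"
    return None


RULES = {("vrb", "chvr"), ("vrc", "~vrb")}
```

On the vegan-restaurant example, `motive("vrc", {"vrb"}, set(), RULES)` gives `"+"`, matching $\mathit{Motive}(i, vrc, +)$ above.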

4.1.4 Controllability

This cognitive evaluation variable defines whether the agent is capable of acting on or adapting to a fact $\varphi$. It is relevant only when the stimulus is undesirable. Controllability is positive if the agent is capable of performing an action towards the cause of the stimulus. This reaction is necessarily the result of an action tendency and, according to (Roseman, 2011), it occurs only in two particular emotional strategies: move against (MA), which modifies the stimulus, and move it away (MIA), which alters the perception of the stimulus. If there exists an action doable by the agent that satisfies one of these strategies toward the cause, the controllability is positive; otherwise it is negative.

Formally, $M \models \mathit{Control}(i, \varphi)$ iff

• $M \models \mathit{Desir}(i, \varphi, -)$, and $\exists t, a$ such that:

• $M \models \mathit{Cause}(i, \varphi, t) \wedge \mathit{Exec}(a, i)$, and

• $M \models \mathit{Tend}(i, a, MA, t) \vee \mathit{Tend}(i, a, MIA, t)$.

$M \models \neg\mathit{Control}(i, \varphi)$ in all other situations (including when controllability is not a relevant question).
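A direct transcription of the controllability rule, assuming the desirability value, the set of cause targets, the executable actions ($\mathit{Exec}(a, i)$) and the Tend facts have already been computed. The function name and the example data are hypothetical.

```python
def controllable(agent, desir, cause_targets, executable, tend):
    """Control(i, phi): phi is undesirable and some executable action has an
    MA or MIA tendency toward one of the causes of phi."""
    if desir != "-":
        return False  # controllability is only relevant for undesirable stimuli
    return any(
        (agent, a, e, t) in tend
        for t in cause_targets
        for a in executable
        for e in ("MA", "MIA")
    )


# Illustrative Tend facts: (agent, action, strategy, target).
TEND = {("agt", "criticize", "MA", "other"), ("agt", "thank", "MT", "other")}
```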

4.1.5 Certainty

In our model, the certainty of the stimulus is simply the coherence of this stimulus with the agent's beliefs. Formally, $\mathit{Cert}(i, \varphi) \equiv_{def} \mathit{Bel}_i(\varphi)$.

4.1.6 Problem Nature

The nature of the problem is only relevant when the stimulus is undesirable. The problem is instrumental if the stimulus participates in the failure or success of a desire. Conversely, it is intrinsic if the stimulus itself is the object of a desire.

Formally:

• $M \models \mathit{Prob}(i, \varphi, intri)$ iff $M \models \mathit{Desir}(i, \varphi, -) \wedge \mathit{Des}_i\,\neg\varphi$

• $M \models \mathit{Prob}(i, \varphi, instru)$ iff $M \models \mathit{Desir}(i, \varphi, -) \wedge \neg\mathit{Des}_i\,\varphi \wedge \neg\mathit{Des}_i\,\neg\varphi$
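Certainty and the nature of the problem reduce to membership tests once beliefs and desires are known. This Python sketch assumes that beliefs and desires are given as sets of facts, as in the previous sketches; the function names are ours.

```python
def certain(phi, beliefs):
    """Cert(i, phi) is defined as Bel_i phi; `beliefs` is the set of facts believed by i."""
    return phi in beliefs


def problem_nature(phi, desir, appetitive, aversive):
    """Return 'intri', 'instru' or None (not relevant) for Prob(i, phi, nat)."""
    if desir != "-":
        return None                       # only relevant for undesirable stimuli
    if phi in aversive:                   # Des_i ~phi: the fact itself is avoided
        return "intri"
    if phi not in appetitive:             # ~Des_i phi and ~Des_i ~phi
        return "instru"
    return None
```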

4.2 Computation of the Emotional Strategy

Table 1 describes the computation of the emotional strategy depending on the different appraisal variables. A star means that any value is acceptable for the predicate. The last column gives the resulting emotional strategy. This table is extracted from (Roseman, 2011); the only difference is that we did not consider surprise and its associated appraisal variable "expectedness" in our model.

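Table 1: Computation of the Emotional Strategy.

| Desir | Caus | Control | Prob | Cert | Motive | ES |
|---|---|---|---|---|---|---|
| − | Self/Other | − | * | * | * | MAF |
| − | Self/Other | + | Intri | * | * | MIA |
| − | Self/Other | + | Instru | * | * | MA |
| − | Circ | − | * | − | * | PMA |
| − | Circ | − | * | + | − | MAF |
| − | Circ | − | * | + | + | SMT |
| − | Circ | + | Intri | * | * | MIA |
| − | Circ | + | Instru | * | * | MA |
| + | Self/Other | * | * | * | * | MT |
| + | Circ | * | * | − | * | PMT |
| + | Circ | * | * | + | − | SMA |
| + | Circ | * | * | + | + | MT |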
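Because Table 1 is a decision tree over the appraisal variables, it can be encoded as nested tests. The Java method below is a hypothetical sketch of such an encoding, not the TEATIME source: the class and enum names are assumptions, `appetitive == true` stands for Motive “+” (consistent with the “Sadness” example in Section 5, where an appetitive motive leads to SMT), and arguments marked with a star in a given row are simply ignored in the corresponding branch.

```java
// Hypothetical encoding of Table 1 (adapted from Roseman, 2011); names are assumptions.
final class EmotionalStrategyTable {

    enum Strategy { MAF, MIA, MA, PMA, SMT, MT, PMT, SMA }
    enum Cause { SELF_OTHER, CIRC }
    enum Problem { INTRINSIC, INSTRUMENTAL }

    /**
     * Returns the emotional strategy (last column of Table 1).
     * appetitive == true is read as Motive "+", false as Motive "-".
     * problem is only consulted for undesirable, controllable stimuli.
     */
    static Strategy select(boolean desirable, Cause cause, boolean controllable,
                           Problem problem, boolean certain, boolean appetitive) {
        if (!desirable) {
            if (controllable) {
                // Same rows for Self/Other and Circ: Intri -> MIA, Instru -> MA.
                return problem == Problem.INTRINSIC ? Strategy.MIA : Strategy.MA;
            }
            if (cause == Cause.SELF_OTHER) {
                return Strategy.MAF; // Prob, Cert and Motive are "*"
            }
            // Undesirable, uncontrollable, caused by circumstances.
            if (!certain) {
                return Strategy.PMA;
            }
            return appetitive ? Strategy.SMT : Strategy.MAF;
        }
        if (cause == Cause.SELF_OTHER) {
            return Strategy.MT; // every other column is "*"
        }
        // Desirable and caused by circumstances.
        if (!certain) {
            return Strategy.PMT;
        }
        return appetitive ? Strategy.MT : Strategy.SMA;
    }
}
```

For instance, the disappointment stimulus of Section 5 (undesirable, caused by self, uncontrollable) yields MAF whatever the values of Prob, Cert and Motive.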

4.3 Action Selection

Based on the emotional strategy, the TEATIME engine selects an action according to the possible action tendencies, as defined by the Tend predicate (see section 3.1.4). Several verbal and non-verbal actions can participate in the same emotional strategy: smiling, gazing avidly at someone and saying sweet words are different actions for showing love to someone. The selection of one action among others depends on the context.

In TEATIME, the context that determines the choice of action corresponds to the rules of social interaction, which are implemented as the agent’s “ideals”. Thus, when an action tendency is generated by the appraisal process, the system checks which actions belonging to that tendency are consistent with the social norms. If no action satisfies the ideals, the agent simply drops the tendency and the system falls back to the task-oriented interaction rules. If one or several actions conform to the ideals and can be directed toward the cause of the event (self, other or circumstances), the agent selects one of them and performs it.

Formally, let M be the current model of the world and let e and t be the emotional strategy and the target appraised by agent i. We compute the set $A^*$ of actions compatible with the ideals:

$$A^* = \{\, a \in \mathit{ACT}_i \mid M \models \mathit{Tend}(i,a,e,t) \wedge \big(\forall \varphi \in \mathcal{F},\ M \models (\mathit{Done}(a,i) \Rightarrow \varphi) \Rightarrow \neg\mathit{Ideal}_i\,\neg\varphi\big) \,\}$$

The agent selects one action in $A^*$ and performs it.

In the next dialogue turn, the appraisal process is expected to produce a different emotional strategy and target. If it does not, the agent does not select the same action again. This corresponds to the re-appraisal process described by Frijda (Frijda, 1986).
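The computation of $A^*$ and the rule that the same action is not selected twice can be combined in a small selector. The Java sketch below is illustrative only and rests on several assumptions: each action carries a single (strategy, target) pair for its Tend entry and an explicit set of consequences φ with Done(a, i) ⇒ φ; ideals are strings, with `"!x"` denoting ¬x; the agent picks the first compatible action in a fixed order; and the memory of tried actions is reset whenever the appraised strategy or target changes. The names `ActionSelector` and `Action` are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Optional;
import java.util.Set;

// Hypothetical sketch of action selection with re-appraisal; not the authors' code.
final class ActionSelector {

    // An action of ACT_i with its Tend(i, a, e, t) entry and the facts phi
    // such that Done(a, i) => phi.
    record Action(String name, String strategy, String target, Set<String> consequences) { }

    private final List<Action> repertoire; // ACT_i, in a fixed order
    private final Set<String> ideals;      // Ideal_i facts; "!x" denotes not x

    // Re-appraisal memory: actions already chosen for the current (e, t).
    private String lastStrategy;
    private String lastTarget;
    private final Set<String> alreadyTried = new HashSet<>();

    ActionSelector(List<Action> repertoire, Set<String> ideals) {
        this.repertoire = List.copyOf(repertoire);
        this.ideals = Set.copyOf(ideals);
    }

    private static String not(String fact) {
        return fact.startsWith("!") ? fact.substring(1) : "!" + fact;
    }

    // A*: actions tending toward (e, t) none of whose consequences violates an ideal.
    List<Action> compatibleActions(String e, String t) {
        List<Action> result = new ArrayList<>();
        for (Action a : repertoire) {
            boolean tends = a.strategy().equals(e) && a.target().equals(t);
            boolean respectsIdeals = a.consequences().stream()
                    .noneMatch(phi -> ideals.contains(not(phi)));
            if (tends && respectsIdeals) {
                result.add(a);
            }
        }
        return result;
    }

    /** Returns the selected action, or empty to fall back to task-oriented rules. */
    Optional<Action> select(String e, String t) {
        if (!e.equals(lastStrategy) || !t.equals(lastTarget)) {
            alreadyTried.clear(); // new appraisal result: forget previous choices
            lastStrategy = e;
            lastTarget = t;
        }
        for (Action a : compatibleActions(e, t)) {
            if (alreadyTried.add(a.name())) { // false if this action was already chosen
                return Optional.of(a);
            }
        }
        return Optional.empty();
    }
}
```

An empty result corresponds to the case where no action satisfies the ideals (or all compatible actions have already been tried), in which case the system falls back to the task-oriented interaction rules.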

5 IMPLEMENTATION EXAMPLE

The TEATIME formal model has been implemented in Java and integrated within a virtual agent and chatbot architecture for customer relationship services. To avoid the complexity issues that arise with modal logic inference engines (SAT for modal logics is NEXPTIME-complete), we implemented the TEATIME rules as Java methods that manipulate the stimuli. Facts and actions are represented by Java objects, and the appraisal variables are computed by methods. The selection of the emotional strategy is computed in linear time.

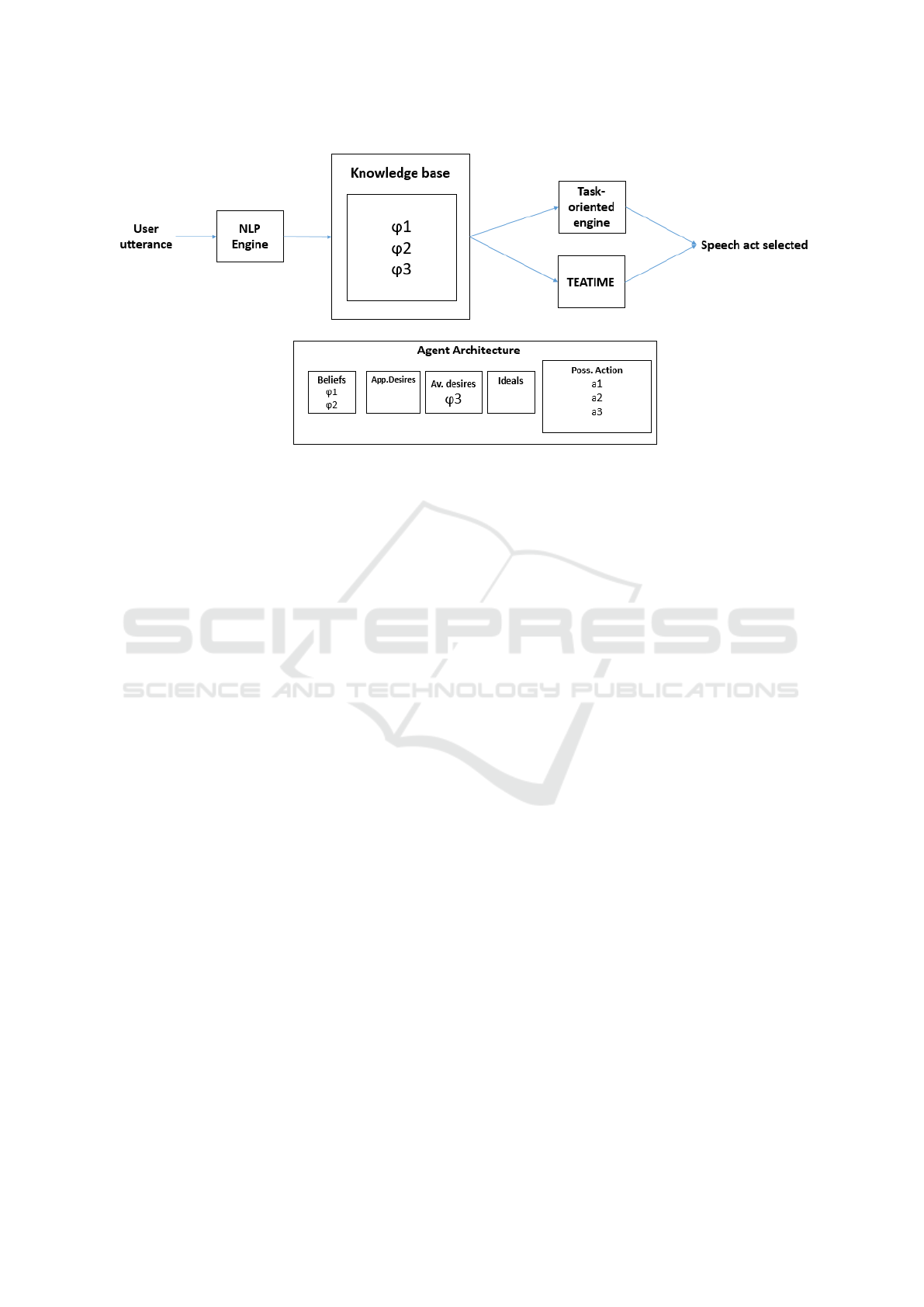

In this section, we illustrate how the TEATIME model works during a short interaction. We use an insurance application in which the user can ask questions about her contract and evaluate the help given by the agent. Note that our work does not address Natural Language Processing (NLP): we use a professional engine developed by the French company DAVI to identify which fact has been invoked by the user.

The (domain-specific) knowledge base of the agent is represented as an ontology that gathers the insurance expertise and the dependencies between facts and actions. The NLP engine identifies the general intention of the user’s utterance and turns it into a stimulus (i.e., a fact ϕ), as shown in Figure 3. It uses a set of pattern-matching rules coupled with the domain ontology. This determines which fact has been expressed by the user and which task-oriented answer should be chosen.

Each fact in the ontology is also associated with beliefs, desires and ideals of the agent, so that the TEATIME formal model can be applied to generate affective responses.

When the NLP engine fails to detect a domain-related intention in the ontology, the agent expresses an action tendency to “Stop moving toward” (SMT), which corresponds to the emotional label “Sadness”. Indeed, misunderstanding the user’s utterance is considered to be an undesirable, uncontrollable, certain fact with an appetitive motive.

Interactions need to be managed dynamically by rules related to a domain-specific knowledge base.

ICAART 2018 - 10th International Conference on Agents and Artificial Intelligence


Figure 2: Implementation of TEATIME in the insurance expert example.

Interaction Rules

For the example in Figure 2, we applied three rules to manage the interaction dynamics:

1. When agent i asks agent j to perform an action a, and a is executable by agent j, agent j performs a. This rule is applied to the first and second utterances of the dialogue in Figure 2, where the user asks the agent to give information. In our model, it is written as follows:

$$\mathit{Ask}_{i,j}\, a \wedge \mathit{Exec}(a,j) \stackrel{\mathit{def}}{\Rightarrow} \mathit{Do}(a,j)$$

2. When agent i informs agent j that ϕ is true, $\mathit{Bel}_j\,\phi$ becomes true. This rule is applied to the third utterance in Figure 2, where the user informs the agent of her disappointment. We write it as follows:

$$\mathit{Inform}_{i,j}\, \phi \stackrel{\mathit{def}}{\Rightarrow} \mathit{Bel}_j\, \phi$$

3. When agent i believes that ϕ is true and that ϕ is undesirable, certain, uncontrollable, related to an intrinsic problem and caused by self, this leads to the emotional strategy MAF. This rule is one of the appraisal rules defined in the TEATIME model. It is applied to the third user utterance.

$$\mathit{Bel}_i\,\phi \wedge \mathit{Desir}(i,\neg\phi,+) \wedge \mathit{Cert}(i,\phi) \wedge \neg\mathit{Control}(i,\phi) \wedge \mathit{Prob}(i,\phi,\mathit{intri}) \wedge \mathit{Caus}(\phi,\mathit{self}) \stackrel{\mathit{def}}{\Rightarrow} \mathit{Tend}(i,a,\mathit{MAF},\mathit{self})$$
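Rules 1 and 2 are forward rules over the agent's state, so they can be sketched as a handler for incoming speech acts. In the hypothetical Java sketch below (names such as `InteractionRules`, `SpeechAct` and `allow` are assumptions, not the authors' API), performing an action is simply recorded in a log; rule 3 is an appraisal rule and is left to the emotional-strategy computation of Section 4.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of interaction rules 1 and 2; not the authors' engine.
final class InteractionRules {

    // ASK carries an action name, INFORM carries a fact.
    enum Performative { ASK, INFORM }

    record SpeechAct(Performative type, String speaker, String hearer, String content) { }

    private final Map<String, Set<String>> executable = new HashMap<>(); // Exec(a, j)
    private final Map<String, Set<String>> beliefs = new HashMap<>();    // Bel_j
    private final List<String> performed = new ArrayList<>();            // Do(a, j), in order

    // Declares Exec(action, agent).
    void allow(String agent, String action) {
        executable.computeIfAbsent(agent, k -> new HashSet<>()).add(action);
    }

    Set<String> beliefsOf(String agent) {
        return Set.copyOf(beliefs.getOrDefault(agent, Set.of()));
    }

    List<String> performedActions() {
        return List.copyOf(performed);
    }

    void handle(SpeechAct act) {
        switch (act.type()) {
            // Rule 1: Ask_{i,j} a AND Exec(a, j) => Do(a, j)
            case ASK -> {
                if (executable.getOrDefault(act.hearer(), Set.of()).contains(act.content())) {
                    performed.add(act.hearer() + ":" + act.content());
                }
            }
            // Rule 2: Inform_{i,j} phi => Bel_j phi
            case INFORM -> beliefs.computeIfAbsent(act.hearer(), k -> new HashSet<>()).add(act.content());
        }
    }
}
```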

Facts, Actions and Agents

For the insurance example, we defined a knowledge base containing three actions ($a_1$ = give-information, $a_2$ = give-details, $a_3$ = correct-mistake), three facts ($\phi_1$ = information-given, $\phi_2$ = details-given, $\phi_3$ = customer-disappointment), two agents (user = human user, agent = virtual insurance expert) and relations between them ($(a_1, \mathit{agent}) \in \mathit{PL}_{\phi_1}$, $(a_2, \mathit{agent}) \in \mathit{PL}_{\phi_2}$).

Interaction Dynamics

Here we formally describe the conversation flow illustrated in Figure 2:

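1. $\mathit{Ask}_{\mathit{user},\mathit{agent}}\, a_1$

2. $\mathit{Ask}_{\mathit{user},\mathit{agent}}\, a_1 \wedge \mathit{Exec}(a_1,\mathit{agent}) \stackrel{\mathit{def}}{\Rightarrow} \mathit{Do}(a_1,\mathit{agent})$

3. $\mathit{Ask}_{\mathit{user},\mathit{agent}}\, a_2$

4. $\mathit{Ask}_{\mathit{user},\mathit{agent}}\, a_2 \wedge \mathit{Exec}(a_2,\mathit{agent}) \stackrel{\mathit{def}}{\Rightarrow} \mathit{Do}(a_2,\mathit{agent})$

5. $\mathit{Inform}_{\mathit{user},\mathit{agent}}\, \phi_3 \stackrel{\mathit{def}}{\Rightarrow} \mathit{Bel}_{\mathit{agent}}\, \phi_3$

6. $\mathit{Bel}_{\mathit{agent}}\, \phi_3 \wedge \mathit{Desir}(\mathit{agent},\neg\phi_3,+) \wedge \mathit{Cert}(\mathit{agent},\phi_3) \wedge \neg\mathit{Control}(\mathit{agent},\phi_3) \wedge \mathit{Prob}(\mathit{agent},\phi_3,\mathit{intri}) \wedge \mathit{Caus}(\phi_3,\mathit{self}) \stackrel{\mathit{def}}{\Rightarrow} \mathit{Tend}(\mathit{agent},a_3,\mathit{MAF},\mathit{self})$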

In the example illustrated in Figure 2, the appraisal output for the first two user utterances is null, as the corresponding facts have no link with the agent’s desires or ideals. The third utterance is undesirable for the agent, so the whole appraisal process is carried out: the agent appraises this fact as undesirable, certain and uncontrollable, related to an intrinsic problem and caused by “Self” (the agent). Following the rules presented in Section 4, this leads to the emotional strategy “Move Away From” directed at self (MAF) and to the action tendency to correct the mistake and express regret. In this example, only the verbal output is impacted by TEATIME (the gesture visible on the screen capture is not controlled by our model, for now).

Figure 3: Dialogue process in which TEATIME is implemented.

The strength of the TEATIME model is that it offers a general mechanism for selecting domain-specific actions sorted by emotional strategy. In the example, the designer needs to specify that

$$\models \mathit{Tend}(\mathit{agent},a_3,\mathit{MAF},\mathit{self})$$

where $a_3$ is the action that produces the last text box, and that

$$\models \mathit{Des}_{\mathit{agent}}\,\neg\phi_3$$

where $\phi_3$ corresponds to user disappointment (detected by the NLP engine).

6 CONCLUSION

We have presented a formal model of affects that integrates an appraisal process and an action selection mechanism based on the Action Tendency theory. Six appraisal variables are computed from the agent’s beliefs, desires, ideals and capacities. The result of this appraisal is an emotional strategy that leads to action tendencies for the conversational agent.

One original feature of the TEATIME model is that it provides a formal description of the motivational component of emotions, which plays an important role in action selection. The model also encodes social rules of interaction as “ideals”.

This model has been implemented, but its evaluation is still a work in progress. We are interested in evaluating the perception of emotions through the speech acts produced by the agent. We also want to evaluate the importance of social inhibition during an interaction: we expect that an agent without any regulation process would be perceived as non-credible. We are currently defining an experimental protocol for an empirical study of this impact. In order to assess the whole emotional response during the experimental interaction, we also want to extend the TEATIME model to the non-verbal expression of action tendencies through facial expressions, gesture and speech.

REFERENCES

Adam, C., Herzig, A., and Longin, D. (2009). A logical formalization of the OCC theory of emotions. Synthese, 168(2):201–248.

Arnold, M. B. (1960). Emotion and personality.

Bossuyt, E. (2012). Experimental studies on the influence of appraisal on emotional action tendencies and associated feelings. PhD thesis, Ghent University.

Campano, S., Sabouret, N., De Sevin, E., and Corruble, V. (2013). An evaluation of the cor-e computational model for affective behaviors. In Proceedings of the 2013 International Conference on Autonomous Agents and Multi-Agent Systems, pages 745–752. International Foundation for Autonomous Agents and Multiagent Systems.

Coombes, S. A., Tandonnet, C., Fujiyama, H., Janelle, C. M., Cauraugh, J. H., and Summers, J. J. (2009). Emotion and motor preparation: a transcranial magnetic stimulation study of corticospinal motor tract excitability. Cognitive, Affective, & Behavioral Neuroscience, 9(4):380–388.

Coppin, G. (2009). Emotion, personality and decision-making. Revue d’Intelligence Artificielle, 23(4):417–432.

Courgeon, M., Clavel, C., and Martin, J.-C. (2009). Appraising emotional events during a real-time interactive game. In Proceedings of the International Workshop on Affective-Aware Virtual Agents and Social Robots, page 7. ACM.

Dastani, M. and Lorini, E. (2012). A logic of emotions: from appraisal to coping. In Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems-Volume 2, pages 1133–1140. International Foundation for Autonomous Agents and Multiagent Systems.

Dias, J. and Paiva, A. (2005). Feeling and reasoning: A computational model for emotional characters. In Portuguese Conference on Artificial Intelligence, pages 127–140. Springer.

Duffy, E. (1962). Activation and behavior.

Frijda, N. H. (1986). The emotions: Studies in emotion and social interaction. Paris: Maison des Sciences de l’Homme.

Frijda, N. H. (2010). Impulsive action and motivation. Biological Psychology, 84(3):570–579.

Frijda, N. H. and Mesquita, B. (1998). The analysis of emotions. What develops in emotional development, pages 273–295.

Frijda, N. H. and Zeelenberg, M. (2001). Appraisal: What is the dependent?

Gebhard, P. (2005). ALMA: a layered model of affect. In Proceedings of the Fourth International Joint Conference on Autonomous Agents and Multiagent Systems, pages 29–36. ACM.

Gratch, J. and Marsella, S. (2004). A domain-independent framework for modeling emotion. Cognitive Systems Research, 5(4):269–306.

Jenkins, J. M. and Oatley, K. (1996). Emotional episodes and emotionality through the life span.

Lazarus, R. S. (1991). Cognition and motivation in emotion. American Psychologist, 46(4):352.

McDougall, W. (1908). The gregarious instinct.

Mehrabian, A. (1996). Pleasure-arousal-dominance: A general framework for describing and measuring individual differences in temperament. Current Psychology, 14(4):261–292.

Ortony, A., Clore, G. L., and Collins, A. (1990). The cognitive structure of emotions. Cambridge University Press.

Pearl, J. (2009). Causality. Cambridge University Press.

Prendinger, H. and Ishizuka, M. (2005). The empathic companion: A character-based interface that addresses users’ affective states. Applied Artificial Intelligence, 19(3-4):267–285.

Pynadath, D. V. and Marsella, S. C. (2005). PsychSim: Modeling theory of mind with decision-theoretic agents. In IJCAI, volume 5, pages 1181–1186.

Roseman, I. J. (2011). Emotional behaviors, emotivational goals, emotion strategies: Multiple levels of organization integrate variable and consistent responses. Emotion Review, 3(4):434–443.

Scherer, K. R. (2005). What are emotions? And how can they be measured? Social Science Information, 44(4):695–729.

Steunebrink, B. R., Dastani, M., and Meyer, J.-J. C. (2009). A formal model of emotion-based action tendency for intelligent agents. In Portuguese Conference on Artificial Intelligence, pages 174–186. Springer.