Team Distribution between Foraging Tasks with Environmental Aids to Increase Autonomy

Juan M. Nogales and Gina Maira Barbosa de Oliveira

Department of Computer Science, Federal University of Uberlandia, Santa Monica, Uberlandia, Brazil

Keywords:

Task Allocation, Foraging, Adaptability, Cooperation, Autonomy.

Abstract:

In this paper, robots have to distribute themselves across a set of regions where they serve in foraging tasks, transporting objects repetitively. Each region stores information about the performance of the subgroup of robots serving that region. Robots can also share information with one another and identify which region offers better conditions for foraging. In particular, each region replenishes recently removed objects at a different rate, and therefore demands a different number of robot foragers. We explore the effects of the network structure on the distribution of robots and on their performance. Results indicate a weak dependence on robot-robot connections and a strong dependence on robot-environment interaction. Since cooperative robots pursue a global goal, the proposed distribution rules combined with environmental aids allowed them to make better decisions autonomously, increasing the number of transported objects and reducing the number of trips between regions.

1 INTRODUCTION

Social insects live in colonies, but they divide them-

selves into subgroups to complete different tasks, for

instance, honeybees searching for nest sites (Seeley

et al., 2006), wasps storing wood (Jeanne and Nord-

heim, 1996), or ants collecting food (Anderson and

Bartholdi, 2000). These insects tend to distribute

themselves between regions to perform specific jobs.

However, they can switch between jobs whenever the

colony needs it (Zahadat et al., 2015). Scientists at-

tribute their success and recovery skills to the coor-

dination within and across the subgroups of insects

(Bonabeau et al., 1999).

In (Schmickl et al., 2012), the authors showed, with bees in a simulated environment, that their adaptability depends on regulated communication. The swarm has only a few receivers at the entrance of the hive. They get information about source quality, which foragers share with them through short-range communication (trophallaxis). Then, receivers spread this information through long-range communication (waggle dance) to help recruit other bees. Thus, bees could distribute themselves to forage for nectar by combining short- and long-range communication.

Insects also exhibit highly decentralized control: they appear to have no leader, a property known as divisional autonomy. On the other hand, they follow collective rules, a property known as distributed control (Anderson and Bartholdi, 2000). For instance, in the bee colony investigated in (De Marco and Farina, 2001), individuals can decide which recruiter to follow, exhibiting divisional autonomy. However, they have to forage for nectar for the colony, following distributed control rules. In other words, individuals are autonomous, but some group rules or objectives restrain their autonomy.

Owing to the success of insects, robotics researchers brought forth the concept of swarm robotics. It is a novel concept inspired by insect strategies for solving complex tasks, which began to grow at the beginning of the 2000s. In particular, such solutions offer a far better alternative by employing simpler units: designing simple robots seems easier than creating one big, expensive, and heavy robot. In (Şahin, 2005), the author considered it pertinent to describe the desirable properties of swarm robotics before new works blurred the concept over time. These properties are still used today:

• Robustness: redundancy and decentralization

should foster the swarm to continue operating de-

spite failures or disturbances in the environment,

although at a lower performance.

• Flexibility: requires the swarm to be able to gen-

erate modularized solutions to different tasks.

• Scalability: considers that the coordination mechanism would be able to deal with a large number of relatively simple robots.

Nogales, J. and Oliveira, G.
Team Distribution between Foraging Tasks with Environmental Aids to Increase Autonomy.
DOI: 10.5220/0006588700250036
In Proceedings of the 10th International Conference on Agents and Artificial Intelligence (ICAART 2018) - Volume 1, pages 25-36
ISBN: 978-989-758-275-2
Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Modern applications of robot swarms range from navigation to surveillance problems, for instance: wildfire containment (Phan and Liu, 2008), intruder detection (Raty, 2010; Khan et al., 2016), and area exploration (Antoun et al., 2016). In those scenarios, robots have to coordinate among themselves to achieve multiple objectives. Therefore, it is necessary to allow them to divide into subgroups. Like insects, robots can make their own decisions, but their behaviors need to be oriented toward the group goal.

A common approach to designing these decision-making strategies is to model the group as a Multi-Agent System (MAS), define a local utility function for each objective, and establish the common goal as the sum of all utility functions (Krause and Guestrin, 2007). Thus, if agents optimize the sum of all local utilities, they also achieve the common goal. Although MAS solutions are generic and often abstract away the inherent complexities of Multi-Robot Systems (MRS), the decision-making rules stimulate individuals to cooperate among subgroups such that they may maximize the group utility. Some authors have reached optimal solutions by adapting multi-agent decision-making rules to robots, as described in the survey presented in (Yan et al., 2013).

For instance, in (Krause and Guestrin, 2007), the

authors employ the law of diminishing returns to de-

sign utility functions. They incorporate the benefits

of assigning an extra robot to serve a particular lo-

cation. In particular, robots have to surveil a water

distribution system divided into regions. Each region

has a probability of intrusions and a potential detri-

ment if a robot is not serving there (e.g., the affected

population by an intrusion). Robots make decisions

based on the marginal contribution they yield when

selecting a region. Thus, when robots optimize the

utility functions, they reach an effective allocation for

the surveillance. Unlike agents in some models, robots are physical and can be in only one place at a time.

Here, we adapted the MAS decision-making strategies described in (Nogales and Finke, 2013) to reach a near-optimal distribution with foraging robots. Each task is associated with a region where robots have to forage for virtual objects. In particular, we mimic the receivers at the entrance of a beehive with environmental aids that help robots share information. The robots' decision-making strategies depend on the law of diminishing returns, which allows individuals to show both divisional autonomy and distributed control. Each individual can decide which task to serve, while it seeks to optimize the group utility. We tested three different decision-making models, varying their information-sharing structure and robot autonomy. The proposed decision-making models stimulate robots to cooperate among subgroups such that they maximize the number of foraged objects.

The rest of the paper is organized as follows: Section 2 describes previous works and their strategies. Section 3 states the problem. Our proposal is detailed in Section 4, while the experiments and results are in Section 5. Finally, Section 6 provides a short discussion of the results and suggests future work.

2 RELATED WORKS

In this section, we focus on Multi-Robot Task Allocation (MRTA) for foraging. Search and rescue missions are related to foraging tasks (Ahuja et al., 2002; Liu and Nejat, 2013), but they are outside the scope of this work. The objective of MRTA is to assign M jobs to N robots. Unlike agents in a MAS, robots have to interact with a physical world and with one another (Cao et al., 1997). Thus, we first review some MRTA works that solve foraging tasks. Then, we describe a pair of MAS papers that design local utilities for decision-making rules in a way similar to ours; in particular, they rely on the law of diminishing returns to reach an optimal distribution of agents.

Task allocation has brought significant improvements in foraging tasks. Foraging robots transport objects from one place (e.g., a source) to another (e.g., a nest). Nevertheless, the problem can still be stated in terms of jobs and workers that must maximize the overall performance (Dasgupta, 2011). Theories from operations research and combinatorial optimization underlie several approaches to task allocation. Such solutions employ concepts like utility functions (Sung et al., 2013), auctions (Viguria et al., 2007), market-based processes (Akbarimajd and Simzan, 2014), game theory payoffs (Marden et al., 2009), and the like to help robots coordinate. In those works, the impact of a robot's decision appears only after the robot acts on its selection.

One of the earliest and most cited works with robots transporting objects while employing task allocation strategies is (Kube and Bonabeau, 2000). The authors tested MURDOCH, an auction-based task allocation system, in a box-pushing experiment with heterogeneous robots. Their results show auctions as a promising strategy for foraging with task allocation. Later, several variations of auction strategies appeared, e.g., repetitive auction processes, decentralized auctions, and role assignment strategies. In particular, the idea of role assignment came from the robot soccer domain, where each robot calculates its utility for each role and periodically broadcasts these values to coordinate with its teammates (Stone and Veloso, 1999). The authors of (Chaimowicz et al., 2002) introduced roles as functions that robots must perform to transport an object. Thus, when a robot finds an object, it shares information about the utility of the available role and the need for helpers. Other robots would receive this offer, and some of them could consider it a better option. Then, similar to the bidding process of an auction, they would offer their help to the leader, which would choose the best-qualified helper to transport that object.

In other transportation tasks, the robots have pre-

vious knowledge of the object positions and can em-

ploy path-planning strategies to avoid collisions. For

instance, in (Yan et al., 2012), the robots could mini-

mize the total transportation time while keeping a low

energy consumption on each robot. The environment

includes a place of constant production of goods, but

their rate of production is unknown. The authors im-

plemented a heuristic that helps robots to estimate the

rate of production and define their idle periods to re-

duce their energy consumption. They compared their

heuristic solution to a centralized replanner described

in (Wawerla and Vaughan, 2010). The results show

that their strategy was faster in the implemented environments and required only slightly more energy than the replanner.

In (Lerman and Galstyan, 2002), the authors ex-

amine a scenario for foraging objects where experi-

ments show a decreasing average return effect, which

is known as the law of diminishing returns. Loosely speaking, each additional robot working on a task increases the performance, but by a gradually smaller amount, until the group reaches a size beyond which its performance declines (Färe, 1980).

Several works in MRTA exhibit this phenomenon

(Bonabeau et al., 1997; Schmickl et al., 2012; Akbari-

majd and Simzan, 2014). However, few works exploit

it to help the group to find an optimal distribution of

agents (Nogales and Finke, 2013) or to reach a near-

optimal distribution of robots (Krause and Guestrin,

2007).

In particular, this phenomenon appears because both resources and space are limited, and robots interact with one another while exploring or going after an object. As the group grows, more interference appears, lengthening the delivery of items to nests. On some occasions, robots begin to focus on avoiding collisions, which holds them back from delivering objects. In (Lerman and Galstyan, 2002), the authors concluded that there is an optimal quantity of robots, and beyond that number, the benefits of parallelism begin to disappear.

Finally, the most widely accepted taxonomy of MRTA problems is that of (Gerkey and Matarić, 2004), which divides problems as follows:

• Single (ST) vs. Multiple Tasks (MT): refers to the number of tasks a robot can carry out simultaneously.

• Single (SR) vs. Multiple Robots (MR): refers to the number of robots needed to fulfill a task.

• Instantaneous (IA) vs. Time-extended Assignment (TA): refers to the information available for planning future allocations.

Although our robots reallocate themselves dy-

namically, we can consider that our proposal belongs

to the group of ST-MR-IA, because several robots

have to forage for objects, and each object requires

one robot for its transport. They cannot predict the

rates of object production of the environment as in

(Yan et al., 2012), i.e., they are working with cur-

rent (and possibly outdated) information. Different

levels of communication are also explored. Three decision-making strategies must help robots find a balance between inhibition and stimulation of autonomy through communication. For instance, if a robot reports that its region is providing objects more quickly, most robots could decide to move to that region and increase the congestion, which would hold them back as a group. Therefore, we need to find a balance between divisional autonomy and distributed control.

Besides that, we associate each subgroup with mathematical functions that satisfy the law of diminishing returns to regulate robot autonomy. We opted for the MAS strategy described in (Nogales and Finke, 2013), which we adapted for an MRTA system. We chose it because those decision-making rules consider that: i) there are delays in both the communication and the movement of heterogeneous agents, ii) agents can serve in only one task at a time, and iii) agents of the same type generate the same contribution to the utility and are indistinguishable from each other. Thus, our robots could employ utility functions based on the law of diminishing returns to distribute themselves and reach a (near-)optimal number of foraged objects when following those rules.

3 PROBLEM

Following the objective of MRTA, we have to define the tasks for our foraging robots and an environment that favors task allocation strategies. In particular, we adapted the decentralized task allocation strategy for multi-agent systems found in (Nogales and Finke, 2013) to work with a multi-robot system. Although agents can be of various types in (Nogales and Finke, 2013), here we worked with homogeneous robots to follow one of the conditions of swarm robotics (Şahin, 2005). However, other types of robots could work on parallel tasks.

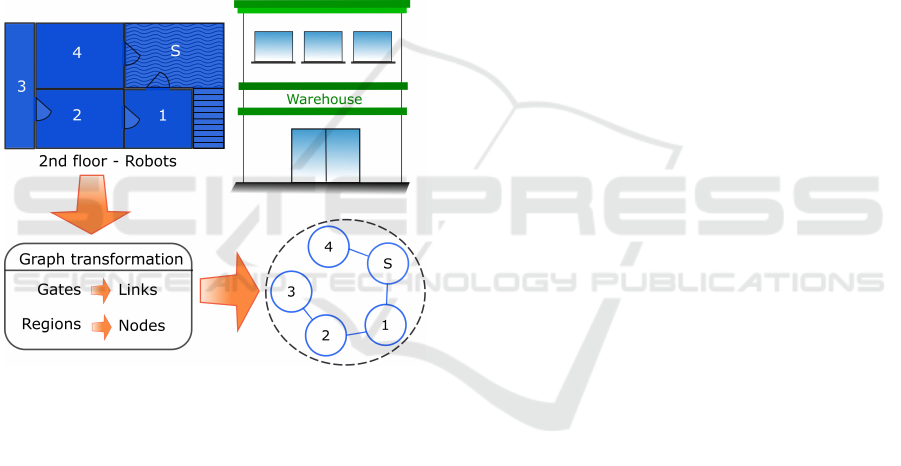

We employed graph theory to model the environ-

ment and its tasks, besides complex network sub-

strates for robot-robot and robot-environment infor-

mation sharing. Thus, let nodes represent the regions,

i.e., distributed locations in which robots perform for-

aging tasks. Nodes belong to a set N, indexed from 1

to n. Figure 1 illustrates how a graph models the

rooms of a floor in a warehouse.

Figure 1: Transforming a warehouse scenario into a graph model. Small circles represent the nodes, while the dashed circle represents the floor.

In this building, robots have to move objects

within a room (node), but each room requires a dif-

ferent number of robots. Therefore, robots should

distribute themselves between the rooms. Note that

the graph can be as complex as the designer needs to

model the environment.

Challenge

As illustrated in the warehouse of Figure 1, we model the regions of the environment as nodes in a graph.

Note that within each node, a robot should perform

several subtasks, e.g., repetitive object transportation.

For mathematical reasons, we assume that the num-

ber of robots is large enough to be appropriately rep-

resented by a continuous variable. However, as our

experiments show, this is not a critical assumption for

a practical implementation.

Thus, for a node $i$, the number of robots is defined by $r_i$. Let $\Delta_q \subset \mathbb{R}^n$ denote the $(n-1)$-dimensional simplex defined by the equality constraint $\sum_{i=1}^{n} r_i = q$, where $q$ denotes the quantity of robots available. The benefit of having an amount of robots $r_i$ foraging within node $i$ is given by the utility function $f_i : \mathbb{R} \to [0, \infty)$. The total utility function is defined by $f : \mathbb{R}^n \to [0, \infty)$, $f(r) = \sum_{i=1}^{n} f_i(r_i)$, where $r = [r_1, \ldots, r_n]^{\top}$ represents the state of the system.

Under the assumption of local information-sharing

and decentralized decision-making, the objective is to

identify conditions that allow us to solve the follow-

ing optimization problem

$$\text{maximize } f(r), \quad \text{subject to } r \in \Delta_q. \tag{1}$$

In other words, we want to find the allocation of all robots that maximizes the total utility, i.e., the sum of the utilities of all nodes. The following section details the proposed environment, the mathematical notation, and the decision-making mechanisms.

4 PROPOSAL

In this work, the information-sharing structure has two layers: robot-environment connections and robot-robot connections. For the first layer, since TAMs (Task Abstraction Modules) are available in the environment, we can consider them as an additional aid for communication and coordination between robots. TAM devices emulate the picking or dropping of virtual objects through color codes. Besides that, TAMs, as proposed in (Brutschy et al., 2015), can connect to one another through a ZigBee network, increasing the possibility of spreading information between regions. The second layer allows different network structures to underlie the communication between robots.

4.1 Environment

Since we want to evaluate the team performance in foraging through a task allocation strategy, we employed an environment consisting of several regions. Commonly, the environment for foraging includes at least two regions: one where robots deposit objects and another where they explore (Mataric, 1994). Nevertheless, more regions can appear when robots divide the environment into regions (Pini et al., 2014; Buchanan et al., 2016) or when the researchers provide a predefined division (Bobadilla et al., 2012; Pitonakova et al., 2016). We opted for a predefined division of the environment by combining ground color, smart color-changing landmarks (Brutschy et al., 2015), and gates (Bobadilla et al., 2012) to separate the regions.

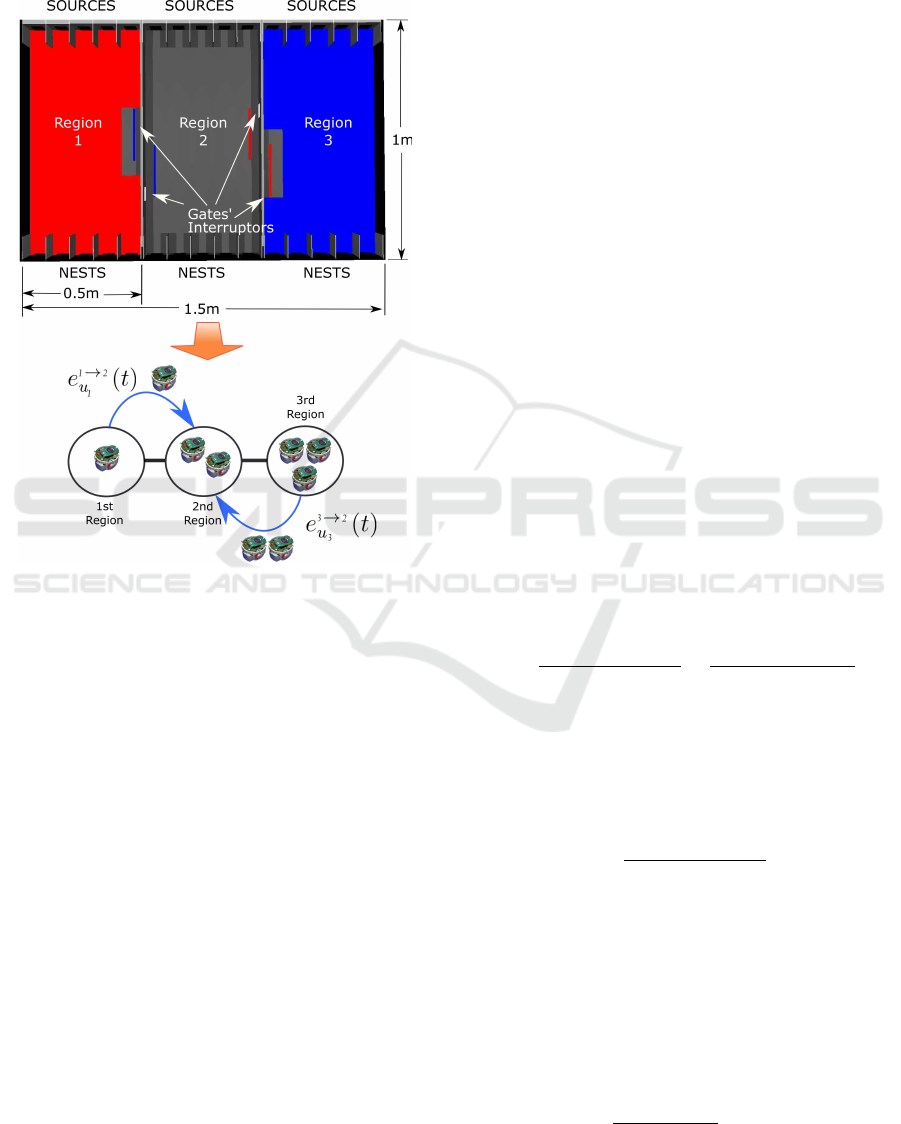

Figure 2 shows the environment dimensions, gates,

landmarks and their distribution.

[Figure 2 labels: dimensions of 1 m, 1.5 m, and 0.5 m; Region 1, Region 2, Region 3]

Figure 2: Environment for the foraging task. The upper image shows the dimensions of the environment. The lower image shows its transformation into a graph that considers robot movements.

Note that these environmental aids are a cheap solution for distinguishing regions. The most complex aids we used are TAMs, which are smart landmarks proposed in (Brutschy et al., 2015). Each TAM measures 10 cm × 10 cm so that an e-puck robot can enter it. Every region has five TAMs working as nests and five working as sources. In each region, TAMs working as nests store performance and utility information for that region and help the subgroup of robots working there to update this information. On the other hand, TAMs working as sources deliver objects at a rate that is different for each region (like the production rates of goods in companies). However, neither robots nor TAMs know these rates.

Note that the environment consists of three regions that are linked through hallways with automatic gates. Since transitions between regions take a finite time in real environments, we added a predefined cost (in time) at the gates. Once a robot reaches one of the switches, the gate opens and waits long enough for the robot to pass through. While robots are transitioning, they are not foraging in any region; consequently, more trips lead to fewer objects foraged from TAMs.

Although we compute the optimal quantity of robots for the environment, at any moment a region can hold more robots than its optimal number. Therefore, robots need distributed rules inside their decision-making mechanism to decide whether to abandon a region or remain there, and thus to improve their performance in each region.

4.2 Notation and Model

We adapted the MAS strategy proposed in (Nogales and Finke, 2013) such that we could employ it in an MRTA scenario. We implemented two decision-making strategies: i) the deterministic model (D), which guarantees an optimal distribution and works as a reference for the other strategies, and ii) a semi-stochastic version (SS), which allows validating the effect of connections with the environment (SS-TAM). These strategies try to solve the problem described in Section 3. However, we needed to add some assumptions to make it possible for robots to use the MAS solution. In particular, each utility $f_i$ satisfies the following three assumptions (common in economic theory (Färe, 1980)):

A1 Each function $f_i$ is continuously differentiable on $\mathbb{R}$.

A2 An increase in utility satisfies

$$\frac{f_i(r_i + u_i) - f_i(r_i)}{u_i} > \frac{f_i(r_i + w_i) - f_i(r_i)}{w_i} \tag{2}$$

where $r_i \in \mathbb{R}$, and $w_i > u_i > 0$ represent a finite number of robots entering node $i$.

A3 An increase in the number of robots within a region increases the utility of that region, bounded by

$$0 < \frac{f_i(r_i + u_i) - f_i(r_i)}{u_i} < \infty \tag{3}$$

Assumption A2 represents the law of diminish-

ing returns and implies that increasing the number of

robots in a node will always yield decreasing average

returns. Assumption A3 indicates that any additional

robot should increase the utility moderately.
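As a quick numerical illustration of Assumptions A2 and A3, the following sketch checks both inequalities at a single point for a hypothetical quadratic utility. The function `utility`, its parameters `c` and `d`, and the helper names are assumptions made for this example and are not taken from the experiments. For this particular form, the average return is positive only while $r_i + u_i/2 < c/d$, so A3 holds on a bounded range only.

```python
def utility(r, c=10.0, d=1.0):
    """Hypothetical concave utility f_i(r) = c*r - 0.5*d*r**2 (illustrative only)."""
    return c * r - 0.5 * d * r * r


def average_return(f, r, u):
    """Average gain per robot when u robots join a node that already holds r robots."""
    return (f(r + u) - f(r)) / u


def satisfies_a2_a3(f, r, u, w):
    """Check Eq. (2) and the lower bound of Eq. (3) at one point, for w > u > 0."""
    if not w > u > 0:
        raise ValueError("expected w > u > 0")
    gain_u = average_return(f, r, u)
    gain_w = average_return(f, r, w)
    # A2: the smaller group u yields a higher average return than the larger group w.
    # A3: the average return of adding robots is strictly positive.
    return gain_u > gain_w and gain_u > 0


if __name__ == "__main__":
    print(satisfies_a2_a3(utility, r=2.0, u=1.0, w=3.0))
    print(satisfies_a2_a3(utility, r=12.0, u=1.0, w=3.0))
```

The second call uses a node that is already overcrowded for this hypothetical utility, so the check fails there.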

Using Eq. (2) and according to Assumption A3, the partial derivative of $f_i$ with respect to $r_i$, denoted by $s_i$, satisfies

$$-a \le \frac{s_i(x_i) - s_i(y_i)}{x_i - y_i} \le -b \tag{4}$$

for any $x_i, y_i \in \mathbb{R}$, $x_i \neq y_i$, and constants $0 < b \le a$. It can be shown that if Assumptions A1-A3 are satisfied, the marginal utility functions $s_i(\cdot)$ are continuous on $\mathbb{R}$, strictly decreasing, and non-negative, while $f_i(\cdot)$ is strictly concave (see (Nogales and Finke, 2013) for details). Note that each additional robot must yield a lower average return. We have to find the marginal utility functions for the environment of this proposal described in Figure 2.

Next, a connection between two nodes $i$ and $j$ (regions) means that robots can move back and forth between them, and robots at node $i$ can obtain information about node $j$. By moving across nodes, robots may join or leave them at time indexes $t = 0, 1, 2, \ldots$ according to the asynchronous occurrence of discrete events. Let $e^{i \to k}_{u_i}(t)$ denote the decision of a number $u_i$ of robots to leave node $i \in N$ to join a neighboring node $k \in N_i$ at time $t$. Let $e^{i \to N_i}_{u_i}(t)$ denote the set of all possible simultaneous decisions from node $i$ to its neighboring nodes $N_i$. The set of events $E = \mathcal{P}(\{e^{i \to N_i}_{u_i}(t)\}) - \{\emptyset\}$ represents all possible simultaneous decisions from all nodes. A single event $e(t) \in E$ is defined as a set where each element represents a decision of a number of robots to abandon the nodes (see robot movements in the graph model between connected nodes in Figure 2).

If an event $e(t) \in E$ occurs at time $t$, the update of the state of the system is given by $r(t+1) = g(r(t))$. For the robots belonging to node $i \in N$, $g(r(t))$ is defined as

$$r_i(t+1) = r_i(t) - \sum_{\{k \,:\, e^{i \to k}_{u_i}(t) \in e(t)\}} u_i(t) + \sum_{\{j \,:\, e^{j \to i}_{u_j}(t) \in e(t)\}} u_j(t) \tag{5}$$

In other words, the new number of robots at node $i$ is the current number minus those leaving plus those arriving. Solving (1) requires that the model satisfy the following assumptions on the network and its robots:

A4 The nodes are in a connected graph $G_n$.

A5 There is a large enough number of robots, $q$, such that there can be at least a robot within each node providing a positive utility when they reach the optimal distribution $r \in \Delta^{\star}_q$.

Assumption A4 implies that there is a path across

all locations of the graph, placing minimum condi-

tions on the sensing and possible decisions of robots

across them. Assumption A5 requires a minimum

number of robots, which, in general, depends on the

nature of the utility functions. Moreover, under As-

sumptions A4 and A5, the optimal solution takes the

form

$$\Delta^{\star}_q = \{\, r \in \Delta_q \mid \forall i \in N,\ \forall k \in N_i,\ s_i(r_i) = s_k(r_k) \,\} \tag{6}$$

Thus, for any finite number of robots, the optimal distribution $r \in \Delta^{\star}_q$ is unique (Bertsekas, 1999). The distribution $r \in \Delta^{\star}_q$ captures the optimal division of subgroups when all of them have the same marginal utility because no robot has incentives to abandon its node. How robots decide which node to serve is the focus of the next section.
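To make the equal-marginal-utility condition of Eq. (6) concrete, the sketch below computes the optimal distribution in closed form for hypothetical linear marginal utilities $s_i(r_i) = c_i - d_i r_i$, which satisfy Eq. (4) with $b \le d_i \le a$. The coefficients `c` and `d` and the function name are assumptions made for this illustration, and the code assumes an interior solution in the sense of Assumption A5 (every node keeps a positive number of robots).

```python
def optimal_distribution(c, d, q):
    """Equalize hypothetical linear marginals s_i(r) = c[i] - d[i] * r under sum(r) = q.

    Setting s_i(r_i) = lam for every node gives r_i = (c[i] - lam) / d[i];
    the constraint sum(r) = q then fixes the common marginal utility lam.
    """
    weighted = sum(ci / di for ci, di in zip(c, d))
    inverse = sum(1.0 / di for di in d)
    lam = (weighted - q) / inverse
    r = [(ci - lam) / di for ci, di in zip(c, d)]
    # Assumption A5: every node holds a positive number of robots at the optimum,
    # and the common marginal utility is still positive.
    if lam <= 0 or min(r) <= 0:
        raise ValueError("no interior optimum for these parameters")
    return r, lam


if __name__ == "__main__":
    r_star, lam = optimal_distribution(c=[10.0, 8.0, 6.0], d=[1.0, 1.0, 1.0], q=9.0)
    print(r_star, lam)
```

With these illustrative coefficients, the three nodes end with 5, 3, and 1 robots and a common marginal utility of 5.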

4.3 Deterministic Decision-making

In this section, we detail how environmental aids regulate robot movements and a variation where robots recover their autonomy to decide which region to serve by using the information these aids provide. Both decision-making models take the marginal utility to be the average number of packages delivered within a region's period. After a fixed period of time, TAMs update the robots' information such that robots can restore their performance metrics and update the information they use to make their decisions.

4.3.1 Deterministic Decision-making (D)

Movements may be stochastic, but any $e^{i \to N_i}_{u_i}(t) \in e(t)$ must satisfy the following rules in this model.

D-R1 If $s_i(r_i(t)) \ge s_j(r_j(t))$ for all $j \in N_i$, then $u_i(t) = 0$, i.e., robots remain in a node where they have a better marginal utility.

D-R2 If there exists a node $j \in N_i$ such that $s_i(r_i(t)) < s_j(r_j(t))$, then some robots could decide to abandon $i$ to serve in the neighboring node $k$, which has the highest marginal utility among the neighbors of node $i$, $N_i$. In particular, the number of robots leaving node $i$ is bounded by

$$0 < u_i(t) \le \frac{1}{2}\,\phi\,[s_k(r_k(t)) - s_i(r_i(t))] \tag{7}$$

where $\phi \in (0, 1/a]$ represents the level of cooperation between robots, and $k \in N_i$ satisfies $s_k(r_k(t)) \ge s_j(r_j(t))$ for all $j \in N_i$.

Rules D-R1 and D-R2 restrict the allowable events in the network. In particular, D-R2 captures the tendency of robots to join the node that has a higher marginal utility value than all other neighboring nodes. Within a particular node, robots show divisional autonomy in the sense that they are unconstrained in their decisions to serve that node. However, in this deterministic solution, nodes regulate robot transitions: each node chooses which robots must depart, depriving robots of the freedom to move between nodes on their own. In a warehouse scenario, it would be as if robots serving within a room could not move between rooms unless the room instructed them to do so.
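The interplay between rules D-R1 and D-R2 and the state update of Eq. (5) can be sketched as a small simulation. This is a minimal illustration under several assumptions that are not part of the original model description: marginal utilities are the hypothetical linear form $s_i(r_i) = c_i - d_i r_i$, all nodes decide synchronously, each node sends the largest amount allowed by Eq. (7), and that amount is clipped so a node never sends more robots than it holds. The names `marginal`, `deterministic_step`, and the three-node line graph are illustrative.

```python
def marginal(c, d, r):
    """Hypothetical linear marginal utilities s_i(r_i) = c[i] - d[i] * r[i]."""
    return [ci - di * ri for ci, di, ri in zip(c, d, r)]


def deterministic_step(r, neighbors, c, d, phi):
    """One synchronous application of D-R1/D-R2 followed by the update of Eq. (5)."""
    s = marginal(c, d, r)
    new_r = list(r)
    for i, nbrs in neighbors.items():
        # Neighbor of node i with the highest marginal utility (node k in D-R2).
        k = max(nbrs, key=lambda j: s[j])
        if s[k] > s[i]:
            # Upper bound of Eq. (7), clipped to the robots available at node i
            # (the clipping is an assumption of this sketch).
            u = min(0.5 * phi * (s[k] - s[i]), r[i])
            new_r[i] -= u  # robots leaving node i
            new_r[k] += u  # robots arriving at node k
        # Otherwise D-R1 applies and no robot leaves node i.
    return new_r


if __name__ == "__main__":
    neighbors = {0: [1], 1: [0, 2], 2: [1]}  # three regions in a line
    c, d = [10.0, 8.0, 6.0], [1.0, 1.0, 1.0]
    r = [9.0, 0.0, 0.0]
    for _ in range(200):
        r = deterministic_step(r, neighbors, c, d, phi=1.0)
    print(r, marginal(c, d, r))
```

In this example the total number of robots is conserved at every step, and the marginal utilities of the three nodes approach a common value, as Eq. (6) requires at the optimum.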

4.3.2 Semi-stochastic Decision-making (SS)

We allowed robots to choose the node where they want to serve, that is, nodes have no authority over them. In this case, we needed to add the following assumptions on communication:

A6 Each node offers information about its local utility to the robots working in it.

A7 If there is a connection between two nodes, that is, $j \in N_i$, then there exists at least one link through which a robot in node $i$ communicates with some robot in node $j$.

Assumptions A6 and A7 guarantee communication, which is a critical requirement in our robotic task allocation. Robots need information about neighboring nodes to decide which node is the best for them. Note that Assumption A7 is a local version of Assumption A4; it allows robots to receive information about performances and local utilities in neighboring nodes. A robot serving in a neighboring node shares this information. Thus, robots can compare the options and make a decision.

Since robots following the semi-stochastic decision-making have no node regulation, they are free to move across nodes. Note that when robots detect another node with a better utility, they could depart en masse and leave a node empty. Therefore, we have to design local rules to avoid such mass movements, i.e., to regulate robot movements such that some robots remain in a node even when there is another node with a better utility. To do so, robots would need to know, or at least estimate, how many of them are working in that node. Knowing this number exactly would amount to a kind of global knowledge, which is commonly unavailable in swarm robotics (Şahin, 2005). However, robots can estimate how many of them are in the same node. Each robot $\ell$ keeps track of its performance at node $i$ in $s^{\ell}_i(t)$, which relates to its marginal contribution. Since we are working with homogeneous robots, robot $\ell$ can estimate how many robots are foraging in the same region by using the marginal utility of its region, $s_i(r_i(t))$, and its own performance, $s^{\ell}_i(t)$. Although robots have a similar performance, it is not exactly the same, so this value is only an estimate. Thus, for robot $\ell$, let $\hat{r}^{\ell}_i(t) = s_i(r_i(t)) / s^{\ell}_i(t)$ represent its estimate of the number of robots working in the same node.

Next, let $p^{\ell}_{i \to j}(t)$ be the probability of robot $\ell$ departing from node $i$ toward node $j$, which offers a better utility. When robots follow the proposed decision-making strategy, any movement $e^{i \to N_i}_{u_i}(t) \in e(t)$ must also satisfy the following rules.

S-R1 If $s_i(r_i(t)) = s^{\ell}_i(t)$ (i.e., $\hat{r}^{\ell}_i(t) = 1$), then $p^{\ell}_{i \to j}(t) = 0$, i.e., that robot is the only one serving there and must remain in it even when there is a node with a better utility.

S-R2 If $s_i(r_i(t)) > s^{\ell}_i(t)$ (i.e., $\hat{r}^{\ell}_i(t) > 1$), then $p^{\ell}_{i \to k}(t) > 0$, i.e., that robot should compute its probability of departing toward node $k$ with a better utility. If robot $\ell$ is considering departing, it means that node $i$ has a neighboring node $k \in N_i$ such that $s_k(r_k(t)) > s_i(r_i(t))$. Then, some robots could decide to abandon node $i$ at the same time. The probability of robot $\ell$ departing is given by

$$p^{\ell}_{i \to k}(t) = \frac{1}{2}\,\frac{\phi^{\ell}\,[s_k(r_k(t)) - s_i(r_i(t))]}{\hat{r}^{\ell}_i(t)} \tag{8}$$

where $\phi^{\ell}$ is its level of cooperation.

Note that, unlike the deterministic solution, robots use probability functions, and therefore there is no longer a guarantee of achieving the optimal distribution. However, robots can reach a near-optimal solution as in (Krause and Guestrin, 2007).

4.4 Learning Mechanism

In this work, robots are learning about their performance in each region. They keep a historical estimate of the number of objects they transported. Recall that if they did not work in a region, information from other robots working there could still reach them. TAMs help in these information-sharing processes by keeping track of the historical performance of the region where they are located and by signaling to robots the end of a period, when they update local variables. This idea resembles the way enterprises pay their employees: they are asynchronous and deliver the payments at the end of a period of work (e.g., every 15 days or monthly). Once TAMs indicate the end of a period in their region, robots will consider how many objects they transported as their marginal contribution.

Next, a robot $\ell$ uses the following exponential moving average function for learning updates with its own measurements and with incoming messages

$$s^{\ell}_i(t) = \alpha\, s^{\ell}_i(t-1) + (1-\alpha)\, M^{\ell}_i \qquad (9)$$

where $\alpha \in (0,1]$ is the rate of learning, $M^{\ell}_i$ is the score the robot measured (or received in a message) during that period, and $s^{\ell}_i(t)$ defines the performance estimate that robot $\ell$ has of working at node $i$. Robots are sharing

this estimation with neighboring robots, that is, those

robots with which they have a connection. Therefore,

changes in the communication structure may affect

the task allocation process because they are affecting

the learning process. Note that if robots have no path of connections with other regions, their only possible reality is that their current region is the best. They would never get information about other regions.
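The update in Eq. (9) can be sketched as follows. This is a minimal illustration under stated assumptions: the function name, the dictionary that stores one estimate per region, and the numeric values are hypothetical and do not come from the simulations.

```python
def update_estimate(previous: float, score: float, alpha: float) -> float:
    """Exponential moving average of Eq. (9): alpha * old + (1 - alpha) * new."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    return alpha * previous + (1.0 - alpha) * score


# Hypothetical memory of one robot: one performance estimate per region.
estimates = {"red": 10.0, "gray": 8.0, "blue": 6.0}

# End of a period in the red region: the robot measured 20 objects itself.
estimates["red"] = update_estimate(estimates["red"], score=20.0, alpha=0.8)

# A message from a neighbor reports a score of 12 for the blue region.
estimates["blue"] = update_estimate(estimates["blue"], score=12.0, alpha=0.8)
```

Note that in Eq. (9) the factor $\alpha$ weights the previous estimate, so values of $\alpha$ close to 1 make the estimate change slowly, and $\alpha = 1$ leaves it unchanged.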

The following section describes the preliminary

analysis to configure the environment parameters,

simulations, and the results of both decision-making

strategies that performed the foraging task in the pro-

posed environment.

5 EXPERIMENTS

Initially, we needed to find the marginal utility functions of the environment. Recall that to find the optimal distribution point, $\Delta^{\star}_q$ in Eq. (6), it is necessary to have the marginal utility functions, because the optimal distribution is reached when all marginal utilities have the same value. We measured the marginal utility as the number of foraged objects in each region within a period. Since robots are transitioning between regions, we established a periodic evaluation of the marginal utilities every 1,000 steps. We ran our simulations in NetLogo and fixed the cooperation level of all robots to 1.

Thus, if the system follows the law of diminish-

ing returns, the marginal utility of a region should de-

crease by adding more robots into that region (node).

Since each region has the same dimensions, we chose

one of them and variated the rate of recovery in that

region. This rate indicates the capacity of sources to

restore objects as soon as robots remove them. How-

ever, a source evaluates if it can recover the object ev-

ery period (1,000 steps). The rate of recovery of the

objects removed by robots can be 20%, 40%, 60%,

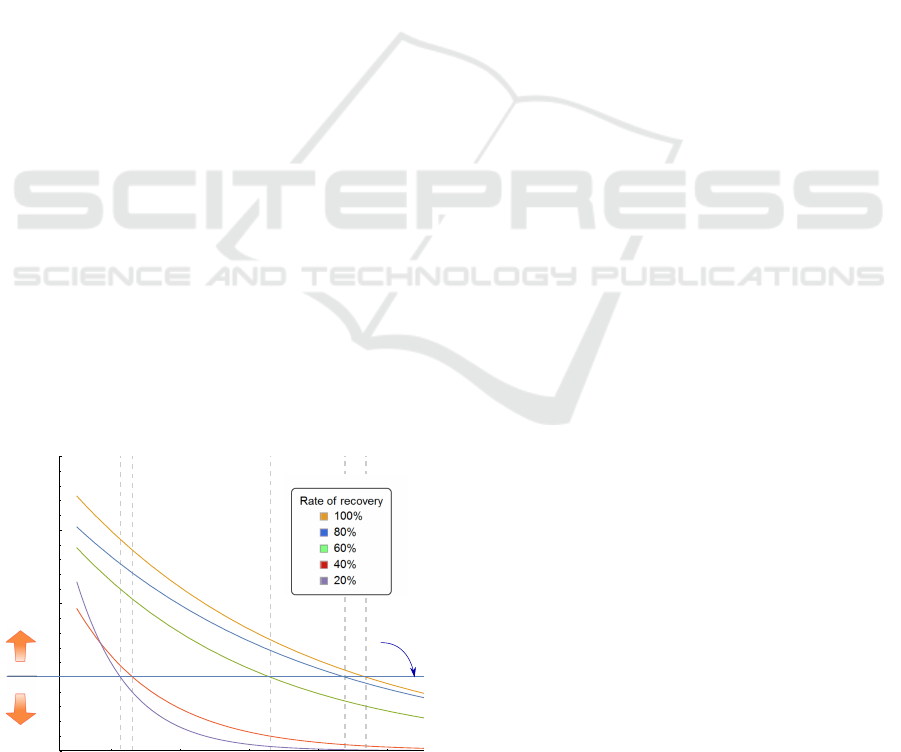

80%, and 100%. Figure 3 shows the marginal utility

functions with each rate of recovery (30 simulations

for each rate of recovery).

[Figure 3 plot. x-axis: Number of robots; y-axis: Marginal utility; annotation: Optimal point. Fitted curves: $26.966\,e^{-0.148x}$, $23.346\,e^{-0.143x}$, $32.621\,e^{-0.406x}$, $23.731\,e^{-0.181x}$, $88.222\,e^{-0.657x}$.]

Figure 3: Marginal utility functions for the different rates of

recovery of the sources.

By moving the point of optimal distribution and using the functions, we could estimate the number of robots the environment requires. For instance, working with the optimal point of Figure 3 and defining the rates of the regions as follows: the first region (red ground) with 20% needs 4 robots, the second (gray ground) with 40% needs 4 robots, and the third (blue ground) with 80% needs 11. We would need 19 robots with these rates of recovery. However, the initial distribution differs from this optimal one, so the decision-making rules must lead the robots toward it.
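Estimating the number of robots a region requires amounts to inverting its fitted marginal utility curve at the common optimal value. The following sketch assumes the exponential form $u(x) = a\,e^{-bx}$ of the fits reported in Figure 3. The target utility of 5.0 is an illustrative value, the pairing between curves and rates of recovery is not stated in the text, and the function name is hypothetical.

```python
import math


def robots_at_utility(a: float, b: float, target: float) -> float:
    """Solve a * exp(-b * x) = target for x, the (real-valued) number of robots."""
    if a <= 0 or b <= 0 or target <= 0:
        raise ValueError("a, b, and target must be positive")
    return math.log(a / target) / b


# Coefficients (a, b) of the fitted curves listed with Figure 3.
FITS = [
    (26.966, 0.148),
    (23.346, 0.143),
    (32.621, 0.406),
    (23.731, 0.181),
    (88.222, 0.657),
]

if __name__ == "__main__":
    target = 5.0  # illustrative optimal marginal utility (assumption)
    for a, b in FITS:
        x = robots_at_utility(a, b, target)
        print(f"{a} * exp(-{b} x) = {target}  ->  x = {x:.2f}")
```

Since the result is a real number, it still has to be rounded to a whole number of robots; the rounding rule is left open here because the text does not specify one.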

Next, for the simulations, we have a deterministic model (D) and the semi-stochastic model with (SS-TAM) and without (SS) the TAMs’ help. In the models with information sharing available (i.e., working with Assumptions A6 and A7), we tested three different networks for robot-robot interaction: a fully connected network and two regular networks with degrees 3 and 1. We also tested a variation with a switching-links strategy in the information-sharing structure to observe the effects of changing neighbors. In particular, after a robot transitions, it could abandon previous neighbors and connect to some of those in the new region. For this variation, we added a suffix (-S). The following sections describe the results for two groups of different sizes.
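The three communication structures can be sketched as adjacency sets. This is only one possible construction: the text does not state how the regular networks were built, so the circulant layout below (each robot linked to its nearest ring neighbors, plus the opposite robot when the degree is odd) and the function names are assumptions.

```python
def fully_connected(n: int) -> dict[int, set[int]]:
    """Every robot is linked to every other robot."""
    return {i: {j for j in range(n) if j != i} for i in range(n)}


def regular_network(n: int, degree: int) -> dict[int, set[int]]:
    """Circulant graph in which every robot has exactly `degree` neighbors."""
    if degree < 0 or degree >= n or (n * degree) % 2 != 0:
        raise ValueError("no regular network exists for these parameters")
    neighbors: dict[int, set[int]] = {i: set() for i in range(n)}
    for i in range(n):
        # Link to the next degree // 2 robots on the ring (links are symmetric).
        for step in range(1, degree // 2 + 1):
            j = (i + step) % n
            neighbors[i].add(j)
            neighbors[j].add(i)
        # For an odd degree, also link to the robot on the opposite side.
        if degree % 2 == 1:
            j = (i + n // 2) % n
            neighbors[i].add(j)
            neighbors[j].add(i)
    return neighbors


full = fully_connected(16)
reg3 = regular_network(16, 3)
reg1 = regular_network(16, 1)  # degree 1: robots are linked in pairs
```

With 16 robots, the degree-1 network reduces to eight isolated pairs, which makes clear how little robot-robot information is available in that setting.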

5.1 Simulation Results

First, we settle the regions rate such that the optimal

distribution point is 2-5-9 (i.e., 2 in the red region, 5

for the gray region, and 9 for the blue region). This

indicates that we need 16 robots for this configura-

tion. With this number of robots, we tried different

initial conditions avoiding the optimal one. For rea-

sons of space, we opted for showing only the results

with the initial distribution 5-5-6, almost the same

number of robots in each region. Note that only three

robots should move from red to blue region, but sys-

tem decentralization and robot autonomy generated

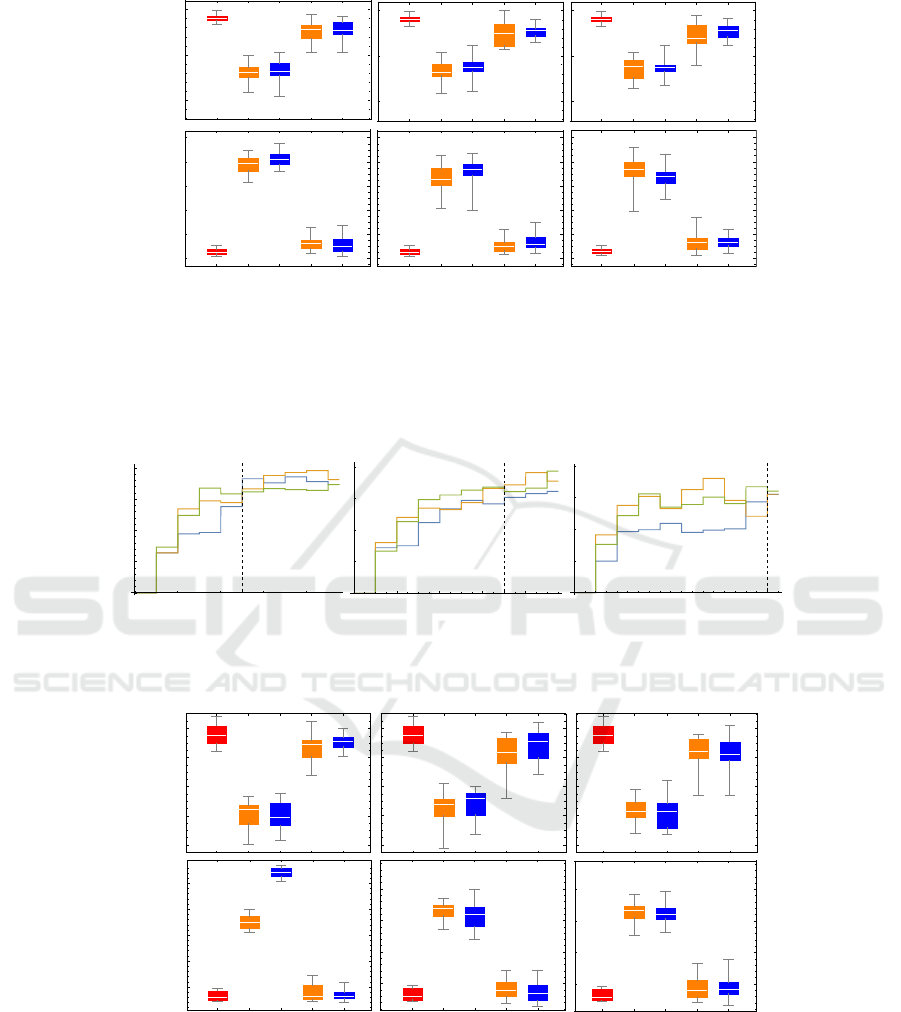

more travels. Figure 4 shows the results of 30 sim-

ulations of 10,000 steps for all the models with and

without switching-links strategy.

Note that the deterministic model delivered the best performance; t-tests showed that it outperformed every other model. SS-TAM-S with a network of degree 3 got second place (losing by an average of 18 objects, with p = 0.001). Although the box plots show that the variation in the results decreases with the switching-links strategy, the t-tests indicate there is no significant deterioration from introducing this strategy in any model with any network structure. This means that robots could work with local-range communication.

ICAART 2018 - 10th International Conference on Agents and Artificial Intelligence


[Figure 4 plot. Panels: Fully Connected, Regular d-3, Regular d-1. Vertical axes: Foraged objects, # Travels. Models shown in each panel: D, SS, SS-S, SS-TAM, SS-TAM-S.]

Figure 4: Performance and travels of robots while foraging in the environment with different network structures. Desired

distribution 2-5-9.

[Figure 5 plot. Panels: Deterministic model; Semi-stochastic with TAM info; Semi-stochastic without TAM info. x-axis: Time steps.]

Figure 5: Evolution of the performance with a period of update in TAMs of 1,000 steps. The dashed lines represent the

moment when the marginal utilities reached a value near to the optimal distribution.

[Figure 6 plot. Panels: Fully Connected, Regular d-3, Regular d-1. Vertical axes: Foraged objects, # Travels. Models shown in each panel: D, SS, SS-S, SS-TAM, SS-TAM-S.]

Figure 6: Performance and travels of robots while foraging in the environment with different network structures. Desired

distribution 5-11-16.

Note also that the models with the fewest travels obtained the best performance. In the SS model, which did not include the TAMs’ information, robots required more travels because they only had the information shared among neighbors. In other words, without Assumption A6, robots invested more time traveling than foraging due to lack of information. In contrast to SS, robots foraging with SS-TAM obtained better results due to the more accurate information received from TAMs. Moreover, they have more autonomy than those foraging with the deterministic model. Although they were considering only an estimate of the number of partners, they reached a near-optimal performance.

Next, to see which mechanism ended nearest to the optimal distribution point $\Delta^{\star}_q$, we computed the Euclidean distance between the last distribution and that point. We allowed simulations to run for about 4 periods of 1,000 steps after the deterministic model reached a value near the equilibrium. Table 1 shows the resulting Euclidean distances.

Table 1: Values of the Euclidean distance of the implemented models and the computed optimal distribution.

| Model / Structure | Fully connected | Regular d-3 | Regular d-1 |
|---|---|---|---|
| D | 1.31 | 1.31 | 1.31 |
| SS | 1.75 | 1.72 | 3.42 |
| SS-S | 2.23 | 2.39 | 2.60 |
| SS-TAM | 1.71 | 1.04 | 1.74 |
| SS-TAM-S | 1.53 | 0.80 | 1.48 |
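The distance measure behind Table 1 can be sketched as follows. This is a minimal illustration with a hypothetical function name; the text does not state how the distances of individual runs were aggregated, so the sketch handles a single distribution only. The example uses the initial distribution 5-5-6 and the optimal distribution 2-5-9 mentioned above.

```python
import math


def distribution_distance(final: tuple[int, ...], optimal: tuple[int, ...]) -> float:
    """Euclidean distance between two robot distributions over the same regions."""
    if len(final) != len(optimal):
        raise ValueError("both distributions must cover the same regions")
    return math.sqrt(sum((f - o) ** 2 for f, o in zip(final, optimal)))


# Distance of the initial distribution 5-5-6 from the optimal point 2-5-9:
# sqrt(3^2 + 0^2 + 3^2) = sqrt(18), roughly 4.24.
initial_gap = distribution_distance((5, 5, 6), (2, 5, 9))
```

A value of 0 means the robots match the optimal distribution exactly, so the entries in Table 1 can be read against this starting gap of about 4.24.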

The SS and SS-TAM models (without and with TAM information, respectively) delivered a value near the optimal distribution. To understand the reason behind their difference in performance, we observed the evolution of the marginal utilities. The SS-TAM model was faster in reaching such a value near the optimal distribution. Since the only difference between SS and SS-TAM is the information available in the TAMs of each region, we can affirm that TAMs were fundamental for the better performance of SS-TAM. Figure 5 shows the settling time for the evolution of marginal utilities in one simulation with each model.

It is clear that the fastest model to reach the balance is the deterministic one. However, we reached a near-optimal solution where robots could keep their autonomy in making decisions to transition between regions without restriction. Although robots following the SS model without TAMs’ help reached a good distribution, they required a long time due to diversity in the estimations of other robots.

We increased the number of robots by moving the optimal distribution point downward. We set the region rates to 20%, 40%, and 60%, and the optimal distribution point became 5-11-16 (i.e., 5 in the red region, 11 in the gray region, and 16 in the blue region). This indicates that we need 32 robots for this configuration. With this group of robots, we tried different initial conditions avoiding the optimal robot distribution. On this occasion, we opted for 1-15-16 as the initial distribution. The following paragraphs detail the effects of working with a larger group.

Again, the deterministic model delivered the best performance; t-tests showed that it surpassed all other models. Only the SS-TAM-S model with a fully connected network came close, with a difference of 13 objects in the average performance and p = 0.002. Moreover, t-test results indicated that there is no significant difference between the models with and without the switching-links strategy. Nevertheless, we observed lower variability among the simulations with this strategy (i.e., the box plots seem shorter).

We arrived at conclusions similar to those obtained with the small group. The semi-stochastic model without TAMs’ help worsened its performance because a greater number of robots also increases the diversity in the estimations of each robot. In the travel plots, we could confirm that SS has the greatest number of travels and the lowest number of foraged objects. In other words, TAM information was key to stimulating robots to focus on foraging and avoid too many travels between regions.

6 CONCLUSIONS

We successfully adapted a MAS strategy to work in an MRTA scenario. The optimal solution provided

by Bertsekas worked because the performance is

non-linear; the robots exhibited a diminishing return

curve. However, we could only reach near-optimal

solutions. We offered a decentralized solution that al-

lowed robots to keep their autonomy; however, it was

necessary to add environmental aids. In particular, the

information provided by TAMs was critical for robots

to reach a better performance. TAMs helped robots

to have a better reference for decentralized decisions

(autonomy).

The different structures of robot-robot communication show that robots could forage with a minimal condition on information sharing, not necessarily a fully connected network. However, they would sacrifice a small portion of their performance. Therefore, the choice between full communication with the highest performance and sparse connectivity with a still-good performance depends on the environment dimensions and the hardware embedded in the robots.

All decision-making models stimulated robots to

work for the group goals (distributed control) through

the diminishing return utility functions. Nevertheless,

the time needed to reduce the distance to the optimal point indicates that it is important to reach that point as fast as the robots can. Although we have provided a good alternative to the deterministic solution, in future work, we want to explore alternatives to improve the speed of reaching that point without depending on TAMs or losing autonomy.

Moreover, the phenomenon of diminishing returns is present in many scenarios where the incorporation of an additional worker to a job may improve the performance, but each additional worker improves it by a gradually smaller amount. We have seen this kind of shape in many previous works on MRTA because robots are sharing limited resources. Therefore, there is a great opportunity to adapt this same set of rules to those systems. It could be easily adjusted for each environment and its conditions.

ACKNOWLEDGEMENTS

GMBO is grateful to Fapemig, CNPq and CAPES for their financial support. Juan M. Nogales is also grateful to the OEA for the scholarship.

REFERENCES

Ahuja, R. K., Ergun, Ö., Orlin, J. B., and Punnen, A. P. (2002). A survey of very large-scale neighborhood search techniques. Discrete Applied Mathematics, 123(1):75–102.

Akbarimajd, A. and Simzan, G. (2014). Application of ar-

tificial capital market in task allocation in multi-robot

foraging. Int. Journal of Computational Intelligence

Systems, 7(3):401–417.

Anderson, C. and Bartholdi, J. J. (2000). Centralized versus

decentralized control in manufacturing: lessons from

social insects. Complexity and complex systems in in-

dustry, pages 92–105.

Antoun, A., Valentini, G., Hocquard, E., Wiandt, B., Tri-

anni, V., and Dorigo, M. (2016). Kilogrid: A modular

virtualization environment for the kilobot robot. In

Proc. of the IEEE/RSJ Int. Conf. on Intelligent Robots

and Systems, pages 3809–3814, Daejeon, South Ko-

rea. IEEE.

Bertsekas, D. P. (1999). Nonlinear programming. Athena

scientific Belmont.

Bobadilla, L., Martinez, F., Gobst, E., Gossman, K., and

LaValle, S. M. (2012). Controlling wild mobile robots

using virtual gates and discrete transitions. In Ameri-

can Control Conf., pages 743–749. IEEE.

Bonabeau, E., Dorigo, M., and Theraulaz, G. (1999).

Swarm intelligence: from natural to artificial systems.

Number 1. Oxford university press.

Bonabeau, E., Sobkowski, A., Theraulaz, G., Deneubourg,

J.-L., et al. (1997). Adaptive task allocation inspired

by a model of division of labor in social insects. In

Proc. Biocomputing and emergent computation, pages

36–45, Skovde, Sweden.

Brutschy, A., Garattoni, L., Brambilla, M., Francesca, G.,

Pini, G., Dorigo, M., and Birattari, M. (2015). The

TAM: abstracting complex tasks in swarm robotics re-

search. Swarm Intelligence, 9(1):1–22.

Buchanan, E., Pomfret, A., and Timmis, J. (2016). Dynamic

task partitioning for foraging robot swarms. Proc. of

the 10th Int. Conf. on Swarm Intelligence, pages 113–

124.

Cao, Y. U., Fukunaga, A. S., and Kahng, A. (1997). Coop-

erative mobile robotics: Antecedents and directions.

Autonomous Robots, 4(1):7–27.

Chaimowicz, L., Campos, M. F. M., and Kumar, V. (2002). Dynamic role assignment for cooperative robots. In Proc. of the IEEE Int. Conf. on Robotics and Automation, volume 1, pages 293–298.

Dasgupta, P. (2011). Multi-Robot Task Allocation for Per-

forming Cooperative Foraging Tasks in an Initially

Unknown Environment, pages 5–20. Springer Berlin

Heidelberg, Berlin, Heidelberg.

De Marco, R. and Farina, W. (2001). Changes in food

source profitability affect the trophallactic and dance

behavior of forager honeybees (apis mellifera). Be-

havioral Ecology and Sociobiology, 50(5):441–449.

Färe, R. (1980). Laws of diminishing returns. Number 176. Springer Verlag.

Gerkey, B. P. and Matarić, M. J. (2004). A formal analysis and taxonomy of task allocation in multi-robot systems. The Int. Journal of Robotics Research, 23(9):939–954.

Jeanne, R. L. and Nordheim, E. V. (1996). Productivity in

a social wasp: per capita output increases with swarm

size. Behavioral Ecology, 7(1):43–48.

Khan, M. U., Li, S., Wang, Q., and Shao, Z. (2016). Cps ori-

ented control design for networked surveillance robots

with multiple physical constraints. IEEE Transactions

on Computer-Aided Design of Integrated Circuits and

Systems, 35(5):778–791.

Krause, A. and Guestrin, C. (2007). Near-optimal obser-

vation selection using submodular functions. In Proc.

of the 22nd Conf. on Artificial Intelligence, volume 7,

pages 1650–1654.

Kube, C. and Bonabeau, E. (2000). Cooperative transport

by ants and robots. Robotics and Autonomous Sys-

tems, 30(1):85 – 101.

Lerman, K. and Galstyan, A. (2002). Mathematical model

of foraging in a group of robots: Effect of interference.

Autonomous Robots, 13(2):127–141.

Liu, Y. and Nejat, G. (2013). Robotic urban search and res-

cue: A survey from the control perspective. Journal

of Intelligent & Robotic Systems, 72(2):147–165.

Marden, J. R., Arslan, G., and Shamma, J. S. (2009). Co-

operative control and potential games. IEEE Transac-

tions on Systems, Man, and Cybernetics, Part B (Cy-

bernetics), 39(6):1393–1407.

Matarić, M. J. (1994). Reward functions for accelerated learning. In Proc. of the Eleventh Int. Conf. on Machine Learning, pages 181–189. Morgan Kaufmann.


Nogales, J. M. and Finke, J. (2013). Optimal distribution of

heterogeneous agents under delays. In 2013 American

Control Conf., pages 3212–3217.

Phan, C. and Liu, H. H. T. (2008). A cooperative uav/ugv

platform for wildfire detection and fighting. In Proc.

of the 7th Int. Asia Simulation Conf. on System Simu-

lation and Scientific Computing, pages 494–498.

Pini, G., Brutschy, A., Scheidler, A., Dorigo, M., and Bi-

rattari, M. (2014). Task partitioning in a robot swarm:

Object retrieval as a sequence of subtasks with direct

object transfer. Artificial life, 20(3):291–317.

Pitonakova, L., Crowder, R., and Bullock, S. (2016). Task

allocation in foraging robot swarms: The role of in-

formation sharing. Proc. of the Artificial Life Conf.,

page 306.

Raty, T. D. (2010). Survey on contemporary remote surveil-

lance systems for public safety. IEEE Transactions on

Systems, Man, and Cybernetics, Part C (Applications

and Reviews), 40(5):493–515.

Şahin, E. (2005). Swarm Robotics: From Sources of Inspiration to Domains of Application. Springer Berlin Heidelberg, Santa Monica, CA.

Schmickl, T., Thenius, R., and Crailsheim, K. (2012).

Swarm-intelligent foraging in honeybees: benefits

and costs of task-partitioning and environmental

fluctuations. Neural Computing and Applications,

21(2):251–268.

Seeley, T. D., Visscher, P. K., and Passino, K. M. (2006).

Group decision making in honey bee swarms. Ameri-

can Scientist, 94(3):220–229.

Stone, P. and Veloso, M. (1999). Task decomposition, dy-

namic role assignment, and low-bandwidth commu-

nication for real-time strategic teamwork. Artificial

Intelligence, 110(2):241 – 273.

Sung, C., Ayanian, N., and Rus, D. (2013). Improving the

performance of multi-robot systems by task switch-

ing. In Proc. of the IEEE Int. Conf. on Robotics and

Automation, pages 2999–3006.

Viguria, A., Maza, I., and Ollero, A. (2007). Set: An al-

gorithm for distributed multirobot task allocation with

dynamic negotiation based on task subsets. In Proc.

of IEEE Int. Conf. on Robotics and Automation, pages

3339–3344.

Wawerla, J. and Vaughan, R. T. (2010). A fast and fru-

gal method for team-task allocation in a multi-robot

transportation system. In Proc. of the IEEE Conf. on

Robotics and Automation, pages 1432–1437. IEEE.

Yan, Z., Jouandeau, N., and Ali-Chérif, A. (2012). Multi-robot heuristic goods transportation. In 6th IEEE Int. Conf. Intelligent Systems, pages 409–414.

Yan, Z., Jouandeau, N., and Cherif, A. A. (2013). A survey

and analysis of multi-robot coordination. Int. Journal

of Advanced Robotic Systems, 10(12):399.

Zahadat, P., Hahshold, S., Thenius, R., Crailsheim, K., and Schmickl, T. (2015). From honeybees to robots and back: division of labour based on partitioning social inhibition. Bioinspiration & Biomimetics, 10(6):66005.
