Constraint Networks Under Conditional Uncertainty

Matteo Zavatteri and Luca Viganò

Department of Computer Science, University of Verona, Verona, Italy

Department of Informatics, King’s College London, London, U.K.

Keywords:

Constraint Networks, Conditional Uncertainty, Controllability, Resource Scheduling, AI-based Security, CNCU.

Abstract:

Constraint Networks (CNs) are a framework to model the constraint satisfaction problem (CSP), which is the problem of finding an assignment of values to a set of variables satisfying a set of given constraints. Therefore, CSP is a satisfiability problem. When the CSP turns conditional, consistency analysis extends to also finding an assignment to these conditions such that the relevant part of the initial CN is consistent. However, CNs fail to model CSPs expressing an uncontrollable conditional part (i.e., a conditional part that cannot be decided but merely observed as it occurs). To bridge this gap, in this paper we propose constraint networks under conditional uncertainty (CNCUs), and we define weak, strong and dynamic controllability of a CNCU. We provide algorithms to check each of these types of controllability and discuss how to synthesize (dynamic) execution strategies that drive the execution of a CNCU by saying which value to assign to which variable depending on how the uncontrollable part behaves. We benchmark the approach by using ZETA, a tool that we developed for CNCUs. What we propose is fully automated from analysis to simulation.

1 INTRODUCTION

Context and Motivations. Assume that we are given a resource-scheduling problem specifying a conditional part that is out of control, and that we are then asked to schedule (some of the) resources in a way that meets all relevant constraints, or to prove that such a scheduling does not exist. We are also permitted to make our scheduling decisions as we like. In general, we can act in three different main ways:

1. We assume that we can predict the future and then make sure that a (possibly different) scheduling for each possible uncontrollable behavior exists.

2. We assume that we know nothing and then make sure that at least a single solution always works.

3. We assume that we can make our scheduling decisions according to what is going on around us.

These are the intuitions behind the three main kinds of

controllability: weak (for presumptuous), strong (for

anxious) and dynamic (for grandmasters).

In recent years, a considerable amount of research has been carried out to investigate controllability analysis in order to deal with temporal and conditional uncertainty, either in isolation or simultaneously. In particular, a number of extensions of simple temporal networks (STNs, (Dechter et al., 1991)) have been proposed. For example, simple temporal networks with uncertainty (STNUs, (Morris et al., 2001)) add uncontrollable (but bounded) durations between pairs of temporal events, whereas conditional simple temporal networks (CSTNs, (Hunsberger et al., 2015)) and formerly the conditional temporal problem (CTP, (Tsamardinos et al., 2003)) extend STNs by turning the constraints conditional. Finally, conditional simple temporal networks with uncertainty (CSTNUs, (Hunsberger et al., 2012)) merge STNUs and CSTNs, whereas conditional simple temporal networks with uncertainty and decisions (CSTNUDs, (Zavatteri, 2017)) encompass all previous formalisms.

Several algorithms have been proposed to check the controllability of a temporal network, e.g., constraint propagation (Hunsberger et al., 2015), timed game automata (Cimatti et al., 2016; Zavatteri, 2017) and satisfiability modulo theories (Cimatti et al., 2015a; Cimatti et al., 2015b).

Research has also been carried out in the “discrete” world of classic constraint networks (CNs) (Dechter, 2003) in order to address different kinds of uncertainty. For example, a mixed constraint satisfaction problem (Mixed CSP, (Fargier et al., 1996)) divides the set of variables into controllable and uncontrollable ones, whereas a dynamic constraint satisfaction problem (DCSP, (Mittal and Falkenhainer, 1990)) introduces activity constraints saying when variables are relevant depending on what values some other variables have been assigned. Probabilistic approaches such as (Fargier and Lang, 1993) aim instead at finding the most probable working solution.

Zavatteri, M. and Viganò, L.
Constraint Networks Under Conditional Uncertainty.
DOI: 10.5220/0006553400410052
In Proceedings of the 10th International Conference on Agents and Artificial Intelligence (ICAART 2018) - Volume 2, pages 41-52
ISBN: 978-989-758-275-2
Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Despite all this, a formal model to extend classic CNs (Dechter, 2003) with conditional uncertainty adhering to the modeling ideas employed by CSTNs is still missing. In a CSTN, for instance, time points (variables) and linear inequalities (constraints) are labeled by conjunctions of literals where the truth value assignments to the embedded Boolean propositions are out of control. Every proposition has an associated observation time point, a special kind of time point that reveals the truth value assignment to the associated proposition upon its execution (i.e., as soon as it is assigned a real value). Equivalently, this truth value assignment can be thought of as being under the control of the environment.

Contributions. Our contributions in this paper are three-fold. First, we define constraint networks under conditional uncertainty (CNCUs) as an extension of classic CNs and we give the semantics for weak, strong and dynamic controllability. Second, we provide algorithms for each of these types of controllability. Third, we discuss ZETA, a prototype tool that we developed to automate and benchmark our results.

Organization. Section 2 provides essential background on CNs and the adaptive consistency algorithm. Section 3 introduces a motivational example. Section 4 introduces our main contribution: CNCUs. Section 5 defines the semantics for weak, strong and dynamic controllability and Section 6 addresses the related algorithms. Section 7 discusses our tool ZETA for CNCUs along with an experimental evaluation. Section 8 discusses the correctness of our approach. Section 9 discusses related work. Section 10 draws conclusions and discusses future work.

2 BACKGROUND

In this section, we briefly review CNs and the adaptive consistency algorithm for the related consistency checking (Dechter, 2003). We renamed some symbols for coherence with the rest of the paper.

Definition 1. A Constraint Network (CN) is a triple $Z = \langle \mathcal{V}, \mathcal{D}, \mathcal{C} \rangle$, where $\mathcal{V} = \{V_1, \dots, V_n\}$ is a finite set of variables, $\mathcal{D} = \{D_1, \dots, D_n\}$ is a set of discrete domains $D_i = \{v_1, \dots, v_j\}$ (one for each variable), and $\mathcal{C} = \{R_{S_1}, \dots, R_{S_n}\}$ is a finite set of constraints each one represented as a relation $R_S$ defined over a scope of variables $S \subseteq \mathcal{V}$ such that if $S = \{V_i, \dots, V_r\}$, then $R_S \subseteq D_i \times \dots \times D_r$. A CN is consistent if each variable $V_i \in \mathcal{V}$ can be assigned a value $v_i \in D_i$ such that all constraints are satisfied.

Algorithm 1: ADC($Z$, $d$).

Input: A CN $Z = \langle \mathcal{V}, \mathcal{D}, \mathcal{C} \rangle$ and an ordering $d = V_1 \prec \dots \prec V_n$

Output: A set Buckets of buckets (one for each variable) if $Z$ is consistent, inconsistent otherwise.

for $i \leftarrow n$ downto 1 do  ▷ Partition the constraints as follows:
    Put in Bucket($V_i$) all unplaced constraints mentioning $V_i$
for $p \leftarrow n$ downto 1 do
    Let $j \leftarrow |$Bucket($V_p$)$|$ and $S_i$ be the scope of $R_{S_i} \in$ Bucket($V_p$)
    $S' \leftarrow \bigcup_{i=1}^{j} S_i \setminus \{V_p\}$
    $R_{S'} \leftarrow \pi_{S'}(\bowtie_{i=1}^{j} R_{S_i})$
    if $R_{S'} \neq \emptyset$ then
        Bucket($V'$) $\leftarrow$ Bucket($V'$) $\cup \{R_{S'}\}$, where $V' \in S'$ is the “latest” variable in $d$.
    else
        return inconsistent
Buckets = $\{\{$Bucket($V$)$\} \mid V \in \mathcal{V}\}$
return Buckets

The constraint satisfaction problem (CSP) is NP-hard (Dechter, 2003). A CN is $k$-ary if all constraints have scope cardinality $\le k$, binary when $k = 2$ (Dechter, 2003; Montanari, 1974).

Let $R_{ij}$ be a shortcut to represent a binary relation having scope $S = \{V_i, V_j\}$. A binary CN is minimal if any tuple $(v_i, v_j) \in R_{ij} \in \mathcal{C}$ belongs to at least one global solution for the underlying CSP (Montanari, 1974). Except for a few restricted classes of CNs, the general process of computing a minimal network is NP-hard (Montanari, 1974). Furthermore, even considering a binary minimal network, the problem of generating an arbitrary solution is NP-hard if there is no total order on the variables (Gottlob, 2012).

Therefore, a first crude technique is that of searching for a solution by exhaustively enumerating (and testing) all candidate assignments and stopping as soon as one satisfies all constraints in $\mathcal{C}$. To speed up the search, we can combine techniques such as backtracking with pruning techniques such as node, arc and path consistency (Mackworth, 1977).

$k$-consistency guarantees that any (locally consistent) assignment to any subset of $k - 1$ variables can be extended to a $k$-th (still unassigned) variable such that all constraints between these $k$ variables are satisfied. Strong $k$-consistency is $j$-consistency for each $j$ such that $1 \le j \le k$ (Freuder, 1982).

Directional consistency has been introduced to speed up the process of synthesizing a solution for a constraint network limiting backtracking (Dechter and Pearl, 1987). In a nutshell, given a total order on the variables of a CN, the network is directional-consistent if it is consistent with respect to the given order that dictates the assignment order of variables. In (Dechter and Pearl, 1987), an adaptive-consistency (ADC) algorithm was provided as a directional consistency algorithm adapting the level of $k$-consistency needed to guarantee a backtrack-free search once the algorithm terminates, if the network is consistent (see Algorithm 1). The input of ADC is a CN $Z = \langle \mathcal{V}, \mathcal{D}, \mathcal{C} \rangle$ along with an order $d$ for $\mathcal{V}$. At each step the algorithm adapts the level of consistency to guarantee that if the network passes the test, any solution satisfying all constraints can be found without backtracking. If the network is inconsistent, the algorithm detects it before the solution generation process starts. ADC initializes a Bucket($V$) for each variable $V \in \mathcal{V}$ and first processes all the variables top-down (i.e., from last to first following the ordering $d$) by filling each bucket with all (still unplaced) constraints $R_S \in \mathcal{C}$ such that $V \in S$. Then, it processes again the variables top-down and, for each variable $V$, it computes a new scope $S'$ consisting of the union of all scopes of the relations in Bucket($V$) neglecting $V$ itself. After that, it computes a new relation $R_{S'}$ by joining all $R_S \in$ Bucket($V$) and projecting with respect to $S'$ ($\bowtie$ and $\pi$ are the join and projection operators of relational algebra). In this way, it enforces the appropriate level of consistency. If the resulting relation is empty, then $Z$ is inconsistent; otherwise, the algorithm adds $R_{S'}$ to the bucket of the latest variable in $S'$ (with respect to the ordering $d$), and goes on with the next variable. Finally, it returns the set of Buckets (we slightly modified the return statement of ADC).

(a) Constraint graph: nodes $V_1$, $V_2$, $V_3$, $V_4$, each with domain $\{a, b, c\}$; edges $R_{13}$, $R_{14}$, $R_{24}$, $R_{34}$.

(b) Buckets, with the relations $R_{ij} \in \mathcal{C}$ on the left of $\parallel$:
Bucket($V_4$): $R_{14}, R_{24}, R_{34} \parallel$
Bucket($V_3$): $R_{13} \parallel R_{123}$
Bucket($V_2$): $\parallel R_{12}$
Bucket($V_1$): $\parallel R_1$

Figure 1: Graphical representation of a binary CN.

Any binary CN can be represented as a constraint graph where the set of nodes coincides with $\mathcal{V}$ and the set of edges represents the constraints in $\mathcal{C}$. Furthermore, nodes are labeled by their domains. Each (undirected) edge between two variables $V_1$ and $V_2$ is labeled by the corresponding $R_{12} \in \mathcal{C}$. As an example, consider the constraint graph in Figure 1(a) representing $Z = \langle \mathcal{V}, \mathcal{D}, \mathcal{C} \rangle$, where $\mathcal{V} = \{V_1, V_2, V_3, V_4\}$, $\mathcal{D} = \{D_1, D_2, D_3, D_4\}$, with $D_1 = D_2 = D_3 = D_4 = \{a, b, c\}$, and $\mathcal{C} = \{R_{13}, R_{14}, R_{24}, R_{34}\}$. All $R_{ij} \in \mathcal{C}$ contain the same tuples; actually, they all specify the $\neq$ constraint between the pair of variables they connect. That is, $R_{13} = R_{14} = R_{24} = R_{34} = \{(a,b), (a,c), (b,a), (b,c), (c,a), (c,b)\}$.

The CN in Figure 1(a) is consistent. To prove that, we chose, without loss of generality (recall that any order is fine for this algorithm (Dechter, 2003)), the order $d = V_1 \prec V_2 \prec V_3 \prec V_4$ and ran ADC($Z$, $d$). The output of the algorithm is shown in Figure 1(b). ADC first processes $V_4$ by filling Bucket($V_4$) with $R_{14}$, $R_{24}$ and $R_{34}$ (as they all mention $V_4$ in their scope and are still unplaced). Then, it processes $V_3$ by filling Bucket($V_3$) with $R_{13}$ (but not $R_{34}$). Finally, it leaves Bucket($V_2$) and Bucket($V_1$) empty as all relations mentioning $V_2$ and $V_1$ in their scope have already been put in some other buckets. Therefore, the initialization phase fills the buckets in Figure 1(b) with all relations on the left of $\parallel$ (the newly generated ones will appear on the right).

In the second phase, the algorithm computes $R_{123} = \pi_{123}(R_{14} \bowtie R_{24} \bowtie R_{34}) = \{(a,a,a), (a,a,b), (a,a,c), (a,b,a), (a,b,b), (a,c,a), (a,c,c), (b,a,a), (b,a,b), (b,b,a), (b,b,b), (b,b,c), (b,c,b), (b,c,c), (c,a,a), (c,a,c), (c,b,b), (c,b,c), (c,c,a), (c,c,b), (c,c,c)\}$ and adds it to Bucket($V_3$) (the latest variable in the scope $\{V_1, V_2, V_3\}$). Then, it goes ahead by processing Bucket($V_3$) generating in a similar way $R_{12}$ and adding it to Bucket($V_2$). Finally, it processes Bucket($V_2$) by computing $R_1$ and adding it to Bucket($V_1$). Since the joins yielded no empty relation, it follows that $Z$ is consistent.

We generate a solution by assigning the variables following the order $d$. For each $V \in d$ we just look for a value $v$ in its domain such that the current solution augmented with $V = v$ satisfies all constraints in Bucket($V$). If the network is consistent, at least one value is guaranteed to be there. In this way, each solution can be generated efficiently without backtracking by assigning one variable at a time. A possible solution is $V_1 = a$, $V_2 = c$, $V_3 = c$ and $V_4 = b$.
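The two phases of ADC and the backtrack-free solution generation can be sketched in Python on the network of Figure 1. This is a minimal illustration, not the authors' implementation: the function names `adc` and `solve` and the data layout (a constraint as a pair of a scope tuple and a set of value tuples) are assumptions made here, and the join is computed by plain enumeration rather than by a relational-algebra engine.

```python
from itertools import product

def adc(variables, domains, constraints):
    """Adaptive consistency over the ordering given by the list `variables`.

    constraints: list of (scope, relation) pairs, where scope is a tuple of
    variable names and relation is a set of value tuples aligned with scope.
    Returns {variable: [(scope, relation), ...]}, or None if inconsistent.
    """
    pos = {v: i for i, v in enumerate(variables)}
    buckets = {v: [] for v in variables}
    # Phase 1: every constraint lands in the bucket of its latest variable.
    for scope, rel in constraints:
        buckets[max(scope, key=pos.get)].append((scope, set(rel)))
    # Phase 2: process the buckets from the last variable to the first.
    for v in reversed(variables):
        if not buckets[v]:
            continue
        joined = sorted({u for scope, _ in buckets[v] for u in scope}, key=pos.get)
        new_scope = tuple(u for u in joined if u != v)
        new_rel = set()
        # Join by enumeration over the joined scope, then project v away.
        for values in product(*(domains[u] for u in joined)):
            row = dict(zip(joined, values))
            if all(tuple(row[u] for u in scope) in rel for scope, rel in buckets[v]):
                new_rel.add(tuple(row[u] for u in new_scope))
        if not new_rel:
            return None  # empty relation: the network is inconsistent
        if new_scope:
            buckets[new_scope[-1]].append((new_scope, new_rel))
    return buckets

def solve(variables, domains, buckets):
    """Assign one variable at a time, checking only its own bucket."""
    assignment = {}
    for v in variables:
        for value in domains[v]:
            trial = {**assignment, v: value}
            if all(tuple(trial[u] for u in scope) in rel for scope, rel in buckets[v]):
                assignment = trial
                break
        else:
            raise ValueError("no value found for " + v)
    return assignment

# The CN of Figure 1: four variables over {a, b, c} and four "different" constraints.
V = ["V1", "V2", "V3", "V4"]
D = {v: ["a", "b", "c"] for v in V}
neq = {(x, y) for x in "abc" for y in "abc" if x != y}
C = [(("V1", "V3"), neq), (("V1", "V4"), neq), (("V2", "V4"), neq), (("V3", "V4"), neq)]

buckets = adc(V, D, C)
solution = solve(V, D, buckets)
```

Because values are tried in domain order, this sketch returns a different (but equally valid) solution from the one quoted in the text; any assignment accepted bucket by bucket satisfies all four original constraints.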

3 MOTIVATIONAL EXAMPLE

As a motivational example, we consider an adaptation of a standard workflow/business-process example that describes a loan origination process (LOP) for eligible customers whose financial records have already been approved. We tuned the example in order to focus on a few characteristics of interest.

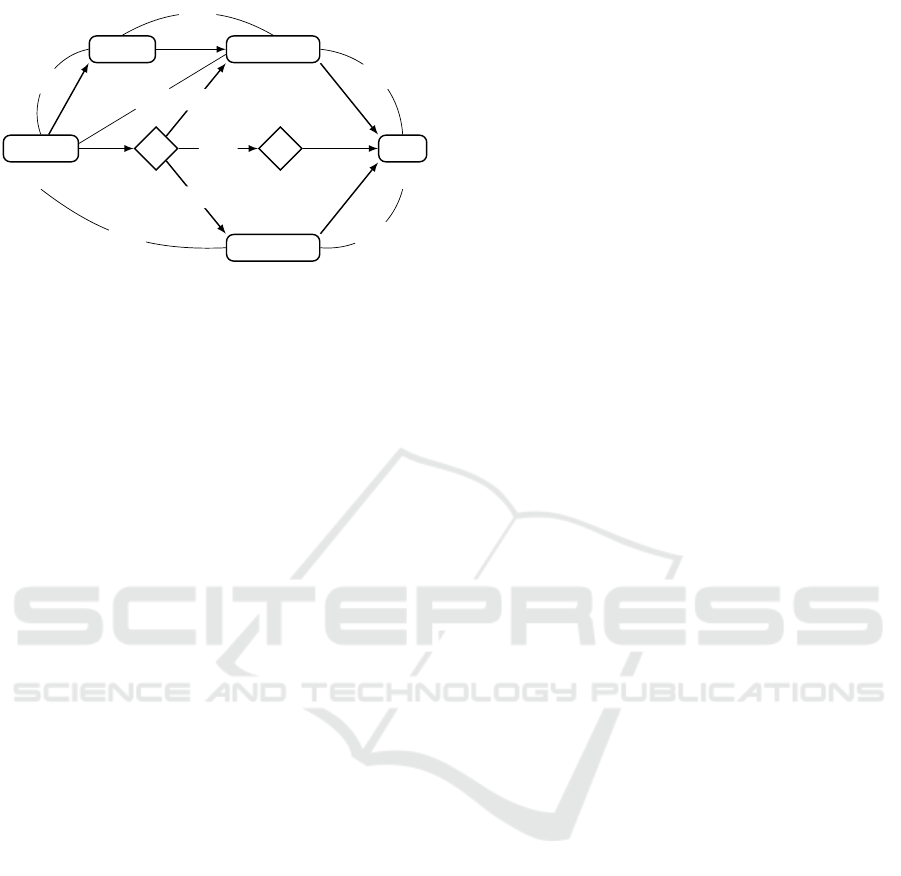

The Workflow. The workflow follows a free composition approach and is subject to conditional uncertainty as the truth value assignments to the Boolean variables pers? and rnd? are out of control (Figure 2).

The LOP starts by processing a request (ProcReq) with Alice, Bob and Charlie being the only authorized users. After that, the request is logged for future accountability purposes (LogReq) with the same users of ProcReq authorized for this task. The flow of execution then splits into two (mutually-exclusive) branches upon the execution of the first conditional split connector (leftmost diamond labeled by ?) which sets the truth value of pers? according to the discovered type of loan. A workflow engine wf is authorized to execute this split connector.

[Figure 2 diagram: tasks with their authorized users ProcReq {a, b, c}, LogReq {a, b, c}, BContract {a, b, c, d}, PContract {a, b} and Sign {b}; conditional split connectors pers? {wf} and rnd? {wf} with Yes/No branches; security policies $SP_1$ to $SP_6$ annotated between tasks.]

Figure 2: A simplification of a loan origination process. ProcReq, LogReq, Sign and the leftmost conditional split connector are always executed. PContract and the rightmost conditional split connector are executed iff pers? = T. BContract is executed iff pers? = F. rnd? only influences security policies. We abbreviate Alice, Bob, Charlie, David and workflow engine to a, b, c, d and wf.

If pers? is true (T), the workflow handles a personal loan and the flow of execution continues by preparing a personal contract (PContract), with Alice and Bob the only authorized users. Moreover, when processing personal loans, different security policies hold depending on what truth value a second Boolean variable (rnd?) is assigned (see below). The truth value of rnd? is generated at random upon the execution of the second conditional split connector (rightmost diamond) whose authorized user is again wf. Thus, the truth value assignment of rnd? can be thought of as uncontrollable as well. Note that no task will be prevented from executing depending on the value of rnd?, only the users carrying them out will (see the end of this section).

Instead, if pers? is false (F), the workflow will handle a business loan and the flow of execution continues by preparing a business contract (BContract) with Alice, Bob, Charlie and David as authorized users.

Finally, regardless of the truth values of pers? and rnd?, the LOP concludes with the signing of the contract (Sign) with Bob the only authorized user.

Security Policies. A separation of duties (SoD) (resp., binding of duties (BoD)) between two tasks $T_1$ and $T_2$ says that the users executing $T_1$ and $T_2$ must be different (resp., equal). In our example, Alice and Bob are married and thus the only relatives. Our process enforces six security policies ($SP_1$ to $SP_6$).

$SP_1$ calls for a SoD between ProcReq and LogReq and also requires that the users executing the two tasks must not be relatives if pers? = F. $SP_2$ calls for a SoD between LogReq and BContract (implicitly when pers? = F). $SP_3$ calls for a SoD between ProcReq and BContract (implicitly when pers? = F). $SP_4$ calls for a SoD between ProcReq and PContract and also requires that the users executing the two tasks must not be relatives (implicitly when pers? = T). $SP_5$ calls for a SoD between BContract and Sign (implicitly when pers? = F). $SP_6$ calls for either a SoD between PContract and Sign if pers? = T and rnd? = T, or a BoD between PContract and Sign if pers? = T and rnd? = F.

4 CNCUs

In this section, we extend CNs to address conditional uncertainty. We call this new kind of network Constraint Network under Conditional Uncertainty (CNCU). CNCUs are obtained by extending CNs with

• a set of Boolean propositions whose truth value assignments are out of control (or, equivalently, can be thought of as being under the control of the environment),

• observation variables to observe such truth value assignments, and

• labels to enable or disable a subset of variables and constraints, and therefore introduce an (implicit) notion of partial order among the variables.

We will also talk about execution meaning that

we execute a variable by assigning it a value and we

execute a CNCU by executing all relevant variables.

Variables and constraints are relevant if they must be

considered during execution.

Given a set P = {p,q, .. . } of Boolean proposi-

tions, a label = l

1

∧ ·· · ∧ l

n

is a finite conjunction

of literals l

i

, where a literal is either a proposition

p ∈ P (positive literal) or its negation ¬p (negative

literal). The empty label is denoted by . The la-

bel universe of P , denoted by P

∗

, is the set of all

possible labels drawn from P ; e.g., if P = {p,q},

then P

∗

= {, p, q,¬p,¬q, p ∧q, p ∧¬q,¬p∧q, ¬p ∧

¬q, p ∧ ¬p, q ∧ ¬q}. A label

1

∈ P

∗

is consistent iff

1

is satisfiable, entails a label

2

(written

1

⇒

2

)

iff all literals in

2

appear in

1

too (i.e., if

1

is more

specific than

2

) and falsifies a label

2

iff

1

∧

2

is

not consistent. The difference of two labels

1

and

ICAART 2018 - 10th International Conference on Agents and Artificial Intelligence

44

PR

{a,b, c}

[]

LR

{a,b, c}

[]

P?

{wf}

[]

BC

{a,b, c, d}

[¬p]

Q?

{wf}

[p]

PC

{a,b}

[p]

S

{b}

[]

(R

1

,¬p)

(R

1

,)

(R

2

,¬p)

(R

3

,¬p)

(R

4

, p)

(R

5

,¬p)

(R

6

, p ∧ q)

(R

6

, p ∧ ¬q)

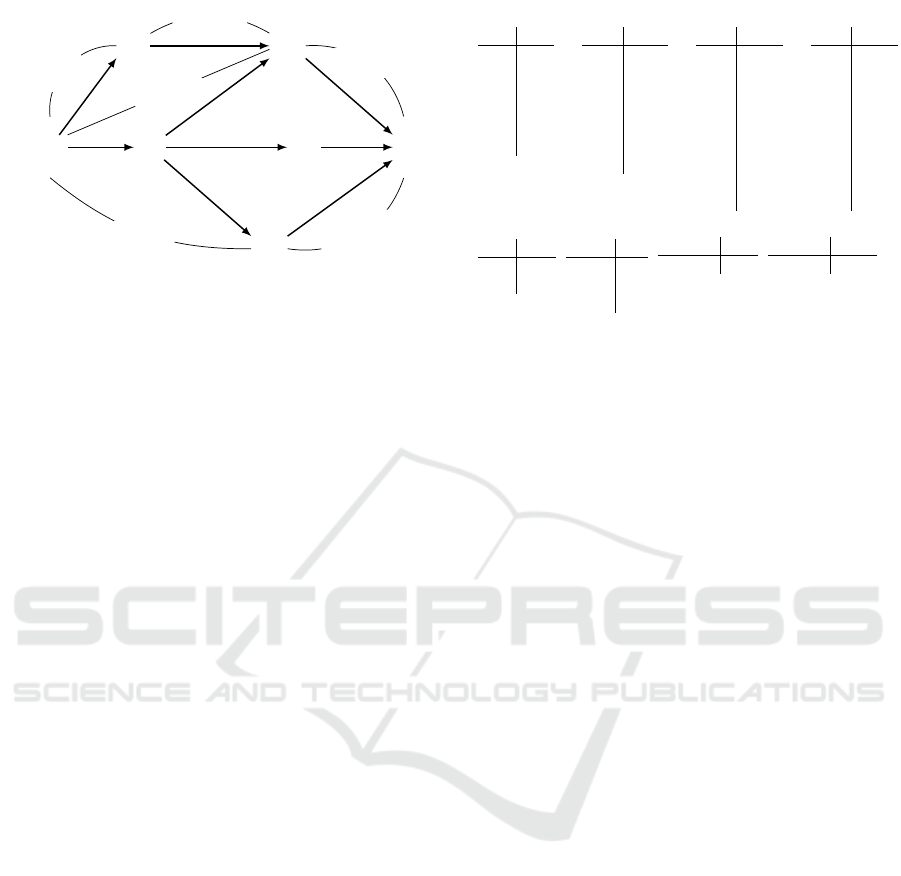

Figure 3: Binary CNCU modeling the workflow in Figure 2.

2

is a new label

3

=

1

−

2

consisting of all liter-

als of

1

minus those shared with

2

. For instance, if

1

= p ∧ ¬q and

2

= p, then

1

and

2

are consistent,

1

⇒

2

,

1

−

2

= ¬q and

2

−

1

= .
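The label operations of this section (consistency, entailment, falsification and difference) map directly onto set operations. The following Python sketch is only an illustration: representing a label as a frozenset of (proposition, sign) pairs, writing negation as a leading `!`, and the helper names are all assumptions made here.

```python
def label(*literals):
    """Build a label from strings such as "p" or "!q"; label() is the empty label."""
    return frozenset((lit.lstrip("!"), not lit.startswith("!")) for lit in literals)

def consistent(l1):
    """A label is consistent iff it never contains both p and its negation."""
    return not any((prop, not sign) in l1 for prop, sign in l1)

def entails(l1, l2):
    """l1 entails l2 iff every literal of l2 also appears in l1."""
    return l2.issubset(l1)

def falsifies(l1, l2):
    """l1 falsifies l2 iff their conjunction is not consistent."""
    return not consistent(l1 | l2)

def difference(l1, l2):
    """Literals of l1 minus those shared with l2."""
    return l1 - l2

l1 = label("p", "!q")   # p AND NOT q
l2 = label("p")         # p
```

With these definitions, the worked example of the text can be replayed: both labels are consistent, `entails(l1, l2)` holds, `difference(l1, l2)` is the label made of the single literal not-q, and `difference(l2, l1)` is the empty label.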

Definition 2. A constraint network under conditional uncertainty (CNCU) is a tuple $\langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$, where:

• $\mathcal{V} = \{V_1, V_2, \dots\}$ is a finite set of variables.

• $\mathcal{D} = \{D_1, D_2, \dots\}$ is a set of discrete domains.

• $D : \mathcal{V} \to \mathcal{D}$ is a mapping assigning a domain to each variable.

• $\mathcal{OV} = \{P?, Q?, \dots\} \subseteq \mathcal{V}$ is a set of observation variables.

• $\mathcal{P} = \{p, q, \dots\}$ is a set of Boolean propositions whose truth values are all initially unknown.

• $O : \mathcal{P} \to \mathcal{OV}$ is a bijection assigning a unique observation variable $P?$ to each proposition $p$. When $P?$ executes, the truth value of $p$ becomes known and no longer changes.

• $L : \mathcal{V} \to \mathcal{P}^*$ is a mapping assigning a label to each variable $V$ saying when $V$ is relevant.

• ${\prec} \subseteq \mathcal{V} \times \mathcal{V}$ is a precedence relation on the variables. We write $(V_1, V_2) \in {\prec}$ (or $V_1 \prec V_2$) to express that $V_1$ is assigned before $V_2$.

• $\mathcal{C}$ is a finite set of labeled constraints of the form $(R_S, \ell)$, where $S \subseteq \mathcal{V}$ and $\ell \in \mathcal{P}^*$. If $S = \{V_1, \dots, V_n\}$, then $R_S \subseteq D(V_1) \times \dots \times D(V_n)$.

We graphically represent a (binary) CNCU by extending the constraint graph discussed for CNs into a labeled constraint (multi)graph, where each variable is also labeled by its label $L(V)$, and the edges are of two kinds: order edges (directed unlabeled edges) and constraint edges (undirected labeled edges). An order edge $V_1 \to V_2$ models $V_1 \prec V_2$. A constraint edge between $V_1$ and $V_2$ models $(R_{12}, \ell)$. Many constraint edges may possibly be specified between the same pair of variables, as long as $\ell$ is different (e.g., $(R_1, \boxdot)$ and $(R_1, \neg p)$ between PR and LR in Figure 3).

Figure 3 shows a CNCU modeling the workflow in Figure 2: PR, LR, PC, BC and S model ProcReq, LogReq, PContract, BContract and Sign, whereas P? and Q? (observation variables) model the two conditional split connectors. P? and Q? are associated to the Boolean propositions $p$ and $q$ which, in turn, abstract pers? and rnd?. The union of all relations having the form $(R_i, \ell)$ models the security policy $SP_i$ discussed at the end of Section 3. We show all constraints of Figure 3 in Figure 4. For example, $(R_1, \boxdot)$ (Figure 4(a)) contains all tuples $(x, y)$ such that $x \neq y$ (what must always hold) and $(R_1, \neg p)$ (Figure 4(b)) contains all tuples $(x, y)$ such that $x$ and $y$ are not relatives (what must also hold for business loans). Thus, $(R_1, \boxdot)$ and $(R_1, \neg p)$ model $SP_1$.

(a) $(R_1, \boxdot)$ over (PR, LR): (a, b), (a, c), (b, a), (b, c), (c, a), (c, b)

(b) $(R_1, \neg p)$ over (PR, LR): (a, a), (a, c), (b, b), (b, c), (c, a), (c, b), (c, c)

(c) $(R_2, \neg p)$ over (LR, BC): (a, b), (a, c), (a, d), (b, a), (b, c), (b, d), (c, a), (c, b), (c, d)

(d) $(R_3, \neg p)$ over (PR, BC): (a, b), (a, c), (a, d), (b, a), (b, c), (b, d), (c, a), (c, b), (c, d)

(e) $(R_4, p)$ over (PR, PC): (c, a), (c, b)

(f) $(R_5, \neg p)$ over (BC, S): (a, b), (c, b), (d, b)

(g) $(R_6, p \wedge q)$ over (PC, S): (a, b)

(h) $(R_6, p \wedge \neg q)$ over (PC, S): (b, b)

Figure 4: Labeled constraints of the CNCU in Figure 3.
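The tuples of Figure 4 can be regenerated from the security policies of Section 3 by filtering the Cartesian product of the two task domains. The sketch below is illustrative only; the predicate and helper names (`sod`, `bod`, `not_relatives`, `relation`) are assumptions introduced here, and, as in Figure 4(b), a user is not treated as a relative of himself or herself.

```python
from itertools import product

# Alice (a) and Bob (b) are the only relatives, as stated in Section 3.
RELATIVES = {frozenset({"a", "b"})}

def sod(x, y):
    """Separation of duties: the two users must differ."""
    return x != y

def bod(x, y):
    """Binding of duties: the two users must coincide."""
    return x == y

def not_relatives(x, y):
    return frozenset({x, y}) not in RELATIVES

def relation(dom1, dom2, *predicates):
    """All pairs of dom1 x dom2 that pass every predicate."""
    return {(x, y) for x, y in product(dom1, dom2) if all(p(x, y) for p in predicates)}

R1_always = relation("abc", "abc", sod)              # Figure 4(a)
R1_business = relation("abc", "abc", not_relatives)  # Figure 4(b)
R4 = relation("abc", "ab", sod, not_relatives)       # Figure 4(e)
R5 = relation("abcd", "b", sod)                      # Figure 4(f)
R6_sod = relation("ab", "b", sod)                    # Figure 4(g)
R6_bod = relation("ab", "b", bod)                    # Figure 4(h)
```

Keeping the policies as predicates rather than hand-written tuple lists makes it easy to check that a change in the domains (for instance, one more authorized user) is reflected consistently in every relation.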

In the rest of this section, we say when CNCUs are well-defined. We import the notions of label honesty and coherence from temporal networks (see, e.g., (Hunsberger et al., 2015; Zavatteri, 2017)).

A label $\ell$ labeling a variable or a constraint is honest if for each literal $p$ or $\neg p$ in $\ell$ we have that $\ell \Rightarrow L(P?)$, where $P? = O(p)$ is the observation variable associated to $p$; $\ell$ is dishonest otherwise. For example, consider $(R_6, p \wedge q)$ in Figure 3. The constraint applies only if $p = q = T$. However, the truth value of $q$ is set (by the environment) upon the execution of Q?, which in turn is relevant iff $p$ was previously assigned true (as $L(Q?) = p$). Thus, an honest $\ell$ containing $q$ or $\neg q$ should also contain $p$. A label on a constraint is coherent if it entails the labels of all variables in the scope of the constraint.

Definition 3. A CNCU $\langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$ is well-defined iff all labels are consistent and the following properties hold.

• Variable Label Honesty. $L(V)$ is honest for any $V \in \mathcal{V}$, and $O(p) \prec V$ for any $p$ or $\neg p$ belonging to $L(V)$. That is, $V$ only executes when the honest $L(V)$ becomes completely known and evaluates to true; e.g., BC after P? if $\neg p$ in Figure 3.


• Constraint Label Honesty. $\ell$ is honest for any $(R_S, \ell) \in \mathcal{C}$. That is, $R_S$ only applies when the honest $\ell$ becomes completely known and evaluates to true; e.g., $(R_6, p \wedge q)$ in Figure 3 if after P? and Q?, $p$ and $q$ are observed true.

• Constraint Label Coherence. $\ell \Rightarrow L(V)$ for any $(R_S, \ell) \in \mathcal{C}$ and any $V \in S$. That is, the label of a constraint is at least as specific as any label of the variables in its scope; e.g., $(R_6, p \wedge q)$ in Figure 3.

• Precedence Relation Coherence. For any $V_1, V_2 \in \mathcal{V}$, if $V_1 \prec V_2$ then $L(V_1) \wedge L(V_2)$ is consistent. That is, no partial order can be specified between variables not taking part together in any execution; e.g., PC and BC in Figure 3.

Thus, the CNCU in Figure 3 is well-defined.
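The label-related conditions of Definition 3 can be checked mechanically on the CNCU of Figure 3. The sketch below is a partial illustration under stated assumptions: labels are encoded as dictionaries from proposition to required truth value (so they are consistent by construction), the names `VAR_LABEL`, `OBS` and `CONSTRAINTS` are introduced here, relations are left out because only labels matter, and the two conditions involving $\prec$ are not checked because the order edges of Figure 3 are not listed in the text.

```python
# Variable labels of Figure 3; {} stands for the empty label.
VAR_LABEL = {"PR": {}, "LR": {}, "P?": {}, "BC": {"p": False},
             "Q?": {"p": True}, "PC": {"p": True}, "S": {}}

# The bijection O from propositions to observation variables.
OBS = {"p": "P?", "q": "Q?"}

# Labeled constraints as (scope, label) pairs.
CONSTRAINTS = [
    (("PR", "LR"), {}),
    (("PR", "LR"), {"p": False}),
    (("LR", "BC"), {"p": False}),
    (("PR", "BC"), {"p": False}),
    (("PR", "PC"), {"p": True}),
    (("BC", "S"), {"p": False}),
    (("PC", "S"), {"p": True, "q": True}),
    (("PC", "S"), {"p": True, "q": False}),
]

def entails(l1, l2):
    """l1 entails l2 iff every literal of l2 appears in l1."""
    return all(l1.get(prop) == value for prop, value in l2.items())

def honest(lab):
    """Each proposition in the label must bring along the label of its observation variable."""
    return all(entails(lab, VAR_LABEL[OBS[prop]]) for prop in lab)

def labels_well_defined():
    variables_honest = all(honest(lab) for lab in VAR_LABEL.values())
    constraints_honest = all(honest(lab) for _, lab in CONSTRAINTS)
    constraints_coherent = all(entails(lab, VAR_LABEL[v])
                               for scope, lab in CONSTRAINTS for v in scope)
    return variables_honest and constraints_honest and constraints_coherent
```

For instance, a label containing only $q$ is reported as dishonest, because $L(Q?) = p$ is not entailed by it, which matches the discussion of $(R_6, p \wedge q)$ above.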

5 SEMANTICS

We give the semantics for weak, strong and dynamic

controllability of CNCUs. We note that in this paper

we provide algorithms relying on total orderings so

as to handle as many solutions as possible.

Definition 4. A scenario is a mapping $s : \mathcal{P} \to \{T, F, U\}$ assigning true or false or unknown to each proposition in $\mathcal{P}$.

A scenario $s$ is honest if for any $p \in \mathcal{P}$ where $s(p) \neq U$, we have that $s(q) = T$ for any $q \in L(O(p))$, and $s(q) = F$ for any $\neg q \in L(O(p))$.

A scenario $s$ satisfies a label $\ell$ (written $s \models \ell$) if $s$ satisfies all literals in $\ell$, where $s \models p$ iff $s(p) = T$ (positive literal) and $s \models \neg p$ iff $s(p) = F$ (negative literal).

An (honest) scenario $s$ is partial if there exists an unknown proposition $p$ (i.e., $s(p) = U$) such that $s \models L(P?)$ (i.e., $P? = O(p)$ is relevant in $s$); $s$ is complete otherwise. The initial scenario is that in which all propositions are unknown.

We write $\mathcal{S}$ to denote the set of all scenarios.

Consider Figure 3. If $s(p) = T$ and $s(q) = U$, then $s$ is honest (note that $L(P?) = \boxdot$ so no check is required) and partial as $s(q) = U$ and Q? is relevant for $s$ because $s \models L(Q?)$. Instead, if $s(p) = U$, $s(q) = T$, then $s$ is dishonest as $q$ can only be assigned upon the execution of Q?, which requires $s(p) = T$. Moreover, $s_1(p) = T$, $s_1(q) = T$ and $s_2(p) = T$, $s_2(q) = F$ are both honest and complete (Figure 3).
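The notions of honest, partial and complete scenario can be tried out on the propositions of Figure 3. In this hedged Python sketch, the constants `T`, `F`, `U`, the dictionary `OBS_LABEL` (holding $L(O(p))$ for each proposition) and the function names are assumptions made for illustration.

```python
T, F, U = "T", "F", "U"

# Label of the observation variable of each proposition in Figure 3:
# L(P?) is the empty label and L(Q?) = p.
OBS_LABEL = {"p": {}, "q": {"p": T}}

def satisfies(s, lab):
    """s satisfies a label iff it agrees with every literal of the label."""
    return all(s[prop] == value for prop, value in lab.items())

def is_honest(s):
    """Every known proposition must have had its observation variable relevant."""
    return all(satisfies(s, OBS_LABEL[prop]) for prop in s if s[prop] != U)

def is_partial(s):
    """Some unknown proposition still has a relevant observation variable."""
    return any(s[prop] == U and satisfies(s, OBS_LABEL[prop]) for prop in s)

def is_complete(s):
    return not is_partial(s)
```

Under this encoding, the scenario with $s(p) = F$ and $s(q) = U$ counts as complete: Q? is not relevant when $p$ is false, so $q$ will never be observed.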

From now on, we will assume scenarios to be honest, sometimes partial, sometimes complete, unless stated otherwise. In what follows, we give the definition of projection, an operation to turn a CNCU into a classic CN according to a complete scenario $s$, which is crucial to define the three kinds of controllability.

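Definition 5. Let $Z = \langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$ be a CNCU and $s$ any complete scenario. The projection of $Z$ onto $s$ is a CN $Z_s = \langle \mathcal{V}_s, \mathcal{D}, \mathcal{C}_s \rangle$ such that:

• $\mathcal{V}_s = \{V \mid V \in \mathcal{V} \wedge s \models L(V)\}$

• $\mathcal{C}_s = \{R_S \mid (R_S, \ell) \in \mathcal{C} \wedge s \models \ell\}$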

For example, the projection of Figure 3 with respect to the initial scenario $s(p) = U$ and $s(q) = U$ results in a CN, where $\mathcal{V}_s$ = {PR, LR, P?, S}, and $\mathcal{C}_s = \{R_1\}$, where $R_1$ is the relation of the original $(R_1, \boxdot) \in \mathcal{C}$. Instead, if $s(p) = F$ and $s(q) = U$ we get $\mathcal{V}_s$ = {PR, LR, P?, BC, S} and $\mathcal{C}_s = \{R_1, R_2, R_3, R_5\}$, where this time $R_1$ is the intersection of the original $(R_1, \boxdot), (R_1, \neg p) \in \mathcal{C}$ since $s \models \boxdot$ and $s \models \neg p$.
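The projection of Definition 5 amounts to two filters, one on variables and one on labeled constraints. The following Python sketch replays the two examples above; the encoding of labels as dictionaries, the constants `T`, `F`, `U` and the function `project` are assumptions made here, and relations are represented only by their names since the tuples play no role in the filtering.

```python
T, F, U = "T", "F", "U"

# Variable labels of Figure 3; {} stands for the empty label.
VAR_LABEL = {"PR": {}, "LR": {}, "P?": {}, "BC": {"p": F},
             "Q?": {"p": T}, "PC": {"p": T}, "S": {}}

# Labeled constraints of Figure 3 as (relation name, label) pairs.
CONSTRAINTS = [("R1", {}), ("R1", {"p": F}), ("R2", {"p": F}), ("R3", {"p": F}),
               ("R4", {"p": T}), ("R5", {"p": F}),
               ("R6", {"p": T, "q": T}), ("R6", {"p": T, "q": F})]

def satisfies(s, lab):
    return all(s[prop] == value for prop, value in lab.items())

def project(s):
    """Variables and constraint names that are relevant in scenario s."""
    variables = [v for v, lab in VAR_LABEL.items() if satisfies(s, lab)]
    # Constraints sharing a name are merged, mirroring the intersection of
    # (R1, empty label) and (R1, not p) described in the text.
    constraints = sorted({name for name, lab in CONSTRAINTS if satisfies(s, lab)})
    return variables, constraints

initial = project({"p": U, "q": U})
business = project({"p": F, "q": U})
```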

Definition 6. A schedule for a subset of variables $\mathcal{V}' \subseteq \mathcal{V}$ is a mapping $\psi : \mathcal{V}' \to \bigcup_{V \in \mathcal{V}'} D(V)$ assigning values to the variables. We write $\Psi$ to denote the set of all schedules.

Consider again Figure 3. $\psi(PR) = c$ means that PR is assigned $c$ (i.e., Charlie processes the loan request). At any time, the domain of $\psi$ coincides with the set $\mathcal{V}_s$ of variables arising from the projection of the CNCU onto $s$ (when $s$ is initial, the domain of $\psi$ consists of all unlabeled variables). However, a schedule is nothing but a fixed plan for executing a set of variables (not even saying in which order). The interesting part is how we generate it. To do so, we need a strategy. Let $\Delta(\mathcal{V}')$ be the set of all orderings for a subset $\mathcal{V}' \subseteq \mathcal{V}$, and $\Delta^* = \bigcup_i \{\Delta(\mathcal{V}_i)\}$ for any $\mathcal{V}_i \in 2^{\mathcal{V}}$ be the ordering universe.

Definition 7. An execution strategy for a CNCU $Z$ is a mapping $\sigma : \mathcal{S} \to \Psi \times \Delta^*$ from scenarios to schedules and orderings such that the domain of the resulting schedule $\psi \in \Psi$ consists of all variables $V$ belonging to the projection $Z_s = \langle \mathcal{V}_s, \mathcal{D}, \mathcal{C}_s \rangle$ and $d \in \Delta^*$ is an ordering for $\mathcal{V}_s$. If $(\psi, d) = \sigma(s)$ also specifies a consistent assignment with $d$ meeting the restriction $\prec$ of the initial CNCU, then $\sigma$ is said to be viable. We write $val(\sigma, s, V) = v$ to denote the value $v$ assigned to $V$ by $\sigma$ in $s$, and $ord(\sigma, s)$ to denote the ordering $d$ assigned by $\sigma$ in the scenario $s$ to the variables in $\mathcal{V}_s$.

The first kind of controllability is weak controllability which ensures that each projection is consistent.

Definition 8. A CNCU is weakly controllable (WC) if for each complete scenario $s \in \mathcal{S}$ there exists a viable execution strategy $\sigma(s)$.

Dealing with such a controllability is quite complex as it always requires one to predict how all uncontrollable parts will behave before starting the execution. This leads us to consider the opposite case in which we want to synthesize a strategy working for all possible scenarios. Thus, the second kind of controllability is strong controllability.


Definition 9. A CNCU is strongly controllable (SC) if there exists a viable execution strategy $\sigma$ such that for any pair of honest scenarios $s_1, s_2$ and any variable $V$, if $V \in \mathcal{V}_{s_1} \cap \mathcal{V}_{s_2}$, then $val(\sigma, s_1, V) = val(\sigma, s_2, V)$ and $ord(\sigma, s_1) = ord(\sigma, s_2)$.

Strong controllability is, however, “too strong”. If a CNCU is not strongly controllable, it could still be executable by refining the schedule in real time depending on how $s$ evolves. To achieve this purpose, we introduce dynamic controllability. Since the truth values of propositions are revealed incrementally, we first introduce the formal definition of history that we then use to define dynamic controllability.

Definition 10. The history $\mathcal{H}(V, s)$ of a variable $V$ in the scenario $s$ is the set of all observations made before $V$ executes.

Consider the projection of Figure 3 with respect to $s(p) = T$ and $s(q) = U$ and the ordering $d$ = PR $\prec$ LR $\prec$ P? $\prec$ PC $\prec$ Q? $\prec$ S. We have that $\mathcal{H}(PC, s) = \emptyset$ before P? executes and $\mathcal{H}(PC, s) = \{p\}$ after.
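A history can be computed by walking an ordering up to the variable of interest and collecting what each observation variable has revealed. The Python sketch below is one possible reading of Definition 10, evaluated at the moment the variable executes; the function `history`, the `observes` map and the encoding of an observation as a (proposition, value) pair are assumptions made here, and the two scenarios are the complete ones with $p$ true.

```python
def history(variable, ordering, scenario, observes):
    """Observations made before `variable` executes, as (proposition, value) pairs."""
    seen = set()
    for v in ordering:
        if v == variable:
            break
        if v in observes:
            prop = observes[v]
            seen.add((prop, scenario[prop]))
    return seen

# Ordering used in the example above and the observation variables of Figure 3.
d = ["PR", "LR", "P?", "PC", "Q?", "S"]
observes = {"P?": "p", "Q?": "q"}

s1 = {"p": True, "q": True}
s2 = {"p": True, "q": False}
```

With this reading, PC has the same history in `s1` and `s2` (only $p$ has been observed), so a dynamic strategy must assign PC the same value in both, whereas the histories of S differ because Q? has already executed.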

Definition 11. A CNCU is dynamically controllable (DC) if there exists a viable execution strategy $\sigma$ such that for any pair of scenarios $s_1, s_2$ and any variable $V \in \mathcal{V}_{s_1} \cap \mathcal{V}_{s_2}$, if $\mathcal{H}(V, s_1) = \mathcal{H}(V, s_2)$, then $val(\sigma, s_1, V) = val(\sigma, s_2, V)$ and $ord(\sigma, s_1) = ord(\sigma, s_2)$.

Abusing grammar, we use WC, SC and DC as

both nouns and adjectives (the use will be clear from

the context). As for temporal networks (Morris et al.,

2001), it is easy to see that SC ⇒ DC ⇒ WC.

6 CONTROLLABILITY CHECKING ALGORITHMS

In this section, we provide the algorithms to check the three kinds of controllability introduced in Section 5. Since we are going to exploit directional consistency, we first need to address how to get a suitable total order for the variables meeting the restrictions specified by ≺. We will always classify as uncontrollable those CNCUs for which no total order exists.

Given a CNCU, to get a possible total order coherent with ≺, we build a directed graph $G$ where the set of nodes is $\mathcal{V}$ and the set of edges is such that there exists a directed edge $V_1 \to V_2$ in $G$ for any $(V_1, V_2) \in \prec$. We refer to this graph as $G = \langle \mathcal{V}, \prec \rangle$. For example, in Figure 3, $G$ is the graph that remains after removing all labels and constraint edges.

From graph theory, we know that an ordering of the vertices of a directed acyclic graph (DAG) meeting a given restriction ≺ can be found in polynomial time by running the TOPOLOGICALSORT algorithm

Algorithm 2: WC-CHECKING($Z$).
Input: A CNCU $Z = \langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$
Output: A set of solutions, each one having the form $\langle s, d, Buckets \rangle$, where $s$ is a complete scenario, $d$ an ordering for $\mathcal{V}_s$ and $Buckets$ is a set of buckets (one for each variable in $\mathcal{V}_s$) if $Z$ is WC, uncontrollable otherwise.
 1  $Solutions \leftarrow \emptyset$
 2  $HonestLabels \leftarrow$ COMPLETESCENARIOS($Z$)
 3  foreach $\ell \in HonestLabels$ do    // For each honest scenario
 4      Let $s$ be the scenario corresponding to $\ell$
 5      Let $Z_s$ be the projection of $Z$ onto $s$
 6      $d \leftarrow$ TOPOLOGICALSORT($G$)    // $G \leftarrow \langle \mathcal{V}_s, \prec_s \rangle$
 7      if no order is possible then
 8          return uncontrollable
 9      $Buckets \leftarrow$ ADC($Z_s$, $d$)
10      if $Z_s$ is inconsistent then
11          return uncontrollable
12      $Solutions \leftarrow Solutions \cup \{\langle s, d, Buckets \rangle\}$
13  return $Solutions$

Algorithm 3: CCCLOSURE($Labels$).
Input: A set of labels $Labels$
Output: The closure of all possible consistent conjunctions
1  $Closure \leftarrow Labels$
2  do
3      Pick two labels $\ell_1$ and $\ell_2$ from $Closure$
4      if $\ell_1 \wedge \ell_2$ is consistent and $\ell_1 \wedge \ell_2 \notin Closure$ then
5          $Closure \leftarrow Closure \cup \{\ell_1 \wedge \ell_2\}$
6  while any addition is possible
7  return $Closure$

on G. At every step, TOPOLOGICALSORT chooses a vertex V without any predecessor (i.e., one without incoming edges), outputs V and removes V and all directed edges from V to any other vertex (equivalently, removes every $(V, V_2) \in \prec$). Then, TOPOLOGICALSORT recursively applies to the reduced graph until the set of vertices becomes empty. If no total order exists, TOPOLOGICALSORT gets stuck in some iteration because of a cycle $V_1 \to \dots \to V_1$, which makes it impossible to find a vertex without any predecessor.
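The procedure just described can be sketched in Python as follows. This is only an illustrative sketch, not the implementation used in ZETA: the function name `topological_sort`, the representation of ≺ as a list of pairs, and the four variables A to D are assumptions made for the example. It repeatedly outputs a vertex with no remaining predecessor and reports a cycle by returning `None`.

```python
from collections import deque


def topological_sort(variables, prec):
    """Return one total order of `variables` coherent with `prec`, or None on a cycle.

    `prec` is an iterable of pairs (v1, v2) meaning "v1 must come before v2".
    """
    indegree = {v: 0 for v in variables}
    successors = {v: [] for v in variables}
    for v1, v2 in prec:
        successors[v1].append(v2)
        indegree[v2] += 1

    # Vertices without predecessors can be output immediately.
    ready = deque(v for v in variables if indegree[v] == 0)
    order = []
    while ready:
        v = ready.popleft()
        order.append(v)
        # Removing v also removes its outgoing edges.
        for w in successors[v]:
            indegree[w] -= 1
            if indegree[w] == 0:
                ready.append(w)

    # If some vertex was never output, a cycle blocked the procedure.
    return order if len(order) == len(variables) else None


# Hypothetical network: the names A..D are illustrative only.
order = topological_sort(["A", "B", "C", "D"],
                         [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D")])
cyclic = topological_sort(["A", "B"], [("A", "B"), ("B", "A")])
```

Returning `None` corresponds to the case in which the CNCU is classified as uncontrollable because no total order exists.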

6.1 WC-checking

The idea behind the weak controllability checking (WC-checking) is quite simple: every projection must have a solution. Given a CNCU $Z = \langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$, we run the classic ADC on each projection $Z_s$ according to a complete scenario $s$. Since each $Z_s$ is a classic CN, any ordering (meeting the relevant part of ≺ for $Z_s$) will be fine. We get one by running TOPOLOGICALSORT on $G_s = \langle \mathcal{V}_s, \prec_s \rangle$, where $\prec_s = \{(V_1, V_2) \mid (V_1, V_2) \in \prec \wedge V_1, V_2 \in \mathcal{V}_s\}$ (this is the relevant part of ≺). Since


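Algorithm 4: COMPLETESCENARIOS($Z$)
Input: A CNCU $Z = \langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$
Output: The set of all complete scenarios, where each $s$ is represented as the corresponding $\ell_s$.
 1  $HonestLabels \leftarrow \{\boxdot\}$
 2  for $P? \in \mathcal{OV}$ do
 3      $HonestLabels \leftarrow HonestLabels \cup \{L(P?) \wedge p\} \cup \{L(P?) \wedge \neg p\}$
 4  $HonestLabels \leftarrow$ CCCLOSURE($HonestLabels$)
 5  do
 6      Pick two labels $\ell_1$ and $\ell_2$ from $HonestLabels$
 7      if $\ell_1 \neq \ell_2$ and $\ell_1 \Rightarrow \ell_2$ then
 8          $HonestLabels \leftarrow HonestLabels \setminus \{\ell_2\}$
 9  while any removal is possible
10  return $HonestLabels$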

there is no difference between honest labels and honest scenarios, we conveniently work with labels.

WC-CHECKING (Algorithm 2) starts by computing all complete scenarios (line 2). In a nutshell, it computes the longest consistent conjunctions arising from an initial set of labels containing (i) the empty label $\boxdot$, and (ii) for each observation variable P?, the pair of labels $L(P?) \wedge p$ (i.e., $L(P?)$ augmented with the positive literal $p$ associated to P?), and $L(P?) \wedge \neg p$ (the other case) (Algorithm 4, lines 1-3). In this way, we consider all possible ways to extend an honest (but not necessarily complete) scenario. After that, Algorithm 4 computes the closure of all consistent conjunctions of labels drawn from this set (Algorithm 4, line 4, and in more detail Algorithm 3), and finally rules out from the computed set of labels those entailed by some other different label in the same set. This is equivalent to saying that Algorithm 4 rules out all partial scenarios (last loop). In this way, we keep the longest conjunctions corresponding to all complete scenarios. Note that the conjunction of two honest and consistent labels is an honest and consistent label corresponding to a partial or a complete scenario.

The CNCU in Figure 3 is WC: the complete scenarios (written as labels) are ¬p, p ∧ q and p ∧ ¬q.
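The computation of complete scenarios can be sketched in Python under a few stated assumptions. Labels are modelled as frozensets of (proposition, truth value) literals, so that conjunction is set union and $\ell_1 \Rightarrow \ell_2$ holds when $\ell_2 \subseteq \ell_1$. The function names and the dictionary `obs_labels` are hypothetical. The example assumes that P? carries the empty label and Q? carries the label p, which is what the three complete scenarios listed above suggest. This is a sketch of Algorithms 3 and 4, not the code of ZETA.

```python
from itertools import combinations


def consistent(label):
    """A label is consistent if no proposition occurs with both truth values."""
    props = [p for p, _ in label]
    return len(props) == len(set(props))


def cc_closure(labels):
    """Closure of a set of labels under consistent conjunction (cf. Algorithm 3)."""
    closure = set(labels)
    changed = True
    while changed:
        changed = False
        for l1, l2 in combinations(list(closure), 2):
            conj = l1 | l2
            if consistent(conj) and conj not in closure:
                closure.add(conj)
                changed = True
    return closure


def complete_scenarios(obs_labels):
    """`obs_labels` maps each proposition p to L(P?), the label of its observation variable."""
    honest = {frozenset()}  # start from the empty label
    for p, label in obs_labels.items():
        honest.add(label | {(p, True)})
        honest.add(label | {(p, False)})
    honest = cc_closure(honest)
    # Drop every label that is entailed by a different (longer) label.
    return {l for l in honest if not any(l < other for other in honest)}


# Assumed labels for the example: L(P?) is empty, L(Q?) = p.
scenarios = complete_scenarios({"p": frozenset(), "q": frozenset({("p", True)})})
```

With these assumptions, `scenarios` contains exactly the three labels ¬p, p ∧ q and p ∧ ¬q.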

For each complete scenario $s$, we synthesize a strategy σ by generating a solution for the projection $Z_s$ following the ordering d computed initially (Algorithm 2, line 6). Although Definition 8 says that one strategy is enough, our approach is able to handle all possible strategies for each scenario s, as during the solution-generation process the value assignments do not depend on any uncontrollable part.

As an example, consider Figure 3, the scenario $s_1 = \neg p$ and the ordering d = PR ≺ P? ≺ LR ≺ BC ≺ S for $Z_{\neg p}$. A possible strategy synthesized from the buckets of $Z_{\neg p}$ along d and satisfying all constraints in Figure 4 is val(σ, ¬p, PR) = a, val(σ, ¬p, P?) = wf,

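Algorithm 5: SC-CHECKING($Z$)
Input: A CNCU $Z = \langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$
Output: A tuple $\langle d, Buckets \rangle$, where $d$ is a total ordering for $\mathcal{V}$ and $Buckets$ is a set of buckets (one for each variable) if $Z$ is SC, uncontrollable otherwise.
1  Compute a CN $Z^* \leftarrow \langle \mathcal{V}, D, \mathcal{C}^* \rangle$ where $\mathcal{C}^* \leftarrow \{R_S \mid (R_S, \ell) \in \mathcal{C}\}$
2  $d \leftarrow$ TOPOLOGICALSORT($G$)    // $G \leftarrow \langle \mathcal{V}, \prec \rangle$
3  if no order is possible then
4      return uncontrollable
5  return ADC($Z^*$, $d$)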

val(σ, ¬p, LR) = c, val(σ, ¬p, BC) = d and val(σ, ¬p, S) = b. That is, whenever we can predict that the workflow in Figure 2 is going through a business loan, Alice processes the request, Charlie logs it, David prepares the business contract and Bob does the signing. The relevant part of the complexity of WC-CHECKING is $2^{|\mathcal{P}|} \times Complexity(\text{ADC})$, as the worst case is a CNCU specifying $2^{|\mathcal{P}|}$ complete scenarios (all other sub-algorithms run in polynomial time).

6.2 SC-checking

The strong controllability checking (SC-checking) does not need to unfold all honest scenarios at all. From an algorithmic point of view it is even easier to understand: a single solution must work for all projections. To achieve this purpose, we start with a simple operation: we wipe out all the labels in the CNCU. Then, we run ADC on this “super-projection” by choosing the ordering obtained by TOPOLOGICALSORT run on the related G (Algorithm 5).

The CNCU in Figure 3 is not SC. Although a total order exists once we have wiped out all the labels (d = PR ≺ P? ≺ PC ≺ Q? ≺ LR ≺ BC ≺ S), there is no way to find a consistent assignment to S that always works for the initial CNCU. It is not difficult to see that the problem lies in the constraints of the original CNCU shown in Figure 4. In the first phase, when ADC fills the buckets, each original constraint $(R_S, \ell)$ is deprived of its label (becoming $(R_S, \boxdot)$) and added to the bucket of the latest variable in $S$. Consider the original $(R_6, p \wedge q)$ and $(R_6, p \wedge \neg q)$ (Figure 4). ADC transforms them into (two) unlabeled constraints $(R_6, \boxdot)$ and then adds both to Bucket(S). Since the labels of the two relations are the same, Bucket(S) actually contains the intersection of the two (as both must hold). However, $(\{(a,b)\}, \boxdot) \cap (\{(b,b)\}, \boxdot) = (\emptyset, \boxdot)$.

In other words, in the workflow in Figure 2, Bob

always does the signing. The problem is that the user

who prepares the personal contract must be different

according to which truth value rnd? will be assigned.

If the system calls for a SoD (rnd? = T), then Alice


Algorithm 6: LABELEDADC($Z$, $d$).
Input: A CNCU $Z = \langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$ and an ordering $d = V_1 \prec \dots \prec V_n$
Output: A set $Buckets$ of buckets (one for each variable) if $Z$ is consistent along $d$, inconsistent otherwise.
 1  foreach $(R_S, \ell) \in \mathcal{C}$ do    // Partition constraints as follows
 2      Let $V$ be the latest variable in $S$ according to $d$
 3      Let $\ell_{Rem}$ be the conjunction of all literals $p$ or $\neg p$ in $\ell$ such that either $V = P?$ or $V \prec P?$ in $d$, where $P? = O(p)$
 4      Add $(R_S, \ell - \ell_{Rem})$ to $Bucket(V)$
 5  foreach $V$ in $d$ taken in reverse order do    // Process buckets
 6      $Closure \leftarrow$ CCCLOSURE($\{\ell \mid (R_S, \ell) \in Bucket(V)\}$)
 7      for $\ell_n \in Closure$ do    // new constraint's label
 8          $Entailed \leftarrow \{R_S \mid (R_S, \ell) \in Bucket(V) \wedge \ell_n \Rightarrow \ell\}$
 9          $S_n \leftarrow \bigcup_{R_S \in Entailed} S \setminus \{V\}$    // new constraint's scope
10          Compute $R_{tmp} \leftarrow \bowtie_{R_S \in Entailed} R_S$    // enforce k-consistency
11          if $R_{tmp} = \emptyset$ then
12              return uncontrollable
13          if $S_n \neq \emptyset$ then    // propagate the new constraint
14              $R_{S_n} \leftarrow \pi_{S_n}(R_{tmp})$    // Project onto the new scope
15              Let $V_n$ be the latest variable in $S_n$ according to $d$
16              Compute $\ell_{Rem}$ as before but w.r.t. $\ell_n$
17              Add $(R_{S_n}, \ell_n - \ell_{Rem})$ to $Bucket(V_n)$
18  $Buckets \leftarrow \{\{Bucket(V)\} \mid V \in \mathcal{V}\}$
19  return $Buckets$

prepares the contract, else Bob does it. However, the intersection of the users allowed to carry out this task according to rnd? is empty, which means that the user who prepares the contract for a personal loan cannot be decided before the execution starts. The complexity of SC-CHECKING coincides with that of ADC, as the sub-procedures that turn a CNCU unconditional and compute a total ordering run in polynomial time.
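The label-wiping step behind the failure of SC-checking on Figure 3 can be illustrated with a short Python sketch. It is a simplification under stated assumptions: constraints are triples (scope, relation, label), the function name `wipe_labels` is hypothetical, and only the first phase (dropping labels and intersecting relations that end up on the same scope) is shown, not the full ADC. The two tuples are the ones the text gives for $R_6$ over PC and S.

```python
def wipe_labels(constraints):
    """Drop every label and intersect relations that share the same scope.

    `constraints` is a list of (scope, relation, label) triples, where `scope`
    is a tuple of variable names and `relation` a set of value tuples.
    """
    merged = {}
    for scope, relation, _label in constraints:
        if scope in merged:
            merged[scope] &= set(relation)  # both unlabeled constraints must hold
        else:
            merged[scope] = set(relation)
    return merged


# The two labeled versions of R6 discussed in the text (scope: PC, S).
constraints = [
    (("PC", "S"), {("a", "b")}, "p ∧ q"),
    (("PC", "S"), {("b", "b")}, "p ∧ ¬q"),
]
unlabeled = wipe_labels(constraints)
empty_scopes = [scope for scope, rel in unlabeled.items() if not rel]
```

An empty relation in `unlabeled` means that no single assignment satisfies both versions of the constraint, which is why the network is classified as not SC.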

6.3 DC-checking

The dynamic controllability checking (DC-checking) addresses the most appealing type of controllability. If a CNCU is not SC, it could be DC by deciding which value to assign to which variable depending on how the uncontrollable part behaves. This subsection discusses this algorithm. We start with LABELEDADC (Algorithm 6), a main subalgorithm we make use of, which extends ADC to address the conditional part by refining the adding or tightening of constraints to the buckets and the constraint propagation.

When we add a constraint $(R_S, \ell)$ to a Bucket(V), we lighten $\ell$ by removing all literals $p$ or $\neg p$ in $\ell$ that will still be unknown by the time V executes. That is, those whose related observation variables are either V itself or will be assigned after V according to d.
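The lightening of a label can be sketched as follows. The sketch assumes that labels are frozensets of (proposition, truth value) literals and that `observer` maps each proposition p to its observation variable O(p); the function name `lighten` and the four-variable ordering are illustrative choices, not part of the paper.

```python
def lighten(label, var, order, observer):
    """Remove from `label` every literal still unknown when `var` executes.

    A literal on p is kept only if its observation variable O(p) comes
    strictly before `var` in `order`.
    """
    position = {v: i for i, v in enumerate(order)}
    return frozenset(
        (p, value) for (p, value) in label
        if position[observer[p]] < position[var]
    )


# Illustrative ordering in which PC is executed before Q?.
order = ["P?", "PC", "Q?", "S"]
observer = {"p": "P?", "q": "Q?"}
label = frozenset({("p", True), ("q", True)})  # the label p ∧ q

label_for_pc = lighten(label, "PC", order, observer)
label_for_s = lighten(label, "S", order, observer)
```

Here `label_for_pc` keeps only p, because q is observed after PC, whereas `label_for_s` keeps the whole label, because both P? and Q? precede S.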

When propagating constraints, LABELEDADC enforces the adequate level of k-consistency for all combinations of relevant honest (partial) scenarios arising from the conjunctions of all labels related to the constraints in the buckets. That is, for each V, it runs CCCLOSURE on the set $Closure = \{\ell \mid (R_S, \ell) \in Bucket(V)\}$. After that, it generates a new constraint $(R_{S_n}, \ell_n)$ for each $\ell_n \in Closure$, where $S_n$ is the union of the scopes of the constraints in Bucket(V) (whose labels are entailed by $\ell_n$) deprived of V. $R_{S_n}$ contains all tuples surviving the join of the entailed constraints projected onto $S_n$ (as in the classic ADC). If no empty relation is computed, then the new constraint is added to the bucket of the latest variable in $S_n$ (if any). If $S_n = \emptyset$, then it means that the algorithm computed an (implicit) unary constraint for V.

Finally, LABELEDADC returns the set of buckets

from which any solution can be built according to d.

However, given an ordering d, if LABELEDADC “says no”, it could be a matter of wrong ordering. Consider PC, Q? and S in Figure 3, and assume that those three variables are ordered as PC ≺ Q? ≺ S. Further, consider Figure 4(g) and Figure 4(h), and suppose that PC = a. When Q? is executed (Q? = wf), the truth value of q becomes known (recall that in this partial scenario s(p) = T). If s(q) = T, then S = b and $(a, b) \in (R_6, p \wedge q)$ (Figure 4(g)), but if s(q) = F, then S = b and $(a, b) \notin (R_6, p \wedge \neg q)$ (Figure 4(h)).

More simply, if Alice executes PContract and afterwards rnd? = F, then no valid user remains for Sign as $SP_6$ calls for a BoD between the two tasks (Figure 1). If Bob executes PContract and afterwards rnd? = T, then the problem is the same (so there is no user who can be assigned conservatively to PContract without any information on rnd?). Fortunately, PContract and this split connector are unordered (no precedence is specified between the two components). This situation allows us to act in a more clever way: what if we executed PContract after observing the truth value of rnd?? In such a case, our strategy would be: if rnd? = T, then Alice, else Bob.

Formally, DC-CHECKING (Algorithm 7) works by looking for an ordering d coherent with ≺ such that LABELEDADC “says yes” when analyzing Z along d. If no ordering works, then the network is uncontrollable. The algorithm refines a recursive backtracking extension of all topological sorts to run LABELEDADC for every possible ordering meeting ≺. This makes DC-CHECKING incremental.
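The backtracking search over all topological sorts can be sketched in Python as follows. This is a sketch under assumptions, not the code of ZETA: the function name `first_working_order` is hypothetical, the precedence pairs in the example are invented for illustration, and the callback `works` merely stands in for a call to LABELEDADC (here it accepts an ordering only if Q? is executed before PC, mirroring the discussion above).

```python
def first_working_order(variables, prec, works):
    """Backtrack over all total orders coherent with `prec`.

    Returns the first complete order accepted by `works`, or None if no
    order coherent with `prec` is accepted.
    """
    remaining = set(variables)
    order = []

    def extend():
        if not remaining:
            return works(list(order))  # try the current complete order
        # Candidates: remaining variables with no remaining predecessor.
        candidates = [
            v for v in variables
            if v in remaining
            and not any(a in remaining and b == v for a, b in prec)
        ]
        for v in candidates:
            order.append(v)
            remaining.remove(v)
            if extend():
                return True
            remaining.add(v)  # backtracking
            order.pop()       # backtracking
        return False

    return list(order) if extend() else None


# Hypothetical precedence relation over four of the variables of Figure 3.
variables = ["P?", "PC", "Q?", "S"]
prec = [("P?", "PC"), ("P?", "Q?"), ("PC", "S"), ("Q?", "S")]

# Stand-in for LABELEDADC: accept only orderings that observe q before PC.
found = first_working_order(variables, prec, lambda d: d.index("Q?") < d.index("PC"))
```

In this example the search first tries P?, PC, Q?, S, rejects it, backtracks, and then returns P?, Q?, PC, S. The search stops at the first accepted ordering, so orderings are only enumerated as far as needed.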

For example, the CNCU in Figure 3 is DC along the ordering $d_1$ = PR ≺ LR ≺ P? ≺ Q? ≺ BC ≺ PC ≺ S and uncontrollable along $d_2$ = PR ≺ LR ≺ P? ≺ BC ≺ PC ≺ Q? ≺ S (as PC is assigned before Q?).

We execute a CNCU proved to be DC as follows. Let $\ell_s$ be the label corresponding to the current scenario. Initially $\ell_s = \boxdot$. For each variable V along the


Algorithm 7: DC-CHECKING($Z$).
Input: A CNCU $Z = \langle \mathcal{V}, \mathcal{D}, D, \mathcal{OV}, \mathcal{P}, O, L, \prec, \mathcal{C} \rangle$
Output: A tuple $\langle d, Buckets \rangle$, where $d$ is an ordering for $\mathcal{V}$ and $Buckets$ is a set of buckets (one for each variable) if $Z$ is DC along $d$, uncontrollable otherwise.
 1  $S \leftarrow \mathcal{V}$    // global variable
 2  $Buckets \leftarrow \emptyset$    // global variable
 3  Let $d$ be an empty list for the ordering    // global variable
 4  if ALLTOPSORTDC($S$) = true then
 5      return $\langle d, Buckets \rangle$    // dynamically controllable
 6  return uncontrollable
 7  Procedure ALLTOPSORTDC($S$)    // $S$ is the current set of variables
 8      if $S = \emptyset$ then    // try the current order
 9          $Buckets \leftarrow$ LABELEDADC($Z$, $d$)
10          if $Z$ is consistent then
11              return true    // stop here
12      else
13          Let $\Pi$ be the set of variables without predecessors in $\prec$
14          for $V \in \Pi$ do
15              Add $V$ to the order $d$ as the last element
16              $S \leftarrow S \setminus \{V\}$
17              if ALLTOPSORTDC($S$) = true then
18                  return true
19              Remove the last element of $d$    // backtracking
20              $S \leftarrow S \cup \{V\}$    // backtracking
21      return false    // the ordering $d$ does not work

ordering d, if V is relevant for $\ell_s$, then we look for a value v in the domain of V satisfying all relevant constraints in Bucket(V). If V is irrelevant (as $\ell_s$ falsifies L(V)), then we ignore V and go ahead with the next variable (if any). Moreover, if V is an observation variable, where p is the associated proposition, then $\ell_s$ extends to $\ell_s \wedge p$ iff p is assigned T, and to $\ell_s \wedge \neg p$ otherwise. In this way, a partial scenario extends to a complete one, one observation variable at a time.
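The execution loop can be sketched in Python under several assumptions that are not part of the paper: the function `execute`, the callbacks `choose` and `observe`, and the variable labels in `var_label` are all hypothetical. In particular, `choose` hard-codes one strategy for the running example instead of reading values from the buckets, and a variable is treated as irrelevant whenever some literal of its label is not satisfied by the scenario observed so far.

```python
def execute(order, var_label, obs_prop, choose, observe):
    """Execute variables along `order`, extending the scenario at each observation."""
    scenario = {}    # current partial scenario: proposition -> observed truth value
    assignment = {}  # executed variable -> assigned value
    for v in order:
        # Skip V when some literal of L(V) is not satisfied by the scenario.
        if any(scenario.get(p) != value for p, value in var_label[v]):
            continue
        assignment[v] = choose(v, scenario)
        if v in obs_prop:  # observation variable: its proposition is revealed
            scenario[obs_prop[v]] = observe(obs_prop[v])
    return assignment, scenario


# Running example along d1; the labels below are assumptions for illustration.
order = ["PR", "LR", "P?", "Q?", "BC", "PC", "S"]
var_label = {
    "PR": frozenset(), "LR": frozenset(), "P?": frozenset(), "S": frozenset(),
    "Q?": frozenset({("p", True)}), "PC": frozenset({("p", True)}),
    "BC": frozenset({("p", False)}),
}
obs_prop = {"P?": "p", "Q?": "q"}


def choose(v, scenario):
    """Hard-coded strategy: a = Alice, b = Bob, c = Charlie, d = David, wf = engine."""
    if v == "PC":
        return "a" if scenario["q"] else "b"
    return {"PR": "c", "LR": "a", "P?": "wf", "Q?": "wf", "BC": "d", "S": "b"}[v]


truth = {"p": True, "q": False}
assignment, scenario = execute(order, var_label, obs_prop, choose, truth.get)
```

With p true and q false, BC is skipped and PC is assigned to Bob only after q has been observed.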

A strategy to execute the CNCU in Figure 3 is a strategy for the workflow in Figure 2: Charlie executes ProcReq, Alice LogReq and the workflow engine executes the first conditional split connector (always). If pers? = F, then David executes BContract. If pers? = T, then the workflow engine executes the second split connector to have full information on rnd?. If rnd? = T, then Alice executes PContract, else Bob. Bob executes Sign (always).

The complexity of DC-CHECKING is $|\mathcal{V}|! \times Complexity(\text{LABELEDADC})$, as in the worst case there are $|\mathcal{V}|!$ orderings. We leave the investigation of the complexity of LABELEDADC as future work.

7 ZETA: A TOOL FOR CNCUS

We have developed ZETA, a tool for CNCUs that takes as input a specification of a CNCU and acts both as a solver for WC, SC and DC and as an executor. ZETA is available at http://regis.di.univr.it/ICAART2018.tar.bz2 along with the set of benchmarks.

Given a CNCU specification file network.cncu,

WC is checked by running java -jar zeta.jar

network.cncu --WCchecking network.ob (we

use --SCchecking and --DCchecking for SC and

DC). If the CNCU is proved controllable, ZETA saves

to file the order and buckets needed to later generate

any solution (for WC, ZETA does so for any complete

scenario). A controllable CNCU is executed by

running java -jar zeta.jar network.cncu

--execute network.ob [N], where [N] (default 1)

is the number of simulations we want to carry out.

For WC, ZETA executes the CNCU with respect to

each complete scenario, whereas for SC and DC, it

executes the CNCU generating a random scenario

(that is why ZETA allows for multiple simulations).

We ran ZETA on the CNCU in Figure 3. We used a FreeBSD virtual machine run on top of a VMWare ESXi Hypervisor using a physical machine equipped with an Intel i7 2.80GHz and 20GB of RAM. The VM was assigned 16GB of RAM and full CPU power. ZETA proved in about 200 milliseconds that the CNCU in Figure 3 is WC (saving an ob-file of 12Kb), is not SC but is DC (saving an ob-file of 8Kb). For WC and DC, the CNCU was correctly executed.

We implemented ZETA also in order to be able to carry out an automated and extensive experimental evaluation to compare the performance of the algorithms checking WC, SC and DC. We summarize our findings in the following.

We randomly generated an initial set of benchmarks of 10000 well-defined CNCUs. Each CNCU has 5 to 15 variables (of which minimum 1 and maximum 10 are observation variables), and 1 to 5 domains, where each domain is filled by sampling from the same initial random set of elements (10 to 30). Each CNCU is such that: each proposition labels some component, each domain is associated to at least one variable and a TOPOLOGICALSORT coherent with ≺ is possible. The number of constraints and tuples contained in them were generated to avoid underconstrained and overconstrained networks.

We ran WC, SC and DC-checking on this set imposing a timeout of 300 seconds for each CNCU. Figure 5 shows the results, where the x-axis represents the number of analyzed instances, and the y-axis the overall time elapsed. ZETA first carried out the analysis for WC on the whole set of benchmarks, proving that 3806 CNCUs were WC, 6189 were not WC and 5 hit the timeout. Then, it ran the analysis for SC, proving that 3270 CNCUs were SC, 6730 were not SC and 0 hit the timeout. Finally, it ran the analysis for DC, proving that 3627 CNCUs were DC, 3034 were not DC and 3339 hit the timeout. We then confirmed, considering the CNCUs for which ZETA terminated


Figure 5: The race for controllability checking. SC (green, below) got 1st place as SC tests unconditional CNs. WC (blue, middle) got 2nd place as it tries all complete scenarios but not with respect to all possible orderings. DC (red, above) got 3rd place as it also looks for a suitable ordering.

within the timeout, that SC ⇒ DC ⇒ WC. Furthermore, 538 CNCUs were proved WC but not SC, 61 WC but not DC, and 357 DC but not SC. Finally, we executed each controllable CNCU 1000 times, shuffling the domains of the variables at every execution in order to get different solutions (if any). CNCUs proved WC were executed 1000 times with respect to each complete scenario. No execution crashed.

8 CORRECTNESS

Definition 12. A controllability algorithm is sound if, whenever it classifies a CNCU as uncontrollable, the CNCU is really uncontrollable (⇒), and it is complete if, whenever a CNCU is uncontrollable, the algorithm classifies it as uncontrollable (⇐).

WC-CHECKING runs ADC on each projection $Z_s$ corresponding to a complete scenario s. If ADC computes an empty relation, the CNCU is uncontrollable as there is no way to satisfy the constraints if s happens. Thus, WC-CHECKING is sound. WC-CHECKING is also complete because it does so for all complete scenarios, guaranteeing that if all projections are consistent, then there exists a (possibly different) strategy for each complete scenario.

SC-CHECKING first wipes out the conditional part of the original CNCU, obtaining a super-projection whose set of constraints corresponds to the intersection of all sets of constraints (even mutually inconsistent ones) related to all possible projections. Then it runs ADC on the resulting network. If ADC computes an empty relation, then it means that there is no way to decide some variable assignment before starting. Thus, SC-CHECKING is sound and complete as it boils down to the classic ADC.

Note that both WC-CHECKING and SC-CHECKING carry out the analysis on (possibly many) unconditional CNs. We point out that the chosen ordering according to ≺ given in input to ADC never breaches soundness and completeness of ADC but might only affect its complexity (Dechter, 2003).

LABELEDADC extends ADC to accommodate the propagation of labeled constraints. When it adds a constraint to the bucket of a variable V, it lightens the label of the constraint by removing all literals whose truth value will still be unknown by the time V executes. This is because the observation variables associated to the propositions embedded in those literals will be executed after V or coincide with V itself. For this reason, we must be conservative and consider the constraint as if it just held, since we are unable to predict “what is going to be”. LABELEDADC propagates the constraints enforcing the adequate level of k-consistency for all possible combinations of honest (partial) scenarios arising from the labels of the constraints in a bucket. If LABELEDADC detects an inconsistency, it means that there exists a (partial) scenario for which the value assignments to the variables of the CNCU (along the ordering in input) will violate some constraint. Thus, DC-CHECKING is sound as it runs LABELEDADC for all possible orderings. We believe that DC-CHECKING is also complete but leave a formal proof for future work.

9 RELATED WORK

CNs (Dechter, 2003) do not address uncontrollable

parts and are thus incomparable with CNCUs.

A Mixed CSP (Fargier et al., 1996) partitions the set of variables into controllable and uncontrollable. Fargier et al. provide a consistency algorithm assuming full observability of the uncontrollable part. CNCUs do not have this restriction.

DCSPs (Mittal and Falkenhainer, 1990) introduce activity constraints saying when variables are relevant depending on the values assigned to some other variables. No uncontrollable parts are specified.

Some probabilistic approaches (e.g., (Fargier and Lang, 1993)) attempted to find the most probable working solution to a CSP under probabilistic uncertainty. Instead, our work addresses exact algorithms.

In a Prioritized Fuzzy Constraint Satisfaction Problem (PFCSP) (e.g., (Luo et al., 2003)) a solution threshold states the overall satisfaction degree. CNCUs do not deal with satisfaction degrees yet.

STNs, CSTNs, STNUs, CSTNUs and CSTNUDs only model temporal plans and are unable to represent resources. ACTNs (Combi et al., 2017) extend CSTNUs to represent a dynamic user assignment that also depends on temporal aspects. CNCUs do not address temporal constraints for the good reason that directional consistency (CNs) allows for convergence when generating a solution only if a total ordering is followed. Most temporal networks do not have this restriction. ACTNs solve this problem by synthesizing memoryless execution strategies before starting.

WC, SC and DC are investigated for access-controlled workflows under conditional uncertainty in (Zavatteri et al., 2017). That work deals with structured workflows by unfolding workflow paths, considering binary constraints only (whose labels are the conjunction of the labels of the connected tasks) and assuming that a total order for the tasks is given in input. This work overcomes all these limitations.

10 CONCLUDING REMARKS

We introduced CNCUs to address a kind of CSP under conditional uncertainty. CNCUs implicitly embed classic CNs (if $\mathcal{OV} = \emptyset$ and $\prec = \emptyset$). We then defined and provided algorithms for WC, SC and DC. Currently, we only deal with CNCUs that are controllable with respect to a total ordering for the variables.

We discussed the correctness and complexity of our algorithms and provided ZETA, a tool for CNCUs that acts as a solver for WC, SC and DC as well as an execution simulator. We provided an extensive experimental evaluation against a set of benchmarks of 10000 CNCUs. SC is the easiest type of controllability to check, followed by WC and finally DC, which is currently the hardest one. DC is a matter of order (CNCUs not admitting any are uncontrollable). SC and DC provide usable strategies for executing workflows under conditional uncertainty. WC calls for predicting the future. However, WC is important because a CNCU proved not to be WC can be neither SC nor DC.

As future work, we plan to work on the phase of DC-CHECKING that enumerates all topological sorts in order to contain the explosion of this step. We also plan to investigate whether CNCUs classified as non-DC with respect to all possible total orderings might turn DC for some ordering that is refined dynamically during execution.

REFERENCES

Cimatti, A., Hunsberger, L., Micheli, A., Posenato, R., and Roveri, M. (2016). Dynamic controllability via timed game automata. Acta Inf., 53(6-8).

Cimatti, A., Micheli, A., and Roveri, M. (2015a). An SMT-based approach to weak controllability for disjunctive temporal problems with uncertainty. Artif. Intell., 224.

Cimatti, A., Micheli, A., and Roveri, M. (2015b). Solving strong controllability of temporal problems with uncertainty using SMT. Constraints, 20(1).

Combi, C., Posenato, R., Viganò, L., and Zavatteri, M. (2017). Access controlled temporal networks. In ICAART 2017. INSTICC, ScitePress.

Dechter, R. (2003). Constraint processing. Elsevier.

Dechter, R., Meiri, I., and Pearl, J. (1991). Temporal constraint networks. Artif. Intell., 49(1-3).

Dechter, R. and Pearl, J. (1987). Network-based heuristics for constraint-satisfaction problems. Artif. Intell., 34(1).

Fargier, H. and Lang, J. (1993). Uncertainty in constraint satisfaction problems: A probabilistic approach. In ECSQARU ’93. Springer.

Fargier, H., Lang, J., and Schiex, T. (1996). Mixed constraint satisfaction: A framework for decision problems under incomplete knowledge. In IAAI 96.

Freuder, E. C. (1982). A sufficient condition for backtrack-free search. J. ACM, 29.

Gottlob, G. (2012). On minimal constraint networks. Artif. Intell., 191-192.

Hunsberger, L., Posenato, R., and Combi, C. (2012). The Dynamic Controllability of Conditional STNs with Uncertainty. In PlanEx 2012.

Hunsberger, L., Posenato, R., and Combi, C. (2015). A sound-and-complete propagation-based algorithm for checking the dynamic consistency of conditional simple temporal networks. In TIME 2015.

Luo, X., Lee, J. H.-m., Leung, H.-f., and Jennings, N. R. (2003). Prioritised fuzzy constraint satisfaction problems: Axioms, instantiation and validation. Fuzzy Sets Syst., 136(2).

Mackworth, A. K. (1977). Consistency in networks of relations. Artif. Intell., 8(1).

Mittal, S. and Falkenhainer, B. (1990). Dynamic constraint satisfaction problems. In AAAI 90.

Montanari, U. (1974). Networks of constraints: Fundamental properties and applications to picture processing. Inf. Sci., 7.

Morris, P. H., Muscettola, N., and Vidal, T. (2001). Dynamic control of plans with temporal uncertainty. In IJCAI 2001.

Tsamardinos, I., Vidal, T., and Pollack, M. E. (2003). CTP: A new constraint-based formalism for conditional, temporal planning. Constraints, 8(4).

Zavatteri, M. (2017). Conditional simple temporal networks with uncertainty and decisions. In TIME 2017, LIPIcs.

Zavatteri, M., Combi, C., Posenato, R., and Viganò, L. (2017). Weak, strong and dynamic controllability of access-controlled workflows under conditional uncertainty. In BPM 2017.
