Analyzing Eye-gaze Interaction Modalities in Menu Navigation

R. Grosse¹,², D. Lenne², I. Thouvenin² and S. Aubry¹

¹ Safran E&D 102 av. de Paris, 91300, Massy, France

² UMR CNRS Heudiasyc, UTC, Sorbonne Universités, 60200, Compiègne, France

Keywords: HCI, Interaction Modalities, Eye-tracking, Multimodality, Graphical User Interface.

Abstract: While eye-gaze interaction for disabled people has proved to work well, its use in general cases is still far

from being established. In order to design a wearable interface for military products, several modalities using

the eye were tested. We proposed a new modality named Relocated DwellTime which aimed at giving more

control than existing modalities. We then designed an experimental observation task, representative of military use,

where 4 interaction modalities using the eye were tested (2 eye-only and 2 multimodal methods using

an external physical button). The experiment evaluated the effect of two types of menus, circular and linear,

on eye-gaze interaction performance. Significant results were observed regarding interaction modalities.

The modality adding a physical button proved significantly more efficient than eye-only methods in this

context and instant opening of menus was rather well accepted despite the hypothesis of the literature. No impact

of the menu type was observed.

1 INTRODUCTION

For decades the study of active interaction with

technological systems using the eye as a modality

has been undertaken. It started with disabled people

and showed that it was possible to use the eye to act

on systems. For a typical user, however, mouse-based

interaction tends to perform better than interaction

with an eye-tracker. Nevertheless, the analysis of the

literature indicates that, if well applied, eye-gaze

interaction could be more efficient in terms of

interaction performance and/or user experience.

First, there is a natural link between the eye path

and the front cognitive task of the user (Liebling and

Dumais, 2014). Indeed, the eye is often already

staring at where the interaction is taking place.

Second, the eye is the fastest organ of the human

body and the execution speed of an interaction is an

important component of interaction evaluation.

Third, contrary to other organs that can be fully

appropriated by the task, the eye is almost available

anytime. For example, the hands of a surgeon are not

available to interact with external systems while the

eye remains partly available.

On the other hand, some disadvantages have

already been exposed regarding eye-gaze

interactions. The eye is a sensory organ, but during

an eye-based interaction it is used as a motor organ,

which is not natural and requires some effort (Zhai,

et al., 1999). This also narrows the time during

which the eye may actually perceive. The current

inability to differentiate when the eye is used as a

sensor from when it is used as a motor organ implies

some execution mistakes. This concept was named

the MidasTouch problem (Jacob, 1993).

Furthermore, the eye presents some physiological

limits. The unstoppable micro-movements of the

eyeball limit the detection precision to about 1°, to

which the precision of the tracking-system must be

added. These problems lead us to study the

conception of specific interactions and interfaces for

the usage of the eye as a modality. This may be

applied in portable optronics, which appears to be a

context in which eye-gaze interaction could be more

efficient than classical interaction modalities.

Military infrared binoculars are an example of

technologies that integrate more and more

functionalities over the years and are used,

cognitively speaking, in a very demanding

environment. This represents an interesting context

to study and propose interaction modality

optimizations that decrease the user's cognitive

load. Indeed, the observation task does not only

consist in watching but also in annotating,

communicating, using different image processing

algorithms to reveal a camouflaged target, and so on.

Grosse, R., Lenne, D., Thouvenin, I. and Aubry, S.

Analyzing Eye-gaze Interaction Modalities in Menu Navigation.

DOI: 10.5220/0006538000170025

In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2018) - Volume 2: HUCAPP, pages

17-25

ISBN: 978-989-758-288-2

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


Because of the growing complexity of this task, the

current interaction means of binoculars (mainly

buttons and joysticks) may limit the usability of

future additional functions. As the eye is already

greatly solicited in these products, it is a short step to

using it as an interaction medium. Several

interaction modalities have already been proposed

but we aim to evaluate their adequacy to existing

interface mechanisms in order to allow cohabitation

of several modalities. We also keep in mind to ease

the transition between interaction modalities by

letting users use interfaces they already know.

The improvement of the eye-gaze interaction

should incorporate the conception of a graphical

interface adapted to the modality. The choice of an

interaction modality that fits the interface and the

user task needs to be considered too.

The task that is carried out using the binoculars

is the characterization of a point which consists in

locating a unit, most of the time on a map, and

providing information about it. The information

concerns the unit type, size, state or affiliation. The

APP-6A (Kourkolis, 1986) standard aims to do this

but the number of combinations of characteristics is

large. Each characteristic is thus chosen

independently, most of the time using multi-level

menus. This is a complex task.

Over the years, several interaction modalities

using the eye have emerged. Some modalities are based

on fixations such as the DwellTime method (Jacob,

1993) (Hansen, et al., 2003) (Lutteroth, et al., 2015),

Neovisus (Tall, 2008) or on smooth pursuit (Vidal,

et al., 2013). Others are gesture-based interactions of

which a wide picture is presented by Møllenbach

(Møllenbach, et al., 2013). External input mediums

are sometimes proposed to specify the user intention

of interaction such as a physical button (Kammerer,

et al., 2008), a brain-computer interface (Zander, et

al., 2010) or even frowning (Surakka, et al., 2004).

In practice, the context of use, the task to carry out

and the available media limit the possible

modalities.

With mouse or touch as the input modality,

circular menus such as pie menus have

already proven to be faster and to better fit the user

task than linear menus in several cases (Callahan, et

al., 1988) (Samp and Decker, 2010). For example,

the even placement of the items, all at the same

distance from the center of the circle, reduces the

distance to travel. Moreover, pie menus ease the

learning of an expert path. In marking menus, the

selection of an item can be seen as a gesture

summing the directions of the path during the novice

interaction (Kurtenbach, 1993). Some studies

suggest that circular menus are suited to gaze

interaction. Urbina (Urbina, et al., 2010) compares

two eye-gaze interaction modalities: the classic and

extensively studied DwellTime, which consists in staring at

an item for a set time, and a new modality

named “selection borders”, which is the equivalent of

marking menus. It consists in activating an item if

the gaze path crosses the circle in its direction. However,

this second modality proved harder to use.

Kammerer (Kammerer, et al., 2008) compares gaze

interactions in pull-down menus versus circular and

semi-circular menus and concludes on a better

usability of the circular and semi-circular menus.

As we aim to provide interactions which are not

linked to a particular type of menu in order to

incorporate it in systems with different graphical

interfaces, this paper proposes to study four eye-

based interaction modalities and whether they

perform differently in linear and circular menus.

2 METHOD

Our experiment aims to highlight the links between

the shapes of menus and the performances or

preferences of interaction modalities. The following

describes the experimental task and the results.

2.1 Participants

This experiment was conducted with 14 participants

aged 24 to 43. Five of them had already used the

system before for another short experiment that used

linear menus but was based on a different task. All

14 participants had either normal vision or

fully corrected vision and all of them

frequently used a computer, either at work

or during their free time. Nine of them were engineers

and all of them had very little knowledge of military

tasks.

2.2 Interaction Modalities Design

The usability of four interaction modalities is tested

in this experiment. These were selected because they

match the usability restrictions of binoculars and

match the experimental task. Below, we provide a

detailed description on how the four modalities were

designed. They mainly come from the literature and

were partly adapted for this experiment.

DwellTime (DT): this is the most studied

modality in the literature. It consists in fixating an

item with the eye to activate it (Hansen, et al., 2003).

HUCAPP 2018 - International Conference on Human Computer Interaction Theory and Applications


If the fixating time is too short, unwanted activations

might appear, and if it is too long the interaction

might feel tedious; the optimal time should therefore

be a compromise between these constraints. During the

fixating time, a visual feedback of the time already

spent fixating the item is provided to the user (cf.

Figure 2). A semi-transparent picture shaped as the

item it highlights appears at the center of the item as

soon as the user looks at it. It then linearly grows to

reach the item size at the selected dwell time. At this

time, the item is activated. The cumulated fixation

time on an item is reset only if the user looks away

for more than 0.4s. This choice was made for two

reasons: the first is that it allows the user to make a

“square wave jerk”, which is a fast uncontrolled

two-way trip on another location, without having to

start the interaction again (Leigh and Zee, 2015).

The second reason is to avoid stopping the

interaction if the eye is detected outside of an item

for a very short time because of the system precision

or because of the eye micro-movements. The dwell

time is individually set between 300ms and 1000ms

during the learning phase of each modality. It is a

compromise between the feeling of the user and the

expertise of the operator checking that there were

neither too many false positives (untimely

activations) nor too many anticipations of the

activation (when the user leaves the item just before

it activates). In practice, dwell times were set with a

mean of 625 ± 92ms.
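
The DwellTime logic described above can be sketched as follows. This is a minimal illustration and not the authors' implementation: the class `DwellSelector`, its fields and the sample-based `update` method are hypothetical names, and times are expressed in integer milliseconds to keep the arithmetic exact. Only the 0.4 s reset tolerance, the linear growth of the feedback and the per-user dwell time come from the text.

```python
from dataclasses import dataclass

RESET_AFTER_MS = 400  # look-away tolerance reported in the text (0.4 s)


@dataclass
class DwellSelector:
    """Accumulates fixation time on one item (hypothetical helper)."""
    dwell_time_ms: int          # per-user dwell time, set between 300 and 1000 ms
    accumulated_ms: int = 0     # time already spent fixating the item
    away_ms: int = 0            # duration of the current look-away

    def update(self, on_item: bool, dt_ms: int) -> bool:
        """Feed one gaze sample lasting dt_ms; return True when the item activates."""
        if on_item:
            self.away_ms = 0
            self.accumulated_ms += dt_ms
        else:
            self.away_ms += dt_ms
            # The accumulated time is dropped only after a long look-away,
            # so short excursions (e.g. square wave jerks) are tolerated.
            if self.away_ms > RESET_AFTER_MS:
                self.accumulated_ms = 0
        if self.accumulated_ms >= self.dwell_time_ms:
            self.accumulated_ms = 0
            return True
        return False

    def feedback_scale(self) -> float:
        """Relative size of the feedback shape, growing linearly from 0 to 1."""
        return min(self.accumulated_ms / self.dwell_time_ms, 1.0)
```

With a 600 ms dwell time, 300 ms of fixation followed by a 300 ms look-away and another 300 ms of fixation activates the item, whereas a 500 ms look-away restarts the count.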

Relocated DwellTime (RD): This modality

consists in a DwellTime on an always present target

located near the item to activate. So instead of

directly fixating an item to activate it, the user can

decide to activate it by fixating an external linked

target whose sole purpose is the interaction (cf.

Figure 2). This interaction modality is inspired by

the “selection borders” presented by Urbina (Urbina,

et al., 2010), which allows the user to activate an

item by gazing outside of an item of a circular menu.

It is also inspired by Tall’s work (Tall, 2008) which

proposes a modality where the user has to gaze at a

target outside of the item which dynamically appears

when the item is gazed at. This modality allows the

user to analyze the menu for as much time as he/she

needs without risking any unwanted activation. The

cost of this improvement is a more complex gaze

path, though we think an expert path is possible

where the user may directly look at the targets when

he/she knows the architecture of the menu. If this

hypothesis proves true, the expert path would not be

more complex than with the DwellTime. When a

deeper level menu opens, the targets of the main

menu disappear. The user can therefore no longer

close a menu by opening another one. As

for the DwellTime modality, the dwell time was set

during the learning phase. In practice, dwell times

were set with a mean of 477 ± 92ms.

Figure 1: Example of a circular menu using the relocated

DwellTime modality. The targets are located outside the

circle.

Multimodal button + gaze (B): this interaction

consists in activating the fixated item with a push on

the physical button. This modality allows the user to

differentiate an intention to act from a simple analysis

of the interface. However, it needs good hand-eye

coordination which may be unnatural because

usually, “the eye precedes the action” (Liebling and

Dumais, 2014). In practice, any item is highlighted

as it is looked at. Then the user has to press the

“space” key on the keyboard provided to actually

activate the item. To decrease some eye-hand

synchronization problems encountered during

pretests, any item can still be selected up to 0.2s

(empirical choice) after the eye started looking away

from it, provided the eye is not gazing at any other

interactive item.
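
A possible reading of this tolerance window is sketched below. The class `ButtonGazeSelector` and its event methods are hypothetical names introduced for illustration, and treating the 0.2 s limit as inclusive is an assumption; the text only specifies the duration and the condition that no other interactive item is being gazed at.

```python
from typing import Optional

GRACE_MS = 200  # empirical tolerance reported in the text (0.2 s)


class ButtonGazeSelector:
    """Tracks the gazed item and resolves button presses (hypothetical helper)."""

    def __init__(self) -> None:
        self.current: Optional[str] = None     # item under the gaze right now
        self.last_item: Optional[str] = None   # last item that was looked at
        self.left_at_ms: Optional[int] = None  # when the gaze left that item

    def on_gaze(self, item: Optional[str], now_ms: int) -> None:
        """Report the item currently under the gaze (None for empty space)."""
        if item is not None:
            # Looking at any interactive item replaces the previous candidate.
            self.last_item = item
            self.left_at_ms = None
        elif self.current is not None:
            self.left_at_ms = now_ms  # the gaze has just left an item
        self.current = item

    def on_button(self, now_ms: int) -> Optional[str]:
        """Return the item activated by a button press, or None."""
        if self.current is not None:
            return self.current
        if (self.last_item is not None and self.left_at_ms is not None
                and now_ms - self.left_at_ms <= GRACE_MS):
            return self.last_item
        return None
```

A press that arrives 150 ms after the gaze left an item still activates it, while a press after 201 ms activates nothing.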

Instant activation (I): this modality consists in

activating an item as soon as the eye hovers over it.

This modality was rejected by the literature (Jacob,

1993) when the MidasTouch problem was introduced. We still

think that it may be used in cases where the cost of a

mistake is really low (e.g. to open a menu). Instant

activation is only used as a way to open a sub-menu

from the main one. This is due to the fact that the

error of opening an unwanted menu may be easily

recovered by looking at another item of the main

menu. This modality is thus combined with the

Button modality to activate the sub-menu item. A

threshold of 20ms was implemented to avoid

opening a sub-menu while the eye was going from

the main menu item to any of the sub-menu items.

No specific visual feedback is associated with this

modality except for the opening of the sub-menu.
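
The effect of the 20ms threshold can be illustrated with a short sketch. It assumes that the gaze arrives as timestamped samples and that a sub-menu opens once the gaze has stayed on the same main menu item for at least the threshold; the function `opened_menus` and the sample format are hypothetical, and menu closing is not modeled.

```python
from typing import Iterable, List, Optional, Tuple

OPEN_THRESHOLD_MS = 20  # threshold reported in the text


def opened_menus(samples: Iterable[Tuple[int, Optional[str]]]) -> List[Tuple[int, str]]:
    """Return (time_ms, item) for each sub-menu opening in a stream of gaze samples.

    Each sample is (timestamp in ms, main menu item under the gaze or None).
    """
    events: List[Tuple[int, str]] = []
    hovered: Optional[str] = None   # item currently under the gaze
    entered_at = 0                  # time at which the gaze entered it
    opened: Optional[str] = None    # item whose sub-menu is currently open
    for t_ms, item in samples:
        if item != hovered:
            hovered, entered_at = item, t_ms
        if (hovered is not None and hovered != opened
                and t_ms - entered_at >= OPEN_THRESHOLD_MS):
            opened = hovered
            events.append((t_ms, hovered))
    return events
```

An item that the gaze merely crosses for 10 ms on its way to a sub-menu item is therefore ignored, while an item hovered for 20 ms opens its sub-menu.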


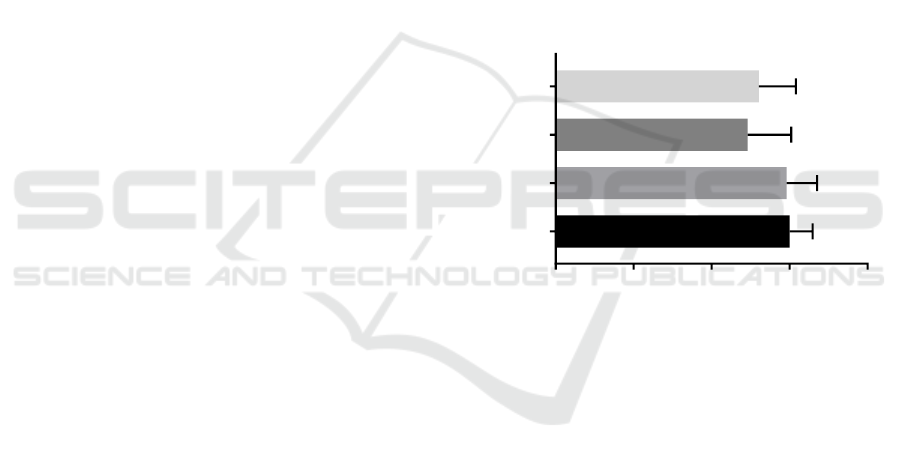

2.3 Menu Design

Two menus were proposed to the user: a linear menu

and a circular one. Menus were designed with

similar item sizes of approximately 1.5° when the

user is the farthest from the screen in order to ensure

the usability of the eye-gaze. The minimum space

between two adjacent items was approximately 0.2°.

This implies a minimal distance of 1.7° between the

centers of closest items (cf. Figure 3).

Figure 3: Size and spacing design of menu items.

Each characteristic is represented by an icon just

above the textual information. The closure of a sub-

menu can be done by selecting an item, by looking

away from the menu for more than 0.5s, or by

opening another sub-menu.

Linear menu: The linear menu is composed of a

horizontal sequence of square-shaped items with a

narrow separation between them. The sub-menus

open above the main menu and are horizontally

centered on the opened main item that triggered the

opening. Two lines play the role of visual indicators

to help the user check which main item is open. The

upward opening is arbitrary; it may recall the

presentation of open applications in a Windows

environment, for example (cf. Figure 4).

Circular menu: The main menu is composed of

four items arranged with N, S, E, W orientations

while the sub-menus have orientations following the

NE, NW, SW, SE directions. The center of sub-

menus is located in the continuity of the item

direction so that the selected main item is hidden

behind the sub-menu, just like in a marking menu.

Figure 4: Two-depth-levels linear menu opening upward

(top) and circular menu (bottom).

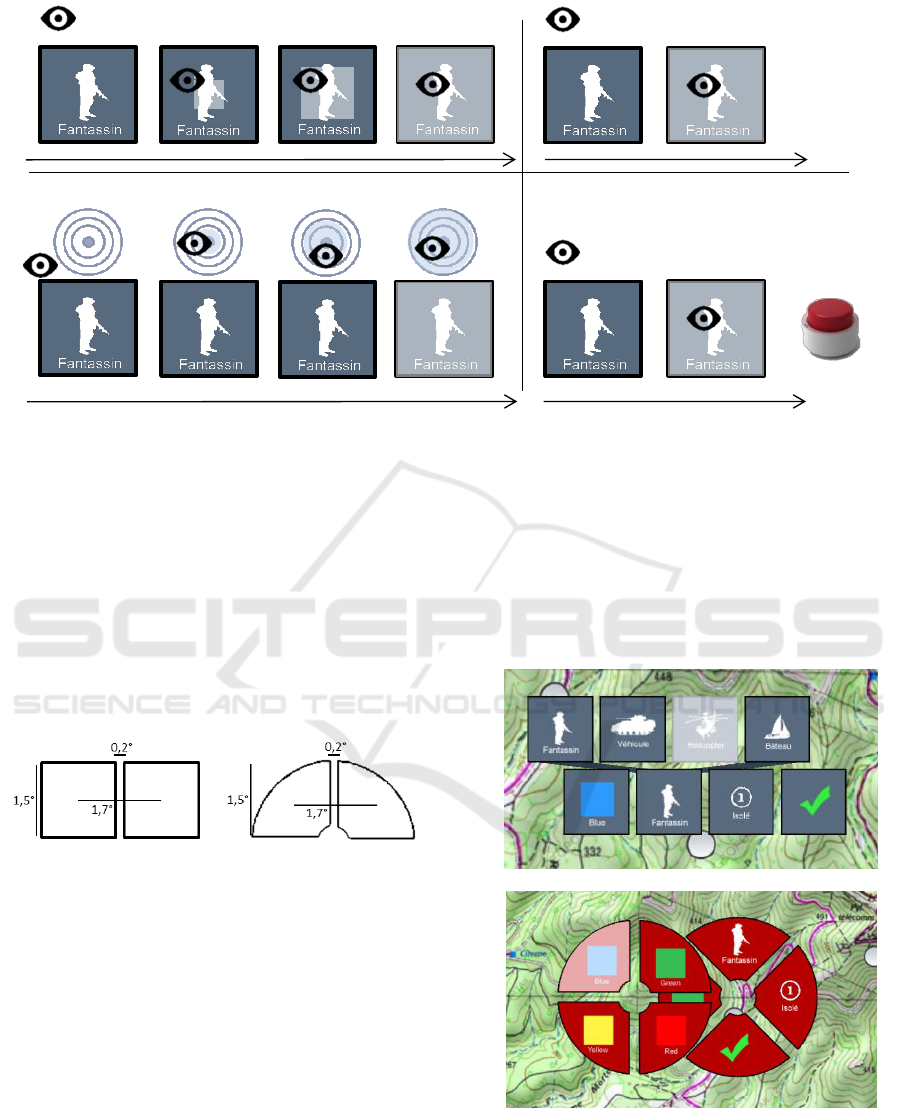

Figure 2: Interaction modalities and associated feedbacks. In reading order: DwellTime (DT), Instant activation (I), Relocated DwellTime (RD) and multimodal button + gaze (B).


2.4 Experimental Task

The experimental task consists in selecting three

characteristics (color, approximate number and type)

of objects presented on a picture (e.g. Figure 5)

before validating the selection. This experimental

task is representative of the operational task

presented above. Selecting the characteristics is done

using the menus presented in Figure 4. The opening

and the selection in a sub-menu are done using one

of the four presented modalities which vary during

the experiment. Currently active choices are shown

on the main menu to let the user

check his/her choice.

Figure 5: Example of a picture where the user has to

specify the three characteristics (color: red, number: 10 or

more, type: helicopter).

2.5 Material

As no totally isolated room could be booked,

the experiment was conducted in a calm open-space

with very little transit. The user was facing a 15-inch

laptop with a resolution of 1920x1080 at a

distance of approximately 55cm. Neither the

screen nor the user was affected by glint or light

surplus. The eye-tracking system was a Tobii Eye-X.

The “space” key of the computer keyboard was used

for the “Button” modality.
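
As a rough check of what the angular sizes of the menu items represent on this setup, a visual angle can be converted into pixels. The sketch below assumes square pixels, a screen diagonal of exactly 15 inches and a viewing distance of 55cm for every angle; the function name `visual_angle_to_pixels` is introduced here for illustration and the resulting pixel values are not reported in the study.

```python
import math


def visual_angle_to_pixels(angle_deg: float, distance_cm: float,
                           diagonal_in: float, res_x: int, res_y: int) -> float:
    """Convert a visual angle into an on-screen length in pixels."""
    # Pixel density from the diagonal resolution and the diagonal size.
    pixels_per_inch = math.hypot(res_x, res_y) / diagonal_in
    # Length subtended by the angle at the given viewing distance.
    length_cm = 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)
    return length_cm / 2.54 * pixels_per_inch


# Setup values from the text; using 55 cm for every angle is an assumption.
SETUP = dict(distance_cm=55.0, diagonal_in=15.0, res_x=1920, res_y=1080)

item_px = visual_angle_to_pixels(1.5, **SETUP)   # item size
gap_px = visual_angle_to_pixels(0.2, **SETUP)    # spacing between adjacent items
pitch_px = visual_angle_to_pixels(1.7, **SETUP)  # distance between item centers
```

Under these assumptions an item is roughly 83 pixels wide and the gap between two items roughly 11 pixels, which gives an idea of the tracking accuracy the interface relies on.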

Outside of the visual field of the participant, a

second screen was set up to allow the operator to

visualize the eye-movement of the participant. This

was done using the “OBS Studio” application

coupled with the “Streaming gaze overlay” provided

by Tobii. During verbal feedback, participants were

filmed with a small Panasonic camera if they agreed.

2.6 Experimental Design and

Procedure

First, a form was presented to the participant

to collect individual data. The subsequent form,

which aimed at getting feedback on their experience,

was presented before the experiment so that

they would not discover it only after the first test.

Then the user was asked to calibrate the eye-tracking

system and the task was presented together with the

first interaction modality.

The experiment followed a 4x2 (independent

factors) model: the first independent factor was the

interaction modality, taking its value in the set of

four interaction modalities presented above

{DwellTime, Relocated DwellTime, Instant,

Button} while the second independent factor was the

menu shape, taking its value in the set {Linear,

Circular}. Each participant completed all 8 tests.

The order was randomized this way: first the order

of modalities was selected, and then for each

modality, the first menu to pass the test on was also

randomly picked. None of the combinations was

identical to another.
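
One way to draw such an order is sketched below: the modalities are shuffled first, then the first menu is drawn for each modality, so that both tests of a modality stay adjacent. The function `draw_test_order` is a hypothetical helper, and the sketch does not enforce any constraint across participants.

```python
import random
from typing import List, Tuple

MODALITIES = ["DwellTime", "Relocated DwellTime", "Instant", "Button"]
MENUS = ["Linear", "Circular"]


def draw_test_order(rng: random.Random) -> List[Tuple[str, str]]:
    """Return the 8 (modality, menu) tests of one participant in random order."""
    order: List[Tuple[str, str]] = []
    modalities = MODALITIES[:]
    rng.shuffle(modalities)          # first, the order of modalities
    for modality in modalities:
        menus = MENUS[:]
        rng.shuffle(menus)           # then, the first menu for this modality
        order.extend((modality, menu) for menu in menus)
    return order
```

Passing a seeded `random.Random` instance makes the draw reproducible for a given participant.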

Each of the 8 tests started with a 1-minute learning

phase of the modality on the task to be performed

afterwards. At the end of this minute, if the user

was able to complete 2 successful tasks in a row, the

actual test would start. Otherwise he/she could take

another minute to get used to the modality and the

menu shape. The first learning phase was longer as

the operator also had to explain the task. Then the

test consisted in 12 consecutive repetitions of the

experimental task, no matter the results of the user. After

each pair of tests (representing one of the

modalities), the user was asked to verbalize his/her

feelings about the modality and to fill in a

form with subjective values about the intuitiveness,

the perceived speed, the effort needed and the

reliability of the modality. A mark out of 20 (standard

French scale) was given for each kind of menu

with this interaction modality. Larger values mean a

better overall feeling about the modality. This

unconventional scale was used because French people are

used to it. It may be interpreted as a rating

scale where 0 means “very bad” and 20 means “very

good”.

No specific indication on how to do the task was

given to the participant, and the order of the

characteristics to specify was up to him/her. The

only indication given was to try to be “efficient”,

with the meaning of this word left to the user’s

interpretation.


2.7 Measured Variables

As mentioned before, verbal feedback was

collected at the end of the use of each modality.

It was used to understand how the users felt

about each interaction modality and its adequacy

with each of the menus. At first users were free to

give their feedback and then they were encouraged

to give more information.

A form collected subjective data about

perceived characteristics, namely the intuitiveness,

the perceived speed, the reliability and the effort

needed to interact. The users were asked to grade

each characteristic on a 7-rank Likert scale (the

7-rank choice was made according to Symonds’

work (Symonds, 1924)) where 1 meant, for example,

not intuitive at all and 7 meant very intuitive. Then

the users marked the modalities out of 20. The data are

presented as mean ± SD.

Quantitatively, only the time spent looking at

the interface per activation was analyzed. This time

is representative of the efficiency of the modalities

and excludes any complexity implied by the picture

presented to the user.

Subjective results were analyzed using non-

parametric statistical tests. First, results were

analyzed by pairs (only the Menu dimension varied)

using Wilcoxon’s matched-pairs signed rank test to

look for an interaction effect of the type of menu on

the appreciation of the modalities. Then, only the

modality dimension was looked at using Friedman’s

test to look for a main effect of modality on results.

A post hoc analysis on the modality dimension was

carried out using Dunn’s multiple comparison tests.

Quantitative data (activation time) were analyzed

using two-way ANOVA for repeated measures on

the logarithm of the time although results are

presented in a linear scale for clarity.
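
To make the main-effect analysis more concrete, the Friedman chi-square statistic can be computed from a participants-by-modalities table of scores. The sketch below is a plain-Python illustration, not the analysis pipeline used in the study: the helper names are hypothetical, the statistic is computed without the correction for ties that statistical packages usually apply (and ties are frequent with 7-rank scores), and no p-value is derived.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 (smallest), giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_statistic(scores: Sequence[Sequence[float]]) -> float:
    """Friedman chi-square (no tie correction) for rows = participants,
    columns = conditions (here, interaction modalities)."""
    n, k = len(scores), len(scores[0])
    rank_sums = [0.0] * k
    for row in scores:
        for col, rank in enumerate(average_ranks(row)):
            rank_sums[col] += rank
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
```

When every participant orders the four modalities identically, the statistic reaches its maximum n(k - 1); when the orderings cancel out, it is 0. In practice, a library routine such as `scipy.stats.friedmanchisquare` would normally be preferred, since it also handles ties and returns a p-value.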

3 RESULTS

3.1 Intuitiveness

None of the pairwise Wilcoxon signed rank tests

shows any effect of the menu type on perceived

intuitiveness (all p > 0.05). None of the modalities

was more intuitive on one type of menu than on the

other. Setting the influence of menus aside, however,

Friedman tests show a significant effect (p < 0.0001)

of the modality on the perceived intuitiveness,

showing that not all eye-gaze based interactions are

perceived in the same way.

Button (B) and DwellTime (DT) interaction

modalities show no significant difference (p > 0.05).

The same goes for Instant (I) and Relocated DwellTime

(RD). However, these two groups (Button-DwellTime

vs Instant-Relocated DwellTime) show

significant differences from each other (all four

pairwise p values < 0.05).

Contrary to our expectations, circular menus

seem much more intuitive than linear ones, even if

linear menus are more common. Some users feel

uncomfortable watching non-semantic items (the

target) to interact with while using Relocated

DwellTime. They mentioned they preferred (or

would prefer if they did not already use DwellTime)

to interact using any of the other presented

modalities where they directly fixated the item.

The Instant modality bothered some users, mainly

because of the central position of the menu during

the task. This shows the MidasTouch effect once

again.

Figure 6: Intuitiveness mean by interaction modalities (x-axis: interaction design, B, DT, RD, I; y-axis: intuitiveness, 0 to 8).

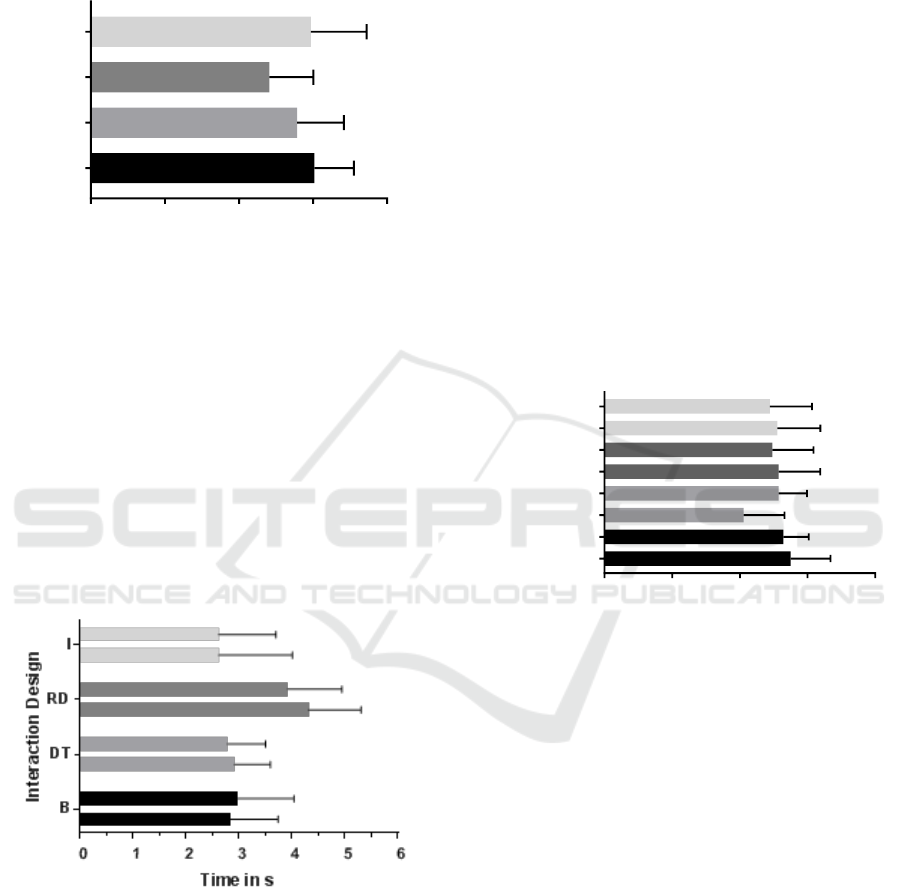

3.2 Activation Speed and Perceived

Speed

Pairwise Wilcoxon signed rank tests show no impact

of the menu type on the perceived speed by the user

(all p > 0.05). Thus no interaction modality felt

faster on a menu type than on the other. The impact

of modalities was analyzed by merging data from

both kinds of menus. Friedman test shows a

significant effect of interaction modalities on

perceived speed (p < 0.001). Post hoc analysis

shows that only the Relocated DwellTime modality

is significantly different from each of the other

modalities. It is particularly striking in comparison

with the Instant or Button modalities (p < 0.001)

while it is less obvious against DwellTime (p =

0.02). Relocated DwellTime scores 1.2 points lower on

average than Instant and Button (0.8 less than

DwellTime). Users say that having to stay focused


on fixating a point seems long; they feel they are

waiting for the interface to respond. This felt even

longer with Relocated DwellTime as there is an

additional step.

Figure 7: Perceived speed by interaction modalities (x-axis: interaction design, B, DT, RD, I; y-axis: perceived speed, 0 to 8).

With quantitative activation times, RM-ANOVA

shows an interaction effect of menu types on

modalities. Relocated DwellTime is significantly

(p < 0.05) faster on linear menus than on circular

ones, by 0.5s (around 12.5% of the activation time

for these modalities).

But this change was not perceived by users. It also

shows a significant effect of modalities on execution

times. Indeed, Relocated DwellTime is significantly

slower than the three other modalities by about 1.3s

(all 3 p-values < 0.001). This is consistent with the

perceived speed. Contrary to our expectations,

Button and Instant interactions are not faster than

DwellTime.

Figure 8: Activation speed. Time spent looking at the

interface until one characteristic is selected. The upper

bars represent results on linear menus, while the lower

represent circular menus.

3.3 Reliability

Contrary to other variables, the Wilcoxon test shows

a significant effect of the menu on the perceived

reliability of DwellTime (p < 0.01) and for

DwellTime only (other p-values > 0.05). An

explanation was quickly identified: this is due to

the fact that the sub-menu overlaps the main

menu in the circular design (cf. Figure 4). This

implies that the dwell time of the sub-menu

item hiding the main menu item starts just as the menu

opens, even before the user processes this opening.

This has no impact on other modalities as they either

need the user to press a button or to look further to

activate any item. So this result is probably more

due to a design difference than to a real impact of

menu type. Because of that, the other statistical

tests were performed considering only DwellTime

data coming from linear menus. By doing this,

Friedman and Dunn’s tests do not show any effect of

the modality on the perceived reliability. Our

hypothesis was that the Button and Relocated

DwellTime interaction modalities would make the

user feel more in control, because of the explicit

activation step. Results do not reflect this, as every

modality is perceived to be as reliable as the others,

except for the point mentioned before.

Figure 9: Perceived reliability for each interaction design (x-axis: interaction design, Circular and Linear for each of B, DT, RD, I; y-axis: perceived interaction reliability, 0 to 8).

3.4 Effort Needed during the

Interaction

As for the intuitiveness, Wilcoxon tests show no

impact of the menu type on perceived effort (all p >

0.05). The modality shows no main effect on the

perceived effort using Friedman tests. Moreover,

almost all pairwise comparisons between modalities

show no significant results except for the

comparison between Relocated DwellTime and

Button which is significant (p=0.05) with a mean

difference of 0.6 points. This was verbalized by users

as the need to do an additional step for Relocated

DwellTime. As for intuitiveness, it demands more

effort to look at a non-semantic item. For 3

participants only, the Instant modality felt difficult

because they could not cut themselves off from the

opening of menus and “had to” look through the

recently opened menu. It made it very difficult for

them to check for already selected characteristics for


example. This was more verbalized for linear menus

as the design makes it harder to identify the opened

menu without looking at it, since it almost appears in

the same place.

Figure 10: Perceived effort using the interaction modalities. A higher score means less effort (x-axis: interaction design, B, DT, RD, I; y-axis: perceived effort, 0 to 8).

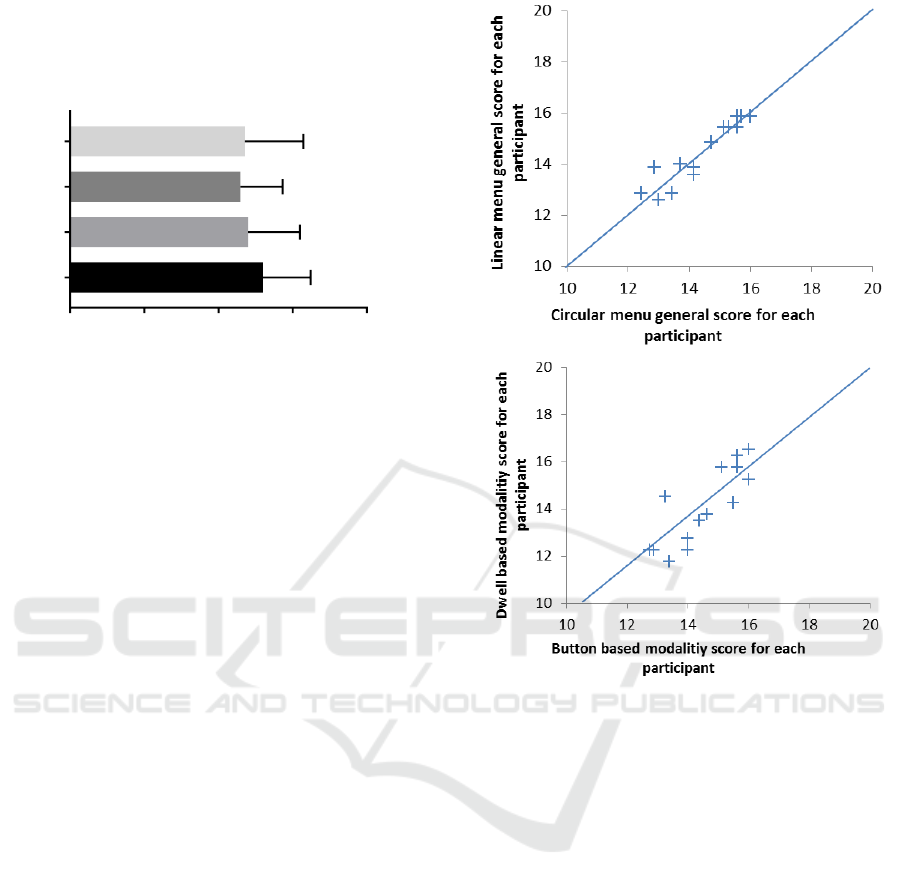

3.5 General Appreciation

Considering general appreciation (marks on a

20-point scale), the menu type had no

significant influence (all four p-values > 0.05 for

Wilcoxon tests). Thus, only the interaction

modalities were studied. Only the Button modality

showed a significant advantage over the three other

modalities (p < 0.05), scoring an average of about

1.4 points above the other modalities.

Figure 11 shows both the intra-user and inter-user

influences. First, it shows that users who

positively mark modalities on linear menus do the

same for circular ones (value points are located near

the first diagonal). It seems a bit less true for

modalities as users tend to prefer one type of

modality over the other (here Instant and Button

were grouped as button-based modalities, whereas

DwellTime and Relocated DwellTime were grouped as

dwell-based modalities). The interpretation must

consider these two groups of people.

Secondly, user marks range from about 12 to 17,

which shows inter-user variability and justifies

considering the user as a random effect in the model.

Figure 11: Inter and intra user variability. Circular vs.

linear appreciation (top). Button based vs. Dwell based

interaction (bottom).

4 CONCLUSION & DISCUSSION

Knowing the previous work on linear and circular

menus, we conceived an experiment to evaluate user

perception of these menus with four different

modalities in an operational context.

Contrary to what we expected, we found no impact

of the menu shape on preferences or performances

of any of the four tested modalities. The only

significant effect (on DwellTime) was very likely

due to menu superimposition. However, this result was

obtained with a restricted design. Indeed, button size and

inter-button distances were similarly fixed, and the

tested interaction modalities were the same on both

types of menu. In practice, circular menus might

have assets that were not exploited in our

experiment. For example, as buttons are spread from

the center, button size could be decreased or other

interaction techniques could be developed only for


circular designs. This could lead to results similar to

those presented in (Kammerer, et al., 2008). But our

aim was to evaluate modalities on already existing

interfaces (very similar actually) in order to evaluate

the integration of eye-gaze based modalities in

existing systems.

While no effect of the menu was highlighted,

interaction modalities were considered differently by

users. In general, the multimodal modality using a

button in addition to the gaze was more appreciated

and performed better than the others. While in pre-

experiments the instant opening showed great results

(menus were on the edge of the screen at that time),

it did not perform that well, both in terms of

appreciation and speed. Some of the users did not

even see the difference between Instant and Button.

This is probably good news as it does not totally

exclude the instant opening from interaction

modalities.

We designed Relocated DwellTime in order to

provide control to the user but in practice, users did

not mark Relocated DwellTime that way. The

theoretically added control was balanced by the

added complexity of the modality. The expected

extra control could probably be more visible with a

task where the user must analyze the interface more

deeply.

It is important to note that the experiment was

conducted with novices. Although the task itself was easy

enough to let us consider users as experts in the

main task, they were not experts in using the eye as

an interaction medium. In further

experiments, it will be important to include users more

experienced with eye-based interactions to compare

tendencies.

For a military task such as characterizing a point,

it seems that the Button interaction would be better

suited, giving the user more control with the

interface over the task flow at very little cost.

Choosing between a circular and a linear menu

should be done considering the impact on the task

rather than on the interaction modalities.

REFERENCES

Callahan, J., Hopkins, D., Weiser, M. & Shneiderman, B.,

1988. An empirical comparison of pie vs. linear

menus. s.l., s.n., pp. 95-100.

Hansen, J. P. et al., 2003. Command without a click: Dwell

time typing by mouse and gaze selections. s.l., s.n., pp.

121-128.

Jacob, R. J. K., 1993. Eye movement-based human-

computer interaction techniques: Toward non-

command interfaces. Advances in human-computer

interaction, Volume 4, pp. 151-190.

Kammerer, Y., Scheiter, K. & Beinhauer, W., 2008.

Looking my way through the menu: the impact of

menu design and multimodal input on gaze-based

menu selection. s.l., s.n., pp. 213-220.

Kourkolis, M., 1986. APP-6 Military symbols for land-

based systems. Military Agency for Standardization

(MAS), NATO Letter of Promulgation.

Kurtenbach, G. P., 1993. The design and evaluation of

marking menus, s.l.: s.n.

Leigh, R. J. & Zee, D. S., 2015. The neurology of eye

movements. s.l.:Oxford University Press, USA.

Liebling, D. J. & Dumais, S. T., 2014. Gaze and mouse

coordination in everyday work. s.l., s.n., pp. 1141-

1150.

Lutteroth, C., Penkar, M. & Weber, G., 2015. Gaze vs.

Mouse: a fast and accurate gaze-only click alternative.

s.l., s.n., pp. 385-394.

Møllenbach, E., Hansen, J. P. & Lillholm, M., 2013. Eye

movements in gaze interaction. Journal of Eye

Movement Research, Volume 6.

Samp, K. & Decker, S., 2010. Supporting menu design

with radial layouts. s.l., s.n., pp. 155-162.

Surakka, V., Illi, M. & Isokoski, P., 2004. Gazing and

frowning as a new human-computer interaction

technique. ACM Transactions on Applied Perception

(TAP), Volume 1, pp. 40-56.

Symonds, P. M., 1924. On the Loss of Reliability in

Ratings Due to Coarseness of the Scale. In: s.l.:s.n.,

p. 456.

Tall, M., 2008. Neovisus: Gaze driven interface

components. s.l., s.n., pp. 47-51.

Urbina, M. H., Lorenz, M. & Huckauf, A., 2010. Pies with

EYEs: the limits of hierarchical pie menus in gaze

control. s.l., s.n., pp. 93-96.

Vidal, M., Pfeuffer, K., Bulling, A. & Gellersen, H. W.,

2013. Pursuits: eye-based interaction with moving

targets. s.l., s.n., pp. 3147-3150.

Wikipédia, 2017. APP-6A --- Wikipédia, l'encyclopédie

libre. s.l.:s.n.

Zander, T. O., Gaertner, M., Kothe, C. & Vilimek, R.,

2010. Combining eye gaze input with a brain-computer

interface for touchless human-computer

interaction. Intl. Journal of Human-Computer

Interaction, Volume 27, pp. 38-51.

Zhai, S., Morimoto, C. & Ihde, S., 1999. Manual and gaze

input cascaded (MAGIC) pointing. s.l., s.n., pp. 246-

253.
