An Agent-based Approach to Decentralized Global Optimization

Adapting COHDA to Coordinate Descent

Joerg Bremer and Sebastian Lehnhoff

Department of Computing Science, University of Oldenburg, Uhlhornsweg, Oldenburg, Germany

Keywords:

Global Optimization, Distributed Optimization, Multi-agent Systems, COHDA, Coordinate Descent.

Abstract:

Heuristics like evolution strategies have been successfully applied to optimization problems with rugged, multi-modal fitness landscapes, to non-linear problems, and to derivative-free optimization. Parallelization for acceleration often involves domain-specific knowledge for data domain partition or functional or algorithmic decomposition. We present an agent-based approach for a fully decentralized global optimization algorithm without specific decomposition needs. The approach extends the ideas of coordinate descent to a gossiping-like decentralized agent approach with the advantage of escaping local optima by replacing the line search with a full 1-dimensional optimization and by asynchronously searching different parts of the search space using agents. We compare the new approach with the established covariance matrix adaptation evolution strategy and demonstrate the competitiveness of the decentralized approach even compared to a centralized algorithm with full information access. The evaluation is done using a set of well-known benchmark functions.

1 INTRODUCTION

Global optimization comprises many problems in practice as well as in the scientific community. These problems are often hallmarked by the presence of a rugged fitness landscape with many local optima, high dimensionality, and non-linearity. Thus, optimization algorithms are likely to become stuck in local optima, and guaranteeing the exact optimum is often intractable.

Several approaches have been developed to overcome this problem by settling for a randomized search and an at least good solution. Among them are evolutionary strategies, swarm-based approaches, and Markov chain Monte Carlo methods. These methods also cope well with a lack of derivatives in black-box problems.

In order to accelerate execution, parallel implementations based on a distribution model on an algorithmic level, iteration level, or solution level can be harnessed (Talbi, 2009) to parallelize meta-heuristics. The iteration level model is used to generate and evaluate different offspring solutions in parallel, but does not ease the actual problem. The solution level parallel model always needs a problem-specific decomposition of the data domain or a functional decomposition based on expert knowledge.

We present an approach to global, non-linear optimization based on an agent-based heuristic using a cooperative algorithmic level parallel model (Talbi, 2009) for an easy and automated distribution of the objective. This is achieved by adapting an agent-based gossiping heuristic (Boyd et al., 2005; Hinrichs et al., 2014), which acts according to the perceive-decide-act paradigm, towards a coordinate descent method (Wright, 2015). Each agent is responsible for solving a 1-dimensional sub-problem and thus for searching one dimension of the high-dimensional problem. By integrating this local optimization into the decision process of the decentralized agent approach, global optimization is achieved by asynchronously generating local solutions with fixed parameters for the other dimensions, as in coordinate descent approaches, but with the ability to escape local optima and to search different parts of the search space concurrently. Although the agent protocol used has originally been developed for distributed constraint optimization, we focus in the following merely on unconstrained optimization problems.

The rest of the paper is organized as follows. We start with an overview of related distributed approaches and give a brief recap of the decentralized baseline algorithm COHDA. We then describe the adaptation to global optimization and evaluate the approach with several experiments involving standard test objectives for global optimization.

Bremer J. and Lehnhoff S.

An Agent-based Approach to Decentralized Global Optimization - Adapting COHDA to Coordinate Descent.

DOI: 10.5220/0006116101290136

In Proceedings of the 9th International Conference on Agents and Artificial Intelligence (ICAART 2017), pages 129-136

ISBN: 978-989-758-219-6

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

Global optimization of non-convex, non-linear problems has long been subject to research (Bäck et al., 1997; Horst and Pardalos, 1995). Approaches can roughly be classified into deterministic and probabilistic methods. Deterministic approaches like interval methods (Hansen, 1980) or Lipschitzian methods (Hansen et al., 1992) often suffer from intractability of the problem or from getting stuck in local optima (Simon, 2013). In case of a rugged fitness landscape of multimodal, non-linear functions, probabilistic heuristics are indispensable. Often, derivative-free methods for black-box problems are needed, too.

Several evolutionary algorithms have been introduced to solve nonlinear, hard optimization problems with complex-shaped fitness landscapes. Each of these methods has its own characteristics, strengths, and weaknesses. A common characteristic is the generation of an offspring solution set by exploring the characteristics of the objective function in the neighborhood of an existing set of solutions. When the solution space is hard to explore or objective evaluations are costly, computational effort is a common drawback for all population-based schemes. Much effort has already been spent to accelerate convergence of these methods. Example techniques are improved population initialization (Rahnamayan et al., 2007), adaptive population sizes (Ahrari and Shariat-Panahi, 2015), or exploiting sub-populations (Rigling and Moore, 1999).

Sometimes a surrogate model is used in case of computationally expensive objective functions (Loshchilov et al., 2012) to substitute a share of objective function evaluations with cheap surrogate model evaluations. The surrogate model represents a learned model of the original objective function.

For faster execution, different approaches for parallel problem solving have been scrutinized in the past, partly with a need for problem-specific adaptation for distribution. Four main questions define the design decisions for distributing a heuristic: which information to exchange, when to communicate, who communicates, and how to integrate received information (Nieße, 2015; Talbi, 2009). Examples of traditional meta-heuristics that are available as distributed versions are particle swarm (Vanneschi et al., 2011), ant colony (Colorni et al., 1991), and parallel tempering (Li et al., 2009). Distribution for gaining higher solution accuracy is a rather rare use case. An example is given in (Bremer and Lehnhoff, 2016b).

Another class of algorithms for global optimization that has been popular for years among practitioners rather than scientists (Wright, 2015) are coordinate descent algorithms (Ortega and Rheinboldt, 1970). Coordinate descent algorithms iteratively search the optimum in high-dimensional problems by fixing most of the parameters (components of the variable vector $\mathbf{x}$) and doing a line search along a single free coordinate axis. Often, all components of $\mathbf{x}$ are cyclically chosen for approximating the objective with respect to the (fixed) other components (Wright, 2015). In each iteration, a lower-dimensional or even scalar sub-problem is solved. The multivariable objective $f(\mathbf{x})$ is solved by looking for the minimum in one direction at a time. There are several approaches for choosing the step size for the step towards the local minimum, but as long as the sequence $f(\mathbf{x}_0), f(\mathbf{x}_1), \dots, f(\mathbf{x}_n)$ is monotonically decreasing, the method converges to an at least local optimum. Like any other gradient-based method, this approach easily gets stuck in case of a non-convex objective function.
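The cyclic scheme described above can be sketched in a few lines of Python. This is a minimal illustration and not code from the cited works: the helper names `golden_section` and `coordinate_descent`, the golden-section line search, the number of sweeps, and the quadratic test objective are all assumptions made for the example.

```python
import math

INV_PHI = (math.sqrt(5.0) - 1.0) / 2.0  # inverse golden ratio, about 0.618


def golden_section(g, lo, hi, tol=1e-10):
    """Minimize a unimodal scalar function g on [lo, hi] (illustrative line search)."""
    a, b = lo, hi
    while b - a > tol:
        c = b - INV_PHI * (b - a)
        d = a + INV_PHI * (b - a)
        if g(c) < g(d):
            b = d  # the minimum lies in [a, d]
        else:
            a = c  # the minimum lies in [c, b]
    return 0.5 * (a + b)


def coordinate_descent(f, x0, lo, hi, sweeps=50):
    """Cyclic coordinate descent: optimize one coordinate at a time, others fixed."""
    x = list(x0)
    for _ in range(sweeps):
        for j in range(len(x)):
            def g(v, j=j):
                # Objective along coordinate j with all other coordinates fixed.
                return f(x[:j] + [v] + x[j + 1:])
            candidate = golden_section(g, lo, hi)
            # Accept only non-worsening steps, so f(x) never increases.
            if g(candidate) <= f(x):
                x[j] = candidate
    return x


def quadratic(x):
    """Hypothetical convex test objective with its minimum at (1.6, -2.4)."""
    return (x[0] - 1.0) ** 2 + (x[1] + 2.0) ** 2 + 0.5 * x[0] * x[1]


x_min = coordinate_descent(quadratic, [5.0, 5.0], -10.0, 10.0)
```

On this convex quadratic the sweeps approach the unique minimizer. On a multi-modal objective the same loop may stall in a local minimum, which is the limitation discussed in the text.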

We are going to extend the ideas of coordinate descent towards non-linear optimization by combining it with ideas for the escape of local optima and integrating it into the agent-based approach of the combinatorial optimization heuristics for distributed agents (Hinrichs et al., 2014; Hinrichs et al., 2013b). This agent-based heuristic follows the perceive-decide-act paradigm (Picard and Glize, 2006). It collects and aggregates information on intermediate results of other agents from received information of possibly different age. Because it acts on information of different age, the algorithm asynchronously searches different parts of the search space at the same time (Hinrichs et al., 2014), following the cooperative algorithmic-level parallel model (Talbi, 2009). Moreover, instead of using a line search or gradient step method as in conventional coordinate descent methods, we use a Brent solver (Brent, 1971) for the agent’s decision method when deciding on the 1-dimensional sub-problem. In this way, the whole coordinate axis (at least within the allowed data domain) is used instead of just doing a 1-dimensional step downwards. Hence, our approach may also jump inside the search space and thus escape local optima more easily.

3 ALGORITHM

We start the description of our approach with the underlying baseline algorithm and the negotiation process between the agents. For global optimization, we replace the problem-specific part, which had been developed to solve the combinatorial problem of predictive scheduling in smart grids, with agents that are each responsible for optimizing a 1-dimensional sub-problem.

3.1 COHDA

The combinatorial optimization heuristics for distributed agents (COHDA) was originally introduced in (Hinrichs et al., 2013b; Hinrichs et al., 2014). Since then it has been applied to a variety of smart grid applications (Hinrichs et al., 2013a; Nieße et al., 2014; Nieße and Sonnenschein, 2013; Bremer and Lehnhoff, 2016a). Although this method has been developed for a specific use case in the smart grid field, it is in general applicable to arbitrary distributed (combinatorial) problems.

With our explanations we follow (Hinrichs et al., 2014). Originally, COHDA has been designed as a fully distributed solution to the predictive scheduling problem (formulated as distributed constraint optimization) in smart grid management (Hinrichs et al., 2013b). In this scenario, each agent in the multi-agent system is in charge of controlling exactly one distributed energy resource (generator or controllable consumer) with procuration for negotiating the generated or consumed energy. All energy resources are pooled into a virtual power plant, and the controlling agents form a coalition for decentralized control. It is the goal of predictive scheduling to find exactly one schedule for each energy unit such that the difference between the sum of all schedules and a desired given target schedule is minimized.

An agent in COHDA does not represent a complete solution, as is the case, for instance, in population-based approaches (Poli et al., 2007; Karaboga and Basturk, 2007). Each agent represents a class within a multiple-choice knapsack combinatorial problem (Lust and Teghem, 2010). Applied to predictive scheduling, each class refers to the feasible region in the solution space of the respective energy unit. Each agent chooses schedules as solution candidates only from the set of feasible schedules that belongs to the distributed energy resource (DER) controlled by this agent. Each agent is connected with a rather small subset of other agents from the multi-agent system and may only communicate with agents from this limited neighborhood. The neighborhood (communication network) is defined by a small-world graph (Watts and Strogatz, 1998). As long as this graph is at least simply connected, each agent collects information from the direct neighborhood, and as each received message also contains (not necessarily up-to-date) information from the transitive neighborhood, each agent may accumulate information about the choices of other agents and thus gains its own local belief of the aggregated schedule that the other agents are going to operate. With this belief, each agent may choose a schedule for its own controlled energy unit in a way that benefits the coalition most, while at the same time its own constraints are obeyed and its own interests are pursued. In a way, the choice of an optimal schedule for a single energy unit can be seen as solving a lower-dimensional sub-problem of the high-dimensional scheduling problem.

All choices of schedules are rooted in incomplete knowledge and in beliefs, gathered from received messages, about what other agents are probably going to do. The taken choice (result of the decision phase) together with the decision base (the agent’s workspace) is communicated to all neighbors, and in this way knowledge is successively spread throughout the coalition without any central memory. This process is repeated. Because all spread information about schedule choices is labeled with an age, each agent may easily decide whether its own knowledge repository has to be updated. Any update results in recalculating the agent’s own best schedule contribution and spreading it to the direct neighbors. Step by step, all agents accumulate complete information, and as soon as no agent is capable of offering a schedule that results in a better solution, the algorithm converges and terminates.

More formally, each time an agent receives a message, three successive steps are conducted. First, during the perceive phase, an agent $a_j$ updates its own working memory $K_j$ with the received working memory $K_i$ from agent $a_i$. From the foreign working memory, the objective of the optimization is imported (if not already known) as well as the configuration that constitutes the calculation base of the neighboring agent $a_i$. An update is conducted if the received configuration is larger or has achieved a better objective value. The currently best solutions for sub-problems from other agents are merged into the own working memory and temporarily taken as fixed for the following decision phase, which solves the sub-problem for one unit.

In this way, sub-solutions that reflect the so far best choices of other agents and that are not already known in the own working memory are imported from the received memory. During the decision phase, agent $a_j$ has to decide on the best choice for its own schedule based on the updated belief about the system state $\gamma_k$. Index $k$ indicates the age of the system state information. The agent knows which schedules a subset (or all) of the other agents are going to operate. Based on a set of feasible schedules sampled from an appropriate simulation model for flexibility prediction, the schedule that best advances the overall solution (with regard to the fixed other sub-solutions) can, e.g., be found by line searching the sample.

If the objective value for the configuration with

this new candidate is better, this new solution candi-

An Agent-based Approach to Decentralized Global Optimization - Adapting COHDA to Coordinate Descent

131

perceive:

update knowledge

act:

send workspace

𝑎

2

𝑎

3

𝑎

1

𝑎

5

𝑎

4

Κ

𝑖

Κ

𝑗

integrate

Κ

𝑗

subset of 1-

dimensinal

solutions

decide:

optimize sub-problem

𝑎

𝑗

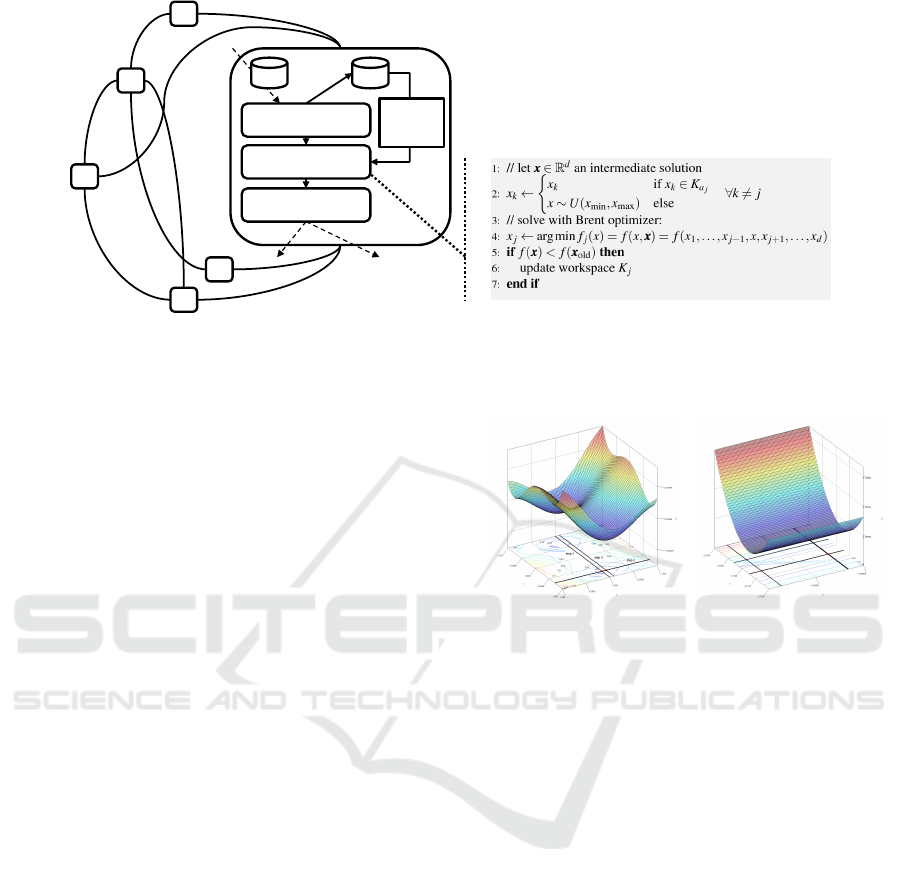

Figure 1: Internal receive-decide-act architecture of an agent with decision process. The agent receives a set of optimum

coordinates from another agent, decides on the best coordinate for the dimensions the agent accounts for and sends the

updated information to all neighbors.

date is kept as selected one. Finally, if a new solution

candidate has been found, the working memory with

this new configuration is sent to all agents in the lo-

cal neighborhood. The procedure terminates, as soon

as all agents reach the same system state and no new

messages are generated. In this case no agent is able

to find a better solution. Finally, all agents know the

same final result.
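The perceive phase described above can be illustrated with a small sketch. The data layout is an assumption made for this example only: a working memory is modelled as a dictionary that maps an agent id to a pair of a choice and a counter, where the counter grows with every new decision and stands in for the age label. The comparison of objective values mentioned in the text is deliberately left out, and the function name `perceive` is hypothetical.

```python
def perceive(own, received):
    """Merge a received working memory into the agent's own one.

    Both arguments map an agent id to a (choice, counter) pair. Returns True
    if the own memory changed, i.e. if a new decision phase is warranted.
    """
    changed = False
    for agent_id, (choice, counter) in received.items():
        known = own.get(agent_id)
        # Import entries that are unknown so far or newer than the known ones.
        if known is None or known[1] < counter:
            own[agent_id] = (choice, counter)
            changed = True
    return changed


memory_j = {"a1": ("schedule-3", 1)}
message_from_i = {"a1": ("schedule-7", 2), "a2": ("schedule-1", 1)}
first = perceive(memory_j, message_from_i)   # imports both entries
second = perceive(memory_j, message_from_i)  # nothing new the second time
```

Because a repeated message changes nothing, an agent that only reacts to changes falls silent once all memories agree, which mirrors the termination condition stated above.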

3.2 COHDAgo

In global optimization, we want to find the global minimum of a real-valued objective function. Usually, decomposition for distributed, parallel solvers is achieved by either domain decomposition (parallel processing of different pieces of data) or functional decomposition (solving smaller problems and aggregating the results). A partition on the algorithmic level (independent or cooperative self-contained entities) is sometimes applied as well (Talbi, 2009).

We used an agent-based approach for the latter decomposition scheme and implemented a fully decentralized algorithm. Each agent is responsible for one dimension of the objective function. The intermediate solutions for other dimensions (represented by decisions published by other agents) are temporarily fixed. Thus, each agent only searches along a 1-dimensional cross-section of the objective and solves a simplified sub-problem. Nevertheless, for evaluation of the solution, the full objective function is used. In this way, we achieve an asynchronous coordinate descent with the ability to escape local minima by searching different regions of the search space in parallel. We denote our extension of the COHDA protocol for global optimization as COHDAgo. Figure 2 shows the general scheme of the agent approach for global optimization.

Figure 2: General optimization scheme using the example of the Six Hump Camel Back function, shown in panels (a) and (b). On the floor plan, the successive cross-sections are depicted. In step 1, the agent responsible for the y-axis optimizes with a fixed x-value. The found optimum for y (evaluated at point (x, y)) is submitted to the agent for the x-axis, who optimizes along the line from step 2. This process is mutually repeated. Figure 2(b) shows an enlarged crop of the later steps approaching the global optimum (no longer distinguishable in Fig. 2(a) after merely 3 steps).

Each agent is responsible for solving one dimension. Each time an agent receives a message from one of its neighbors, its own knowledge base with assumptions about the optimal coordinates $\mathbf{x}^*$ of the optimum of $f$ (with $\mathbf{x}^* = \arg\min f(\mathbf{x})$) is updated. Let $a_j$ be the agent that has just received a message from agent $a_i$. Then, the workspace $K_j$ of agent $a_j$ is merged with information from the received workspace $K_i$. Each workspace $K$ of an agent contains a set of coordinates $x_k$ such that $x_k$ reflects the $k$th coordinate of the current solution $\mathbf{x}$ found so far by agent $a_k$.

In general, each coordinate $x_\ell$ that is not yet in $K_j$ is temporarily set to a random value $x_\ell \sim U(x_{\min}, x_{\max})$ for objective evaluation. Alternatively, all unknown values could be set to zero. But, as many of the standard benchmark objective functions have their optimum at zero, this would result in an unfair comparison, as such behavior would unintentionally induce some a priori knowledge. Thus, we have chosen to initialize unknown values randomly.

After the update procedure, agent $a_j$ takes all elements $x_k \in \mathbf{x}$ with $k \neq j$ as temporarily fixed and starts solving a 1-dimensional sub-problem: $x_j = \arg\min_x f(x, \mathbf{x})$, where $f$ is the objective function with all values except element $x_j$ fixed. This problem with only $x$ as the single degree of freedom is solved using Brent’s method (Brent, 1971). Figure 2 illustrates the successive approach to the optimum.

Brent’s method originally is a root-finding procedure that combines the previously known bisection method and the secant method with an inverse quadratic interpolation. Whereas the latter are known for fast convergence, bisection provides more reliability. By combining these methods (a first step was already undertaken by (Dekker, 1969)), convergence can be guaranteed with at most $O(n^2)$ iterations (with $n$ iterations for the bisection method). In case of a well-behaved function, the method even converges superlinearly (Brent, 1971). After $x_j$ has been determined by Brent’s method, $x_j$ is communicated (along with all $x_\ell$ previously received from agent $a_i$) to all neighbors if $f(\mathbf{x}^*)$ with $x_j$ gains a better result than the previous solution candidate.
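The interplay of perceive, decide, and act in this section can be sketched as a small sequential simulation. It is a simplified illustration under several assumptions that are not taken from the paper: agents sit on a ring instead of a small-world graph, a message queue replaces true asynchrony, every message carries a full vector together with its objective value, the random guess for unknown coordinates is drawn once in the kick-off message, and a grid scan with golden-section refinement stands in for the Brent solver. All names (`search_1d`, `cohdago_sketch`, `rastrigin`) are hypothetical.

```python
import math
import random
from collections import deque

INV_PHI = (math.sqrt(5.0) - 1.0) / 2.0


def search_1d(g, lo, hi, samples=200):
    """Scan the whole interval on a grid, then refine around the best grid point.

    Stand-in for the Brent solver named in the text, not Brent's method itself.
    """
    step = (hi - lo) / samples
    best = min((lo + i * step for i in range(samples + 1)), key=g)
    a, b = max(lo, best - step), min(hi, best + step)
    while b - a > 1e-9:
        c, d = b - INV_PHI * (b - a), a + INV_PHI * (b - a)
        if g(c) < g(d):
            b = d
        else:
            a = c
    return 0.5 * (a + b)


def cohdago_sketch(f, dim, lo, hi, seed=0, max_messages=10000):
    """Simulate one agent per dimension on a ring; returns (best vector, value)."""
    rng = random.Random(seed)
    best_x = [None] * dim       # each agent's current solution vector
    best_f = [math.inf] * dim   # objective value of that vector
    start = [rng.uniform(lo, hi) for _ in range(dim)]  # random unknown coordinates
    queue = deque([(0, start, f(start))])              # kick-off message to agent 0
    processed = 0
    while queue and processed < max_messages:
        j, x_recv, f_recv = queue.popleft()
        processed += 1
        # perceive: ignore messages that carry nothing better
        if f_recv >= best_f[j]:
            continue
        base = list(x_recv)

        # decide: optimize coordinate j with all other coordinates fixed
        def g(v):
            return f(base[:j] + [v] + base[j + 1:])

        v = search_1d(g, lo, hi)
        f_new = g(v)
        if f_new < f_recv - 1e-12:
            base[j], f_recv = v, f_new
        best_x[j], best_f[j] = base, f_recv
        # act: forward the updated workspace to the ring neighbors
        for n in {(j - 1) % dim, (j + 1) % dim} - {j}:
            queue.append((n, list(base), f_recv))
    k = min(range(dim), key=lambda i: best_f[i])
    return best_x[k], best_f[k]


def rastrigin(x):
    """Common textbook form of the Rastrigin function (global minimum 0 at the origin)."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


x_best, f_best = cohdago_sketch(rastrigin, dim=4, lo=-5.12, hi=5.12, seed=1)
```

Since each agent searches its whole coordinate axis instead of taking a local downhill step, the sketch can leave the basin of the random start vector. The Rastrigin function is separable, so this small example is a favorable case and says nothing about harder objectives.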

4 RESULTS

We evaluated our agent approach with a set of well-known test functions developed for benchmarking optimization heuristics. We used the following functions: Six Hump Camel Back, Elliptic, Different Powers, Levy, Ackley, Katsuura, Alpine, Egg Holder, Rastrigin, Weierstrass, Griewank, Zakharov, Salomon, Quadric, Quartic, and Rosenbrock (Ulmer et al., 2003; Ahrari and Shariat-Panahi, 2015; Yao et al., 1999; Li and Wang, 2014; Salomon, 1996; Mishra, 2006; Suganthan et al., 2005; Liang et al., 2013). These functions represent a mix of multi-modal, multi-dimensional functions, partly with a huge number of local minima, with steep as well as shallow surroundings of the global optimum, and with broad variations in characteristics and domain sizes. The Quartic function also introduces noise (Yao et al., 1999).
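For orientation, three of the named benchmark functions can be written down as follows. These are the common textbook definitions; the exact variants, scalings, and domains used in the experiments are not given in the text, so the formulas below are an assumption in that respect.

```python
import math


def ackley(x):
    """Ackley function, global minimum 0 at the origin."""
    n = len(x)
    mean_sq = sum(v * v for v in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return (-20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20.0 + math.e)


def rosenbrock(x):
    """Rosenbrock function, global minimum 0 at (1, ..., 1)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))


def zakharov(x):
    """Zakharov function, global minimum 0 at the origin."""
    s1 = sum(v * v for v in x)
    s2 = sum(0.5 * (i + 1) * v for i, v in enumerate(x))
    return s1 + s2 ** 2 + s2 ** 4
```

Rosenbrock and Zakharov are not separable: their cross terms couple the coordinates, so a single pass over all dimensions is generally not enough for a coordinate-wise method.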

We compared our approach with the well-known covariance matrix adaptation evolution strategy (CMA-ES) by (Hansen and Ostermeier, 2001). CMA-ES aims at learning from previous successful evolution steps for future search directions. A new population is sampled from a multivariate normal distribution $\mathcal{N}(0, \mathbf{C})$ with covariance matrix $\mathbf{C}$, which is adapted to maximize the occurrence of improving steps according to previously seen distributions for good steps. Sampling is weighted by a selection of parent solutions. In a way, the method learns a second-order model of the objective function and exploits it for structure information and for reducing calls of objective evaluations. An a priori parametrization with structure knowledge of the problem by the user is not necessary, as the method is capable of adapting unsupervised. A good introduction can, for example, be found in (Hansen, 2011). Especially for non-linear, non-convex black-box problems, the approach has shown excellent performance (Hansen, 2011).

[Figure 3 plots: (a) log error over time ticks (axis scaled by $10^{4}$); (b) log error over objective evaluations (axis scaled by $10^{7}$); curves for d = 5, d = 10, and d = 20.]

Figure 3: Convergence of an example objective: the Rosenbrock function with different dimensions. The left figure shows the convergence in relation to the number of time ticks for the agent simulation (for this experiment, agents have been executed at discrete time ticks instead of an otherwise asynchronous execution in order to measure the relation); the right one relates the convergence to the number of objective evaluations.

For our experiments, we used default settings for the CMA-ES following (Hansen, 2011). These settings are specific to the dimension $d$ of the objective function. An in-depth discussion of these parameters is also given in (Hansen and Ostermeier, 2001). The COHDA approach is parameter-less, and hence there are no parameters to tune. In general, there are some degrees of freedom for choosing the structure of the communication network between the agents. Here, we have chosen to use the recommended small-world topology (Watts and Strogatz, 1998). For the Brent solver inside the decision routine of the agents, a bracketing parameter has to be chosen. As we want to allow searching the whole data domain in each step, we always set the bounds to the specific data domain interval of each objective function.
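A small-world communication network in the spirit of Watts and Strogatz can be generated as sketched below. The construction (a ring lattice whose edges are rewired with probability `p`) follows the usual description of that model; the function names, the parameter values, and the separate connectivity check are assumptions for illustration, and the text does not state which graph parameters were used in the experiments.

```python
import random


def small_world(n, k, p, seed=0):
    """Ring lattice with k neighbors per node (k even), rewired with probability p."""
    rng = random.Random(seed)
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for offset in range(1, k // 2 + 1):
            j = (i + offset) % n
            adj[i].add(j)
            adj[j].add(i)
    for offset in range(1, k // 2 + 1):
        for i in range(n):
            j = (i + offset) % n
            if j in adj[i] and rng.random() < p:
                # Candidate endpoints: no self-loops, no duplicate edges.
                candidates = [c for c in range(n) if c != i and c not in adj[i]]
                if candidates:
                    new = rng.choice(candidates)
                    adj[i].discard(j)
                    adj[j].discard(i)
                    adj[i].add(new)
                    adj[new].add(i)
    return adj


def is_connected(adj):
    """Depth-first search from node 0; True if every node is reachable."""
    seen, stack = {0}, [0]
    while stack:
        for nb in adj[stack.pop()]:
            if nb not in seen:
                seen.add(nb)
                stack.append(nb)
    return len(seen) == len(adj)


lattice = small_world(10, 4, 0.0)           # p = 0 keeps the regular ring lattice
network = small_world(20, 4, 0.3, seed=3)   # hypothetical parameter choice
```

Rewiring does not guarantee a connected graph, so a check such as `is_connected` is a reasonable precaution before agents start to exchange messages.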

First, we evaluated the convergence of our agent approach. Figure 3 shows the results. The COHDA algorithm guarantees by its protocol a monotonically decreasing sequence $f(\mathbf{x}_0) > f(\mathbf{x}_1) > \dots > f(\mathbf{x}_n)$ and thus ensures convergence to an at least local optimum (Tseng, 2001; Luo and Tseng, 1992).

In all cases, the algorithm converges rather fast

as soon as a sufficient number of agents takes part

with a reasonable assumption about optimum loci in

An Agent-based Approach to Decentralized Global Optimization - Adapting COHDA to Coordinate Descent

133

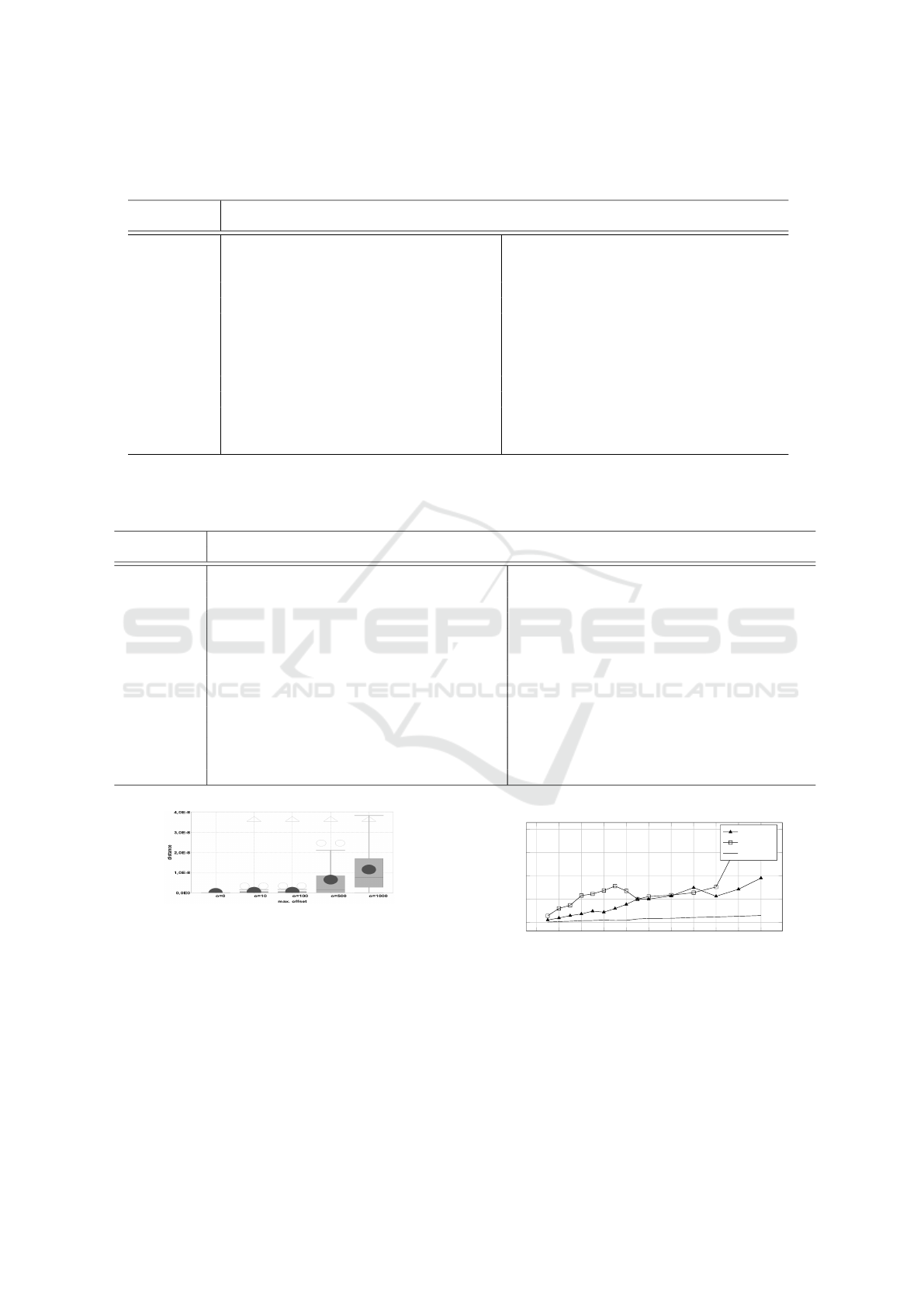

Table 1: Comparison of CMA-ES and COHDAgo for different 50-dimensional objective functions. We compare the number

of objective evaluations and the residual error denoted as the difference between the objective value of the found optimum

and the known global optimum. An error of zero denotes an error below the accuracy of the Java programming language.

function CMA-ES COHDAgo

evaluations error evaluations error

Elliptic 7772.7 ± 4644.7 1.163 × 10

7

± 1.881 × 10

7

6379.5 ± 479.1 0.00 × 10

0

± 0.00 × 10

0

DifferentPowers 29557.0 ± 924.4 2.815 × 10

−12

± 5.432 × 10

−12

6875.6 ± 431.6 0.00 × 10

0

± 0.00 × 10

0

Levy 8178.3 ± 1414.1 3.941 × 10

1

± 1.502 × 10

1

10758.8 ± 1694.0 3.554 × 10

1

± 5.883 × 10

−1

Ackley 3828.5 ± 195.1 2.00 × 10

1

± 6.602 × 10

−13

6991.6 ± 501.7 0.00 × 10

0

± 0.00 × 10

0

Katsuura 25857.6 ± 13699.7 1.407 × 10

2

± 3.083 × 10

2

4087.5 ± 469.5 0.00 × 10

0

± 0.00 × 10

0

Alpine 21497.8 ± 7257.1 2.221 × 10

−3

± 5.639 × 10

−3

5955.6 ± 415.2 0.00 × 10

0

± 0.00 × 10

0

EggHolder 5822.9 ± 793.4 1.194 × 10

4

± 1.054 × 10

3

86329.8 ± 52296.7 9.394 × 10

3

± 6.169 × 10

2

Rastrigin 4292.2 ± 235.6 1.639 × 10

2

± 3.563 × 10

1

6991.0 ± 591.4 0.00 × 10

0

± 0.00 × 10

0

Weierstrass 8135.1 ± 488.6 1.994 × 10

0

± 1.367 × 10

0

1633.6 ± 118.5 0.00 × 10

0

± 0.00 × 10

0

Griewank 6255.4 ± 213.8 2.465 × 10

−3

± 4.148 × 10

−3

15193.0 ± 2903.9 5.601 × 10

−2

± 7.406 × 10

−2

Zakharov 7220.2 ± 300.9 2.261 × 10

−15

± 1.219 × 10

−15

422513.5 ± 20052.7 2.212 × 10

−28

± 1.82 × 10

−28

Salomon 7288.8 ± 1538.3 1.539 × 10

2

± 1.936 × 10

1

29531.5 ± 6191.7 8.039 × 10

−1

± 2.557 × 10

−1

Quadric 7486.1 ± 213.0 2.344 × 10

−15

± 1.336 × 10

−15

711144.1 ± 74108.7 3.839 × 10

−27

± 2.806 × 10

−27

Quartic 2870.0 ± 113.6 2.437 × 10

−17

± 3.464 × 10

−17

5385.2 ± 737.6 0.00 × 10

0

± 0.00 × 10

0

Table 2: Comparison of CMA -ES and COHDAgo for different 200-dimensional objective functions. We compare the number

of objective evaluations and the residual error denoted as the difference between the objective value of the found optimum

and the known global optimum.

function CMA-ES COHDAgo

evaluations error evaluations error

Elliptic 173835.8 ± 1379.5 2.945 × 10

−15

± 7.088 × 10

−16

178917.1 ± 31764.2 0.00 × 10

0

± 0.00 × 10

0

DifferentPowers 2008373.2 ± 58889.4 2.429 × 10

−10

± 7.94 × 10

−11

725581.9 ± 19524.4 0.00 × 10

0

± 0.00 × 10

0

Levy 54696.3 ± 3746.0 2.11 × 10

2

± 1.456 × 10

1

977256.7 ± 66146.3 2.154 × 10

2

± 5.674 × 10

−2

Ackley 28197.0 ± 1977.4 2.00 × 10

1

± 1.397 × 10

−11

704084.8 ± 66484.7 0.00 × 10

0

± 0.00 × 10

0

Katsuura 240121.1 ± 130948.6 6.611 × 10

2

± 1.926 × 10

2

388407.6 ± 43832.3 0.00 × 10

0

± 0.00 × 10

0

Alpine 5000001.0 ± .0 6.406 × 10

−2

± 1.514 × 10

−1

599155.8 ± 62472.5 0.00 × 10

0

± 0.00 × 10

0

EggHolder 107651.2 ± 20221.5 1.186 × 10

5

± 1.651 × 10

3

13261832.7 ± 4022307.4 9.458 × 10

4

± 1.245 × 10

3

Rastrigin

30518.8 ± 1599.3 1.456× 10

3

± 1.758 × 10

2

691825.7 ± 78236.8 0.00 × 10

0

± 0.00 × 10

0

Weierstrass 74532.3 ± 2477.9 5.901 × 10

1

± 1.056 × 10

1

165435.1 ± 7768.5 0.00 × 10

0

± 0.00 × 10

0

Griewank 63831.5 ± 1811.9 2.218 × 10

−13

± 3.13 × 10

−13

933569.6 ± 148528.6 0.00 × 10

0

± 0.00 × 10

0

Zakharov 175889.7 ± 3540.9 2.422 × 10

−15

± 4.317 × 10

−16

49256596.0 ± 23886590.2 5.105 × 10

10

± 1.614 × 10

11

Salomon 231348.8 ± 32512.4 4.829 × 10

2

± 1.92 × 10

1

11727996.8 ± 1010802.6 5.15 × 10

0

± 1.353 × 10

0

Quadric 203135.7 ± 2184.8 6.097 × 10

−14

± 1.23 × 10

−14

53963223.4 ± 63121931.5 8.474 × 10

4

± 8.369 × 10

4

Quartic 27714.4 ± 327.3 3.143 × 10

−17

± 1.223 × 10

−17

487672.8 ± 100830.5 0.00 × 10

0

± 0.00 × 10

0

Figure 4: Comparison of the effect of a position shift using

the 50-dimensional Griewank function. The position of the

optimum is shifted each run by adding a random vector. De-

picted are the mean Euclidean distances of the found posi-

tion and the real (shifted) position of the optimum for diffe-

rent strength of translation and for 200 runs each.

other dimensions instead of using random numbers

for so far missing information. This fact also leaves

room for later improvements distributing better gues-

ses prior to the actual optimization procedure.

Table 1 lists some results from a comparison of COHDAgo and CMA-ES performing on different 50-dimensional objective functions. In this experiment, we solved each problem 200 times. The CMA-ES needs a smaller budget of objective evaluations in many cases, but the agent approach produces the more accurate results. Table 2 lists the results for a set of 200-dimensional objective functions. Notwithstanding the larger number of objective evaluations, the COHDAgo agent approach executes faster in most cases, partly due to the parallel execution of the agents but mainly due to the fact that CMA-ES needs longer processing time for high-dimensional problems, because the eigenvalue decompositions of the covariance matrix have to be conducted in $O(n^3)$, with $n$ the number of dimensions (Knight and Lunacek, 2007).

In order to scrutinize the impact of the position of the optimum (many benchmark functions have it at $\mathbf{x}^* = (0, \dots, 0)$), we conducted an experiment with randomly shifted objectives (cf. (Liang et al., 2013; Suganthan et al., 2005)) $f_T = f + (t_1, \dots, t_d)$, with $t \sim U(-\max_o, \max_o)$; $\max_o$ denotes the maximum offset and depends on the domain of the objective function. Figure 4 shows the result for the 50-dimensional Griewank function with the domain $[-1000, 1000]^{50}$. Five experiments with different magnitudes of random shift have been conducted. Depicted is the distribution of the residual Euclidean distance to the real optimum position. As expected, the residual error grows with the magnitude of the randomly shifted optimum position, but clearly stays within acceptable bounds, with a mean error (distance to the real optimum) not growing significantly larger than $10^{-8}$. Finally, we scrutinized the relationship between the number of objective evaluations used and the number of objective dimensions and thus the number of agents. Figure 5 shows the result for three example objective functions.

[Figure 5 plot: number of objective evaluations (axis scaled by $10^{5}$) over problem dimension (0 to 1,000) for the Ackley, Alpine, and Zakharov functions.]

Figure 5: Relationship between the number of problem dimensions (and thus number of agents) and the number of used objective evaluations.
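The shift experiment can be illustrated with a short sketch. It assumes that the shift translates the argument of the objective, so that the optimum moves from the origin to the offset vector; this is one reading of the notation above. The Griewank formula is the common textbook form, and the maximum offset of 100 and the seed are hypothetical values chosen only for the example.

```python
import math
import random


def griewank(x):
    """Common textbook form of the Griewank function, global minimum 0 at the origin."""
    total = sum(v * v for v in x) / 4000.0
    prod = 1.0
    for i, v in enumerate(x, start=1):
        prod *= math.cos(v / math.sqrt(i))
    return 1.0 + total - prod


def shifted(f, t):
    """Return f_T(x) = f(x - t): the optimum of f moves from x* to x* + t."""
    def f_shifted(x):
        return f([xi - ti for xi, ti in zip(x, t)])
    return f_shifted


rng = random.Random(42)
d = 50
max_offset = 100.0  # hypothetical maximum offset, not a value from the paper
t = [rng.uniform(-max_offset, max_offset) for _ in range(d)]
f_t = shifted(griewank, t)
```

With this construction an optimizer that implicitly favors the origin gains nothing, because `f_t` attains its minimum at `t` rather than at zero.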

5 CONCLUSION

We presented a new, decentralized approach for global optimization. The agent-based approach extends ideas from coordinate descent to decentralized gossiping protocols from the agent domain. An agent protocol for combinatorial problems from the smart grid domain has been adapted by integrating a Brent solver into the agent’s decision process. In this way, we achieved an approach that conducts coordinate descent asynchronously with the ability to escape local minima by searching different regions of the search space in parallel. As a result, we get improved results and gain speed by parallel execution, dimension reduction, and problem simplification.

At the same time, distribution of the objective can be achieved more easily because merely the parameter vector is constructed from information gathered from different agents; the objective function stays unchanged. We achieve an algorithmic-level problem distribution (Talbi, 2009) by automatically decomposing the objective into a set of 1-dimensional problems while using the full objective function for evaluation. The competitiveness of the proposed approach has been demonstrated in comparison with the well-established centralized approach CMA-ES.
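The decomposition into 1-dimensional problems that still evaluate the full objective can be illustrated with a deliberately simplified sketch. It is not the COHDAgo protocol: there are no agents, no messaging, and no asynchrony, only a sequential cyclic coordinate descent. A golden-section search stands in for the Brent solver, so each 1-dimensional step is only reliable on unimodal slices. All function names and the test objective are hypothetical.

```python
import math


def golden_section(g, lo, hi, tol=1e-8):
    """Minimize a 1-D function g on [lo, hi] (stand-in for a Brent solver)."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    gc, gd = g(c), g(d)
    while b - a > tol:
        if gc < gd:
            # Minimum lies in [a, d]; reuse c as the new upper probe.
            b, d, gd = d, c, gc
            c = b - inv_phi * (b - a)
            gc = g(c)
        else:
            # Minimum lies in [c, b]; reuse d as the new lower probe.
            a, c, gc = c, d, gd
            d = a + inv_phi * (b - a)
            gd = g(d)
    return (a + b) / 2.0


def coordinate_descent(f, x0, lo, hi, sweeps=30):
    """Cyclically optimize one coordinate at a time on the full objective f."""
    x = list(x0)
    for _ in range(sweeps):
        for i in range(len(x)):
            def slice_i(v, i=i):
                # Full objective; only coordinate i varies, the rest stay fixed.
                return f(x[:i] + [v] + x[i + 1:])
            x[i] = golden_section(slice_i, lo, hi)
    return x


def objective(x):
    """Non-separable convex quadratic with minimum at (8/3, -10/3)."""
    return (x[0] - 1.0) ** 2 + (x[1] + 2.0) ** 2 + x[0] * x[1]


x_min = coordinate_descent(objective, [0.0, 0.0], -10.0, 10.0)
print(x_min)
```

In the decentralized setting, each coordinate would be owned by one agent, and the fixed entries of `x` would come from the information the agent has gathered from the others rather than from a shared list.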

REFERENCES

Ahrari, A. and Shariat-Panahi, M. (2015). An improved evolution strategy with adaptive population size. Optimization, 64(12):2567–2586.

Bäck, T., Fogel, D. B., and Michalewicz, Z., editors (1997). Handbook of Evolutionary Computation. IOP Publishing Ltd., Bristol, UK, 1st edition.

Boyd, S., Ghosh, A., Prabhakar, B., and Shah, D. (2005).

Gossip algorithms: Design, analysis and applications.

In Proceedings IEEE 24th Annual Joint Conference of

the IEEE Computer and Communications Societies,

volume 3, pages 1653–1664. IEEE.

Bremer, J. and Lehnhoff, S. (2016a). Decentralized coalition formation in agent-based smart grid applications. In Highlights of Practical Applications of Scalable Multi-Agent Systems. The PAAMS Collection, volume 616 of Communications in Computer and Information Science, pages 343–355. Springer.

Bremer, J. and Lehnhoff, S. (2016b). A decentralized PSO with decoder for scheduling distributed electricity generation. In Squillero, G. and Burelli, P., editors, Applications of Evolutionary Computation: 19th European Conference EvoApplications (1), volume 9597 of Lecture Notes in Computer Science, pages 427–442, Porto, Portugal. Springer.

Brent, R. P. (1971). An algorithm with guaranteed convergence for finding a zero of a function. Comput. J., 14(4):422–425.

Colorni, A., Dorigo, M., Maniezzo, V., et al. (1991). Distributed optimization by ant colonies. In Proceedings of the first European conference on artificial life, volume 142, pages 134–142. Paris, France.

Dekker, T. (1969). Finding a zero by means of successive linear interpolation. Constructive aspects of the fundamental theorem of algebra, pages 37–51.

Hansen, E. (1980). Global optimization using interval analysis – the multi-dimensional case. Numer. Math., 34(3):247–270.

Hansen, N. (2011). The CMA Evolution Strategy: A Tutorial. Technical report.

Hansen, N. and Ostermeier, A. (2001). Completely derandomized self-adaptation in evolution strategies. Evol. Comput., 9(2):159–195.

Hansen, P., Jaumard, B., and Lu, S.-H. (1992). Global optimization of univariate Lipschitz functions II: New algorithms and computational comparison. Math. Program., 55(3):273–292.

Hinrichs, C., Bremer, J., and Sonnenschein, M. (2013a).

Distributed Hybrid Constraint Handling in Large

Scale Virtual Power Plants. In IEEE PES Conference

on Innovative Smart Grid Technologies Europe (ISGT

Europe 2013). IEEE Power & Energy Society.


Hinrichs, C., Lehnhoff, S., and Sonnenschein, M. (2014). A Decentralized Heuristic for Multiple-Choice Combinatorial Optimization Problems. In Operations Research Proceedings 2012, pages 297–302. Springer.

Hinrichs, C., Sonnenschein, M., and Lehnhoff, S. (2013b). Evaluation of a Self-Organizing Heuristic for Interdependent Distributed Search Spaces. In Filipe, J. and Fred, A. L. N., editors, International Conference on Agents and Artificial Intelligence (ICAART 2013), volume 1 – Agents, pages 25–34. SciTePress.

Horst, R. and Pardalos, P. M., editors (1995). Handbook of

Global Optimization. Kluwer Academic Publishers,

Dordrecht, Netherlands.

Karaboga, D. and Basturk, B. (2007). A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. Journal of Global Optimization, 39(3):459–471.

Knight, J. N. and Lunacek, M. (2007). Reducing the space-time complexity of the CMA-ES. In Genetic and Evolutionary Computation Conference, pages 658–665.

Li, G. and Wang, Q. (2014). A cooperative harmony search algorithm for function optimization. Mathematical Problems in Engineering, 2014.

Li, Y., Mascagni, M., and Gorin, A. (2009). A decentralized parallel implementation for parallel tempering algorithm. Parallel Computing, 35(5):269–283.

Liang, J., Qu, B., Suganthan, P., and Hernández-Díaz, A. G. (2013). Problem definitions and evaluation criteria for the CEC 2013 special session on real-parameter optimization. Technical Report 201212, Computational Intelligence Laboratory, Zhengzhou University, Zhengzhou, China and Nanyang Technological University, Singapore.

Loshchilov, I., Schoenauer, M., and Sebag, M. (2012). Self-adaptive surrogate-assisted covariance matrix adaptation evolution strategy. CoRR, abs/1204.2356.

Luo, Z. Q. and Tseng, P. (1992). On the convergence of the coordinate descent method for convex differentiable minimization. Journal of Optimization Theory and Applications, 72(1):7–35.

Lust, T. and Teghem, J. (2010). The multiobjective multidimensional knapsack problem: a survey and a new approach. CoRR, abs/1007.4063.

Mishra, S. K. (2006). Some new test functions for global optimization and performance of repulsive particle swarm method. Technical Report SSRN 926132, North-Eastern Hill University, Shillong (India).

Nieße, A. (2015). Verteilte kontinuierliche Einsatzplanung

in Dynamischen Virtuellen Kraftwerken. PhD thesis.

Nieße, A., Beer, S., Bremer, J., Hinrichs, C., Lünsdorf, O., and Sonnenschein, M. (2014). Conjoint dynamic aggregation and scheduling for dynamic virtual power plants. In Ganzha, M., Maciaszek, L. A., and Paprzycki, M., editors, Federated Conference on Computer Science and Information Systems - FedCSIS 2014, Warsaw, Poland.

Nieße, A. and Sonnenschein, M. (2013). Using grid related cluster schedule resemblance for energy rescheduling - goals and concepts for rescheduling of clusters in decentralized energy systems. In Donnellan, B., Martins, J. F., Helfert, M., and Krempels, K.-H., editors, SMARTGREENS, pages 22–31. SciTePress.

Ortega, J. M. and Rheinboldt, W. C. (1970). Iterative solution of nonlinear equations in several variables.

Picard, G. and Glize, P. (2006). Model and analysis of local decision based on cooperative self-organization for problem solving. Multiagent Grid Syst., 2(3):253–265.

Poli, R., Kennedy, J., and Blackwell, T. (2007). Particle

swarm optimization. Swarm Intelligence, 1(1):33–57.

Rahnamayan, S., Tizhoosh, H. R., and Salama, M. M. (2007). A novel population initialization method for accelerating evolutionary algorithms. Computers & Mathematics with Applications, 53(10):1605–1614.

Rigling, B. D. and Moore, F. W. (1999). Exploitation of

sub-populations in evolution strategies for improved

numerical optimization. Ann Arbor, 1001:48105.

Salomon, R. (1996). Re-evaluating genetic algorithm performance under coordinate rotation of benchmark functions. A survey of some theoretical and practical aspects of genetic algorithms. Biosystems, 39(3):263–278.

Simon, D. (2013). Evolutionary Optimization Algorithms.

Wiley.

Suganthan, P. N., Hansen, N., Liang, J. J., Deb, K., Chen, Y. P., Auger, A., and Tiwari, S. (2005). Problem Definitions and Evaluation Criteria for the CEC 2005 Special Session on Real-Parameter Optimization. Technical report, Nanyang Technological University, Singapore.

Talbi, E. (2009). Metaheuristics: From Design to Implementation. Wiley Series on Parallel and Distributed Computing. Wiley.

Tseng, P. (2001). Convergence of a block coordinate

descent method for nondifferentiable minimization.

Journal of Optimization Theory and Applications,

109(3):475–494.

Ulmer, H., Streichert, F., and Zell, A. (2003). Evolution strategies assisted by Gaussian processes with improved pre-selection criterion. In IEEE Congress on Evolutionary Computation, CEC 2003, pages 692–699.

Vanneschi, L., Codecasa, D., and Mauri, G. (2011). A comparative study of four parallel and distributed PSO methods. New Generation Computing, 29(2):129–161.

Watts, D. and Strogatz, S. (1998). Collective dynamics of

’small-world’ networks. Nature, (393):440–442.

Wright, S. J. (2015). Coordinate descent algorithms. Mathematical Programming, 151(1):3–34.

Yao, X., Liu, Y., and Lin, G. (1999). Evolutionary programming made faster. IEEE Trans. Evolutionary Computation, 3(2):82–102.
