Model-based Learning Assessment Management

Antonello Calabrò², Francesca Lonetti², Eda Marchetti², Sarah Zribi¹ and Tom Jorquera¹

¹ Linagora, 75 route de revel, 31400 Toulouse, France

² Istituto di Scienza e Tecnologie dell’Informazione “A. Faedo”, CNR, Pisa, Italy

Keywords:

Model-based Learning, Simulation, Monitoring, Business Process.

Abstract:

Model-based simulation and monitoring are becoming part of advanced learning environments. In this paper, we propose a model-based simulation and monitoring framework for managing learning assessment, and we describe its architecture and main functionalities. The proposed framework enables user-friendly learning simulation with strong support for collaboration and social interaction. Moreover, it monitors the learners’ behavior during simulation execution and computes the learning scores needed to assess learner knowledge. Preliminary feedback from evaluating the simulation and monitoring framework in a real environment is also reported.

1 INTRODUCTION

Nowadays, simulation and monitoring activities are not a novelty, and their application, jointly or separately, can be found and proven in the most diverse contexts: from medicine to avionics, from banking to automotive, and so on. Regardless of the context, simulation usually attempts to mimic real-life or hypothetical behavior in order to see how processes, systems or hardware devices can be improved and to predict their performance under different circumstances. Monitoring, in turn, commonly focuses on data collection and supervision of activities during the real-life execution of processes, systems or hardware components, to ensure that they are on course and on schedule in meeting their objectives and performance targets. Currently, in the software engineering area, simulation and monitoring activities are moving towards the use of the Business Process Modeling Notation (BPMN) (Brocke and Rosemann, 2014), mainly because it provides accepted and concise definitions and taxonomies and makes it possible to develop an executable framework for managing the process as a whole. Indeed, Business Process modeling enables the use of methods, techniques, and tools to support the design, enactment and analysis of the business process, and provides an excellent basis for simulation and monitoring purposes. Examples can be found in different environments, for instance the clinical one, for assessing and managing patient treatment; the financial sector, for verifying and checking bank processes; or the learning context, for guiding and assessing training activities. In all these application contexts, a key role is played by the data collected during business process execution or simulation, which make it possible to reason about and/or improve the overall performance of the business process itself. Considering in particular the learning context, Business Process (BP) simulation and monitoring commonly enhance students’ learning and problem solving, thereby improving their knowledge. Thus, these two activities are becoming a foundation for improving learners’ skills, enhancing teaching performance and providing a comprehensive framework. Indeed, different conceptual and mathematical models have been proposed for model-based learning, and several types of simulation, including discrete-event and continuous process simulations, have been considered (Blumschein et al., 2009). However, the main challenges of existing learning simulation and monitoring proposals concern collaborative simulation, gamification and the resulting learning benefits. In particular, gamification, which uses game-based mechanisms and game thinking to engage, motivate action, promote learning and solve problems (Kapp, 2012), is becoming one of the main challenges in simulation activities. Moreover, rewarding strategies are encouraged in order to stimulate intrinsic motivation within the members of a community.

In this paper, we address model-based learning focusing on BPMN, and we present a simulation and monitoring framework able to support collaboration and social interactions, as well as process visualization, monitoring and learning assessment. The proposed approach can be compared to a collaborative game in which a team of players, composed of one coach and any number of learners, work together in order to achieve a common goal. Consequently, the main objective is to provide an easy-to-use and user-friendly environment that lets learners take part in the process when their turn comes, assuming different roles according to the content they have to learn. The principal contribution of this paper is the architecture of a framework for simulation and monitoring of model-based learning that is able to provide feedback for evaluating learner competency and collaborative learning activities.

Calabrò, A., Lonetti, F., Marchetti, E., Zribi, S. and Jorquera, T.

Model-based Learning Assessment Management.

DOI: 10.5220/0005846007430752

In Proceedings of the 4th International Conference on Model-Driven Engineering and Software Development (MODELSWARD 2016), pages 743-752

ISBN: 978-989-758-168-7

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The proposed simulation and monitoring framework has been applied to a case study developed within the Learn PAd project in the context of the Marche Region public administration, and important feedback and hints for improving the framework itself have been collected over the duration of the Learn PAd project.

In the rest of the paper, we first briefly introduce some background concepts and related work (Section 2); then, in Section 3, we present the main components of the simulation and monitoring framework architecture, whereas in Section 4 we describe its main functionalities. Finally, Section 5 shows the application of the proposed framework to a case study, and the last section concludes the paper.

2 BACKGROUND AND RELATED WORK

The proposal of a simulation and monitoring framework for model-based learning originated in the context of the Model-Based Social Learning for Public Administrations (Learn PAd) European project (LearnPAd, ), which addresses the challenges set out in the “ICT-2013.8.2 Technology-enhanced learning” work programme. The Learn PAd project envisions an innovative holistic e-learning platform for Public Administrations (PAs) that enables process-driven learning and fosters cooperation and knowledge sharing. The main Learn PAd objectives include: i) a new concept of model-based e-learning (covering both process and knowledge); ii) open and collaborative e-learning content management; iii) automatic, learner-specific and collaborative content quality assessment; and finally iv) automatic model-driven, simulation-based learning and assessment. The Learn PAd platform will support an informative learning approach based on enriched BP models, as well as a procedural learning approach based on simulation and monitoring that will allow users to learn by doing.

In the learning context, BP simulation approaches are very popular, since learners prefer simulation exercises to either lectures or discussions (Anderson and Lawton, 2008). Simulations have been used to teach procedural skills, for training on software applications and industrial control operations, and for learning domain-specific concepts and knowledge, such as business management strategies (Clark and Mayer, 2011). Nowadays, more attention is given to business process oriented analysis and simulation (Jansen-Vullers and Netjes, 2006). Studies have shown that the overall purpose of existing business process simulation platforms is to evaluate and redesign BPs, whereas in recent years simulation/gaming has been establishing itself as a discipline (Crookall, 2010). However, these platforms present several shortcomings regarding their applicability to a collaborative learning approach. Namely, no existing platform brings together all the main functionalities of a learning simulation solution, such as facilities for providing a controlled and flexible simulated environment (for example, allowing users to switch between the possible outcomes of a task in order to explore the different paths of a process) and good visualization and monitoring of a process execution flow (in order both to assist and to evaluate the learners) (Crookall, 2010). The main challenges of a learning simulation concern collaborative simulation and the resulting learning benefits. To address all of these concerns, this paper designs a new learning simulation and monitoring framework that provides flexible simulation with strong support for collaboration and social interactions, as well as process visualization, monitoring and learner assessment.

Concerning monitoring, existing works (Bertoli et al., 2013) combine modeling and monitoring facilities for business processes. PROMO (Bertoli et al., 2013) allows users to model, monitor and analyze business processes. It provides an editor for defining the KPIs (Key Performance Indicators) of interest to be monitored, as well as facilities for specifying aggregation and monitoring rules. Our proposal is different, since it addresses a flexible, adaptable and dynamic monitoring infrastructure that is independent of any specific business process modeling notation and execution engine. Other approaches (Maggi et al., 2011) focus on monitoring business constraints at runtime by means of temporal logic and colored automata. They allow continuous compliance checking with respect to a predefined business process constraint model, as well as recovery after the first violation. Differently from these approaches, the proposed solution does not allow taking countermeasures to recover from violations of the defined performance constraints. Moreover, in our solution these constraints are not specified in the business process; instead, they are dynamically defined as monitoring properties that can be applied to different business process notations.

LMCO 2016 - Special Session on Learning Modeling in Complex Organizations

In the context of learning, monitoring solutions can be used to provide feedback on training sessions and to allow KPI evaluation. Some learning systems, such as the one in (Adesina and Molloy, 2010), propose customized learning paths that learners can follow according to their knowledge, learning requirements or learning disabilities. Learning pathways are changed and managed, and learning material is adapted, according to the monitored data. However, contemporary Learning Content Management Systems (LCMS) provide rather basic feedback and monitoring facilities about the learning process, such as simple statistics on technology usage or low-level data on student activities (e.g., page views). Some tools have been developed to provide feedback on learning tasks through the analysis of user tracking data and the monitoring of the simulation activity. The authors of (Ali et al., 2012), for instance, propose LOCO-Analyst, an educational tool aimed at providing educators with feedback on relevant aspects of the learning process taking place in a web-based learning environment, such as the usage and comprehensibility of the learning content or contextualized social interactions among students (i.e., social networking). The main goal of these tools is to support educators in creating courses, viewing the feedback on those courses, and modifying the courses accordingly. Differently from these solutions, other proposals (Calabrò et al., 2015b; Calabrò et al., 2015a) focus on model-based learning and on monitoring of business process execution. Specifically, (Calabrò et al., 2015b) presents a flexible and adaptable monitoring infrastructure for business process execution, together with a critical comparison of the proposed framework with the closest related works, whereas (Calabrò et al., 2015a) presents an integrated framework that allows modeling, execution and analysis of business processes based on a flexible and adaptable monitoring infrastructure. The main advantage of this last solution is that it is independent of any specific business process modeling notation and execution engine and allows the definition and evaluation of user-specific KPI measures. The monitoring framework presented in this paper has been inspired by the monitoring architecture presented in (Calabrò et al., 2015b; Calabrò et al., 2015a). It includes new components specifically devoted to computing the evaluation scores used for learning assessment.

3 SIMULATION AND MONITORING FRAMEWORK ARCHITECTURE

In this section, we describe the high level architecture

of the proposed simulation and monitoring frame-

work, its main components, their purpose, the inter-

faces they expose, and how they interact with each

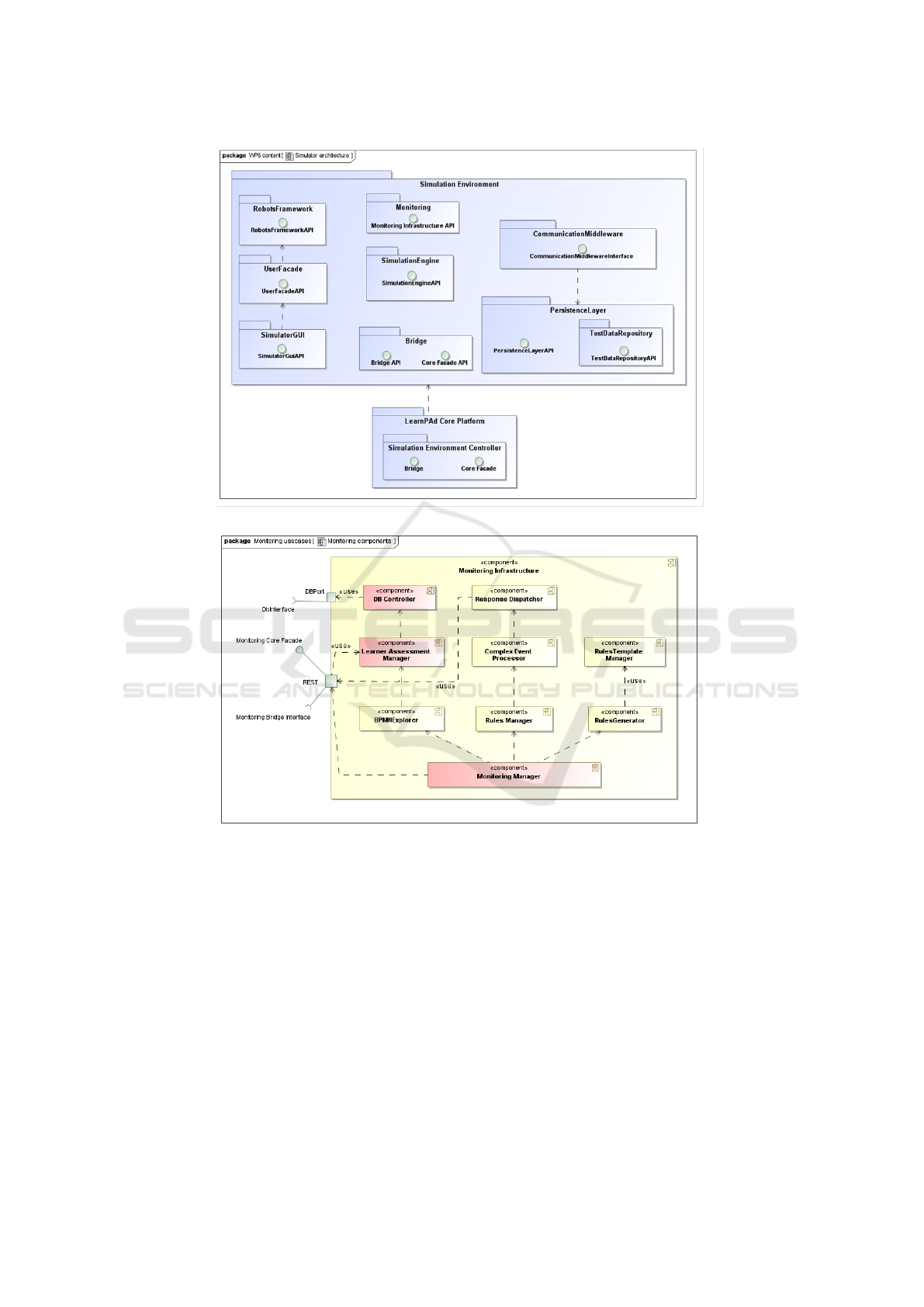

others. In particular, as depicted in Figure 1 each

component is exposed as a service and provides an

API as a unique point of access. Inside the Learn PAd

infrastructure, the proposed simulation framework in-

teracts with the Learn PAd components by means of

the Learn PAd Core Platform and specifically through

the Bridge and the Core Facade interfaces. Moreover,

in the Learn PAd vision two levels of learners have

been considered: the civil servant who is the stan-

dard learner, and the civil servant coordinator

who is a generalization of the civil servant who is in

charge to activate and manage a simulation session.

The simulation framework components are:

SimulationGUI: it is in charge of the interactions between learners and the simulator’s components. It provides different features, such as chatting, receiving notifications, interacting with other learners, and reading and searching documents or links to material useful during the simulation activity.

PersistenceLayer: it stores the status of the simulation at each step (i.e. each executed BP task) in order to give the civil servant the ability to stop the simulation and restart it when needed. Its main subcomponents are: i) the Logger, which is in charge of storing time-stamped event data coming from the simulation engine; ii) the BPStateStorage, which allows storing, retrieving, updating and deleting the state of a given simulation associated with a BP; iii) the TestDataRepository, which collects the historical data related to simulation executions.

RobotFramework: it simulates the behavior of civil servants by means of robots. The robots are implemented on the basis of the available historical data, i.e. the data saved in the TestDataRepository during a previous simulation session and provided by an expert who takes the role of the civil servant.

SimulationEngine: this is the core component of the simulation framework. It enacts business processes and links activities with the corresponding civil servants or robots.

Monitoring: it collects the events that occur during the simulation and infers rules related to the business process execution.

Communication Middleware: it provides event-based communication facilities between the simulation components according to the publish/subscribe paradigm.

Figure 1: Simulation Framework Architecture.

Figure 2: Monitoring Framework Architecture.

UserFacade: it is in charge of encapsulating real or simulated civil servants (i.e. robots) in order to make the learner interaction transparent to the other components of the architecture.
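The Communication Middleware description above can be illustrated with a minimal in-process publish/subscribe sketch. It is not the Learn PAd middleware: the `EventBus` class, the topic name `task.completed` and the event fields are hypothetical, and a real deployment would presumably rely on a networked broker. The sketch only shows how one event published by the simulation engine reaches every subscribed component (here, stand-ins for the Logger and the Monitoring component) without the publisher knowing who they are.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """Hypothetical in-process stand-in for the publish/subscribe Communication Middleware."""

    def __init__(self) -> None:
        # topic name -> callbacks of the components subscribed to that topic
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event to every subscriber of the topic; return how many received it."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(event)
        return len(handlers)


# Usage: the simulation engine publishes, the Logger and Monitoring stand-ins consume.
bus = EventBus()
logged: List[Event] = []
monitored: List[Event] = []
bus.subscribe("task.completed", logged.append)
bus.subscribe("task.completed", monitored.append)
delivered = bus.publish("task.completed", {"session": "s1", "task": "CheckDocuments", "user": "cs-42"})
```

Decoupling producers from consumers in this way is what lets a component such as the Monitoring be added or replaced without touching the simulation engine.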

In the following, more details about the simulation engine and the monitoring components are provided. Further details about the simulation and monitoring design can be found in (Zribi et al., 2016a).

3.1 Simulation Engine

The simulation engine is in charge of simulating a given business process instance. It takes the form of an orchestration engine that invokes the treatments associated with each activity of the current process. Such a workflow may involve multiple civil servants taking different roles, who may or may not be present. For those who are not available, robots are used to mimic their behavior. A simulation manager is provided to manage the BP lifecycle according to the current context (create, stop, resume, kill, etc.).

Business processes are made of two kinds of activities: i) human activities involve civil servants who must provide information in order to complete the task; the concept of human activity is used to specify work that has to be accomplished by people; ii) mocked activities involve robots that compute the treatment associated with the activity. When the simulation engine invokes a human activity, the corresponding civil servant is asked to provide input through a form. These forms are managed by a form engine that delegates the task to a robot if necessary. All the state information necessary to restart a specific simulation is stored “on the fly”. The civil servant may decide to freeze a running simulation, store it, backtrack to a previously stored state, and log out; he/she will be able to resume it later.
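The interplay between human activities, mocked activities and on-the-fly state storage can be sketched as follows. This is an illustrative Python sketch, not the Learn PAd SimulationEngine: the `Activity` and `SimulationSession` classes, the role names, and the simplification that a robot just replays an answer recorded in historical data (in the spirit of the RobotFramework and TestDataRepository) are all assumptions. After every executed task the session stores a snapshot, so that a civil servant can later backtrack to a previously stored state.

```python
import copy
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Activity:
    name: str
    role: str
    human: bool  # True: human activity (form); False: mocked activity (robot)


@dataclass
class SimulationSession:
    activities: List[Activity]
    # role -> callback returning the form answer of a present civil servant
    participants: Dict[str, Callable[[str], dict]]
    # hypothetical historical answers, standing in for the TestDataRepository
    history: Dict[str, dict]
    step: int = 0
    data: Dict[str, dict] = field(default_factory=dict)
    snapshots: List[dict] = field(default_factory=list)

    def _robot(self, activity: Activity) -> dict:
        # a robot replays the answer recorded during an earlier session
        return dict(self.history[activity.name])

    def run_step(self) -> Optional[str]:
        if self.step >= len(self.activities):
            return None  # process instance completed
        activity = self.activities[self.step]
        player = self.participants.get(activity.role)
        if activity.human and player is not None:
            answer = player(activity.name)
        else:
            answer = self._robot(activity)  # form delegated to a robot
        self.data[activity.name] = answer
        self.step += 1
        # store the state "on the fly" so the session can be resumed or backtracked
        self.snapshots.append({"step": self.step, "data": copy.deepcopy(self.data)})
        return activity.name

    def backtrack(self, snapshot_index: int) -> None:
        snap = self.snapshots[snapshot_index]
        self.step = snap["step"]
        self.data = copy.deepcopy(snap["data"])
        del self.snapshots[snapshot_index + 1:]  # later states are discarded


acts = [
    Activity("ReceiveRequest", role="clerk", human=True),
    Activity("CheckDocuments", role="officer", human=True),  # no officer present: robot
    Activity("IssuePermit", role="system", human=False),     # mocked activity
]
session = SimulationSession(
    activities=acts,
    participants={"clerk": lambda task: {"ok": True, "by": "human"}},
    history={"CheckDocuments": {"ok": True, "by": "robot"},
             "IssuePermit": {"ok": True, "by": "robot"}},
)
executed = [session.run_step() for _ in range(3)]
played_by = {name: answer["by"] for name, answer in session.data.items()}
session.backtrack(0)  # go back to the state stored after the first task
```

Keeping full snapshots is the simplest way to support backtracking; an implementation storing only deltas would trade memory for more complex restore logic.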

The Business Process orchestrator is in charge of the step-by-step execution of a given BP instance. Such a BP instance is made of a BPMN description enriched with the necessary run-time information, such as the endpoints of software application mocks, user ids, etc. The BP engine is connected with the Forms Engine in order to handle the input/output of users and robots. In this paper we rely on Activiti (act, 2015) as the business process execution engine. In order to collect inputs from learners during a simulation session, a form engine has been defined to design and run the corresponding forms. The Forms Engine allows dynamic form creation and complex form processing for web applications. Processing a form involves verifying the input data, computing inputs from the information in other input fields, and dynamically activating or hiding data fields depending on the user input. Our solution adopts the JavaScript form editor called FormaaS, which allows designing and running JavaScript forms and quickly defining forms and executable code.
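The three processing steps listed for the Forms Engine (input verification, computation of fields from other fields, dynamic show/hide) can be sketched as below. FormaaS itself is JavaScript and its API is not described here, so this Python function is only a language-neutral illustration; `process_form` and the permit-fee form fields are hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

Form = Dict[str, Any]


def process_form(
    values: Form,
    validators: Dict[str, Callable[[Any], bool]],
    derived: Dict[str, Callable[[Form], Any]],
    visibility: Dict[str, Callable[[Form], bool]],
) -> Tuple[Form, List[str], List[str]]:
    """Return (processed values, names of invalid fields, names of hidden fields)."""
    # 1. Verify the input data.
    errors = [name for name, is_valid in validators.items() if not is_valid(values.get(name))]
    # 2. Compute fields from the information in other input fields.
    result = dict(values)
    for name, compute in derived.items():
        result[name] = compute(result)
    # 3. Activate or hide fields depending on the user input.
    hidden = [name for name, is_shown in visibility.items() if not is_shown(result)]
    return result, errors, hidden


# Usage with a hypothetical permit-fee form.
validators = {
    "applicant": lambda v: bool(v),
    "area_m2": lambda v: isinstance(v, (int, float)) and v > 0,
}
derived = {"fee": lambda f: f["area_m2"] * (5 if f["commercial"] else 2)}
visibility = {"business_licence": lambda f: f["commercial"]}

result, errors, hidden = process_form(
    {"applicant": "Rossi", "area_m2": 120, "commercial": False}, validators, derived, visibility
)
```

Here the non-commercial applicant gets a fee of 240 and the business licence field stays hidden; a commercial applicant would see it.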

3.2 Monitoring

The simulation framework is equipped with a mon-

itoring facility that allows to provide feedback on

the business process execution and learning activi-

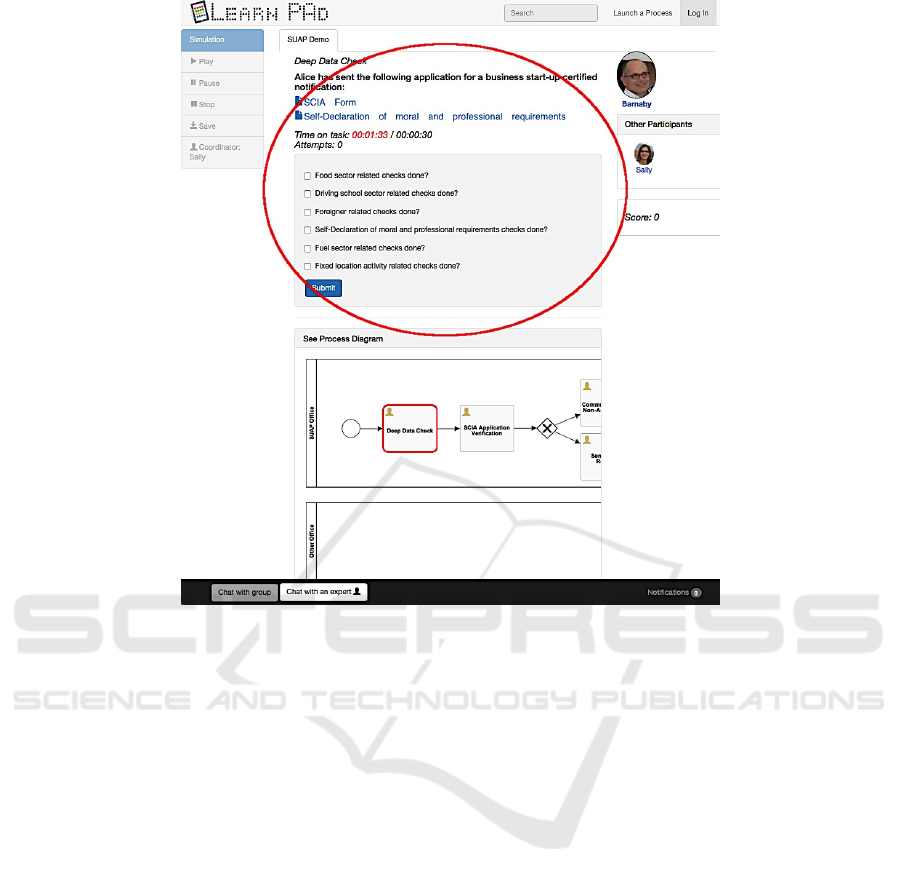

ties. Figure 2 shows the architecture of the proposed

monitoring infrastructure. The design of this monitor-

ing infrastructure has been inspired by (Calabr

`

o et al.,

2015b).

For readability, we list below the monitoring components presented in (Calabrò et al., 2015b) and refer to (Calabrò et al., 2015b) for the complete description of their functionalities:

• Complex Event Processor (CEP). It is the rule engine, which analyzes the events generated by the business process execution.

• BPMN explorer. It is in charge of exploring and saving all the possible entities (Activity Entity, Sequence Flow Entity, Path Entity) reachable in a BPMN model.

• Rules Generator. It is the component in charge of generating the rules needed for monitoring the business process execution.

• Rules Template Manager. It is an archive of predetermined rule templates that are instantiated by the Rules Generator.

• Rules Manager. It is in charge of loading and unloading sets of rules into the complex event processor and firing them when needed.

• Response Dispatcher. It is a registry that keeps track of the monitoring requests sent to the monitoring infrastructure.
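To make the division of labour among the BPMN explorer, Rules Template Manager, Rules Generator, Rules Manager and CEP concrete, here is a toy Python sketch. It is a deliberate simplification resting on assumptions: the template syntax, the `completed_<activity>` rule names and the event fields are hypothetical, and a real CEP evaluates rules written in its own rule language over event streams rather than Python predicates.

```python
from dataclasses import dataclass
from string import Template
from typing import Callable, Dict, List

Event = Dict[str, str]


@dataclass
class Rule:
    name: str
    condition: Callable[[Event], bool]


# Rules Template Manager stand-in: an archive of predetermined, parameterized rule templates.
TEMPLATES: Dict[str, Template] = {"activity_completed": Template("completed_${activity}")}


def generate_rules(activity_entities: List[str]) -> List[Rule]:
    """Rules Generator stand-in: one rule per activity entity found by the BPMN explorer."""
    rules = []
    for activity in activity_entities:
        name = TEMPLATES["activity_completed"].substitute(activity=activity)
        # bind `activity` as a default argument so each lambda keeps its own value
        rules.append(Rule(name, lambda e, a=activity: e.get("type") == "completed" and e.get("activity") == a))
    return rules


class ToyCEP:
    """Toy complex event processor: fires every loaded rule whose condition matches an event."""

    def __init__(self) -> None:
        self.rules: List[Rule] = []

    def load(self, rules: List[Rule]) -> None:  # Rules Manager: load a rule set
        self.rules.extend(rules)

    def unload(self, name: str) -> None:  # Rules Manager: unload a rule
        self.rules = [r for r in self.rules if r.name != name]

    def on_event(self, event: Event) -> List[str]:
        return [r.name for r in self.rules if r.condition(event)]


cep = ToyCEP()
cep.load(generate_rules(["ReceiveRequest", "CheckDocuments"]))
fired = cep.on_event({"type": "completed", "activity": "CheckDocuments"})
```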

In this section, a refined and complete design of the monitoring infrastructure is presented, as depicted in Figure 2. It includes three new components (shown in pink in Figure 2):

• DBController. This component has been introduced to satisfy the Learn PAd requirement of storing simulation execution data. Specifically, the DBController manages the updating of the civil servant score during a simulation and the retrieval of historical data concerning the assessment level of the civil servants. The DBController interacts with the Learner Assessment Manager to get the different evaluation scores defined in Section 3.2.1.

• Learner Assessment Manager. It evaluates the learner activities and is in charge of calculating the different scores. More details about this component are given in Section 3.2.1.

• Monitoring Manager. It is the orchestrator of the overall Monitoring Infrastructure. It interacts with the Learn PAd Core Platform through the REST interfaces (core facade and bridge interface) and is in charge of querying the Rules Manager. It also interacts with the BPMN Explorer and the Rules Generator. This component initializes the overall monitoring infrastructure by allocating resources, instantiating the Complex Event Processor and instrumenting the channel on which events coming from the simulation engine will flow.

3.2.1 Learner Assessment Manager

During learning simulation, it is important to assess learning activities as well as to show civil servants their progress incrementally by displaying the evaluation scores they have achieved. To this end, the proposed simulation and monitoring component integrates a scoring mechanism in order to generate a ranking of the civil servants and data useful for rewarding. The Learner Assessment Manager component evaluates the learner activities and is in charge of calculating different scores useful for civil servant assessment. In addition, independently of any ongoing simulation, this component is in charge of retrieving the data necessary for score evaluation and updating them in a database. Data collected while monitoring business process execution can be used to provide feedback for continuous tracking of the process behavior and for measuring learning-specific goals. All scores computed by the Learner Assessment Manager are then stored in the DB through interaction with the DBController component. The evaluation scores computed by the Learner Assessment Manager relate both to the simulation of a session of the business process (session scores) and to the simulation of the overall business process (Business Process scores), as defined below.

Session Scores. The civil servant may simulate different learning sessions on the same business process, each one referring to a (different) path. During a simulation session, the Learner Assessment Manager computes the following scores:

• The session score (called session_score), i.e. the ongoing session score of each participating civil servant.

• An assessment value (called absolute_session_score), used as a boundary value for the session_score.

Specifically, the session_score is calculated as a weighted sum of the scores attributed to the civil servant for each task of the Business Process performed during the simulation. Let $n$ be the number of tasks executed by the civil servant during the learning session simulation and $P_i$ the weight of the $i$-th task; the session_score is computed as follows:

$$\mathit{session\_score} = \sum_{i=1}^{n} \mathit{task\_score}_i \cdot P_i$$

Each task of the Business Process is associated with a weight specified as metadata. These metadata are attributed in the Business Process definition by the modeler. The task score is calculated from several criteria, namely the number of attempts, success/failure and, finally, some predefined key performance indicators (KPIs, e.g. response time). It is computed with the following formula:

$$\mathit{task\_score} = \mathit{success} \cdot \left( \frac{1}{\mathit{nb\_attempts}} + \sum_{i=1}^{k} \frac{\mathit{expected\_KPI\_value}_i}{\mathit{observed\_KPI\_value}_i} \right)$$

where $k$ is the number of KPIs considered in the evaluation of the civil servant’s performance and success is a Boolean (1 if the task succeeded, 0 otherwise). Regarding the boundary values useful for learning assessment, the Learner Assessment Manager can provide the absolute_session_score, which represents the maximum score that could be assigned to the civil servant during a simulation session. Assuming that the maximum value of the task score is $k+1$ (which holds when the task succeeds at the first attempt and each KPI ratio is at most 1), the absolute_session_score is computed as:

$$\mathit{absolute\_session\_score} = \sum_{i=1}^{n} (k+1) \cdot P_i$$

The absolute_session_score thus provides a reference against which the accuracy of the session_score can be measured: a session_score value closer to the absolute_session_score represents a better performance of the civil servant in the considered simulation session.
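The session-level formulas can be checked with a small Python sketch that transcribes them directly. The `TaskResult` structure and the sample numbers are hypothetical. KPI ratios are not capped here, so a task that beats an expected KPI value can push its task score above $k+1$; this is why the absolute_session_score bound relies on the stated assumption.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class TaskResult:
    weight: float                   # P_i, set by the modeler as task metadata
    success: bool                   # Success/Fail of the task
    nb_attempts: int                # number of attempts (>= 1)
    expected_kpis: Sequence[float]  # expected KPI values, one per KPI (k values)
    observed_kpis: Sequence[float]  # observed KPI values, same order (must be non-zero)


def task_score(t: TaskResult) -> float:
    kpi_term = sum(e / o for e, o in zip(t.expected_kpis, t.observed_kpis))
    return int(t.success) * (1 / t.nb_attempts + kpi_term)


def session_score(tasks: List[TaskResult]) -> float:
    return sum(task_score(t) * t.weight for t in tasks)


def absolute_session_score(tasks: List[TaskResult], k: int) -> float:
    # upper bound assuming each task score is at most k + 1
    return sum((k + 1) * t.weight for t in tasks)


# One KPI (k = 1), e.g. response time in seconds.
tasks = [
    TaskResult(weight=2, success=True, nb_attempts=1, expected_kpis=[10], observed_kpis=[10]),   # 2 * (1 + 1.0)
    TaskResult(weight=1, success=True, nb_attempts=2, expected_kpis=[10], observed_kpis=[20]),   # 1 * (0.5 + 0.5)
    TaskResult(weight=1, success=False, nb_attempts=3, expected_kpis=[10], observed_kpis=[15]),  # failed: 0
]
score = session_score(tasks)                # 5.0
bound = absolute_session_score(tasks, k=1)  # 8
```

In this example the civil servant obtains 5 out of a possible 8 for the session; the failed task contributes nothing regardless of its KPI values.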

Business Process Scores. During learning simulation, the civil servant can execute different learning sessions on the same Business Process, each one referring to a different path. Therefore, the cumulative score obtained by the civil servant over the executed sessions is a good indicator of his/her knowledge of the overall Business Process. The Learner Assessment Manager is able to compute the following scores related to the business process:

• Business Process Score (called bp_score), i.e. the cumulative score obtained by the civil servant after executing different simulation sessions on the same business process. It represents the degree of knowledge of the Business Process activities acquired by the civil servant.

• Two assessment values (called relative_bp_score and absolute_bp_score) used as boundary values for the bp_score to evaluate the competency acquired by the civil servant on the executed business process. Specifically, the relative_bp_score is the maximum score that the civil servant can obtain on the set of simulated paths, whereas the absolute_bp_score is the maximum score that the civil servant can obtain on all the possible paths of the business process.

• A business process coverage percentage (called bp_coverage), i.e. the percentage of different learning sessions (paths) executed by the civil servant during the simulation of a business process. It represents the completeness of the civil servant’s knowledge about the overall business process.

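In the following, we provide more details about the above-mentioned scores. The bp_score is computed as the sum of the maximum session_score values obtained by the civil servant during the simulation of a set of $k$ different paths (out of the overall number of paths) of a business process. Here $k$ denotes the number of distinct simulated paths (not the number of KPIs used in the task score), and $\max(\mathit{session\_score}_i)$ is the highest session_score obtained on the $i$-th path:

$$\mathit{bp\_score} = \sum_{i=1}^{k} \max(\mathit{session\_score}_i)$$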

Considering a bp_score and the set of $k$ paths to which it relates, the relative_bp_score is the boundary value representing the maximum score that the civil servant can obtain on that set of $k$ paths. It is computed as the sum of the absolute_session_score of each of these paths, according to the following formula:

$$\mathit{relative\_bp\_score} = \sum_{i=1}^{k} \mathit{absolute\_session\_score}_i$$

Considering all the paths of the business process to which a bp_score relates, the absolute_bp_score is an additional boundary value representing the maximum score that the civil servant can reach. It is computed as the sum of the absolute_session_score over all the paths of the business process ($\#\mathit{path}$ denotes the total number of paths), according to the following formula:

$$\mathit{absolute\_bp\_score} = \sum_{i=1}^{\#\mathit{path}} \mathit{absolute\_session\_score}_i$$

The closer the bp_score is to the relative_bp_score, the closer the civil servant is to the maximum cumulative learning performance on the different simulated sessions. The closer the bp_score is to the absolute_bp_score, the more complete the civil servant’s knowledge about the overall business process.

Finally, the bp_coverage value is an additional measure for evaluating the completeness of the civil servant’s knowledge about the overall business process. It is computed as the ratio between the number $k$ of different paths executed by the civil servant during the simulation of a business process and the total number of paths $\#\mathit{path}$:

$$\mathit{bp\_coverage} = \frac{k}{\#\mathit{path}}$$

When the civil servant executes all the paths of the business process, the computed bp_coverage is 1 (i.e. 100%). A bp_coverage value closer to 1 represents a better performance of the civil servant in the considered business process simulation.
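The business-process-level scores can likewise be sketched in a few lines of Python, under the assumption that session scores are grouped by path and that the absolute_session_score of every path is known in advance. The function names mirror the score names in the text, while the paths `A`, `B`, `C` and all numbers are hypothetical.

```python
from typing import Dict, List


def bp_score(runs: Dict[str, List[float]]) -> float:
    """Sum, over the distinct simulated paths, of the best session_score on each path."""
    return sum(max(scores) for scores in runs.values() if scores)


def relative_bp_score(runs: Dict[str, List[float]], abs_session: Dict[str, float]) -> float:
    """Sum of absolute_session_score over the simulated paths only."""
    return sum(abs_session[path] for path, scores in runs.items() if scores)


def absolute_bp_score(abs_session: Dict[str, float]) -> float:
    """Sum of absolute_session_score over all #path paths of the process."""
    return sum(abs_session.values())


def bp_coverage(runs: Dict[str, List[float]], n_paths: int) -> float:
    """k / #path, where k is the number of distinct paths simulated at least once."""
    k = sum(1 for scores in runs.values() if scores)
    return k / n_paths


# Hypothetical process with three paths and their absolute_session_score values.
abs_session = {"A": 8.0, "B": 6.0, "C": 10.0}
# Session scores of one civil servant: path A twice, path B once, path C never.
runs = {"A": [3.0, 5.0], "B": [6.0]}

bp = bp_score(runs)                          # 5.0 + 6.0 = 11.0
rel = relative_bp_score(runs, abs_session)   # 8.0 + 6.0 = 14.0
abs_bp = absolute_bp_score(abs_session)      # 24.0
cov = bp_coverage(runs, n_paths=len(abs_session))  # 2 / 3
```

The example shows why both boundary values are useful: the learner reaches 11 of the 14 points available on the paths actually practiced, but only 11 of 24 overall, and a coverage of 2/3 flags that path C was never simulated.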

4 FUNCTIONAL SPECIFICATION OF THE LEARNING SIMULATION AND MONITORING FRAMEWORK

The simulation and monitoring framework provides the subsystem in which learners can simulate the business process interactively; it is used by one or multiple civil servants in order to learn processes. As mentioned in Section 3, the simulation and monitoring framework distinguishes between the following two actors: the civil servant coordinator, who is in charge of starting a simulation session, and the civil servant, who represents a generic participant in a simulation session. In particular, the civil servant coordinator can request to start a new simulation execution of a Public Administration business process, or he/she can manage an ongoing one, for instance by inviting or cancelling other civil servants. The civil servant coordinator can also restart or stop a current simulation session and designate a new coordinator. In turn, each civil servant has different possibilities, for instance joining, disconnecting from or pausing a simulation session, chatting, asking for evaluation or help, or managing his/her own profile.

The simulation and monitoring framework functionalities have been split into three different phases: i) Initialization, in which the simulation framework is set up; ii) Activation, in which the participants in the simulation are invited; iii) Execution, in which the participants effectively collaborate with each other during a learning session. During the Activation phase, the civil servant can select the type of simulation he/she wants to execute. Specifically, three different types of simulation are provided:

Individual Simulation. The civil servant decides to execute the simulation without interacting with other human participants. In this case, the other participants are emulated by means of robots (see Section 3 for more details). Robot instances are created before the simulation execution.

Collaborative Simulation. This type of simulation involves the collaboration of several human participants (no robot instances are involved). During a collaborative simulation, users can interact with each other using chat tools. This improves the performance of the overall learning session, thanks to the possibility of rapidly sharing experience among human participants. This kind of simulation can be considered the most interesting from the learning point of view, because cooperation can make learning procedures more intensive and productive. Different viewpoints will emerge, and the opportunity to reflect upon the issues encountered will help learners improve their knowledge and better understand the problem. To activate a simulation, the system requires that all the civil servants involved have joined the session, in order to provide an online collaborative environment. Moreover, the simulation and monitoring framework also supports some asynchronous task execution among simulation participants. If a civil servant does not satisfy the simulation requirements or time constraints, the civil servant coordinator may decide either to kick the civil servant, to swap him/her with another available civil servant, or to replace him/her with a robot.

Figure 3: Process Execution - Step1.

Mixed Simulation. This type of simulation requires the participation of both humans and robots. This usually happens when there are not enough civil servants to cover all the roles necessary to execute a BP, or when one or more civil servants leave the ongoing simulation (through disconnection or kick). A mixed simulation can be activated only if the following two constraints are met: i) the required robot instances are ready; ii) all the invited civil servants have completed the connection procedure.
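The phases and activation constraints described above can be summarized in a small sketch. It is a minimal illustration only, assuming that a session is described by the roles of the business process, the civil servants assigned to them (with their connection status) and the robot instances (with their readiness). The names `Session`, `can_activate` and `replace_with_robot`, the role names, and the rule that infers the simulation type from the session composition are all hypothetical and do not come from the framework's actual code.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    INITIALIZATION = "initialization"  # the simulation framework is set up
    ACTIVATION = "activation"          # participants are invited, simulation type is chosen
    EXECUTION = "execution"            # participants carry out the learning session


class SimulationType(Enum):
    INDIVIDUAL = "individual"        # one civil servant, other roles played by robots
    COLLABORATIVE = "collaborative"  # civil servants only, no robots
    MIXED = "mixed"                  # civil servants and robots


@dataclass
class Session:
    roles: list[str]                                       # roles required by the business process
    humans: dict[str, bool] = field(default_factory=dict)  # role -> has the civil servant joined?
    robots: dict[str, bool] = field(default_factory=dict)  # role -> is the robot instance ready?
    phase: Phase = Phase.ACTIVATION

    def simulation_type(self) -> SimulationType:
        # Hypothetical rule: infer the type from the current composition of the session.
        if not self.robots:
            return SimulationType.COLLABORATIVE
        if len(self.humans) == 1:
            return SimulationType.INDIVIDUAL
        return SimulationType.MIXED

    def can_activate(self) -> bool:
        # Every role must be covered by a civil servant or a robot ...
        if (set(self.humans) | set(self.robots)) != set(self.roles):
            return False
        # ... all invited civil servants must have joined and all robots must be ready.
        return all(self.humans.values()) and all(self.robots.values())

    def activate(self) -> bool:
        # Move from Activation to Execution only when the constraints hold.
        if self.phase is Phase.ACTIVATION and self.can_activate():
            self.phase = Phase.EXECUTION
            return True
        return False

    def replace_with_robot(self, role: str) -> None:
        # Coordinator action: a civil servant who misses requirements or deadlines is replaced.
        self.humans.pop(role, None)
        self.robots[role] = True  # assumes the robot instance is ready immediately


if __name__ == "__main__":
    s = Session(roles=["SUAP officer", "municipality office", "third-party administration"],
                humans={"SUAP officer": True, "municipality office": True,
                        "third-party administration": False})
    print(s.simulation_type().value, s.activate())  # collaborative False
    s.replace_with_robot("third-party administration")
    print(s.simulation_type().value, s.activate())  # mixed True
```

Folding the three simulation types into a single check (every role covered, every civil servant connected, every robot ready) mirrors the constraints stated in the text; an actual implementation may well handle each type separately.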

Both gamification and serious game concepts are also included in the proposed simulation and monitoring framework, so as to engage civil servants in the training tasks and activities to be learned. Specifically, two main gamification elements are included for educational purposes: i) progression, which allows learners to see their success visualized incrementally through the evaluation scores they achieve; ii) virtual rewards, which allow learners who satisfy certain conditions to be automatically awarded by the platform a specific certificate that grants them additional rights. For more details about the gamification model used in the proposed simulation and monitoring framework, we refer to (Zribi et al., 2016b).

During all types of simulation, the monitoring component checks whether the expected execution patterns are respected during the simulation of a business process. To do so, the simulation engine interacts with the monitoring component through a predefined set of messages specifying the events, detected failures and time values that are useful for evaluating the learner's competency and the non-functional properties of the simulation, such as the overall simulation completion time.
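The interplay between the simulation engine and the monitoring component, together with the two gamification elements, can be sketched as follows under strong simplifying assumptions: the message set is reduced to three hypothetical kinds (event, failure, time value), the execution pattern is reduced to a fixed sequence of task names, progression is approximated by the share of completed tasks rather than by the evaluation scores, and the certificate condition is invented for illustration. None of these names or rules reflect the framework's actual message format or gamification model.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Kind(Enum):
    EVENT = "event"      # a task of the business process has been completed
    FAILURE = "failure"  # a failure detected during the task, e.g. a wrong submission
    TIME = "time"        # a time value reported by the simulation engine


@dataclass(frozen=True)
class Message:
    kind: Kind
    task: str
    timestamp: float  # seconds since the start of the simulation session


class Monitor:
    def __init__(self, expected_order: list[str]):
        self.expected_order = expected_order  # simplified execution pattern: a fixed task sequence
        self.completed: list[str] = []
        self.failures = 0
        self.last_time = 0.0

    def receive(self, msg: Message) -> None:
        # Every message carries a time value; events and failures are also recorded.
        self.last_time = max(self.last_time, msg.timestamp)
        if msg.kind is Kind.EVENT:
            self.completed.append(msg.task)
        elif msg.kind is Kind.FAILURE:
            self.failures += 1

    def pattern_respected(self) -> bool:
        # Completed tasks must form a prefix of the expected sequence.
        return self.completed == self.expected_order[: len(self.completed)]

    def completion_time(self) -> Optional[float]:
        # Overall simulation completion time, known only once every expected task is done.
        return self.last_time if self.completed == self.expected_order else None

    def progression(self) -> float:
        # Progression element (simplified): share of the expected tasks completed so far.
        return len(self.completed) / len(self.expected_order)

    def earns_certificate(self, max_failures: int = 0) -> bool:
        # Virtual reward element: hypothetical award condition.
        return (self.pattern_respected()
                and self.completion_time() is not None
                and self.failures <= max_failures)


if __name__ == "__main__":
    m = Monitor(["receive request", "convene service conference", "issue decision"])
    for msg in [Message(Kind.EVENT, "receive request", 30.0),
                Message(Kind.FAILURE, "convene service conference", 75.0),
                Message(Kind.EVENT, "convene service conference", 120.0),
                Message(Kind.EVENT, "issue decision", 200.0)]:
        m.receive(msg)
    print(m.pattern_respected(), m.completion_time(), m.progression(), m.earns_certificate())
    # True 200.0 1.0 False
```

Keeping the monitor as a passive consumer of messages reflects the separation described in the text: the simulation engine only emits messages, while checking patterns and computing time-related properties is left to the monitoring side.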

LMCO 2016 - Special Session on Learning Modeling in Complex Organizations


Figure 4: Simulation Execution - Step2.

5 LEARN PAD SIMULATION AND MONITORING FRAMEWORK: AN APPLICATION EXAMPLE

In this section, we show the application of the proposed simulation and monitoring framework to a case study developed inside the Learn PAd project with the collaboration of SUAP (Sportello Unico per le Attività Produttive) officers from the public administrations of Senigallia and Monti Azzurri. The scenario refers to the activities that Italian Public Administrations have to put in place in order to allow entrepreneurs to set up a new company. In particular, the application scenario describes the Titolo Unico process, i.e. the standard request to start a business activity¹. Using the simulation and monitoring framework, Marche Region personnel can learn the steps necessary to organize a Service Conference, i.e. a meeting in which all involved participants (municipality offices, third-party administrations, and the entrepreneur) discuss a case and decide whether the application is acceptable or not.

In this case, before running a simulation, the user can set up through the available GUI the data needed for the simulation, for instance the process he/she wants to simulate among the available ones. Once the simulation is activated through the interface of the simulation and monitoring framework, the tasks to be completed are shown one by one, and information about time, number of attempts and errors is collected (Figure 3).

¹ Italian law D.P.R. 160/2010, Article 7.

Once a task is completed, the associated scores are computed and updated. In particular, if all the inputs provided by the user during the task simulation are evaluated as correct, the framework indicates that the task has been validated and displays the new tasks corresponding to the continuation of the process (Figure 4). Otherwise, the simulator indicates that the submission is incorrect.
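The task validation loop described above can be illustrated with the following minimal sketch. It assumes that each task has a fixed set of named inputs with known correct values and that the continuation of the process is given as a simple map from a task to its successors. `TaskRecord`, `next_tasks`, the task and field names, and the scoring rule (the inverse of the number of attempts) are hypothetical placeholders, since the framework's actual score definitions are not reproduced in this section.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TaskRecord:
    name: str
    expected: dict[str, str]  # hypothetical: the correct value of each input field
    attempts: int = 0
    errors: int = 0
    started: float = field(default_factory=time.monotonic)
    elapsed: Optional[float] = None  # seconds from task display to validation
    score: Optional[float] = None

    def submit(self, inputs: dict[str, str]) -> bool:
        """Evaluate one submission; the task is validated only if every input is correct."""
        self.attempts += 1
        wrong = [key for key, value in self.expected.items() if inputs.get(key) != value]
        if wrong:
            self.errors += len(wrong)
            return False  # the simulator reports the submission as incorrect
        self.elapsed = time.monotonic() - self.started
        self.score = 1.0 / self.attempts  # hypothetical scoring rule
        return True


def next_tasks(process: dict[str, list[str]], task: str) -> list[str]:
    # Tasks continuing the process after a validated task (hypothetical successor map).
    return process.get(task, [])


if __name__ == "__main__":
    process = {"check application": ["convene service conference"]}
    t = TaskRecord("check application", {"applicant_id": "X1", "form": "complete"})
    print(t.submit({"applicant_id": "X1", "form": "partial"}))  # False
    if t.submit({"applicant_id": "X1", "form": "complete"}):
        print(t.attempts, t.errors, t.score, next_tasks(process, t.name))
        # 2 1 0.5 ['convene service conference']
```

Time, attempts and errors are recorded on every submission, whereas the score is only set once the task is validated, matching the order of operations described in the text.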

During this first validation, the simulation and monitoring framework was used by several end users within the Italian Public Administration, and their comments and suggestions were collected. On the one hand, all users agreed that the framework is a very good means of improving the understanding and practice of the administrative process; on the other hand, they requested some improvements. These mainly concern the usability of the framework, especially for collaborative simulation, as well as score visualization and management. This validation provides a positive assessment of the simulation and monitoring framework and an important starting point for the next release of the learning system.


6 CONCLUSIONS

In this paper, a simulation and monitoring framework for learning has been presented, with a particular focus on the definition of its components and main functionalities. The proposed framework supports collaboration and social interactions, as well as process visualization, monitoring of learning activities and assessment. The proposed approach includes gamification concepts that are applied to the simulation of the tasks and activities of the business process to be learned, so as to engage users while training them. Evaluation scores related to both the simulation sessions and the overall business process simulation are defined and computed by the proposed simulation and monitoring framework for learning assessment purposes.

The application of the proposed framework to a case study developed inside the Learn PAd project, in the context of the Marche Region public administration, highlighted its value in improving the understanding and practice of the administrative process, as well as the possibility of executing collaborative simulations and providing learner assessment. Moreover, this real case study also provided important feedback for the improvement and extension of the framework itself during the project. Specifically, in the future we plan: i) to refine the design of some parts of the architecture, such as the Test Data Repository and the Robot; ii) to improve the usability of the framework as well as the visualization and management of evaluation scores; iii) to provide other learner evaluation scores that also take into account the number of errors made during the execution of a path; and finally iv) to evaluate the industrial significance and benefits of the proposed framework in different application areas of technology-enhanced learning.

ACKNOWLEDGEMENTS

This work has been partially funded by the Model-Based Social Learning for Public Administrations project (EU FP7-ICT-2013-11/619583).

REFERENCES

(2015). Activiti BPM Platform. http://activiti.org/.

Adesina, A. and Molloy, D. (2010). Capturing and monitoring of learning process through a business process management (BPM) framework. In Proc. of 3rd International Symposium for Engineering Education.

Ali, L., Hatala, M., Gašević, D., and Jovanović, J. (2012). A qualitative evaluation of evolution of a learning analytics tool. Computers & Education, 58(1):470–489.

Anderson, P. H. and Lawton, L. (2008). Business simulations and cognitive learning: Developments, desires, and future directions. Simulation & Gaming.

Bertoli, P., Dragoni, M., Ghidini, C., Martufi, E., Nori, M., Pistore, M., and Di Francescomarino, C. (2013). Modeling and monitoring business process execution. In Proc. of Service-Oriented Computing, pages 683–687. Springer.

Blumschein, P., Hung, W., and Jonassen, D. H. (2009). Model-based approaches to learning: Using systems models and simulations to improve understanding and problem solving in complex domains. Sense Publishers.

Brocke, J. v. and Rosemann, M. (2014). Handbook on business process management 1: Introduction, methods, and information systems.

Calabrò, A., Lonetti, F., and Marchetti, E. (2015a). KPI evaluation of the business process execution through event monitoring activity. In Proc. of Third International Conference on Enterprise Systems.

Calabrò, A., Lonetti, F., and Marchetti, E. (2015b). Monitoring of business process execution based on performance indicators. In Proc. of 41st Euromicro-SEAA, pages 255–258.

Clark, R. C. and Mayer, R. E. (2011). E-learning and the science of instruction: Proven guidelines for consumers and designers of multimedia learning. John Wiley & Sons.

Crookall, D. (2010). Serious games, debriefing, and simulation/gaming as a discipline. Simulation & Gaming, 41(6):898–920.

Jansen-Vullers, M. and Netjes, M. (2006). Business process simulation–a tool survey. In Workshop and Tutorial on Practical Use of Coloured Petri Nets and the CPN Tools, Aarhus, Denmark, volume 38.

Kapp, K. M. (2012). The gamification of learning and instruction: game-based methods and strategies for training and education. John Wiley & Sons.

LearnPAd. Model-Based Social Learning for Public Administrations European Project (EU FP7-ICT-2013-11/619583). http://www.learnpad.eu/.

Maggi, F. M., Montali, M., Westergaard, M., and Van Der Aalst, W. M. (2011). Monitoring business constraints with linear temporal logic: An approach based on colored automata. In Business Process Management, pages 132–147. Springer.

Zribi, S., Calabrò, A., Lonetti, F., Marchetti, E., Jorquera, T., and Lorré, J.-P. (2016a). Design of a simulation framework for model-based learning. In Proc. of Modelsward.

Zribi, S., Jorquera, T., and Lorré, J.-P. (2016b). Towards a flexible gamification model for an interoperable e-learning business process simulation platform. In Proc. of I-ESA.
