Switching Behavioral Strategies for Effective Team Formation by

Autonomous Agent Organization

Masashi Hayano, Yuki Miyashita and Toshiharu Sugawara

Dept. of Computer Science and Communications Engineering, Waseda University, 169-8555, Tokyo, Japan

Keywords:

Allocation Problem, Agent Network, Bottom-up Organization, Team Formation, Reciprocity.

Abstract:

In this work, we propose agents that switch their behavioral strategy between rationality and reciprocity de-

pending on their internal states to achieve efficient team formation. With the recent advances in computer

science, mechanics, and electronics, there are an increasing number of applications with services/goals that are

achieved by teams of different agents. To efficiently provide these services, the tasks to achieve a service must

be allocated to agents that have the required capabilities and the agents must not be overloaded. Conventional

distributed allocation methods often lead to conflicts in large and busy environments because high-capability

agents are likely to be identified as the best team member by many agents, resulting in inefficiency of the entire

system due to concentration of task allocation. Our proposed agents switch their strategies in accordance with

their local evaluation to avoid conflicts occurring in busy environments. They also establish an organization in

which a number of groups are autonomously generated in a bottom-up manner on the basis of dependability

in order to avoid the conflict in advance while ignoring tasks allocated by undependable/unreliable agents.

We experimentally evaluate our proposed method and analyze the structure of the organization that the agents

established.

1 INTRODUCTION

Recent advances in information technologies such

as the Internet have enabled computerized systems

to achieve on-demand and real-time controls/services

using timely data captured in the real world. Exam-

ples of applications based on such viewpoints include

the Internet of Things (IoT) (Stankovic, 2014), the

Internet of Services (IoS) (Nain et al., 2010), sensor

networks (Glinton et al., 2008), and grid/cloud com-

puting (Foster, 2002). Tasks to achieve required ser-

vices in these applications are realized within teams,

i.e., by combining a number of different software and

hardware nodes or agents that have specialized func-

tions. In these systems, the nodes are massive, are

located in a variety of positions, and operate in the

Internet autonomously since they are usually created

by different developers. Even so, they have to be

appropriately identified by their functions and per-

formance and then be allocated the suitable and ex-

ecutable components of a task (called a subtask here-

after) to achieve it. Mismatching or excessive allo-

cations of subtasks to agents, which are autonomous

programs to control hardware/software and/or to exe-

cute the allocated subtasks, result in the delay or fail-

ure of services. The teams of agents for the required

tasks need to simultaneously be formed in a timely

manner for timely service provision.

The task allocation problem described above is a

fundamental problem in computer science and many

studies have been conducted in the multi-agent sys-

tems (MAS) context to examine it. For example,

coalitional formation is a theoretical approach in

which (rational) agents find the optimal coalitional

structure (the set of agent groups) that provides the

maximal utilities for a given set of tasks (Dunin-

Keplicz and Verbrugge, 2010; Sheholy and Kraus,

1998). However, this approach assumes that the sys-

tems are static, relatively small and unbusy because it

assumes the (static) characteristic function to calcu-

late the utility of an agent set. It also requires high

computational costs to find (semi-)optimal solutions,

making it impractical when the systems are large and

busy. Another approach that is more closely related to

our method is team formation by rational agents. In

this framework, a number of leaders that commit to

form teams for tasks first select the agent appropriate

for executing each of the subtasks on the basis of the

learning of past interaction and solicit them to form a

team to execute the task. Agents that receive a num-


Hayano, M., Miyashita, Y. and Sugawara, T.

Switching Behavioral Strategies for Effective Team Formation by Autonomous Agent Organization.

DOI: 10.5220/0005748200560065

In Proceedings of the 8th International Conference on Agents and Artificial Intelligence (ICAART 2016) - Volume 1, pages 56-65

ISBN: 978-989-758-172-4

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

ber of solicitations accept one or a few of them de-

pending on their local viewpoints. When a sufficient

number of agents has accepted the solicitations, the

team can succeed in executing the target task. How-

ever, conflicts occur if many solicitations by leaders

are concentrated to only a few capable agents, espe-

cially in large and busy MAS, so the success rate of

team formation decreases in busy environments.

In the real world, people also often form teams to

execute tasks. Of course, we usually behave ratio-

nally, i.e., we decide who will provide the most util-

ity. However, if conflicts in forming teams are ex-

pected to occur and if no prior negotiation is possi-

ble, we often try to find reliable people with whom to

work in advance. Reliable people are usually identi-

fied through past success with cooperative work (Fehr

and Fischbacher, 2002). Furthermore, if the oppor-

tunities for group work are frequent, we try to form

implicit or explicit collaborative structures based on

(mutual) reliability. In an extreme case where team

work with unreliable people is required, we may ig-

nore or understate offers for the sake of possible fu-

ture proposals with more reliable people. Such be-

havior based on reciprocity may be irrational because

offers from non-reciprocal persons are expected to be

rewarding in at least some way. However, it can sta-

bilize collaborative relationships and reduce the pos-

sibility of conflicts in team formations. Thus, we can

expect steady benefits in the future through working

based on reciprocity. To avoid conflicts and improve

efficiency in group work in computerized systems, we

believe that agents should identify which agents are

cooperative and build the agent network on the basis

of mutual reliability that is appropriate for the patterns

and structures of the service requirements.

To avoid conflicts in team formation in large and

busy MAS, we propose a computational method of

enabling efficient team formations that have fewer

conflicts (thereby ensuring stability) by autonomously

generating reliability from reciprocity. The proposed

agents switch between two behavioral strategies, ra-

tionality and reciprocity: they initially form teams ra-

tionally and identify reliable agents through the suc-

cess of past team works and then identify a number

of reliable agents that behave reciprocally. Of course,

they return to the rational strategy if the reliable re-

lationships are dissolved. The concept behind this

proposal is that many conflicts occur in the regime of

only rational agents because such agents always pur-

sue their own utilities. Conversely, the regime of only

reciprocal agents experiences less conflict but seems

to constrain the behavior of some agents in the co-

operative structure without avail. We believe that the

appropriate ratio between rationality and reciprocity

will result in better performances. However, the re-

lationship between the ratios and performance, and

which agents in an agent network should behave ra-

tionally, remains to be clarified. Thus, we propose

agents that switch strategies in a bottom-up manner

by directly observing the reciprocal behavior of oth-

ers and the success rates of team formation.

This paper is organized as follows. In the next

section, we describe related work in task allocation

and reciprocity in human society. Section 3 presents

the model of our problem and framework and Sec-

tion 4 describes the proposed agents that adaptively

switch their behavioral strategies. Then, in Section 5

we experimentally evaluate the performance of team

formation with the regime of the proposed agents by

comparing it with those with regimes of only rational

agents and only cooperative agents with static collab-

orative relationships. We also investigate how the ra-

tios between rational and reciprocal agents vary ac-

cording to the workload. We conclude in Section 6

with a brief summary and mention of future work.

2 RELATED WORK

Achieving allocations using a certain negotiation

method or protocol is a fundamental approach in the

MAS research. The conventional contract net protocol (CNP) (Smith, 1980) approach and its extensions have been studied by many researchers. For example, Sandholm (Sandholm and Lesser, 1995) extended

CNP by introducing levels of commitment to make a

commitment breakable with some penalty. One of the

key problems in negotiation protocols is that the num-

ber of messages exchanged for agreement increases

as the number of agents increases. Thus, several stud-

ies have attempted to reduce the number of messages

and thereby improve performance (Parunak, 1987;

Sandholm and Lesser, 1995). Although recent broad-

band networks have eased this problem at the link

level, agents (nodes) are now overloaded by exces-

sive messages, instead. Furthermore, it has been

pointed out that the eager-bidder problem, where a

number of tasks are announced concurrently, occurs

in large-scale MAS, in which case CNP with levels of commitment does not work well (Schillo et al.,

2002). Ishida (Gu and Ishida, 1996) also reported that

busy environments negatively affect the performance

of CNP. Thus, these methods cannot be used in large-

scale, busy environments.

Coalitional formation is a theoretical approach

based on an abstraction in which agents find the op-

timal coalitional structure that provides the maximal

utilities for a given set of tasks (Dunin-Keplicz and


Verbrugge, 2010; Sheholy and Kraus, 1998; Sims

et al., 2003; Sless et al., 2014). Although this tech-

nique has many applications, it assumes static and

relatively small environments because high compu-

tational costs to find (semi-)optimal solutions are re-

quired and the static characteristic function for pro-

viding utilities of agent groups is assumed to be

given. Market-based allocation is another theoreti-

cal approach based on game theory and auction pro-

tocol. In this approach, information concerning allo-

cations is gathered by auction-like bidding. Although

it can allocate tasks/resources optimally in the sense

of maximizing social welfare, it cannot be applied to

dynamic environments where optimal solutions fre-

quently vary. Team formation (or coalitional forma-

tion in a task-oriented model) is another approach in

which individual agents identify the most appropri-

ate member agent for each subtask on the basis of the

learning of functionality and the capabilities of other

agents (Coviello and Franceschetti, 2012; Hayano

et al., 2014; Genin and Aknine, 2010; Abdallah and

Lesser, 2004). However, this may cause conflicts in

large-scale and busy MAS, as mentioned in Section 1.

Our aim is to reduce such conflicts by building upon

our previous work (Hayano et al., 2014) and introducing agents that switch from rational behavior to reciprocal behavior (and vice versa) on the basis of results

of past collaboration.

Many studies in computational biology, sociology,

and economics have focused on the groups that have

been organized in human societies (Smith, 1976). For

example, many studies have tried to explain irrational

behaviors for collaboration in group work using reci-

procity. The simplified findings of these studies are

that people do not engage in selfish actions toward

others and do not betray those who are reciprocal and

cooperative, even if selfish/betraying actions could re-

sult in higher utilities (Gintis, 2000; Fehr et al., 2002;

Panchanathan and Boyd, 2004). For example, Pan-

chanathan and Boyd (Panchanathan and Boyd, 2004)

stated that cooperation could be established from in-

direct reciprocity (Fehr and Fischbacher, 2002), while

the authors of (Fehr et al., 2002; Gintis, 2000) insisted

that fairness in cooperation may produce irrational be-

havior because rational agents prefer a higher payoff

even though it may reduce the payoff to others. How-

ever, agents do not betray relevant reciprocal agents

because such a betrayal would be unfair. Fehr and

Fischbacher (Fehr and Fischbacher, 2002) demon-

strated how payoffs shared among collaborators af-

fected strategies and found that punishment towards

those who distribute unfair payoffs is frequently ob-

served, although administering the punishment can be

costly (Fehr and Fischbacher, 2004). In this paper,

we attempt to introduce the findings above into the

behaviors of computational agents.

3 MODEL

3.1 Agent and Tasks

We use a simpler model for representing tasks and the

associated utilities than that used in (Hayano et al.,

2014) because our focus is more on identifying which

learning parameters and mechanisms contribute to the

self-organization of groups for team formation.

Let $A = \{1, \dots, n\}$ be a set of agents. Agent $i \in A$ has its associated resources (corresponding to functions or capabilities) $H_i = (h_i^1, \dots, h_i^p)$, where $h_i^k$ is 1 or 0 and $p$ is the number of resource types. Parameter $h_i^k = 1$ means that $i$ has the capability for the $k$-th resource. Task $T$ consists of a number of subtasks $S_T = \{s_1, \dots, s_{l(T)}\}$, where $l(T)$ is a positive integer. If there is no confusion, we simply write $l = l(T)$. Subtask $s_j$ requires some amount of resources, which is denoted by $(r_{s_j}^1, \dots, r_{s_j}^p)$, where $r_{s_j}^k = 0$ or 1 and $r_{s_j}^k = 1$ means that the $k$-th resource is required to execute $s_j$. Agent $i$ can execute $s_j$ only when

$$h_i^k \ge r_{s_j}^k \quad \text{for } 1 \le \forall k \le p$$

is satisfied. We often identify subtask $s$ with its associated resources, $s = (r_s^1, \dots, r_s^p)$. We say that task $T$ is executed when all the associated subtasks are executed.
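The executability condition above can be sketched as a componentwise comparison of two 0/1 vectors. This is only an illustration; the function name `can_execute` and the tuple representation of $H_i$ and $s_j$ are our assumptions, not part of the original model description.

```python
from typing import Sequence


def can_execute(h: Sequence[int], r: Sequence[int]) -> bool:
    """Return True when h[k] >= r[k] for every resource type k.

    h is the capability vector H_i of an agent, r the requirement
    vector of a subtask; both are assumed to have length p.
    """
    if len(h) != len(r):
        raise ValueError("capability and requirement vectors must have the same length p")
    return all(hk >= rk for hk, rk in zip(h, r))
```

For instance, an agent with capabilities `(1, 0, 1)` can execute a subtask requiring `(0, 0, 1)` but not one requiring `(0, 1, 0)`.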

3.2 Execution by a Team

Task $T$ is executed by a set of agents by appropriately allocating each subtask to an agent. A team for executing task $T$ is defined as $(G, \sigma, T)$, where $G$ is the set of agents. Surjective function

$$\sigma : S_T \longrightarrow G$$

describes the assignment of $S_T$, where subtask $s \in S_T$ is allocated to $\sigma(s) \in G$. We assume that $\sigma$ is a one-to-one function for simplicity, but we can omit this assumption in the discussion below. The team for executing $T$ has been successfully formed when the conditions

$$h_{\sigma(s)}^k \ge r_s^k \qquad (1)$$

hold for $\forall s \in S_T$ and $1 \le \forall k \le p$.
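As a minimal sketch of condition (1), the check below takes an assignment $\sigma$ as a dictionary from subtask identifiers to agent identifiers. The dictionary-based data layout and the name `team_formed` are hypothetical; the one-to-one test reflects the simplifying assumption stated above.

```python
from typing import Dict, Hashable, Sequence


def team_formed(
    sigma: Dict[Hashable, Hashable],
    capabilities: Dict[Hashable, Sequence[int]],
    subtasks: Dict[Hashable, Sequence[int]],
) -> bool:
    """Check condition (1) for an assignment sigma: subtask -> agent."""
    # Every subtask of the task must be assigned to some agent.
    if set(sigma) != set(subtasks):
        return False
    # Simplifying assumption from the text: sigma is one-to-one.
    if len(set(sigma.values())) != len(sigma):
        return False
    # h^k_{sigma(s)} >= r^k_s for every subtask s and resource type k.
    return all(
        all(h >= r for h, r in zip(capabilities[agent], subtasks[s]))
        for s, agent in sigma.items()
    )
```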

After the success of team formation for task $T$, the team receives the associated utility $u_T \ge 0$. In general, the utility value may be correlated with, for example, the required resources and/or the priority.


However, here we focus on improving the success rate

of team formation by autonomously establishing re-

liable groups, so we simplify the utility calculation

and distributions; hence, all agents involved in forming the team receive $u_T = 1$ equally when they have succeeded but receive $u_T = 0$ otherwise. Note that

agents are confined to one team and cannot join an-

other team simultaneously. This assumption is rea-

sonable in some applications: for example, agents in a

team are often required to be synchronized with other

agents. Another example is in robotics applications

where the physical entities are not sharable due to

spatial restrictions (Zhang and Parker, 2013). Even

in a computer system that can schedule multiple sub-

tasks, selecting one team corresponds to the decision

on which subtasks should be done first.

3.3 Forming Teams

For a positive number $\lambda$, $\lambda$ tasks per tick are requested by the environment probabilistically and stored in the system's task queue $Q = \langle T_1, T_2, \dots \rangle$, where $Q$ is an ordered set and a tick is the unit of time used in our model. Parameter $\lambda$ is called the workload of the system. Agents in our model are in either an inactive or active state, where an agent in the active state is involved in forming a team and otherwise is inactive. Inactive agents first decide to play a role, leader or member; how they select the role is discussed later. Inactive agent $i$ playing a leader role picks up task $T$ from the head of $Q$ and becomes active. If $i$ cannot find any task, it stays inactive. Active agent $i$ then finds subtask $s \in S_T$ that $i$ can execute. Then, $i$ identifies $|S_T| - 1$ agents to allocate subtasks in $S_T \setminus \{s\}$. (If $i$ cannot find any executable subtask in $S_T$, it must identify $|S_T|$ agents. In our explanation below, we assume that $i$ can execute one of the subtasks, but we can omit this assumption if needed.) How these agents are identified will be discussed in Section 4. The set of $i$ and the identified agents is called the pre-team and is denoted by $G_T^p$. Agent $i$ sends the agents in $G_T^p$ messages soliciting them to join the team and then waits for the response. If the agents that accept the solicitations satisfy condition (1), the team $(G, \sigma, T)$ is successfully formed, where $G$ is the set of agents to which the subtasks in $S_T$ are allocated, and the assignment $\sigma$ is canonically defined on the basis of the acceptances. Then, $i$ notifies $G \setminus \{i\}$ of the successful team formation and all agents in $G$ continue to be active for $d_T$ ticks for task execution. After execution, $i$ (and the other agents in $G$) return to inactive. However, if an insufficient number of agents for $T$ accept the solicitation, the team formation by $i$ fails and $i$ discards $T$ and notifies the agents of the failure. The agents in $G$ then return to inactive.

When agent $i$ decides to play a member, it looks at the solicitation messages from leaders and selects a message whose allocated subtask is executable by $i$. The strategy for selecting the solicitation message is described in Section 4. Note that $i$ selects only one message, since $i$ can join only one team at a time. Agent $i$ enters the active state and sends an acceptance message to the leader $j$ of the selected solicitation and rejection messages to other leaders if they exist. Then, $i$ waits for the response to the acceptance. If it receives a failure message, it immediately returns to the inactive state. Otherwise, $i$ joins the team formed by $j$ and is confined for duration $d_T$ to its execution, after which it receives $u_T = 1$ and returns to inactive. If $i$ receives no solicitation messages, it continues in the inactive state.

Note that we set the time required for forming a team to $d_G$ ticks; thus, the total time for executing a task is $d_G + d_T$ ticks. We also note that leader agent $i$ can select pre-team members redundantly; for example, $i$ selects $R \ge 1$ agents for each subtask in $S_T$ (where $R$ is an integer). This can increase the success rate of team formation but may restrain other agents redundantly. We make our model simpler by setting $R = 2$, as our purpose is to improve efficiency by changing behavioral strategies.

4 PROPOSED METHOD

Our agents have three learning parameters. The first

is called the degree of expectation for cooperation

(DEC) and is used to decide which agents they should

work with again. The other two are called the degree

of success as a leader (DSL) and the degree of success

as a member (DSM) and are used to identify which

role is likely to be successful for forming teams. We

define these parameters and explain how agents learn

and use them in this section.

4.1 Learning for Cooperation

Agent $i$ has the DEC parameter $c_{ij}$ for $\forall j \in A \setminus \{i\}$ with which $i$ has worked in the same team in the past. The DEC parameters are used differently depending on roles. When $i$ plays a leader, $i$ selects pre-team members in accordance with the DEC values, i.e., agents with higher DEC values are likely to be selected. How pre-team members are selected is discussed in Section 4.3. Then, the value of $c_{ij}$ is updated by

$$c_{ij} = (1 - \alpha_c) \cdot c_{ij} + \alpha_c \cdot \delta_c, \qquad (2)$$

where $0 \le \alpha_c \le 1$ is the learning rate. When $j$ replies to $i$'s solicitation message with an acceptance, $c_{ij}$ is


updated with $\delta_c = 1$; otherwise, it is updated with $\delta_c = 0$. Therefore, $j$ with a high DEC value is expected to accept the solicitation by $i$.
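The update in Eq. (2) is an exponential moving average of the acceptance signal. The sketch below is illustrative only: the function names are ours, and the default values (learning rate 0.05, initial DEC 0.1, dependability threshold 0.5) are the ones reported in Table 1 of the experiments.

```python
def update_dec(c_ij: float, delta_c: float, alpha_c: float = 0.05) -> float:
    """One application of Eq. (2): move c_ij toward delta_c (0 or 1)."""
    return (1.0 - alpha_c) * c_ij + alpha_c * delta_c


def acceptances_to_reach(threshold: float, c0: float = 0.1, alpha_c: float = 0.05) -> int:
    """Count consecutive acceptances (delta_c = 1) needed until c_ij >= threshold.

    The per-tick decay of the DEC values is ignored here, which is a
    simplifying assumption of this sketch. Requires threshold < 1.
    """
    c, steps = c0, 0
    while c < threshold:
        c = update_dec(c, 1.0, alpha_c)
        steps += 1
    return steps
```

With these values, one acceptance raises the DEC from 0.1 to 0.145, and twelve consecutive acceptances are needed before the value first reaches 0.5 when decay is ignored.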

After $i$ agrees to join the team that is initiated by leader $j$, $i$ also updates $c_{ij}$ using Eq. (2), where $\delta_c$ is the associated utility $u_T$, i.e., $\delta_c = 1$ when the team is successfully formed and $\delta_c = 0$ otherwise. Agent $i$ also selects the solicitation messages according to the DEC values with the ε-greedy strategy.

After the value of $c_{ij}$ in $i$ has increased, $j$ may become uncooperative for some reason. To forget the outdated cooperative behavior, the DEC values are slightly decreased in every tick by

$$c_{ij} = \max(c_{ij} - \nu_F, 0), \qquad (3)$$

where $0 \le \nu_F \ll 1$.
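A minimal sketch of the forgetting step in Eq. (3) follows. We assume that the decrement value 0.00005 listed in Table 1 is the $\nu_F$ of Eq. (3); the function name and the dictionary holding the DEC values of one agent are hypothetical.

```python
from typing import Dict, Hashable


def decay_dec(c_i: Dict[Hashable, float], nu_f: float = 0.00005) -> Dict[Hashable, float]:
    """Apply Eq. (3) once (one tick) to all DEC values held by agent i."""
    # Each value is lowered by nu_f but never drops below zero.
    return {j: max(c - nu_f, 0.0) for j, c in c_i.items()}
```

Under this assumption, a DEC value that is never reinforced loses 0.05 over 1,000 ticks, so dependability fades slowly compared with the per-interaction update of Eq. (2).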

4.2 Role Selection and Learning

Agent $i$ learns the values of DSL and DSM to decide which role, leader or member, would result in a higher success rate of team formation. For this purpose, after the team formation trial for task $T$, parameters $e_i^{\mathrm{leader}}$ and $e_i^{\mathrm{member}}$ are updated by

$$e_i^{\mathrm{leader}} = (1 - \alpha_r) \cdot e_i^{\mathrm{leader}} + \alpha_r \cdot u_T$$

and

$$e_i^{\mathrm{member}} = (1 - \alpha_r) \cdot e_i^{\mathrm{member}} + \alpha_r \cdot u_T,$$

where $u_T$ is the received utility value that is 0 or 1 and $0 < \alpha_r < 1$ is the learning rate for the DSL and DSM.

When $i$ is inactive, it compares the values of DSL and DSM: specifically, if $e_i^{\mathrm{leader}} > e_i^{\mathrm{member}}$, $i$ decides to play a leader, and if $e_i^{\mathrm{leader}} < e_i^{\mathrm{member}}$, $i$ plays a member. If $e_i^{\mathrm{leader}} = e_i^{\mathrm{member}}$, its role is randomly selected. Note that when $i$ selects the leader as the role but can find no task in $Q$, $i$ does nothing and will select its role again in the next tick.
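The role-learning rule can be sketched as follows. The function names are ours, and we assume (the text does not state it explicitly) that after a trial only the parameter of the role just played is updated.

```python
import random


def update_success(e: float, u_t: int, alpha_r: float = 0.05) -> float:
    """Update a DSL or DSM value with the received utility u_T (0 or 1)."""
    return (1.0 - alpha_r) * e + alpha_r * u_t


def select_role(e_leader: float, e_member: float, rng=random) -> str:
    """Pick the role with the higher learned success; break ties randomly."""
    if e_leader > e_member:
        return "leader"
    if e_leader < e_member:
        return "member"
    return rng.choice(["leader", "member"])
```

Because both values start at 0.5 in the experiments, the first role of every agent is chosen at random, and early successes or failures then bias the agent toward one role.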

4.3 Agent Switching Behavioral

Strategies

Our main objective in this work is to propose a new

type of agent that switches its behavioral strategy, ra-

tional or reciprocal, depending on its internal state. In

this section, we first discuss how worthy-to-cooperate

agents (called dependable agents) are identified and

then go over the behaviors of rational and reciprocal

agents. Finally, we explain how agents select their

behavioral strategies.

4.3.1 Dependable Agents

Agent $i$ has the set of dependable agents $D_i \subset A \setminus \{i\}$ with the constraint $|D_i| \le X_F$, where $X_F$ is a positive integer and is the upper limit on the number of dependable agents. The elements of $D_i$ are decided as follows. For the given threshold value $T_D > 0$, after $c_{ij}$ is updated, if $c_{ij} \ge T_D$ and $|D_i| < X_F$ are satisfied, $i$ identifies $j$ as dependable by setting $D_i = D_i \cup \{j\}$. Conversely, if $\exists k \in D_i$ s.t. $c_{ik} < T_D$, $k$ is removed from $D_i$.
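The maintenance of $D_i$ can be sketched as below. The function name and data layout are hypothetical, and the order of the two steps (prune first, then add) is our assumption, since the text does not fix it. Default values follow Table 1.

```python
from typing import Dict, Hashable, Set


def update_dependable(
    d_i: Set[Hashable],
    c_i: Dict[Hashable, float],
    j: Hashable,
    t_d: float = 0.5,
    x_f: int = 5,
) -> Set[Hashable]:
    """Return the new dependable set of agent i after c_i[j] was updated."""
    # Drop every agent whose DEC value has fallen below the threshold T_D.
    d = {k for k in d_i if c_i.get(k, 0.0) >= t_d}
    # Add j if it is dependable enough and the upper limit X_F is not reached.
    if c_i.get(j, 0.0) >= t_d and len(d) < x_f:
        d.add(j)
    return d
```

Pruning first frees a slot for $j$ in the same step; with the opposite order $j$ would have to wait for the next update of $c_{ij}$.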

4.3.2 Behaviors of Agents with Rational and

Reciprocal Strategies

Behavioral strategies mainly affect the choice of collaborators. Leader agents with either the rational or the reciprocal behavioral strategy select the members of the pre-team based on the DEC values with ε-greedy selection. Initially, agent $i$ sets $G_T^p = \{i\}$ and allocates itself to the subtask $s_0$ ($\in S_T$) executable in $i$.¹ Then, $\tilde{S}_T = S_T \setminus \{s_0\}$ and $i$ sorts the elements of $A$ in descending order of the DEC values. For each subtask $s_k \in \tilde{S}_T$, $i$ seeks from the top of $A$ an agent that can execute $s_k$ and is not in $G_T^p$ and then adds it to $G_T^p$ with probability $1 - \varepsilon$. However, with probability $\varepsilon$, the agent for $s_k$ is selected randomly. If $R = 1$, the current $G_T^p$ is the pre-team for $T$. If $R > 1$, $i$ repeats the seek-and-add process for subtasks in $\tilde{S}_T$ $R - 1$ times.
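The seek-and-add process of a leader can be sketched as follows. All names are hypothetical. We additionally assume that the random pick made with probability $\varepsilon$ is restricted to capable agents that are not yet in the pre-team, which the text leaves open.

```python
import random
from typing import Dict, Hashable, List, Sequence, Tuple


def select_pre_team(
    leader: Hashable,
    remaining_subtasks: List[Sequence[int]],
    capabilities: Dict[Hashable, Sequence[int]],
    dec: Dict[Hashable, float],
    R: int = 2,
    eps: float = 0.01,
    rng=random,
) -> List[Tuple[int, Hashable]]:
    """Return (subtask index, agent) pairs solicited by the leader.

    remaining_subtasks is the set S~_T, i.e. the task without the
    subtask s_0 that the leader executes itself.
    """
    def able(agent: Hashable, req: Sequence[int]) -> bool:
        return all(h >= r for h, r in zip(capabilities[agent], req))

    # Sort the other agents by descending DEC value as seen by the leader.
    ranked = sorted(
        (a for a in capabilities if a != leader),
        key=lambda a: dec.get(a, 0.0),
        reverse=True,
    )
    chosen = {leader}
    pre_team: List[Tuple[int, Hashable]] = []
    for _ in range(R):  # one pass per redundancy level
        for idx, req in enumerate(remaining_subtasks):
            candidates = [a for a in ranked if a not in chosen and able(a, req)]
            if not candidates:
                continue  # nobody left who can execute this subtask
            # epsilon-greedy: usually the top-ranked candidate, sometimes a random one.
            pick = rng.choice(candidates) if rng.random() < eps else candidates[0]
            chosen.add(pick)
            pre_team.append((idx, pick))
    return pre_team
```

With `R = 2`, each remaining subtask is offered to up to two distinct agents, which raises the chance that at least one accepts at the cost of tying up more agents.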

Behavioral differences appear when agents play members. An agent with the rational behavioral strategy selects the solicitation message sent by the leader whose DEC value is the highest among the received ones. An agent with the reciprocal behavioral strategy selects the solicitation message in the same way but ignores any solicitation messages sent by leaders not in $D_i$. Note that after ignoring non-dependable agents, no solicitation messages may remain for $i$ (i.e., all solicitations will be declined). We interpret this situation as $i$ declining the messages for the sake of possible future proposals from dependable agents, and thus, we can say that ignoring them may be irrational. All agents also adopt the ε-greedy selection of solicitation messages, whereby the selected solicitation message is replaced with another message randomly selected from the received messages with probability ε.
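The difference between the two member strategies can be sketched as a filter applied before the usual DEC-based choice. The names below are hypothetical, and drawing the random replacement from the filtered list (rather than from all received messages) for reciprocal agents is our assumption.

```python
import random
from typing import Dict, Hashable, List, Optional, Set


def choose_solicitation(
    leaders: List[Hashable],
    dec: Dict[Hashable, float],
    dependable: Set[Hashable],
    strategy: str,
    eps: float = 0.01,
    rng=random,
) -> Optional[Hashable]:
    """Return the leader whose solicitation is accepted, or None.

    leaders lists the senders of solicitations whose subtask this
    agent can execute; strategy is "rational" or "reciprocal".
    """
    if strategy == "reciprocal":
        # Reciprocal agents ignore leaders that are not dependable.
        leaders = [j for j in leaders if j in dependable]
    if not leaders:
        return None  # every solicitation is declined
    if rng.random() < eps:
        return rng.choice(leaders)  # exploratory choice
    return max(leaders, key=lambda j: dec.get(j, 0.0))
```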

4.4 Selection of Behavioral Strategies

When agent $i$ decides to play a member, it also decides its behavioral strategy on the basis of the DSM value $e_i^{\mathrm{member}}$ and $D_i$. If the DSM $e_i^{\mathrm{member}}$ is larger than the parameter $T_m$ ($> 0$), and if $D_i \ne \emptyset$, $i$ adopts the reciprocal strategy; otherwise, it adopts rationality. The parameter $T_m$ is a positive number and is used as the threshold for the criterion of whether or not $i$

¹ $s_0$ may be null, as mentioned before.


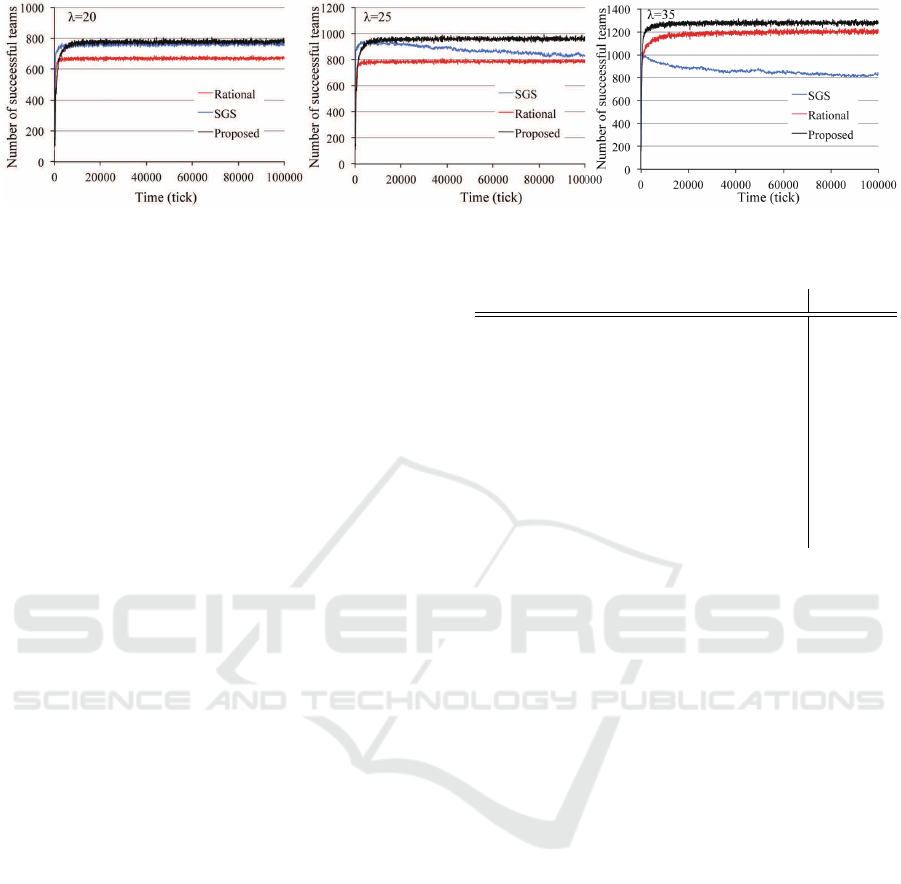

Figure 1: Team formation performance.

has had a sufficient degree of success working as a member. Thus, $T_m$ is called the member role threshold for reciprocity. When $i$ plays a leader, its strategy does not affect how members are selected.
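The switching rule itself is a two-part test, sketched here with a hypothetical function name and the threshold value 0.5 from Table 1.

```python
from typing import Hashable, Set


def select_strategy(e_member: float, dependable: Set[Hashable], t_m: float = 0.5) -> str:
    """Reciprocal only if DSM exceeds T_m and D_i is non-empty; else rational."""
    if e_member > t_m and len(dependable) > 0:
        return "reciprocal"
    return "rational"
```

Since the comparison is strict and the DSM starts at exactly 0.5 in the experiments, an agent must both succeed as a member and identify at least one dependable leader before it becomes reciprocal.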

We have to note that i memorizes dependable

agents on the basis of DEC values that reflect the suc-

cess rates of team formation so that it can expect the

utility after that. In this sense, the DEC values are

involved in rational selections, and therefore depend-

able agents are identified on the basis of rational deci-

sion. In our framework, after a number of dependable

agents are identified, i changes its behavior. There-

fore, we can say that at first, i pursues only the utili-

ties, but when it has identified a number of dependable

agents that may bring utilities, i tries not only to work

with them preferentially but also to reduce chances of

unexpected uncooperative behaviors.

5 EXPERIMENTS

5.1 Experimental Setting

We investigated the performance (the success rates) of

team formation in the society of the proposed agents,

the structure of behavioral strategies, and the net-

works of dependability and team formation achieve-

ment. We also experimentally compared it with the

performance of those in the society of rational agents

and that of the proposed agents of the static group

regime whose structures are initially given and fixed.

A rational agent always behaves on the basis of rationality, thus corresponding to the case where $X_F = 0$. The agents with the static group regime are initially grouped into teams of six random agents, and any agent that initiates a task always allocates the associated subtasks to other agents in the same team. Thus, this type of agent corresponds to the case where $D_i$ is fixed to the members of the same group and $R = 1$. We call this type of agent the static group-structured agents or the SGS agents.

Let the number of agents $|A|$ be 500 and the number of resource types $p$ be six. The amount of the $k$-th resource of agent $i$, $h_i^k$, and the amount of the $k$-th resource required for subtask $s$, $r_s^k$, are 0 or 1. We assume that at least one resource in $H_i$ is set to 1 to avoid null-capability agents. On the other hand, only one resource is required in $s$, so $\exists k$, $r_s^k = 1$, and $r_s^{k'} = 0$ if $k' \ne k$. A task consists of three to six subtasks, so $l(T)$ ($= |S_T|$) is an integer between three and six. The duration for forming a team, $d_G$, is set to two and the duration for executing a task, $d_T$, is one. Other parameters used in Q-learning for agent behaviors are listed in Table 1. Note that although ε-greedy selection and Q-learning are used for learning several parameters, we use the shared learning rate $\alpha$ and random selection rate $\varepsilon$. The experimental data shown below are the mean values of ten independent trials.

Table 1: Parameter values in experiments.

| Parameter | Value |
|---|---|
| Initial value of DEC $c_{ij}$ | 0.1 |
| Initial value of DSL $e_i^{\mathrm{leader}}$ | 0.5 |
| Initial value of DSM $e_i^{\mathrm{member}}$ | 0.5 |
| Learning rate $\alpha$ ($= \alpha_c, \alpha_r$) | 0.05 |
| Epsilon in ε-greedy selection $\varepsilon$ | 0.01 |
| Decrement number $\gamma_F$ | 0.00005 |
| Threshold for dependability $T_D$ | 0.5 |
| Max. number of dependable agents $X_F$ | 5 |
| Member role threshold for reciprocity $T_m$ | 0.5 |
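To make the setting concrete, the following sketch generates agents and tasks that satisfy the constraints stated above. The sampling distributions are our assumptions: capability bits are drawn uniformly (the text does not specify how $H_i$ is generated), and the required resource of each subtask and the number of subtasks are drawn uniformly.

```python
import random
from typing import List, Tuple


def random_capabilities(p: int = 6, rng=random) -> Tuple[int, ...]:
    """Draw a 0/1 capability vector H_i with at least one entry set to 1."""
    while True:
        h = tuple(rng.randint(0, 1) for _ in range(p))
        if any(h):  # reject the null-capability vector
            return h


def random_task(p: int = 6, rng=random) -> List[Tuple[int, ...]]:
    """Draw a task of three to six subtasks, each requiring exactly one resource."""
    n_subtasks = rng.randint(3, 6)
    task = []
    for _ in range(n_subtasks):
        k = rng.randrange(p)  # index of the single required resource
        task.append(tuple(1 if idx == k else 0 for idx in range(p)))
    return task
```

A population for one trial could then be built as `[random_capabilities() for _ in range(500)]`.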

5.2 Performance Results

Figure 1 plots the number of successful teams ev-

ery 50 ticks in societies consisting of the SGS, ra-

tional, and proposed agents when workload λ is 20,

25, and 35. Note that since all agents individually

adopted ε-greedy selection with ε = 0.01 when se-

lecting member roles and solicitation messages, ap-

proximately four to five tasks per tick according to

the value of workload λ (so, 200 to 250 tasks every

50 ticks) were used for challenges to find new so-

lutions, but in these situations, forming teams was

likely to fail. We also note that λ = 20, 25, and 35


-10

0

10

20

30

40

50

60

70

10 15 20 25 30 35 40

SGS

Rational

Workload λ

Improvement ratio I(str)

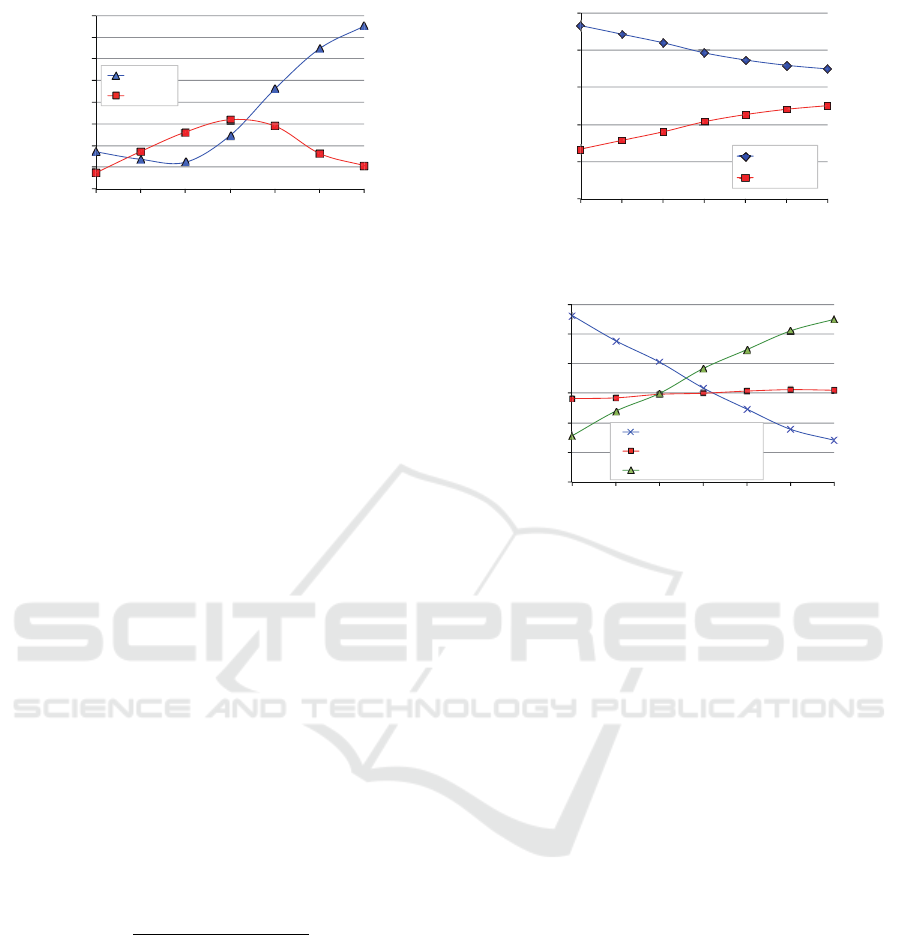

Figure 2: Performance improvement ratios.

correspond to environments that are lightly loaded, balanced (slightly lower than the system's limit of performance when λ is around 25 and 30), and overloaded, respectively. Figure 1 shows that the

performance with the proposed agents always outper-

formed those with other strategies. When the system

load was low, the performance with the SGS agents

was (slightly lower but) almost identical to that with

the proposed agents. However, when λ = 25 and 35,

their performance gradually decreased. In the busy

environment, an agent that learned to play a leader

in a group encountered many team formation failures,

thereby starting to learn that it was ineffective as a

leader. In such cases, other agents started to play

the leader roles instead, but among the SGS agents,

groups are static and no leaders existed in a number

of groups. Thus, the number of team formation fail-

ures increased. Because many conflicts occurred in

the society of only the rational agents, their perfor-

mance was always lower than that with the proposed

agents. However, in a busy environment (λ = 35), the

performance by the proposed agents also reached a

ceiling and their difference became smaller.

As Fig. 1 suggests that the improvement ratios might vary depending on the workload, we plotted the ratios in Fig. 2, where the improvement ratio $I(\mathit{str})$ was calculated as

$$I(\mathit{str}) = \frac{N(\mathrm{proposed}) - N(\mathit{str})}{N(\mathrm{proposed})} \times 100,$$

where $N(\mathit{str})$ is the number of successful teams per 50 ticks with agents whose behavioral strategy is $\mathit{str}$ (“proposed,” “SGS,” or “rational”).
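The improvement ratio is a relative difference expressed in percent. The sketch below uses a hypothetical function name, and the numbers in the usage note are invented purely for illustration; they are not experimental results.

```python
def improvement_ratio(n_proposed: float, n_str: float) -> float:
    """I(str) = (N(proposed) - N(str)) / N(proposed) * 100."""
    if n_proposed == 0:
        raise ValueError("N(proposed) must be non-zero")
    return (n_proposed - n_str) / n_proposed * 100.0
```

For example, if the proposed agents formed 1,000 teams per 50 ticks and a baseline formed 800 (hypothetical values), the ratio would be 20, and a negative ratio would mean that the baseline outperformed the proposed agents.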

Figure 2 indicates that the performance improve-

ment ratio of the society of the SGS agents, I(SGS),

was small when the workload was low (λ ≤ 20) but

that it monotonically increased in accordance with the

system’s workload when λ > 20. The improvement

ratios to the rational agents I(rational) depict a char-

acteristic curve, becoming maximal around λ = 25

and 30, which is near but below the system’s limit,

as mentioned above. We think this is the effect of au-

tonomous organization in the society of the proposed

Figure 3: Selected Behavioral Strategies at 100000 ticks. [Plot: number of agents versus workload λ (10 to 40); series: Reciprocal, Rational.]

Figure 4: Stability of Behavioral Strategies. [Plot: number of agents versus workload λ (10 to 40); series: Reciprocity (stable), Rationality (stable), Fractional.]

method, as reported in (Corkill et al., 2015); we will

discuss this topic later. Finally, when the workload

was low (λ less than ten), we could not observe any

clear difference in their performances since conflict in

team formation rarely occurred.

5.3 Behavioral Analysis

To understand why teams were effectively formed

in the society of the proposed agents, we first ana-

lyzed the characteristics of the behavioral strategy and

role selections. Table 2 lists the number of leader agents, that is, agents for which $e_i^{leader} > e_i^{member}$ was satisfied at 100,000 ticks (so they played leaders), as λ was varied. As shown, the number of leader agents slightly decreased as the workload increased but remained almost invariant at around 100; thus, about 400 agents were likely to play member roles. The number of subtasks required to complete a single task was fixed to be between three and six with uniform probability, and the structure of the task distribution did not change in our experiments. Hence, the number of leaders that initiate team formation also seems to be unchanged.

On the other hand, behavioral strategies were se-

lected differently depending on the workload. The re-

lationships between the workload and the structures

of behavioral strategies at the end of the experiment

are plotted in Fig. 3. It indicates that reciprocity was

selected by over half of the agents, but this number

ICAART 2016 - 8th International Conference on Agents and Artificial Intelligence


Table 2: Number of leaders.

| Workload (λ) | 10 | 15 | 20 | 25 | 30 | 35 | 40 |
|---|---|---|---|---|---|---|---|
| Number of leaders | 107.8 | 104.7 | 102.4 | 99.5 | 97.9 | 97.3 | 97.6 |

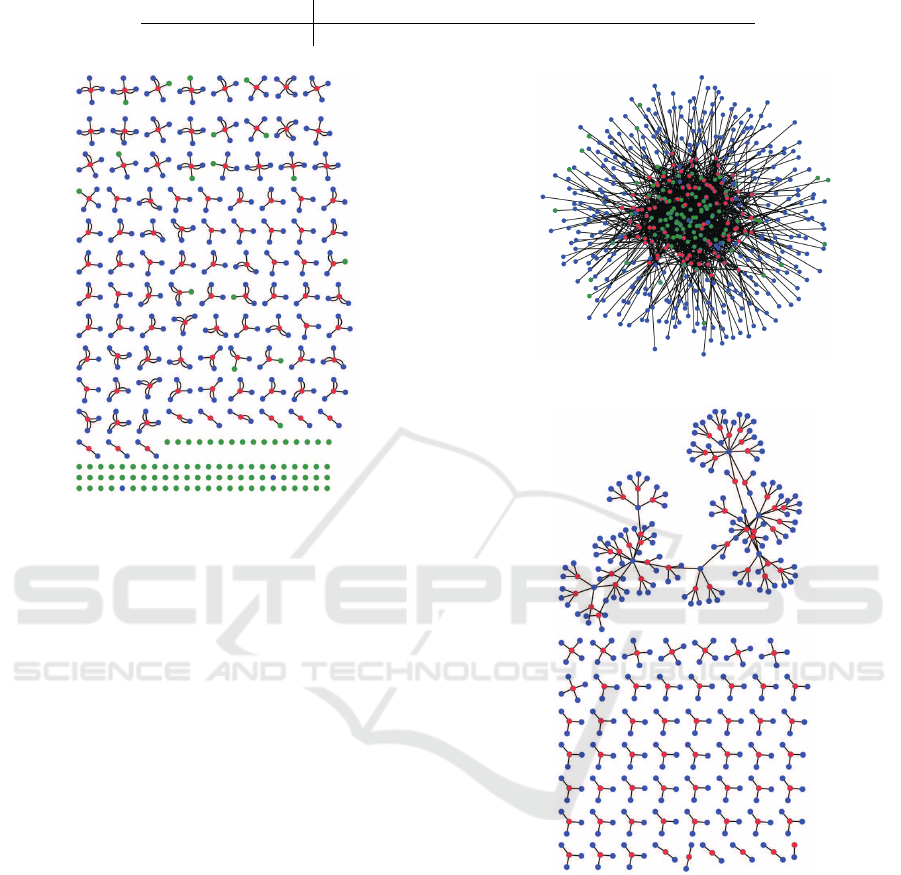

Figure 5: Structure of dependability.

gradually decreased as the workload increased. Furthermore, Fig. 4 plots the number of agents by the behavioral strategy they selected from 95,000 to 100,000 ticks. Here, agents that “stably selected” a strategy, for example reciprocity, are those that always selected it from 95,000 to 100,000 ticks, and “fractional strategy” refers to agents that changed their strategy at least once during this period.
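The three categories plotted in Fig. 4 can be illustrated with a short Python sketch. It assumes that each agent's strategy choices inside the observation window are available as a list of labels; the names `classify_stability`, `count_stability`, and the sample `histories` are hypothetical and are not part of our simulator.

```python
from collections import Counter
from typing import Dict, List

VALID = {"reciprocity", "rationality"}


def classify_stability(history: List[str]) -> str:
    """Classify one agent from its strategy choices inside the window."""
    if not history:
        raise ValueError("history must not be empty")
    chosen = set(history)
    if not chosen <= VALID:
        raise ValueError(f"unknown strategy label(s): {chosen - VALID}")
    if chosen == {"reciprocity"}:
        return "reciprocity (stable)"
    if chosen == {"rationality"}:
        return "rationality (stable)"
    # Both strategies appear, so the agent switched at least once.
    return "fractional"


def count_stability(histories: Dict[int, List[str]]) -> Counter:
    """Count agents per category, as in the series of Fig. 4."""
    return Counter(classify_stability(h) for h in histories.values())


# Hypothetical window data for three agents (agent id -> choices).
histories = {
    0: ["reciprocity", "reciprocity", "reciprocity"],
    1: ["rationality", "rationality", "rationality"],
    2: ["reciprocity", "rationality", "reciprocity"],
}
counts = count_stability(histories)
print(dict(counts))
```

Because only two strategies exist, an agent that is not stable in either one must have changed its strategy at least once during the window, which is why the last branch needs no explicit comparison of consecutive choices.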

First, we can observe that the number of agents

stably selecting reciprocity as their behavioral strat-

egy decreased. This is expected because they have

a greater chance of forming teams as the workload

increases, and thus they may have more chances to

change their strategies. Nevertheless, the number of agents stably selecting rationality barely changed, staying at around 30% of the agent population and, if anything, slightly increasing with the workload. Hence, we can say that a number of the reciprocal agents occasionally became rational agents and worked like freelancers. We discuss this further in Section 5.5.

5.4 Structural Analysis

We investigated the structure of dependability based on the elements of $D_i$ for all $i \in A$. The structure of the proposed agents in a certain trial at 100,000 ticks is shown in Fig. 5, where λ = 25 and the green nodes are agents with rationality that were isolated. Blue and red nodes correspond to agents with reciprocity that played members (blue) and leaders (red). We can see that, although the upper limit on the number of dependable agents, $X_F$, is set to five, all member agents with reciprocity have only one dependable agent. This is because agents can belong to only one team at a time.

Figure 6: Structure based on formed teams (all agents).

Figure 7: Structure based on formed teams (reciprocal).

We believe that all agents formed their team on the

basis of the network of dependability. Figure 6 shows

the network based on teams actually formed during

the period from 95,000 to 100,000 ticks. A link between agents is generated only when a leader-member relationship was established at least


ten times. Figure 6 appears dense and complicated because agents with rationality (green nodes) pursued their own utilities and formed teams with any agents. Thus, in Fig. 7 we omit the green nodes from Fig. 6. Compared with Fig. 5, this figure shows that almost all reciprocal agents formed teams only with dependable agents, which had higher priority. However, they were sometimes required to form larger teams, and therefore solicited non-dependable agents that were not in $D_i$. The agents selected in this situation behaved rationally. Note that there is a single but relatively large connected component in the upper part of Fig. 7. A number of the reciprocal agents in this connected component changed their behavioral strategies during the observation period. However, the leaders they selected were limited, so the component was neither dense nor complicated like the network in Fig. 6, where a majority of rational agents were connected with more than ten leader agents during the period.
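The construction of the networks in Figs. 6 and 7 can be sketched as follows. The sketch assumes that the teams formed during the observation window are logged as (leader, members) pairs and treats links as directed from leader to member; the names `build_links`, `drop_agents`, and the sample log are hypothetical and serve only to illustrate the threshold of ten formations and the removal of rational agents.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

Team = Tuple[int, List[int]]  # (leader id, member ids); assumed log format
Link = Tuple[int, int]        # (leader id, member id)


def build_links(teams: Iterable[Team], min_count: int = 10) -> Set[Link]:
    """Keep leader-member pairs that teamed up at least min_count times."""
    counts: Counter = Counter()
    for leader, members in teams:
        for member in members:
            if member != leader:
                counts[(leader, member)] += 1
    return {pair for pair, n in counts.items() if n >= min_count}


def drop_agents(links: Set[Link], excluded: Set[int]) -> Set[Link]:
    """Remove every link that touches an excluded (e.g., rational) agent."""
    return {
        (leader, member)
        for leader, member in links
        if leader not in excluded and member not in excluded
    }


# Hypothetical log: leader 0 teams with 1 and 2 ten times, with 3 only
# nine times, and leader 4 teams with agent 1 twelve times.
log: List[Team] = [(0, [1, 2])] * 10 + [(0, [3])] * 9 + [(4, [1])] * 12

all_links = build_links(log)                   # analogue of Fig. 6
reciprocal_links = drop_agents(all_links, {4})  # analogue of Fig. 7
print(sorted(all_links), sorted(reciprocal_links))
```

In this toy log, the pair (0, 3) is dropped because it falls one formation short of the threshold, and excluding agent 4 removes its link in the same way that the green nodes are omitted in Fig. 7.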

5.5 Discussion

We believe that the mixture of reciprocity and ratio-

nality produces an efficient and effective society. The

appropriate ratio between these behavioral strategies

is still unknown and probably depends on a variety of

factors such as task structure, workload, and topology

of the agent network. Our study is the first attempt to

pursue this ratio by introducing autonomous strategy

decision making through social and local efficiency.

We also believe that bottom-up construction of or-

ganization, such as the group/association structures

based on dependability discussed in this paper, is an-

other important issue to achieve a truly efficient soci-

ety of computer agents like a human society. Thus,

another aim of this study is to clarify the mechanism

to establish such an organization in a bottom-up man-

ner. Our experimental results suggest that reciprocity

is probably what generates the organization, but fur-

ther experimentation is required to clarify this.

As shown in Fig. 2, the curve of I(rational) for the society of the proposed agents peaked around λ = 25 to 30, which is near but below the system’s limit of task execution. This peak, called the sweet spot in (Corkill et al., 2015), is caused by the appropriate organizational structure of the agent society. In our case, the proposed agents established their groups on the basis of dependability through their experience of cooperation. We want to emphasize that this curve indicates an important feature of the organization: namely, that its benefit comes to the surface when efficiency is really required. When the system is not busy, any simple method works well, and when the system is beyond the limit of the theoretical performance, no method can help the situation. When the workload is near the system’s limit, the potential capabilities of agents must be maximally elicited. The experimental results suggest that the organization generated by the proposed agents partly elicited their capabilities in situations where this was really required.

Figures 5 and 7 indicate that agents generate

groups of mostly four or five members on the basis

of their dependability, and actually they form teams

from only within their groups if the number of sub-

tasks is less than or equal to four or five. Even if

they generated larger groups for larger tasks, only

one or two agents were solicited from outside of the

groups. Because these agents were not beneficial

enough for them to stay in the groups of dependabil-

ity, they dropped out of the groups and behaved ra-

tionally. If the solicited agents behaved reciprocally,

the solicitation messages might be ignored, and ratio-

nal agents are thus likely to be solicited. Therefore,

rational agents work like freelancers, compensating

for the lack of member agents in larger tasks. The

role of rational agents from this viewpoint is essen-

tial, especially in busy environments: when the work-

load is high, the rational agents can earn more utili-

ties, thereby increasing the ratio of rational agents as

shown in Fig. 3.

6 CONCLUSION

We proposed agents that switch their behavioral strat-

egy between rationality and reciprocity in accordance

with internal states on the basis of past cooperative

activities and success rates of task executions in or-

der to achieve efficient team formation. Through their

cooperative activities, agents with reciprocal behav-

ior established groups of dependable agents, thus im-

proving the efficiency of team formation by avoid-

ing conflicts, especially in large and busy environ-

ments. We experimentally investigated the perfor-

mance of the society of the proposed agents, the struc-

tures of selected roles and behavioral strategies, and

the agent network through actual cooperation in the

same teams.

Our future work is to more deeply investigate the

bottom-up organization by the proposed agents. For

example, we have to examine how the entire perfor-

mance is affected by the ratios of reciprocity to ratio-

nality. We also need to clarify the protocols to explic-

itly form an association based on mutual dependabil-

ity.


REFERENCES

Abdallah, S. and Lesser, V. R. (2004). Organization-

based cooperative coalition formation. In 2004

IEEE/WIC/ACM Int. Conf. on Intelligent Agent Tech-

nology (IAT 2004), pages 162–168. IEEE Computer

Society.

Corkill, D., Garant, D., and Lesser, V. (2015). Exploring

the Effectiveness of Agent Organizations. In Proc.

of the 19th Int. Workshop on Coordination, Organiza-

tions, Institutions, and Norms in Multiagent Systems

(COIN@AAMAS 2015), pages 33–48.

Coviello, L. and Franceschetti, M. (2012). Distributed team

formation in multi-agent systems: Stability and ap-

proximation. In Proc. on 2012 IEEE 51st Annual

Conf. on Decision and Control (CDC), pages 2755–

2760.

Dunin-Keplicz, B. M. and Verbrugge, R. (2010). Teamwork

in Multi-Agent Systems: A Formal Approach. Wiley

Publishing.

Fehr, E. and Fischbacher, U. (2002). Why Social Prefer-

ences Matter - The Impact of Non-Selfish Motives on

Competition. The Economic Journal, 112(478):C1–

C33.

Fehr, E. and Fischbacher, U. (2004). Third-party punish-

ment and social norms. Evolution and Human Behav-

ior, 25(2):63–87.

Fehr, E., Fischbacher, U., and Gächter, S. (2002). Strong

reciprocity, human cooperation, and the enforcement

of social norms. Human Nature, 13(1):1–25.

Foster, I. (2002). What is the grid? a three point checklist.

Grid Today, 1(6).

Genin, T. and Aknine, S. (2010). Coalition formation

strategies for self-interested agents in task oriented do-

mains. 2010 IEEE/WIC/ACM Int. Conf. on Web Intel-

ligence and Intelligent Agent Technology, 2:205–212.

Gintis, H. (2000). Strong reciprocity and human sociality.

Journal of Theoretical Biology, 206(2):169–179.

Glinton, R., Scerri, P., and Sycara, K. (2008). Agent-based

sensor coalition formation. In 2008 11th Int. Conf. on

Information Fusion, pages 1–7.

Gu, C. and Ishida, T. (1996). Analyzing the Social Behavior of Contract Net Protocol. In de Velde, W. V. and Perram, J. W., editors, Proc. of 7th European Workshop on Modelling Autonomous Agents in a Multi-Agent World (MAAMAW 96), LNAI 1038, pages 116–127. Springer-Verlag.

Hayano, M., Hamada, D., and Sugawara, T. (2014). Role

and member selection in team formation using re-

source estimation for large-scale multi-agent systems.

Neurocomputing, 146(0):164–172.

Nain, G., Fouquet, F., Morin, B., Barais, O., and Jezequel,

J. (2010). Integrating IoT and IoS with a Component-

Based Approach. In Proc. of the 36th EUROMICRO

Conf. on Software Engineering and Advanced Appli-

cations (SEAA 10), pages 191–198.

Panchanathan, K. and Boyd, R. (2004). Indirect reci-

procity can stabilize cooperation without the second-

order free rider problem. Nature, 432(7016):499–502.

Parunak, H. V. D. (1987). Manufacturing experience with

the contract net. In Huhns, M., editor, Distributed Ar-

tificial Intelligence, pages 285–310.

Sandholm, T. and Lesser, V. (1995). Issues in automated

negotiation and electronic commerce: Extending the

contract net framework. In Lesser, V., editor, Proc.

of the First Int. Conf. on Multi-Agent Systems (IC-

MAS’95), pages 328–335.

Schillo, M., Kray, C., and Fischer, K. (2002). The Eager

Bidder Problem: A Fundamental Problem of DAI and

Selected Solutions. In Proc. of First Int. Joint Conf.

on Autonomous Agents and Multiagent Systems (AAMAS2002), pages 599–606.

Shehory, O. and Kraus, S. (1998). Methods for task allocation via agent coalition formation. Artificial Intelligence, 101:165–200.

Sims, M., Goldman, C. V., and Lesser, V. (2003). Self-

organization through bottom-up coalition formation.

In Proc. of the Second Int. Joint Conf. on Autonomous

Agents and Multiagent Systems, AAMAS ’03, pages

867–874, New York, NY, USA. ACM.

Sless, L., Hazon, N., Kraus, S., and Wooldridge, M. (2014).

Forming coalitions and facilitating relationships for

completing tasks in social networks. In Proc. of the

2014 Int. Conf. on Autonomous Agents and Multi-

agent Systems, AAMAS ’14, pages 261–268.

Smith, J. M. (1976). Group Selection. Quarterly Review of

Biology, 51(2):277–283.

Smith, R. G. (1980). The Contract Net Protocol: High-

Level Communication and Control in a Distributed

Problem Solver. IEEE Transactions on Computers,

C-29(12):1104–1113.

Stankovic, J. (2014). Research Directions for the Internet

of Things. IEEE Internet of Things Journal, 1(1):3–9.

Zhang, Y. and Parker, L. (2013). Considering inter-task

resource constraints in task allocation. Autonomous

Agents and Multi-Agent Systems, 26(3):389–419.
