RGB-D and Thermal Sensor Fusion

Application in Person Tracking

Ignacio Rocco Spremolla¹,²∗, Michel Antunes¹, Djamila Aouada¹ and Björn Ottersten¹

¹ Interdisciplinary Centre for Security, Reliability and Trust, University of Luxembourg, Luxembourg City, Luxembourg

² ENS Cachan, Université Paris-Saclay, Paris, France

Keywords:

Sensor Fusion, RGB-D, Thermal Sensing, Person Tracking.

Abstract:

Many systems combine RGB cameras with other sensor modalities, fusing visual data with complementary environmental information to achieve improved sensing capabilities. This article explores the possibility of fusing a commodity RGB-D camera and a thermal sensor. We show that, using traditional methods, it is possible to accurately calibrate the complete system and register the three RGB-D-T data sources. We propose a simple person tracking algorithm based on particle filters, and show how to combine the mapped pixel information from the RGB-D-T data. Furthermore, we use depth information to adaptively scale the tracked target area when radial displacements from the camera occur. Experimental results provide evidence that this allows for a significant tracking performance improvement in situations with large radial displacements, compared to a tracker based only on RGB or RGB-T data.

1 INTRODUCTION

There are many applications that make simultaneous use of visual data and other sensing modalities. In the past few years, extensive research has been carried out on fusing RGB and depth sensors (RGB-D). A non-exhaustive list of applications where this type of multi-modal system is employed includes human pose estimation (Shotton et al., 2011), action recognition (Vemulapalli et al., 2014), simultaneous localization and mapping (Endres et al., 2012), and people tracking (Luber et al., 2011). Combining RGB and thermal sensors (RGB-T) has been investigated to a lesser extent, mainly for robust person tracking (Stolkin et al., 2012; Kumar et al., 2014).

Currently, approaches that combine all three sensor modalities are being investigated (Mogelmose et al., 2013; Vidas et al., 2013; Nakagawa et al., 2014; Matsumoto et al., 2015; Susperregi et al., 2013). Each modality supports a particular capability: depth sensors provide real-time and robust 3D scene structure information; RGB cameras provide rich visual information; and thermal sensors allow the computation of discriminative temperature signatures. In this paper, we pursue this line of work and extend the RGB-T fusion scheme presented in (Talha and Stolkin, 2012) to RGB-D-T. We present a calibration pipeline (intrinsics and extrinsics) that allows the information acquired by the different sensor modalities to be fused, and propose a person tracking algorithm based on RGB-D-T data. Experimental results provide evidence that combining the three modalities for people tracking within a traditional particle filter framework is more robust than using a single sensor or a pair of sensors.

∗ This work was performed entirely while the author was an intern at SnT, University of Luxembourg.

1.1 Contributions

A common problem when fusing data captured with multiple sensor modalities is finding the corresponding regions across the data. In this paper, we address this problem and present a pipeline for the intrinsic and extrinsic calibration of all the sensors (RGB, D and T). This allows us to generate registered RGB, depth and thermal images with one-to-one pixel correspondence. To the best of our knowledge, using such mapped data for person tracking has not been addressed before. Moreover, this data registration process is not specific to the tracking approach followed here, and could be applied as a pre-processing step for other tracking algorithms as well.

Furthermore, we present a simple particle-filter-based scheme for fusing the RGB-D-T data for person tracking. Compared to the previous work based on RGB-T data only (Talha and Stolkin, 2012), we use the additional depth information in two different ways: 1) to compute additional descriptors extracted from the depth data; and 2) to continuously adapt the tracked target size with an appropriate scaling factor (adaptive scaling), yielding increased robustness to radial motion.

Spremolla, I., Antunes, M., Aouada, D. and Ottersten, B.
RGB-D and Thermal Sensor Fusion - Application in Person Tracking.
DOI: 10.5220/0005717706100617
In Proceedings of the 11th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2016) - Volume 3: VISAPP, pages 612-619
ISBN: 978-989-758-175-5
Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

1.2 Organization

This paper is organized as follows: in the next section, we briefly discuss the most relevant works that use RGB, RGB-D, RGB-T or RGB-D-T data for the purpose of object or people tracking. In Section 3, we present the experimental setup, the calibration procedure, and the process of image registration. Section 4 discusses the theory behind the proposed person tracking algorithm, and how we take advantage of the different sensor modalities. Finally, Section 5 presents the experimental results, where the tracking accuracy using RGB-D-T is evaluated with respect to using only RGB or RGB-T.

2 RELATED WORK

This section briefly reviews the most relevant works that use RGB, RGB-D, RGB-T or RGB-D-T data for tracking purposes.

In the past, extensive work has been done on object tracking from RGB video sequences (Pérez et al., 2002; Nummiaro et al., 2002; Nummiaro et al., 2003). More recently, with the popularization of RGB-D sensors, object and people trackers based on RGB-D data have started to be studied. In practice, the use of depth sensors makes the process more robust against illumination changes at a lower computational cost, and the provided 3D structure information simplifies many tasks such as background subtraction and object segmentation. Choi et al. (Choi and Christensen, 2013) describe a system for detecting people from image and depth sensors on board a robot, where detection algorithms using the two different sources of information are fused using a sampling-based method. Choi and Christensen (Choi and Christensen, 2013) present a particle filtering approach for object pose tracking, where the likelihood of each particle is evaluated using features extracted from RGB and D data. Going one step further, Jafari et al. (Jafari et al., 2014) present a multi-person detection and tracking system suitable for mobile robots and head-worn cameras. The authors use an extended Kalman filter framework and different types of algorithms for extracting relevant information from the multi-modal data (e.g. 3D point classification, visual odometry, RGB-based sliding-window pedestrian detection).

There are also multi-modal systems based on RGB-T data for tracking applications. Stolkin et al. (Stolkin et al., 2012) present a Bayesian fusion method for combining pixel information from thermal imaging and conventional colour cameras for tracking a moving target. Very recently, Kumar et al. (Kumar et al., 2014) integrate a low-resolution thermal sensor with an RGB camera into a single system. The basic idea is to apply an RGB tracker and use the thermal information to eliminate a variety of false detections. Talha and Stolkin (Talha and Stolkin, 2012) employ a particle filter tracking approach that fuses both sources of data, and adaptively weights the different imaging modalities based on a new discriminability cue.

Only very recently have RGB-D-T based systems started to be used. Some applications include person re-identification (Mogelmose et al., 2013) and 3D temperature visualization (Vidas et al., 2013; Nakagawa et al., 2014; Matsumoto et al., 2015). To the best of our knowledge, there is only one work that fuses RGB-D-T data for people tracking and following (Susperregi et al., 2013). Its algorithm is based on a particle filter for merging the information provided by the different sensors, which also include a laser rangefinder. The overall pipeline includes special detectors, such as an emergency-vest detector and a leg detector. Our aim is different in that we fuse the multi-modal RGB-D-T data following a low-level strategy, without requiring application-specific high-level reasoning such as environment- or target-specific detectors.

Our work extends the previously discussed framework (Talha and Stolkin, 2012) to RGB-D-T data, where the depth information provides additional cues that, as evaluated experimentally in Section 5, prove effective for person tracking.

3 EXPERIMENTAL SETUP

This section briefly introduces the experimental setup we used to acquire and map RGB-D-T data.

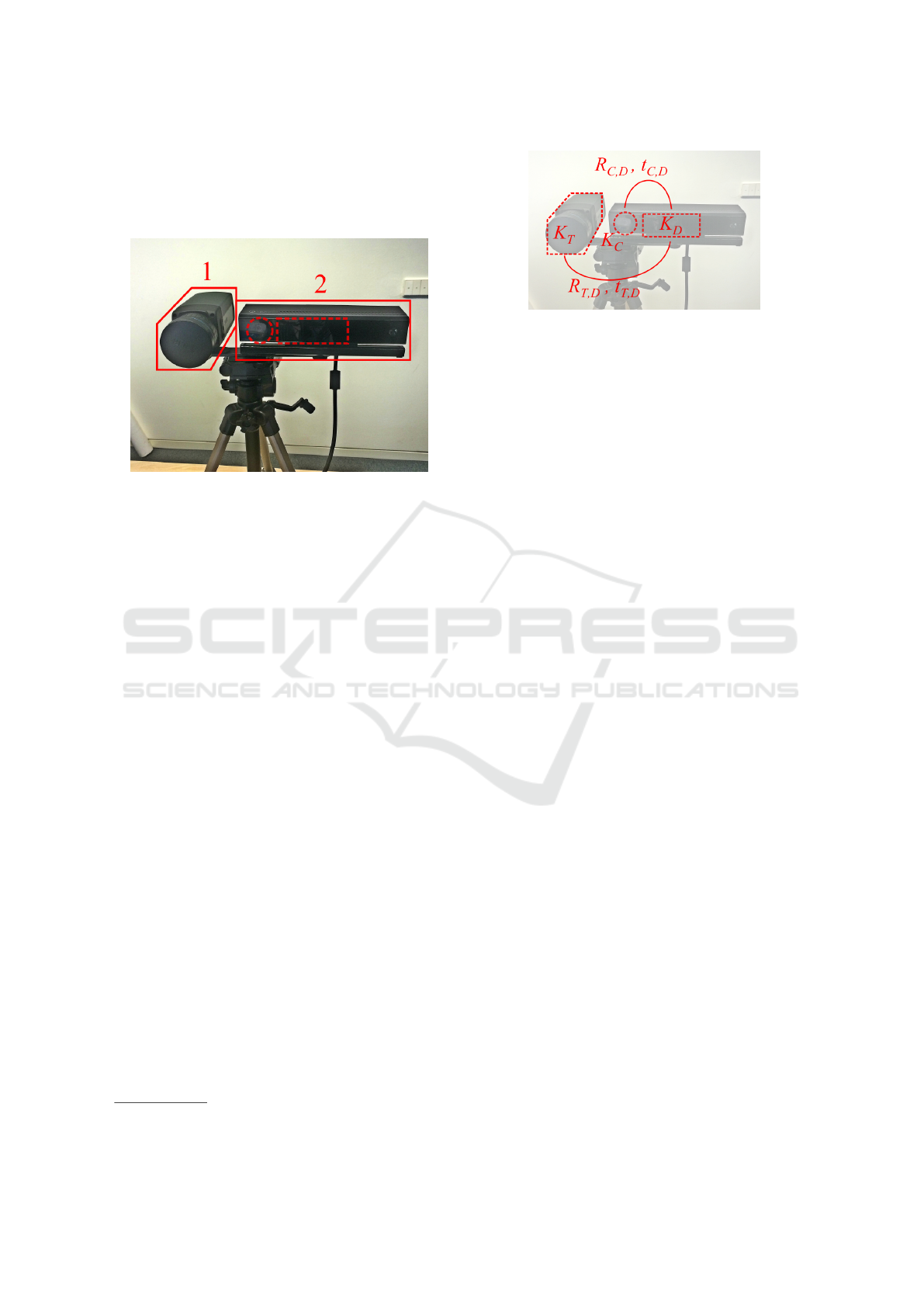

The hardware used for this research was a Microsoft Kinect v2 RGB-D sensor and a FLIR A655sc thermal camera. The two sensors were mounted side by side on a rigid support, as depicted in Fig. 1. Using this setup, the following image data is acquired:

• RGB or colour image (C): 1920×1080 pixels at 30 fps¹,
• Depth/IR image (D): 512×424 pixels at 30 fps,
• Thermal image (T): 640×480 pixels at 50 fps.

¹ fps: frames per second.

Figure 1: The setup: 1. FLIR A655sc thermal camera, 2. Microsoft Kinect v2 RGB-D sensor (dashed circle: RGB camera, dashed rectangle: ToF depth/IR sensor).

In order to fuse the data captured by each sensor, two steps are required: sensor calibration and image registration. These steps are explained in the following sections.

3.1 System Calibration

System calibration is required in order to find the transformation from 3D points to image points in each camera. This involves two steps: determining the pin-hole camera model parameter matrix $K$ (intrinsic calibration) and estimating the relative sensor poses $[R, t]$ (extrinsic calibration).

Before we move on, it is important to mention that the depth stream from the Kinect v2 comes from a time-of-flight camera that also produces an IR stream from the amplitude information. As the two streams come from the same sensor, we call it the Depth/IR sensor. Although we do not use the IR stream for tracking, we do use it for calibrating the sensor. This IR stream does not contain any thermal information, and should not be confused with the stream coming from the thermal camera.

The cameras are calibrated using the well-known toolbox of Bouguet (Bouguet, 2004). The following steps are performed:

• Intrinsic calibration of the RGB camera using a checkerboard pattern, obtaining its intrinsic matrix $K_C$,
• Intrinsic calibration of the Depth/IR sensor using a checkerboard pattern, obtaining its intrinsic matrix $K_D$,
• Intrinsic calibration of the Thermal camera using a disjoint squares pattern, obtaining its intrinsic matrix $K_T$,
• Computation of the relative pose of the RGB camera with respect to the Depth/IR sensor using a checkerboard pattern, obtaining the transformation $[R_{C,D}, t_{C,D}]$,
• Computation of the relative pose of the Thermal camera with respect to the Depth/IR sensor using a disjoint squares pattern, obtaining the transformation $[R_{T,D}, t_{T,D}]$.

Figure 2: The intrinsic and extrinsic calibration parameters.

The computed calibration parameters and transformations are illustrated in Figure 2. Note that the depth camera frame is used as the reference frame to extrinsically calibrate the RGB and thermal cameras. In the calibration steps involving the RGB and/or Depth/IR cameras, a standard paper checkerboard pattern was used, as it is visible in both modalities. However, for the calibration steps involving the thermal camera, a disjoint-squares pattern with cut-out squares, placed against a thermal backdrop, was employed, as previously done in (Vidas et al., 2012).
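Because both the RGB and the thermal cameras are calibrated against the Depth/IR frame, a direct RGB-to-thermal transform follows by composing the two extrinsics. The sketch below is a minimal illustration, assuming the convention $X_x = R_{x,D} X_D + t_{x,D}$ (i.e. $[R_{x,D}, t_{x,D}]$ maps Depth/IR-frame points into camera x); the paper does not state its convention, and the helper names `rigid_inverse` and `compose_c_to_t` are hypothetical.

```python
import numpy as np

def rigid_inverse(R, t):
    """Invert the rigid transform X_out = R @ X_in + t."""
    R_inv = R.T                      # rotations are orthonormal
    return R_inv, -R_inv @ t

def compose_c_to_t(R_cd, t_cd, R_td, t_td):
    """Map points from the RGB (C) frame to the thermal (T) frame.

    Assumed convention: [R_xd, t_xd] maps Depth/IR-frame points into
    frame x, i.e. X_x = R_xd @ X_d + t_xd.
    """
    R_dc, t_dc = rigid_inverse(R_cd, t_cd)   # C -> D
    R_tc = R_td @ R_dc                        # rotation C -> D -> T
    t_tc = R_td @ t_dc + t_td                 # translation C -> D -> T
    return R_tc, t_tc
```

Under this convention, any point seen in the RGB frame can be moved to the thermal frame with a single `R_tc @ X_c + t_tc`.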

3.2 Image Registration

In order to find the corresponding regions in the different image modalities, we perform a registration process on the raw images from the different sensors to generate registered C, T and D images with pixel-to-pixel correspondence. This is accomplished by computing a 3D point cloud from the depth image, and then projecting each 3D point onto the RGB and thermal images to assign it a colour and a thermal intensity. These points are then re-projected onto the depth image plane, yielding two new images: one with the corresponding RGB colour information, and the other with the corresponding thermal intensities.
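The registration step can be sketched in NumPy as follows. This is a minimal, hypothetical implementation (`register_to_depth` is not from the paper) that assumes depth in metres with 0 marking missing measurements, and that $[R_{x,D}, t_{x,D}]$ maps Depth/IR-frame points into the frame of camera x. It uses nearest-neighbour sampling and does not implement the occlusion test described in the text, so only missing-depth and out-of-range pixels are flagged invalid. Since each 3D point originates from a depth pixel, re-projecting it onto the depth image plane returns that same pixel, so the output is indexed directly by depth pixel.

```python
import numpy as np

def register_to_depth(depth, K_d, K_x, R_xd, t_xd, image_x):
    """Resample image_x (from camera x) onto the depth image grid.

    depth      : (H, W) depth in metres; 0 marks missing measurements.
    K_d, K_x   : 3x3 intrinsic matrices of the Depth/IR sensor and camera x.
    R_xd, t_xd : assumed to map Depth/IR-frame points into camera x's frame.
    image_x    : (Hx, Wx[, channels]) image from camera x.
    Returns the registered image and a boolean validity mask.
    """
    H, W = depth.shape
    v, u = np.mgrid[0:H, 0:W]                          # pixel row/column grids
    z = depth.astype(float).reshape(-1)
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).T.astype(float)
    # Back-project every depth pixel to a 3D point in the Depth/IR frame.
    pts_d = (np.linalg.inv(K_d) @ pix) * z
    # Move the points into camera x and project them with its intrinsics.
    pts_x = R_xd @ pts_d + t_xd.reshape(3, 1)
    proj = K_x @ pts_x
    Hx, Wx = image_x.shape[:2]
    with np.errstate(divide="ignore", invalid="ignore"):
        ux = np.rint(proj[0] / proj[2])
        vx = np.rint(proj[1] / proj[2])
        valid = ((z > 0) & (pts_x[2] > 0)
                 & (ux >= 0) & (ux < Wx) & (vx >= 0) & (vx < Hx))
    out = np.zeros((H * W,) + image_x.shape[2:], dtype=image_x.dtype)
    # Nearest-neighbour lookup; occlusion handling is omitted in this sketch.
    out[valid] = image_x[vx[valid].astype(int), ux[valid].astype(int)]
    return out.reshape((H, W) + image_x.shape[2:]), valid.reshape(H, W)
```

The same function would be called once with the RGB calibration and once with the thermal calibration to obtain the two registered images.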

VISAPP 2016 - International Conference on Computer Vision Theory and Applications


(a) Color image (RGB data) (b) Thermal image (T data) (c) Depth image (D data)

Figure 3: Registered images with pixel-to-pixel correspondence.

The final registered images, which have pixel-to-pixel correspondence, are shown in Figure 3.

The advantage of using this strategy is that the registered set of images simplifies the subsequent fusion step, and the pixel-to-pixel correspondence persists even when the target has significant radial motion. It is important to note, however, that some pixels are marked as invalid in the final images (e.g. red points in Figure 3(a)), corresponding to pixels that are out of range or detected as being occluded in at least one of the cameras. These unassigned pixels are discarded when computing the descriptors for tracking.

4 RGB-D-T BASED PERSON TRACKING

This section describes the tracking algorithm that we applied with the RGB-D-T system presented in the previous section, for the purpose of person tracking. In order to assess the advantages of the RGB-D-T system, we employ a simple particle filter approach, and avoid using specific motion models and complex particle re-sampling strategies based on previous frames.

For each frame, the probability distribution of the target is estimated using a discrete set of $N$ particles, where the contribution of each particle $i$ is weighted by $w^i$. Each particle $i$ is defined by its centre position $(p^i_x, p^i_y)$ and the width and height of its foreground region $(p^i_w, p^i_h)$. It also encodes a local background region, defined between the foreground region and an outer rectangular region of dimensions $(\lambda p_w, \lambda p_h)$, where $\lambda$ is a user-defined constant. A particle example is shown in Figure 4.

The estimated target state, i.e. the tracker output for each frame, is computed by weighting the particle parameters:

$$p_\alpha = \sum_{i=1}^{N} p^i_\alpha \, w^i,$$

where $p_\alpha$ corresponds to any of the parameters $p_x, p_y, p_w, p_h$.

Figure 4: Each particle is defined by the parameters $p_x, p_y, p_w, p_h$ of the foreground region (fg), and by a background region (bg).
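A particle and the weighted state estimate can be represented as below. This is an illustrative sketch: the `Particle` class and `estimate_state` function are hypothetical, the outer (background) rectangle is assumed to be centred on the particle, and $\lambda$ is left as a parameter because the paper does not report its value.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class Particle:
    x: float  # centre column p_x
    y: float  # centre row p_y
    w: float  # foreground width p_w
    h: float  # foreground height p_h

    def foreground(self):
        """(left, top, right, bottom) of the foreground rectangle."""
        return (self.x - self.w / 2, self.y - self.h / 2,
                self.x + self.w / 2, self.y + self.h / 2)

    def outer(self, lam):
        """Outer rectangle of size (lam*p_w, lam*p_h); the background
        region is this rectangle minus the foreground rectangle."""
        return (self.x - lam * self.w / 2, self.y - lam * self.h / 2,
                self.x + lam * self.w / 2, self.y + lam * self.h / 2)

def estimate_state(particles, weights):
    """Weighted mean p_alpha = sum_i p_alpha^i w^i for alpha in {x, y, w, h}.

    Assumes the weights are already normalised to sum to one.
    """
    params = np.array([[p.x, p.y, p.w, p.h] for p in particles])
    return params.T @ np.asarray(weights, dtype=float)
```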

4.1 Data Descriptors

For each particle and for each image modality, the foreground and background regions are used for computing appropriate descriptors. As described next, we use histogram-based descriptors because they are fast to compute, and exhibit some invariance to rotations, partial occlusions and moderate non-rigid deformations (Nummiaro et al., 2002).

RGB based Descriptor. For the colour modality, we convert the RGB image to a normalized colour space, which we denote by rgb, where $r = R/(R+G+B)$, $g = G/(R+G+B)$ and $b = B/(R+G+B)$. The colour normalization discards the illumination information to achieve robustness to lighting changes. Since two components are enough to characterize the normalized colour space ($r + g + b = 1$), we compute a 2D histogram $H_C$ using the pair $(r, g)$.
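A possible implementation of $H_C$ is shown below. It assumes 8 bins per axis (the paper does not give bin counts), an optional boolean mask for the invalid pixels discussed in Section 3.2, and normalization to unit mass. Black pixels ($R+G+B = 0$) are skipped because $r$ and $g$ are undefined there; this is a choice of the sketch, and `rg_histogram` is a hypothetical name.

```python
import numpy as np

def rg_histogram(rgb, mask=None, bins=8):
    """Normalised 2D (r, g) chromaticity histogram H_C of an RGB patch.

    rgb  : (H, W, 3) array; mask : optional boolean (H, W) of valid pixels.
    bins : number of bins per axis (illustrative choice, not from the paper).
    """
    rgb = rgb.reshape(-1, 3).astype(float)
    keep = rgb.sum(axis=1) > 0              # r, g undefined for black pixels
    if mask is not None:
        keep &= mask.reshape(-1)            # drop invalid (unregistered) pixels
    s = rgb[keep].sum(axis=1)
    r = rgb[keep, 0] / s
    g = rgb[keep, 1] / s
    hist, _, _ = np.histogram2d(r, g, bins=bins, range=[[0, 1], [0, 1]])
    total = hist.sum()
    return hist / total if total > 0 else hist
```

Scaling all three channels by the same factor leaves $(r, g)$ and hence the histogram unchanged, which is the illumination robustness the normalization is meant to provide.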

D based Descriptor. For the depth modality, we first compute a 3D normal vector for each data point by fitting a 3D plane to a pre-defined local neighbourhood. A 2D histogram $H_D$ is then computed using the corresponding polar angle $\theta$ and azimuthal angle $\phi$.
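Below is one way to compute the depth descriptor, assuming the neighbourhood points are already available as an (n, 3) array in the camera frame. The plane is fitted by least squares through the singular value decomposition, the normal is oriented towards a camera assumed to be at the origin, and $\theta$ is measured from the +z (optical) axis. The paper does not specify the fitting method, the orientation convention or the bin counts, so these are assumptions, and the function names are hypothetical.

```python
import numpy as np

def plane_normal(points):
    """Unit normal of the least-squares plane through an (n, 3) neighbourhood.

    The normal is oriented towards the camera, assumed to sit at the origin.
    """
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is
    # orthogonal to the best-fit plane.
    _, _, vt = np.linalg.svd(points - centroid)
    n = vt[-1]
    if np.dot(n, -centroid) < 0:        # flip so that the normal faces the sensor
        n = -n
    return n / np.linalg.norm(n)

def normal_histogram(normals, bins=8):
    """Normalised 2D (theta, phi) histogram H_D of an (n, 3) array of unit normals."""
    theta = np.arccos(np.clip(normals[:, 2], -1.0, 1.0))   # polar angle from +z
    phi = np.arctan2(normals[:, 1], normals[:, 0])          # azimuth in [-pi, pi]
    hist, _, _ = np.histogram2d(theta, phi, bins=bins,
                                range=[[0, np.pi], [-np.pi, np.pi]])
    total = hist.sum()
    return hist / total if total > 0 else hist
```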

T based Descriptor. For the thermal modality, we compute a 1D histogram $H_T$ directly from the intensity values of the thermal image.

All three descriptors are computed for the manually selected target region in the first frame of the sequence, and define the target model $\{H^t_C, H^t_D, H^t_T\}$. The size of the target region $(t_w, t_h)$ and its mean depth $t_d$ are also stored and later used for constructing appropriate particle hypotheses. Note that the target model is not re-learned during the sequence.

For each particle hypothesis, the three descriptors are computed using the corresponding foreground region, obtaining $\{H^{fg}_C, H^{fg}_D, H^{fg}_T\}$. Additionally, the same descriptors are also computed for the particle background, obtaining $\{H^{bg}_C, H^{bg}_D, H^{bg}_T\}$.

A common measure for comparing two histograms $p = \{p^{(i)}\}_{i=1}^{n}$ and $q = \{q^{(i)}\}_{i=1}^{n}$ of $n$ bins is the Bhattacharyya similarity coefficient $B[p, q]$ (Nummiaro et al., 2003):

$$B[p, q] = \sum_{i=1}^{n} \sqrt{p^{(i)} q^{(i)}}$$
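The coefficient translates directly into NumPy. This sketch normalizes both histograms to unit mass before comparing them (an assumption, since the formula presumes normalized histograms), so it expects non-empty histograms; the function name is hypothetical.

```python
import numpy as np

def bhattacharyya(p, q):
    """Bhattacharyya coefficient B[p, q] = sum_i sqrt(p_i * q_i).

    Both histograms are flattened and normalised to unit mass first, so the
    result lies in [0, 1]: 1 for identical histograms, 0 for disjoint ones.
    """
    p = np.asarray(p, dtype=float).ravel()
    q = np.asarray(q, dtype=float).ravel()
    p = p / p.sum()
    q = q / q.sum()
    return float(np.sum(np.sqrt(p * q)))
```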

We employ it to compare particle and target histograms. Each particle foreground descriptor is compared against the corresponding target model descriptor, obtaining three coefficients $\{B^{fg,t}_C, B^{fg,t}_D, B^{fg,t}_T\}$ for the colour, depth and thermal data, respectively. These coefficients measure how similar the particle foreground is to the target model. Next, the same histogram comparison is applied to the particle background descriptors and the target model, obtaining three coefficients $\{B^{bg,t}_C, B^{bg,t}_D, B^{bg,t}_T\}$. These coefficients assess how similar the background is to the target model, which we call the level of camouflage of that particular particle.

4.2 Multi-modal Fusion

In order to fuse the information from the different modalities and determine the overall appropriateness of each particle, we extend the RGB-T based method presented in (Talha and Stolkin, 2012) to RGB-D-T data. The idea is to compute an enhanced Bhattacharyya coefficient $B_f$, which combines $B^{fg,t}_C$, $B^{fg,t}_D$ and $B^{fg,t}_T$ such that less weight is given to modalities where camouflage occurs. $B_f$ is computed as follows:

$$B_f = \alpha B^{fg,t}_C + \beta B^{fg,t}_T + \gamma B^{fg,t}_D,$$

where

$$\alpha = \frac{B^{bg,t}_T + B^{bg,t}_D}{2\left(B^{bg,t}_C + B^{bg,t}_T + B^{bg,t}_D\right)}, \quad \beta = \frac{B^{bg,t}_C + B^{bg,t}_D}{2\left(B^{bg,t}_C + B^{bg,t}_T + B^{bg,t}_D\right)}, \quad \gamma = \frac{B^{bg,t}_C + B^{bg,t}_T}{2\left(B^{bg,t}_C + B^{bg,t}_T + B^{bg,t}_D\right)}.$$

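By doing so, we are essentially computing a weighted average of $B^{fg,t}_T$, $B^{fg,t}_C$ and $B^{fg,t}_D$ using adaptive weights that depend on the camouflage of the complementary modalities. Since $\alpha + \beta + \gamma = 1$, $B_f$ is a convex combination, and each modality's own background coefficient appears only in the numerators of the other two weights, so the more camouflaged a modality is, the less weight it receives. This has the desired effect of reducing the importance of modalities that could increase the uncertainty of the estimation.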
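The fusion rule can be written as a small function. The sketch below follows the formulas for $\alpha$, $\beta$ and $\gamma$ term by term; the dictionary-based interface and the name `fused_coefficient` are hypothetical, and the rule is undefined (division by zero) when all three background coefficients are zero, a case the sketch does not guard against.

```python
def fused_coefficient(B_fg, B_bg):
    """Camouflage-weighted fusion of per-modality Bhattacharyya coefficients.

    B_fg, B_bg : dicts with keys 'C', 'T', 'D' holding B^{fg,t} and B^{bg,t}.
    Returns (B_f, weights), with weights = {'C': alpha, 'T': beta, 'D': gamma}.
    """
    total = B_bg['C'] + B_bg['T'] + B_bg['D']
    weights = {
        'C': (B_bg['T'] + B_bg['D']) / (2 * total),   # alpha
        'T': (B_bg['C'] + B_bg['D']) / (2 * total),   # beta
        'D': (B_bg['C'] + B_bg['T']) / (2 * total),   # gamma
    }
    B_f = sum(weights[m] * B_fg[m] for m in ('C', 'T', 'D'))
    return B_f, weights
```

For example, with background coefficients 0.9 (colour), 0.2 (thermal) and 0.1 (depth), the heavily camouflaged colour channel receives the smallest weight, $\alpha = 0.3/2.4 = 0.125$.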

Regarding the particle weight assignment, we first apply an exponential function to $B_f$, with the aim of stretching the range of values:

$$\hat{w} = e^{-(1 - B_f)/(2\sigma^2)},$$

where $\sigma = 0.2$ is an empirical constant. The final weight $w^i$ of the $i$-th particle is computed by normalizing $\hat{w}^i$ by the sum of the weights over the $N$ particles:

$$w^i = \frac{\hat{w}^i}{\sum_{j=1}^{N} \hat{w}^j}.$$
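With $\sigma = 0.2$ we have $2\sigma^2 = 0.08$, so a particle with $B_f = 0.92$ receives $e^{-1} \approx 0.37$ times the unnormalized weight of a perfect match ($B_f = 1$). A minimal sketch of the weighting and normalization, with the hypothetical name `particle_weights`:

```python
import numpy as np

def particle_weights(B_f, sigma=0.2):
    """Normalised particle weights from fused coefficients B_f (one per particle).

    w_hat = exp(-(1 - B_f) / (2 sigma^2)), then w_i = w_hat_i / sum_j w_hat_j.
    """
    B_f = np.asarray(B_f, dtype=float)
    w_hat = np.exp(-(1.0 - B_f) / (2.0 * sigma ** 2))
    return w_hat / w_hat.sum()
```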

4.3 Adaptive Target Scale

In many tracking applications, the tracked objects do not present significant radial distance changes with respect to the camera reference frame compared to their lateral displacements. As a result, the apparent size of the tracked object remains approximately constant along the tracking sequence.

In this work, we tried to address the problem of

tracked objects at a short range from the camera, and

which can present significant radial motion. In order

to address this issue, we used the depth information

to adjust the width and height of the particle window.

This is achieved by extracting an image section from

the depth image around each particle centre using the

previous particle size (p

w

, p

h

). Then, a histogram is

computed using the depth values from this region, and

the particle depth centre p

d

is estimated by determin-

ing the position of the mode of the histogram. Finally,

the particle size

p

w

=t

w

s,

p

h

=t

h

s,

(1)

VISAPP 2016 - International Conference on Computer Vision Theory and Applications

616

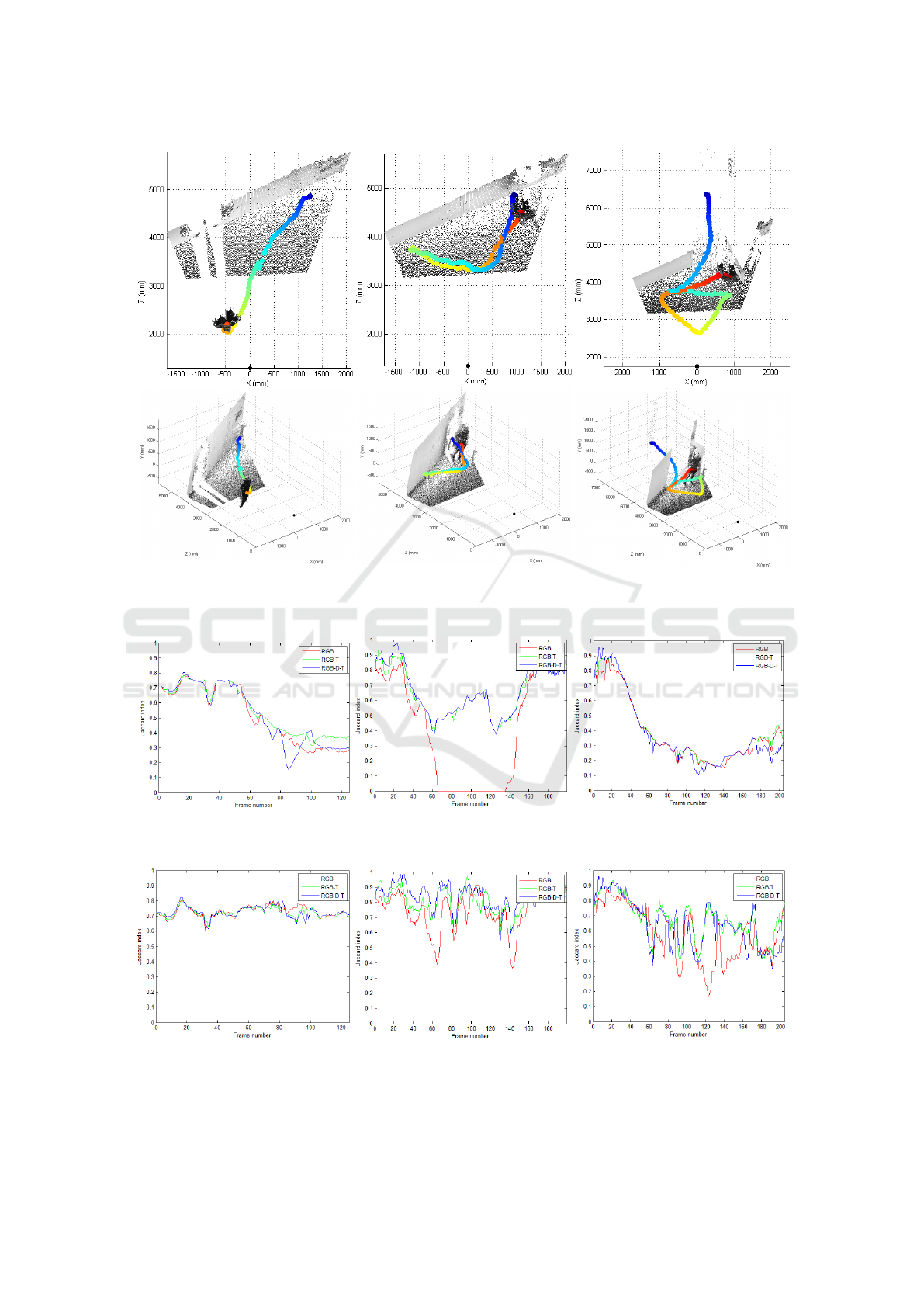

(a) Sequence 1

(b) Sequence 2

(c) Sequence 3

Figure 5: Trajectories of the different sequences: the colouring from blue to red is used for identifying different time instants

(blue - start, red - finish); the black dot represents the camera position. Top: top view; bottom: perspective view.

(a) Sequence 1 (b) Sequence 2 (c) Sequence 3

Figure 6: Tracking accuracy - Constant target size.

(a) Sequence 1 (b) Sequence 2 (c) Sequence 3

Figure 7: Tracking accuracy - With adaptive scaling.

RGB-D and Thermal Sensor Fusion - Application in Person Tracking

617

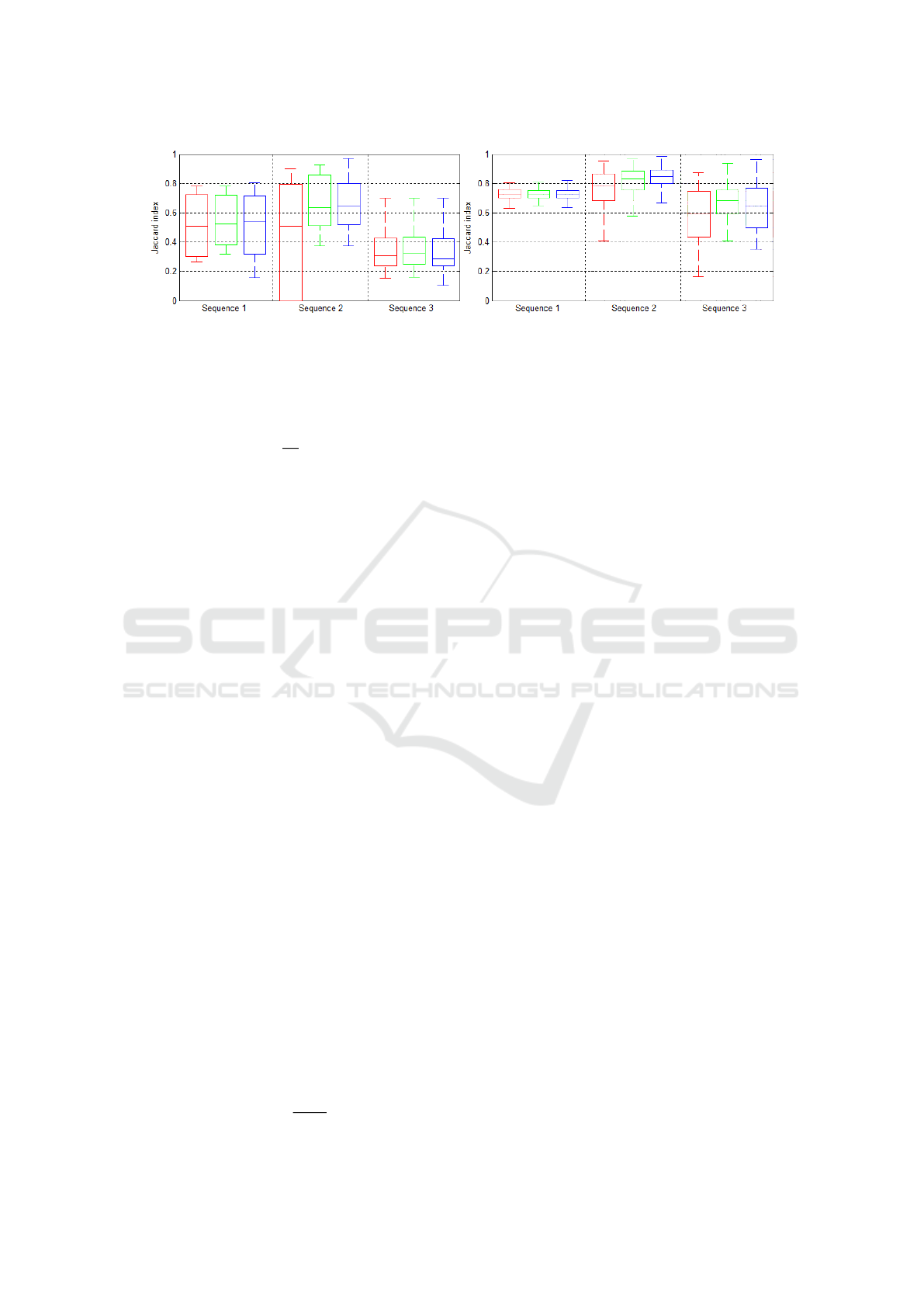

(a) Constant target size scaling (b) With adaptive scaling

Figure 8: Tracking accuracy - Red: RGB, green: RGB-T, blue: RGB-D-T.

is adjusted by scaling the target size (t

w

,t

h

) using a

scale-factor s, based on the depth-ratio between the

target size and depth

s =

t

d

p

d

. (2)
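Under a pinhole model, an object of physical width $W$ at depth $Z$ appears $fW/Z$ pixels wide, so moving it from depth $t_d$ to $p_d$ rescales its apparent size by $t_d/p_d$, which is exactly the factor $s$ in Eq. (2). The sketch below estimates $p_d$ as the mode of a depth histogram and applies Eqs. (1)-(2). The 5 cm bin width, the clipping of the window to the image and the exclusion of zero (missing) depth values are assumptions of this sketch, and the function names are hypothetical.

```python
import numpy as np

def particle_depth(depth, cx, cy, pw, ph, bin_width=0.05):
    """Mode of the depth histogram inside a (pw x ph) window centred at (cx, cy).

    Zero depth values (missing data) are ignored; bin_width is in metres and
    is an illustrative choice, not a value from the paper.
    """
    H, W = depth.shape
    x0, x1 = max(int(cx - pw / 2), 0), min(int(cx + pw / 2), W)
    y0, y1 = max(int(cy - ph / 2), 0), min(int(cy + ph / 2), H)
    values = depth[y0:y1, x0:x1].ravel()
    values = values[values > 0]
    if values.size == 0:
        return None                                 # no valid depth in the window
    edges = np.arange(values.min(), values.max() + 2 * bin_width, bin_width)
    hist, edges = np.histogram(values, bins=edges)
    k = np.argmax(hist)
    return 0.5 * (edges[k] + edges[k + 1])          # centre of the modal bin

def scaled_size(t_w, t_h, t_d, p_d):
    """Eqs. (1)-(2): p_w = t_w * s and p_h = t_h * s, with s = t_d / p_d."""
    s = t_d / p_d
    return t_w * s, t_h * s
```

Using the histogram mode rather than the mean keeps background pixels that fall inside the window from pulling the depth estimate away from the person.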

5 EXPERIMENTAL EVALUATION

For the experimental evaluation, we use three video sequences of a person moving in an indoor scene, each composed of about 200 frames and taken at 20 fps. All the sequences include significant radial motion.

The first sequence corresponds to a person performing a diagonal motion, going from the far right to the near left of the scene. The trajectory described by the person can be observed in Figure 5(a). In the second sequence, the person moves closer to and farther from the camera twice, and then returns to the original position. The trajectory is shown in Figure 5(b). Finally, in the third sequence, the person is initially positioned at a large distance from the camera setup (≈ 6.3 m), and then performs a sequence of fast movements around the room (including jumps). This trajectory can be observed in Figure 5(c).

5.1 Evaluation

For the evaluation of the proposed tracking algorithm, ground-truth data was generated for the three video sequences. For this, a human operator manually selected a rectangular region covering the tracked person from head to feet and across the shoulder width, in one of every five frames of each video. The parameters for the intermediate frames were then linearly interpolated.
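Interpolating the annotated boxes to every frame can be done parameter by parameter with `np.interp`. This is a hypothetical helper that assumes each box is stored as four parameters, e.g. (x, y, w, h), with annotations at increasing frame indices.

```python
import numpy as np

def interpolate_boxes(key_frames, key_boxes, n_frames):
    """Linearly interpolate annotated boxes to every frame.

    key_frames : increasing frame indices that were annotated (e.g. 0, 5, 10, ...).
    key_boxes  : (k, 4) array of box parameters for those frames.
    Returns an (n_frames, 4) array; frames outside the annotated range are
    clamped to the nearest annotation (np.interp's default behaviour).
    """
    key_boxes = np.asarray(key_boxes, dtype=float)
    frames = np.arange(n_frames)
    return np.column_stack([np.interp(frames, key_frames, key_boxes[:, j])
                            for j in range(key_boxes.shape[1])])
```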

Regarding the tracking quality, we decided to use a measure that considers the area of overlap between the ground-truth target region and the tracked region. For this, the Jaccard index was selected:

$$J(A, B) = \frac{|A \cap B|}{|A \cup B|},$$

where $|\cdot|$ denotes the area of a region. From this definition, the Jaccard index is bounded between 0 and 1, where 0 corresponds to completely disjoint regions (no overlap) and 1 corresponds to identical regions (perfect overlap). This measure is widely used in the literature, e.g. in (Everingham et al., 2010) for object category recognition and detection.
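For axis-aligned rectangles such as the tracked and ground-truth boxes, the areas in the Jaccard index have a closed form. A minimal sketch, assuming boxes given as (left, top, right, bottom) and a hypothetical function name:

```python
def jaccard(a, b):
    """Jaccard index of two axis-aligned boxes given as (left, top, right, bottom)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))   # intersection width
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))   # intersection height
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter                     # inclusion-exclusion
    return inter / union if union > 0 else 0.0
```

For example, two 2×2 boxes shifted by half their width overlap in an area of 2 out of a union of 6, giving $J = 1/3$.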

5.2 Results

For each video, three different sensor combinations were considered: RGB, RGB-T and RGB-D-T. Moreover, for each of these options, two runs were conducted: one using a constant window size, and another using adaptive window size scaling as described in Section 4.3.

For each case, the Jaccard index of the tracked region against the ground-truth region was computed. The results can be observed in Figures 6 and 7, while Figure 8 shows the mean Jaccard index values.

As expected, the accuracy is considerably low when using constant target sizes, due to the radial motion of the person moving in the scene. The RGB-T and RGB-D-T combinations show superior performance compared to the single RGB modality.

When adaptive scaling is used, all three sensor combinations perform considerably better in all the sequences than with a constant target size. Note that this is only possible in a multi-modal framework where depth data is available. Furthermore, the descriptors based on RGB-D-T show slight overall accuracy improvements compared to the other approaches.

6 CONCLUSIONS

We have investigated the problem of fusing the data captured by a low-cost RGB-D camera with a thermal sensor. We showed how to completely calibrate the multi-modal system, and proposed a simple person tracking algorithm using mapped RGB-D-T data. By using the depth data to adaptively scale the target size, we showed that the tracker can withstand significant radial motion with good accuracy, as measured by the Jaccard index. Moreover, we presented a simple way to extend the RGB-T tracker presented in (Talha and Stolkin, 2012) to RGB-D-T, using a histogram of 3D normals as the depth descriptor. Although the depth feature we used did not significantly improve the accuracy of the tracker in the tested video sequences, we believe it could improve its robustness in more complicated sequences involving the interaction of several persons.

In this work, we represented the target model using a single histogram for each data source. An interesting extension would be to use a multi-part model, and investigate how to efficiently compute histogram descriptors for each target using part-specific fusion schemes. Finally, we believe that using a depth descriptor based on local shape information, such as curvature distributions, instead of 3D normals could add robustness to human deformations.

REFERENCES

Bouguet, J.-Y. (2004). Camera calibration toolbox for Matlab.

Choi, C. and Christensen, H. I. (2013). RGB-D object tracking: A particle filter approach on GPU. In Intelligent Robots and Systems (IROS), 2013 IEEE/RSJ International Conference on, pages 1084–1091. IEEE.

Endres, F., Hess, J., Engelhard, N., Sturm, J., Cremers, D., and Burgard, W. (2012). An evaluation of the RGB-D SLAM system. In Robotics and Automation (ICRA), 2012 IEEE International Conference on.

Everingham, M., Van Gool, L., Williams, C. K., Winn, J., and Zisserman, A. (2010). The PASCAL Visual Object Classes (VOC) challenge. International Journal of Computer Vision, 88(2):303–338.

Jafari, O. H., Mitzel, D., and Leibe, B. (2014). Real-time RGB-D based people detection and tracking for mobile robots and head-worn cameras. In Robotics and Automation (ICRA), 2014 IEEE International Conference on, pages 5636–5643. IEEE.

Kumar, S., Marks, T. K., and Jones, M. (2014). Improving person tracking using an inexpensive thermal infrared sensor. In Computer Vision and Pattern Recognition Workshops (CVPRW), 2014 IEEE Conference on, pages 217–224. IEEE.

Luber, M., Spinello, L., and Arras, K. O. (2011). People tracking in RGB-D data with on-line boosted target models. In Proc. of The International Conference on Intelligent Robots and Systems (IROS).

Matsumoto, K., Nakagawa, W., Saito, H., Sugimoto, M., Shibata, T., and Yachida, S. (2015). AR visualization of thermal 3D model by hand-held cameras. In Proceedings of the 10th International Conference on Computer Vision Theory and Applications, pages 480–487.

Mogelmose, A., Bahnsen, C., Moeslund, T. B., Clapés, A., and Escalera, S. (2013). Tri-modal person re-identification with RGB, depth and thermal features. In Computer Vision and Pattern Recognition Workshops (CVPRW), 2013 IEEE Conference on, pages 301–307. IEEE.

Nakagawa, W., Matsumoto, K., de Sorbier, F., Sugimoto, M., Saito, H., Senda, S., Shibata, T., and Iketani, A. (2014). Visualization of temperature change using RGB-D camera and thermal camera. In Computer Vision - ECCV 2014 Workshops, pages 386–400. Springer.

Nummiaro, K., Koller-Meier, E., and Van Gool, L. (2002). Object tracking with an adaptive color-based particle filter. In Pattern Recognition, pages 353–360. Springer.

Nummiaro, K., Koller-Meier, E., and Van Gool, L. (2003). An adaptive color-based particle filter. Image and Vision Computing, 21(1):99–110.

Pérez, P., Hue, C., Vermaak, J., and Gangnet, M. (2002). Color-based probabilistic tracking. In Computer Vision - ECCV 2002, pages 661–675. Springer.

Shotton, J., Fitzgibbon, A., Cook, M., Sharp, T., Finocchio, M., Moore, R., Kipman, A., and Blake, A. (2011). Real-time human pose recognition in parts from single depth images. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition.

Stolkin, R., Rees, D., Talha, M., and Florescu, I. (2012). Bayesian fusion of thermal and visible spectra camera data for region based tracking with rapid background adaptation. In Multisensor Fusion and Integration for Intelligent Systems (MFI), 2012 IEEE Conference on, pages 192–199. IEEE.

Susperregi, L., Martínez-Otzeta, J. M., Ansuategui, A., Ibarguren, A., and Sierra, B. (2013). RGB-D, laser and thermal sensor fusion for people following in a mobile robot. Int. J. Adv. Robot. Syst.

Talha, M. and Stolkin, R. (2012). Adaptive fusion of infra-red and visible spectra camera data for particle filter tracking of moving targets. In Sensors, 2012 IEEE, pages 1–4. IEEE.

Vemulapalli, R., Arrate, F., and Chellappa, R. (2014). Human action recognition by representing 3D skeletons as points in a Lie group. In Computer Vision and Pattern Recognition (CVPR), 2014 IEEE Conference on.

Vidas, S., Lakemond, R., Denman, S., Fookes, C., Sridharan, S., and Wark, T. (2012). A mask-based approach for the geometric calibration of thermal-infrared cameras. Instrumentation and Measurement, IEEE Transactions on, 61(6):1625–1635.

Vidas, S., Moghadam, P., and Bosse, M. (2013). 3D thermal mapping of building interiors using an RGB-D and thermal camera. In Robotics and Automation (ICRA), 2013 IEEE International Conference on, pages 2311–2318. IEEE.