Comparing Electronic Health Record Usability of Primary Care Physicians by Clinical Year

Martina A. Clarke¹, Jeffery L. Belden² and Min Soon Kim³,⁴

¹ Department of Internal Medicine, University of Nebraska Medical Center, Omaha, NE, U.S.A.
² Department of Family and Community Medicine, University of Missouri, Columbia, MO, U.S.A.
³ Department of Health Management and Informatics, University of Missouri, Columbia, MO, U.S.A.
⁴ Informatics Institute, University of Missouri, Columbia, MO, U.S.A.

Keywords: Electronic Health Record, Usability, Primary Care.

Abstract: Objectives: To examine usability gaps among primary care resident physicians by clinical year, year 1 (Y1), year 2 (Y2), and year 3 (Y3), when using an electronic health record (EHR). Methods: Twenty-nine usability tests with video analysis were conducted using a triangulation (mixed-methods) approach. Performance metrics of percent task success rate, time on task, and mouse activities were compared, along with subtask analysis, among the three physician groups. Results: Physicians in all three years showed comparable mean performance measures, specifically task success rate (Y1: 95%, Y2: 98%, Y3: 95%). However, varying usability issues were identified among physicians from all three clinical years. Twenty-nine common usability issues across five themes emerged during subtask analysis: inconsistencies, user interface issues, structured data issues, ambiguous terminologies, and workarounds. Discussion and Conclusion: This study identified varying usability issues for EHR users with different experience levels; addressing these issues may improve physicians’ performance when using an EHR. While the three physician groups showed comparable performance metrics, they encountered numerous usability issues that should be addressed for effective EHR training and patient care.

1 INTRODUCTION

The Office of the National Coordinator for Health Information Technology (Washington, D.C., USA) and the Centers for Medicare & Medicaid Services (CMS) (Baltimore, MD) have proposed the Health Information Technology for Economic and Clinical Health (HITECH) Act to support the successful adoption of electronic health records (EHRs) in health care. EHRs are “records of patient health information generated by visits in any health care delivery setting” (Hsiao and Hing, 2012). The use of health information technology (HIT) in clinical practice is increasing, and physicians are adopting EHRs in part because of the financial incentives pledged by CMS (2012). The National Center for Health Statistics (NCHS) reported in a 2013 data brief that 78% of office-based physicians in the U.S. have adopted EHRs in their practice (Hsiao and Hing, 2012). Advantages of EHR adoption reported by users include improved adherence to preventive care guidelines, less paperwork for providers, and enhanced quality of patient care (Chaudhry et al., 2006; Miller et al., 2005; Shekelle et al., 2006). There are also barriers to adopting EHRs, which include large financial investments, a mismatch between human and computer workflow models, and a fall in productivity likely caused by usability issues (Menachemi and Collum, 2011; Goldzweig et al., 2009; Chaudhry et al., 2006; Miller et al., 2005; Grabenbauer et al., 2011; Li et al., 2012). Usability is described as how well software can be used to perform a particular task with effectiveness, efficiency, and satisfaction (ISO, 1998).

EHR usability issues may have an unfavorable impact on clinicians’ EHR learning experience. This may contribute to elevated cognitive load, medical errors, and reduced quality of patient care (Love et al., 2012; McLane and Turley, 2012; Viitanen et al., 2011; Clarke et al., 2013; Sheehan et al., 2009; Kushniruk et al., 2005). Learnability is defined as the degree to which a system enables users to learn how to use it (ISO, 2011a). Learnability concerns the total time and effort required for a user to develop proficiency with a system over time and after repeated use (Tullis and Albert, 2008).

Clarke, M., Belden, J. and Kim, M.
Comparing Electronic Health Record Usability of Primary Care Physicians by Clinical Year.
DOI: 10.5220/0005692900680075
In Proceedings of the 9th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2016) - Volume 5: HEALTHINF, pages 68-75
ISBN: 978-989-758-170-0
Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

While definitions of usability and learnability vary (Elliott et al., 2002; Nielsen, 1993; ISO, 2011a), definitions of learnability are strongly correlated with usability and proficiency (Elliott et al., 2002; Whiteside et al., 1985; Lin et al., 1997). Giving physicians the opportunity to finish clinical tasks efficiently within the EHR may ease some of the time constraints physicians experience during patient visits.

EHRs demand considerable effort from physicians to reach a certain degree of proficiency. Resident physicians were chosen for this study because residents who are insufficiently prepared to operate an EHR may encounter a steep learning curve when their residency program begins (Yoon-Flannery et al., 2008). To boost physician proficiency with the EHR, hospitals and clinics provide resident physicians with thorough EHR training. However, it is difficult to find adequate time to train physicians to use new EHR systems (Carr, 2004; Terry et al., 2008; Lorenzi et al., 2009; Whittaker et al., 2009).

Clarke et al. (2015) conducted a longitudinal study to determine learnability gaps between expert and novice primary care resident physicians. They compared the performance measures of novice and expert resident physicians using an EHR across two rounds of lab-based usability tests with video analysis, conducted seven months apart. That study found comparable performance among novice and expert physicians, demonstrating that physicians’ proficiency did not increase with EHR experience. In this paper, we report the results of a confirmation study: a more granular cross-sectional study with a larger sample size. We aimed to examine whether we would obtain similar performance measures and usability issues among primary care resident physicians in relation to their year in residency. To achieve this objective, we measured the differences in quantitative performance and qualitative usability issues of primary care resident physicians by clinical year (year 1, year 2, year 3).

2 METHOD

2.1 Study Design

To measure the usability of the EHR for primary care physicians by experience, data were collected through usability testing using video analysis software, Morae® (TechSmith, Okemos, MI). Morae was used to record the laptop screen and, through the laptop’s video camera, the user’s facial expressions. The software also recorded each task separately and collected performance measures (time on task, mouse clicks, and mouse movements) while residents completed each task. Finally, the software collected and analyzed system usability surveys and scores. Family and internal medicine resident physicians attempted nineteen artificial, scenario-based tasks in a lab-based setting. A mixed-methods technique was employed to determine the differences in performance and usability issues among primary care physicians. This involved four types of quantitative performance measures; the System Usability Scale (SUS), a survey instrument (Brooke, 1996); and subtask analysis. This study was approved by the University of Missouri Health Sciences Institutional Review Board.

2.2 Organizational Setting

This study took place at the University of Missouri Health System (UMHS), a 536-bed tertiary care academic medical hospital based in Columbia, Missouri. The Healthcare Information and Management Systems Society (HIMSS), a non-profit organization that ranks hospitals on their electronic medical record (EMR) application implementation, has recognized UMHS with Stage 7 of the EMR Adoption Model (2011b). UMHS employs over 70 primary care physicians throughout clinics in central Missouri and, in 2012, had approximately 553,300 clinic visits. UMHS’ EHR includes a database that consists of data from all the university’s clinics and hospitals. The computerized physician order entry (CPOE) within the EHR allows clinicians to securely access and place electronic lab and medication orders for patients, and passes the orders directly to the department in charge of processing the requisition.

2.3 Participants

We recruited 14 physicians from our family medicine department (FCM) and 16 physicians from our internal medicine department (IM). FCM and IM physicians were selected for the sample because, as primary care residents, they have comparable clinical duties. There is presently no evidence-based way to determine users’ EHR experience, so resident physicians were categorized by clinical years using an EHR. Therefore, to identify differences in use patterns that arise between resident physicians by clinical year when using an EHR, nine first year, eight second year, and twelve third year resident physicians participated in the study. Both FCM and IM run three-year residency programs. This study was a cross-sectional comparison. Physicians were grouped by year of residency to determine whether physicians become more proficient with EHR experience and to identify workflow differences between physician groups. A convenience sampling method was applied when selecting physicians. FCM physicians were recruited during weekly residents’ meetings, and IM residents were recruited through group emails sent via MU's secure email client.

2.4 Scenario and Tasks

In this study, the scenario presented to the residents was a ‘scheduled follow up visit after a hospitalization for gastroenteritis with dehydration and hyponatremia.’ Nineteen tasks that are generally completed by primary care physicians were included. The tasks covered the critical and commonly used features and functionalities of the EHR that physicians would most likely use in daily clinical activities. To measure usability more effectively, we confirmed that the tasks in our study were also part of the EHR training that resident physicians were required to attend before they began their residency. Each task had a clear objective that physicians could follow without nonessential clinical cognitive load or ambiguity, neither of which was the focus of this study. The tasks were:

Task 1: Start a new note
Task 2: Include visit information
Task 3: Include Chief Complaint
Task 4: Include History of Present Illness
Task 5: Review current medications contained in the note
Task 6: Review problem list contained in the note
Task 7: Document new medication allergy
Task 8: Include Review of Systems
Task 9: Include Family History
Task 10: Include Physical exam
Task 11: Include last comprehensive metabolic panel (CMP)
Task 12: Save the note
Task 13: Include diagnosis
Task 14: Place follow up visit in 1 month
Task 15: Place order for basic metabolic panel (BMP)
Task 16: Change a Medication
Task 17: Add a medication to your favorites list
Task 18: Renew one of the existing medications
Task 19: Sign the Note

2.5 Data Analysis

Performance measures depend on both user behavior and the use of scenarios and tasks. They are useful in estimating the effectiveness and efficiency of a particular task. Four performance metrics were used in this study:

1. Percent task success is the percentage of subtasks that participants successfully complete.
2. Time on task is how long each participant takes to complete each task.
3. Mouse clicks is the number of times the participant clicks the mouse when completing a specified task.
4. Mouse movement is the length, in pixels, of the navigation path traced by the mouse to finish a specified task.
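The definitions of percent task success and mouse movement above can be illustrated with a minimal sketch. In the study these values were collected by Morae; the function names, the input formats (a list of pass/fail flags and a list of cursor coordinates), and the use of straight-line distance between sampled cursor positions are assumptions made here for illustration only.

```python
import math


def percent_task_success(subtask_results):
    """Percentage of subtasks completed successfully (True = completed)."""
    if not subtask_results:
        raise ValueError("need at least one subtask result")
    return 100.0 * sum(subtask_results) / len(subtask_results)


def mouse_path_length(points):
    """Total length, in pixels, of the cursor path through (x, y) points.

    Assumes the path is approximated by straight segments between
    successive sampled cursor positions.
    """
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# Hypothetical data: four subtasks (one failed) and a three-point cursor path.
print(percent_task_success([True, True, True, False]))
print(mouse_path_length([(0, 0), (3, 4), (3, 10)]))
```

Time on task and mouse clicks are simple counts and durations, so they need no separate computation beyond recording start and end times and click events.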

For percent task success rate, a greater value generally implies better performance, signifying participants’ skillfulness with the system. For time on task, mouse clicks, and mouse movements, a greater value usually indicates poorer performance (Khajouei et al., 2010; Koopman et al., 2011; Kim et al., 2012). As such, greater values may signify that the participant had difficulties while using the system. Geometric means were calculated for the performance measures with 95% confidence intervals (Cordes, 1993). The geometric mean was used because performance measures have a strong tendency to be positively skewed, and the geometric mean offers a more precise measure for sample sizes of less than twenty-five (Sauro and Lewis, 2010).
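As an illustration of the geometric mean with a confidence interval, the sketch below log-transforms the observations, builds a t-based interval on the log scale, and transforms the result back. The paper does not state how its intervals were computed, so the t-based interval is an assumption; the function name and sample times are hypothetical, and the caller supplies the critical t value from a table (2.306 for a two-sided 95% interval with 8 degrees of freedom).

```python
import math
import statistics


def geometric_mean_ci(values, t_crit):
    """Geometric mean and confidence interval for positive measurements.

    The interval is computed on the log scale and exponentiated back,
    so it is asymmetric around the geometric mean.
    t_crit is the two-sided critical t value for len(values) - 1 degrees
    of freedom (assumed to be looked up by the caller).
    """
    if len(values) < 2:
        raise ValueError("need at least two observations")
    if any(v <= 0 for v in values):
        raise ValueError("all observations must be positive")
    logs = [math.log(v) for v in values]
    mean_log = statistics.fmean(logs)
    se_log = statistics.stdev(logs) / math.sqrt(len(logs))
    half_width = t_crit * se_log
    return (
        math.exp(mean_log),
        math.exp(mean_log - half_width),
        math.exp(mean_log + half_width),
    )


# Hypothetical time-on-task values in seconds for nine participants.
times = [22, 31, 35, 38, 40, 44, 52, 61, 95]
gm, lower, upper = geometric_mean_ci(times, t_crit=2.306)
print(f"geometric mean {gm:.0f}s, 95% CI [{lower:.0f}s, {upper:.0f}s]")
```

Because a single slow participant inflates the arithmetic mean more than the geometric mean, the geometric mean sits closer to the typical time for skewed data such as these.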

Subtask analysis was conducted as part of the usability analysis to understand how participants interact with the system at a more granular level. The video-recorded sessions from Morae were reviewed individually, and the tasks were partitioned into smaller subtasks that were analyzed and compared across both participants and tasks to identify subtle usability challenges, such as errors and differences in workflow and navigation patterns, that would otherwise have gone unnoticed. Thematic analysis was employed to categorize and report our usability findings (Braun and Clarke, 2006). Some themes included in this study were adopted from a study by Walji et al. (2013) but were modified to include other themes for further granularity. Themes were then reviewed over multiple iterations with a physician champion and an informatics expert and then revised.

HEALTHINF 2016 - 9th International Conference on Health Informatics


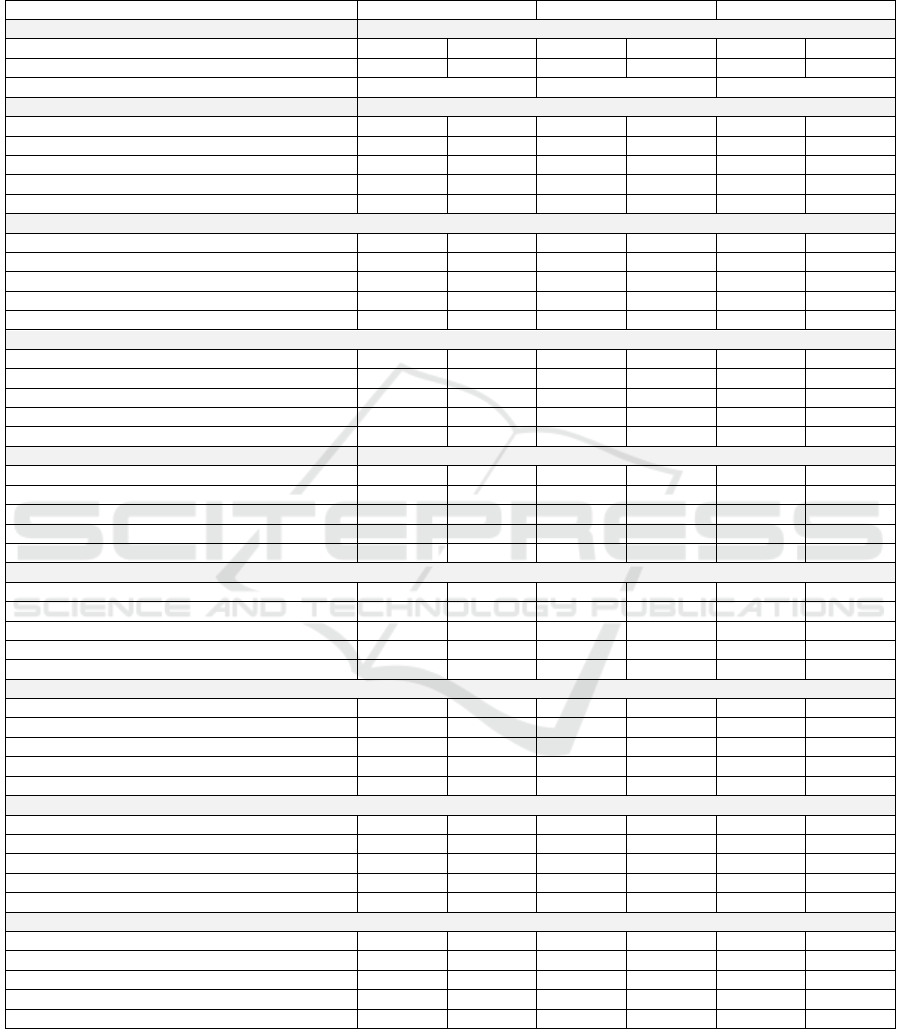

Table 1: Demographics of the 9 first year, 8 second year, and 12 third year resident physicians who participated in the usability test, presented as counts and percentages. Examined demographics include gender, age, race, and use of EHR. *One resident physician did not provide a birth date and was excluded from the age calculation.

| Demographics | Year 1, n (%) | Year 2*, n (%) | Year 3, n (%) |
|---|---|---|---|
| Sex | | | |
| Male | 4 (44%) | 5 (63%) | 4 (33%) |
| Female | 5 (56%) | 3 (38%) | 8 (67%) |
| Age (mean) | 30 years | 29 years | 30 years |
| Race/Ethnicity | | | |
| Black | 0 (0%) | 0 (0%) | 0 (0%) |
| Asian | 2 (22%) | 3 (38%) | 1 (8%) |
| White | 7 (78%) | 5 (63%) | 11 (92%) |
| American Indian/Alaskan Native | 0 (0%) | 0 (0%) | 0 (0%) |
| Pacific Islander | 0 (0%) | 0 (0%) | 0 (0%) |
| Experience other than current EHR | | | |
| None | 2 (22%) | 4 (50%) | 8 (67%) |
| Less than 3 months | 2 (22%) | 1 (13%) | 0 (0%) |
| 3 months – 6 months | 1 (11%) | 0 (0%) | 0 (0%) |
| 7 months – 1 year | 2 (22%) | 2 (25%) | 2 (17%) |
| Over 2 years | 2 (22%) | 1 (13%) | 2 (17%) |
| What is your skill level when using a computer? | | | |
| Do not use | 0 (0%) | 0 (0%) | 0 (0%) |
| Very Unskilled | 0 (0%) | 0 (0%) | 0 (0%) |
| Unskilled | 0 (0%) | 0 (0%) | 1 (8%) |
| Skilled | 9 (100%) | 7 (88%) | 9 (75%) |
| Very Skilled | 0 (0%) | 1 (13%) | 2 (17%) |
| I am confident when using this EHR | | | |
| Not at all | 0 (0%) | 0 (0%) | 0 (0%) |
| Slightly | 1 (11%) | 0 (0%) | 0 (0%) |
| Moderately | 5 (56%) | 2 (25%) | 5 (42%) |
| Very | 3 (33%) | 5 (63%) | 6 (50%) |
| Extremely | 0 (0%) | 1 (13%) | 1 (8%) |
| Satisfaction with documenting in this EHR | | | |
| Not satisfied | 0 (0%) | 0 (0%) | 0 (0%) |
| Slightly satisfied | 2 (22%) | 0 (0%) | 0 (0%) |
| Moderately satisfied | 4 (44%) | 3 (38%) | 8 (67%) |
| Very satisfied | 3 (33%) | 4 (50%) | 4 (33%) |
| Extremely satisfied | 0 (0%) | 1 (13%) | 0 (0%) |
| Satisfaction with creating orders in this EHR | | | |
| Not satisfied | 0 (0%) | 0 (0%) | 1 (8%) |
| Slightly satisfied | 2 (22%) | 0 (0%) | 0 (0%) |
| Moderately satisfied | 4 (44%) | 3 (38%) | 7 (58%) |
| Very satisfied | 3 (33%) | 4 (50%) | 4 (33%) |
| Extremely satisfied | 0 (0%) | 1 (13%) | 0 (0%) |
| Satisfaction with seeking information in this EHR | | | |
| Not satisfied | 0 (0%) | 0 (0%) | 0 (0%) |
| Slightly satisfied | 2 (22%) | 0 (0%) | 2 (17%) |
| Moderately satisfied | 5 (56%) | 3 (38%) | 8 (67%) |
| Very satisfied | 2 (22%) | 3 (38%) | 2 (17%) |
| Extremely satisfied | 0 (0%) | 2 (25%) | 0 (0%) |
| Satisfaction with reading notes in this EHR | | | |
| Not satisfied | 0 (0%) | 0 (0%) | 0 (0%) |
| Slightly satisfied | 0 (0%) | 0 (0%) | 0 (0%) |
| Moderately satisfied | 3 (33%) | 1 (13%) | 7 (58%) |
| Very satisfied | 6 (67%) | 5 (63%) | 3 (25%) |
| Extremely satisfied | 0 (0%) | 2 (25%) | 2 (17%) |


3 RESULTS

3.1 Participants

Table 1 shows the demographics of the primary care resident physicians who participated in the usability test, presented as counts and percentages. The demographics examined are sex, age, race, experience with an EHR other than the current EHR, and EHR satisfaction questions. Responses to the demographic question ‘Experience other than current EHR’ imply that residents are entering their residency with some EHR experience, which may reflect an increase in EHR training during medical school.

3.2 Performance Measures

Percent task success rates (Table 2): There was a 3 percentage point increase in physicians’ percent task success rate between year 1 and year 2 (Y1: 95%, CI [90%, 100%]; Y2: 98%, CI [90%, 100%]). There was a 3 percentage point decrease in physicians’ percent task success rate between year 2 and year 3 (Y2: 98%, CI [90%, 100%]; Y3: 95%, CI [90%, 100%]). From year 1 to year 3, there was no change in physicians’ percent task success rate.

Time-On-Task (TOT): There was a 5% decrease in physicians’ time on task between year 1 and year 2 (Y1: 38s, CI [28s, 52s]; Y2: 36s, CI [25s, 52s]). However, there was a 6% increase in physicians’ time on task between year 2 and year 3 (Y2: 36s, CI [25s, 52s]; Y3: 38s, CI [28s, 53s]). From year 1 to year 3, there was no change in physicians’ time on task.

Mouse Clicks: There was a 13% decrease in physicians’ mouse clicks between year 1 and year 2 (Y1: 8 clicks, CI [5 clicks, 13 clicks]; Y2: 7 clicks, CI [4 clicks, 12 clicks]). There was a 14% increase in physicians’ mouse clicks between year 2 and year 3 (Y2: 7 clicks, CI [4 clicks, 12 clicks]; Y3: 8 clicks, CI [6 clicks, 12 clicks]). From year 1 to year 3, there was no change in physicians’ mouse clicks.

Mouse Movement (Length of the Navigation Path to Complete a Given Task): There was a 7% decrease in physicians’ mouse movements from year 1 to year 2 (Y1: 8,480 pixels, CI [6,273 pixels, 11,462 pixels]; Y2: 7,856 pixels, CI [5,380 pixels, 11,471 pixels]). There was a 6% increase in physicians’ mouse movements from year 2 to year 3 (Y2: 7,856 pixels, CI [5,380 pixels, 11,471 pixels]; Y3: 8,319 pixels, CI [6,101 pixels, 11,343 pixels]). From year 1 to year 3, there was a 2% decrease in physicians’ mouse movements (Y1: 8,480 pixels, CI [6,273 pixels, 11,462 pixels]; Y3: 8,319 pixels, CI [6,101 pixels, 11,343 pixels]).
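The year-to-year percentages reported above are relative changes between the geometric means in Table 2. A minimal sketch of that arithmetic follows; the function and variable names are illustrative and not taken from the study's analysis. Note that the reported figures are rounded to whole percents, so the change in mouse clicks from year 1 to year 2, which is exactly 12.5%, appears as 13%.

```python
def percent_change(old, new):
    """Relative change from old to new, in percent (negative = decrease)."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return 100.0 * (new - old) / old


# Geometric means (Y1, Y2, Y3) as reported in Table 2.
reported = {
    "time on task (s)": (38, 36, 38),
    "mouse clicks": (8, 7, 8),
    "mouse movement (px)": (8480, 7856, 8319),
}

for measure, (y1, y2, y3) in reported.items():
    print(
        f"{measure}: Y1->Y2 {percent_change(y1, y2):+.1f}%, "
        f"Y2->Y3 {percent_change(y2, y3):+.1f}%, "
        f"Y1->Y3 {percent_change(y1, y3):+.1f}%"
    )
```

Task success is reported differently: because it is already a percentage, the paper gives its differences in percentage points (98% minus 95% is 3 points) rather than as a relative change.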

Table 2: Geometric mean values of performance measures compared between the physicians by clinical year: year 1 (Y1), year 2 (Y2), and year 3 (Y3). We observed similar trends for other performance measures.

| Performance Measures | Y1 | Y2 | Y3 |
|---|---|---|---|
| Task Success | 95% | 98% | 95% |
| Time on Task | 38s | 36s | 38s |
| Mouse Clicks | 8 | 7 | 8 |
| Mouse Movements (pixels) | 8,480 | 7,856 | 8,319 |

System Usability Scale: First year resident physicians rated the system’s usability at a mean of 51 (low marginal), second year resident physicians at a mean of 64 (high marginal), and third year resident physicians at a mean of 62 (high marginal). This result may indicate that the length of time resident physicians have used the system does not affect their acceptance of it.
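For readers unfamiliar with how a SUS score on the 0 to 100 scale is obtained, the sketch below applies the standard scoring from Brooke (1996) to one respondent's ten answers. In the study the scores were collected and computed by Morae; the function name and the example responses here are hypothetical.

```python
def sus_score(responses):
    """SUS score (0-100) from ten responses on a 1-5 agreement scale.

    Odd-numbered items are positively worded and contribute (response - 1);
    even-numbered items are negatively worded and contribute (5 - response).
    The summed contributions (0-40) are multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1
        else:
            total += 5 - response
    return total * 2.5


# Hypothetical respondent who answers neutrally (3) to every item.
print(sus_score([3] * 10))
```

A group mean such as those reported above is then the average of the individual respondents' scores.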

3.3 Usability Issues Identified by

Sub-task Analysis

Five themes emerged during subtask analysis: inconsistencies, user interface issues, structured data issues, ambiguous terminologies, and workarounds. Subtask analysis identified six common inconsistencies, eight common user interface issues, five usability issues related to ambiguous terminologies, six common structured data issues, and four common workaround usability issues across the resident physician groups. We did not include screenshots because of copyright laws.

The most common usability issues were encountered by physicians attempting to complete Task 7: Document new medication allergy, Task 13: Include diagnosis, Task 15: Place order for basic metabolic panel (BMP), Task 16: Change a Medication, and Task 17: Add a medication to your favorites list. Seven first year resident physicians successfully completed Task 7, one first year resident physician was not able to add the reaction ‘hives’ to the allergy documentation, and one first year resident physician was not able to complete Task 7. All second year resident physicians were able to complete Task 7. Ten third year resident physicians successfully completed Task 7, one third year resident physician was not able to add the reaction ‘hives’ to the allergy documentation, and one third year resident physician was not able to complete Task 7.

When completing Task 13: Include diagnosis, some resident physicians were unclear on how to import a list of diagnoses from the problem list into the visit note. Two first year resident physicians and four third year resident physicians were not aware that they should highlight all the diagnoses before clicking ‘Include’ to bring the entire list of diagnoses into the visit note. One third year resident physician did not move ‘hypertension’ from the problem list to the current diagnosis list and instead re-added ‘hypertension’ as a new problem. Three first year resident physicians, two second year resident physicians, and seven third year resident physicians did not use the IMO search field to shorten the steps needed to add a diagnosis to the note.

When completing Task 15, four first year resident

physicians, three second year resident physicians, and

four third year resident physicians did not place the

two Basic metabolic panel (BMP) orders

concurrently.

When completing Task 16: Change a Medication, resident physicians had to choose from the right-click menu options ‘Renew’, ‘Cancel/DC’, or ‘Cancel/Reorder.’ Physicians were able to complete Task 16 by using the option ‘Modify without resending’ and changing the number of tablets the patient needed to take. To complete Task 16, three first year resident physicians used the ‘Cancel/DC’ option, two used the ‘Cancel/Reorder’ option, three used the ‘Modify without resending’ option, and one used the ‘Complete’ option. Six second year resident physicians used the ‘Cancel/Reorder’ option and two used the ‘Modify without resending’ option. Five third year resident physicians used the ‘Cancel/Reorder’ option, four used the ‘Modify without resending’ option, and three used the ‘Reconcile’ option.
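The Task 16 counts above can be cross-tabulated by clinical year to compare how each group approached the task. The sketch below uses only the counts reported in the text; the variable and function names are illustrative, and the percentage shares are derived here rather than reported in the paper.

```python
# Number of participants per clinical year, as reported in the study.
GROUP_SIZES = {"Y1": 9, "Y2": 8, "Y3": 12}

# Menu option used to complete Task 16, by clinical year.
TASK16_OPTIONS = {
    "Y1": {
        "Cancel/DC": 3,
        "Cancel/Reorder": 2,
        "Modify without resending": 3,
        "Complete": 1,
    },
    "Y2": {"Cancel/Reorder": 6, "Modify without resending": 2},
    "Y3": {
        "Cancel/Reorder": 5,
        "Modify without resending": 4,
        "Reconcile": 3,
    },
}


def option_shares(counts):
    """Share of a group, in percent, that used each menu option."""
    total = sum(counts.values())
    return {option: 100.0 * n / total for option, n in counts.items()}


for year, counts in TASK16_OPTIONS.items():
    # Every participant in the group is accounted for by exactly one option.
    assert sum(counts.values()) == GROUP_SIZES[year]
    shares = ", ".join(
        f"{option}: {share:.0f}%" for option, share in option_shares(counts).items()
    )
    print(f"{year}: {shares}")
```

Laid out this way, the counts show that no single menu option was used by all participants in any group, which is consistent with the inconsistency and workaround themes described earlier.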

When completing Task 17: Add a medication to your favorites list, resident physicians were asked to add a medication to a list of their frequently used medications. Five first year resident physicians, three second year resident physicians, and five third year resident physicians were not able to complete Task 17. This functionality was not intuitive because the feature was not accessible directly from the medication list, which defeats its purpose and reduces the likelihood that physicians will use it.

4 DISCUSSION

While the use of EHRs has many advantages, many issues have surfaced because of usability design flaws. In this study and the previous longitudinal study by Clarke et al. (2015), there was no difference in physicians’ performance measures, whether we compared expert and novice physicians across two rounds or physicians by clinical year. More experienced physician users encountered the same usability issues as less experienced physician users. Both studies demonstrate that longer EHR use is not indicative of a physician being an expert at using the EHR.

Previous studies have shown that physicians with varying lengths of EHR experience have comparable success when completing tasks in an EHR. In Lewis et al.’s (2010) study, which compared the efficiency of novices with predicted skilled use of an EMR with a touchscreen interface, novice EMR users were able to perform at a skilled level some of the time within the first hour of system use. Kim et al.’s study, which investigated usability gaps between novice and expert nurses using an emergency department information system, found no statistical difference between the two nurse groups’ geometric mean values for both scenarios (Kim et al., 2012). In Kjeldskov et al.’s study, which identified the nature of usability issues that novice and expert users experience and whether these issues disappear over time, there was no statistically significant difference between novice and expert participants in fully completed tasks based on a chi-square test (p = 0.0833). These results are similar to ours because residents in all three years had comparable task success rates. One of the primary goals of the EHR is to allow new users to perform tasks efficiently and effectively, so it is important for new EHR physician users to become experts in the shortest amount of time.

Although these studies show no difference in effectiveness, some demonstrate a difference in efficiency among physicians with longer EHR experience. Experts showed higher efficiency than novice participants in the studies by Lewis et al. (2010) and Kim et al. (2012). Although the difference was not significant, expert participants in Kjeldskov et al.’s study were faster at simple data entry tasks. Similar to Kjeldskov et al.’s study, physicians in our study did not show differences in time on task regardless of clinical year. These results suggest that new users may complete tasks as successfully as experienced users.

This study was limited to family and internal medicine physicians and tested the usability of only one EHR at one healthcare institution, which suggests that the results may not be generalizable to other healthcare institutions and other specialties. Similar themes were found in the study by Walji et al. (2013) and in this study; nevertheless, future research is needed to further confirm generalizability. The study also included only a small sample of clinical tasks performed by physicians, which may not be representative of functions that may be accessed in other clinical scenarios. Although there are some methodological limitations to this study, the directions given to the physicians were unambiguous, which allowed participants to understand what was required of them.

Our study identified varying usability issues for EHR users with different experience levels; addressing these issues may improve physicians’ performance when using an EHR. Although most physicians reported a high level of computer skills and EHR use, neither the quantitative nor the qualitative results showed a substantial difference in usability measures. These results suggest that length of exposure to an EHR may not equate to physicians’ proficiency when using an EHR. Future studies should include a larger sample of resident physicians and expand the scope to specialist physicians for transferability of results.

REFERENCES

1998. ISO 9241-11: Ergonomic Requirements for Office

Work with Visual Display Terminals (VDTs): Part 11:

Guidance on Usability, International Organization for

Standardization.

2011a. Systems and software engineering -- Systems and

software Quality Requirements and Evaluation

(SQuaRE) -- System and software quality models. 1

ed.: International Organization for Standardization.

2011b. U.S. EMR Adoption Model Trends [Online]. Chicago, IL: Health Information Management Systems Society Analytics. Available: http://app.himssanalytics.org/hc_providers/emr_adoption.asp (Archived by WebCite® at http://www.webcitation.org/6cHMM9kmb) [Accessed 02/21 2014].

2012. Meaningful Use [Online]. Baltimore, MD. Available: http://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/Meaningful_Use.html (Archived by WebCite® at http://www.webcitation.org/6cHEh1HVi).

2013. University of Missouri Health Care Achieves Highest Level of Electronic Medical Record Adoption [Online]. Columbia, MO. Available: http://www.muhealth.org/body.cfm?id=103&action=detail&ref=311 (Archived by WebCite® at http://www.webcitation.org/6cHLgcLsU).

Braun, V. & Clarke, V. 2006. Using thematic analysis in

psychology. Qualitative research in psychology, 3, 77-

101.

Brooke, J. 1996. SUS-A quick and dirty usability scale.

Usability evaluation in industry, 189, 194.

Carr, D. M. 2004. A team approach to EHR implementation

and maintenance. Nurs Manage, 35 Suppl 5, 15-6, 24.

Chaudhry, B., Wang, J., Wu, S., Maglione, M., Mojica, W.,

Roth, E., Morton, S. C. & Shekelle, P. G. 2006.

Systematic review: impact of health information

technology on quality, efficiency, and costs of medical

care. Ann Intern Med, 144, 742-52.

Clarke, M. A., Belden, J. L. & Kim, M. S. 2015. What

Learnability Issues Do Primary Care Physicians

Experience When Using CPOE? In: KUROSU, M.

(ed.) Human-Computer Interaction: Users and

Contexts. Springer International Publishing.

Clarke, M. A., Steege, L. M., Moore, J. L., Belden, J. L.,

Koopman, R. J. & Kim, M. S. 2013. Addressing human

computer interaction issues of electronic health record

in clinical encounters. In: MARCUS, A. (ed.)

Proceedings of the Second international conference on

Design, User Experience, and Usability: health,

learning, playing, cultural, and cross-cultural user

experience - Volume Part II. Las Vegas, NV: Springer-

Verlag.

Cordes, R. E. 1993. The effects of running fewer subjects on time-on-task measures. International Journal of Human-Computer Interaction, 5, 393-403.

Elliott, G. J., Jones, E. & Barker, P. 2002. A grounded

theory approach to modelling learnability of

hypermedia authoring tools. Interacting with

Computers, 14, 547-574.

Goldzweig, C. L., Towfigh, A., Maglione, M. & Shekelle,

P. G. 2009. Costs and benefits of health information

technology: New trends from the literature. Health

Affairs, 28, w282-w293.

Grabenbauer, L., Fraser, R., Mcclay, J., Woelfl, N.,

Thompson, C. B., Cambell, J. & Windle, J. 2011.

Adoption of electronic health records: a qualitative

study of academic and private physicians and health

administrators. Appl Clin Inform, 2, 165-76.

Hsiao, C.-J. & Hing, E. 2012. Use and characteristics of

electronic health record systems among office-based

physician practices: United States, 2001–2012. NCHS

Data Brief, 1-8.

Khajouei, R., Peek, N., Wierenga, P. C., Kersten, M. J. &

Jaspers, M. W. 2010. Effect of predefined order sets and


usability problems on efficiency of computerized

medication ordering. Int J Med Inform, 79, 690-8.

Kim, M. S., Shapiro, J. S., Genes, N., Aguilar, M. V.,

Mohrer, D., Baumlin, K. & Belden, J. L. 2012. A pilot

study on usability analysis of emergency department

information system by nurses. Applied Clinical

Informatics, 3, 135-153.

Koopman, R. J., Kochendorfer, K. M., Moore, J. L., Mehr,

D. R., Wakefield, D. S., Yadamsuren, B., Coberly, J. S.,

Kruse, R. L., Wakefield, B. J. & Belden, J. L. 2011. A

diabetes dashboard and physician efficiency and

accuracy in accessing data needed for high-quality

diabetes care. Ann Fam Med, 9, 398-405.

Kushniruk, A. W., Triola, M. M., Borycki, E. M., Stein, B.

& Kannry, J. L. 2005. Technology induced error and

usability: the relationship between usability problems

and prescription errors when using a handheld

application. Int J Med Inform, 74, 519-26.

Lewis, Z. L., Douglas, G. P., Monaco, V. & Crowley, R. S.

2010. Touchscreen task efficiency and learnability in an

electronic medical record at the point-of-care. Stud

Health Technol Inform, 160, 101-5.

Li, A. C., Kannry, J. L., Kushniruk, A., Chrimes, D.,

McGinn, T. G., Edonyabo, D. & Mann, D. M. 2012.

Integrating usability testing and think-aloud protocol

analysis with "near-live" clinical simulations in

evaluating clinical decision support. Int J Med Inform,

81, 761-72.

Lin, H. X., Choong, Y.-Y. & Salvendy, G. 1997. A

proposed index of usability: a method for comparing

the relative usability of different software systems.

Behaviour & Information Technology, 16, 267-277.

Lorenzi, N. M., Kouroubali, A., Detmer, D. E. &

Bloomrosen, M. 2009. How to successfully select and

implement electronic health records (EHR) in small

ambulatory practice settings. BMC Medical Informatics

and Decision Making, 9.

Love, J. S., Wright, A., Simon, S. R., Jenter, C. A., Soran,

C. S., Volk, L. A., Bates, D. W. & Poon, E. G. 2012.

Are physicians' perceptions of healthcare quality and

practice satisfaction affected by errors associated with

electronic health record use? J Am Med Inform Assoc,

19, 610-4.

McLane, S. & Turley, J. P. 2012. One Size Does Not Fit All:

EHR Clinical Summary Design Requirements for

Nurses. Nurs Inform, 2012, 283.

Menachemi, N. & Collum, T. H. 2011. Benefits and

drawbacks of electronic health record systems. Risk

Manag Healthc Policy, 4, 47-55.

Miller, R. H., West, C., Brown, T. M., Sim, I. & Ganchoff,

C. 2005. The Value Of Electronic Health Records In

Solo Or Small Group Practices. Health Affairs, 24,

1127-1137.

Nielsen, J. 1993. Usability engineering, Boston, Academic

Press.

Sauro, J. & Lewis, J. R. 2010. Average task times in

usability tests: what to report? Proceedings of the

SIGCHI Conference on Human Factors in Computing

Systems. Atlanta, Georgia, USA: ACM.

Sheehan, B., Kaufman, D., Stetson, P. & Currie, L. M.

2009. Cognitive analysis of decision support for

antibiotic prescribing at the point of ordering in a

neonatal intensive care unit. AMIA Annu Symp Proc,

2009, 584-8.

Shekelle, P. G., Morton, S. C. & Keeler, E. B. 2006. Costs

and benefits of health information technology. Evid Rep

Technol Assess (Full Rep), 1-71.

Terry, A. L., Thorpe, C. F., Giles, G., Brown, J. B., Harris,

S. B., Reid, G. J., Thind, A. & Stewart, M. 2008.

Implementing electronic health records: Key factors in

primary care. Can Fam Physician, 54, 730-6.

Tullis, T. & Albert, W. 2008. Measuring the User

Experience: Collecting, Analyzing, and Presenting

Usability Metrics, Morgan Kaufmann Publishers Inc.

Viitanen, J., Hypponen, H., Laaveri, T., Vanska, J.,

Reponen, J. & Winblad, I. 2011. National questionnaire

study on clinical ICT systems proofs: physicians suffer

from poor usability. Int J Med Inform, 80, 708-25.

Walji, M. F., Kalenderian, E., Tran, D., Kookal, K. K.,

Nguyen, V., Tokede, O., White, J. M., Vaderhobli, R.,

Ramoni, R., Stark, P. C., Kimmes, N. S., Schoonheim-

Klein, M. E. & Patel, V. L. 2013. Detection and

characterization of usability problems in structured data

entry interfaces in dentistry. Int J Med Inform, 82, 128-

38.

Whiteside, J., Jones, S., Levy, P. S. & Wixon, D. 1985. User

performance with command, menu, and iconic

interfaces. Proceedings of the SIGCHI Conference on

Human Factors in Computing Systems. San Francisco,

California, USA: ACM.

Whittaker, A. A., Aufdenkamp, M. & Tinley, S. 2009.

Barriers and facilitators to electronic documentation in

a rural hospital. Journal of Nursing Scholarship, 41,

293-300.

Yoon-Flannery, K., Zandieh, S. O., Kuperman, G. J.,

Langsam, D. J., Hyman, D. & Kaushal, R. 2008. A

qualitative analysis of an electronic health record

(EHR) implementation in an academic ambulatory

setting. Inform Prim Care, 16,

277-84.
