A Multi-context Framework for Modeling an Agent-based Recommender System

Amel Ben Othmane¹, Andrea Tettamanzi², Serena Villata³, Nhan Le Thanh¹ and Michel Buffa¹

¹ WIMMICS Research Team, Inria and I3S Laboratory, Sophia Antipolis, France
² Univ. Nice Sophia Antipolis, I3S, UMR 7271, Sophia Antipolis, France
³ CNRS, I3S Laboratory, Sophia Antipolis, France

Keywords: Multi-Agent Systems, BDI Architecture, Multi-Context Systems, Possibility Theory, Ontology, Recommender Systems.

Abstract: In this paper, we propose a multi-agent recommender system based on the Belief-Desire-Intention (BDI) model applied to multi-context systems. First, we extend the BDI model with additional contexts to deal with sociality and information uncertainty. Second, we propose an ontological representation of planning and intention contexts in order to reason about plans and intentions. Moreover, we show a simple real-world scenario in healthcare in order to illustrate the overall reasoning process of our model.

1 INTRODUCTION

Human activities take place in particular locations at specific times. The increasing use of wearable devices enables the collection of information about these activities from a diverse population varying in physical, cultural, and socioeconomic characteristics. In general, the places where people spend time, regularly or occasionally, reflect their lifestyle, which is strongly associated with their socioeconomic features. This wealth of information about people, their relationships, and their activities is valuable for personalizing healthcare in a way that is sensitive to the medical, social, and personal characteristics of individuals. Moreover, human decision-making relies not only on logical elements but also on emotional components that are typically extra-logical. As a result, behavior can also be explained by other approaches, which additionally consider emotions, intentions, beliefs, motives, cultural and social constraints, impulsive actions, and even the simple willingness to try. Hence, building recommender systems that take user behavior into account requires a step toward personalization.

To the best of our knowledge, there are no recommender systems that combine all these features at the same time. The following motivating example has driven this research. Bob, a 40-year-old adult, wants to get back to regular physical activity (pa). Bob believes that regular physical activity reduces the risk of developing non-insulin-dependent diabetes mellitus (rd). The mechanisms responsible for this are weight reduction (wr), increased insulin sensitivity, and improved glucose metabolism. Due to his busy schedule (bs), Bob is available only on weekends (av). Hence, he would be happy if he could do his exercises only on weekends (w). Bob also prefers not to change his eating habits (eh). Besides all the aforementioned preferences, Bob should take into account his medical concerns (c) and refer to a healthcare provider for monitoring. This scenario exposes the following problem: how can we help Bob select the best plan to achieve his goal based on his current preferences and restrictions? This problem raises different challenges. First, the proposed solution should take Bob's preferences and restrictions (e.g., medical and physical concerns) into account in the recommendation process. Second, information about the environment in which Bob acts and about the people who might be in a relationship with him may have an impact on his decision-making process. Third, the system should be able to keep a trace of Bob's activities in order to adapt the recommendation to his progress. Finally, the information or data about Bob's activities is distributed geographically and temporally.

In order to address these challenges, multi-agent systems stand as a promising way to understand, manage, and use distributed, large-scale, dynamic, and heterogeneous information. The idea is to develop recommender systems that help users confronted with situations in which they have too many options to choose from, with the aim of helping them explore a number of different possibilities and filter them according to their preferences. Based on this real-world application scenario, we propose in this paper a multi-agent-based recommender system where agents are described using the BDI model as a multi-context system. The system's goal is to recommend a list of activities according to user preferences. We also propose an extension of the BDI model to deal with sociality and uncertainty in dynamic environments.

Othmane, A., Tettamanzi, A., Villata, S., Thanh, N. and Buffa, M.
A Multi-context Framework for Modeling an Agent-based Recommender System.
DOI: 10.5220/0005686500310041
In Proceedings of the 8th International Conference on Agents and Artificial Intelligence (ICAART 2016) - Volume 2, pages 31-41
ISBN: 978-989-758-172-4
Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The originality of our proposal with respect to existing works is the combination of an extended possibilistic BDI approach with multi-context systems. The resulting framework is then used as a healthcare recommender system.

There are several advantages to such a combination. First, the use of a multi-context architecture allows us to use different syntaxes in different contexts, e.g., an ontology to represent and reason about plans and intentions. Besides, we believe that extending the classical BDI model with goal and social contexts better reflects human behavior. The proposed approach deals with goal-belief consistency and also proposes a belief revision process. The idea of extending the BDI model with social contexts is not novel. Different works explored trust or reputation (Koster et al., 2012; Pinyol et al., 2012), while in our approach we consider trust measures between two agents only if they are similar.

The rest of this paper is organized as follows. Section 2 provides an overview of the related work. In Section 3, we summarize the main concepts on which this work is based. Section 4 introduces the multi-context BDI agent framework. In order to show how the model works, we present in Section 5 a real-world scenario in the healthcare domain. Conclusions end the paper.

2 RELATED WORK

Recommender systems (RS) are information-filtering systems that help users deal with the problem of information overload by recommending only relevant items. (Bobadilla et al., 2013) undertook a literature review and classification of recommender systems. They concluded that the reviewed approaches still require further improvements to make recommendation methods more effective in a broader range of applications, for example to exploit information coming from various sensors and devices on the Internet of Things, and to acquire and integrate trends related to the habits, consumption, and tastes of individual users into the recommendation process. The main recommendation algorithms can be divided into four categories: content-based (CB), collaborative filtering (CF), knowledge-based (KB), and hybrid recommendation (HR). The CB method recommends objects that are similar to those the user preferred in the past. However, this method tends to produce recommendations with a limited degree of novelty (serendipity). CF has been the most successful recommendation technology. In CF, recommendations are made under the assumption that users who share similar preferences choose similar items. However, the performance of CF is significantly limited by data sparsity. Knowledge-based recommender approaches (Trewin, 2000) appear more promising for tackling these challenges, since they exploit explicit user requirements and specific domain knowledge. There are two approaches to knowledge-based recommendation: case-based (Bridge et al., 2005) and constraint-based recommendation (Felfernig et al., 2015). Case-based recommenders determine recommendations on the basis of similarity metrics, while constraint-based recommenders exploit a predefined knowledge base that contains explicit rules relating user requirements to item features. Finally, HR is currently the most popular approach. As its name suggests, it combines at least two recommendation algorithms to determine a recommendation. A new trend of recommender systems, called context-aware RS, appeared with the abundance of smart devices such as smartphones. Context-aware recommender systems (Adomavicius and Tuzhilin, 2011) focus on additional contextual information, such as time, location, and wireless sensor networks (Gavalas and Kenteris, 2011). However, these traditional recommendation approaches are not well suited for recommending complex products and services.

Despite all these advances, the current generation of recommender systems still requires further improvements to make recommendation methods more effective and applicable to a broader range of real-life applications, including recommending holiday plans (flights, places, and accommodation), certain types of financial services to investors, and workout plans for healthcare purposes. These improvements include better methods for representing user behavior and the information about the items to be recommended, more advanced recommendation modeling methods, the incorporation of contextual information into the recommendation process, the use of multi-criteria ratings, and the development of less intrusive and more flexible recommendation methods that also reason beyond user preferences.

A solution to this research problem can be provided by recommender agents, which are agent-based recommender systems that take users' preferences into account to generate relevant recommendations. Agents are also well suited for autonomous applications operating in a dynamic environment, and building systems based on agents provides a more natural way to simulate complex real-world situations (Jennings, 2000). A complete taxonomy of this kind of recommender system can be found in (Adomavicius and Tuzhilin, 2005). Recommender agents are widely used in the tourism domain (Casali et al., 2011; Batet et al., 2012; Gavalas et al., 2014), in healthcare applications (Amir et al., 2013), and in traffic applications aiming at improving travel efficiency and mobility (Chen and Cheng, 2010).

3 BACKGROUND

In this section, we summarize the main insights and notions on which the present contribution is based.

An agent in a BDI architecture is defined by its beliefs, desires, and intentions. Beliefs encode the agent's understanding of the environment, desires are those states of affairs that an agent would like to accomplish, and intentions are those desires that the agent has chosen to act upon. Many approaches have tried to formalize such mental attitudes (e.g., (Cohen and Levesque, 1990), (Rao et al., 1995), (Wooldridge et al., 2000) and (Singh, 1998)). However, all these works concentrated on the human decision-making process as a single approach without considering social influences, and they did not take the gradual nature of beliefs, desires, and intentions into account. Incorporating uncertainty and different degrees of attitudes helps the agent in the decision-making process. In order to represent and reason about uncertainty and graded notions of beliefs, desires, and intentions, we follow the approach proposed by (da Costa Pereira and Tettamanzi, 2010), where uncertainty reasoning is dealt with by possibility theory. Possibility theory is an uncertainty theory dedicated to handling incomplete information. It was introduced by (Negoita et al., 1978) as an extension of fuzzy sets, which are sets whose elements have degrees of membership in [0, 1]. Possibility theory differs from probability theory by the use of dual set functions (possibility and necessity measures) instead of only one. A possibility distribution assigns to each element ω in a set Ω of interpretations a degree of possibility π(ω) ∈ [0, 1] of being the right description of a state of affairs. It represents a flexible restriction on what the actual state is, with the following conventions:

• π(ω) = 0 means that state ω is rejected as impossible;
• π(ω) = 1 means that state ω is totally possible (plausible).

While we chose to adopt a possibilistic BDI model to include gradual mental attitudes, unlike (da Costa Pereira and Tettamanzi, 2010), we use multi-context systems (MCS) (Parsons et al., 2002) to represent our BDI agents. According to this approach, a BDI model is defined as a group of interconnected units $\langle \{C_i\}_{i \in I}, \Delta_{br} \rangle$, where:

• For each $i \in I$, $C_i = \langle L_i, A_i, \Delta_i \rangle$ is an axiomatic formal system, where $L_i$, $A_i$, and $\Delta_i$ are the language, axioms, and inference rules, respectively. They define the logic for context $C_i$, whose basic behavior is constrained by the axioms.
• $\Delta_{br}$ is a set of bridge rules, i.e., rules of inference that relate formulas in different units.

The way we use these components to model BDI agents is to have separate units for beliefs B, desires D, and intentions I, each with its own logic. The theories in each unit encode the beliefs, desires, and intentions of specific agents, and the bridge rules ($\Delta_{br}$) encode the relationships between beliefs, desires, and intentions. We also have two functional units, C and P, which handle communication among agents and allow the agent to choose plans that satisfy users' desires. To summarize, using the multi-context approach, a BDI model is defined as follows:

$$Ag = (\{BC, DC, IC, PC, CC\}, \Delta_{br})$$

where BC, DC, and IC represent the Belief Context, the Desire Context, and the Intention Context, respectively. PC and CC are two functional contexts corresponding to the Planning and Communication Contexts. The use of MCS offers several advantages when modeling agent architectures. It gives a neat, modular way of defining agents, which, from a software perspective, supports modular architectures and encapsulation.

4 THE MULTI-CONTEXT BDI FRAMEWORK

The BDI agent architecture we are proposing in this

paper extends Rao and Georgeffs well-known BDI ar-

chitecture (Rao et al., 1995). We define a BDI agent

as a multi-context system being inspired by the work

of (Parsons et al., 2002). Following this approach, our

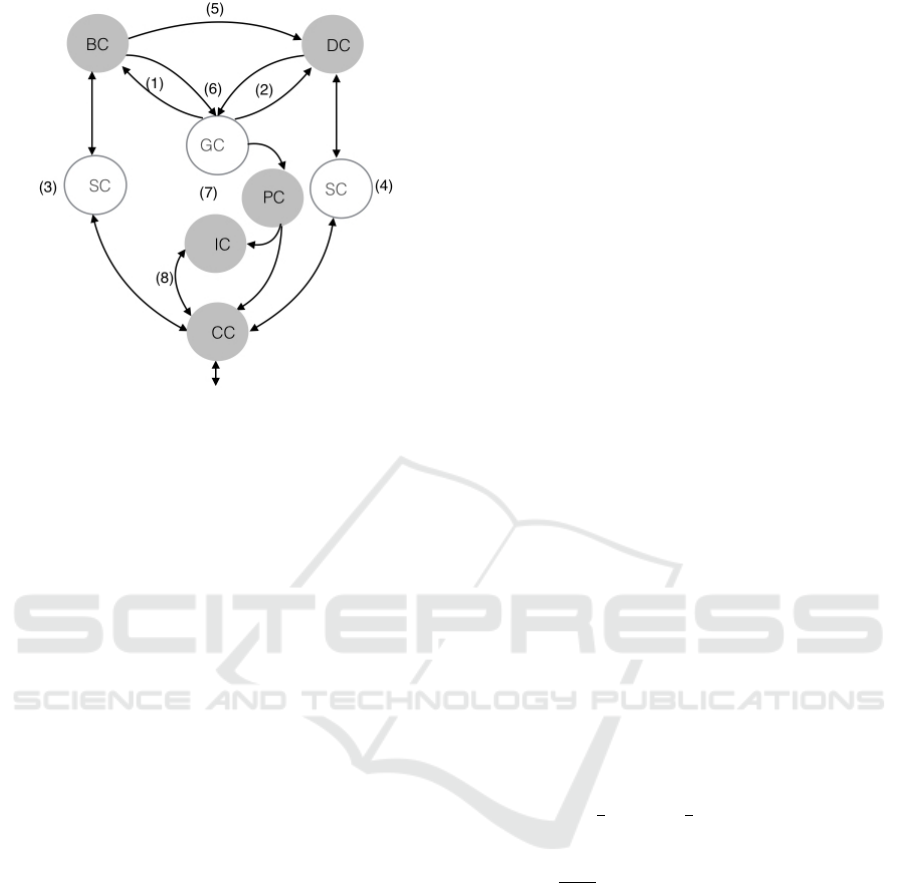

BDI agent model visualized in Figure 1 is defined as

follows:

A Multi-context Framework for Modeling an Agent-based Recommender System

33

Figure 1: Extended Multi-context BDI Agent Model.

Ag = ({BC, DC, GC, SC, PC, IC,CC}, ∆

br

)

where GC and SC represent respectively the Goal

Context and the Social Context.

In order to reason about beliefs, desires, goals, and social contexts, we follow the approach developed by (da Costa Pereira and Tettamanzi, 2010; da Costa Pereira and Tettamanzi, 2014), who adopt a classical propositional language for representation and possibility theory to deal with uncertainty. Let $\mathcal{A}$ be a finite set of atomic propositions and $\mathcal{L}$ be the propositional language such that $\mathcal{A} \cup \{\top, \bot\} \subseteq \mathcal{L}$ and, for all $\varphi, \psi \in \mathcal{L}$, $\neg\varphi \in \mathcal{L}$, $\varphi \vee \psi \in \mathcal{L}$, and $\varphi \wedge \psi \in \mathcal{L}$. These propositions may contain temporal elements, whose treatment is left as future work. As in (da Costa Pereira and Tettamanzi, 2010), $\mathcal{L}$ is extended, and we denote by $\Omega = \{0, 1\}^{\mathcal{A}}$ the set of all interpretations on $\mathcal{A}$. An interpretation $\omega \in \Omega$ is a function $\omega : \mathcal{A} \to \{0, 1\}$ assigning a truth value $p^{\omega}$ to every atomic proposition $p \in \mathcal{A}$ and, by extension, a truth value $\varphi^{\omega}$ to every formula $\varphi \in \mathcal{L}$. $[\varphi]$ denotes the set of all interpretations satisfying $\varphi$, i.e., $[\varphi] = \{\omega \in \Omega : \omega \models \varphi\}$.
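To make these definitions concrete, the following Python sketch (ours, for illustration only) enumerates Ω = {0,1}^A for two atoms and computes [φ]. Representing formulas as Python predicates over a dictionary of truth values is an assumption of the sketch, and the atoms pa and rd are borrowed from Bob's scenario in Section 1.

```python
from itertools import product
from typing import Callable, Dict, List

Interpretation = Dict[str, bool]
Formula = Callable[[Interpretation], bool]

def interpretations(atoms: List[str]) -> List[Interpretation]:
    """Enumerate Omega = {0, 1}^A: every truth assignment to the atoms."""
    return [dict(zip(atoms, values))
            for values in product([False, True], repeat=len(atoms))]

def models(phi: Formula, omega: List[Interpretation]) -> List[Interpretation]:
    """[phi]: the interpretations of omega that satisfy phi."""
    return [w for w in omega if phi(w)]

# Atoms borrowed from Bob's scenario; formulas are encoded as Python predicates.
atoms = ["pa", "rd"]
omega = interpretations(atoms)
pa_implies_rd = lambda w: (not w["pa"]) or w["rd"]

print(len(omega))                         # 4
print(len(models(pa_implies_rd, omega)))  # 3: only pa=True, rd=False falsifies it
```

Enumerating Ω is exponential in the number of atoms, which is fine for the handful of atoms used in the examples of this paper but would not scale to large vocabularies.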

In the planning and intention contexts, we propose an ontological representation of plans and intentions in order to offer agents a computer-interpretable description of the services they offer and of the information they have access to (workout plans in our case). In the following subsections, we outline the theories defined for each context in order to complete the specification of our multi-context agent model.

4.1 Belief Context

4.1.1 The BC Language and Semantics

In order to represent beliefs, we use the classical propositional language with additional connectives, following (da Costa Pereira and Tettamanzi, 2010). We also introduce a fuzzy operator B over this logic to represent agents' beliefs. The belief of an agent is then represented as a possibility distribution π. A possibility distribution π can represent a complete preorder on the set of possible interpretations ω ∈ Ω. This is why, intuitively, at a semantic level, a possibility distribution can represent the available knowledge (or beliefs) of an agent. When representing knowledge, π(ω) acts as a restriction on possible interpretations and represents the degree of compatibility of interpretation ω with the available knowledge about the real world. As in (da Costa Pereira and Tettamanzi, 2010), a graded belief is regarded as a necessity degree induced by a normalized possibility distribution π on the possible worlds ω. The degree to which an agent believes that a formula φ is true is given by:

$$B(\varphi) = N([\varphi]) = 1 - \max_{\omega \not\models \varphi} \{\pi(\omega)\} \qquad (1)$$
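A minimal Python sketch of Equation (1), assuming a hand-written normalized distribution π over the interpretations of the atoms (pa, rd), encoded as tuples of booleans. The degrees are hypothetical and only serve to show the computation.

```python
def belief(phi, pi):
    """Equation (1): B(phi) = 1 - max{pi(w) : w does not satisfy phi}.

    pi maps each interpretation to its possibility degree; phi is a predicate.
    The max over an empty set is taken as 0, so a tautology is fully believed.
    """
    return 1.0 - max((p for w, p in pi.items() if not phi(w)), default=0.0)

# Hypothetical normalized distribution over (pa, rd): some world has degree 1.
pi = {
    (True, True): 1.0,    # exercising and reducing the risk: fully possible
    (False, False): 0.7,
    (False, True): 0.4,
    (True, False): 0.2,
}
rd = lambda w: w[1]
pa_implies_rd = lambda w: (not w[0]) or w[1]

print(belief(rd, pi))             # ≈ 0.3 = 1 - max(0.7, 0.2)
print(belief(pa_implies_rd, pi))  # ≈ 0.8 = 1 - 0.2
```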

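An agent's beliefs can change over time because new information arrives from the environment or from other agents. A belief change operator is proposed in (da Costa Pereira and Tettamanzi, 2010), which allows the possibility distribution π to be updated according to new trusted information. The possibility distribution $\pi'$, which induces the new belief set $B'$ after receiving information φ, is computed from the possibility distribution π with respect to the previous belief set B ($B' = B *_{\tau} \varphi$, $\pi' = \pi *_{\tau} \varphi$) as follows: for every interpretation ω,

$$\pi'(\omega) = \begin{cases} \dfrac{\pi(\omega)}{\Pi([\varphi])} & \text{if } \omega \models \varphi \text{ and } B(\neg\varphi) < 1; \\ 1 & \text{if } \omega \models \varphi \text{ and } B(\neg\varphi) = 1; \\ \min\{\pi(\omega), 1 - \tau\} & \text{if } \omega \not\models \varphi. \end{cases} \qquad (2)$$

where τ is the trust degree toward the source of the incoming information φ, and $\Pi([\varphi]) = \max_{\omega \models \varphi} \pi(\omega)$ is the possibility of φ. Since $B(\neg\varphi) = 1 - \Pi([\varphi])$, the condition $B(\neg\varphi) < 1$ guarantees that the division in the first case is well defined.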
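The revision operator of Equation (2) can be transcribed directly, as in the Python sketch below. It assumes that π is normalized and reuses the tuple encoding of interpretations over (pa, rd); the distribution and the trust degree τ = 0.6 are hypothetical.

```python
def possibility(phi, pi):
    """Pi([phi]) = max{pi(w) : w satisfies phi}; 0 when phi has no model."""
    return max((p for w, p in pi.items() if phi(w)), default=0.0)

def belief(phi, pi):
    """Equation (1), written as B(phi) = 1 - Pi([not phi])."""
    return 1.0 - possibility(lambda w: not phi(w), pi)

def revise(pi, phi, tau):
    """Equation (2): the revised distribution pi *_tau phi."""
    b_not_phi = belief(lambda w: not phi(w), pi)
    pi_phi = possibility(phi, pi)
    revised = {}
    for w, p in pi.items():
        if phi(w) and b_not_phi < 1:
            revised[w] = p / pi_phi         # rescale the phi-worlds (pi_phi > 0 here)
        elif phi(w):
            revised[w] = 1.0                # phi was fully disbelieved: its worlds become fully possible
        else:
            revised[w] = min(p, 1.0 - tau)  # cap the other worlds at 1 - tau
    return revised

# Hypothetical beliefs of Bob's agent before learning that Bob exercises (pa).
pi = {(True, True): 1.0, (False, False): 0.7, (False, True): 0.4, (True, False): 0.2}
pa = lambda w: w[0]

print(belief(pa, pi))                    # ≈ 0.3
print(belief(pa, revise(pi, pa, 0.6)))   # ≈ 0.6
```

In this run, the belief in pa after revision equals τ: every interpretation falsifying pa is capped at 1 − τ = 0.4, and one of them reaches that cap.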

4.1.2 BC Axioms and Rules

Belief context axioms include all axioms of classical propositional logic with weight 1, as in (Dubois and Prade, 2006). Since a belief is defined as a necessity measure, all the properties of necessity measures are applicable in this context. Hence, the belief modality in our approach is taken to satisfy these properties, which can be regarded as axioms. The following axiom is then added to the belief unit:

$$BC: B(\varphi) > 0 \rightarrow B(\neg\varphi) = 0$$

This axiom is a straightforward consequence of the properties of possibility and necessity measures: if $B(\varphi) > 0$, then $\Pi([\neg\varphi]) < 1$, so normalization forces $\Pi([\varphi]) = 1$ and hence $B(\neg\varphi) = 1 - \Pi([\varphi]) = 0$. It means that if an agent believes φ to some positive degree, then it cannot believe ¬φ at all. Other consequences are:

$$B(\varphi \wedge \psi) \equiv \min\{B(\varphi), B(\psi)\}$$
$$B(\varphi \vee \psi) \geq \max\{B(\varphi), B(\psi)\}$$

The inference rules are:

• $B(\neg p \vee q) \geq \alpha,\ B(p) \geq \beta \vdash B(q) \geq \min(\alpha, \beta)$ (modus ponens)
• $\beta \leq \alpha,\ B(p) \geq \alpha \vdash B(p) \geq \beta$ (weight weakening)

where ⊢ denotes the syntactic inference of possibilistic logic.
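The axiom and its consequences can be checked numerically. The Python sketch below (an illustration, not a proof) draws random normalized possibility distributions over two atoms p and q and asserts the BC axiom, the min/max properties, and the soundness of possibilistic modus ponens under Equation (1).

```python
import random
from itertools import product

WORLDS = list(product([False, True], repeat=2))   # interpretations over atoms (p, q)

def belief(phi, pi):
    """Equation (1): B(phi) = 1 - max{pi(w) : w does not satisfy phi}."""
    return 1.0 - max((pi[w] for w in WORLDS if not phi(w)), default=0.0)

def random_normalized_pi(rng):
    """Random possibility distribution in which at least one world is fully possible."""
    pi = {w: rng.random() for w in WORLDS}
    pi[rng.choice(WORLDS)] = 1.0
    return pi

p = lambda w: w[0]
q = lambda w: w[1]
not_p = lambda w: not w[0]
not_p_or_q = lambda w: (not w[0]) or w[1]

def check_properties(pi):
    bp, bq = belief(p, pi), belief(q, pi)
    # BC axiom: B(p) > 0 implies B(not p) = 0
    assert bp == 0 or belief(not_p, pi) == 0
    # Conjunction is min-decomposable; disjunction is bounded below by the max
    assert belief(lambda w: p(w) and q(w), pi) == min(bp, bq)
    assert belief(lambda w: p(w) or q(w), pi) >= max(bp, bq)
    # Soundness of possibilistic modus ponens: B(q) >= min(B(not p or q), B(p))
    assert bq >= min(belief(not_p_or_q, pi), bp)

rng = random.Random(0)
for _ in range(1000):
    check_properties(random_normalized_pi(rng))
print("all checks passed")
```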

4.2 Desire Context

Desires represent a BDI agent's motivational state regardless of its perception of the environment. Desires may not always be consistent. For example, an agent may desire to be healthy but also to smoke; the two desires may lead to a contradiction. Furthermore, an agent may have unrealizable desires, that is, desires that conflict with what it believes possible.

4.2.1 The DC Language and Semantics

In this context, we distinguish between desires and goals. Desires are used to generate a list of coherent goals regardless of the agent's perception of the environment and its beliefs. Inspired by (da Costa Pereira and Tettamanzi, 2014), the language of DC ($\mathcal{L}_{DC}$) is defined as an extension of a classical propositional language. We define a fuzzy operator $D^{+}$, which is associated with a satisfaction degree ($D^{+}(\varphi)$ means that the agent positively desires φ), in contrast with a negative desire, which reflects what is rejected as unsatisfactory. For the sake of simplicity, we only consider the positive side of desires in this work; the introduction of negative desires is left as future work.

In this theory, (da Costa Pereira and Tettamanzi, 2010) use possibility-theoretic measures to express the degree of positive desires. Let u(ω) be a possibility distribution, also called qualitative utility (e.g., u(ω) = 1 means that ω is fully satisfactory). Given a qualitative utility assignment u (formally a possibility distribution), the degree to which the agent desires $\varphi \in \mathcal{L}_{DC}$ is given by:

$$D(\varphi) = \Delta([\varphi]) = \min_{\omega \models \varphi} \{u(\omega)\} \qquad (3)$$

where Δ is a guaranteed possibility measure that, given a possibility distribution π, is defined as follows for every set $S \subseteq \Omega$:

$$\Delta(S) = \min_{\omega \in S} \{\pi(\omega)\}. \qquad (4)$$
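A minimal Python sketch of Equations (3) and (4), assuming that interpretations over two atoms are tuples of booleans. The atoms w (exercise only on weekends) and eh (keep the current eating habits) and the utility values are hypothetical readings of Bob's scenario. Following the usual convention of possibility theory, Δ of the empty set is taken to be 1.

```python
def guaranteed_possibility(worlds, u):
    """Equation (4): Delta(S) = min{u(w) : w in S}; Delta(empty set) = 1 by convention."""
    return min((u[w] for w in worlds), default=1.0)

def desire(phi, u):
    """Equation (3): D(phi) = Delta([phi]) = min{u(w) : w satisfies phi}."""
    return guaranteed_possibility([w for w in u if phi(w)], u)

# Hypothetical qualitative utility over the atoms (w, eh).
u = {
    (True, True): 1.0,    # weekends only, same eating habits: fully satisfactory
    (True, False): 0.6,
    (False, True): 0.3,
    (False, False): 0.0,
}
weekends = lambda s: s[0]
eating = lambda s: s[1]

print(desire(weekends, u))                               # 0.6
print(desire(lambda s: weekends(s) and eating(s), u))    # 1.0
```

The second call illustrates that Δ grows as the set of models shrinks: the conjunction of the two wishes is desired more (1.0) than exercising on weekends alone (0.6), because the latter also covers an interpretation in which Bob has to change his eating habits.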

4.2.2 DC Axioms and Rules

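The axioms consist of all properties of guaranteed possibility measures, such as $D(\varphi \vee \psi) \equiv \min\{D(\varphi), D(\psi)\}$. The basic inference rules associated with Δ, in the propositional case, are:

• $[D(\neg p \wedge q) \geq \alpha], [D(p \wedge r) \geq \beta] \vdash [D(q \wedge r) \geq \min(\alpha, \beta)]$ (resolution rule)
• if p entails q classically, $[D(q) \geq \alpha] \vdash [D(p) \geq \alpha]$ (formula weakening; since $[p] \subseteq [q]$ and Δ can only decrease on larger sets, $D(q) \leq D(p)$)
• for $\beta \leq \alpha$, $[D(p) \geq \alpha] \vdash [D(p) \geq \beta]$ (weight weakening)
• $[D(p) \geq \alpha]; [D(p) \geq \beta] \vdash [D(p) \geq \max(\alpha, \beta)]$ (weight fusion).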
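These rules can be sanity-checked against the semantics of Equation (3). The Python sketch below (illustrative only) draws random utilities over three atoms p, q, r and asserts the resolution rule, the disjunction axiom, and the direction in which formula weakening is sound for Δ: since a formula that entails another has fewer models, a lower bound on D transfers from a formula to the formulas that entail it (here, from p to p ∧ q).

```python
import random
from itertools import product

WORLDS = list(product([False, True], repeat=3))   # interpretations over atoms (p, q, r)

def desire(phi, u):
    """Equation (3): D(phi) = min{u(w) : w satisfies phi}, with min over the empty set = 1."""
    return min((u[w] for w in WORLDS if phi(w)), default=1.0)

p = lambda w: w[0]
q = lambda w: w[1]
r = lambda w: w[2]

rng = random.Random(1)
for _ in range(1000):
    u = {w: rng.random() for w in WORLDS}
    # Resolution: bounds on D(not p and q) and D(p and r) give one on D(q and r)
    a = desire(lambda w: not p(w) and q(w), u)
    b = desire(lambda w: p(w) and r(w), u)
    assert desire(lambda w: q(w) and r(w), u) >= min(a, b)
    # Formula weakening: (p and q) entails p, so D(p and q) >= D(p)
    assert desire(lambda w: p(w) and q(w), u) >= desire(p, u)
    # Disjunction axiom: D(p or q) = min{D(p), D(q)}
    assert desire(lambda w: p(w) or q(w), u) == min(desire(p, u), desire(q, u))
print("all checks passed")
```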

4.3 Goal Context

Goals are sets of desires that, besides being logically "consistent", are also maximally desirable, i.e., maximally justified. Even though an agent may choose some of its goals among its desires, there may be desires that are not necessarily goals. The desires that are also goals represent those states of the world that the agent might be expected to bring about precisely because they reflect what the agent wishes to achieve. In this case, the agent's selection of goals among its desires is constrained by three conditions. First, since goals must be consistent and desires may be inconsistent, only the subsets of consistent desires can be potential candidates for being promoted to goal status, and the selected subsets of consistent desires must also be consistent with each other. Second, since desires may be unrealizable whereas goals must be consistent with beliefs (justified desires), only a set of feasible (and consistent) desires can potentially be transformed into goals. Third, desires that are potential candidates to be goals should be desired at least to a degree α. Then, only the most desirable, consistent, and possible desires can be considered as goals.

Example: Let us consider an agent representing Alice. Alice believes that her usual road to work is congested and that there are other alternative routes that she probably does not know. She would like to be at her office on time without leaving earlier. She also prefers a route without stops. Some of Alice's desires cannot be moved to goal status, such as desiring a route without stops, because Alice's agent does not believe that a route without stops is possible.
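The three conditions can be sketched in Python on Alice's example. This is not the goal-election function of the model; it is a simplified reading in which (i) only desires held to a degree of at least α are candidates, (ii) a set of candidates must be jointly satisfiable by an interpretation that Alice's beliefs do not rule out (π > 0), which also ensures consistency, and (iii) only the feasible sets that are maximal by inclusion are kept. The atoms, beliefs, and degrees are hypothetical.

```python
from itertools import combinations, product

ATOMS = ("on_time", "leave_early", "no_stops")
WORLDS = [dict(zip(ATOMS, v)) for v in product([False, True], repeat=len(ATOMS))]

def pi(w):
    """Hypothetical beliefs of Alice's agent: a route without stops is impossible."""
    return 0.0 if w["no_stops"] else 1.0

# Desires as (name, predicate, degree); the degrees are illustrative assumptions.
DESIRES = [
    ("be on time", lambda w: w["on_time"], 0.9),
    ("do not leave earlier", lambda w: not w["leave_early"], 0.8),
    ("route without stops", lambda w: w["no_stops"], 0.7),
]

def feasible(subset):
    """Some interpretation satisfying every desire in the subset is believed possible."""
    return any(pi(w) > 0 and all(phi(w) for _, phi, _ in subset) for w in WORLDS)

def elect_goals(desires, alpha):
    """Maximal (by inclusion) feasible subsets of the desires held to degree >= alpha."""
    candidates = [d for d in desires if d[2] >= alpha]
    feasible_sets = [set(c) for k in range(1, len(candidates) + 1)
                     for c in combinations(candidates, k) if feasible(c)]
    maximal = [s for s in feasible_sets if not any(s < t for t in feasible_sets)]
    return [sorted(name for name, _, _ in s) for s in maximal]

print(elect_goals(DESIRES, alpha=0.5))
# [['be on time', 'do not leave earlier']]
```

The route without stops is filtered out by condition (ii), exactly as in the example, while the other two desires survive together.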


4.3.1 The GC Language and Semantics

The language $\mathcal{L}_{GC}$ used to represent the Goal context is defined over the propositional language $\mathcal{L}$ extended by a fuzzy operator G having the same syntactic restrictions as $D^{+}$. G(φ) means that the agent has goal φ. As explained above, goals are a subset of consistent and possible desires. Desires are adopted as goals because they are justified and achievable. A desire is justified because the world is in a particular state that warrants its adoption. For example, one might desire to go for a walk because one believes it is a sunny day, and may drop that desire if it starts raining. A desire is achievable, on the other hand, if the agent has a plan that allows it to achieve that desire.

4.3.2 GC Axioms and Rules

Unlike desires, goals should be consistent, which can be expressed by the $D_G$ axiom (D from the KD45 axioms (Rao et al., 1995)) as follows:

$$D_G \quad GC: G(\varphi) > 0 \rightarrow G(\neg\varphi) = 0$$

Furthermore, since goals are a set of desires, we use the same axioms and deduction rules as in DC. Goal-belief and goal-desire consistency will be expressed with bridge rules, as we discuss later in the paper.

4.4 Social Context

One of the benefits of the BDI model is that it considers mental attitudes in the decision-making process, which makes it more realistic than a purely logical model. However, this architecture overlooks an important factor that influences these attitudes, namely the sociality of an agent. There are a number of ways in which agents can influence one another's mental states: authority, where an agent may be influenced by another to adopt a mental attitude whenever the latter has the power to guide the behavior of the former; trust, where an agent may be influenced by another to adopt a mental attitude merely on the strength of its confidence in the latter; and persuasion, where an agent may be influenced to adopt another agent's mental state via a process of argumentation or negotiation. In this work, we only consider trust as a way in which agents can influence each other.

4.4.1 The SC Language and Semantics

In our model, we consider a multi-agent system MAS consisting of a set of N agents $\{a_1, \dots, a_i, \dots, a_N\}$. The idea is that these agents are connected in a social network, e.g., agents with the same goal. Each agent has links to a number of other agents (neighbors) that change over time. In this paper, we do not consider dynamic changes in the social network; instead, we consider the network at a specific time instant. Between neighbors, we consider a trust relationship. The trustworthiness of an agent $a_i$ toward an agent $a_j$ about an information φ is interpreted as a necessity measure τ ∈ [0, 1], as in (Paglieri et al., 2014), and is expressed by the following equation:

$$T_{a_i, a_j}(\varphi) = \tau \qquad (5)$$

where $a_i, a_j \in MAS = \{a_1, \dots, a_i, \dots, a_N\}$. Trust is transitive in our model, which means that trust is considered not only between agents having a direct link to each other but also, as shown in Figure 2, along indirect links. Namely, if agent $a_i$ trusts agent $a_k$ to a degree $\tau_1$ and $a_k$ trusts agent $a_j$ to a degree $\tau_2$, then $a_i$ can infer its trust in agent $a_j$ as $T_{a_i, a_j}(\varphi) = \min\{\tau_1, \tau_2\}$. We only consider first- and second-order neighbors in our work, e.g., agent $a_i$ can be influenced by agent $a_k$ and by agent $a_j$.

Figure 2: An example of a social multi-agent trust network.
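Transitive trust restricted to first- and second-order neighbors can be sketched as follows. The network and degrees are hypothetical, and two points the model leaves open are settled by assumption: a direct link takes precedence over chains, and when several intermediaries exist the strongest chain (the maximum of the min-combined degrees) is kept.

```python
def trust(graph, src, dst):
    """Trust of src toward dst over first- or second-order neighbors.

    graph[a][b] is the direct trust degree of a toward b, in [0, 1].
    A chain a -> k -> b yields min(tau1, tau2), as in the text. Assumptions of
    this sketch: a direct link takes precedence, the strongest chain is kept,
    and trust is treated as independent of the information phi it is about.
    """
    direct = graph.get(src, {})
    if dst in direct:
        return direct[dst]
    chains = [min(t1, graph[k][dst]) for k, t1 in direct.items()
              if dst in graph.get(k, {})]
    return max(chains, default=0.0)   # 0.0 when dst is not within two hops

network = {
    "a_i": {"a_k": 0.8, "a_m": 0.5},
    "a_k": {"a_j": 0.6},
    "a_m": {"a_j": 0.9},
}
print(trust(network, "a_i", "a_k"))   # 0.8 (direct link)
print(trust(network, "a_i", "a_j"))   # 0.6 = max(min(0.8, 0.6), min(0.5, 0.9))
```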

4.4.2 SC Axioms and Rules

As sociality is expressed as a trust measure, which is interpreted as a necessity measure, SC axioms include the properties of necessity measures, as in BC (e.g., $N(\varphi \wedge \psi) \equiv \min\{N(\varphi), N(\psi)\}$).

When an agent is socially influenced to change its mental attitude by adopting a set of beliefs and/or desires, the latter should maintain a degree of consistency. These constraints are expressed with bridge rules that link the social context to the belief and desire contexts.


Figure 3: Main Concepts and relationships in the 5W ontology.

4.5 Planning and Intention Contexts

The aim of this functional context is to extend the BDI architecture in order to represent the plans available to agents and to provide a way to reason over them. In this context, we were inspired by (Batet et al., 2012) to represent and reason about plans and intentions. Plans are described using ontologies. (Gruber, 2009) defines an ontology as 'the specification of conceptualizations, used to help programs and humans share knowledge'. According to the World Wide Web Consortium¹ (W3C), ontologies or vocabularies define the concepts and relationships used to describe and represent an area of concern. We use the 5W² (Who, What, Where, When, Why) vocabulary, which is relevant for describing the different concepts and constraints in our scenario. The main concepts and relationships of this ontology are illustrated in Figure 3.

The main task of this context is to select plans that maximally satisfy the agent's goals. To go from the abstract notions of desires and beliefs to the more concrete concepts of goals and plans, as illustrated in Figure 4, the following steps are considered: (1) new information arrives and updates beliefs and/or desires, which triggers a goal update; (2) these goal changes invoke the Plan Library. The selection process is expressed by Algorithm 1, which looks in a knowledge base (KB) for all plans that maximally satisfy these goals; CB and/or CF techniques could be used in the selection process, but this will be investigated more thoroughly in further work. Since the second loop of the algorithm enumerates all combinations of the remaining goals, discarding goals without plans from the beginning significantly reduces its complexity. (3) One or more of these plans are then chosen and moved to the intention structure; finally, (4) a task (intention) is selected for execution, and (5) once it has been executed or has failed, the agent's beliefs are updated.

Figure 4: Planning and Intention Contexts.

¹ http://www.w3.org/standards/semanticweb/ontology
² http://ns.inria.fr/huto/5w/

Data: G
Result: S // S is a list of plans
G* = {φ_1, φ_2, ..., φ_n}
m ← 0; S' ← ∅; G' ← ∅;
for each φ_i in G* do
    // Search in the KB for a plan satisfying φ_i
    S_φi ← SearchInKB(φ_i);
    if S_φi <> ∅ then
        // Discard goals without plans
        Append(G', S_φi);
    end
end
for i in 1..Length(G') do
    // Combination of i elements in G'
    S' ← Combination(G', i);
    for j in 1..Length(S') do
        if S'[j] <> ∅ then
            // Compute the satisfaction degree of S'[j]
            α_i = G(S'[j]);
            // Select the maximum α_i
            if α_i > m then
                m ← α_i;
                Initialize(S);
                Append(S, S'[j]);
            else
                if α_i = m then
                    Append(S, S'[j]);
                end
            end
        end
    end
end
Return S;

Algorithm 1: RequestForPlan Function.

Example (continued): Suppose that Alice's agent agrees to change its belief regarding 'the route without stops'. Then this desire becomes a goal, so Alice's agent looks in its KB for alternative routes without stops. Suppose that Algorithm 1 returns two plans (routes), A and B. If Alice chooses Route A, then the first action $a_1$ = "Take Route des Dolines" becomes the agent's intention. Once it is done, Alice's agent updates its beliefs with the information that $a_1$ has been completed successfully.
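A runnable Python sketch of Algorithm 1, applied to Alice's example. SearchInKB is stubbed by a dictionary lookup, and since G(·) on a combination of goals is not defined above, it is passed in as a function; summing the goal degrees, as done below, is purely our assumption. Goal names, degrees, and routes are hypothetical.

```python
from itertools import combinations

def request_for_plan(goals, search_in_kb, satisfaction):
    """Sketch of Algorithm 1 (RequestForPlan).

    goals        -- the goals G* = [phi_1, ..., phi_n]
    search_in_kb -- goal -> list of plans satisfying it (hypothetical KB lookup)
    satisfaction -- combination of (goal, plans) pairs -> degree; stands in for G(.)
    Returns every combination of goals (with their plans) reaching the best degree.
    """
    # First loop: discard goals without plans (G' in the pseudocode).
    with_plans = []
    for goal in goals:
        plans = search_in_kb(goal)
        if plans:
            with_plans.append((goal, plans))

    best, selected = 0.0, []                 # m and S in the pseudocode
    # Second loop: all combinations of i goals, i = 1..|G'|.
    for i in range(1, len(with_plans) + 1):
        for combo in combinations(with_plans, i):
            alpha = satisfaction(combo)
            if alpha > best:                 # new maximum: reinitialize S
                best, selected = alpha, [combo]
            elif alpha == best:              # tie: keep this combination too
                selected.append(combo)
    return selected

# Hypothetical data for Alice's example: goal degrees and a KB of routes.
degrees = {"on_time": 0.9, "no_stops": 0.7, "leave_later": 0.8}
kb = {"on_time": ["route A", "route B"], "no_stops": ["route A", "route B"]}

def summed_degree(combo):
    """Assumed satisfaction of a goal combination: the sum of its goal degrees."""
    return sum(degrees[goal] for goal, _ in combo)

result = request_for_plan(list(degrees), lambda g: kb.get(g, []), summed_degree)
for combo in result:
    print([goal for goal, _ in combo], combo[0][1])
# ['on_time', 'no_stops'] ['route A', 'route B']
```

The choice of G(·) matters: with a min-based aggregation, as used for conjunctions of beliefs, a combination could never score strictly higher than its best single goal, so larger combinations would only be kept on ties.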

4.6 Bridge Rules

There are a number of relationships between contexts that are captured by so-called bridge rules. A bridge rule is of the form:

$$u_1: \varphi,\ u_2: \psi \rightarrow u_3: \theta$$

and it can be read as: if the formula φ can be deduced in context $u_1$ and ψ in $u_2$, then the formula θ is to be added to the theory of context $u_3$. A bridge rule allows formulae in one context to be related to those in another. In this section, we present the most relevant rules, illustrated by numbers in Figure 1. For all $a_i \in MAS$, the first rule, relating goals to beliefs, can be expressed as follows:

(1) $GC: G(a_i, \varphi) > 0 \rightarrow BC: B(a_i, \neg\varphi) = 0$

which means that if agent $a_i$ adopts a goal φ with a positive satisfaction degree $\beta_\varphi$, then $a_i$ does not believe ¬φ at all, i.e., φ is considered fully possible by $a_i$. Concerning rule (2), relating the goal context to the desire context, if φ is adopted as a goal, then it is positively desired with the same satisfaction degree.

(2) $GC : G(a_i, \varphi) = \delta_\varphi \rightarrow DC : D^+(a_i, \varphi) = \delta_\varphi$
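To make the reading of a bridge rule concrete, rules (1) and (2) can be sketched as functions over context theories. This is a hypothetical single-agent encoding, not the authors' implementation: each context (GC, BC, DC) is a dictionary from (modality, formula) pairs to degrees, negation is a "¬" prefix on formula strings, and a conclusion simply overwrites the degree stored in the target context. The rules are fired repeatedly until no theory changes.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical encoding: a context theory maps (modality, formula) to a degree.
Theory = Dict[Tuple[str, str], float]
Contexts = Dict[str, Theory]
Fact = Tuple[str, Tuple[str, str], float]  # (target context, key, degree)
BridgeRule = Callable[[Contexts], List[Fact]]


def neg(phi: str) -> str:
    """Negate a formula string, cancelling a leading '¬'."""
    return phi[1:] if phi.startswith("¬") else "¬" + phi


def rule1(ctx: Contexts) -> List[Fact]:
    # (1) GC : G(phi) > 0  ->  BC : B(¬phi) = 0
    return [("BC", ("B", neg(phi)), 0.0)
            for (mod, phi), g in ctx["GC"].items() if mod == "G" and g > 0]


def rule2(ctx: Contexts) -> List[Fact]:
    # (2) GC : G(phi) = delta  ->  DC : D+(phi) = delta
    return [("DC", ("D+", phi), g)
            for (mod, phi), g in ctx["GC"].items() if mod == "G"]


def apply_bridge_rules(ctx: Contexts, rules: List[BridgeRule],
                       max_rounds: int = 10) -> Contexts:
    """Fire every rule until no context theory changes (or max_rounds is hit)."""
    for _ in range(max_rounds):
        changed = False
        for rule in rules:
            # Each rule returns a fresh list, so updating ctx here is safe.
            for context, key, degree in rule(ctx):
                if ctx[context].get(key) != degree:
                    ctx[context][key] = degree
                    changed = True
        if not changed:
            break
    return ctx


contexts = {"GC": {("G", "pa"): 0.8}, "BC": {}, "DC": {}}
apply_bridge_rules(contexts, [rule1, rule2])
print(contexts["BC"], contexts["DC"])
```

Encoding each rule as a function that only reads the source contexts and returns facts for the target context keeps the premises and the conclusion separate, as in the $u_1 : \varphi, u_2 : \psi \rightarrow u_3 : \theta$ form.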

An agent may be influenced to adopt new beliefs or desires. Beliefs coming from other agents are not necessarily consistent with the agent’s individual beliefs. This can be expressed by the following rule:

(3) $BC : B(a_j, \varphi) = \beta_\varphi, SC : T_{a_i,a_j}(\varphi) = t \rightarrow BC : B(a_i, \varphi) = \beta'_\varphi$

where $\beta'_\varphi$ is calculated using Equation 2 with $\tau = \min\{\beta_\varphi, t\}$ to compute the possibility distribution, and Equation 1 to deduce the belief degree.
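The trust-capped strength used in rule (3) can be sketched as follows. This is a minimal sketch under assumptions: incoming beliefs and trust degrees are hypothetical dictionaries keyed by formula, a formula the receiver holds no trust entry for gets trust 0, and the revision of the possibility distribution and the belief computation given by the paper's equations (not reproduced in this section) are represented by a caller-supplied, hypothetical `revise` function.

```python
from typing import Callable, Dict


def trust_capped_strengths(messages: Dict[str, float],
                           trust: Dict[str, float]) -> Dict[str, float]:
    """Rule (3): for each formula phi received from a_j with degree beta_phi,
    compute tau = min{beta_phi, T_{a_i,a_j}(phi)}."""
    # Assumption: a formula with no trust entry gets trust 0.
    return {phi: min(beta, trust.get(phi, 0.0)) for phi, beta in messages.items()}


def apply_rule3(messages: Dict[str, float], trust: Dict[str, float],
                revise: Callable[[str, float], float]) -> Dict[str, float]:
    """Pass each capped strength to a hypothetical revision function that
    returns the new belief degree beta'_phi."""
    return {phi: revise(phi, tau)
            for phi, tau in trust_capped_strengths(messages, trust).items()}


# Message from Bob's doctor in the running example: B(¬pa) = 1, trusted at 0.9.
print(trust_capped_strengths({"¬pa": 1.0}, {"¬pa": 0.9}))
```

The same min-capping applies to desires in rule (4), where $\delta'_\psi = \min\{\delta_\psi, \tau\}$.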

Similarly to beliefs, desires coming from other agents need not be consistent with the agent’s individual desires. For example, an agent may be influenced by another agent to adopt the desire to smoke while at the same time having the desire to be healthy. Such influence on desires is expressed by the following rule:

(4) $DC : D^+(a_j, \psi) = \delta_\psi, SC : T_{a_i,a_j}(\psi) = \tau \rightarrow DC : D^+(a_i, \psi) = \delta'_\psi$

where $\delta'_\psi = \min\{\delta_\psi, \tau\}$. Desire-generation rules can be expressed by the following rule:

(5) $BC : \min\{B(\varphi_1) \wedge \ldots \wedge B(\varphi_n)\} = \beta, DC : \min\{D^+(\psi_1) \wedge \ldots \wedge D^+(\psi_n)\} = \delta \rightarrow DC : D^+(\Psi) \geq \min\{\beta, \delta\}$

Namely, if an agent has the beliefs $B(\varphi_1) \wedge \ldots \wedge B(\varphi_n)$ with a degree $\beta$ and positively desires $D^+(\psi_1) \wedge \ldots \wedge D^+(\psi_n)$ to a degree $\delta$, then it positively desires $\Psi$ to a degree greater than or equal to $\min\{\beta, \delta\}$.
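Because rule (5) only gives a lower bound, desire generation can be run to a fixpoint that raises desire degrees until nothing changes. The sketch below assumes hypothetical dictionaries for belief and desire degrees, treats a missing formula as degree 0, and encodes each desire-generation rule as a (believed formulas, desired formulas, generated desire) triple. The degree $D^+(rd) = 0.9$ in the example is invented for illustration; the running example does not specify it.

```python
from typing import Dict, List, Tuple

# A desire-generation rule: (believed formulas, desired formulas, generated desire).
DesireRule = Tuple[List[str], List[str], str]


def generate_desires(beliefs: Dict[str, float], desires: Dict[str, float],
                     rules: List[DesireRule]) -> Dict[str, float]:
    """Apply rule (5), D+(Psi) >= min{beta, delta}, until no degree increases."""
    result = dict(desires)
    changed = True
    while changed:
        changed = False
        for body_b, body_d, head in rules:
            # Degree of each conjunction: the minimum over its members.
            beta = min((beliefs.get(phi, 0.0) for phi in body_b), default=1.0)
            delta = min((result.get(psi, 0.0) for psi in body_d), default=1.0)
            bound = min(beta, delta)
            # Only raise a degree: rule (5) states a lower bound, not a value.
            if bound > result.get(head, 0.0):
                result[head] = bound
                changed = True
    return result


# Rules R5_1 to R5_3 of the running example, with a hypothetical D+(rd) = 0.9.
beliefs = {"pa→rd": 0.75, "wr→rd": 0.8, "bs": 0.9}
rules = [(["pa→rd"], ["rd"], "pa"), (["wr→rd"], ["rd"], "wr"), (["bs"], ["pa"], "w")]
print(generate_desires(beliefs, {"rd": 0.9}, rules))
```

The loop terminates because degrees never decrease and every new degree is one of the finitely many degrees already present in the belief and desire bases.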

According to (da Costa Pereira and Tettamanzi, 2014), goals are a set of desires that, besides being logically ‘consistent’, are also maximally desirable, i.e., maximally justified and possible. This is expressed by the following bridge rule:

(6) $BC : B(a_i, \varphi) = \beta_\varphi, DC : D^+(a_i, \psi) = \delta_\psi \rightarrow GC : G(\chi(\varphi, \psi)) = \delta$

where $\chi(\varphi, \psi) = ElectGoal(\varphi, \psi)$, as specified in Algorithm 2, is a function that elects the most desirable and possible desires as goals. If ElectGoal returns $\emptyset$, then $G(\emptyset) = 0$, i.e., no goal is elected.

As expressed by bridge rule (7), once goals are generated, our agent looks for plans satisfying goal $\varphi$ by applying the RequestForPlan function and intends to perform the first action of the recommended plan.

ICAART 2016 - 8th International Conference on Agents and Artificial Intelligence


Data: B, D
Result: G*
1: γ ← 0;
2: Compute G_γ by Algorithm 3;
if G_γ ≠ ∅ then
    terminate with γ* = 1 − γ, G* = G_γ;
else
    //Move to the next more believed value in B
    γ ← min{α ∈ Img(B) : α > γ} if such an α exists, 1 if ∄ α;
end
if γ < 1 then
    go back to Step 2;
end
terminate with G* = ∅; //No goal can be elected

Algorithm 2: Goal Election Function.

Data: B, D
Result: G_γ
//Img(D) is the set of desire degrees
1: δ ← max Img(D);
//Verify if ψ is believed possible
2: if min_{ψ ∈ D_δ} B(¬ψ) ≤ γ then
    terminate with G_γ = D_δ;
else
    //Move to the next less desired value of D
    δ ← max{α ∈ Img(D) : α < δ} if such an α exists, 0 if ∄ α;
end
if δ > 0 then
    go back to Step 2;
end
terminate with G_γ = ∅;

Algorithm 3: Computation of G_γ.
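Algorithms 2 and 3 can be sketched together in Python. This is a minimal sketch under assumptions: beliefs and desires are hypothetical dictionaries from formula strings to degrees, negation is a "¬" prefix, a formula absent from the belief base has belief 0, and Step 2 of Algorithm 3 uses the minimum over $D_\delta$ exactly as printed. The function names `elect_goal` and `compute_g_gamma` are illustrative.

```python
from typing import Dict, FrozenSet, Optional, Tuple


def neg(phi: str) -> str:
    """Negate a formula string, cancelling a leading '¬'."""
    return phi[1:] if phi.startswith("¬") else "¬" + phi


def compute_g_gamma(beliefs: Dict[str, float], desires: Dict[str, float],
                    gamma: float) -> FrozenSet[str]:
    """Algorithm 3: scan the desire levels Img(D) from the highest down."""
    for delta in sorted(set(desires.values()), reverse=True):
        if delta <= 0:
            break
        d_delta = [psi for psi, d in desires.items() if d == delta]
        # Step 2 as printed: min over D_delta of B(¬psi), compared with gamma.
        # A formula absent from the belief base has belief 0.
        if min(beliefs.get(neg(psi), 0.0) for psi in d_delta) <= gamma:
            return frozenset(d_delta)
    return frozenset()


def elect_goal(beliefs: Dict[str, float],
               desires: Dict[str, float]) -> Tuple[FrozenSet[str], Optional[float]]:
    """Algorithm 2: raise gamma through Img(B) until G_gamma is non-empty."""
    gamma = 0.0
    while gamma < 1:
        g_gamma = compute_g_gamma(beliefs, desires, gamma)
        if g_gamma:
            return g_gamma, 1 - gamma  # G* and gamma* = 1 - gamma
        higher = [a for a in set(beliefs.values()) if a > gamma]
        gamma = min(higher) if higher else 1.0
    return frozenset(), None  # no goal can be elected


# Bob's knowledge base from Table 1 (the unachievable wr ∧ ¬eh left out, as in D).
B = {"pa→rd": 0.75, "wr→rd": 0.8, "eh": 0.4, "bs": 0.9}
D = {"pa": 0.8, "wr": 0.8, "w": 0.75, "¬eh": 0.9}
print(elect_goal(B, D))
```

On Bob's data this follows the walk-through of the running example: the level δ = 0.9 is rejected because B(eh) = 0.4 > 0, and the level δ = 0.8 yields {pa, wr} with γ* = 1.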

(7) $GC : G(a_i, \varphi) = \delta, PC : RequestForPlan(\varphi) \rightarrow IC : I(act_i, PostCondition(act_i))$

where RequestForPlan is a function that looks for plans satisfying goal $\varphi$ in the plan library, as specified in Algorithm 1. Rule (8) means that if an agent intends to perform an action $act_i$ with post-condition $PostCondition(act_i)$, then it passes this information to the communication unit and, through it, to other agents and to the user.

(8) $IC : I(act_i, PostCondition(act_i)) \rightarrow CC : C(does(act_i, PostCondition(act_i)))$

If the communication unit receives information that an action has been completed, then the agent adds it to its belief set using rule (3), with $B(PostCondition(act_i)) = 1$.
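Rules (7) and (8), together with the completion update, can be sketched as a small agent loop. Everything here is a hypothetical encoding, not the authors' implementation: actions carry a post-condition string, the communication context is reduced to an outbox list of `does(...)` messages, and, as in the running example, a completed action lets the agent move on to the next action of the plan. The action names, including the second step, are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Action:
    name: str
    post_condition: str  # formula that holds once the action is done


@dataclass
class Agent:
    beliefs: Dict[str, float] = field(default_factory=dict)
    plan: List[Action] = field(default_factory=list)
    intention: Optional[Action] = None
    outbox: List[str] = field(default_factory=list)  # stand-in for the communication context

    def adopt_plan(self, plan: List[Action]) -> None:
        """Rule (7): the first action of the recommended plan becomes the intention."""
        self.plan = list(plan)
        self._intend_next()

    def _intend_next(self) -> None:
        self.intention = self.plan.pop(0) if self.plan else None
        if self.intention is not None:
            # Rule (8): pass does(act_i, PostCondition(act_i)) to the communication unit.
            self.outbox.append(
                f"does({self.intention.name}, {self.intention.post_condition})")

    def on_completed(self, action: Action) -> None:
        """Completion report: B(PostCondition(act_i)) = 1, then intend the next action."""
        self.beliefs[action.post_condition] = 1.0
        if self.intention is action:
            self._intend_next()


a1 = Action("take_route_des_dolines", "on_route_des_dolines")
a2 = Action("continue_to_destination", "at_destination")  # hypothetical second step
alice = Agent()
alice.adopt_plan([a1, a2])
alice.on_completed(a1)
print(alice.beliefs, alice.intention, alice.outbox)
```

Setting the post-condition belief directly to 1 reflects the case described above, where the completion report is taken at full strength.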

5 A RUNNING EXAMPLE

To illustrate the reasoning process of our BDI architecture, we use the example introduced in the Introduction and illustrated by Figure 5.

Figure 5: An Illustrating Example.

To implement such a scenario using the BDI formalism, a recommender agent has a knowledge base (KB) like the one shown in Table 1, initially specified by Bob.

Table 1: Initial Knowledge Base of Bob’s Recommender Agent.

Beliefs                 Desires
B(pa → rd) = 0.75       D⁺(pa) = 0.8
B(wr → rd) = 0.8        D⁺(wr) = 0.8
B(eh) = 0.4             D⁺(¬eh) = 0.9
B(bs) = 0.9             D⁺(w) = 0.75
                        D⁺(wr ∧ ¬eh) = 0.95

The belief set is represented by formulae describing the world (e.g., $B(\psi_1) = 1$ means that $\psi_1$ is necessary and totally possible). Desires are all possible states that the agent wishes to achieve. Notice that they can be conflicting, like $D^+(wr)$ and $D^+(\neg eh)$, or unachievable, like $D^+(wr \wedge \neg eh)$. $D^+(wr) = 0.8$ means that $wr$ is desired to a degree equal to 0.8. The desire-generation rules obtained from bridge rule (5) can be described as follows:

$R^5_1 : B(pa \rightarrow rd), D^+(rd) \rightarrow D^+(pa)$,
$R^5_2 : B(wr \rightarrow rd), D^+(rd) \rightarrow D^+(wr)$,
$R^5_3 : B(bs), D^+(pa) \rightarrow D^+(w)$,
$R^5_4 : B(pa \rightarrow wr), D^+(wr) \rightarrow D^+(\neg eh)$.

Then Bob’s desire base, derived from the desire-generation rules, is as follows:

$D = \{(pa, 0.8), (wr, 0.8), (w, 0.75), (\neg eh, 0.9)\}$

We may now apply rule (6) to elect Bob’s goals, given his belief base and his desire base. This rule applies the function ElectGoal(), which chooses the most desirable and possible desires from the desire base. Here, $Img(B) = \{0.75, 0.8, 0.9, 0.4\}$ and $Img(D) = \{0.75, 0.8, 0.9\}$. We begin by calling Algorithm 2 with $\gamma = 0$, which calls Algorithm 3: $\delta$ is set to $\max Img(D) = 0.9$, and the corresponding desire set is $D_\delta = \{\neg eh\}$. Since $B(\neg(\neg eh)) = B(eh) = 0.4 > \gamma$, we move to the next less desired value, which sets $\delta$ to $\max\{\alpha \in Img(D) : \alpha < 0.9\} = 0.8$. Since $\delta = 0.8 > 0$, we go back to Step 2. In this case $D_\delta = \{pa, wr\}$. Now $B(\neg pa) = B(pa) = 0$, because we do not yet know whether $pa$ is possible or not. Similarly, $B(\neg wr) = 0$, and Algorithm 2 terminates with $G^* = G_\gamma = \{pa, wr\}$, i.e., Bob’s recommender agent will elect as goals ‘get back to a regular physical activity and reduce weight’.

Given these goals, Bob’s agent ($a_1$) will look in the plan library for a plan satisfying them. As explained in rule (7), the agent will invoke the function RequestForPlan, which will look for a plan satisfying $pa$ and $wr$. Applying Algorithm 1, we have $G' = \{pa, wr\}$ and $S' = [pa, wr, \{pa, wr\}]$, with the same satisfaction degree $\alpha_1 = \alpha_2 = \alpha_3 = 0.8$. Suppose that it returns three plans $p_1$, $p_2$ and $p_3$ satisfying goals $pa$, $wr$ and $\{pa, wr\}$, respectively. Bob’s recommender agent will propose plan $p_3$ to the user because it meets more of Bob’s requirements with the same satisfaction degree. We suppose that Bob chooses plan $p_3$. Therefore, the first action (activity) in plan $p_3$ will become the agent’s intention. The intended action will be proposed to the user via the communication unit by applying rule (8).
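The preference for $p_3$ can be expressed as a tie-break among the candidates that Algorithm 1 returns with the maximal satisfaction degree. The sketch assumes, hypothetically, that ties are broken by the number of goals a combination covers, with the sorted goal names as a deterministic secondary key; the text only states that the plan meeting more of Bob’s requirements is proposed. The function name `propose_plan` is illustrative.

```python
from typing import Dict, FrozenSet


def propose_plan(degrees: Dict[FrozenSet[str], float],
                 plans: Dict[FrozenSet[str], str]) -> str:
    """Among goal sets with the maximal satisfaction degree, propose the plan
    of the one covering the most goals (assumed tie-break)."""
    best = max(degrees.values())  # raises ValueError if there are no candidates
    top = [goals for goals, alpha in degrees.items() if alpha == best]
    chosen = max(top, key=lambda goals: (len(goals), sorted(goals)))
    return plans[chosen]


# Bob's case: alpha_1 = alpha_2 = alpha_3 = 0.8 for pa, wr and {pa, wr}.
pa, wr, both = frozenset({"pa"}), frozenset({"wr"}), frozenset({"pa", "wr"})
print(propose_plan({pa: 0.8, wr: 0.8, both: 0.8}, {pa: "p1", wr: "p2", both: "p3"}))
```

The satisfaction degree still takes priority: a larger goal set is only preferred among candidates that tie on the maximal degree.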

Finally, if Bob starts executing the activity, information such as speed, distance or heart rate is collected via sensors (e.g., a smart watch) and transmitted to the communication unit in order to update the agent’s beliefs. The belief revision mechanism is the same as in (da Costa Pereira and Tettamanzi, 2010), defined by Equation 2. Once the activity is completed, rule (3) is triggered in order to update the belief set of Bob’s agent with $B(PostCondition(action_1)) = 1$, which allows the agent to move to the next action in plan $p_3$.

In order to illustrate the social influence between agents, we suppose that Bob’s doctor uses our application with the same goal as Bob, i.e., reducing his diabetes risk. Then there is a direct link between agents $a_1$ and $a_2$, representing Bob and Bob’s doctor respectively, with $T_{a_1,a_2}(\varphi) = 0.9$, where $\varphi$ represents any message coming from Bob’s doctor (see (Paglieri et al., 2014) for more details). Now, while Bob is executing his plan in order to get back to a physical activity, his recommender agent receives the following information from $a_2$: $B(\neg pa) = 1$, which means that Bob’s doctor believes that physical activity is not possible (not recommended). This information triggers bridge rule (3). Knowing the belief degree of $a_2$ about $pa$, and given the trust degree of $a_1$ toward $a_2$ about information $pa$ ($T_{a_1,a_2}(pa)$), $a_1$ decides to update its mental state according to Equation 2, and sets the new belief to $B'(pa) = 0$ according to Equation 1. This triggers the goal generation process, which updates the elected goals: $pa$ will be removed because $B(\neg pa) = 1$. Hence, a new plan is proposed to Bob.

6 CONCLUSIONS

We have presented in this paper a multi-context formalisation of the BDI architecture. We use a possibilistic approach, based on (da Costa Pereira and Tettamanzi, 2010), to deal with graded beliefs and desires, which are used to determine the agent’s goals, as suggested as future work in (Casali et al., 2011). We also take into account the social aspect of agents as a similarity-trust measure. The proposed model is conceived as a multi-context system in which we define a mental context containing the beliefs, desires and intentions; a social context representing the social influence among agents in an implicit relationship; two functional contexts that select a feasible plan from a list of precompiled plans; and a communication context that enables communication with other agents and with users. Short-term objectives of our research concern the realisation of a simulation of the presented MAS using NetLogo (Sakellariou et al., 2008). This simulation will help test initial design ideas and choices, and understand how the system will behave once it is implemented. For the implementation of the proof-of-concept MCS framework, we have investigated the approaches of (Casali et al., 2008) and (Besold and Mandl, 2010). We will also explore approaches such as (Costabello et al., 2012) for multi-context access in RDF graphs for our planning module. We consider that extending this model to handle temporal reasoning in dynamic environments (e.g., when executing a recommended plan) would make it more representative of real-world applications. This includes a revision mechanism for the mental attitudes (beliefs, desires and intentions) and taking into account the evolution of social relationships over time. Extending the social context in order to engage in a communication process with agents via argumentation (Mazzotta et al., 2007) is also part of our future work.

ACKNOWLEDGEMENTS

The authors would like to thank the French Agency of the Environment and the Energy Management (ADEME) and the Provence Alpes Cote d’Azur (PACA) region for the scholarship that is funding this research, and the reviewers for their insightful comments.


REFERENCES

Adomavicius, G. and Tuzhilin, A. (2005). Toward the next generation of recommender systems: A survey of the state-of-the-art and possible extensions. Knowledge and Data Engineering, IEEE Transactions on, 17(6):734–749.

Adomavicius, G. and Tuzhilin, A. (2011). Context-aware recommender systems. In Recommender systems handbook, pages 217–253. Springer.

Amir, O., Grosz, B. J., Law, E., and Stern, R. (2013). Collaborative health care plan support. In Proceedings of the 2013 international conference on Autonomous agents and multi-agent systems, pages 793–796. International Foundation for Autonomous Agents and Multiagent Systems.

Batet, M., Moreno, A., Sánchez, D., Isern, D., and Valls, A. (2012). Turist@: Agent-based personalised recommendation of tourist activities. Expert Systems with Applications, 39(8):7319–7329.

Besold, T. R. and Mandl, S. (2010). Towards an implementation of a multi-context system framework. MRC 2010, page 13.

Bobadilla, J., Ortega, F., Hernando, A., and Gutiérrez, A. (2013). Recommender systems survey. Knowledge-Based Systems, 46:109–132.

Bridge, D., Göker, M. H., McGinty, L., and Smyth, B. (2005). Case-based recommender systems. The Knowledge Engineering Review, 20(03):315–320.

Casali, A., Godo, L., and Sierra, C. (2008). A tourism recommender agent: from theory to practice. Inteligencia artificial: Revista Iberoamericana de Inteligencia Artificial, 12(40):23–38.

Casali, A., Godo, L., and Sierra, C. (2011). A graded BDI agent model to represent and reason about preferences. Artificial Intelligence, 175(7):1468–1478.

Chen, B. and Cheng, H. H. (2010). A review of the applications of agent technology in traffic and transportation systems. Intelligent Transportation Systems, IEEE Transactions on, 11(2):485–497.

Cohen, P. R. and Levesque, H. J. (1990). Intention is choice with commitment. Artificial intelligence, 42(2):213–261.

Costabello, L., Villata, S., and Gandon, F. (2012). Context-aware access control for RDF graph stores. In ECAI, pages 282–287.

da Costa Pereira, C. and Tettamanzi, A. G. (2010). An integrated possibilistic framework for goal generation in cognitive agents. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems: volume 1-Volume 1, pages 1239–1246.

da Costa Pereira, C. and Tettamanzi, A. G. (2014). Syntactic possibilistic goal generation. In ECAI 2014-21st European Conference on Artificial Intelligence, volume 263, pages 711–716. IOS Press.

Dubois, D. and Prade, H. (2006). Possibility theory and its applications: a retrospective and prospective view. Springer.

Felfernig, A., Friedrich, G., Jannach, D., and Zanker, M. (2015). Constraint-based recommender systems. In Recommender Systems Handbook, pages 161–190. Springer.

Gavalas, D. and Kenteris, M. (2011). A web-based pervasive recommendation system for mobile tourist guides. Personal and Ubiquitous Computing, 15(7):759–770.

Gavalas, D., Konstantopoulos, C., Mastakas, K., and Pantziou, G. (2014). Mobile recommender systems in tourism. Journal of Network and Computer Applications, 39:319–333.

Gruber, T. (2009). Ontology. Encyclopedia of database systems, pages 1963–1965.

Jennings, N. R. (2000). On agent-based software engineering. Artificial intelligence, 117(2):277–296.

Koster, A., Schorlemmer, M., and Sabater-Mir, J. (2012). Opening the black box of trust: reasoning about trust models in a BDI agent. Journal of Logic and Computation, page exs003.

Mazzotta, I., de Rosis, F., and Carofiglio, V. (2007). Portia: A user-adapted persuasion system in the healthy-eating domain. Intelligent Systems, IEEE, 22(6):42–51.

Negoita, C., Zadeh, L., and Zimmermann, H. (1978). Fuzzy sets as a basis for a theory of possibility. Fuzzy sets and systems, 1:3–28.

Paglieri, F., Castelfranchi, C., da Costa Pereira, C., Falcone, R., Tettamanzi, A., and Villata, S. (2014). Trusting the messenger because of the message: feedback dynamics from information quality to source evaluation. Computational and Mathematical Organization Theory, 20(2):176–194.

Parsons, S., Jennings, N. R., Sabater, J., and Sierra, C. (2002). Agent specification using multi-context systems. In Foundations and Applications of Multi-Agent Systems, pages 205–226. Springer.

Pinyol, I., Sabater-Mir, J., Dellunde, P., and Paolucci, M. (2012). Reputation-based decisions for logic-based cognitive agents. Autonomous Agents and Multi-Agent Systems, 24(1):175–216.

Rao, A. S., Georgeff, M. P., et al. (1995). BDI agents: From theory to practice. In ICMAS, volume 95, pages 312–319.

Sakellariou, I., Kefalas, P., and Stamatopoulou, I. (2008). Enhancing NetLogo to simulate BDI communicating agents. In Artificial Intelligence: Theories, Models and Applications, pages 263–275. Springer.

Singh, M. P. (1998). Semantical considerations on intention dynamics for BDI agents. Journal of Experimental & Theoretical Artificial Intelligence, 10(4):551–564.

Trewin, S. (2000). Knowledge-based recommender systems. Encyclopedia of Library and Information Science: Volume 69-Supplement 32, page 180.

Wooldridge, M., Jennings, N. R., and Kinny, D. (2000). The Gaia methodology for agent-oriented analysis and design. Autonomous Agents and Multi-Agent Systems, 3(3):285–312.
