Implementation of a Framework for e-Assessment on Students’ Devices

Bastian Küppers¹ and Ulrik Schroeder²

¹ IT Center, RWTH Aachen University, Seffenter Weg 23, 52074 Aachen, Germany

² Learning Technologies Research Group, RWTH Aachen University, Ahornstraße 55, 52074 Aachen, Germany

Keywords: Computer based Examinations, Computer Aided Examinations, e-Assessment, Bring Your Own Device, BYOD.

Abstract:

Following the trend of digitalization in university education, lectures and accompanying exercises and tutorials incorporate more and more digital components. These digital components range from the use of computers and tablets in tutorials to the incorporation of online learning management systems into lectures. Although e-Assessment is already a valuable component in the form of self-tests and formative assessment, the trend of digitalization has not yet been transferred to examinations. Among other things, this is caused by financial reasons, because maintaining a suitable IT infrastructure for e-Assessment is expensive in terms of money as well as administrative effort. This paper presents a Bring Your Own Device approach to e-Assessment as a potential solution to this issue.

1 RESEARCH PROBLEM

1.1 Introduction

Following the general trend of digitalization, university teaching at German universities increasingly incorporates digital elements, for example a learning management system (LMS) or mobile apps (Politze et al., 2016). This incorporation of digital elements is not limited to lectures; tutorials keep up with this trend as well. It is, for example, by now rather common for computer science students to use their own devices during tutorials. Examinations, however, are not part of this development in a similar manner (Hochschulforum Digitalisierung, 2016)(Themengruppe ’Innovationen in Lern- und Prüfungsszenarien’, 2015)(Deutsch et al., 2012). Similar trends can also be observed in other countries like the United Kingdom (Walker and Handley, 2016), Greece (Terzis and Economides, 2011), the United States of America (Luecht and Sireci, 2011) or Australia (Birch and Burnett, 2009). Due to the slow progress in integrating e-Assessment into higher education, there is a media disruption between the individual elements of a study course.

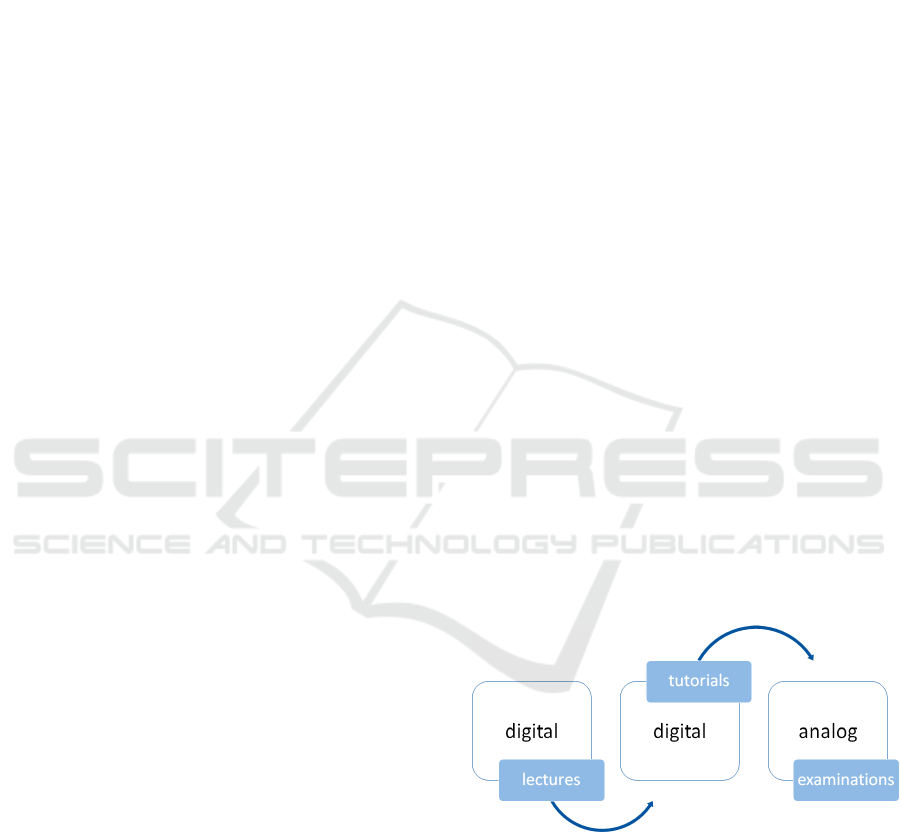

Figure 1 illustrates this situation. Examinations are often retained on paper because of reservations against e-Assessment. These reservations concern, for example, the fairness or reliability of digital examination systems (Vogt and Schneider, 2009). Students in particular have to accept e-Assessment in order for it to be introduced successfully in higher education (Fluck et al., 2009)(Terzis and Economides, 2011). There are, however, also significant benefits that make e-Assessment worth considering, especially in computer science education (see Section 1.2).

Figure 1: Current state of digitalization in university courses.

Beyond reservations against e-Assessment, financial reasons also hinder the introduction of e-Assessment, because maintaining a centrally managed IT infrastructure for digital examinations is costly in terms of money as well as administrative effort. This is reported by several universities that operate such an infrastructure, for example the University of Duisburg-Essen (Biella et al., 2009) and the University of Bremen (Bücking, 2010). Since most students already possess devices that are suitable for e-Assessment (Dahlstrom et al., 2015)(Poll, 2015)(Willige, 2016), Bring Your Own Device (BYOD) is a potential solution to this issue. This paper presents an approach to the development of a software framework that enables institutes of higher education to introduce e-Assessment on the basis of BYOD.

Küppers B. and Schroeder U. Implementation of a Framework for e-Assessment on Students’ Devices. In Doctoral Consortium (CSEDU 2017), pages 11-20. Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

1.2 e-Assessment in Computer Science

Utilizing BYOD to create an affordable setting could considerably boost the use of e-Assessments, which not only overcome the media disruption between lectures, tutorials and the examination, but also offer many further advantages. Especially in the field of computer science, the implementation of (summative) e-Assessments for certification would greatly benefit examinations in various aspects. First and foremost, e-Assessments bring the main subject of study, namely the computer, into the examination, but there are also other facets that improve the setting of the examinations for both students and lecturers.

1.2.1 Usage of Domain-specific Tools

Tutorials are often held in addition to the lectures to provide hands-on experience alongside theoretical education. These tutorials introduce domain-specific tools to the students. In a programming course, the students normally get used to integrated development environments, e.g. Eclipse¹ or NetBeans², during the tutorials, but are currently most often asked to write the examinations on paper. With e-Assessment, these tools can also be used in the examinations, closing the previously described gap between tutorial and examination.

1.2.2 Simplified Correction

The same tools that the students can use during the examinations are also available to the correctors, reducing the effort for correction considerably (Jara and Molina Madrid, 2015)(Vogt and Schneider, 2009). Parts of the examination can even be corrected semi-automatically. In programming courses, for example, a set of unit tests can be used to determine whether a student’s code fulfills all the requirements demanded by the examination. Only if some of these tests fail does the corrector have to take a closer look at the student’s exam. The effort for correcting the other parts of the examination is lowered as well, because readability is clearly improved compared to handwritten examinations.

¹ http://www.eclipse.org

² http://netbeans.org
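As a rough illustration of the semi-automatic correction described above, the following Python sketch runs a set of unit tests against a submitted function and flags the submission for manual review only if a test fails or the code does not load. The assignment (`gcd`), the test cases and the helper names (`load_submission`, `make_test_case`, `grade`) are hypothetical. A real grader would additionally have to run untrusted student code in a sandbox with a time limit, which this sketch does not do.

```python
import unittest

# Hypothetical exam assignment: implement gcd(a, b) for non-negative integers.
STUDENT_SUBMISSION = """
def gcd(a, b):
    while b:
        a, b = b, a % b
    return a
"""


def load_submission(source: str) -> dict:
    """Execute the submitted source in a fresh namespace (NOT sandboxed)."""
    namespace: dict = {}
    exec(source, namespace)
    return namespace


def make_test_case(namespace: dict) -> type:
    """Build a TestCase class that checks the assignment's requirements."""
    gcd = namespace["gcd"]  # raises KeyError if the function is missing

    class GcdRequirements(unittest.TestCase):
        def test_example_from_task(self):
            self.assertEqual(gcd(12, 18), 6)

        def test_zero_argument(self):
            self.assertEqual(gcd(7, 0), 7)

        def test_coprime_numbers(self):
            self.assertEqual(gcd(13, 8), 1)

    return GcdRequirements


def grade(source: str) -> tuple[bool, int]:
    """Return (needs_manual_review, failed_tests); -1 means the code did not load."""
    try:
        case = make_test_case(load_submission(source))
    except Exception:
        return True, -1
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(case)
    result = unittest.TestResult()
    suite.run(result)
    failed = len(result.failures) + len(result.errors)
    return failed > 0, failed
```

A submission that passes all tests would only need a quick check, while `(True, n)` tells the corrector that `n` requirements are not met and a deeper look is necessary.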

1.2.3 Improved Level of Assessment

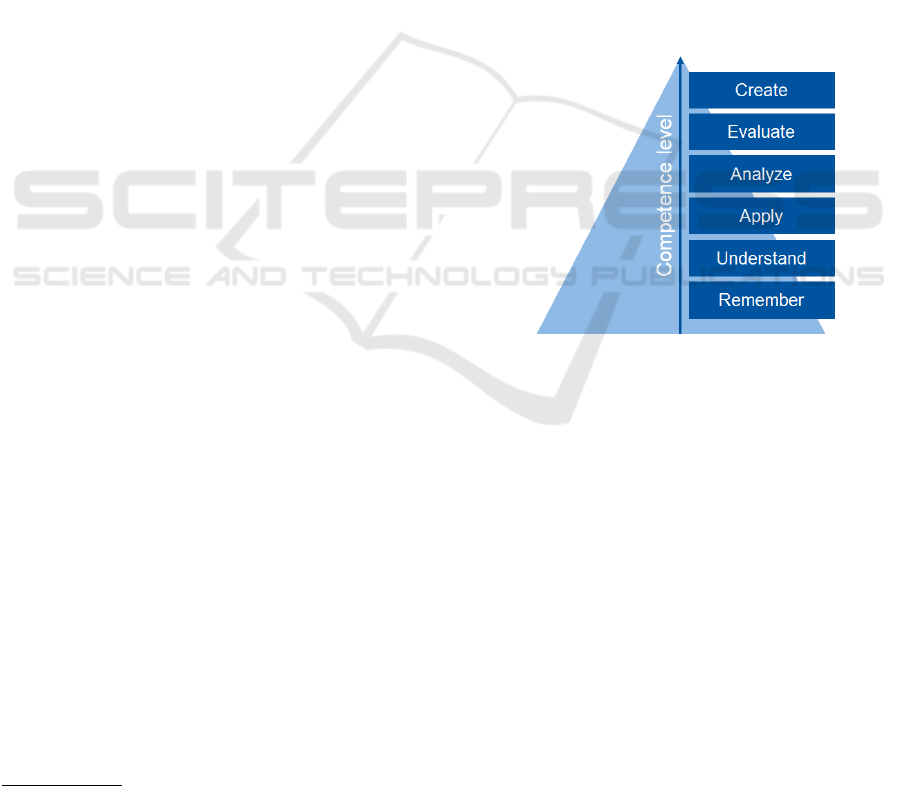

By providing domain-specific tools, the proficiency level of the examinations can be increased considerably. Considering Krathwohl’s revised version of Bloom’s Taxonomy of Educational Objectives (Krathwohl, 2002) (see Fig. 2), the more complex levels of the taxonomy, like Evaluate and Create, can be assessed in a more realistic fashion, because the domain-specific tools take care of the lower levels of the taxonomy, so that the students can focus on the higher levels. In programming courses, for example, the integrated development environment provides auto-completion, so the students do not need to remember every keyword of a programming language.

Figure 2: Revised Taxonomy of Educational Objectives by Krathwohl.

1.2.4 Innovative Methods of Examination

With the implementation of e-Assessments, innovations in the methods of examination become possible (Themengruppe ’Innovationen in Lern- und Prüfungsszenarien’, 2015)(Winkley, 2010). In programming courses, for example, modern methods like test-driven development (Beck, 2015) can be assessed, which is not possible with a paper-based examination.

1.3 Basic Conditions

During an examination, students have to be treated equally. This is an ethical guideline that examiners should follow. Besides being ethically important, this principle may also be required by law, depending on the country. In Germany, for example, Article 3 of the Basic Law for the Federal Republic of Germany demands equal treatment of all people (’Equality before the law’) (German Bundestag, 2012). Since the students’ devices are, in the worst case, all different from each other, it is practically impossible to provide every student with exactly the same conditions as every other student. This issue has to be taken into account when designing the e-Assessment framework.

1.4 Wording

For ease of readability, the rest of this paper uses the following terms:

• e-Assessment Framework. The entirety of all software components developed in the course of the PhD project.

• e-Assessment Application. The software that runs on the students’ devices and is used by them to work on the examination.

• Lockdown Application. The software that runs alongside the e-Assessment Application and prevents the students from cheating.

2 OUTLINE OF OBJECTIVES

2.1 Equality of Treatment

As described in Section 1.3, all students have to be treated equally during an examination. Since the students’ devices are beyond the control of the examiners, the design of the e-Assessment framework has to tackle this issue. Therefore, it was concluded that a certain minimum of processing power and memory capacity has to be required of the students’ devices. The e-Assessment application then has to be designed in such a way that students whose equipment exceeds these requirements have no advantage over students whose devices only just fulfill them. Beyond the different hardware specifications of the students’ devices, the potentially different operating systems also have to be taken into account. The e-Assessment application should be platform independent, so that no group of students is disadvantaged because they have an unsupported operating system installed on their devices. From this, the first research objective can be derived:

Research Objective 1: How does a software framework have to be designed in order to fulfill the previously described requirements?
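The minimum requirements mentioned in Section 2.1 could, for instance, be checked when a device registers for an examination. The following Python sketch compares a device’s reported hardware against a set of thresholds; the `DeviceSpec` fields, the threshold values and the function name `unmet_requirements` are assumptions for illustration, since the paper does not fix concrete requirements.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class DeviceSpec:
    """Hardware properties reported by a student's device (hypothetical fields)."""
    cpu_cores: int
    ram_gb: float
    free_disk_gb: float


# Hypothetical minimum requirements; real values would be fixed per examination.
MINIMUM = DeviceSpec(cpu_cores=2, ram_gb=4.0, free_disk_gb=1.0)


def unmet_requirements(device: DeviceSpec, minimum: DeviceSpec = MINIMUM) -> list[str]:
    """Return the names of all properties in which the device falls below the minimum."""
    return [
        f.name
        for f in fields(minimum)
        if getattr(device, f.name) < getattr(minimum, f.name)
    ]


if __name__ == "__main__":
    laptop = DeviceSpec(cpu_cores=4, ram_gb=2.0, free_disk_gb=50.0)
    print(unmet_requirements(laptop))  # ['ram_gb']
```

Such a check only establishes a lower bound. It does not by itself keep students with more powerful devices from gaining an advantage; that part has to be addressed by the design of the e-Assessment application itself, for example by keeping it lightweight.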

2.2 Reliability

In order to successfully introduce e-Assessment, reliability is one of the key elements that have to be present, as pointed out by Dahinden (Dahinden, 2014). Reliability in this context refers to two different things. First, the hardware infrastructure itself has to be reliable. Second, the data that is processed has to be reliable as well. The latter includes storing the data securely during and after the examination. The data has to be safeguarded against hardware errors as well as human manipulation. These requirements obviously also hold for BYOD. Once the examination is over, there is no difference between a ’regular’ and a BYOD e-Assessment. During the examination, however, there is a difference, because the reliability of the data has to be ensured although the data is created on a computer that is not per se under the full control of the examiner (’untrusted platform’). Hence, the second research objective can be derived:

Research Objective 2: Can the system proposed by

Dahinden be used in a BYOD setting? If not, what

changes have to be made in order to ensure reliability

in a BYOD context?
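One common way to make manipulation of exam data on an untrusted platform detectable is to chain each saved answer snapshot to its predecessor with a cryptographic hash, so that altering any earlier entry invalidates every later hash. The Python sketch below illustrates this idea with `hashlib`; it is not Dahinden’s system, and the entry format as well as the names `append` and `verify` are assumptions. A hash chain only detects changes if the latest hash is also kept somewhere the student cannot modify, for example on an examination server.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous hash together with a canonical JSON encoding of the payload."""
    data = prev_hash + json.dumps(payload, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def append(log: list[dict], payload: dict) -> str:
    """Append a snapshot to the local log and return its hash (to be sent to the server)."""
    prev = log[-1]["hash"] if log else GENESIS
    h = entry_hash(prev, payload)
    log.append({"payload": payload, "hash": h})
    return h


def verify(log: list[dict], expected_last_hash: str) -> bool:
    """Recompute the whole chain and compare its end with the hash kept on the server."""
    prev = GENESIS
    for entry in log:
        if entry_hash(prev, entry["payload"]) != entry["hash"]:
            return False
        prev = entry["hash"]
    return prev == expected_last_hash
```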

2.3 Lockdown

Since e-Assessments in a BYOD setting are carried out on the students’ devices, precautions against cheating have to be taken. Otherwise, students could prepare their devices before the exam to gain unfair advantages. Therefore, a so-called lockdown application can be used. This is an application that prevents the students from performing unauthorized actions during the examination. These actions include, in particular, starting prohibited programs and accessing unauthorized information, e.g. on the devices’ hard drives or on the internet. At this point, the potentially different operating systems on the students’ devices have to be taken into account, since each operating system may offer the students different ways of cheating, so that different countermeasures have to be implemented as well. However, there are also doubts about the general security of lockdown applications, for example Safe Exam Browser³ (Søgaard, 2016).

This leads to the third research objective:

Research Objective 3: Is a lockdown application a generally secure approach? If yes, can a lockdown application be implemented in a platform-independent way? If not, are there other ways of ensuring security?

³ http://safeexambrowser.org
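At its core, the check for prohibited programs is a comparison of the running processes against a list of permitted ones. The Python sketch below assumes that the process names have already been collected by an OS-specific mechanism, which is exactly the part that differs between platforms and is not shown; the allowlist contents and the function name `prohibited_processes` are hypothetical. An allowlist rather than a blocklist is used so that unknown tools are treated as prohibited by default.

```python
# Hypothetical allowlist: only these (lower-case) process names may run during the exam.
# A real allowlist would also have to contain the many background processes of each OS.
ALLOWED = frozenset({"exam-client", "lockdown-agent", "systemd", "explorer.exe"})


def prohibited_processes(running: list[str], allowed: frozenset[str] = ALLOWED) -> list[str]:
    """Return the sorted, de-duplicated names of running processes that are not allowed.

    Names are compared case-insensitively, because e.g. Windows does not
    distinguish 'Discord.exe' from 'discord.exe'.
    """
    return sorted({name.lower() for name in running} - allowed)
```

A non-empty result would then either be blocked (where privileges permit) or reported, which is where the platform-specific work of a lockdown application lies.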

2.4 Advanced Security

The previously described lockdown application can only reliably prevent cheating if it is deployed and used on the students’ devices as intended. If, however, the students found a way to circumvent the lockdown application or to modify the deployed executable, the integrity of the examination could no longer be ensured. An easy way to render the lockdown application useless would be, for example, to start the lockdown application and the e-Assessment application in a virtual machine, thereby retaining access to the unsecured host system during the examination. Hence, another security-related research objective can be derived:

Research Objective 4: Which additional security measures have to be implemented in order to ensure that the previously described lockdown application works as intended?
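As one example of such an additional measure, a lockdown application could try to detect whether it is running inside a virtual machine. The Python sketch below is Linux-only and checks two well-known indicators: the `hypervisor` CPU flag in `/proc/cpuinfo` (set for x86 guests) and vendor strings in the DMI product name (`/sys/class/dmi/id/product_name`). The vendor list is incomplete and the function names are hypothetical; such heuristics can be evaded by a determined user, so they could only be one layer among several.

```python
from pathlib import Path

# Incomplete, illustrative substrings found in DMI product names of virtual machines.
VM_PRODUCT_HINTS = ("virtualbox", "vmware", "kvm", "qemu", "virtual machine")


def vm_indicators(cpuinfo: str, product_name: str) -> list[str]:
    """Return the indicators suggesting that the system is virtualized."""
    found = []
    for line in cpuinfo.splitlines():
        # Lines look like "flags\t\t: fpu vme ... hypervisor ..."
        if line.startswith("flags") and "hypervisor" in line.split(":", 1)[-1].split():
            found.append("cpu flag: hypervisor")
            break
    name = product_name.strip().lower()
    for hint in VM_PRODUCT_HINTS:
        if hint in name:
            found.append(f"dmi product: {hint}")
    return found


def read_or_empty(path: str) -> str:
    """Read a text file, returning an empty string if it is missing or unreadable."""
    try:
        return Path(path).read_text()
    except (OSError, UnicodeDecodeError):
        return ""


def looks_virtualized() -> bool:
    cpuinfo = read_or_empty("/proc/cpuinfo")
    product = read_or_empty("/sys/class/dmi/id/product_name")
    return bool(vm_indicators(cpuinfo, product))
```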

2.5 Acceptance

An e-Assessment framework or, more generally, e-Assessment as a whole has to be accepted by both teachers and students in order to be introduced successfully at an institute of higher education. Therefore, it is very important to measure the acceptance of the e-Assessment framework once it has been implemented. Hence, the last research objective can be derived:

Research Objective 5: Is an e-Assessment framework that is designed and implemented in accordance with the previously described research objectives accepted by teachers and students? If not, why not?

3 STATE OF THE ART

The following sections describe the state of the art regarding key aspects of the previously described research objectives.

3.1 Bring Your Own Device

Bring Your Own Device itself is not a new phenomenon and is already used in the context of higher education around the globe (Küppers and Schroeder, 2016). The approaches in use cover many different scenarios. The differences between these scenarios result from two main questions:

1. Which software is used on the students’ devices?

2. How are the students’ devices connected?

The following paragraphs briefly describe the key facts for both questions.

Software. The students’ devices can be used either as a workstation or as a thin client. In the first case, fully functional software is run on the students’ devices, using the processing power of these devices. That software can be, for example, a programming IDE, a CAD program or a simple web browser for accessing an LMS or the like. This scenario can be further subdivided, since it is possible either to use the operating system that is already present on the devices or to provide the students with a pre-configured operating system (mostly Linux distributions), which the students have to boot on their devices, for example from a USB stick. Alternatively, the students’ devices can be used as thin clients, i.e. with software that connects to a virtual desktop infrastructure (VDI). In that case, the server provides the processing capacity and the students’ devices only serve as an interface to the server.

Connections. Depending on the software scenario used, there are different ways of connecting the students’ devices to a network. If no online resources are needed during the examination, the devices need not be connected at all. Once online resources are needed, for example a connection to an LMS, not being connected is obviously no longer an option. In that case, there can either be a special, secure network for the examination or not. If, for example, the standard WiFi network of a university is used to provide connectivity during the examination, the software on the students’ devices would have to ensure that no unauthorized online resources are accessed.

All of the approaches described in the cited review paper, however, are either not platform independent or not technically secured in a sound way. The latter in particular causes considerable effort for invigilation during the examinations. Additionally, it is pointed out that the invigilators have to keep backup devices at hand, to be used if a student’s device breaks down during the examination.

3.2 Cross-platform e-Assessment

LMS are potentially cross-platform e-Assessment applications, since they allow for quizzes or the like. Examples are Moodle⁴ or ILIAS⁵. Since these LMS are accessible via a web browser, every platform for which a web browser is available can be used for e-Assessment in that context. Regular LMS, however, offer only a very limited set of assignment types (Eilers, 2006), for example multiple choice questions. Therefore, specialized types of assignments have to be implemented as plugins in order to use them in an examination. This was done, for example, in (Amelung et al., 2006) and (Ramos et al., 2013).

In addition to LMS, which lack features particular to a certain subject, there are specialized learning management systems (SLMS) (Rößling et al., 2008). Examples are (Amelung et al., 2011) for computer science and (Gruttmann et al., 2008) for mathematics. These cover many more types of assignments for a particular subject, but do not generalize. Therefore, interdisciplinary use, for example as the e-Assessment application of choice for an institute of higher education, is not well supported.

It also has to be taken into account that these systems are designed to work within a regular web browser. Therefore, additional security, for example a lockdown application (see Section 3.3), has to be provided in order to prevent cheating. Since a regular web browser is not per se designed to be a secure environment for e-Assessment, implementing a lockdown is more difficult than in a setting where the e-Assessment application is specifically designed to run within a secure context.

3.3 Lockdown

There is a wide variety of lockdown applications available, for example SafeExamBrowser⁶ by ETH Zürich, LockDown Browser⁷ by Respondus, KioWare Lite⁸ by KioWare or Questionmark Secure⁹ by Questionmark (Frank, 2010). These tools, however, are not platform independent. SafeExamBrowser, for example, is available for Windows and macOS, whereas LockDown Browser is actually only available for Windows. The other tools also cover platforms like Android or iOS, but none of the tools is available for Linux, let alone ChromeOS. Additionally, some of the tools must be purchased, thereby introducing new costs.

⁴ https://moodle.org/

⁵ http://www.ilias.de/

⁶ http://safeexambrowser.org

⁷ http://www.respondus.com/products/lockdown-browser

⁸ http://www.kioware.com/kwl.aspx

⁹ https://www.questionmark.com/content/questionmark-secure

Additionally, online proctoring systems are available, for example Kryterion Online Proctor¹⁰, ProctorFree¹¹ or ProctorU¹². These services use the webcam to monitor the student sitting in front of the computer, thereby verifying the student’s identity. Some of these services additionally provide a special application, which has to be used throughout an exam. These tools provide some security that goes in the same direction as a full-blown lockdown application, but do not cover the same set of security features (Foster and Layman, 2013).

4 METHODOLOGY

The research carried out in this PhD project is based on the design-based research methodology (Wang and Hannafin, 2005). The research process consists of the following main steps:

1. Identification of the Problem

2. Design and Implementation of Prototypes

3. Evaluation of the Prototypes / Refinement of Requirements

4. Implementation of the final e-Assessment Framework

5. Final Evaluation

The individual steps are described in the following sections.

4.1 Identification of the Problem

Based on experience in teaching at institutes of higher education, it became obvious that e-Assessment is not well integrated into higher education. Especially in the field of computer science, however, e-Assessment would greatly benefit the examinations by bringing the main subject of study, the computer, into the examination. Therefore, it was decided to investigate why e-Assessment is not better integrated into higher education. Two main reasons became obvious: money and a lack of available tools that work out of the box. The latter in particular keeps reservations against e-Assessment alive. Hence, the implementation of an e-Assessment framework that fulfills certain requirements and supports BYOD was considered a potential solution to the identified problems.

¹⁰ https://www.onlineproctoring.com/home.html

¹¹ http://proctorfree.com/

¹² https://www.proctoru.com/

4.2 Design and Implementation of Prototypes

4.2.1 Conception of the Prototypes

Based on the identified problems and the proposed solution, different BYOD approaches will be implemented as prototypes and evaluated afterwards. These prototypes will be based on scenarios described in (Küppers and Schroeder, 2016).

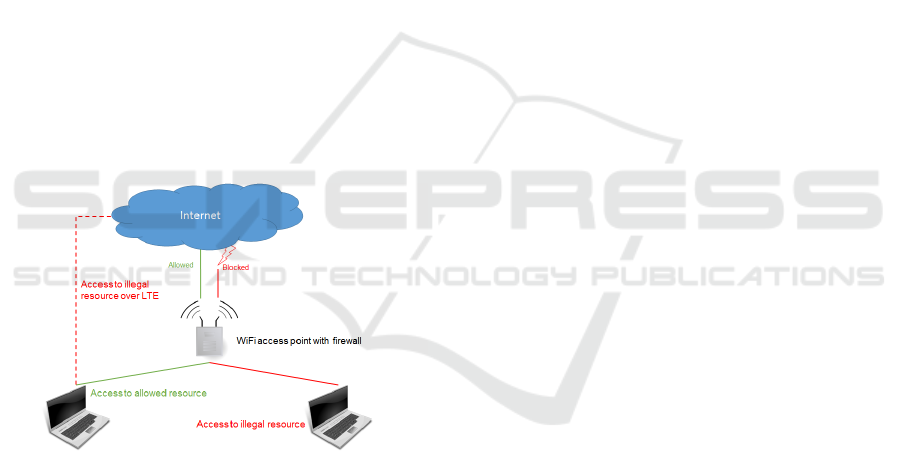

It seems most promising to provide the students with special software that ensures security during the examination, instead of relying on a secured network. Especially since mobile internet is available to everyone at an affordable price, it would be impossible to control whether a student bypasses the secure network by using an LTE adapter or the like. This situation is depicted in Figure 3.

Figure 3: Bypassing a secured network over mobile internet.

However, if mobile internet can be suppressed effectively, for example by structural measures, a secure network could be added on top of the e-Assessment framework in order to provide an additional layer of security. For the design of the prototypes, it is assumed that access to an uncontrolled network is available; therefore, the prototypes have to take care of security themselves. Hence, three scenarios remain as the basis for a prototype: the students’ devices as...

1. ... workstation with a lockdown application

2. ... workstation with a provided operating system

3. ... thin client for a VDI

Since a VDI provides only small savings, if any, compared to a traditional centrally managed IT infrastructure (Microsoft, 2010)(Microsoft, 2014)(Intel, 2011), this scenario would contradict the original motivation of utilizing BYOD in order to save money. Therefore, only two scenarios remain, which will be implemented as prototypes. Both prototypes are described briefly in the following sections.

4.2.2 Students’ Devices as Workstation with a Lockdown Application

For this scenario, the prototype will consist of three

main components:

1. The e-Assessment Application

2. The Lockdown Application

3. The Examination-Server

The e-Assessment application will be a program that serves as the front-end of the examination. It will present the assignments of the examination, which are received from the examination-server, to the students and provide suitable ways of entering their results, which are sent back to the examination-server. For a programming assignment, for example, the e-Assessment application could provide a text editor with syntax highlighting. Beyond the basic examination-related capabilities, the e-Assessment application will provide an interface that the lockdown application can use in order to work properly.

The lockdown application will prevent cheating during the examination. Since the e-Assessment application is intended to be platform independent, the lockdown application has to be adapted to each particular operating system. In order to reduce potential problems with the lockdown application on the students’ devices, it is desirable not to require administrative privileges, because not all students may have these on their devices. This could be the case, for example, if a student employee is allowed to use a device provided by their employer. Without administrative privileges, however, it may not be possible to prevent all actions that could be used for cheating. The lockdown application can nevertheless detect these actions. In the simplest case, the lockdown application could check whether the e-Assessment application has lost focus. If an illegal action is detected, the lockdown application reports it to the examination-server.
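The simplest detection mechanism mentioned above, checking whether the e-Assessment application has lost focus, can be sketched independently of any particular windowing API. The Python sketch assumes a hypothetical platform-specific hook that calls `on_focus_change` whenever the focus changes; reporting is reduced to a callable that, in a real deployment, would send the incident to the logging functionality of the examination-server. All class and method names are illustrative.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Incident:
    """A suspicious event as it would be logged on the examination-server."""
    student_id: str
    kind: str
    timestamp: float


@dataclass
class FocusMonitor:
    """Hypothetical lockdown component that detects and reports focus changes."""
    student_id: str
    report: Callable[[Incident], None]  # e.g. an HTTP call to the server (not shown)
    clock: Callable[[], float] = time.time
    has_focus: bool = True

    def on_focus_change(self, focused: bool) -> None:
        """Called by a platform-specific hook whenever the window focus changes."""
        # Only transitions are reported, not repeated events with the same state.
        if self.has_focus and not focused:
            self.report(Incident(self.student_id, "focus_lost", self.clock()))
        elif not self.has_focus and focused:
            self.report(Incident(self.student_id, "focus_regained", self.clock()))
        self.has_focus = focused
```

Reporting both the loss and the regaining of focus lets invigilators see how long the e-Assessment application was in the background, which matters because detection, unlike prevention, leaves the final judgement to a human.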

The examination-server has multiple tasks. First and foremost, it provides the assignments of the examination to the e-Assessment application running on the students’ devices and collects the results. Second, it provides logging functionality, which is used by the lockdown application. Additionally, it provides further features which may be needed by different types of assignments. For programming assignments, for example, it could provide functionality that allows the students to compile and run their source code on the server and get feedback during the examination.

The examination-server additionally keeps track of the students’ progress during the examination. Therefore, if a student’s device breaks down and a backup device has to be used, the student’s progress is not lost and the examination can be resumed seamlessly.
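The resume mechanism can be sketched as a server-side store that keeps the latest snapshot of each student’s answers, tagged with a monotonically increasing sequence number so that a delayed upload from a failing device cannot overwrite newer work. The Python sketch below is an in-memory stand-in; the class and method names are hypothetical, and persistence (e.g. in a database) is left out.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """State of one student's examination at a given save."""
    seq: int  # incremented by the client on every save
    answers: dict[str, str] = field(default_factory=dict)  # assignment id -> answer


class ProgressStore:
    """In-memory stand-in for the examination-server's progress tracking."""

    def __init__(self) -> None:
        self._latest: dict[str, Snapshot] = {}

    def save(self, student_id: str, snapshot: Snapshot) -> bool:
        """Store the snapshot unless a newer one is already known; return True if stored."""
        current = self._latest.get(student_id)
        if current is not None and snapshot.seq <= current.seq:
            return False
        self._latest[student_id] = snapshot
        return True

    def resume(self, student_id: str) -> Snapshot:
        """Return the latest snapshot, e.g. to initialise a backup device."""
        return self._latest.get(student_id, Snapshot(seq=0))
```

After calling `resume`, the backup device would continue numbering its saves from the returned `seq`, so that its uploads are accepted by `save`.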

The e-Assessment application and the lockdown application have to be implemented in a lightweight way in order to ensure the previously described equality of treatment. It is also because of the equality of treatment that additional features are implemented by the examination-server and not by the e-Assessment application itself. By outsourcing operations and features to the examination-server, all students are treated equally, because they all access the same server.

4.2.3 Students’ Devices as Workstation with a Provided Operating System

For this scenario, the students have to boot their devices from a provided USB stick. This USB stick contains a pre-configured operating system, for example a Linux distribution. This operating system is configured in a way that allows the students to do only what is permitted during the examination. Therefore, only permitted programs are installed and only permitted information is stored on the USB stick. Online resources can be restricted by a firewall that blocks all online resources except whitelisted ones. Since the students do not have administrative privileges on that operating system, they are not able to alter the system’s configuration. At first sight, this approach seems very promising, but there are potential drawbacks. First, there may be technical problems with booting a device from a USB stick (Frankl et al., 2012)(Alfredsson, 2014). Second, the equality of treatment could be violated if arbitrary software, for example a regular programming IDE or a CAD program, is simply installed on the operating system. These programs tend to work better on devices that have more processing power and memory available. Therefore, it could be an option to use the e-Assessment application as the software on the provided operating system.
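On the provided operating system, the whitelist would normally be configured in the system firewall itself. Independent of the concrete firewall, the underlying default-deny rule can be sketched as follows. The host names are hypothetical examples, and a real firewall filters by IP address and port rather than by URL, so this Python sketch only illustrates the policy logic.

```python
from urllib.parse import urlsplit

# Hypothetical hosts permitted during the examination.
WHITELIST = {"exam.example.org", "docs.python.org"}


def is_allowed(url: str, whitelist: set[str] = WHITELIST) -> bool:
    """Default deny: permit a request only if its host is whitelisted exactly
    or is a subdomain of a whitelisted host."""
    host = (urlsplit(url).hostname or "").lower()
    return any(host == h or host.endswith("." + h) for h in whitelist)
```

Matching on `"." + h` rather than a plain suffix prevents look-alike hosts such as `exam.example.org.evil.com` from slipping through.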

The students’ results can either be saved on the USB sticks or uploaded to a server. The first case could make a network connection unnecessary. If a student’s device breaks down, the USB stick can in principle be used on a backup device to resume the examination without loss of data. If, however, the breakdown of a student’s device also damages the USB stick, all of the student’s results so far are lost. Therefore, in order to guarantee reliability during the examination, the previously described examination-server could be used in this prototype to keep track of the students’ progress.

4.3 Evaluation of the Prototypes / Refinement of Requirements

Once the previously described prototypes are implemented, a survey will be carried out to evaluate how the prototypes perform in an examination-like scenario. For this purpose, volunteer teachers and students will be asked to simulate examinations with the e-Assessment framework. This survey will address the different issues listed in the sections below. Both perspectives, that of the students and that of the lecturers, will be evaluated to get the entire picture. The results of the survey will then support the decision as to which prototype will be the basis for the implementation of the final e-Assessment framework (see Section 4.4).

4.3.1 Usability

The usability objective is intended to determine how usable a prototype really is in an examination. Therefore, the focus lies on newly developed or adjusted software, e.g. the e-Assessment application or the provided operating system. Basically, the aim is to become aware of conceptual issues that decrease usability. Additionally, suggestions for improvement from teachers and students will be collected.

4.3.2 Security

The security objective is intended to determine how secure a prototype really is. Security in this regard has two meanings, which basically come down to the following questions:

• Are students able to cheat despite the lockdown

application?

• Are the students’ results appropriately saved?

Therefore, it will be analyzed whether problems in this regard occurred during the simulated examinations. Additionally, the teachers and students will be asked whether they came across potential flaws in the conception of security-related aspects.

4.3.3 Acceptance

The acceptance objective is intended to determine whether the e-Assessment framework is accepted by teachers and students. As described previously, the acceptance of e-Assessment by teachers and students is an important factor for the success of introducing e-Assessment at institutes of higher education. Therefore, the goal is to find out whether there are still reservations against e-Assessment after the simulated examinations with the e-Assessment framework and possibly to find ways to resolve these reservations.

4.4 Implementation of the Final e-Assessment Framework and Final Evaluation

Based on the results collected during the survey, one prototype will be selected as the most promising one. This prototype will serve as the basis for the final e-Assessment framework. The suggestions for improvement from the survey will be used during the implementation. It is possible, however, that issues are raised during the survey that do not come with a proposed solution. To find solutions for these issues, a targeted literature review and potentially another survey could be the tools of choice. This survey would have to be carried out with a broader audience and be specifically aimed at the open issues.

After the second implementation phase, the result has to be evaluated again in order to make sure that the implemented changes indeed improve the usability, security and acceptance of the e-Assessment framework.

5 EXPECTED OUTCOME

The expected outcome of the PhD project is an e-Assessment framework that fulfills all the previously mentioned requirements. First and foremost, it should be accepted by teachers and students and could therefore serve as a booster for e-Assessment in general. Since it utilizes students’ devices instead of requiring an institute of higher education to maintain a costly IT infrastructure, it potentially lowers the entry hurdle for starting with e-Assessment. Hence, institutes of higher education could simply test the e-Assessment framework without large investments and see whether it fulfills their needs. Even if the framework does not fulfill some institutes’ needs, feedback from these institutes could be used to improve it further. More generally, despite being labeled as the ’final e-Assessment framework’ in this paper, the e-Assessment framework could be improved further if more institutes of higher education tested it and reported issues as well as suggestions for improvement.

Therefore, it is planned to release the e-Assessment framework as an open-source project under a suitable license, for example the GPL (Free Software Foundation, 2007). This should lower the entry hurdle even further: on the one hand, everyone can understand in detail how the e-Assessment framework works; on the other hand, it can be adapted to one’s own needs if necessary. In addition, everyone can actively contribute to the further development of the e-Assessment framework.

Beyond the features of e-Assessment already discussed, some additional features were mentioned during discussions with peers and teachers. Some of them are discussed here to give an impression of which issues e-Assessment can tackle beyond changing the mode of examination itself.

5.1 Ask a Question

From experience, it is quite common during an examination that students ask questions about the assignments, for example how a specific part is meant or what is being asked for at all. It is also common that the same questions are asked over and over again, especially if an assignment lacks clarity. At some point, the invigilator therefore has to decide to give the hints that were given to each asking student to the whole audience. But even if the invigilator asks for the whole audience’s attention, some students may not listen, so they keep asking the same question as before. Hence, one suggested additional feature was a kind of Q&A system for the e-Assessment framework. This feature would enable students to post questions to the invigilator, who could then reject or answer them. If a question is answered, the question and its answer would be available to all students. Therefore, each student would get the same information at the same time. Additionally, the other students are no longer disturbed during the examination, either by other students asking questions or by the invigilator giving information to the whole audience.
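To make the proposed Q&A workflow more concrete, the following Python sketch models it as a small in-memory data structure. It is a hypothetical illustration, not part of the framework described here: the names `QABoard`, `post_question`, `answer`, `reject` and `broadcast` are assumptions, and networking, authentication and persistence are left out. The property it captures is the one described above: only answered questions are shown to all students, while pending and rejected questions stay with the invigilator.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import count
from typing import Dict, List, Optional, Tuple


class Status(Enum):
    PENDING = "pending"
    ANSWERED = "answered"
    REJECTED = "rejected"


@dataclass
class Question:
    qid: int
    student_id: str
    text: str
    status: Status = Status.PENDING
    answer: Optional[str] = None


class QABoard:
    """Hypothetical exam Q&A board: students post questions,
    the invigilator answers or rejects them, answers go to everyone."""

    def __init__(self) -> None:
        self._questions: Dict[int, Question] = {}
        self._ids = count(1)

    def post_question(self, student_id: str, text: str) -> int:
        qid = next(self._ids)
        self._questions[qid] = Question(qid, student_id, text)
        return qid

    def pending(self) -> List[Question]:
        # The invigilator's inbox: unhandled questions in posting order.
        return [q for q in self._questions.values() if q.status is Status.PENDING]

    def _take_pending(self, qid: int) -> Question:
        q = self._questions[qid]
        if q.status is not Status.PENDING:
            raise ValueError(f"question {qid} has already been handled")
        return q

    def answer(self, qid: int, text: str) -> None:
        q = self._take_pending(qid)
        q.status, q.answer = Status.ANSWERED, text

    def reject(self, qid: int) -> None:
        self._take_pending(qid).status = Status.REJECTED

    def broadcast(self) -> List[Tuple[str, str]]:
        # What every student sees: answered questions only, with their answers.
        return [(q.text, q.answer) for q in self._questions.values()
                if q.status is Status.ANSWERED and q.answer is not None]


board = QABoard()
q1 = board.post_question("s17", "Does task 2b ask for the worst case?")
q2 = board.post_question("s42", "Is 2b about the worst case?")
board.answer(q1, "Yes, only the worst case is asked for.")
board.reject(q2)  # e.g. rejected as a duplicate of q1
print(board.broadcast())
# [('Does task 2b ask for the worst case?', 'Yes, only the worst case is asked for.')]
```

Rejecting the second, duplicate question illustrates how the feature could curb repeated questions: the answer to the first one is already visible to every student, including the one who asked again.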

5.2 Individual Time Measurement

Sometimes, even if the examination sheets have been

placed on the tables before the students were allowed

to enter the room, it is not possible to track whether

all students turn the sheets all at the same time. Or,

despite starting to write something being forbidden,

while the examiners explains some things after the

students were allowed to read the assignments, some

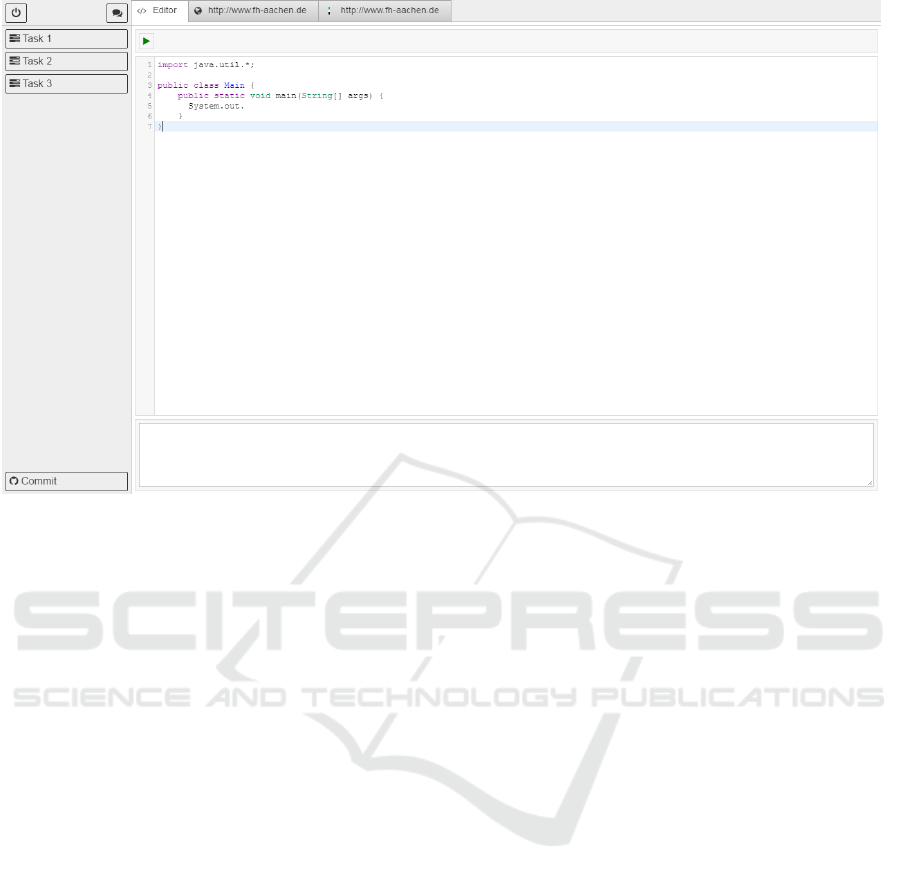

Figure 4: First version of the e-Assessment application.

students may chose not to obey that. These issues can

potentially lead to situations, where some students

have in practice a few more minutes to work on the

examination, than other students. Therefore, another

suggestion was made regarding the time measurement

of the examination. The e-Assessment framework

could not measure time globally, but individually for

each students. Hence, each student would have n

minutes to work on the examination, regardless of

whether the student chose to listen to the examiner at

the start of the examination or wanted to start working

right away.
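The idea of individual time measurement can be sketched in a few lines of Python. This is a hypothetical illustration under stated assumptions, not the framework’s implementation: the class `IndividualExamTimer` and its methods are invented names, and the clock is assumed to run on the examination server. Each student’s n minutes are counted from that student’s own start, and only the first start is recorded.

```python
import time
from typing import Callable, Dict


class IndividualExamTimer:
    """Hypothetical per-student timer: every student gets the same duration,
    counted from the moment that student actually starts working."""

    def __init__(self, duration_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.duration_s = duration_s
        self._clock = clock  # assumed to be the examination server's clock, not the device's
        self._started: Dict[str, float] = {}

    def start(self, student_id: str) -> None:
        # Only the first start counts, so e.g. a reconnect cannot reset the timer.
        if student_id not in self._started:
            self._started[student_id] = self._clock()

    def remaining(self, student_id: str) -> float:
        # A student who has not started yet still has the full duration.
        if student_id not in self._started:
            return float(self.duration_s)
        elapsed = self._clock() - self._started[student_id]
        return max(0.0, self.duration_s - elapsed)

    def is_running(self, student_id: str) -> bool:
        # Answers would be accepted only while this is True.
        return student_id in self._started and self.remaining(student_id) > 0


# Usage with a fake clock so the example is deterministic.
now = [0.0]
timer = IndividualExamTimer(90 * 60, clock=lambda: now[0])
timer.start("s17")       # starts working right away at t = 0
now[0] = 5 * 60.0
timer.start("s42")       # listened to the examiner first, starts 5 minutes later
now[0] = 90 * 60.0
print(timer.remaining("s17"), timer.remaining("s42"))  # 0.0 300.0
```

Keeping the clock on the examination server rather than on the students’ devices is a design assumption of this sketch: in a Bring Your Own Device setting, the device clock is under the student’s control.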

6 STAGE OF THE RESEARCH

In this paper, a proposal for an e-Assessment framework was presented. At the moment, a first version of the e-Assessment application has been implemented; a screenshot is shown in Figure 4. Admittedly, the previously proposed feature set is not yet fully implemented. The examination server is available to the extent that the current state of the e-Assessment application requires it for testing. Additionally, the lockdown application for Windows operating systems (starting from Windows® 7) is available. A bachelor thesis dealing with the lockdown application on Linux-based operating systems is currently being written. Altogether, it is planned to have everything implemented and to carry out the first survey in fall 2017.

Quite recently, the PhD project was granted funding by the German Stifterverband (Stifterverband für die Deutsche Wissenschaft e.V., 2016). As a result, the project is already known to a broader public. This fuels the hope that the PhD project can indeed be a booster for e-Assessment once it has been finished.

REFERENCES

Alfredsson, F. (2014). Bring-your-own-device Exam System for Campuses.

Amelung, M., Krieger, K., and Rosner, D. (2011). E-Assessment as a Service. IEEE Transactions on Learning Technologies, 4(2):162–174.

Amelung, M., Piotrowski, M., and Rösner, D. (2006). EduComponents. In Davoli, R., Goldweber, M., and Salomoni, P., editors, the 11th annual SIGCSE conference, page 88.

Beck, K. (2015). Test-driven development: By example. A Kent Beck signature book. Addison-Wesley, Boston, 20. printing edition.

Biella, D., Engert, S., and Huth, D. (2009). Design and Delivery of an E-assessment Solution at the University of Duisburg-Essen. In Proceedings EUNIS 2009, EUNIS Proceedings.

Birch, D. and Burnett, B. (2009). Bringing academics on board: Encouraging institution-wide diffusion of e-learning environments. Australasian Journal of Educational Technology, 25(1).

Bücking, J. (2010). eKlausuren im Testcenter der Universität Bremen: Ein Praxisbericht.

Dahinden, M. (2014). Designprinzipien und Evaluation eines reliablen CBA-Systems zur Erhebung valider Leistungsdaten.

Dahlstrom, E., Brooks, C., Grajek, S., and Reeves, J. (2015). Undergraduate Students and IT.

Deutsch, T., Herrmann, K., Frese, T., and Sandholzer, H. (2012). Implementing computer-based assessment – A web-based mock examination changes attitudes. Computers & Education, 58(4):1068–1075.

Eilers, B. (2006). Entwicklung eines integrierbaren Systems zur computergestützten Lernfortschrittskontrolle im Hochschulumfeld.

Fluck, A., Pullen, D., and Harper, C. (2009). Case study of a computer based examination system. Australasian Journal of Educational Technology, 25(4).

Foster, D. and Layman, H. (2013). Online Proctoring Systems Compared.

Frank, A. J. (2010). Dependable distributed testing: Can the online proctor be reliably computerized? In Marca, D. A., editor, Proceedings of the International Conference on E-Business. SciTePress, S.l.

Frankl, G., Schartner, P., and Zebedin, G. (2012). Secure online exams using students’ devices. In 2012 IEEE Global Engineering Education Conference (EDUCON), pages 1–7.

Free Software Foundation (2007). GNU GENERAL PUBLIC LICENSE.

German Bundestag (2012). Basic Law for the Federal Republic of Germany.

Gruttmann, S., Böhm, D., and Kuchen, H. (2008). E-assessment of Mathematical Proofs: Chances and Challenges for Students and Tutors. In 2008 International Conference on Computer Science and Software Engineering, pages 612–615.

Hochschulforum Digitalisierung (2016). The Digital Turn: Hochschulbildung im digitalen Zeitalter.

Intel (2011). Benefits of Client-Side Virtualization.

Jara, N. and Molina Madrid, M. (2015). Bewertungsschema für eine abgestufte Bewertung von Programmieraufgaben. In Pongratz, H., editor, DeLFI 2015 - die 13. E-Learning Fachtagung Informatik der Gesellschaft für Informatik e.V, GI-Edition Lecture Notes in Informatics Proceedings, pages 233–240. Ges. für Informatik, Bonn.

Krathwohl, D. R. (2002). A Revision of Bloom’s Taxonomy: An Overview. Theory Into Practice, 41(4):212–218.

Küppers, B. and Schroeder, U. (2016). BRING YOUR OWN DEVICE FOR E-ASSESSMENT - A REVIEW. In Gómez Chova, L., López Martínez, A., and Candel Torres, I., editors, International Conference on Education and New Learning Technologies, EDULEARN proceedings, pages 8770–8776. IATED.

Luecht, R. M. and Sireci, S. G. (2011). A Review of Models for Computer-Based Testing: Research Report 2011-2012.

Microsoft (2010). VDI TCO Analysis for Office Worker Environments.

Microsoft (2014). Deploying Microsoft Virtual Desktop Infrastructure Using Thin Clients: Business Case Study.

Politze, M., Schaffert, S., and Decker, B. (2016). A secure infrastructure for mobile blended learning applications. In Proceedings EUNIS 2016, volume 22 of EUNIS Proceedings, pages 49–56.

Poll, H. (2015). Student Mobile Device Survey 2015: National Report: College Students.

Ramos, J., Trenas, M. A., Gutiérrez, E., and Romero, S. (2013). E-assessment of Matlab assignments in Moodle: Application to an introductory programming course for engineers. Computer Applications in Engineering Education, 21(4):728–736.

Rößling, G., Korhonen, A., Oechsle, R., Iturbide, J. Á. V., Joy, M., Moreno, A., Radenski, A., Malmi, L., Kerren, A., Naps, T., Ross, R. J., and Clancy, M. (2008). Enhancing learning management systems to better support computer science education. ACM SIGCSE Bulletin, 40(4):142.

Søgaard, T. M. (2016). Mitigation of Cheating Threats in Digital BYOD exams.

Stifterverband für die Deutsche Wissenschaft e.V. (2016). Fellowships für Innovationen in der Hochschullehre.

Terzis, V. and Economides, A. A. (2011). The acceptance and use of computer based assessment. Computers & Education, 56(4):1032–1044.

Themengruppe ’Innovationen in Lern- und Prüfungsszenarien’ (2015). E-Assessment als Herausforderung: Handlungsempfehlungen für Hochschulen.

Vogt, M. and Schneider, S. (2009). E-Klausuren an Hochschulen: Didaktik - Technik - Systeme - Recht - Praxis.

Walker, R. and Handley, Z. (2016). Designing for learner engagement with computer-based testing. Research in Learning Technology, 24(0):88.

Wang, F. and Hannafin, M. J. (2005). Design-based research and technology-enhanced learning environments. Educational Technology Research and Development, 53(4):5–23.

Willige, J. (2016). Auslandsmobilität und digitale Medien: Arbeitspapier Nr. 23.

Winkley, J. (2010). E-assessment and innovation.