An Ontological Model for Assessment Analytics

Azer Nouira, Lilia Cheniti-Belcadhi and Rafik Braham

PRINCE Research Lab ISITCom, H- Sousse, Sousse University, Sousse, Tunisia

Keywords: Learning Analytics, Learning Analytics Models, Assessment Analytics, Ontology.

Abstract: There is growing interest in data and analytics in learning environments, which has produced a substantial body of research on models, methods, tools and technologies. This research area is referred to as learning analytics. Because metadata has become an important component of e-learning systems, many learning analytics models are currently being developed; they use metadata to tag learning materials, learning resources and learning activities. In this paper, we first give a detailed inspection of the existing learning analytics models in the literature. We observe in particular that there is a lack of models dedicated to representing and analyzing assessment data. Our objective is therefore to propose an assessment analytics model inspired by the Experience API data model. To that end, we develop an assessment analytics ontology that supports the analysis of assessment data by tracking the assessment activities, assessment results and assessment context of the learner.

1 INTRODUCTION

Learning analytics (LA) analyses the educational data derived from student interaction with learning environments such as LMSs (Learning Management Systems) and MOOCs (Massive Open Online Courses), which generate large amounts of data (Big Data). In the literature, many definitions have been proposed for the term learning analytics. For example, according to (Siemens., 2011), learning analytics is the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs. The majority of learning analytics definitions share a particular emphasis on converting educational data into useful actions to improve the learning process (Lukarov et al., 2014).

Learner modeling is a key task in the emerging research area of learning analytics (LA). A learner model represents information about a learner's characteristics, states and activities, such as knowledge, motivation and attitudes. In this research work, we investigate the existing learning analytics models by specifying some of their characteristics. These models focus essentially on modeling learning data (traces). However, learning environments generate other types of educational data as well, including assessment data and communication data. Assessment is one of the major steps in the learning process, so assessment traces must be modeled as carefully as learning traces in order to build a flexible and correct assessment model that can support the analysis of assessment data. Our research questions can be summarized in two major questions:

How can we model assessment data to build a flexible assessment analytics model?

Which kinds of assessment data should be represented in our assessment analytics model?

This paper is structured as follows. In Section 2, we identify the best-known learning analytics models and detail some of their features. In Section 3, we explore the concept of assessment analytics. In Section 4, we present our assessment analytics scenario together with its analysis and requirements, then describe the assessment analytics process. Finally, in Sections 5 and 6, we present our proposed ontological data model for assessment analytics and describe it semantically.

2 RELATED WORK

Currently, there are various data representation formats for usage data, and they focus on the

Nouira, A., Cheniti-Belcadhi, L. and Braham, R.

An Ontological Model for Assessment Analytics.

DOI: 10.5220/0006284302430251

In Proceedings of the 13th International Conference on Web Information Systems and Technologies (WEBIST 2017), pages 243-251

ISBN: 978-989-758-246-2

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


user's activities. User activities and their usage of learning data objects in the different learning environments are called usage metadata. Researchers have proposed different models to characterize usage data across learning systems. Based on this model-based data representation, learning analytics can perform different analyses and provide personalized and meaningful information to improve the learning process. (Niemann et al., 2012; Lukarov et al., 2014) present in their work the most commonly used data model representations, such as CAM (Contextualized Attention Metadata) (Schmitz et al., 2012), Activity Streams (Snell et al., 2015), Learning Registry Paradata (Paradata specification., 2011) and NSDL Paradata (NSDL's TSPE., 2012).

2.1 CAM (Contextualized Attention Metadata)

The CAM model (Schmitz et al., 2012) makes it possible to monitor user interactions with learning environments. This model focuses on the event itself rather than on the user or the data object. Each event is therefore assigned several attributes, such as its id, the event type, the timestamp and a sharing level reference. Each entity and each session can be described in a different and suitable way, and no information is duplicated. Each event can take place within a session. The information can be stored in different formats, such as XML, RDF or JSON, or in a relational database. The literature contains several studies on CAM. (Najjar et al., 2006) discuss how CAM enables the collection of rich usage data to enhance user models, predict usage patterns and feed personalization. (Ochoa and Duval., 2006) provide a study showing how CAM can be used to rank and recommend learning objects. (Wolpers et al., 2007) propose a CAM framework that is able to capture observations about user activities with digital content from different applications such as web browsers, multimedia players, LMSs, etc.

2.2 Activity Streams

Activity Streams (Snell et al., 2015) is a data format for encoding and transferring activity/event metadata, published in 2011. An Activity Stream is a collection of one or more individual activities carried out by users. Each activity comprises a number of attributes such as verbs, ids and contents. An activity has three main properties: the actor, the object and the target. Many social networks, such as Facebook, use Activity Streams to store and manage users' activities. Some learning management systems (e.g. Canvas) are also starting to develop analytics tools that generate activity stream profiles for learners and teachers.

2.3 Learning Registry Paradata

Learning Registry Paradata (Paradata specification., 2011) is an extended or modified version of Activity Streams for storing aggregated usage information about resources, such as description, measure and date. The three main elements of Learning Registry Paradata are actor, verb and object. The verb refers to a learning action, about which detailed information can be stored. It is important here to clarify the difference between metadata and paradata. Metadata describes what a resource is, while paradata records how the resource is being used. The Learning Registry is metadata agnostic: it adds paradata about how a resource is used, reused, adapted, contextualized, tweeted, shared, etc. Paradata thus complements metadata by providing an additional layer of contextual information.

2.4 Learning Context Data Model with Interest

The Learning Context Data Model with interest (LCDM) (Thus et al., 2015) mainly describes the learner's activities and the characteristics of the learning environment. It is based on the CAM representation. According to (Thus et al., 2015), this data model addresses two points: the first is which types of learning activities should be filtered, and the second is how to maintain the semantics of context information. The interest extension of LCDM takes into account the weights of the interests as well as their evolution over time. The authors follow an iterative approach to develop a new version of LCDM that accommodates interests, because context and interests are important features of the lifelong learner model.

2.5 The Experience API (xAPI)

xAPI, also called the Tin Can API, was developed by the Advanced Distributed Learning Initiative (ADL) (Experience API Working Group, 2013) and aims to define a data model for logging data about students' learning paths (Kelly and Thorn., 2013). The xAPI presents a flexible data model for

WEBIST 2017 - 13th International Conference on Web Information Systems and Technologies


logging data about the learner's experience and performance. The xAPI specification is well suited to learning analytics, since it tracks and stores the experience and performance of the learner (learning traces).

The xAPI specification consists of two main parts. The first is the format of the learning activity statement and the second is the Learning Record Store (LRS). The LRS is the component responsible for storing and exchanging learning activity traces, which are represented as activity statements. The activity statement is a key part of the xAPI data model. All learning activities are stored as statements of the form "I did this", that is, actor, verb and object, which can be extended with optional properties such as result and context.
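As a minimal sketch of the statement format described above, the following Python snippet builds an actor, verb, object statement with the optional result and context properties as a plain dictionary. Only the overall structure follows the xAPI statement format; the learner e-mail address, the activity identifier and the platform name are hypothetical values chosen for illustration.

```python
import json

# Hypothetical identifiers; only the overall structure follows the xAPI statement format.
statement = {
    "actor": {"name": "Peter", "mbox": "mailto:peter@example.com"},
    "verb": {
        "id": "http://adlnet.gov/expapi/verbs/completed",
        "display": {"en-US": "completed"},
    },
    "object": {"id": "http://example.com/activities/quiz_1"},
    # Optional properties
    "result": {"score": {"scaled": 0.75}, "success": True, "completion": True},
    "context": {"platform": "Example MOOC", "language": "en"},
}


def summarize(stmt):
    """Render the 'I did this' reading of a statement."""
    verb = stmt["verb"]["display"]["en-US"]
    return f'{stmt["actor"]["name"]} {verb} {stmt["object"]["id"]}'


print(summarize(statement))
print(json.dumps(statement["result"]))
```

A statement of this shape is what an LRS would receive and store; the result and context entries can be omitted without invalidating the actor, verb, object core.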

The xAPI specification is flexible. Beyond web-based formal learning, xAPI is capable of tracking informal learning, social learning and real-world experiences. Examples of learning activities that can be tracked include reading an article, watching a training video or having a conversation with a mentor. As a result, the LRS stores various statements concerning content views, video consumption and assessment results. The data stored in the Learning Record Store (LRS) can then be accessed and queried to provide different services, such as a statistical service, a reporting service, an assessment service and semantic analysis.
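To illustrate how a service could be built on top of stored statements, the sketch below computes a simple statistic over an in-memory list that stands in for an LRS. This is an assumption made for brevity: a real LRS is queried through its own interface, and the flattened statement layout and the function name `mean_score_per_object` are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# In-memory stand-in for an LRS (assumption); statements are flattened for brevity.
lrs = [
    {"actor": "Peter", "verb": "watched", "object": "video_1", "result": {}},
    {"actor": "Peter", "verb": "completed", "object": "quiz_1", "result": {"score": 0.75}},
    {"actor": "Jane", "verb": "completed", "object": "quiz_1", "result": {"score": 0.5}},
    {"actor": "Jane", "verb": "read", "object": "article_1", "result": {}},
]


def mean_score_per_object(statements):
    """Statistical service: average score of every object that has scored statements."""
    scores = defaultdict(list)
    for stmt in statements:
        score = stmt["result"].get("score")
        if score is not None:
            scores[stmt["object"]].append(score)
    return {obj: mean(values) for obj, values in scores.items()}


print(mean_score_per_object(lrs))
```

Statements without a score, such as the video and article views, are simply ignored by this service, which shows why assessment-specific fields matter for assessment-oriented queries.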

xAPI has been widely implemented, and the literature contains several research works on learning analytics that use it. For instance, (Kitto et al., 2015) present a solution for learning analytics beyond the LMS, the Connected Learning Analytics (CLA) toolkit, which enables data to be extracted from social media such as Google+, Twitter and Facebook and imported into a Learning Record Store (LRS) as defined by the xAPI standard. Other works can be found in (Brouns et al., 2014), (Corbi and Burgos, 2014) and (Del Blanco et al, 2013).

All the data models mentioned above are learning analytics centric; that is, they focus on how best to represent learning activities, users and data objects. These models are used for analytical purposes to improve the quality of the learning process. However, learning environments generate other types of educational data as well, including assessment data and communication data. Assessment is one of the major steps in the learning process, so assessment data must also be tracked and processed.

According to our research, there is a lack of models that focus on assessment analytics, that is, models concerned with assessment data. Among the learning analytics data models detailed above, the only one that can support the analysis of assessment data is the Tin Can API (xAPI), since its statement format contains an optional property named result that records information about the assessment result. However, xAPI is presented as an e-learning standard for interoperable data tracking across the whole learning process and does not focus particularly on assessment. Moreover, during our research we did not find any paper that focuses on tracking assessment data with the xAPI specification. Is this due to a weakness of the xAPI standard in tracking assessment data? When we investigated the optional result property, we noticed that it is described by several metadata that record information about the assessment result, such as the score, the success, the completion, the duration and the response. All these assessment results are very important but, in our view, they are insufficient and need to be extended and annotated so that a richer set of assessment results is tracked for later assessment analytics. The investigation of the context property of the xAPI data model leads us to conclude that its context metadata are not related to the assessment context. In fact, all of them represent information about the context of the learning activity, such as the instructor and the team that the statement is related to, the platform used, the language of the recorded statement, etc. No information is recorded about the context of the assessment, such as the type, the form and the technique of assessment.

Our contribution addresses the weaknesses of the existing xAPI data model with respect to assessment data, which can be summarized in two major points:

Insufficient information for tracking the assessment result.

Lack of information about the assessment context.

3 ASSESSMENT ANALYTICS

One of the most important steps in the learning process is assessment; a successful learning environment must provide effective assessment of learners. Assessment is both ubiquitous and very meaningful as far as students and teachers are concerned (Ellis., 2013). New learning environments such as MOOCs now generate large volumes of assessment data (Big Data), given the massive number of courses offered and the great number of learners enrolled. These assessment data must also be processed and analyzed.

Focusing on assessment data, that is, studying the assessment activities and the assessment results left by learners, opens up a new source of data that can be analyzed to yield new and different indicators, which can in turn be interpreted and contribute to the improvement of the field of learning analytics. This research area is called assessment analytics. According to (Ellis., 2013), the role that assessment analytics could play in the learning process is significant, yet it is underdeveloped and underexplored. Assessment analytics has the potential to make a valuable contribution to the field of learning analytics by extending its scope and increasing its usefulness. Assessment analytics is the analytics of assessment data within a learning analytics strategy.

(Cooper., 2015) offers a definition of assessment analytics that is based on the learning analytics definition of (Siemens., 2011) with a slight modification: assessment analytics is the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments from which the assessment data derives.

This paper addresses this open research and development topic by proposing an assessment analytics model.

4 ASSESSMENT ANALYTICS SCENARIO

4.1 Assessment Analytics Scenario Description

This section illustrates the context of our research

using a use case scenario that includes an assessment

analytics scenario. Let’s consider the following

situation:

Peter is very passionate about computer science, so he decides to register in a MOOC environment to study a course on object-oriented modeling. After signing up, Peter can start the learning process. Peter performs a set of different learning activities when he starts interacting with the learning content, such as watching course videos, reading texts, commenting, etc. Peter is then invited to start the assessment stage (formative assessment, summative assessment or diagnostic assessment; in the last case it takes place before the learning step). A set of assessment activities is thus triggered, such as answering, completing, failing and scoring, during the interaction with quizzes or MCQs (multiple choice questions). The MOOC environment that Peter uses must keep track of every activity performed by this learner, including both the learning activities and the assessment activities.

In our case, we focus only on the assessment data, namely the assessment activities and the assessment results left by Peter. Assessment data will be modeled and analyzed properly using an analytics strategy, then interpreted to improve the quality of the learning process and to identify opportunities for feedback, intervention, adaptation, recommendation, personalization, etc.

4.2 Assessment Analytics Scenario Analysis

From the above scenario, we can identify the following challenges.

We suppose here that the learning standard chosen for interoperable data tracking is xAPI; hence all learning and assessment activities are stored in the LRS (Learning Record Store).

Since our objective here is to analyze the assessment activities, we focus only on the set of assessment activities stored in the LRS.

Here is an example of a set of assessment activities stored in the LRS for the learner Peter:

1. Peter attempted quiz_1
2. Peter completed the quiz_1 with a passing score 75%
3. Peter passed the quiz_1
4. Peter completed the quiz_1 with completion false
5. Peter completed the quiz_1 in 6 minutes
6. Peter attempted quiz_2
7. Peter completed the quiz_2 with a passing score 15%
8. Peter failed the quiz_2
9. Peter attempted quiz_2
10. …
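The statements listed above can already support simple analytics. The following sketch encodes a subset of them as simplified (actor, verb, object, result) tuples and derives two indicators: the number of attempts per quiz and the quizzes the learner failed. The tuple layout and the function names are hypothetical and chosen only for illustration.

```python
from collections import Counter

# Simplified (actor, verb, object, result) tuples mirroring part of the example list.
statements = [
    ("Peter", "attempted", "quiz_1", {}),
    ("Peter", "completed", "quiz_1", {"score": 0.75}),
    ("Peter", "passed", "quiz_1", {}),
    ("Peter", "attempted", "quiz_2", {}),
    ("Peter", "completed", "quiz_2", {"score": 0.15}),
    ("Peter", "failed", "quiz_2", {}),
    ("Peter", "attempted", "quiz_2", {}),
]


def attempts_per_quiz(stmts, learner):
    """Count how many times a learner attempted each quiz."""
    return Counter(obj for actor, verb, obj, _ in stmts
                   if actor == learner and verb == "attempted")


def failed_quizzes(stmts, learner):
    """Quizzes with a 'failed' statement; candidates for feedback or intervention."""
    return sorted({obj for actor, verb, obj, _ in stmts
                   if actor == learner and verb == "failed"})


print(attempts_per_quiz(statements, "Peter"))
print(failed_quizzes(statements, "Peter"))
```

Note that nothing in these statements says what kind of assessment quiz_2 was or in which environment it took place, which is the gap the context metadata discussed next is meant to fill.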

To ensure a consistent assessment analytics engine, it is necessary to track and manage a set of metadata related to assessment activities and results. The assessment results tracked by the xAPI specification seem insufficient and need to be annotated further. Besides the assessment activities and the assessment results, it is also necessary to track and manage assessment context data such as the assessment types, forms and techniques, and the assessment environment and session. Here is an example of the metadata values, corresponding to the different assessment context data, that can be recorded by our proposed assessment analytics model:

Environment: MOOC

Assessment Form: Formative Assessment

Assessment Type: Automated Assessment

Assessment Technique: Closed question format: MCQ

Session: the date and time of logging

ID: a unique number given to each assessment activity
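The context metadata above can be sketched as a small typed record. The enumerations below follow the ontology classes introduced in Section 5, where the assessment form is diagnostic, formative or summative and the assessment type is automated, self or peer assessment. The Python class and field names, as well as the session date, are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from enum import Enum


class Environment(Enum):
    MOOC = "MOOC"
    LMS = "LMS"
    PLE = "PLE"


class AssessmentForm(Enum):
    DIAGNOSTIC = "Diagnostic"
    FORMATIVE = "Formative"
    SUMMATIVE = "Summative"


class AssessmentType(Enum):
    AUTOMATED = "Automated"
    SELF = "Self assessment"
    PEER = "Peer assessment"


class Technique(Enum):
    MCQ = "MCQ"
    MRQ = "MRQ"
    TRUE_FALSE = "T/F"
    FILL_IN_BLANKS = "Fill in Blanks"


@dataclass(frozen=True)
class AssessmentContext:
    activity_id: int            # unique number of the assessment activity
    environment: Environment
    form: AssessmentForm
    assessment_type: AssessmentType
    technique: Technique
    session: datetime           # date and time of logging


# Illustrative values only; the session date is made up.
context = AssessmentContext(
    activity_id=1,
    environment=Environment.MOOC,
    form=AssessmentForm.FORMATIVE,
    assessment_type=AssessmentType.AUTOMATED,
    technique=Technique.MCQ,
    session=datetime(2017, 1, 1, 10, 0),
)
print(context)
```

Using closed enumerations rather than free text keeps the recorded values comparable across activities, which is the same motivation as the enumerated classes in the ontology.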

All these assessment data must be modeled in an appropriate format that supports analytics, so that they can help provide users with efficient personalization and adaptation services. An important question now arises: how can we model assessment data to build a correct and complete assessment analytics model?

5 ONTOLOGICAL MODEL FOR ASSESSMENT ANALYTICS

Our objective in this section is to propose an assessment analytics model dedicated to representing assessment data, namely the assessment activity, the assessment result and the assessment context. The model is inspired by the xAPI data model specification and is based on the data derived from assessment. It makes it possible to monitor user interaction with assessment resources. According to (Moody., 1998), there are eight general quality requirements for data models: completeness, correctness, integrity, flexibility, understandability, simplicity, integration and implementability.

To ensure a consistent representation of our

proposed model for assessment analytics, it will be

interesting to develop an ontological model for

assessment analytics considering the several

advantages given by the use of ontologies like

improving reusability and interoperability,

aggregation of the scattered data in the web,

permitting inferences and contribute coherence and

consistency rules. The proposed model is called

assessment analytics ontology (AAO). In order to

develop our ontology, we follow the most important

steps detailed in (Noy and McGuiness., 2005), the

first step is to enumerate the most important terms in

our ontology through specification of classes such

as: assessment type, assessment statement, etc. Then

it’s necessary to define the classes and the class

hierarchy. After that, we need to define the class

properties and attributes and finally determine the

facets of attributes. To develop our AAO model,

several tools are available and can be used such as

SWOOP, Protégé and WebOnto. In our case, we

used Protégé that offers a simple, complete and

expressive graphical formalism. It also facilitates the

design activity. All the figures below are designed

with VOWL (Lohmann and al., 2014) plug-in,

which can be integrated very easily to protégé and

used to represent graphically the different types of

properties such as object properties, data type

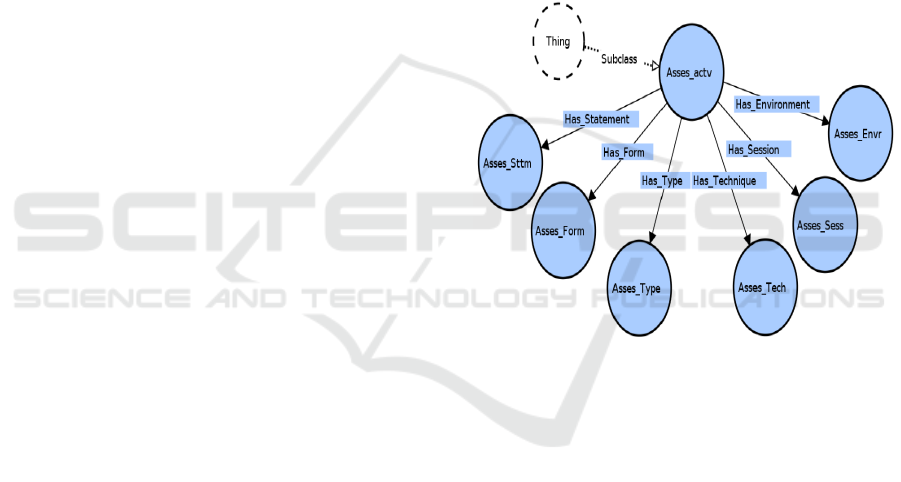

properties and subclasses relations. Figure 2 below

shows a graphical representation of our assessment

analytics ontology with the tool Protégé.

Figure 1: Graphical representation of assessment analytics

ontological model.

The developed ontology gives us the opportunity to model the main concepts of our assessment analytics model in terms of classes and their hierarchy. The main class of our assessment analytics ontology is the assessment activity class. This class is linked by has-a relations to a set of classes describing the assessment activity context metadata and the assessment activities. For instance, we cite the assessment environment class and its possible instances, such as MOOC (Massive Open Online Course), LMS (Learning Management System) or PLE (Personal Learning Environment). Technically, it can be described using the OWL enumeration element owl:oneOf.

<owl:Class rdf:ID="Asses_Envr">
  <owl:oneOf rdf:parseType="Collection">
    <owl:Thing rdf:about="#MOOC"/>
    <owl:Thing rdf:about="#LMS"/>
    <owl:Thing rdf:about="#PLE"/>
  </owl:oneOf>
</owl:Class>
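As a quick check that an enumeration of this kind is well-formed and machine-readable, the sketch below parses a self-contained RDF/XML version of the assessment environment class with Python's standard XML parser and lists its individuals. The enclosing rdf:RDF element with its namespace declarations is an assumption added so that the fragment parses on its own; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

OWL = "http://www.w3.org/2002/07/owl#"
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"

# The rdf:RDF wrapper and namespace declarations are added for self-containment.
DOC = f"""<rdf:RDF xmlns:rdf="{RDF}" xmlns:owl="{OWL}">
  <owl:Class rdf:ID="Asses_Envr">
    <owl:oneOf rdf:parseType="Collection">
      <owl:Thing rdf:about="#MOOC"/>
      <owl:Thing rdf:about="#LMS"/>
      <owl:Thing rdf:about="#PLE"/>
    </owl:oneOf>
  </owl:Class>
</rdf:RDF>"""


def enumerated_individuals(rdf_xml, class_id):
    """Return the individuals listed in the owl:oneOf of the given class."""
    root = ET.fromstring(rdf_xml)
    for cls in root.iter(f"{{{OWL}}}Class"):
        if cls.get(f"{{{RDF}}}ID") == class_id:
            return [thing.get(f"{{{RDF}}}about").lstrip("#")
                    for thing in cls.iter(f"{{{OWL}}}Thing")]
    return []


print(enumerated_individuals(DOC, "Asses_Envr"))
```

This only inspects the XML serialization; it does not perform any OWL reasoning, for which a dedicated ontology toolkit would be needed.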

The second class is the assessment session class that

contains information about the assessment session

such as activity id and the date of logging as

datatype properties. It serves to ensure that no

information is duplicated.

We also have the assessment technique class and its possible instances, such as MCQ (Multiple Choice Question), MRQ (Multiple Response Question), T/F (True or False question) and Fill in Blanks question.

<owl:Class rdf:ID="Asses_Tech">
  <owl:oneOf rdf:parseType="Collection">
    <owl:Thing rdf:about="#MCQ"/>
    <owl:Thing rdf:about="#MRQ"/>
    <owl:Thing rdf:about="#T/F"/>
    <owl:Thing rdf:about="#Fill_in_Blanks"/>
  </owl:oneOf>
</owl:Class>

The third class is the assessment form class and its three possible instances: the diagnostic assessment, the formative assessment and the summative assessment.

<owl:Class rdf:ID="Asses_Form">
  <owl:oneOf rdf:parseType="Collection">
    <owl:Thing rdf:about="#Diagnostic"/>
    <owl:Thing rdf:about="#Formative"/>
    <owl:Thing rdf:about="#Summative"/>
  </owl:oneOf>
</owl:Class>

Then, we have the assessment type class that may

consist of automated assessment, self assessment or

peer assessment.

<owl:Class rdf:ID="Asses_Type">
  <owl:oneOf rdf:parseType="Collection">
    <owl:Thing rdf:about="#Automated"/>
    <owl:Thing rdf:about="#Peer_assessment"/>
    <owl:Thing rdf:about="#Self_assessment"/>
  </owl:oneOf>
</owl:Class>

It is important to mention that each assessment activity has exactly one value for each of the properties has_Environment, has_session, has_form, has_type and has_technique. Technically, we can express this with the cardinality constraint owl:cardinality.

<owl:Restriction>
  <owl:onProperty rdf:resource="#Has_Form"/>
  <owl:cardinality rdf:datatype="&xsd;nonNegativeInteger">1</owl:cardinality>
</owl:Restriction>
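The intent of such a cardinality constraint can be illustrated with a simple closed-world check in Python. This is only a sketch: an OWL reasoner works under the open-world assumption and would not treat a missing value as a violation in the same way. The dictionary layout and the function name are hypothetical.

```python
# Properties for which exactly one value is expected per assessment activity.
EXACTLY_ONE = ("has_Environment", "has_session", "has_form", "has_type", "has_technique")


def cardinality_violations(activity):
    """Return the properties that do not carry exactly one value.

    `activity` maps a property name to the list of values asserted for it.
    """
    return [prop for prop in EXACTLY_ONE if len(activity.get(prop, [])) != 1]


valid = {
    "has_Environment": ["MOOC"],
    "has_session": ["session_1"],
    "has_form": ["Formative"],
    "has_type": ["Automated"],
    "has_technique": ["MCQ"],
}
# Two forms and no type: both properties break the "exactly one" rule.
invalid = dict(valid, has_form=["Formative", "Summative"], has_type=[])

print(cardinality_violations(valid))
print(cardinality_violations(invalid))
```

A check like this could run before statements are stored, so that incomplete context metadata is detected early rather than at analysis time.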

All these metadata are very helpful for enriching our

assessment analytics model. They are useful later in

the stage of analytics.

Finally, we have the most important class and the core of our ontological model, the assessment statement class, which is used to represent the assessment experience of the learner. The assessment statement class of the proposed ontology is able to capture and formulate sentences of the form: Peter completed the quiz with a passing score of 80%, Jane completed the quiz in 10 minutes, and Daniel failed the quiz with 20%. Each assessment activity therefore has at least one assessment statement.

<owl:Restriction>
  <owl:onProperty rdf:resource="#Has_Statement"/>
  <owl:minCardinality rdf:datatype="&xsd;nonNegativeInteger">1</owl:minCardinality>
</owl:Restriction>

In the next section, we describe in detail the different properties and datatype properties of the assessment statement class of our proposed ontological assessment analytics model.

6 SEMANTIC DESCRIPTION OF THE ONTOLOGICAL ASSESSMENT ANALYTICS MODEL (OAA)

In this section we will present the semantic

description of some classes of our ontological model

for assessment analytics.

6.1 Assessment Statement Class

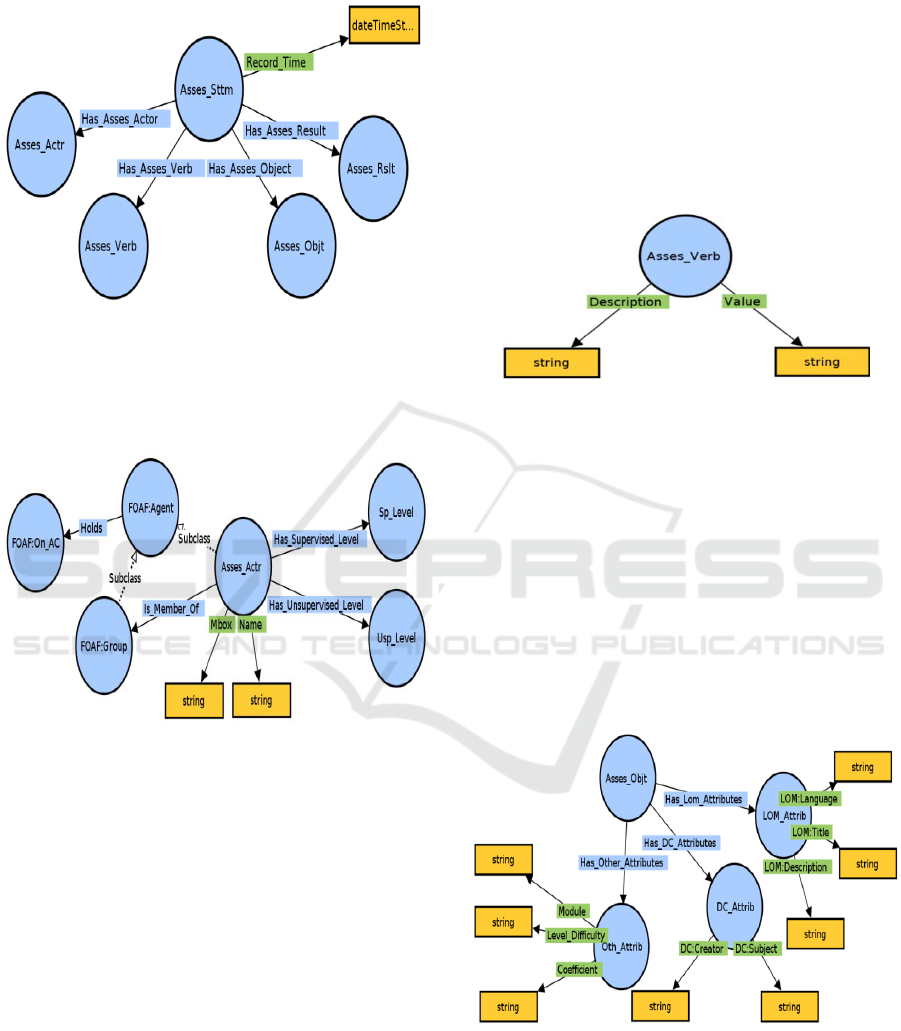

From Figure 2 below, we can observe that an assessment statement has four required properties, in accordance with its format, as in: Peter failed the quiz with 20%. From this example we can extract the four properties: the actor, the verb, the object and the result. Let us begin with the actor property, which answers the question "who?", e.g. Peter, that is, the learner in our context. The second is the verb property, a key part of an assessment analytics sentence; it describes the action performed by the learner when interacting with an assessment resource. Examples of assessment verbs include answered, completed and scored.

The third property is the object, which forms the third part of the statement and refers to what was experienced in the action defined by the verb, e.g. the quiz. Finally, we have one of the most important properties for the assessment analytics engine, the result property, which records information about the assessment result such as score, completion and duration. In addition, we can annotate the assessment statement class more thoroughly with datatype properties such as the time at which the assessment statement was recorded in the LRS (Learning Record Store).

Figure 2: The semantic description of the assessment

statement model.
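The four required properties of an assessment statement can be sketched as a small Python data structure. The class and field names are hypothetical and only mirror the actor, verb, object and result properties described above; the verb carries a value and a language, and the result is reduced to three of its fields for brevity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Verb:
    value: str             # e.g. "failed"
    language: str = "en"   # language of the verb value


@dataclass
class Result:
    score: Optional[float] = None             # scaled to [0, 1]
    completion: Optional[bool] = None
    duration_minutes: Optional[float] = None


@dataclass
class AssessmentStatement:
    actor: str      # who performed the action (the learner)
    verb: Verb      # the action performed
    object: str     # what the action was performed on
    result: Result  # outcome of the assessment

    def sentence(self) -> str:
        """Render the statement in its sentence form."""
        text = f"{self.actor} {self.verb.value} the {self.object}"
        if self.result.score is not None:
            text += f" with {self.result.score:.0%}"
        return text


stmt = AssessmentStatement("Peter", Verb("failed"), "quiz", Result(score=0.20))
print(stmt.sentence())
```

Keeping the result as its own structure makes it straightforward to extend it later with further assessment-specific fields without touching the actor, verb and object parts.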

6.1.1 Semantic Network of Agent Model

Figure 3: The semantic description of the actor model.

As shown in Figure 3, the assessment actor class is described using classes of the FOAF ontology (Brickley and Miller., 2014), an ontology that describes individuals, their activities and their relations with other people, in order to define agents and groups. The assessment actor is a subclass of FOAF:Agent and is a member of FOAF:Group, which is also a subclass of FOAF:Agent. Each assessment actor holds an online account, which represents the provision of some form of online service. Each assessment actor has two different types of level. The first is the unsupervised level, which is the level of the learner before starting the assessment process. The second is the supervised level, which is the real, concrete level of the learner in terms of his performance in the assessment process; it is identified automatically by analyzing the assessment traces of the learner.

6.1.2 Semantic Network of Verb Model

The verb class describes the action performed during the learning experience and, more specifically, the assessment experience. Figure 4 below shows that the verb class is described by two attributes: the language of the verb and the value of the verb. The assessment verb is an important class of this model. Its possible instances are the verbs related to the assessment experience, such as scored, failed and passed.

Figure 4: The semantic description of the verb model.

6.1.3 Semantic Description of Assessment Object Model

The assessment object can be annotated and described using the standards LOM (Learning Object Metadata) (IEEE Learning Technology Standards Committee., 2002) and Dublin Core (The Dublin Core Metadata Initiative., 2001), and can be described more thoroughly by other attributes such as the coefficient, the module and the level of difficulty of each assessment object.

Figure 5: The semantic description of the assessment

object model.


6.1.4 Semantic Description of the Assessment Result Model

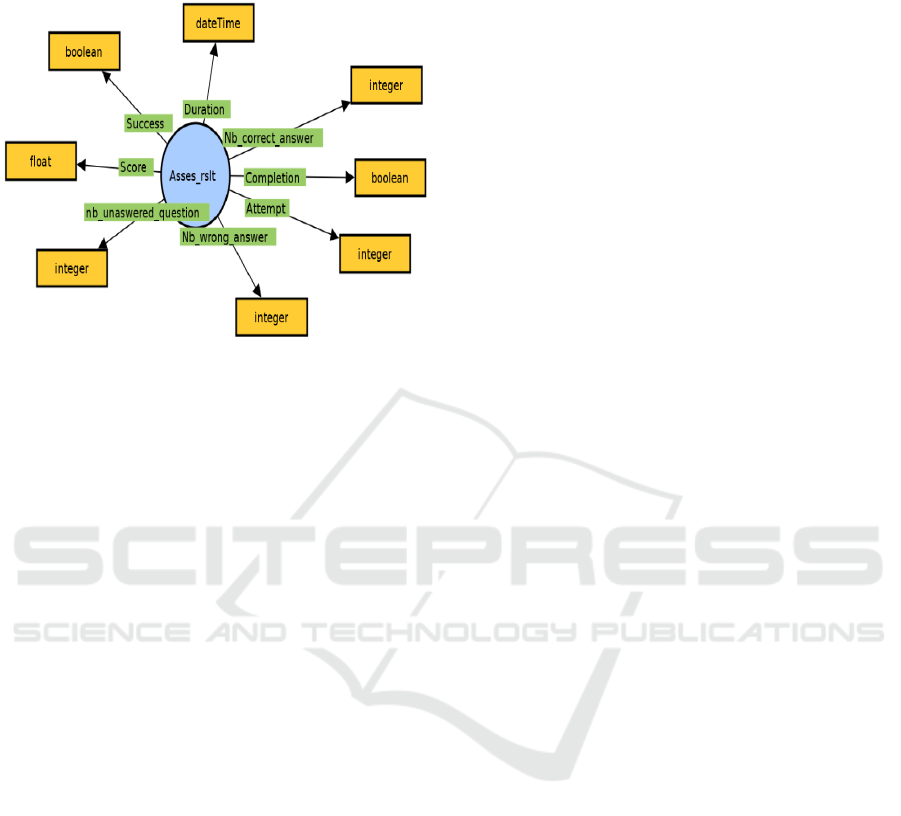

Figure 6: The semantic description of the assessment

result model.

The assessment result class is one of the main classes in our assessment analytics model, since it contains several datatype properties that record information about assessment results. Some, such as score, completion, duration and success, are inspired by the xAPI specification; others are additional assessment result metadata that we propose, such as the attempt, the number of correct answers, the number of wrong answers and the number of unanswered questions. The assessment result class should be described with rich metadata. This information should be recorded and used, since our objective is to design a complete, correct and flexible assessment analytics model, and these attributes can be very helpful later in the analytics stage.
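The following sketch shows one way the proposed result metadata could relate to the xAPI-inspired fields. It assumes equally weighted questions and a pass mark of 50%; both are illustrative assumptions that are not part of the model, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AssessmentResult:
    attempt: int             # attempt number
    correct: int             # number of correct answers
    wrong: int               # number of wrong answers
    unanswered: int          # number of unanswered questions
    duration_minutes: float
    pass_mark: float = 0.5   # hypothetical threshold, not taken from the model

    @property
    def total(self) -> int:
        return self.correct + self.wrong + self.unanswered

    @property
    def score(self) -> float:
        """Scaled score in [0, 1], assuming equally weighted questions."""
        return self.correct / self.total if self.total else 0.0

    @property
    def completion(self) -> bool:
        """The assessment counts as complete when no question is left unanswered."""
        return self.unanswered == 0

    @property
    def success(self) -> bool:
        return self.score >= self.pass_mark


result = AssessmentResult(attempt=2, correct=15, wrong=3, unanswered=2, duration_minutes=6)
print(result.score, result.completion, result.success)
```

Recording the answer counts rather than only the final score lets later analytics distinguish, for example, a learner who answered wrongly from one who ran out of time.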

The design of our AAO model brings some advantages for future use. One of the most important advantages of using an ontology lies in its structure, which is consistent with description logic, and in its coupling with inference engines, which allows an expert system to perform logical reasoning and draw conclusions. In our case, the AAO model can be a consistent model supporting assessment analytics by allowing logical reasoning and conclusions about the assessment activities, results and context of each learner.

7 CONCLUSION AND FUTURE WORK

According to (Lukarov et al., 2014), learning analytics can perform different analyses and provide personalized and meaningful information to improve the learning and teaching process. In this paper, we presented in detail the learning analytics models existing in the literature. These models focus essentially on learning data; according to our research, there is a notable lack of models that focus on assessment data, that is, on data derived from assessment. That is why we proposed an ontological assessment analytics model (AAO) inspired by the Experience API data model. This model focuses essentially on assessment data: it tracks the assessment activities of the learner, represents them in a flexible and consistent way and finally stores them in a specific module for assessment data storage, to be accessed later for analytics purposes.

In our further work, we will try to extend our ontology with additional metadata and hence capture the semantic description needed to deduce logical conclusions for assessment analytics.

REFERENCES

Brickley, D. and Miller, L. (2014). FOAF Vocabulary Specification 0.99.

Brouns, F., Tammets, K., and Padrón-Nápoles, C. L. (2014). How can the EMMA approach to learning analytics improve employability?

Corbi, A., and Burgos, D. (2014). Review of Current Student-Monitoring Techniques used in eLearning-Focused recommender Systems and Learning analytics. The Experience API & LIME model Case Study. IJIMAI, 2(7), 44-52.

Cooper, A. (2015). Assessment Analytics. In EUNIS E-learning task force workshop.

Del Blanco, Á., Serrano, Á., Freire, M., Martínez-Ortiz, I., and Fernández-Manjón, B. (2013). E-Learning standards and learning analytics. Can data collection be improved by using standard data models? In Global Engineering Education Conference (EDUCON), 2013 IEEE (pp. 1255-1261). IEEE.

Ellis, C. (2013). Broadening the scope and increasing the usefulness of learning analytics: The case for assessment analytics. British Journal of Educational Technology, 44(4), 662-664.

Experience API Working Group (2013). Experience API.

Version 1.0.1.

IEEE Learning Technology Standards Committee (2002). Draft standard for learning object.

Snell, J., Atkins, M., Norris, W., Messina, C., Wilkinson, M., and Dolin, R. (2015). Activity Streams 2.0. W3C Working Draft, W3C. http://www.w3.org/TR/2015/WD-activitystreamscore/

Kelly, D. and Thorn, K. (2013). Should Instructional

Designers care about the Tin Can API? In eLearn, 3, 1.

Kitto, K., Cross, S., Waters, Z., and Lupton, M. (2015).

Learning analytics beyond the LMS: the connected

learning analytics toolkit. In Proceedings of the Fifth

International Conference on Learning Analytics And

Knowledge (pp. 11-15). ACM.

Lohmann, S., Negru, S., and Bold, D. (2014). The

ProtégéVOWL plugin: ontology visualization for

everyone. In European Semantic Web Conference,

395-400. Springer.

Lukarov, V., Chatti, M. A., Thus, H., Kia, F. S., Muslim, A., Greven, C., and Schroeder, U. (2014). Data Models in Learning Analytics. In DeLFI Workshops, 88-95.

Moody, D. L. (1998). Metrics for evaluating the quality of

entity relationship models. In International

Conference on Conceptual Modeling, 211-225.

Niemann, K., Scheffel, M., and Wolpers, M. (2012). An overview of usage data formats for recommendations in TEL. In 2nd Workshop on Recommender Systems for Technology Enhanced Learning, 95.

NSDL’s Technical Schema for Paradata Exchange.

(2011). Retrieved from

http://nsdlnetwork.org/stemexchange/paradata/schema.

Noy, N. F. and McGuinness, D. L. (2005). Développement

d’une ontologie 101: Guide pour la création de votre

première ontologie. Université de Stanford. Rapport

technique.

Ochoa, X. and Duval, E. (2006). Use of contextualized

attention metadata for ranking and recommending

learning objects. In Proceedings of the 1st

international workshop on Contextualized attention

metadata: collecting, managing and exploiting of rich

usage information, 9-16.

Paradata Specification v1.0. (2011). Retrieved from https://docs.google.com/document/d/1IrOYXd3S0FUwNozaEG5tM7Ki4_AZPrBn-pbyVUz-Bh0/edit?hl=en_US.

Schmitz, H. C., Wolpers, M., Kirschenmann, U., and Niemann, K. (2012). Contextualized Attention Metadata. Human Attention in Digital Environments, 186-209.

Siemens, G. (2011). What Are Learning Analytics? In 1st

international conference on Learning Analytics and

Knowledge. 2015.

The Dublin Core Metadata Initiative. (2001) DC-Library

Application Profile, Dublin Core.

Thus, H., Chatti, M. A., Brandt, R., and Schroeder, U. (2015). Evolution of Interests in the Learning Context Data Model. In Design for Teaching and Learning in a Networked World. Springer.

Wolpers, M., Najjar, J., Verbert, K., and Duval, E. (2007). Tracking actual usage: the attention metadata approach. Educational Technology & Society, 10(3), 106-121.
