The Ideomotor Principle Simulated

An Artificial Neural Network Model for Intentional Movement and Motor Learning

Neri Accornero and Marco Capozza

Neuroscience Department, Sapienza University, Viale dell’Università 30, 00185, Rome, Italy

Keywords: Ideomotor Principle, Intentional Movement, Motor Learning, Artificial Neural Network, Simulation.

Abstract: Although the ideomotor principle (IMP), the notion that the nervous system initiates voluntary actions by anticipating their sensory effects, has long been around, it still struggles to gain widespread acknowledgement. In support of this theory, we present an artificial neural network model driving a simulated arm. The model is designed as simply as possible to focus on the essential IMP features, and it demonstrates by simulation how the IMP could work in biological intentional movement and motor learning. The simulation shows that IMP motor learning is fast and effective and shares features with human motor learning. An IMP extension offers new insights into the so-called mirror neuron and canonical neuron systems.

1 INTRODUCTION

The ideomotor principle (IMP) claims that the

nervous system initiates voluntary actions by

anticipating their typical sensory consequences

(Kiesel and Hoffmann 2004, Stock and Stock 2004).

Over the past twenty years, increasing evidence favoring this theory has emerged from both behavioral studies (reviews in Wulf and Prinz, 2001; Wohlschläger et al., 2003; Shin et al., 2010; Hommel, 2013) and fMRI studies (Dayan et al., 2007; Melcher et al., 2008, 2013; Pfister et al., 2014). Unfortunately, these findings centered on individual IMP features and provided no overall view of a working IMP. Given this background,

simulations with artificial neural networks (ANN)

that demonstrate how the IMP works may lead to its

wider acknowledgement. Although few reported

simulations of this type explicitly mention IMP

(Karniel and Inbar, 1997; Sauser and Billard, 2006;

Butz et al., 2007; Galtier, 2014), many involve

IMP’s underlying rationale, namely sensorimotor

mapping. Existing simulations nevertheless provide little help in understanding the IMP because they use non-IMP procedures, such as supervised learning or complicated modularity and flowcharts, or they add complex details that make the essential IMP features even harder to understand.

In this paper we take an opposite approach: to

highlight how the IMP works and make it easy to

understand, we present an unsupervised ANN

system that is as simple and basic as possible and

learns to move a three-joint arm in a workspace

using the IMP and sensorimotor mapping. We

examine its main features and compare them with

those of human motor learning. We then suggest

how an IMP extension also offers new ways to look

at the so-called mirror neuron system.

2 METHODS

2.1 Model Design

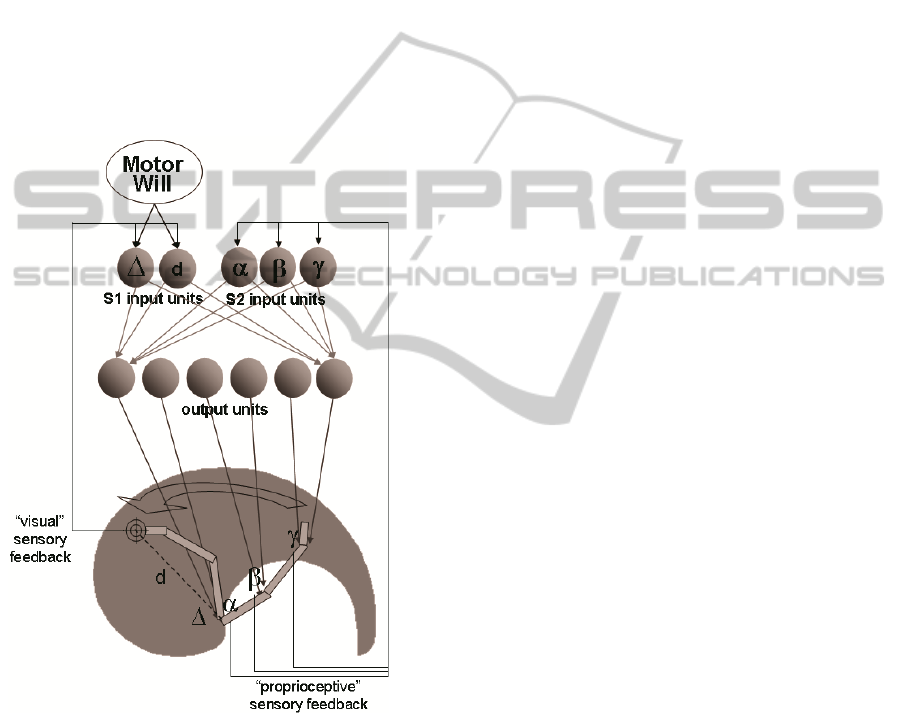

Our simulation consisted of an ANN controlling a

three-joint simulated limb moving on a two-

dimensional plane (Fig. 1). The network received on

its input units sensory information on the limb

position, and sent limb commands from its output

units. Each limb movement was defined by three

vectors: the initial sensory state (before the

movement); the final sensory state (after the

movement); and the neuromuscular activations

needed to pass from the initial to the final state. The

first two vectors were given to the ANN input units,

and the ANN had to compute the third vector on its

output units. Because IMP states that intentional

limb movements depend on anticipation of their


Accornero N. and Capozza M..

The Ideomotor Principle Simulated - An Artificial Neural Network Model for Intentional Movement and Motor Learning.

DOI: 10.5220/0005081002260233

In Proceedings of the International Conference on Neural Computation Theory and Applications (NCTA-2014), pages 226-233

ISBN: 978-989-758-054-3

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

sensory effects, the ANN input units receiving

sensory information on the final limb state (S1 units)

also received motor commands from a component

outside the network that established where the

moving hand should be positioned and therefore

acted as Motor Will. Commands from Motor Will to the ANN consisted of sensory representations of the desired final hand position, coded as visuospatial coordinates, in agreement with the observation that motion planning in humans takes place in visually perceived space (Flanagan and Rao 1995, Shadmehr 2005). Unlike S1 units, the ANN input units receiving sensory information on the initial limb state (S2 units) did not receive motor commands; they received only “proprioceptive” sensory information from the limb joint angles.

Figure 1: General architecture for the Ideomotor Principle

(IMP) simulation model. The artificial neural network

(ANN) controls a 3-joint limb moving in a two-

dimensional plane. The ANN receives sensory feedback

information on the limb and motor commands from Motor

Will. Δ, d: polar coordinates for the hand (Δ = angle with

respect to the posterior-anterior axis, d = distance from the

shoulder point). α, β, γ: shoulder, elbow, wrist joint angle.

Not all input-to-output connections are depicted; each input unit actually sends a connection to every output unit.

Because velocity information is not indispensable to the key IMP mechanism as long as the limb is assumed to start and end each movement at rest, we gave the ANN only sensory information about limb position (joint angles and spatial hand position), not velocity.

2.1.1 Limb

The limb was designed to represent a simplified

model of the human right arm comprising three

segments, “arm”, “forearm” and “hand” articulated

with three joints “shoulder”, “elbow” and “wrist”,

with the shoulder situated in a fixed point in space,

and the hand able to move freely in the reachable

space. The arm measured 70 pixels in length, the

forearm 70 pixels and the hand 20 pixels (because

the model involved a simulation displayed on a

computer screen, for simplicity lengths are given in

pixels). The three joints opened and closed within

angular limits in a similar way to a human arm: the

shoulder from 23 to 190 degrees, the elbow from 20

to 180, the wrist from -90 to 72. The overall area

reachable with the hand (grey area in Fig. 1)

therefore assumed a drop-like shape measuring 298

x 200 pixels.

The three joints were each controlled by an

agonist-and-antagonist muscle couple. Each muscle

was controlled by a neural network output unit.

Muscle actions were simulated in a simplified

manner, without recourse to spring models or

tension-length diagrams. Analog outputs from 0 to 1

from the two units acting on muscle flexion and

extension for every joint were assumed to determine

variations in joint opening or closing according to

the following equations:

$$a_{j,t+1} = a_{j,t} + \frac{e_{j,t} - f_{j,t} + p_{j,t}}{m_j} \qquad (1)$$

$$p_{j,t} = \left[ \frac{\mathrm{amax}_j + \mathrm{amin}_j}{2} - a_{j,t} \right] \cdot k_j \qquad (2)$$

where:

$a_{j,t}$ = degree of joint $j$ opening at time $t$, in radians;

$e_{j,t}$ = output (0 to 1) from the unit controlling the extensor muscle for joint $j$ at time $t$;

$f_{j,t}$ = output (0 to 1) from the unit controlling the flexor muscle for joint $j$ at time $t$;

$p_{j,t}$ = passive elastic muscle and ligament force acting on joint $j$, maximum when the joint is fully opened or closed;

$m_j$ = mass of the segment distal to joint $j$, normalized for the upper arm (1 for the upper arm, 0.6 for the forearm, 0.2 for the hand);

$\mathrm{amax}_j$ = degree of maximum opening for joint $j$, in radians (1.05 for the shoulder, 1 for the elbow, 0.4 for the wrist);

$\mathrm{amin}_j$ = degree of maximum closure for joint $j$, in radians (0.13 for the shoulder, 0.11 for the elbow, -0.5 for the wrist);

$k_j$ = intensity of the force $p_{j,t}$ for joint $j$, empirically chosen (0.5 for the elbow and shoulder, 0.14 for the wrist).
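As an illustration, equations (1) and (2) can be sketched in a few lines of Python. This is a minimal sketch rather than the authors' program: the function names are hypothetical, the parameter values are copied as listed above without any unit conversion, and clipping the result to the joint limits is an assumption, since the text states only that the joints move within angular limits.

```python
# Joint parameters in the order (shoulder, elbow, wrist), as listed in the text.
A_MAX = (1.05, 1.0, 0.4)    # maximum opening
A_MIN = (0.13, 0.11, -0.5)  # maximum closure
MASS = (1.0, 0.6, 0.2)      # mass of the segment distal to each joint
K = (0.5, 0.5, 0.14)        # passive force intensity


def passive_force(a, j):
    """Equation (2): elastic force pulling joint j toward its mid-range."""
    return ((A_MAX[j] + A_MIN[j]) / 2.0 - a) * K[j]


def step_joint(a, e, f, j, clamp=True):
    """Equation (1): new opening of joint j from extensor (e) and flexor (f) outputs."""
    a_next = a + (e - f + passive_force(a, j)) / MASS[j]
    if clamp:
        # Assumption: angular limits are enforced by clipping the new angle.
        a_next = min(max(a_next, A_MIN[j]), A_MAX[j])
    return a_next
```

With equal extensor and flexor outputs, only the passive term acts, so a joint resting at its mid-range stays where it is.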

2.1.2 Neural Network

The ANN was a two-layer neural network

comprising 5 input units and 6 output units, fully

connected with anterograde connections from input

to output. There were no hidden units. The input

units were simple linear units. The output units were classic sigmoid units, with an analog output ranging from 0 to 1 and a bias modifiable by learning. Their output values were copied into equation (1) (variables $e_{j,t}$ and $f_{j,t}$ for each joint $j$) to compute limb movements. The first two input units

(S1 units) received “visuospatial” information on the

hand position, encoded in polar coordinates (angle in

radians with respect to the posterior-anterior axis

and distance from the shoulder, normalized for the

overall length of the fully extended limb). The last

three input units (S2 units) received

“proprioceptive” information on the opening angle

for each of the three joints, normalized between -1

and 1. Before each movement the two S1 units also

received commands from Motor Will.
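The network described in this section is small enough to sketch directly. The following Python fragment is an illustrative sketch under stated assumptions, not the original code: all function names are hypothetical, the linear mapping of joint angles onto [-1, 1] is assumed (the text gives only the range), and the initial weight range of ±0.25 is the one reported in section 2.2.

```python
import math
import random

A_MAX = (1.05, 1.0, 0.4)     # joint limits as listed for equation (2)
A_MIN = (0.13, 0.11, -0.5)
LIMB_LENGTH = 70 + 70 + 20   # fully extended limb, in pixels


def encode_s1(angle, distance_px):
    """S1 inputs: hand angle (radians) and distance normalized by limb length."""
    return [angle, distance_px / LIMB_LENGTH]


def encode_s2(joint_angles):
    """S2 inputs: joint angles mapped to [-1, 1] (assumed linear mapping)."""
    return [2.0 * (a - lo) / (hi - lo) - 1.0
            for a, lo, hi in zip(joint_angles, A_MIN, A_MAX)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_network(n_in=5, n_out=6, scale=0.25, seed=0):
    """Random weights and biases in [-scale, +scale], one weight row per output unit."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [rng.uniform(-scale, scale) for _ in range(n_out)]
    return weights, biases


def forward(weights, biases, inputs):
    """Linear input units feed sigmoid output units directly (no hidden layer)."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]
```

A forward pass takes the concatenation `encode_s1(...) + encode_s2(...)` as its five inputs and returns six values between 0 and 1, one per muscle.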

2.2 Simulation Flow

When the simulation began, the connection weights

and the output unit biases were initialized with

random values ranging from -0.25 to +0.25. The arm

was positioned with all the joints partly opened.

After the initialization stage, the simulation proceeded in turns. Each turn comprised two phases, movement and learning, and each phase comprised three steps.

2.2.1 Movement

1. The input units received sensory information

from the arm: S1 units received the spatial location

of the hand, and S2 units the angles from the three

joints.

2. Motor Will overwrote S1 input unit activations

with activations corresponding to a random desired

hand position.

3. The input units activated the output units, and the

joint opening angles therefore changed according to

equations (1) and (2). The actual output values were recorded for use in the ensuing learning phase, where they served as the desired (target) output activations. The distance in pixels (spatial error) between the desired hand position (target position) and the hand position actually reached was measured and recorded. This spatial error measurement served only to evaluate network performance and was not used for motor learning.

2.2.2 Learning

1. The sensory pathways conveyed to the S1 units

information on the new hand position.

2. The input units activated the output units again,

this time using the new activation values obtained

from the S1 units corresponding to the hand position

actually reached. These outputs left the joint angles unchanged; they served only for learning. These new

outputs were the ones the network would produce if

the desired movement were actually the movement

achieved in movement phase 2.2.1, step 3. The

difference between the current outputs and the

outputs recorded in that phase was the error to

minimize during learning.

3. A standard delta rule (Rumelhart, Hinton &

Williams 1986) was applied to minimize the error

vector calculated in the former step. The results we

describe were obtained with a learning rate = 0.1 and

momentum = 0.25.
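The movement and learning phases above can be sketched as a single turn. This is a hedged illustration, not the authors' implementation: the class and callback names (`TwoLayerNet`, `run_turn`, `read_s1`, `read_s2`, `apply_outputs`) are hypothetical, and including the sigmoid derivative in the error term is an assumption about what "standard delta rule" means here. The learning rate, momentum and initial weight range are the values given in the text.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TwoLayerNet:
    """Fully connected input-to-output net with sigmoid outputs, no hidden units."""

    def __init__(self, n_in=5, n_out=6, lr=0.1, momentum=0.25, seed=0):
        rng = random.Random(seed)
        # The extra last weight of each row acts as the learnable bias.
        self.w = [[rng.uniform(-0.25, 0.25) for _ in range(n_in + 1)]
                  for _ in range(n_out)]
        self.prev_dw = [[0.0] * (n_in + 1) for _ in range(n_out)]
        self.lr, self.momentum = lr, momentum

    def forward(self, x):
        xb = list(x) + [1.0]
        return [sigmoid(sum(wi * xi for wi, xi in zip(row, xb)))
                for row in self.w]

    def learn(self, x, target):
        """One delta-rule update pulling forward(x) toward target."""
        xb = list(x) + [1.0]
        y = self.forward(x)
        for o, row in enumerate(self.w):
            # Assumption: the error term includes the sigmoid derivative.
            delta = (target[o] - y[o]) * y[o] * (1.0 - y[o])
            for i, xi in enumerate(xb):
                dw = self.lr * delta * xi + self.momentum * self.prev_dw[o][i]
                row[i] += dw
                self.prev_dw[o][i] = dw


def run_turn(net, read_s1, read_s2, apply_outputs, desired_s1, learn=True):
    """One turn: move toward desired_s1, then learn from the position reached."""
    s2_before = list(read_s2())                        # initial limb state
    recorded = net.forward(list(desired_s1) + s2_before)
    apply_outputs(recorded)                            # the limb moves
    if learn:
        s1_reached = list(read_s1())                   # feedback on new position
        # Target = outputs that produced the movement actually made.
        net.learn(s1_reached + s2_before, recorded)
    return recorded
```

Note that the target used in `learn` is the recorded output vector, not anything derived from the spatial error, so the update relies only on values available inside the network.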

2.3 Tests

Besides evaluating the “online” spatial error after

every movement (section 2.2.1 step 3), after every

5000 movements the program temporarily stopped

the simulation, and submitted the network to an

“offline” test entailing a predefined set of 588

movements (Fig. 2A) commanded by the Motor Will

transmitting to the S1 units the polar coordinates for

the 588 successive turns. During testing, the learning

phase (section 2.2.2) was skipped. For each of the

588 test movements the position actually reached by

the hand and the corresponding spatial error were

recorded for later evaluation offline.
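The offline test amounts to running a fixed list of targets with learning disabled and collecting the spatial errors. A minimal Python sketch follows, assuming that the spatial error is the Euclidean distance between target and reached positions (the text says only "difference in pixels") and using a hypothetical callback `move_without_learning` that performs one movement and returns the hand position reached.

```python
import math


def spatial_error(target_xy, reached_xy):
    """Euclidean distance in pixels between target and reached hand position."""
    return math.hypot(target_xy[0] - reached_xy[0],
                      target_xy[1] - reached_xy[1])


def offline_test(targets, move_without_learning):
    """Run each test target once, learning phase skipped, and return the errors."""
    errors = []
    for target in targets:
        reached = move_without_learning(target)  # hypothetical callback
        errors.append(spatial_error(target, reached))
    return errors


def mean_error(errors):
    """Mean spatial error over a set of test movements."""
    return sum(errors) / len(errors)
```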

We used these procedures to conduct several

simulations. In some simulations we introduced a

sort of “sensory blind spot”, a wide circular area,

covering up to 50% of the workspace and

differentially positioned in the various simulations

(Fig. 3A), where we skipped the learning phase

(2.2.2) when the hand ended up in this area.
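The blind-spot manipulation reduces to a gate on the learning phase. The sketch below is an assumption-laden illustration: the function names are hypothetical, and the blind spot is modeled as a disk given by a center and a radius in pixel coordinates.

```python
import math


def in_blind_spot(hand_xy, center_xy, radius):
    """True when the hand position lies inside the circular blind spot."""
    return math.hypot(hand_xy[0] - center_xy[0],
                      hand_xy[1] - center_xy[1]) <= radius


def should_learn(hand_xy, blind_spots):
    """The learning phase is skipped whenever the hand ends inside a blind spot."""
    return not any(in_blind_spot(hand_xy, center, radius)
                   for center, radius in blind_spots)
```

A simulation loop would call `should_learn` with the position reached after each movement and run the learning phase only when it returns `True`.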

In other trials, to assess whether learning

depended on precise physical values inherent to the

system, and to verify whether the controller system

adapted to changes in the controlled system, we

varied the sensory code used for hand position or the

limb segment mass (variable $m_j$ in equation 1), either from the beginning or after advanced learning (30000 movements).


Figure 2: Progressive improvement in performance during

the 588 test movements with motor experience. Small

circle = hand starting point; black points = hand

movement arrival point. A: target points; B: points

effectively reached before learning; C: after 5000 random

movements and D: after 30000 random movements.

3 RESULTS

In all the simulations, the tested ANN system improved from a mean spatial error of more than 150 pixels when the simulation began to less than 15 pixels after 10000 movements (a few tens of seconds on a modern PC) and less than 7 pixels after 30000 movements. These results varied only minimally with the random elements used for initializing the weights and with the movement choices made by Motor Will.

The network’s motor performance, as assessed

with periodic testing using the 588 target points,

progressively improved with experience, improving

from a mean spatial error of about 95 pixels at turn

zero (Fig. 2B) to 53 pixels at turn 5000 (Fig. 2C),

and 13 pixels at turn 30000 (Fig. 2D) (these values

differ from those for the mean spatial error

mentioned above because they only refer to the 588

test movements instead of all movements). Before

motor learning started, the points effectively reached

clustered in an area corresponding to the

intermediate arm positions (Fig. 2B). As the network

acquired experience, arm movements gradually

expanded and after 30000 movements covered the

workspace in a fairly uniform manner (Fig. 2D)

acceptably matching the targets.

After 30000 movements, spatial error

distributions showed that the system performed well

over the whole workspace, except in the extreme tail

in the drop-shaped area corresponding to extreme

extension (Fig. 3B). The sensory blind spot had little influence on learning improvements (Fig. 3C, D). These results were unaffected by the hand sensory code used and did not suffer significantly from mass changes in limb segments, whether introduced before or after motor learning.

Figure 3: Spatial error distribution for the 588 test

movements after 30000 random movements with and

without no-learning areas (“sensory blind spot”). A:

workspace area (dark grey area) with a generic blind spot

(white disk); B: errors (in grey color code) without the

sensory blind spot; C, D: with the sensory blind spot

(black outline circle) in two different sizes and positions.

Values are for spatial error in pixels.

4 DISCUSSION

4.1 Comments on the Model

The simplified ANN simulation, focusing on the

basic IMP features insofar as motor commands and

sensory feedback reach the same S1 input units,

effectively learned to move the arm in the

workspace. It learned acceptably well even when we

varied influential experimental variables such as the

sensory code used for hand position, the mass for the

limb segments to move, and when the ANN was

able or unable to receive sensory feedback about

movements performed in the workspace (sensory

blind spot). Our decision to omit velocity sensory information and hidden neural network units had no apparent influence on our model’s functional ability, thus confirming that these elements are not essential to model functioning.

Our IMP model reproduces with acceptable

approximation the various human motor learning

properties, such as learning from experience, ability

to work regardless of the specific body segment

features, ability to adapt to changes in these features,

and the fact that even randomly-generated

movements contribute to learning (infantile motor

babbling). Like the human motor learning system,


our ANN underwent completely unsupervised

learning. We never used external sample sets. The

ANN itself generated learning examples from its

random movements and errors. For learning we

never measured spatial errors between the desired

movements and those actually made. Conversely, as

the learning error we used the difference between

output unit activations in two different functional

phases (phase 2.2.1 step 3 and in phase 2.2.2 step 2),

values completely and locally available to the net.

In our proposed model the movement learned is

not the desired movement but the movement

effectively done (section 2.2.2). The system

nevertheless succeeds in performing with reasonable

precision even movements never previously done

(the 30000 learned movements taken as a typical number of movements for simulation are less than 0.01% of the over 300 million movements possible in the workspace, and the test movements described in section 2.3 and those finishing in the sensory blind spot were even explicitly excluded from learning). This ability evidently stems

from an ANN’s well-known ability to generalize

(Caudill & Butler 1992), a feature allowing our

ANN to interpolate and extrapolate information

from the movements done, thus filling in unexplored

movements and forming the general sensorimotor

map valid for all movements.

Even though these model features are biologically plausible, other features are less so, at least with the minimal model architecture we used. In particular, the proposed learning system requires special timing.

After the movement, when sensory feedback from

the hand position returns to the S1 units, the S2 units

must still retain information on the limb state before

the movement, and the output units must still retain

information about the activation that caused the limb

muscles to contract. Hence, during learning, the network must have all three components mentioned in section 2.1 available at once: the neuronal activations coding the initial limb state, those coding the final state, and those causing the limb to pass from its initial to its final state. We will henceforth call these learning triplets, or simply triplets. In a computer

software algorithm this requirement poses no

problems whereas in biological nervous systems it

raises several concerns. A more realistic model to

simulate a biological motor system should therefore

include accessories such as memory units and units

that regulate activation flow to and from the

network. These accessories become even more

essential as the possible time shift between the three

triplet components increases, as it does in the

extended model we propose in the next section.

4.2 Triplet Chaining

The model we propose here extends IMP from

elementary movements to more complex behaviors

thus unifying the various intentional movement

scales under a single principle. The ANN model we

have examined so far applies to elementary

movements. Conversely, the chained triplet model

can also account for more complex actions, where it

can also provide a new insight into neuronal

populations such as canonical neurons (Shepherd

1992) and mirror neurons (Di Pellegrino et al. 1992;

Casile 2013) that have been found in biological

nervous systems and whose real function remains

debatable.

The extended triplet model that we propose here

involves several triplet-networks, linked so that the

output units for each preceding triplet-net also act as

the S1 input units for the ensuing triplet-net. The S1

and S2 input units can receive sensory information

not only from the osteo-muscular system, but from

the whole body and external environment. In this

chain, the S1 input units on the first net receive the

actions desired by Will (actions that are more

abstract than the simple and concrete desire to bring

the hand to a desired position), and the ensuing nets

progressively increase the level of detail and

concreteness for the actions needed to satisfy the

desire. Finally, the final net (the net described in the

basic model) generates the neuromuscular

activations required to perform the selected

action(s).

For example, if a person is hungry and sees an

apple at hand, the S2 units for the triplet-nets in the

chain receive this information as the actual/initial

state. If Will generates and transmits to the first net’s

S1 units the desired state “no longer hungry”, then

the first net, which has learned from experience that

when one is hungry the action for curbing hunger is

to eat, generates the sensory-coded desired action

“eat” as activations on its output units. These output

units in the first net are also the S1 input units in the

second net, so “eat” becomes the final desired state

(in this case a desired action) for the second net.

The second net has learned from experience

which objects are edible, and when the desired

action is to eat and the object is edible, it generates

on its output units the sensory-coded action “eat the

object”, which becomes the desired action for the

third net S1 units.


Having learned from experience that when faced

with an apple and a desire to eat it the action to eat it

is to get it, the third net now generates on its output

units the sensory-coded action “get the apple”,

which becomes the desired action for the fourth net.

“Get the apple” is still sensory encoded though,

so it actually pictures “arm extended to the apple and

fingers tightened on the apple”. This is the desired

final state for the fifth net, the net described in the

basic model, the one that also receives on its input

units sensory information about the current arm state

and activates the arm muscles.
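The data flow in this example can be made concrete with a toy sketch in Python. It is purely illustrative: each triplet-net is replaced by a hypothetical lookup table standing in for what a trained network would have learned, the state labels are plain strings taken from the example above, and the names `make_lookup_net` and `run_chain` are not part of the model.

```python
def make_lookup_net(table):
    """Stand-in for a trained triplet-net: maps (S1, S2) to an output."""
    def net(s1, s2):
        return table[(s1, s2)]
    return net


def run_chain(nets, desired_state, current_state):
    """Feed each net's output to the S1 inputs of the next net in the chain."""
    s1 = desired_state
    trace = []
    for net in nets:
        s1 = net(s1, current_state)  # S2 carries the actual/initial state
        trace.append(s1)
    return trace


STATE = "hungry, apple at hand"
REACH = "arm extended to the apple and fingers tightened on the apple"
nets = [
    make_lookup_net({("no longer hungry", STATE): "eat"}),
    make_lookup_net({("eat", STATE): "eat the object"}),
    make_lookup_net({("eat the object", STATE): "get the apple"}),
    make_lookup_net({("get the apple", STATE): REACH}),
    make_lookup_net({(REACH, STATE): "neuromuscular activations"}),
]
trace = run_chain(nets, "no longer hungry", STATE)
```

Only the last element of `trace` leaves the sensory code; every earlier element is itself a desired state handed to the next net.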

Essentially, we suggest that in the nervous

system voluntary actions or behaviors are triggered

by formulating their end-effects as high-level

sensory representations of the desired results. These

representations are generated in the prefrontal

cortex, especially in the dorsal and lateral prefrontal

areas (Haber 2003; Watanabe & Sakagami 2007;

Tanji & Hoshi 2008). These areas act as a high-

level, ‘strategic’ Motor Will by generating sensory

representation for the desired result (goal), without

focusing on details in its execution, other than

possibly enforcing context-related constraints (e.g.

to avoid an obstacle in grasping an object).

These sensory representations consist of neuron

activation-and-inactivation combinations in the

prefrontal areas, which in turn evoke sensory

representations in the frontal premotor areas and in

parietal, occipital, and temporal sensory and

associative areas. These parietal, occipital, and

temporal areas encode both sensory-specific

representations for the goal (symbolized by the S1

units in our model) and actual sensations from the

body and the environment relevant to the task

(symbolized by the S2 units in our model). These

representations and sensations are locally sensory-

specific: tactile or proprioceptive in the parietal lobe,

visual in the occipital lobe, and acoustic or visceral

in the temporal lobe. Unlike these areas, the

premotor areas encode the goal in a more abstract

and multisensory way. Premotor area neurons are S1

units in our model. Other S1 and S2 units are

probably located in sub-cortical structures,

especially in the basal ganglia (S1 units) and the

thalamus (S2 units).

All these representations then travel throughout

these areas, converging towards the primary sensory

(S1, postcentral gyrus) and motor (M1, precentral

gyrus) areas and the sub-cortical motor structures

through subsequent elaboration steps, represented in

our model by the chained triplets that progressively

detail the appropriate elementary actions needed to

reach the goal. These representations gain motor

detail as they converge to the S1 and M1 brain areas.

Until the very last step, the first and only one that

really encodes the former sensory action

representation into the motor effector

(neuromuscular) space, all these representations are

sensory-coded. The neurons making the final

sensorimotor translation (the output group in our

basic model) are probably located in sub-cortical

motor structures, or even in the spinal cord.

This model is consistent with increasing

evidence from motor research in primates and

humans (reviews in Lebedev & Wise 2002,

Graziano 2006, Cisek & Kalaska 2010. See also

Miller 2000, Miller & Cohen 2001, Haber 2003,

Tanji & Hoshi 2008 for specific reviews on the role

of the prefrontal areas in voluntary movement;

Rizzolatti & Luppino 2001, Rozzi et al. 2008, Koch

et al. 2010 for the role of parietal areas; Burnod et

al. 1999 for flow and distribution of movement-

related sensory representations; and Zinger et al.

2013 for the functional organization of information

flow in the corticospinal pathway and joint

specificity of M1 sites). The stages progressively

elaborating and subdividing motor goals into triplet-

nets are not necessarily exactly those we describe.

What our simplified model allows us to conclude is

that the general features underlying triplet network

chaining accord well with current knowledge on

intentional movement.

Along the triplet chain, the role and function of

some known as well as elusive neuron populations

become clearer. In particular, the function of the

second network in the chain reasonably recalls

known canonical neuron properties. The function of

the third network recalls known mirror neuron

properties, at least those described for certain major

mirror neuron subpopulations, which seem

essentially to encode the subject’s ability to interact

with objects (Caggiano et al. 2009, 2011; Casile,

Caggiano & Ferrari 2011) and reasons for grasping

an object (Casile, Caggiano & Ferrari 2011). Hence

the interpretation our sensorimotor model offers for

mirror neurons is that they primarily exist to allow

us to move intentionally, being a step in

sensorimotor mapping that descends from general,

high-level sensations (“I am hungry”) and Will-

desired sensations (“no longer hungry”) to the

actions (“get the apple”) able to make the desired

sensations real. This is a more basic and critical

function than functions other explanations propose,

for example that mirror neurons are essential for

learning by imitation, for the theory of mind, or for

empathy (Gallese & Goldman 1998; Gallese 2001;

Gallese, Eagle & Migone 2007; Iacoboni 2009).


These earlier conjectural explanations remain

unproven and highly controversial (Borg 2007;

Hickok 2009; Dinstein et al. 2010; Heyes 2010;

Decety 2011; Lamm, Decety & Singer 2011) and are

somewhat disconcerting when we consider them in

the monkey, the species in which mirror neurons

have been primarily found. Conversely, more

recently emerging findings (Caggiano et al. 2009,

2011; Casile, Caggiano & Ferrari 2011; Casile 2013)

seem in line with the model we propose, insofar as

they showed that many mirror neurons exist to

encode the subject’s interaction with objects, rather

than similar interactions by others. These “special”

mirror neurons and the classic mirror neurons that

also respond to seeing “their” action performed by

others should be considered together rather than

individually. Hypotheses considering single neurons

isolated from neuron combinations should be

regarded with caution, especially given that the only

study that demonstrated mirror neurons in man

(Mukamel et al. 2010) found confusing and even

contradictory individual neuron responses.

5 CONCLUSIONS

Our unsupervised ANN simulation confirms, as the

IMP claims, that voluntary actions can be initiated

by imagining (desiring) their sensory effects. IMP

seems a valid model for understanding human

sensorimotor mapping, intentional movement and

motor learning. Detailing and extending the IMP in

what we termed the “chained triplet-net” model

makes the IMP also helpful in explaining voluntary

behavior besides elementary actions. Along this

chain, elusive neuronal systems such as the

canonical and mirror neuron systems acquire

definite meanings. Future research should endeavor

to identify which other non-motor nervous functions,

such as cognitive functions, the extended IMP and

the triplet model might help to explain.

ACKNOWLEDGEMENTS

We thank Miss Alice M. Crossman for her

contribution in revising the text.

This work received financial support from

Castello della Quiete Srl (Rome, Italy).

REFERENCES

Borg, E., 2007. If mirror neurons are the answer, what was

the question? Journal of Consciousness Studies, 14(8),

5-19.

Burnod, Y., Baraduc, P., Battaglia-Mayer, A., Guigon, E.,

Koechlin, E., Ferraina, S., Lacquaniti, F. & Caminiti,

R., 1999. Parieto-frontal coding of reaching: an

integrated framework. Experimental Brain Research,

129(3), 325-346.

Butz, M. V., Herbort, O. & Hoffmann, J., 2007. Exploiting

redundancy for flexible behavior: unsupervised

learning in a modular sensorimotor control

architecture. Psychological Review, 114(4), 1015.

Caggiano, V., Fogassi, L., Rizzolatti, G., Thier, P. & Casile, A., 2009. Mirror neurons differentially encode the peripersonal and extrapersonal space of monkeys. Science, 324(5925), 403-406.

Caggiano, V., Fogassi, L., Rizzolatti, G., Thier, P., Giese, M. A. & Casile, A., 2011. View-based encoding of actions in mirror neurons in area F5 in macaque premotor cortex. Current Biology, 21, 144-148.

Casile, A., Caggiano, V. & Ferrari, P. F., 2011. The mirror neuron system: a fresh view. The Neuroscientist, 17(5), 524-538.

Casile, A., 2013. Mirror neurons (and beyond) in the

macaque brain: an overview of 20 years of research.

Neuroscience Letters, 540, 3-14.

Caudill, M. & Butler, C., 1992. Naturally Intelligent

Systems, MIT press.

Cisek, P. & Kalaska, J. F., 2010. Neural mechanisms for

interacting with a world full of action choices. Annual

Review of Neuroscience, 33, 269-298.

Dayan, E., Casile, A., Levit-Binnun, N., Giese, M. A.,

Hendler, T. & Flash, T., 2007. Neural representations

of kinematic laws of motion: evidence for action-

perception coupling. Proceedings of the National

Academy of Sciences, 104(51), 20582-20587.

Decety, J., 2011. Dissecting the neural mechanisms

mediating empathy. Emotion Review, 3(1), 92-108.

Di Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V. &

Rizzolatti, G., 1992. Understanding motor events: a

neurophysiological study. Experimental Brain

Research, 91(1), 176-180.

Dinstein, I., Thomas, C., Humphreys, K., Minshew, N.,

Behrmann, M. & Heeger, D. J., 2010. Normal

movement selectivity in autism. Neuron, 66(3), 461-

469.

Flanagan, J. R. & Rao, A. K., 1995. Trajectory adaptation

to a nonlinear visuomotor transformation: evidence of

motion planning in visually perceived space. Journal

of Neurophysiology, 74(5).

Gallese, V. & Goldman, A., 1998. Mirror neurons and the

simulation theory of mind-reading. Trends in

Cognitive Sciences, 2(12), 493-501.

Gallese, V., 2001. The 'shared manifold' hypothesis. From

mirror neurons to empathy. Journal of Consciousness

Studies, 8(5-7), 5-7.

Gallese, V., Eagle, M. N. & Migone, P., 2007. Intentional

attunement: Mirror neurons and the neural


underpinnings of interpersonal relations. Journal of

the American Psychoanalytic Association, 55(1), 131-

175.

Galtier, M., 2014. Ideomotor feedback control in a

recurrent neural network. (Online)

http://arxiv.org/pdf/1402.3563v3

Graziano, M., 2006. The organization of behavioral

repertoire in motor cortex. Annual Review of

Neuroscience, 29, 105-134.

Haber, S. N., 2003. The primate basal ganglia: parallel and

integrative networks. Journal of Chemical

Neuroanatomy, 26(4), 317-330.

Heyes, C., 2010. Mesmerising mirror neurons.

Neuroimage, 51(2), 789-791.

Hickok, G., 2009. Eight problems for the mirror neuron

theory of action understanding in monkeys and

humans. Journal of Cognitive Neuroscience, 21(7),

1229-1243.

Hommel, B., 2013. Ideomotor action control: on the

perceptual grounding of voluntary actions and agents.

Action Science: Foundations of an Emerging

Discipline, 113-136.

Iacoboni, M., 2009. Imitation, empathy, and mirror

neurons. Annual Review of Psychology, 60, 653-670.

Karniel, A. & Inbar, G. F., 1997. A model for learning

human reaching movements. Biological Cybernetics,

77(3), 173-183.

Kiesel, A. & Hoffmann, J., 2004. Variable action effects:

Response control by context-specific effect

anticipations. Psychological Research, 68(2-3), 155-

162.

Koch, G., Cercignani, M., Pecchioli, C., Versace, V.,

Oliveri, M., Caltagirone, C., Rothwell, J. & Bozzali,

M., 2010. In vivo definition of parieto-motor

connections involved in planning of grasping

movements. Neuroimage, 51(1), 300-312.

Lamm, C., Decety, J. & Singer, T., 2011. Meta-analytic

evidence for common and distinct neural networks

associated with directly experienced pain and empathy

for pain. Neuroimage, 54(3), 2492-2502.

Lebedev, M. A. & Wise, S. P., 2002. Insights into seeing

and grasping: distinguishing the neural correlates of

perception and action. Behavioral and Cognitive

Neuroscience Reviews, 1(2), 108-129.

Melcher, T., Weidema, M., Eenshuistra, R. M., Hommel,

B. & Gruber, O., 2008. The neural substrate of the

ideomotor principle: An event-related fMRI analysis.

Neuroimage, 39(3), 1274-1288.

Melcher, T., Winter, D., Hommel, B., Pfister, R., Dechent,

P. & Gruber, O., 2013. The neural substrate of the

ideomotor principle revisited: evidence for

asymmetries in action-effect learning. Neuroscience,

231, 13-27.

Miller, E. K., 2000. The prefrontal cortex and cognitive

control. Nature Reviews Neuroscience, 1(1), 59-65.

Miller, E. K. & Cohen, J. D., 2001. An integrative theory

of prefrontal cortex function. Annual Review of

Neuroscience, 24(1), 167-202.

Mukamel, R., Ekstrom, A. D., Kaplan, J., Iacoboni, M. &

Fried, I., 2010. Single-neuron responses in humans

during execution and observation of actions. Current

Biology, 20(8), 750-756.

Pfister, R., Melcher, T., Kiesel, A., Dechent, P. & Gruber,

O., 2014. Neural correlates of ideomotor effect

anticipations. Neuroscience, 259, 164-171.

Rizzolatti, G. & Luppino, G., 2001. The cortical motor

system. Neuron, 31(6), 889-901.

Rozzi, S., Ferrari, P. F., Bonini, L., Rizzolatti, G. &

Fogassi, L., 2008. Functional organization of inferior

parietal lobule convexity in the macaque monkey:

electrophysiological characterization of motor, sensory

and mirror responses and their correlation with

cytoarchitectonic areas. European Journal of

Neuroscience, 28(8), 1569-1588.

Rumelhart, D. E., Hinton, G. E. & Williams, R. J., 1986.

Learning Internal Representations by Error

Propagation. In Rumelhart, D. E., McClelland, J. L. &

The PDP Research Group, Parallel Distributed

Processing. Explorations in the Microstructure of

Cognition, MIT Press.

Sauser, E. L. & Billard, A. G., 2006. Parallel and

distributed neural models of the ideomotor principle:

An investigation of imitative cortical pathways.

Neural Networks, 19(3), 285-298.

Shadmehr, R., 2005. The computational neurobiology of

reaching and pointing: a foundation for motor

learning. MIT Press.

Shepherd, G. M., 1992. Canonical neurons and their

computational organization. In Single Neuron

Computation. Academic Press Professional, Inc., 27-

60.

Shin, Y. K., Proctor, R. W. & Capaldi, E. J., 2010. A

review of contemporary ideomotor theory.

Psychological Bulletin, 136(6), 943-974.

Stock, A. & Stock, C., 2004. A short history of ideo-motor

action. Psychological Research, 68(2-3), 176-188.

Tanji, J. & Hoshi, E., 2008. Role of the lateral prefrontal

cortex in executive behavioral control. Physiological

Reviews, 88(1), 37-57.

Watanabe, M. & Sakagami, M., 2007. Integration of

cognitive and motivational context information in the

primate prefrontal cortex. Cerebral Cortex, 17(suppl

1), 101-109.

Wulf, G. & Prinz, W., 2001. Directing attention to

movement effects enhances learning: A review.

Psychonomic Bulletin & Review, 8(4), 648-660.

Wohlschläger, A., Gattis, M. & Bekkering, H., 2003.

Action generation and action perception in imitation:

an instance of the ideomotor principle. Philosophical

Transactions of the Royal Society of London. Series B:

Biological Sciences, 358(1431), 501-515.

Zinger, N., Harel, R., Gabler, S., Israel, Z. & Prut, Y.,

2013. Functional Organization of Information Flow in

the Corticospinal Pathway. The Journal of

Neuroscience, 33(3), 1190-1197.
