Comparison of Different Powered-wheelchair Control Modes for Individuals with Severe Motor Impairments

Alfredo Chávez¹, Héctor Caltenco² and Vítězslav Beran¹

¹ Faculty of Information Technology (FIT), Brno University of Technology, Brno, Czech Republic

² Certec, Dept. of Design Sciences, Lund University, Lund, Sweden

Keywords: Shared Control, Autonomous Wheelchair System.

Abstract: This paper presents a preliminary evaluation of different powered-wheelchair control modes for individuals with severe motor impairments. To this end, a C400 Permobil wheelchair has been updated with a control-command communication interface and equipped with a scanning laser sensor to run the automation algorithms that are part of the Robot Operating System (ROS) framework. A pilot test was performed with three modalities: hand-joystick mode, tongue-joystick mode and autonomous mode. The results indicate that it is feasible to operate a power wheelchair either autonomously or under user control, interchangeably, which supports continued development towards a better user/wheelchair shared-control paradigm.

1 INTRODUCTION

Electrically powered wheelchairs (PWC) are used to assist the mobility of individuals with severe motor disabilities, such as those with tetraplegia. Users who retain some degree of motor control of the arms or hands use a joystick to control the direction and speed of the PWC. On the other hand, users with more severe or total motor disabilities have to rely on alternative interfaces to control a PWC. To this end, research has been done to develop devices that can interface with the remaining functional parts of such individuals, such as interfaces based on detecting head movement (Christensen and Garcia, 2003), chin movement (Guo et al., 2002), the eyes (Agustin et al., 2009), the tongue (Huo and Ghovanloo, 2010; Lund et al., 2010) and even forehead muscular activity and brain waves (T. Felzer and R. Nordman, 2007).

However, most of these interfaces require high levels of concentration for navigating in environments with many obstacles. An eye-tracking system may be tedious and tiresome when it is used constantly to maneuver a wheelchair. Furthermore, it can interfere with the normal use of the user's vision, especially when the user, either consciously or unconsciously, looks at a point in the surrounding environment rather than at the desired path, in which case the system may believe that the user wants to go to that position (Huo and Ghovanloo, 2009). Similar problems might occur with head-tracking interfaces. In general, driving the wheelchair with a specific interface while performing an activity that requires the same part of the body (e.g. the eyes, the head or speech) might not be efficient.

Brain-computer interfaces (BCI) often use electroencephalographs (EEG) to detect voltage fluctuations in the scalp. There are a few limitations to using BCIs to control a PWC. Because the amplitude of brain waves is small, EEG signals need to be amplified by a factor on the order of 10⁴, so any noise contamination, or any blink, swallow, laugh or utterance by the subject, makes the corresponding EEG sample unusable (Huo and Ghovanloo, 2009; T. Felzer and R. Nordman, 2007). Moreover, the input rate is quite slow for real-time control: at up to 25 bits per minute it delivers less than 1 bit every 2 seconds, so stopping the wheelchair with a single yes/no command takes more than 2 seconds.
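The arithmetic behind the 2-second figure can be checked with a few lines of Python. This is only a worked example of the rate quoted above; the function name is illustrative and not part of any BCI toolkit.

```python
def seconds_per_bit(bits_per_minute: float) -> float:
    """Return the time needed to convey one bit at the given input rate."""
    return 60.0 / bits_per_minute


rate = 25.0  # bits per minute, the upper bound quoted in the text
print(f"{rate / 60.0:.3f} bit/s, i.e. {seconds_per_bit(rate):.1f} s per bit")
```

A single yes/no stop command carries one bit, so at this rate it needs about 2.4 s to be conveyed, which is consistent with the "more than 2 seconds" stated above.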

Using the tongue to control a PWC seems to be a promising alternative. The tongue is able to perform sophisticated motor control and manipulation tasks with many degrees of freedom. It can move rapidly and accurately, does not fatigue easily and can be controlled naturally without requiring too much concentration. The Georgia Institute of Technology has developed a tongue drive system (TDS) that consists of a headset and a magnetic tongue barbell and is able to interpret tongue movements as commands (Huo et al., 2008). The user drives the PWC with the tongue using five different commands: forward (FD), backward (BD), turning right (TR), turning left (TL) and stopping (N) (Huo and Ghovanloo, 2010). A smartphone (iPhone) directly connected to the wheelchair can serve as a bridge between the TDS and a PWC (Kim et al., 2012), eliminating the need for bulky computers or specialized hardware. Similarly, an inductive tongue control system (ITCS) has been designed at Aalborg University; it is an intra-oral dental retainer with 18 inductive sensors that can precisely detect the position of a metallic tongue barbell (Lotte and Andreasen, 2006). The ITCS can interpolate the sensor signals to emulate an intra-oral touchpad that proportionally controls the direction and speed of the PWC, just as if it were controlled by a standard joystick. Moreover, it can provide 10 different function commands with the remaining sensors (Caltenco et al., 2011).

Chávez A., Caltenco H. and Beran V. Comparison of Different Powered-wheelchair Control Modes for Individuals with Severe Motor Impairments. DOI: 10.5220/0005026403530359. In Proceedings of the 11th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2014), pages 353-359. ISBN: 978-989-758-040-6. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

In the previous approaches the system lacks full autonomy, meaning that the user has to use the tongue all the time to drive the wheelchair to a desired goal location. A survey estimated that 973,706 to 1,700,107 persons in the U.S. would benefit from an autonomous PWC (Richard et al., 2008), especially patients with diagnoses of ALS, cerebral palsy (CP), cerebrovascular accident (CVA), multiple sclerosis (MS), multiple system atrophy (MSA) and severe traumatic brain injury (TBI), among others. Moreover, 1,389,916 to 2,133,280 would benefit from a PWC that handles obstacle avoidance, planning and navigation, but where the user retains control of high-level tasks such as destination point selection, emergency stop and direction change; for instance, users with diagnoses of Alzheimer disease (AD), ALS, CP, CVA, blindness or low vision, MS, MSA, Parkinson disease (PD) or spinal cord injury (SCI) at or above the fourth cervical vertebra (C4), among others. There have been several advances in the development of fully autonomous wheelchair navigation systems using laser range finders (Demeester et al., 2008), depth sensors/cameras (Theodoridis et al., 2013) and other combinations of navigation sensors and equipment. An extensive review of autonomous wheelchairs is given by Simpson (2004).

However, a fully autonomous system is not desired, since users should be allowed as much control of the wheelchair as their capabilities and degree of disability permit. There have been several proposals for semi-autonomous wheelchair navigation systems (Demeester et al., 2008; Andrea et al., 2012; Bonarini et al., 2013; Galindo et al., 2006a; Galindo et al., 2006b; Fernández-Madrigal et al., 2004). These studies propose to facilitate the participation of humans in the robotic (autonomous) system and therefore improve the overall performance of the robot as well as its dependability. However, not all levels of motor impairment leave the user with the same capacity to provide input to the system: some users might be able to generate richer input than others. The work presented in this paper serves as a prelude to a novel user-wheelchair control paradigm that takes into consideration the needs and abilities of individuals with severe motor impairments, allowing them to control a PWC in a way that ranges from user-controlled, through semi-autonomous, to fully autonomous. The appropriate level depends on the degree of disability and the amount of input the user can give in a fast and efficient way.

Section 2 describes the system used in this research, whereas Section 3 describes the methods that have been chosen to achieve the scope of the project. The pilot test planned to evaluate the different modalities is described in Section 4. A comparison of the different modes in the pilot test is given in Section 5. Finally, Section 6 presents the conclusions and future research work.

2 SYSTEM DESCRIPTION

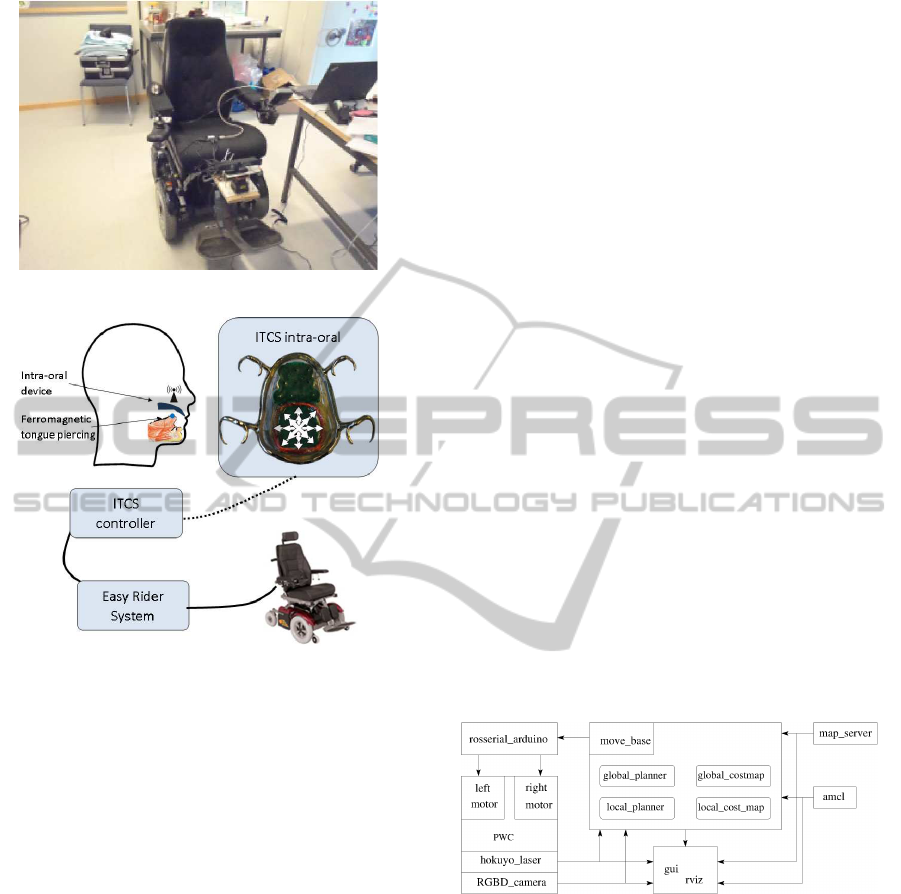

The C400 Permobil PWC is depicted in Figure 1. It comes with an Easy Rider wheelchair interface from HMC International, an Easy Rider display unit and a joystick. It offers 8 modes of operation (1-way joystick, 4-way joystick, 1, 2, 3, 4 or 5 switches, and sip-and-puff control); the one of interest here is the standard 4-way joystick mode. The standard joystick mode accepts as input signals a reference value of 5 V and two analog voltages in the range of 4 V to 6 V, which proportionally move the PWC from right to left and from backward to forward. To this end, an interface that sends velocity control commands (VCC) from the computer to the motors has been designed using an Arduino UNO board. The Arduino board receives two VCC bytes (one for left-right and one for forward-backward) and generates two pulse-width-modulated (PWM) signals in the range of 0 to 5 V. The signals are converted to analog voltages using a simple RC low-pass filter and stepped up using a single-supply non-inverting DC summing amplifier. The resulting voltages emulate the analog joystick position as an input to the Easy Rider interface, which then sends the necessary control signals to the wheelchair's CAN bus motor controller.
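The signal chain described above (VCC byte, PWM duty cycle, filtered voltage, joystick-level voltage) can be illustrated numerically. The following is a minimal sketch, not the firmware used in this work: it assumes an 8-bit VCC value in 0..255, an ideal RC filter that outputs the PWM average, and a summing amplifier whose gain and offset map 0..5 V linearly onto 4..6 V. The function names and the gain/offset values are assumptions chosen for illustration.

```python
V_SUPPLY = 5.0            # PWM high level in volts (from the text)
V_MIN, V_MAX = 4.0, 6.0   # joystick input range of the Easy Rider (from the text)


def vcc_to_duty(vcc: int) -> float:
    """Map an assumed 8-bit VCC byte (0..255) to a PWM duty cycle in [0, 1]."""
    if not 0 <= vcc <= 255:
        raise ValueError("VCC must fit in one byte")
    return vcc / 255.0


def filtered_pwm_voltage(duty: float) -> float:
    """Average voltage after an ideal RC low-pass filter."""
    return duty * V_SUPPLY


def joystick_voltage(vcc: int) -> float:
    """Scale and offset the filtered PWM voltage into the 4 V..6 V range.

    The linear gain and offset stand in for the summing amplifier and are
    assumptions; the real component values are not given in the text.
    """
    gain = (V_MAX - V_MIN) / V_SUPPLY  # 0.4 under the assumptions above
    return V_MIN + gain * filtered_pwm_voltage(vcc_to_duty(vcc))


for vcc in (0, 128, 255):
    print(vcc, round(joystick_voltage(vcc), 3))
```

Under these assumptions a mid-range byte lands close to the 5 V reference, which corresponds to the neutral joystick position.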

The ITCS (Caltenco et al., 2011) consists of two separate parts: the intra-oral device and an external controller. The intra-oral device detects tongue movements and wirelessly transmits signals to the external controller, which connects to the Easy Rider interface via the joystick input and to the computer via Bluetooth. The ITCS's external controller interprets and processes the tongue movement signals and transforms them into joystick or mouse commands that can be sent to the wheelchair or the computer (Figure 2). The computer is a Lenovo T540p with an Intel(R) Core(TM) i5-4200M CPU @ 2.50GHz running Ubuntu 12.04 (precise).

Figure 1: C400 Permobil wheelchair.

Figure 2: Overview of the Inductive Tongue Control System (ITCS).

Interaction with the external world is carried out by a Hokuyo UTM-30LX scanning laser range finder. It has a sensing range from 0.1 m to 30 m, and its measurement accuracy is within a 3 mm tolerance up to 10 m. One scan across its 270° field of view takes 25 milliseconds.

3 METHODS

The Robot Operating System (ROS) (Quigley et al., 2009) is proposed as the software architecture for implementing the different modes the user can select to drive the PWC. The navigation stack (NS), a set of configurable nodes, has been configured to match the shape and dynamics of the PWC so that navigation can be commanded at a high level. Broadly speaking, the heart of the navigation stack is the move_base node, which provides a high-level interface that links the odometry, PWC base controller, sensors, sensor transforms, map server and adaptive Monte Carlo localization (AMCL) nodes to the local and global planners.

The global map is created by the gmapping package, which implements odometry- and laser-based SLAM (simultaneous localization and mapping). Then, while the PWC is operating, the NS uses the sensors to avoid obstacles on the path and also feeds their data to a costmap package that builds a local map.
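One step that a local costmap typically performs is obstacle inflation, which appears later in Figure 5 as "inflated obstacles". The sketch below illustrates the idea on a small occupancy grid; it is not the costmap_2d implementation. The cell labels, the grid layout and the use of a plain Euclidean radius measured in cells are assumptions made for illustration.

```python
FREE, INFLATED, OBSTACLE = 0, 1, 2  # illustrative cell labels


def inflate(grid, radius):
    """Return a copy of grid with free cells within radius cells of an obstacle marked INFLATED."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != OBSTACLE:
                continue
            # Visit the square neighbourhood and keep cells inside the circle.
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    inside = 0 <= rr < rows and 0 <= cc < cols
                    if inside and dr * dr + dc * dc <= radius * radius:
                        if out[rr][cc] == FREE:
                            out[rr][cc] = INFLATED
    return out


grid = [[FREE] * 5 for _ in range(5)]
grid[2][2] = OBSTACLE
for row in inflate(grid, 1):
    print(row)
```

Inflating obstacles by roughly the footprint radius lets a planner treat the wheelchair as a point while still keeping a safety margin around walls and boxes.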

Localization and position tracking of the PWC in the map are achieved by the AMCL node, a type of particle filter obtained by substituting the probabilistic motion and perceptual models into the particle filter algorithm (Dieter et al., 1999).
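To make the role of the motion and perceptual models concrete, the sketch below runs a basic Monte Carlo localization step on a one-dimensional toy problem: a robot moves along a line and measures its distance to a wall at a known position. This is plain (non-adaptive) particle filtering, not the AMCL node; the wall position, noise levels and function name are assumptions chosen for illustration.

```python
import math
import random


def mcl_step(particles, control, measurement, wall, rng,
             motion_noise=0.05, sensor_noise=0.2):
    """One predict/weight/resample cycle of a 1-D particle filter."""
    # 1. Motion model: shift every particle by the control plus Gaussian noise.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]
    # 2. Perceptual model: Gaussian likelihood of the measured wall distance.
    weights = [
        math.exp(-0.5 * ((measurement - (wall - p)) / sensor_noise) ** 2)
        for p in moved
    ]
    if sum(weights) == 0.0:
        return moved  # all likelihoods underflowed; skip resampling this step
    # 3. Resampling: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(0)
wall = 10.0                                   # assumed known map: a wall at x = 10 m
particles = [rng.uniform(0.0, wall) for _ in range(500)]
true_pos = 2.0
for _ in range(10):
    true_pos += 0.5                           # the robot advances 0.5 m per step
    particles = mcl_step(particles, 0.5, wall - true_pos, wall, rng)
estimate = sum(particles) / len(particles)
print(f"true position {true_pos:.2f} m, estimate {estimate:.2f} m")
```

The particles start spread over the whole corridor and concentrate around the true position after a few measurement updates, which is the behaviour visualized by the particle cloud in RVIZ.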

To ensure collision-free path planning, the NS uses the dynamic window approach planner (DWAP) (Fox et al., 1997) and Dijkstra's algorithm. Thus, given a global plan to follow and a costmap, the DWAP creates the velocity commands that drive the PWC through the collision-free configuration space from a start to a final goal location. To deliver these commands to the wheelchair, an Arduino rosserial command-velocity interface node has been created. Figure 3 depicts the interaction between the PWC, the sensors and the NS.

Figure 3: System interaction methods.
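The global planning step mentioned above can be illustrated with Dijkstra's algorithm on a small grid costmap. This is a minimal sketch rather than the planner shipped with the navigation stack: the 4-connected grid, the use of None for lethal cells and the cost of one unit per move plus the cell cost are assumptions.

```python
import heapq
import math


def dijkstra(costmap, start, goal):
    """Return the cheapest 4-connected path from start to goal, or None."""
    rows, cols = len(costmap), len(costmap[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > dist.get(cell, math.inf):
            continue  # stale queue entry
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            cell_cost = costmap[nr][nc]
            if cell_cost is None:  # lethal obstacle, never traversed
                continue
            nd = d + 1.0 + cell_cost
            if nd < dist.get((nr, nc), math.inf):
                dist[(nr, nc)] = nd
                prev[(nr, nc)] = cell
                heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return None
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


costmap = [
    [0, 0, 0, 0],
    [None, None, None, 0],
    [0, 0, 0, 0],
]
print(dijkstra(costmap, (0, 0), (2, 0)))
```

In this toy map the only route from the top-left to the bottom-left cell goes around the wall through the right-hand column, much like a path around the corner of an L-shaped corridor.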

4 PILOT TESTS

The C400 Permobil wheelchair as it is shown in Fig-

ure 1 serves as experimental testbed. In this work,

three modes; hand-joystick mode (JM

H

, tongue-

joystick mode (JM

T

, and autonomous mode (AM)

are tested by the research team, which is comprise

of two males aged 30 and 48 years. Each of these

tests were run once and carried out with real data in

an indoor environment. Which was an L-shaped cor-

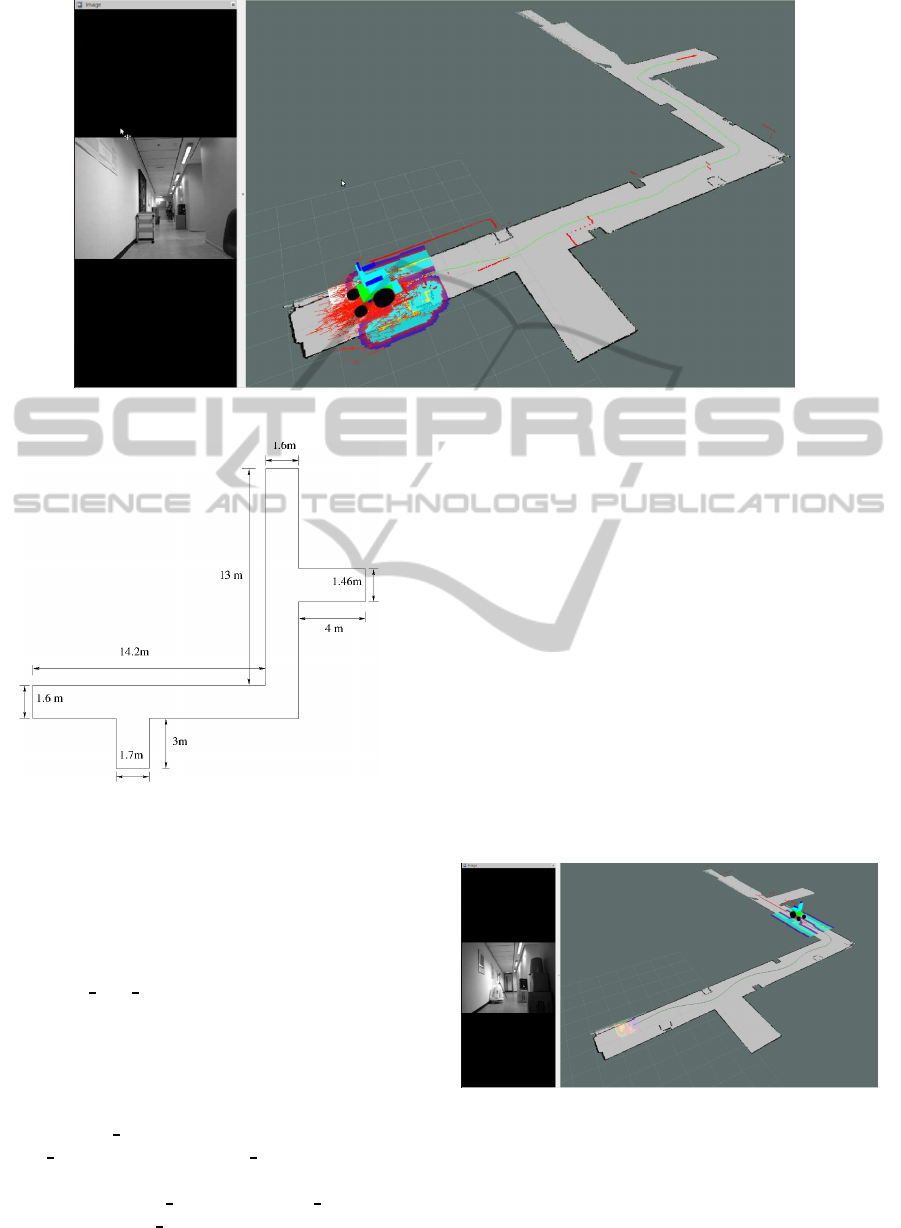

ridor as depicted in Figure 4. To this end, the map

of the indoor environment was built prior to the tests,

ComparisonofDifferentPowered-wheelchairControlModesforIndividualswithSevereMotorImpairments

355

Figure 5: RVIZ set up for showing the different modes.

Figure 4: Schematics of the map.

this was achieved by using the Hokuyo laser, which

has been placed in front of the PWC. In each mea-

surement the laser scans a total of 712 readings dis-

tributed along 180

0

. Obstacles where placed on a cor-

ridor to test the accuracy of the navigation in AM.

Then, while driving the PWC in JM

H

, the gmapping

and the laser

scan matcher nodes interact to build up

the map.
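The scan geometry described in this section (712 readings along 180°) can be turned into Cartesian points in the sensor frame, which is the form a mapping or costmap algorithm ultimately works with. The sketch below assumes the readings are evenly spaced from -90° to +90° with both end points included, that x points forward and y to the left, and that readings outside the 0.1 m to 30 m sensing range are discarded; none of these conventions is stated in the text.

```python
import math

N_READINGS = 712          # readings per scan (from the text)
FOV_DEG = 180.0           # angular span used in the tests (from the text)
R_MIN, R_MAX = 0.1, 30.0  # sensing range of the UTM-30LX in metres (from the text)


def scan_to_points(ranges):
    """Convert one scan of range readings into (x, y) points in the sensor frame."""
    if len(ranges) != N_READINGS:
        raise ValueError("unexpected number of readings")
    step = math.radians(FOV_DEG) / (N_READINGS - 1)  # assumed even spacing
    start = -math.radians(FOV_DEG) / 2.0
    points = []
    for i, r in enumerate(ranges):
        if not (R_MIN <= r <= R_MAX):
            continue  # drop invalid or out-of-range readings
        angle = start + i * step
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


points = scan_to_points([1.0] * N_READINGS)
print(len(points), points[0], points[-1])
print(f"angular step: {FOV_DEG / (N_READINGS - 1):.3f} degrees")
```

With these assumptions the angular spacing is roughly a quarter of a degree per reading.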

To visualize the map-making process and the navigation in the three test modes, the RVIZ visualization tool is used. It has been set up to show the following topics: /map from the map_server node, the raw/obstacles and inflated_obstacles from the costmap_2d node, /scan from the hokuyo node, /particlecloud from the amcl node and /camera/rgb/image_mono from image_view, which is part of the openni_launch driver. Finally, the unified robot description format (URDF), an XML format for representing a robot model, is used to create a differential-drive vehicle model corresponding to the wheelchair.

Figure 5 shows the RVIZ setup. The mono camera image is shown on the left. On the right, the particle cloud is represented as red arrows that surround the URDF model, and the local map is represented as inflated obstacles and obstacles, shown in sky blue and yellow respectively. The obstacles in the global map are shown in black, while light and dark gray represent the empty and unknown areas respectively.

In JM_H, the user manipulates the PWC using commands emulated from the control level, e.g. forward (F), backward (B), right (R), left (L) and stopping (S), which is the neutral joystick position. The trajectory of the PWC for the JM_H test is depicted in Figure 6.

Figure 6: Permobil C400 in Joystick-mode.

In JM_T, the user uses the ITCS to emulate the joystick inside the oral cavity. While the velocity commands are being executed, the user has to hold the tongue over the corresponding sensor in order to steer the PWC in that direction, avoid obstacles and complete the trajectory successfully. This test is depicted in Figure 7.

Figure 7: Permobil C400 in ITCS-mode.

In AM, the user selects the coordinates of a destination point on a map of the known environment, using the ITCS to control the mouse pointer with the tongue. Then, the NS takes care of localizing the PWC in the environment, planning the path and avoiding obstacles during navigation, which removes the need for constant input from the user. Figure 8 shows the smooth path traveled by the PWC, in which the obstacles were successfully avoided and the goal was reached.

Figure 8: Permobil C400 in autonomous mode.
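The obstacle avoidance that the NS performs in AM relies on the dynamic window approach mentioned in Section 3. The following is a simplified sketch of that idea, not the planner used in the tests: it samples velocities reachable within one control period, forward-simulates a unicycle model, discards trajectories that come within the robot radius of an obstacle, and scores the rest. All limits, weights and the robot radius are assumptions, and the score uses distance to the goal in place of the heading term of Fox et al. (1997).

```python
import math


def simulate(state, v, w, dt, steps):
    """Forward-simulate a unicycle model and return the visited (x, y) points."""
    x, y, theta = state
    points = []
    for _ in range(steps):
        theta += w * dt
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        points.append((x, y))
    return points


def dwa_select(state, v_now, w_now, goal, obstacles,
               v_max=0.3, w_max=1.0, acc_v=0.5, acc_w=2.0,
               dt=0.1, horizon=2.0, robot_radius=0.4, samples=7):
    """Pick the best admissible (v, w); return (0.0, 0.0) if none is safe."""
    # Dynamic window: velocities reachable within one control period dt.
    v_lo, v_hi = max(0.0, v_now - acc_v * dt), min(v_max, v_now + acc_v * dt)
    w_lo, w_hi = max(-w_max, w_now - acc_w * dt), min(w_max, w_now + acc_w * dt)
    steps = int(round(horizon / dt))
    best, best_score = (0.0, 0.0), -math.inf
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            points = simulate(state, v, w, dt, steps)
            clearance = min(
                (math.hypot(px - ox, py - oy)
                 for px, py in points for ox, oy in obstacles),
                default=math.inf,
            )
            if clearance <= robot_radius:
                continue  # this trajectory would hit an obstacle
            end_x, end_y = points[-1]
            goal_dist = math.hypot(goal[0] - end_x, goal[1] - end_y)
            # Assumed weights: progress to goal, clearance, forward speed.
            score = -goal_dist + 0.2 * min(clearance, 2.0) + 0.1 * v
            if score > best_score:
                best, best_score = (v, w), score
    return best


print(dwa_select((0.0, 0.0, 0.0), 0.2, 0.0, (5.0, 0.0), []))
```

With a free corridor ahead the sketch accelerates straight towards the goal; when every sampled trajectory is blocked it falls back to a stop command, which is a design choice of this example rather than a documented behaviour of the NS.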

5 RESULTS OF THE TESTS

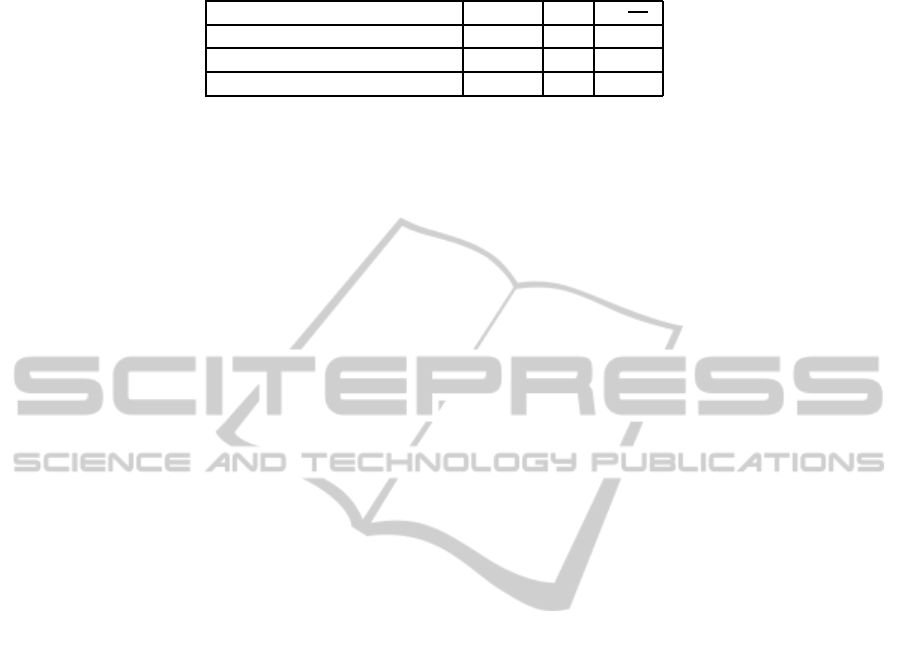

The three modes of operation are compared in terms of their dependent variables (t, CO, V), where t is the time taken from the initial to the final position, CO is the number of obstacle collisions, and V is the average velocity.

Table 1 presents the different modes of operation together with their dependent variables.

In JM_H, the PWC takes 1:40 minutes to travel from the initial to the final position, the path is smooth and the user does not collide with any obstacle. In JM_T, the PWC takes 2:11 minutes and does not collide with any obstacle. In AM, the PWC takes 2:00 minutes and slightly touches an obstacle with its right front wheel, as shown in Figure 9, but this does not prevent the PWC from reaching the desired goal.

Figure 9: The PWC slightly touches the box with the front right wheel.

Table 1 shows that JM_H is faster than the other two modes, because there is a direct interaction between the PWC and the user. Moreover, the user is proficient and experienced in controlling hand-operated joysticks. On the other hand, in AM the algorithms for localization, path planning, obstacle avoidance and control have to interact as one unit in order for the PWC to achieve its desired goal, which introduces a delay with respect to JM_H. JM_T is the slowest of the three modes. This could be because the user was inexperienced in using the tongue to control the wheelchair and in interacting with the ITCS; the performance can be improved by mastering the interaction with the ITCS.
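As a quick consistency check of the values in Table 1, the travelled path length implied by each mode can be computed, assuming that V is the path length divided by t. The path length itself is not reported in the paper, so the figures printed below are derived quantities, and the helper names are illustrative.

```python
def to_seconds(mmss: str) -> int:
    """Convert a 'm:ss' time string into seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def path_length(mmss: str, velocity: float) -> float:
    """Implied path length in metres, assuming V = length / t."""
    return to_seconds(mmss) * velocity


table_1 = {          # (t, V in m/s) as listed in Table 1
    "JM_H": ("1:40", 0.260),
    "JM_T": ("2:11", 0.198),
    "AM": ("2:00", 0.216),
}
for mode, (t, v) in table_1.items():
    print(f"{mode}: {to_seconds(t)} s x {v} m/s = {path_length(t, v):.1f} m")
```

All three modes give an implied path of about 26 m, which is what one would expect when the same corridor route is driven in each mode.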

6 CONCLUSIONS

A C400 Permobil PWC has been updated with a communication interface for sending and receiving velocity commands and a Hokuyo sensor for automation purposes. A pilot test was then performed in a laboratory corridor, where three control modalities were tested and compared.

• JM_H: The wheelchair was controlled using the included joystick.

• JM_T: The PWC was controlled by velocity commands given by an ITCS device placed in the oral cavity.

• AM: Automation algorithms that are part of the ROS-NS were tested. In this mode, the destination selection was performed with the ITCS device, while the wheelchair control was autonomous.

Table 1: Comparison between operation modes.

| Mode | t (min:s) | CO | V (m/s) |
|---|---|---|---|
| Hand-joystick mode (JM_H) | 1:40 | 0 | 0.260 |
| Tongue-joystick mode (JM_T) | 2:11 | 0 | 0.198 |
| Autonomous mode (AM) | 2:00 | 1 | 0.216 |

The tests carried out in the present research have served as a prelude to the development of a shared-control paradigm for individuals with severe motor impairments. The capabilities of the shared-control algorithms need to be broadened, and the algorithms need to be tested in cluttered environments and narrow doorways. These tests should involve users with severe upper-limb motor impairments driving the power wheelchair. The abilities and needs of users with high-level spinal cord injury are different from those of spastic users or users with ALS. Therefore, the amount of user control and the amount of automation should be different for different users, depending on the amount and quality of input the user can give to the system. We believe that this new paradigm will revolutionize the way power wheelchair users with severe upper-limb impairments interact with a wheelchair and the environment.

ACKNOWLEDGEMENTS

This work was supported by The European Social Fund (ESF) in the project Excellent Young Researchers at BUT (CZ.1.07/2.3.00/30.0039). This project is part of the IT4Innovations Centre of Excellence (CZ.1.05/1.1.00/02.0070). It was performed in collaboration between Brno University of Technology, Czech Republic and Lund University, Sweden.

REFERENCES

Agustin, J. S., Mateo, J. C., Hansen, J. P., and Villanueva, A. (2009). Evaluation of the potential of gaze input for game interaction. PsychNology Journal, 7(2):213–236.

Andrea, B., Simone, C., Giulio, F., and Matteucci, M. (2012). Introducing lurch: a shared autonomy robotic wheelchair with multimodal interfaces. In Proceedings of IROS 2012 Workshop on Progress, Challenges and Future Perspectives in Navigation and Manipulation Assistance for Robotic Wheelchairs, pages 1–6.

Bonarini, A., Ceriani, S., Fontana, G., and Matteucci, M. (2013). On the development of a multi-modal autonomous wheelchair. Medical Information Science Reference (an imprint of IGI Global), Hershey PA.

Caltenco, H., Lontis, E., and Andreasen Struijk, L. (2011). Fuzzy inference system for analog joystick emulation with an inductive tongue-computer interface. In Dremstrup, K., Rees, S., and Jensen, M., editors, 15th Nordic-Baltic Conference on Biomedical Engineering and Medical Physics (NBC 2011), volume 34 of IFMBE Proceedings, pages 191–194. Springer Berlin Heidelberg.

Christensen, H. and Garcia, J. (2003). Infrared Non-Contact Head Sensor, for Control of Wheelchair Movements. Assistive Technology: From Virtuality to Reality, A. Pruski and H. Knops (Eds) IOS Press, pages 336–340.

Demeester, E., Hüntemann, A., Vanhooydonck, D., Vanacker, G., Van Brussel, H., and Nuttin, M. (2008). User-adapted plan recognition and user-adapted shared control: A bayesian approach to semi-autonomous wheelchair driving. Autonomous Robots, 24(2):193–211.

Dieter, F., Wolfram, B., Frank, D., and Sebastian, T. (1999). Monte carlo localization: Efficient position estimation for mobile robots. In Proceedings of the National Conference on Artificial Intelligence (AAAI), pages 343–349.

Fernández-Madrigal, J.-A., Galindo, C., and González, J. (2004). Assistive navigation of a robotic wheelchair using a multihierarchical model of the environment. Integrated Computer-Aided Engineering, 11(4):309–322.

Fox, D., Burgard, W., and Thrun, S. (1997). The dynamic window approach to collision avoidance. Robotics & Automation Magazine, IEEE, 4(1):23–33.

Galindo, C., Cruz-Martin, A., Blanco, J., Fernández-Madrigal, J., and Gonzalez, J. (2006a). A multi-agent control architecture for a robotic wheelchair. Applied Bionics and Biomechanics, 3(3):179–189.

Galindo, C., Gonzalez, J., and Fernandez-Madrigal, J.-A. (2006b). Control architecture for human–robot integration: Application to a robotic wheelchair. Systems, Man, and Cybernetics, Part B: Cybernetics, IEEE Transactions on, 36(5):1053–1067.

Guo, S., Cooper, R., Boninger, M., Kwarciak, A., and Ammer, B. (2002). Development of power wheelchair chin-operated force-sensing joystick. In [Engineering in Medicine and Biology, 2002. 24th Annual Conference and the Annual Fall Meeting of the Biomedical Engineering Society] EMBS/BMES Conference, 2002. Proceedings of the Second Joint, volume 3, pages 2373–2374. IEEE.

Huo, X. and Ghovanloo, M. (2009). Using unconstrained tongue motion as an alternative control mechanism for wheeled mobility. IEEE Transactions on Biomedical Engineering, 56(6).

Huo, X. and Ghovanloo, M. (2010). Evaluation of a wireless wearable tongue-computer interface by individuals with high-level spinal cord injuries. Journal of Neural Engineering, 7(2).

Huo, X., Wang, J., and Ghovanloo, M. (2008). Wireless control of powered wheelchairs with tongue motion using tongue drive assistive technology. Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Conference, 2008:4199–4202.

Kim, J., Huo, X., Minocha, J., Holbrook, J., Laumann, A., and Ghovanloo, M. (2012). Evaluation of a smartphone platform as a wireless interface between tongue drive system and electric-powered wheelchairs. IEEE Trans. Biomed. Engineering, 59(6):1787–1796.

Lotte, N. and Andreasen, S. (2006). An inductive tongue computer interface for control of computers and assistive devices. IEEE Transactions on Biomedical Engineering, 53(12):2594–2597.

Lund, M. E., Christensen, H. V., Caltenco Arciniega, H. A., Lontis, E. R., Bentsen, B., and Andreasen Struijk, L. N. S. (2010). Inductive tongue control of powered wheelchairs. In Proceedings of the 32nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pages 3361–3364.

Quigley, M., Conley, K., Gerkey, B., Faust, J., Foote, T. B., Leibs, J., Wheeler, R., and Ng, A. Y. (2009). ROS: an open-source robot operating system. In ICRA Workshop on Open Source Software.

Richard, C., Edmund, F., and Rory, A. (2008). How many people would benefit from a smart wheelchair? Journal of Rehabilitation Research and Development, 45(1):53–72.

Simpson, R. C. (2004). Smart wheelchairs: A literature review. Journal of Rehabilitation Research and Development, 42(4):423–436.

T. Felzer and R. Nordman (2007). Alternative wheelchair control. In Proc. Int. IEEE-BAIS Symp., Res. Assistive Technol., pages 67–74.

Theodoridis, T., Hu, H., McDonald-Maier, K., and Gu, D. (2013). Kinect enabled monte carlo localisation for a robotic wheelchair. In Intelligent Autonomous Systems 12, pages 153–163. Springer.