Paris-rue-Madame Database

A 3D Mobile Laser Scanner Dataset for Benchmarking Urban Detection, Segmentation and Classification Methods

Andrés Serna¹, Beatriz Marcotegui¹, François Goulette² and Jean-Emmanuel Deschaud²

¹ MINES ParisTech, CMM-Center for Mathematical Morphology, 35 rue St Honoré, 77305 Fontainebleau, France

² MINES ParisTech, CAOR-Center for Robotics, 60 boulevard Saint-Michel, 75272 Paris, France

Keywords:

3D Database, Mobile Laser Scanner, Urban Analysis, Segmentation, Classification, Point-wise Evaluation.

Abstract:

This paper describes a publicly available 3D database from rue Madame, a street in the 6th Parisian district. Data have been acquired by the Mobile Laser Scanning (MLS) system L3D2 and correspond to a 160 m long street section. Annotation has been carried out in a manually assisted way. An initial annotation is obtained using an automatic segmentation algorithm. Then, a manual refinement is done and a label is assigned to each segmented object. Finally, a class is also manually assigned to each object. Available classes include facades, ground, cars, motorcycles, pedestrians and traffic signs, among others. The result is a list of (X, Y, Z, reflectance, label, class) points. Our aim is to offer the scientific community a manually labeled 3D dataset for benchmarking detection, segmentation and classification methods. Unlike other databases available in the state of the art, this dataset has been exhaustively annotated in order to include all available objects and to allow point-wise comparison.

1 INTRODUCTION

Nowadays, LiDAR (“light detection and ranging”) technology is prospering in the remote sensing community thanks to developments such as: Aerial Laser Scanning (ALS), useful for large-scale surveys of buildings, roads and forests; Terrestrial Laser Scanning (TLS), for more detailed but slower urban surveys in outdoor and indoor environments; Mobile Laser Scanning (MLS), less precise than TLS but much more productive since the sensors are mounted on a vehicle; and, more recently, “stop and go” systems, easily transportable TLS systems that trade off precision against productivity.

Thanks to all these technologies, the amount of available 3D geographical data and processing techniques has bloomed in recent years. Many semi-automatic and automatic methods for analyzing 3D urban point clouds can be found in the literature, and it is an active research area. However, there is no general consensus about the best detection, segmentation and classification methods, and the choice is application dependent. One of the main drawbacks is the lack of publicly available databases allowing benchmarking.

In the literature, most available urban data consist of close-range images, aerial images and satellite images, but only a few laser datasets (ISPRS, 2013; IGN, 2013). Moreover, manual annotations and algorithm outputs are rarely found in available 3D repositories (Nüchter and Lingemann, 2011; CoE LaSR, 2013).

Some available data include the Oakland dataset (Munoz et al., 2009), which contains 1.6 million points collected around the Carnegie Mellon University campus in Oakland, Pittsburgh, USA. Data are provided in ASCII format, one (X, Y, Z, label, confidence) point per line, together with VRML files and label counts. The training/validation and testing data contain 5 labels (scatter misc, default wires, utility poles, load bearing and facades). The Ohio database (Golovinskiy et al., 2009) is a combination of ALS and TLS data from the city of Ottawa (Ohio, USA). It contains 26 tiles of 100 × 100 meters each, with several objects such as buildings, trees, cars and lampposts. However, the ground truth annotation only consists of a 2D labeled point at the center of each object, so segmentation results cannot be evaluated point by point. The Enschede database (Zhou and Vosselman, 2012) contains residential streets approximately 1 km long in the city of Enschede (The Netherlands). Its ground truth annotation consists of 2D geo-referenced lines marking curbstones, and a well-defined evaluation method is available using buffers around each 2D line. The drawback of this dataset is that no other objects are annotated. The Paris-rue-Soufflot database (Hernández and Marcotegui, 2009) contains MLS data from a 500 m long street in the 5th Parisian district. Six classes have been annotated: facades, ground, cars, lampposts, pedestrians and others.

Serna A., Marcotegui B., Goulette F. and Deschaud J. Paris-rue-Madame Database - A 3D Mobile Laser Scanner Dataset for Benchmarking Urban Detection, Segmentation and Classification Methods. In Proceedings of the 3rd International Conference on Pattern Recognition Applications and Methods (ICPRAM-2014), pages 819-824. DOI: 10.5220/0004934808190824. ISBN: 978-989-758-018-5. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

In this paper, we present a 3D MLS database for benchmarking detection, segmentation and classification methods. Each point in the 3D point cloud has been segmented and classified, allowing point-wise evaluations. Additionally, our annotation includes all available objects in the urban scene. Data have been acquired and processed in the framework of the TerraMobilita project (http://cmm.ensmp.fr/TerraMobilita/).

The paper is organized as follows. Section 2 recalls some basic definitions. Section 3 describes the Paris-rue-Madame database. Section 4 explains the MLS system and acquisition details. Section 5 briefly presents our manually assisted annotation protocol. Section 6 gives download and license information. Finally, Section 7 concludes the work.

2 BACKGROUND

A typical 3D urban analysis method includes 5 main steps: i) data filtering/down-sampling, in order to reduce outliers and redundant data; ii) Digital Terrain Model (DTM) generation; iii) object detection, in order to define object hypotheses and regions of interest; iv) object segmentation, in order to extract each individual object; and v) object classification, in order to assign a semantic category to each object. Several definitions of the detection, segmentation and classification steps can be found in the scientific community. For clarity, let us define these concepts as they should be understood with this dataset:

Detection: An object is considered detected if it is included in the list of object hypotheses, i.e. it has not been suppressed by any filtering/down-sampling method and it has not been included as part of the DTM. Note that an object hypothesis may contain several connected objects or only part of an object. In the detection step, we are only interested in keeping all possible objects. This is important because, in most works reported in the literature, non-detected objects cannot be recovered in subsequent algorithm steps.

Segmentation: An object is considered segmented if it is correctly isolated as a single object, i.e. connected objects are correctly separated (there is no sub-segmentation) and each individual object lies entirely inside only one connected component (there is no over-segmentation). This is important because many algorithms based on clustering and connected components can wrongly merge objects touching each other, e.g. motorcycles parked next to a facade, pedestrians walking together, or cars parked close to one another.

Classification: In the classification step, a category is assigned to each segmented object. Each class represents an urban semantic entity. Depending on the application, several classes can be defined: facade, ground, curbstone, pedestrian, car, lamppost, etc.
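Because every point of this dataset carries a label and a class, the three definitions above can be checked point-wise. The following Python sketch is one possible reading of them, not the authors' evaluation code: `kept` is a hypothetical boolean mask of points that survive filtering and DTM extraction, `pred_segment` holds the segment ids produced by the method under test, `segment_class` is a hypothetical mapping from segment id to predicted class, and the 50% detection threshold is an arbitrary assumption. "Sub-segmentation" follows this paper's usage (several objects merged into one segment), which other works often call under-segmentation.

```python
from collections import defaultdict

import numpy as np


def detected_objects(gt_label, kept, min_fraction=0.5):
    """Detection: map each ground-truth object to True when at least
    `min_fraction` of its points remain in the object hypotheses (not removed
    by filtering, not absorbed into the DTM). The threshold is an assumption."""
    ids, inverse = np.unique(gt_label, return_inverse=True)
    inverse = inverse.reshape(-1)
    total = np.bincount(inverse, minlength=ids.size)
    kept_count = np.bincount(inverse, weights=np.asarray(kept, dtype=float),
                             minlength=ids.size)
    return {int(i): bool(k / t >= min_fraction)
            for i, k, t in zip(ids, kept_count, total)}


def segmentation_status(gt_label, pred_segment):
    """Segmentation: tag each ground-truth object as 'ok', 'over' (split over
    several predicted segments), 'sub' (its segment also holds other objects)
    or 'over+sub'. Assumes every point carries a predicted segment id."""
    segs_of_obj, objs_of_seg = defaultdict(set), defaultdict(set)
    for g, s in zip(np.asarray(gt_label).tolist(), np.asarray(pred_segment).tolist()):
        segs_of_obj[g].add(s)
        objs_of_seg[s].add(g)
    names = {(False, False): "ok", (True, False): "over",
             (False, True): "sub", (True, True): "over+sub"}
    status = {}
    for g, segs in segs_of_obj.items():
        over = len(segs) > 1
        sub = any(len(objs_of_seg[s]) > 1 for s in segs)
        status[g] = names[(over, sub)]
    return status


def point_classes(pred_segment, segment_class):
    """Classification: broadcast the class given to each segment to its points,
    so it can be compared point-wise with the ground-truth class field."""
    seg_ids, inverse = np.unique(pred_segment, return_inverse=True)
    lut = np.array([segment_class[int(s)] for s in seg_ids])
    return lut[inverse.reshape(-1)]
```

For instance, `segmentation_status([1, 1, 2, 2], [7, 7, 7, 8])` tags object 1 as `'sub'` and object 2 as `'over+sub'`.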

3 DATA DESCRIPTION

The Paris-rue-Madame dataset contains 3D MLS data from rue Madame, a street in the 6th Parisian district (France). Figure 1 shows an orthophoto of the test zone, a street section approximately 160 m long between rue Mézières and rue Vaugirard. The acquisition was made on February 8, 2013 at 13:30 Universal Time (UT).

Figure 1: Rue Madame, Paris (France). Orthophoto from IGN-Google Maps.

The dataset contains two PLY files with 10 million points each. Each file contains a list of (X, Y, Z, reflectance, label, class) points, where XYZ are geo-referenced (E, N, U) coordinates in the Lambert 93 and IGN1969 altitude (grid RAF09) reference system, reflectance is the laser intensity, label is the object identifier obtained after segmentation and class is the object category. In order to increase data precision, an offset has been subtracted from the X and Y coordinates: $X_0 = 650976$ m and $Y_0 = 6861466$ m, respectively.
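Adding the offsets back recovers absolute Lambert 93 coordinates. The sketch below does that and also illustrates one plausible reason why the offset increases precision, assuming the coordinates are stored as 32-bit floats (the text does not state the storage type): near a northing of 6.86 million metres, consecutive float32 values are 0.5 m apart, whereas within a few hundred metres of the origin they are only tens of micrometres apart. The function name `to_lambert93` is ours.

```python
import numpy as np

# Offsets subtracted from the published X and Y coordinates (metres).
X0, Y0 = 650976.0, 6861466.0


def to_lambert93(x, y):
    """Add the offsets back (in float64) to obtain absolute easting/northing."""
    return np.asarray(x, dtype=np.float64) + X0, np.asarray(y, dtype=np.float64) + Y0


# Gap between consecutive float32 values at two magnitudes (assumes float32 storage).
print(np.spacing(np.float32(Y0)))     # 0.5 m near the absolute northing
print(np.spacing(np.float32(160.0)))  # about 1.5e-05 m inside a 160 m street section
```

Converting to float64 before adding the offsets matters: adding them in float32 would reintroduce the 0.5 m quantization.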

The available files are “GT_Madame1_2.ply” and “GT_Madame1_3.ply”, both encoded in the binary big-endian PLY format, version 1.0.
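Both files can be opened with any PLY library. For readers who prefer no extra dependency, the sketch below parses the ASCII header and maps the binary body onto a NumPy structured array with big-endian fields. It assumes that `vertex` is the first element and that all of its properties are scalars; field names are read from the header, so the sketch does not assume what the files actually call the X, Y, Z, reflectance, label and class fields. The function name `read_ply_vertices` is hypothetical.

```python
import numpy as np

# PLY scalar type names (legacy and sized spellings) mapped to NumPy type codes.
_PLY_TYPES = {
    "char": "i1", "int8": "i1", "uchar": "u1", "uint8": "u1",
    "short": "i2", "int16": "i2", "ushort": "u2", "uint16": "u2",
    "int": "i4", "int32": "i4", "uint": "u4", "uint32": "u4",
    "float": "f4", "float32": "f4", "double": "f8", "float64": "f8",
}
_BYTE_ORDER = {"binary_big_endian": ">", "binary_little_endian": "<"}


def read_ply_vertices(path):
    """Read the 'vertex' element of a binary PLY file into a structured array.

    Sketch only: assumes 'vertex' is the first element in the file and that
    all of its properties are scalars (no list properties).
    """
    with open(path, "rb") as f:
        if f.readline().strip() != b"ply":
            raise ValueError("not a PLY file")
        fmt, count, fields, in_vertex = None, 0, [], False
        while True:
            raw = f.readline()
            if not raw:
                raise ValueError("header ended without 'end_header'")
            tokens = raw.decode("latin-1").split()  # comments may hold accents
            if not tokens:
                continue
            if tokens[0] == "format":
                fmt = tokens[1]
            elif tokens[0] == "element":
                in_vertex = tokens[1] == "vertex"
                if in_vertex:
                    count = int(tokens[2])
            elif tokens[0] == "property" and in_vertex:
                if tokens[1] == "list":
                    raise ValueError("list properties are not handled by this sketch")
                fields.append((tokens[2], _PLY_TYPES[tokens[1]]))
            elif tokens[0] == "end_header":
                break
        if fmt not in _BYTE_ORDER:
            raise ValueError(f"unsupported PLY format: {fmt}")
        dtype = np.dtype([(name, _BYTE_ORDER[fmt] + code) for name, code in fields])
        data = f.read(count * dtype.itemsize)
        return np.frombuffer(data, dtype=dtype, count=count)
```

Calling `read_ply_vertices("GT_Madame1_2.ply").dtype.names` then shows the field names actually declared in the file.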

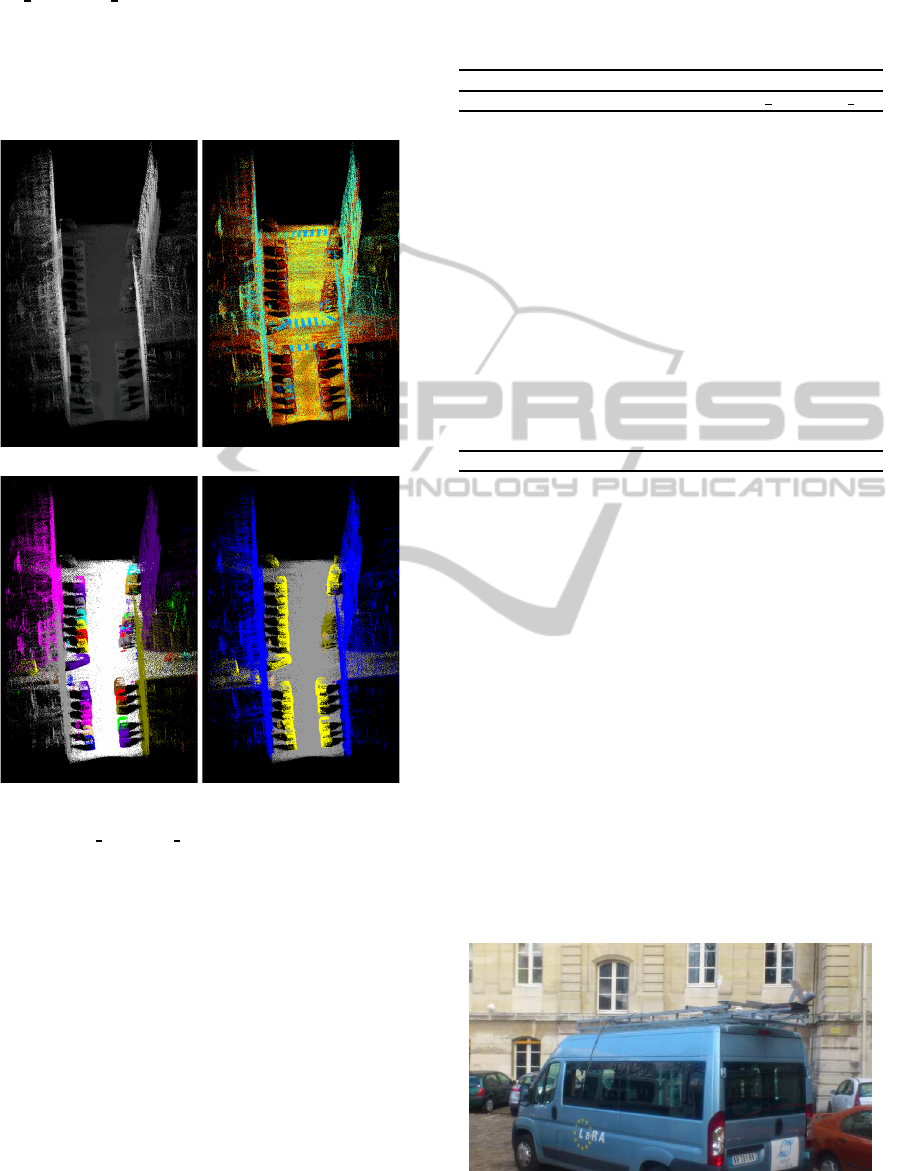

Figure 2 presents one of the 3D point clouds of this database colored by point height (Z coordinate), reflectance, object label and object class.

Figure 2: “GT_Madame1_2.ply” file: 3D point cloud colored by its available fields: (a) height (Z coordinate), (b) laser reflectance, (c) object label, (d) object class. For the object label, each color represents a different object (some colors have been repeated, for visualization purposes only). For object class visualization: facades (blue), ground (gray), cars (yellow), motorcycles (olive), traffic signs (goldenrod), pedestrians (pink).

This database contains 642 objects categorized in 26 classes, as shown in Table 1. It is noteworthy that several objects inside buildings have been acquired through windows and open doors; these objects have been annotated as facades. Several other “special” classes have been added because their objects are too different to be mixed with others. For instance, fast pedestrians and pedestrians+something have different geometrical features than a simple pedestrian. The idea of this annotation is to include as many classes as possible; each user may then merge or exclude classes depending on the application.

Table 1: Available classes and number of objects in the Paris-rue-Madame dataset.

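| Class | Class name | Objects in GT_Madame1_2.ply | Objects in GT_Madame1_3.ply |
|---:|---|---:|---:|
| 0 | Background | 7 | 35 |
| 1 | Facade | 181 | 117 |
| 2 | Ground | 4 | 23 |
| 4 | Cars | 39 | 31 |
| 7 | Light poles | 0 | 1 |
| 9 | Pedestrians | 3 | 7 |
| 10 | Motorcycles | 23 | 9 |
| 14 | Traffic signs | 5 | 1 |
| 15 | Trash can | 2 | 1 |
| 19 | Wall Light | 6 | 1 |
| 20 | Balcony Plant | 3 | 2 |
| 21 | Parking meter | 1 | 1 |
| 22 | Fast pedestrian | 2 | 2 |
| 23 | Wall Sign | 1 | 3 |
| 24 | Pedestrian + something | 1 | 0 |
| 25 | Noise | 46 | 80 |
| 26 | Pot plant | 0 | 4 |
| | Total | 324 | 318 |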
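Table 1 can serve as a lookup when merging or excluding classes as suggested above. The sketch below encodes the table's class IDs, applies a hypothetical user choice (folding classes 22 and 24 into pedestrians and discarding noise), and counts distinct labels per class, which is one way to recompute the object counts of Table 1 under the assumption that each label identifier belongs to a single class. We have not checked that it reproduces the table on the released files; `MERGE`, `EXCLUDE` and both function names are illustrative.

```python
import numpy as np

# Class IDs and names as listed in Table 1.
CLASS_NAMES = {0: "Background", 1: "Facade", 2: "Ground", 4: "Cars", 7: "Light poles",
               9: "Pedestrians", 10: "Motorcycles", 14: "Traffic signs", 15: "Trash can",
               19: "Wall Light", 20: "Balcony Plant", 21: "Parking meter",
               22: "Fast pedestrian", 23: "Wall Sign", 24: "Pedestrian + something",
               25: "Noise", 26: "Pot plant"}

# Hypothetical user choice: fold pedestrian variants into class 9 and drop noise.
MERGE = {22: 9, 24: 9}
EXCLUDE = {25}


def remap_classes(cls):
    """Return remapped per-point classes and a boolean mask of points to keep."""
    cls = np.asarray(cls)
    out = cls.copy()
    for src, dst in MERGE.items():
        out[cls == src] = dst
    keep = ~np.isin(cls, list(EXCLUDE))
    return out, keep


def objects_per_class(label, cls):
    """Count distinct object labels per class (assumes one class per label)."""
    pairs = np.unique(np.stack([np.asarray(cls), np.asarray(label)], axis=1), axis=0)
    ids, counts = np.unique(pairs[:, 0], return_counts=True)
    return dict(zip(ids.tolist(), counts.tolist()))
```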

This database differs from others available in the state of the art in that the entire 3D point cloud has been segmented and classified, i.e. each point carries a label and a class. Thus, point-wise evaluation of detection, segmentation and classification methods is possible.
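Since every point has a class, a classifier can be scored directly on points. The sketch below computes per-class precision, recall and intersection over union (IoU) for two aligned arrays of ground-truth and predicted classes; this choice of metrics is ours, as the paper does not prescribe an evaluation protocol.

```python
import numpy as np


def pointwise_scores(gt_class, pred_class):
    """Per-class point-wise precision, recall and IoU for every class that
    appears in either the ground truth or the prediction."""
    gt, pred = np.asarray(gt_class), np.asarray(pred_class)
    scores = {}
    for c in np.union1d(gt, pred).tolist():
        tp = np.count_nonzero((gt == c) & (pred == c))  # correctly labeled points
        fp = np.count_nonzero((gt != c) & (pred == c))  # points wrongly given class c
        fn = np.count_nonzero((gt == c) & (pred != c))  # class-c points missed
        scores[c] = {
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
            "iou": tp / (tp + fp + fn),  # never zero: c occurs in gt or pred
        }
    return scores
```

Because the released classes include Background and Noise, users may want to mask those points out (for example with a mask like the one built from Table 1) before scoring.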

4 ACQUISITION

The acquisition has been carried out by the MLS system L3D2 from the robotics laboratory CAOR-MINES ParisTech (Goulette et al., 2006). This system is equipped with a Velodyne HDL-32E, as shown in Figure 3. In this sensor, 32 lasers are mounted in a single head and the entire unit spins, giving much denser point clouds than classic Riegl sensors (Velodyne, 2012).

Figure 3: MLS system L3D2 from CAOR-MINES ParisTech


5 ANNOTATION

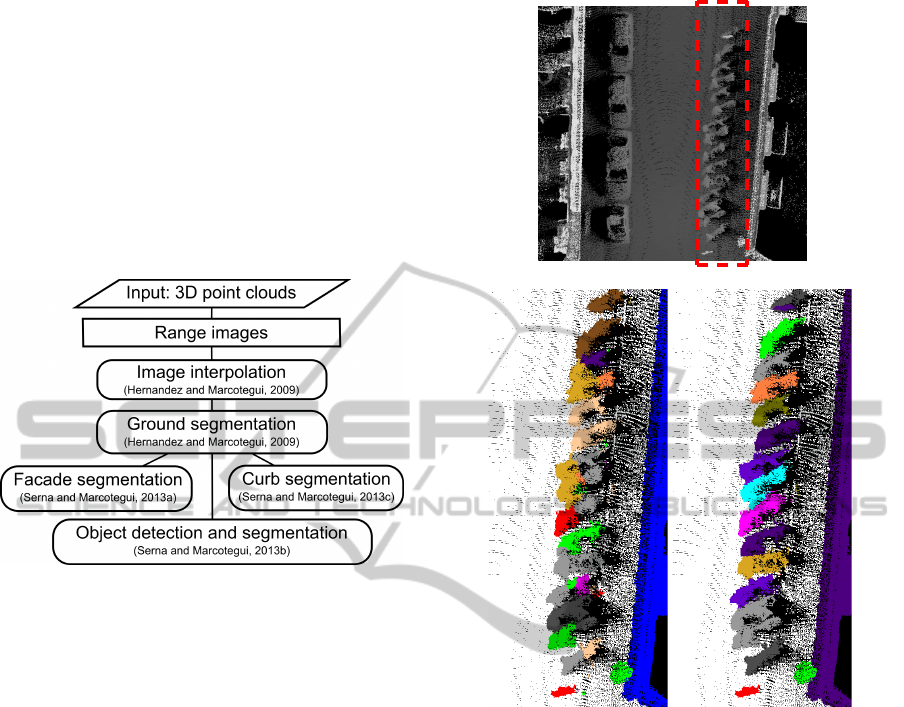

Annotation has been carried out in a manually assisted way. An initial segmentation is obtained using an automatic method based on elevation images (Serna and Marcotegui, 2013b). The workflow is shown in Figure 4. For further details and complete analyses of each step, the reader is encouraged to review the following three works: (Hernández and Marcotegui, 2009), (Serna and Marcotegui, 2013a) and (Serna and Marcotegui, 2013c).

Figure 4: Workflow of our automatic segmentation methodology.
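The automatic segmentation relies on elevation images (Serna and Marcotegui, 2013b). As a rough illustration of what such an image is, and not of the authors' implementation, the sketch below projects points onto a horizontal grid and keeps the maximum (or, with `np.fmin`, the minimum) Z per pixel, leaving empty pixels as NaN. The 0.2 m pixel size and the function name are arbitrary assumptions.

```python
import numpy as np


def elevation_image(xyz, pixel=0.2, reducer=np.fmax):
    """Project points onto a horizontal grid (rows follow X, columns follow Y)
    and reduce the Z values falling in each pixel; empty pixels stay NaN."""
    xyz = np.asarray(xyz, dtype=float)
    ij = np.floor((xyz[:, :2] - xyz[:, :2].min(axis=0)) / pixel).astype(int)
    img = np.full(tuple(ij.max(axis=0) + 1), np.nan)
    # fmax/fmin ignore NaN, so the first point in a pixel simply replaces it.
    reducer.at(img, (ij[:, 0], ij[:, 1]), xyz[:, 2])
    return img
```

Image-processing operators, such as the mathematical morphology used in the cited works, can then be applied to the resulting 2D grid.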

After this automatic step, a manual refinement is carried out in order to correct possible errors in the detection and segmentation steps. Then, a label is assigned to each segmented object. Finally, a class is also manually assigned in order to categorize each segmented object.

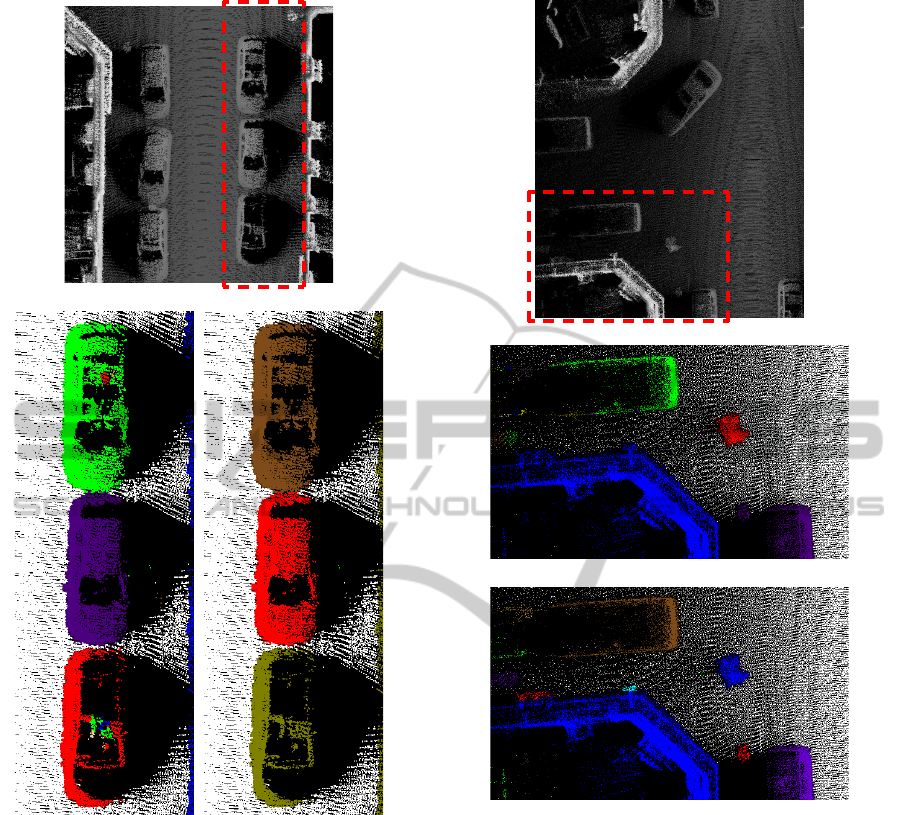

During manual refinement, three typical segmentation errors are found and corrected: i) bad segmentation of some connected objects, e.g. motorcycles parked close to each other are not correctly separated, as shown in Figure 5; ii) over-segmentation of some objects due to artifacts or noise, e.g. some cars are over-segmented on their roof, as shown in Figure 6; iii) sub-segmentation of some objects, e.g. some objects touching the facades are not well separated, as shown in Figure 7.

6 DOWNLOAD & LICENSE

The Paris-rue-Madame database is available at:

http://cmm.ensmp.fr/~serna/rueMadameDataset.html

and it is made available under the Creative Commons Attribution Non-Commercial No Derivatives (CC-BY-NC-ND-3.0) Licence.

(This work is made available under the terms of the Creative Commons Attribution - NonCommercial - NoDerivs 3.0 France license, http://creativecommons.org/licenses/by-nc-nd/3.0/fr/.)

Figure 5: Manual refinement of connected motorcycles: (a) height, (b) automatic segmentation (zoomed), (c) manual refinement (zoomed).

7 CONCLUSIONS

We have presented a manually annotated 3D MLS database from rue Madame, a street in the 6th Parisian district. Each 3D point has been labeled and classified, resulting in a list of (X, Y, Z, reflectance, label, class) points.

The database has been acquired by the L3D2 vehicle, an MLS system from the robotics laboratory (CAOR) at MINES ParisTech. A distinctive feature of this system is that it uses a Velodyne sensor aligned in a similar way to a Riegl sensor but providing much denser 3D point clouds.


Figure 6: Manual refinement of over-segmented cars: (a) height, (b) automatic segmentation (zoomed), (c) manual refinement (zoomed).

Annotation has been carried out in a manually assisted way by the Center for Mathematical Morphology (CMM) at MINES ParisTech. First, an automatic segmentation method is applied. Then, manual refinement and classification are done. This approach is faster than a completely manual one and provides accurate results. This dataset differs from others available in the state of the art in that each point has been segmented and classified, allowing point-wise benchmarking.

In future work, other datasets acquired in the framework of the TerraMobilita project will be annotated and made available to the scientific community.

Figure 7: Manual refinement of sub-segmented objects touching the facade: (a) height, (b) automatic segmentation (zoomed), (c) manual refinement (zoomed).

ACKNOWLEDGEMENTS

The work reported in this paper has been performed as part of the Cap Digital Business Cluster TerraMobilita Project.

REFERENCES

CoE LaSR (2013). Centre of Excellence in Laser Scanning Research. Finnish Geodetic Institute. http://www.fgi.fi/coelasr/ (Last accessed: December 16, 2013).


Golovinskiy, A., Kim, V., and Funkhouser, T. (2009). Shape-based recognition of 3D point clouds in urban environments. In IEEE International Conference on Computer Vision, pages 2154–2161, Kyoto, Japan.

Goulette, F., Nashashibi, F., Ammoun, S., and Laurgeau, C. (2006). An integrated on-board laser range sensing system for On-the-Way City and Road Modelling. The ISPRS International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, XXXVI-1:1–6.

Hernández, J. and Marcotegui, B. (2009). Filtering of artifacts and pavement segmentation from mobile LiDAR data. The ISPRS International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, XXXVIII-3/W8:329–333.

IGN (2013). IGN - Geospatial and Terrestrial Imagery. IGN French National Mapping Agency. http://isprs.ign.fr/home_en.htm (Last accessed: October 28, 2013).

ISPRS (2013). The ISPRS data set collection. ISPRS International Society for Photogrammetry and Remote Sensing. http://www.isprs.org/data/ (Last accessed: October 28, 2013).

Munoz, D., Bagnell, J. A., Vandapel, N., and Hebert, M. (2009). Contextual Classification with Functional Max-Margin Markov Networks. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR).

Nüchter, A. and Lingemann, K. (2011). Robotic 3D Scan Repository. Jacobs University Bremen gGmbH and University of Osnabrück. http://kos.informatik.uni-osnabrueck.de/3Dscans/ (Last accessed: December 16, 2013).

Serna, A. and Marcotegui, B. (2013a). Attribute controlled reconstruction and adaptive mathematical morphology. In 2013 International Symposium on Mathematical Morphology (ISMM), pages 207–218, Uppsala, Sweden.

Serna, A. and Marcotegui, B. (2013b). Detection, segmentation and classification of 3D urban objects using mathematical morphology and supervised learning. ISPRS Journal of Photogrammetry and Remote Sensing (accepted).

Serna, A. and Marcotegui, B. (2013c). Urban accessibility diagnosis from mobile laser scanning data. ISPRS Journal of Photogrammetry and Remote Sensing, 84:23–32.

Velodyne (2012). The HDL-32E Velodyne LiDAR sensor. Velodyne Lidar 2012. http://velodynelidar.com/lidar/hdlproducts/hdl32e.aspx (Last accessed: December 16, 2013).

Zhou, L. and Vosselman, G. (2012). Mapping curbstones in airborne and mobile laser scanning data. International Journal of Applied Earth Observation and Geoinformation, 18(1):293–304.
