Sensing Gestures for Business Intelligence

David Bell, Nikhil Makwana and Chidozie Mgbemena

Department of Information Systems and Computing (DISC), Brunel University, London, U.K.

Keywords: Motion Sensors, Gestures, Business Intelligence.

Abstract: The combination of sensor data with analytic techniques is growing in popularity for both practitioners and

researchers as an Internet of Things (IoT) offers new opportunities and insights. Organisations are trying to

use sensor technologies to derive intelligence and gain a competitive edge in their industries. Obtaining data

from sensors might not pose too much of a problem; however, subsequently using that data to support an

organisation’s decision making can be more problematic. Understanding how sensor data analytics can be

undertaken is the first step to deriving business intelligence from front line retail environments. This paper

explores the use of the Microsoft Kinect sensor to provide intelligence by identifying and sensing gestures

to better understand customer behaviour in the retail space.

1 INTRODUCTION

Organisations generate vast amounts of data from

their day to day operations (McRobbie, et al., 2012;

Vera-Baquero, et al., 2013). From these data

sources, some organisations are able to harvest

insightful information termed Business Intelligence

(BI) (Feng & Liu, 2010). It has evolved into a set of

computer based techniques used to identify, extract

and analyse business data to better understand

current business trends and more importantly predict

future business patterns in order to gain competitive

advantages and effectively take steps to deliver

better performances. Globally dispersed customers

and extended supply chains have further motivated

the use of BI. Clearly, key data from customers

moving around the commercial environment is

missing when the focus is only on completed

purchases.

Importantly though, a growing Internet of

Things (IoT) is making our environment smarter and

provides opportunities to analyse data that is

generated from smart objects such as sensors

(Doody & Shields, 2012). Increasingly, businesses

are discovering that environments and machines

augmented with sensors are able to send information

back to headquarters that once analysed can provide

a competitive advantage (Hewlett-Packard, 2013).

Making sense of the plethora of data generated by

such devices and leveraging reality mining

applications (Doody & Shields, 2012) can provide

an ability to extract useful knowledge from real

world sensor data and provide the basis to identify

predictable patterns. Sensor applications are widely

reported in literature with examples of location

technology and smartphone devices used to request

cabs, effectively saving cab drivers valuable time

and money in looking for fares (Hailo, 2013).

Within retail industries, footfall sensors are used to

provide intelligence for staff scheduling that ensures

customer demands are met without overheads being

excessive (Ipsos, 2013). Together with an overall

trend in Point-of-Sale (POS) transactions, loyalty

cards used by major retailers are being utilised to

track customer purchases leading to a greater

understanding of shopping habits and enabling

businesses to track the success of marketing

initiatives (Zakaria, et al., 2012).

Despite uncovering a wealth of data about

shopper demographics and their purchase history,

insight into customers’ in-store behaviour is often

missing. Understanding where customers spend

most of their time within a store, identifying

which products and promotional displays are most

popular, and measuring how long customers wait in line can

address this lack of ‘front-line’ knowledge. This

paper explores how motion sensors can be used to better

understand the retail environment. Leveraging

motion sensing technology provides the ability to

precisely track customers and observe human

behaviour for pattern analysis and business

intelligence (Cao, 2008). The paper reports on

related work in both motion sensing and business

intelligence before coverage of our specific pattern

Bell D., Makwana N. and Mgbemena C.

Sensing Gestures for Business Intelligence.

DOI: 10.5220/0004878100520060

In Proceedings of the 3rd International Conference on Sensor Networks (SENSORNETS-2014), pages 52-60

ISBN: 978-989-758-001-7

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

sensing approach and framework. A practical

experiment is then presented that leads to our BI

architecture discussion and concluding remarks.

2 RELATED WORK

Two areas of interest in this research paper are

Business Intelligence (BI) and Motion Sensor

technology. In light of this, the purpose of this

section is to highlight business intelligence currently

used by businesses and identify architectures used in

case studies. Secondly, research on motion sensors is

used to identify suitable sensors that are able to

provide motion data to a business intelligence

framework.

2.1 Business Intelligence

Luhn coined the term ‘Business Intelligence’ (BI) in

1958 (Luhn, 1958). He defined BI as “the ability to

apprehend the interrelationships of presented facts in

such a way as to guide actions towards desired

goals” (Luhn, 1958, pp. 314-319). Despite having

less significance at the time (when compared to its

relevance today), this key work underpins the theory

and practice of decision support systems. The work

carried out by Luhn has been fundamental in

providing reporting, Online Analytical Processing

(OLAP) and visualisation capabilities used in business

decision making today. As a modern day term, BI is

used to describe the collection of processes, tools

and technology helping in achieving more profit

through improving productivity, sales and services

of an enterprise. With the aid of BI methods,

corporate data can be better analysed and

transformed into useful knowledge, which in turn is

required to achieve a profitable business action

(Martin, et al., 2012). Modern day business

intelligence tools include applications such as

Executive Information Systems (EIS), Customer

Relationship Management (CRM) and Corporate

Performance Management (CPM) (Tvrdikova,

2007). What all of these commercial applications

have in common is the ability to use multi-

dimensional data stores that have the benefits of

reporting rapid changes as opposed to waiting on

batch jobs that run overnight. However, despite their

rapid response to change, drawbacks include

increased complexity (Vassiliadis, 1998) and

maintenance.

Business Intelligence systems are often regarded

as business critical solutions and invaluable tools,

adding significant value to an enterprise. These

systems have often become of paramount

importance and are now considered a vital tool for

day-to-day business (Xu, et al., 2007). The main

purposes of employing business intelligence tools

are to provide the right information to the right

people at the right time, with the overall aim of

allowing effective decision making to take place

(Xu, et al., 2007; Krneta, et al., 2008).

Examples of successful BI implementation include

Netflix (an on-demand internet streaming media

provider), which uses analytics to analyse customer

behaviour and better serve its subscriber base by

recommending the right options for its viewers

(Microstrategy, 2013). Carphone Warehouse (an

independent retail telecommunications provider)

utilises business intelligence reporting tools for

making decisions such as adjusting headcount based

on footfall traffic and conducting in store employee

reviews for tracking performance (Computing,

2012). Barclays Bank has employed the use of BI

systems to reduce operational costs helping them in

efficient recruitment planning and training schedules

(Ridgian, 2011).

BI is a necessity in the retail industry. It is

essential for understanding typical in-store

activities and for identifying potential

intelligence that could be used to gain a

competitive edge. Burke (2002) identifies consumer

behaviours such as: 1) entering the store, 2) entering

a specific aisle, 3) checking out and paying for

items, and 4) post-purchase customer service.

These behaviours are also applicable to

telecommunication retail environments, although

there consumers are viewing either live or dummy phones

(PhoneDog Media, 2013).

Many organisations now rely on and are heavy

users of Business Intelligence systems. Tesco, for

example, is a multinational supermarket that is now

rated as the second most powerful retailer on the

planet. A primary factor in their success is having BI

systems that enable them to gather data and

analytical skills to test ideas and turn insights into

customer and business relevant actions (Advanced

Performance Institute, 2013).

Leonidas, et al. (2012) present a middleware

framework named ‘PLATO’ to provide business

intelligence applications. “The main goal is to

provide a framework for the development of

applications involving heterogeneous sensors”

(Leonidas, et al., 2012, pp. 266-270). The

framework supports collection, processing and

analysis of the data originating from sensors while

enabling the development of business intelligence.

Wang & Chen (2012) present a system that obtains the

characteristic travel behaviour of an urban

population and builds a framework using large scale

transportation datasets to extract value added

information with the ultimate aim of reducing fuel

consumption, improving customer satisfaction and

business performance. Hondori, et al. (2012) present a

tele-rehabilitation study that monitors post stroke

patients using sensor technology to provide

intelligence in monitoring their activities of daily

living and progress in a home environment. Despite

many studies presenting research from post stroke

patients using wearable sensors, (O’Keeffe, et al.,

2007; Zhang, et al., 2012; Hester, et al., 2006), there

are many reported drawbacks including the wearable

sensor being obtrusive, heavy, costly and generally

inconvenient (Benning, et al., 2007; Hondori, et al.,

2012). Hondori, et al. (2012) leveraged motion

sensor technology using a Kinect sensor which is

completely wireless and a non-wearable technology

that provides a more natural experience to sense the

health status of the patient (Hondori, et al., 2012).

2.2 Motion Sensing

“Motion detection is the fundamental process of

detecting whether any entities exist and are moving

around the area of interest” (Xiao, et al., 2012, pp. 229-235).

The motion sensor/detector is a converter that

measures a physical quantity and converts it into a

signal form which can be read by an electronic

instrument.

Various sensors exist for different purposes such

as accelerometers, gyroscopes and magnetometers.

Accelerometers are devices that measure

acceleration. A modern day use of

accelerometers can be seen in user interface control

on smartphones, where the tilting motion is

used to differentiate between portrait and landscape.

A gyroscope can be used to either measure, or

maintain, the orientation of a device. Unlike an

accelerometer, which measures the linear

acceleration of the device, a gyroscope senses

rotation (angular velocity), from which changes in

orientation can be tracked. Gyroscope technology has

helped make the gaming experience

as realistic as possible. Magnetometers measure the

ambient magnetic field and provide digital compass

like applications on smartphone devices. These are

also more traditional sensors which are small and

come at a reasonable cost (Amma, et al., 2010) –

when compared to smartphones or tablets. The first

generation of sensors included pen based and hand

worn sensors known as ‘data gloves.’ However, the

latter form of these sensors has limitations: they

feel unnatural, are obtrusive and are generally

a burden to wear (Ren, et al., 2013). In contrast to

the data gloves, and with the recent advancements in

HCI and motion sensor technology, many products

have surfaced, repurposing traditional sensing

technology into new forms. This is more prevalent in

gaming systems such as the Nintendo Wii Remote,

PlayStation Eye/Move and the Microsoft Kinect for

Xbox; each offering a more natural experience and

touch free way of communication.
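The portrait/landscape switching mentioned above for accelerometers can be illustrated with a minimal sketch. It assumes the device is roughly at rest, so the reading is dominated by gravity, and that the y axis runs along the long edge of the device; the function name `screen_orientation` and the axis convention are illustrative assumptions, not part of any particular smartphone API.

```python
def screen_orientation(ax, ay):
    """Classify orientation from the gravity components on the x and y axes.

    Assumes the y axis runs along the long edge of the device, so gravity
    acting mostly along y means the device is held upright (portrait).
    """
    return "portrait" if abs(ay) >= abs(ax) else "landscape"


# Made-up readings in m/s^2, for illustration only.
upright = screen_orientation(0.1, 9.7)
on_its_side = screen_orientation(9.6, 0.3)
```

Real devices typically add smoothing and hysteresis so that the display does not flip on small movements; that is omitted here.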

The Microsoft Kinect sensor, released in 2010

for the Xbox, enables users to control and interact

with the Xbox 360 without the need for any physical

interaction of game controllers. This is realised by

the use of a natural user interface and using gestures

and spoken commands (Microsoft Corporation,

2013). In contrast, the Wiimote and PS Move

require physical contact for navigation. In 2011

Microsoft released a non-commercial software

development kit (SDK) for the Kinect sensor

allowing developers to build natural, intuitive

computing experiences using C#, C++ and Visual

Basic programming languages.

3 SENSING PATTERNS

This research project employs an objective-centred

approach to the Design Science Research

Methodology (DSRM) as outlined by Peffers et al.

(2007). The design problem is identified from the

literature review and in this case includes a lack of

smart analysis when providing business intelligence

in a ‘front-line’ retail space. Traditional technology

is still being used with an over-reliance on EPOS

systems and data. Our design explores how recent

gaming sensors can provide more effective sensory

input – with a specific focus on people in the retail

environment.

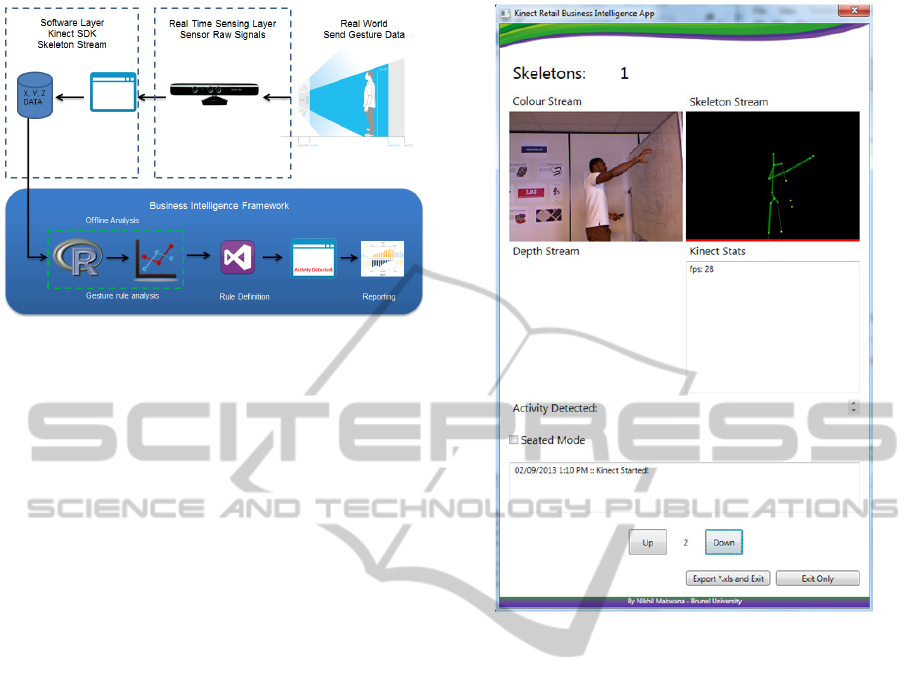

3.1 Sensing Architecture

A high level architectural overview is presented in

Figure 1. The framework separates the collection of

sensor data from a gesture analysis and codification

phase. A sensor augmented environment is able to

provide a basis for gesture identification rules. Once

identified, the gestures can be measured and

reported by a BI system.

The user is free to use their natural interactions

in the retail environment which includes activities

such as viewing in-store adverts, paying for products

at a cashier till and extracting dummy phones for

closer viewing. The Kinect sensor (from Microsoft)

Figure 1: High Level Architecture.

captures these movements/gestures with the help of

the Kinect SDK. The depth stream from the

Kinect sensor is processed to

generate skeleton data for human body joints, which

the C# application stores as X, Y and Z values

in a text file. The raw co-ordinate

dataset is then analysed offline (with the

use of R) to highlight rules that can automatically

identify user gestures. The rules observed in this analysis are

then coded into the sensing application so that it identifies

only those gestures, in order to

provide meaningful business intelligence on

customer activity.

3.2 Sensor Data Capture

In order to capture a customer’s natural interaction

in a retail space, a Kinect application has been

developed as shown in Figure 2. The user interface

has been kept relatively simple as this design phase

is focusing on the framework processes required to

undertake this new form of BI analysis. The main

functionalities of the application are:

- Three streams (RGB, Skeleton and Depth) that provide visual feedback to the user for calibration purposes.

- A seated mode option to capture the top half of the skeleton (10 joints).

- A Kinect elevation angle adjustment, also for calibration purposes.

- A capture button that saves the tracked skeleton body co-ordinates to a data file.

The Kinect sensor provides an easy to use API

and ready access to co-ordinates for each part of the

body, e.g. elbow_right.Position.X.

Initially, these co-ordinates are collected and stored

in a data file for rule identification.
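As a rough illustration of the kind of data file described above, the following Python sketch writes one row per captured skeleton frame and reads the rows back for analysis. It is not the authors' C# application: the column names are taken from the joint variables used in the R plotting command in Table 1, while the comma delimiter, the header row and the sample values are assumptions made for this example.

```python
import csv
import io

# Column names follow the joint variables plotted in Table 1;
# the comma-delimited layout with a header row is an assumption.
FIELDS = ["Time", "Hand.Right.Y", "Hand.Left.Y", "Head.Y", "Spine.Y"]


def write_frames(handle, frames):
    """Write one row per captured skeleton frame."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    for frame in frames:
        writer.writerow(frame)


def read_frames(handle):
    """Read the frames back, converting every value to float."""
    return [
        {name: float(value) for name, value in row.items()}
        for row in csv.DictReader(handle)
    ]


# Made-up sample frames, for illustration only.
sample = [
    {"Time": 0.0, "Hand.Right.Y": -0.20, "Hand.Left.Y": -0.25, "Head.Y": 0.60, "Spine.Y": 0.10},
    {"Time": 0.5, "Hand.Right.Y": 0.55, "Hand.Left.Y": -0.24, "Head.Y": 0.61, "Spine.Y": 0.10},
]

buffer = io.StringIO()  # stands in for the text file on disk
write_frames(buffer, sample)
buffer.seek(0)
frames = read_frames(buffer)
```

A plain delimited text layout of this kind keeps the capture step independent of the analysis tool, which is consistent with the interoperability motive given later in the paper.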

Figure 2: Sensor Data Capture Application.

3.3 From Data to Gestures

The popular R software environment is typically

used for statistical computing and graphics. R has

been used in this research project for statistical

analysis and primarily visualisation of the body

coordinates that have been captured from Kinect

sensors in the retail environment. Command line

scripting is used for the analysis of sensor data and

rule identification. A third party package ‘ggplot2’

has been utilised in R to provide graph building

capabilities.

Although we have built a system with a single

sensor, it is envisaged that the same approach will

allow for multiple sensors in many retail

environments. Importantly, an IoT that connects

many motion sensors requires an architecture that is

able to identify gestures before forwarding to

integrated reporting platforms.

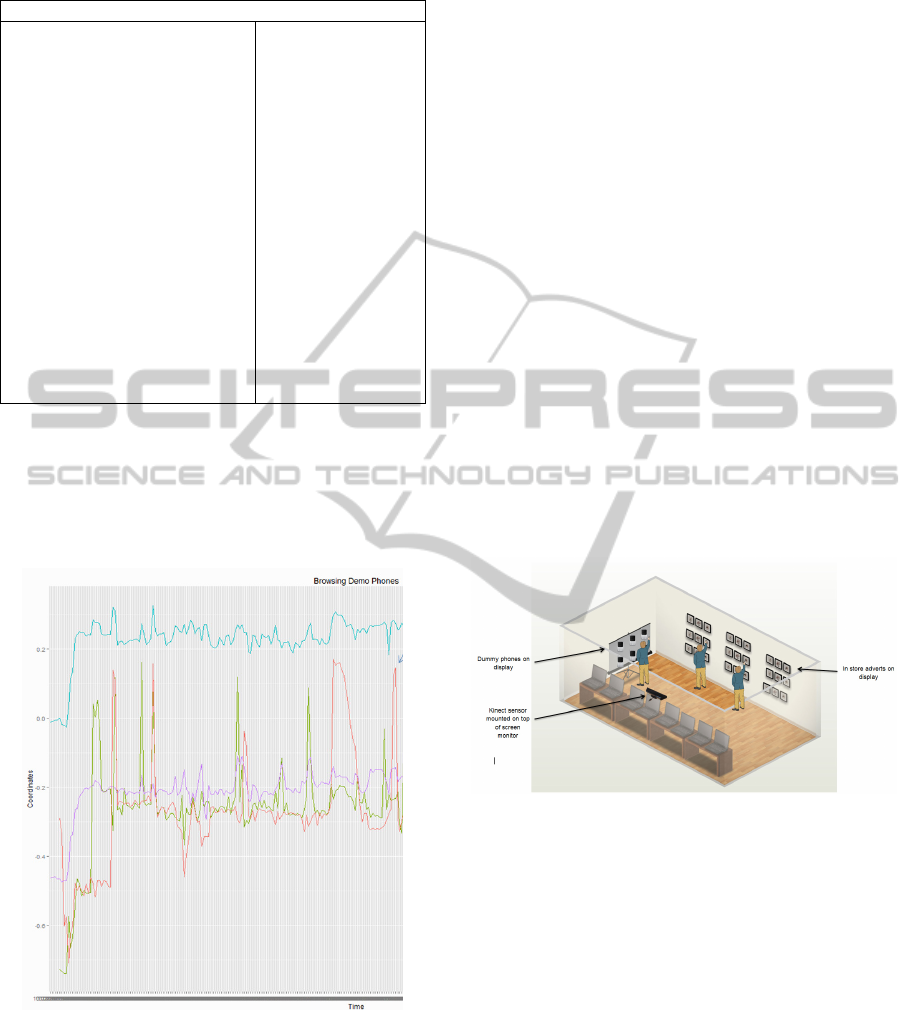

The resulting visualisations of sensor data allow

the programmer or data analyst to identify rules for

specific customer actions (gestures), e.g. browsing

and reaching for a phone. The graphical output can

be seen in Figure 3 and includes joint co-ordinates

in different colours. Rules identified in this process

Table 1: R Code Descriptions.

Scenario: Browsing a Phone Display

R code:

    library(ggplot2)

    # mydata holds the captured co-ordinates; one coloured line is drawn per joint
    ggplot(data=mydata, aes(x=Time)) +
      geom_line(aes(y=Hand.Right.Y, colour="Hand.Right.Y", group=1)) +
      geom_line(aes(y=Hand.Left.Y, colour="Hand.Left.Y", group=1)) +
      geom_line(aes(y=Head.Y, colour="Head.Y", group=1)) +
      geom_line(aes(y=Spine.Y, colour="Spine.Y", group=1)) +
      ylab("Coordinates") +
      ggtitle("Browsing Demo Phones Coordinates")

Description: The Time variable is used as the X axis of the graph, with the joints listed in the command plotted on the Y axis. Each geom_line command also sets the colour of its plot line, labelled with the variable and its position.

are then coded within the same application presented

in Figure 2, adding the recorded gesture to the

output data file. Once coded, the application is now

ready to capture gestures for use in BI analysis and

reporting. The Kinect sensor and application can

now be started in the retail space.

Figure 3: Sensor Data Visualisation.

4 EXPERIMENTATION

Instantiations operationalize constructs, models and

methods (March & Smith, 1995) – typically creating

a working implementation. Here we can use the

implementation to evaluate the effectiveness of the

design – a new sensor driven BI framework.

4.1 Retail Business Context

The aim of the lab experimentation was to define

gestures in terms of skeletal joint movement through

subjects’ natural interactions via the use of motion

capture. Encompassed within this is the

identification of gesture rules (i.e. the heuristics for

gesture identification). The experiment took place

in a lab environment (see Figure 4). The aim of the

lab environment is to simulate a real world shopping

(Mobile Telephone Shop) experience for the

purposes of capturing interactions. A whiteboard has

been used to simulate a phone wall display, Point of

Sale (PoS) cashier and a gaming experience. A wall

has been used to display phone advertisements for

subjects to browse. A Kinect sensor was then

mounted on top of a PC monitor, providing

motion sensing capabilities that capture the subject

standing in the simulated store environment. The

sensed user’s skeleton joints are captured

to record motion activity and saved in a text format,

as this allows interoperability with other applications.

Figure 4: Simulated Retail Environment.

4.2 Captured Data

A number of scenarios were tested in the simulated

environment in order to: 1) capture data with the

application, 2) analyse the data in order to identify

rules, 3) code the rules in the application and then

4) capture gestures. The scenarios (and rules) can be

seen in Table 2. Importantly though, it is the

observation of humans early in this process that

allows for systematic analysis of key activities – and

their associated gestures.

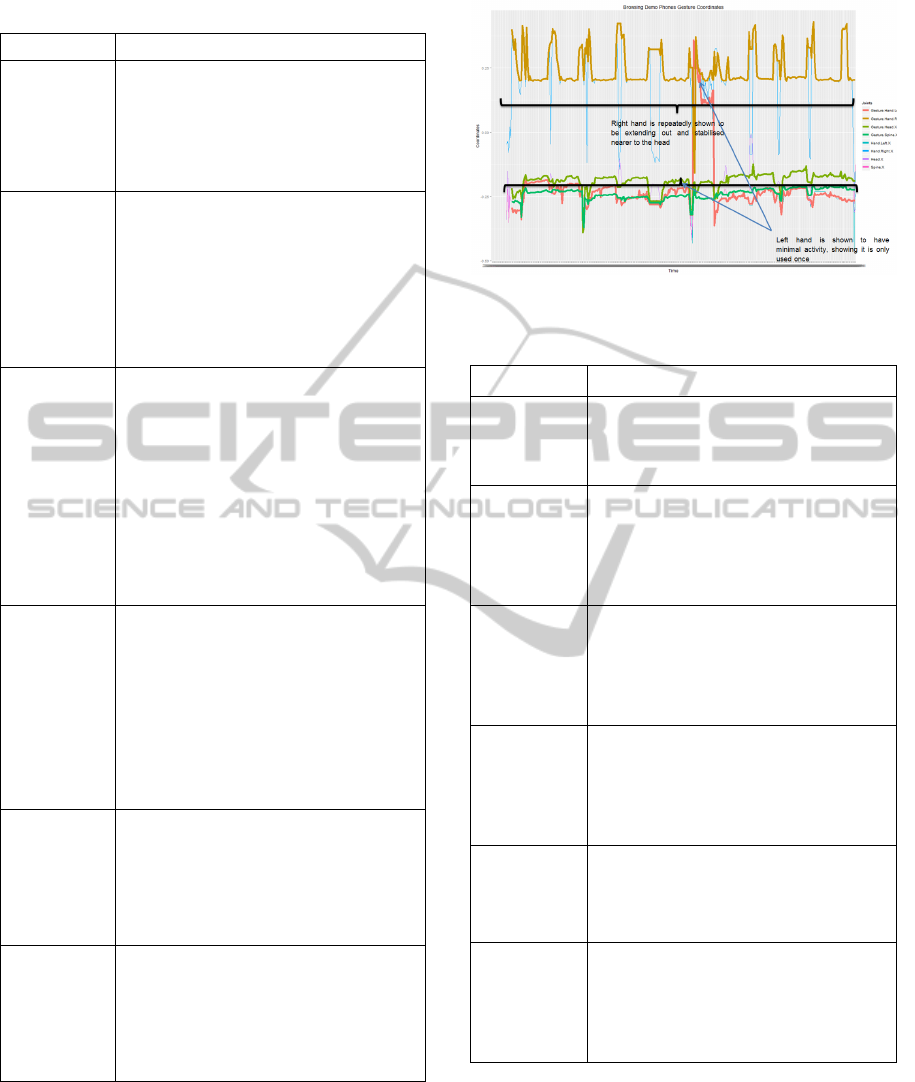

The various joints of the body are analysed to

uncover rules for scenario actions. These are then

coded within the capture application.

Table 2: Scenarios and rules identified.

| Scenario | Rule |
|---|---|
| Viewing In store Ads | Either hand extending out for small constant period. |
| | Hand extending outwards at the same position as the head. |
| Playing Console Games | Head and spine joint stay roughly in the same position throughout activity. |
| | Sudden spikes of hands going up indicate a process of celebration as well as expression of sadness when losing in game. |
| Returning Product at Cashier | The right hand is observed to move in front of spine and upwards towards the counter for a moment (returning the product). |
| | The right hand is observed again to move in front of spine and upwards towards the counter which identifies the payment process. |
| Paying for Product at Cashier | The right hand is observed to be extracted near to both hips possibly indicating reaching for wallet. |
| | Right hand is then seen in front of spine which suggests a payment is in progress (handing card over to assistant) this is also indicated by a period of stability as the right hand is raised indicating a payment. |
| Browsing Demo Phones | Left/Right hand is shown progressing away considerably far from the head (reaching and extracting demo phones from display). |
| | Right hand is then settled immediately in front of head as it is being viewed. |
| Discussion on Shop floor | Left/Right hand shows movement that is directly beside of spine. Right hip seen mostly in one place, also replicated behaviour in the spine. |
| | Right hand is extracted down next to right hip. |
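To make the idea of rule codification concrete, the sketch below expresses two of the rules in Table 2 as simple predicates over a short window of skeleton frames. It is an illustrative Python approximation, not the rules coded in the authors' C# application: the function names, the thresholds (`tolerance`, `min_frames`), the use of Y co-ordinates only, and the reading of "same position as the head" as "roughly head height" are all assumptions made for this example.

```python
def is_stable(values, tolerance):
    """True when a joint co-ordinate varies by no more than `tolerance`."""
    return max(values) - min(values) <= tolerance


def playing_console_games(frames, tolerance=0.05):
    """Head and spine stay roughly still while a hand spikes above the head."""
    still = all(
        is_stable([f[joint] for f in frames], tolerance)
        for joint in ("Head.Y", "Spine.Y")
    )
    hand_spike = any(
        max(f["Hand.Right.Y"], f["Hand.Left.Y"]) > f["Head.Y"] for f in frames
    )
    return still and hand_spike


def viewing_in_store_ads(frames, tolerance=0.10, min_frames=3):
    """Either hand held at roughly head height for a short, constant period."""
    run = 0
    for f in frames:
        at_head_height = any(
            abs(f[hand] - f["Head.Y"]) <= tolerance
            for hand in ("Hand.Right.Y", "Hand.Left.Y")
        )
        run = run + 1 if at_head_height else 0
        if run >= min_frames:
            return True
    return False


# Hypothetical rule registry keyed by the scenario names in Table 2.
RULES = {
    "Playing Console Games": playing_console_games,
    "Viewing In store Ads": viewing_in_store_ads,
}


def identify_gestures(frames):
    """Return the name of every scenario whose rule matches the frames."""
    return [name for name, rule in RULES.items() if rule(frames)]


def frame(right, left, head=0.60, spine=0.10):
    """Build one made-up skeleton frame (Y co-ordinates only)."""
    return {"Hand.Right.Y": right, "Hand.Left.Y": left, "Head.Y": head, "Spine.Y": spine}


# Made-up windows of frames, for illustration only.
game = [frame(-0.20, -0.25), frame(0.90, 0.85, head=0.61), frame(-0.20, -0.25)]
advert = [frame(0.55, -0.25), frame(0.58, -0.25), frame(0.56, -0.25)]
```

Keeping each rule as a separate predicate means thresholds can be recalibrated per scenario without touching the capture loop, which matters given the calibration sensitivity reported in Section 4.3.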

The resulting system, with rules encoded, is then able to

capture the data necessary for BI reporting.

Iterating through each scenario resulted in a

framework for sensor data analysis and codification

(presented in Table 3).

Figure 5: Sensor Visualisation for rule identification.

Table 3: Sensor BI Framework.

| Process | Description |
|---|---|
| Platform Acquisition | A motion capture solution is created or acquired to support the capture of customer’s natural interaction by tracking their skeleton movement. |
| Skeletal Capture | A motion sensor placed in the retail environment captures customer’s natural form of interaction. The capture produces full bodily coordinates that will be stored for post capture analysis. |
| Post-capture Human Analysis | Joint coordinates are visualised in a graph form in order to identify trending patterns on the different bodily skeletal joints. The trends provide a means to define gesture rule identification. |
| Rule Codification | The rules identified from the post capture analysis are implemented into the capture application, enabling the capture to identify gestures triggered by subject’s natural interaction. |
| Recapture | A motion sensor is placed in the retail environment that captures gestures (based on the earlier rule identification). |
| End of Day (EOD) Business Intelligence Reporting | Aggregated gestures identification reports are produced for viewing by managers/stakeholders (or integration with other data sources within a BI tool) |

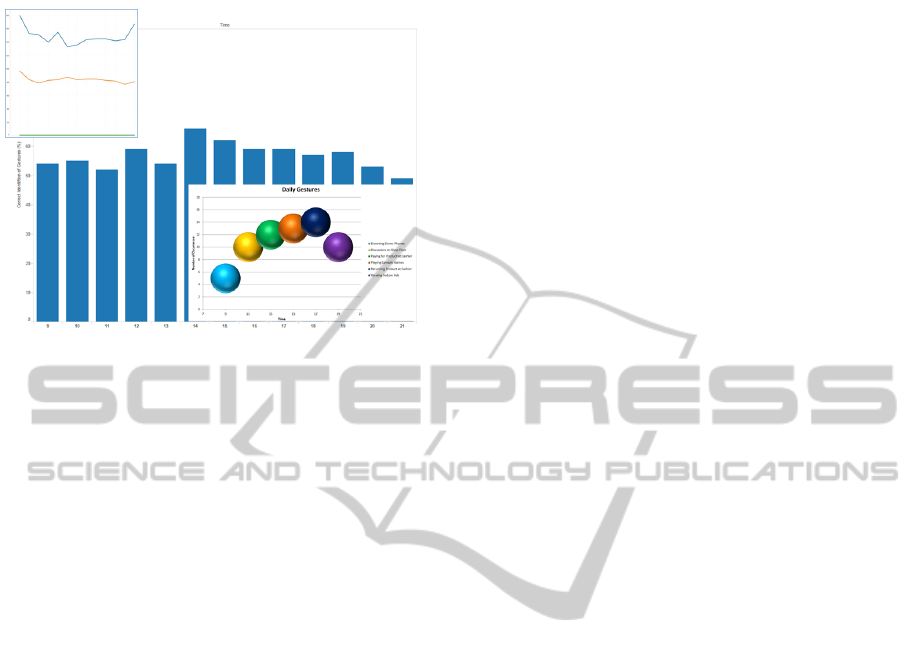

4.3 Business Intelligence

A number of visualisations were developed for

illustrative purposes, primarily to put the data

capture process in a business context. A sample of

these is shown in Figure 6. The textual output was

easily loaded into commercial grade BI reporting

products (which in our case was Tableau).

Figure 6: BI Reporting.

Experimenting with our business intelligence views

and using a new group of human subjects, a bar

chart depicting hourly recognised gestures has been

created to identify the success rate of gesture

recognition (%) throughout the day. The average

identification success rate is just over 50%.

Although disappointing, this was largely due to the

Kinect application being calibrated for the few subjects

that took part in the initial test. Despite this, the

framework has proved effective ‘in use’ and offers

interesting possibilities when part of a wider

business intelligence environment, linking data with

further data sources.
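The hourly success-rate view described above can be approximated with a short aggregation. The Python sketch below is illustrative only: the record layout (hour, gesture performed, gesture recognised), the assumption that the performed gesture is known from observation, and the choice to average the hourly rates are all assumptions, and the sample observations are made up rather than taken from the experiment.

```python
from collections import defaultdict


def hourly_success_rate(observations):
    """Return {hour: percentage of gestures correctly recognised}."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for hour, performed, recognised in observations:
        totals[hour] += 1
        if performed == recognised:
            correct[hour] += 1
    return {hour: 100.0 * correct[hour] / totals[hour] for hour in sorted(totals)}


def average_rate(rates):
    """Mean of the hourly percentages (one of several possible averages)."""
    return sum(rates.values()) / len(rates)


# Made-up observations: (hour of day, gesture performed, gesture recognised).
# None means the application recognised no gesture.
observations = [
    (9, "Browsing Demo Phones", "Browsing Demo Phones"),
    (9, "Viewing In store Ads", None),
    (10, "Paying for Product at Cashier", "Paying for Product at Cashier"),
    (10, "Browsing Demo Phones", "Browsing Demo Phones"),
    (10, "Playing Console Games", "Playing Console Games"),
    (10, "Viewing In store Ads", None),
]

rates = hourly_success_rate(observations)
overall = average_rate(rates)
```

The resulting per-hour dictionary maps directly onto a bar chart with one bar per hour, and the same records could be exported as text for loading into a BI reporting tool.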

4.4 Discussion and Evaluation

The paper has made a contribution in the research

area of motion sensing in a retail space environment.

Utilising the Natural User Interface (NUI) paradigm

commonly found in the gaming industry and

applying it in a retail setting is an effective means of

gathering business intelligence. This paper has

identified that commodity sensor hardware, which in

this case is the Kinect motion sensor, offers a novel

approach to providing strategic organisational

insights into customer activity in the field. This has

the potential to offer substantial competitive benefits

as more nuanced customer interactions become visible.

Production of business intelligence reports provides

additional assistance to the traditional POS reporting

methods currently relied upon and promotes novel

intelligence gathering perspectives. Resulting from

the research is a novel framework that uncovers and

reports on gesture based business intelligence – also

having a wider applicability. The

contributions of this paper can be easily

extended and applied practically in other domains,

for example the health sectors and specifically tele-

health for purposes such as monitoring of post-

stroke patients.

4.5 Future Research

There is scope for this research to be progressed

further to form part of the larger business

intelligence platform. During the development

phase, areas of further work were identified:

1) Social Media – The rise of ‘citizen sensor

networks’ provides an opportunity to

understand and analyse data reported by citizen

sensors and the fusion of this data with the

gesture sensed data to identify further potential

trends. Gathering intelligence in this manner

may be able to add a new perspective,

identifying novel business intelligence

(combining physical action and opinion).

2) Data repositories – With the aforementioned

fusion of social data, data repositories stored by

organisations such as transaction histories,

customer data, and internal ERP systems can

also be integrated and fused into the sensed data

which then gives the possibility of building

customer profiles from past data. Data gathered

can be used by many departments in for-profit

organisations, such as marketing departments

for effective use of advertising.

3) Multiple sensors – Employing multiple

Kinect (or other) sensors and fusing the

captured data from the sensors is highly likely

to enhance the accuracy of the results obtained.

5 CONCLUSIONS

This paper presents an approach for monitoring and

understanding customer behaviour in-store using

motion sensors. In the design experiment presented,

users performed natural activities in a retail space

that included actions such as browsing phones,

viewing ads and paying for products at the cashier

till. The experiment was carried out in a lab

environment with posters of phones, ads and cashier

point to simulate a retail space. A Kinect sensor was

used to capture movements and R was used to define

rules which automatically captured user gestures. A

framework is outlined that separates the capture of

motion from the gesture identification and business

intelligence reporting. A number of interesting

avenues of further research are apparent from our

exploratory work – adding intelligent data analysis

to automatically identify rules as well as integrating

related data sets.

REFERENCES

Advanced Performance Institute, 2013. Business Intelligence

(BI) - What is BI? Training, examples & case studies.

(Online) Available at: http://www.ap-institute.com/

Business Intelligence.html (Accessed 25 July 2013).

Amma, C., Gehrig, D. & Schultz, T., 2010. Airwriting

Recognition using Wearable Motion Sensors. ACM, pp.

10-11.

Benning, M. et al., 2007. A Comparative Study on Wearable

Sensors for Signal Processing on the North Indian

Tabla. Communications, Computers and Signal

Processing.

Burke, R. R., 2002. Technology and the customer interface:

what consumers want in the physical and virtual store.

Journal of the Academy of Marketing Science.

Cao, L., 2008. Behavior Informatics and Analytics: Let

Behavior Talk. Data Mining Workshops.

Computing, 2012. Case Study: Business intelligence at

Carphone Warehouse - 23 Aug 2012 - Computing

News. (Online) Available at:

http://www.computing.co.uk/ctg/news/2200696/case-study-business-intelligence-at-carphone-warehouse

(Accessed 02 September 2013).

Doody, P. & Shields, A., 2012. Mining Network

Relationships in the Internet of Things. ACM.

Feng, Y. & Liu, Y., 2010. Design of the Low-Cost Business

Intelligence System Based on Multi-agent. Information

Science and Management Engineering (ISME).

Hailo, 2013. HAILO. The Black Cab App. (Online)

Available at: https://www.hailocab.com/

(Accessed 1 September 2013).

Hester, T. et al., 2006. Using Wearable Sensors to Measure

Motor Abilities following Stroke. Wearable and

Implantable Body Sensor Networks.

Hewlett-Packard, 2013. From the Internet of Things, a

business intelligence bounty – HP Software Discover

Performance. (Online) Available at:

http://h30458.www3.hp.com/us/us/discover-performance/info-management-leaders/2013/apr/from-the-internet-of-things--a-business-intelligence-bounty.html

(Accessed 1 September 2013).

Hondori, H. M., Khademi, M. & Lopes, C. V., 2012.

Monitoring Intake Gestures using Sensor Fusion

(Microsoft Kinect and Inertial Sensors) for Smart Home

Tele-Rehab Setting. IEEE.

Ipsos, 2013. Why count customers? | Experts in retail footfall

counting | IPSOS Retail Performance. (Online)

Available at: http://www.ipsos-retailperformance.com/WhatWeDo/WhyCountCustomers

(Accessed 01 September 2013).

Krneta, D., Radosav, D. & Radulovic, B., 2008. Realization

business intelligence in commerce using Microsoft

Business Intelligence. Intelligent Systems and

Informatics, pp. 1-6.

Leonidas, P., Drakoulis, D., Dres, D. & Smailis, C., 2012.

PLATO - Intelligent Middleware Platform for the

Collection, Analysis, Processing of Data from Multiple

Heterogeneous Sensor Systems and Application

Development for Business Intelligence. Informatics

(PCI).

Luhn, H. P., 1958. A Business Intelligence System. IBM

Journal of Research and Development, Volume 2, pp.

314-319.

March, S. T. & Smith, G. F., 1995. Design and natural

science research on information technology. Decis.

Support Syst., Volume 15, pp. 251-266.

Martin, A., Lakshmi, M. & Prasanna Venkatesan, V., 2012.

An analysis on business intelligence models to improve

business performance. Advances in Engineering,

Science and Management (ICAESM), pp. 503-508.

McRobbie, G., Talati, S. & Watt, K., 2012. Developing

business intelligence for Small and Medium Sized

Enterprises using mobile technology. Information

Society (i-Society).

Microsoft Corporation, 2013. Product Features | Microsoft

Kinect for Windows. (Online)

Available at: http://www.microsoft.com/en-us/kinectforwindows/develop/sdk-eula.aspx

(Accessed 20 July 2013).

Microstrategy, 2013. MicroStrategy-Mobile-BI-Retail-Apps.pdf. (Online) Available at:

https://www.microstrategy.com/Strategy/media/downloads/products/MicroStrategy-Mobile-BI-Retail-Apps.pdf.

O’Keeffe, D. T., Gates, D. H. & Bonato, P., 2007. A

Wearable Pelvic Sensor Design for Drop Foot

Treatment in Post-Stroke Patients. Engineering in

Medicine and Biology Society.

PhoneDog Media, 2013. How important are display models

in wireless retail stores? | PhoneDog. (Online)

Available at: http://www.phonedog.com/2013/04/23/how-important-are-display-models-in-wireless-retail-stores/

(Accessed 02 September 2013).

Peffers, K., Tuunanen, T., Rothenberger, M. A. & Chatterjee,

S., 2007. A Design Science Research Methodology for

Information Systems Research. J. Manage. Inf. Syst.,

Volume 24, pp. 45-77.

Ren, Z., Yuan, J., Meng, J. & Zhang, Z., 2013. Robust Part-

Based Hand Gesture Recognition Using Kinect Sensor.

Multimedia, IEEE Transactions on, Volume 15, pp.

1110-1120.

Ridgian, 2011. RidgianBarclaysYourPeopleCS.pdf. (Online)

Available at: http://www.ridgian.co.uk/downloads/RidgianBarclaysYourPeopleCS.pdf

(Accessed 03 September 2013).

Tvrdikova, M., 2007. Support of Decision Making by

Business Intelligence Tools. Computer Information

Systems and Industrial Management Applications, 2007.

Vassiliadis, P., 1998. Modeling multidimensional databases,

cubes and cube operations. Scientific and Statistical

Database Management.

Vera-Baquero, A., Colomo-Palacios, R. & Molloy, O., 2013.

Business process analytics using a big data approach. IT

Professional.

Wang, C. & Chen, H., 2012. From data to knowledge to

action: A taxi business intelligence system. s.l.,

Information Fusion (FUSION).

Xiao, J. et al., 2012. FIMD: Fine-grained Device-free

Motion Detection. Parallel and Distributed Systems

(ICPADS), pp. 229-235.

Xu, L. et al., 2007. Research on Business Intelligence in

enterprise computing environment. Systems, Man and

Cybernetics, pp. 3270-3275.

Zakaria, I., Rahman, B. A. & Othman, A. K., 2012. The

relationship between loyalty program and customer

loyalty in retail industry: A case study. Innovation

Management and Technology Research (ICIMTR).

Zhang, Z., 2012. Microsoft Kinect Sensor and Its Effect.

MultiMedia, IEEE, pp. 4-10.
