Fostering Information Literacy in German Psychology Students Using a Blended Learning Approach

Nikolas Leichner, Johannes Peter, Anne-Kathrin Mayer and Günter Krampen

Leibniz Institute for Psychology Information, Universitätsring 15, 54296 Trier, Germany

Keywords: Information Literacy, Blended Learning, Distance Education, Psychology, College Students.

Abstract: This paper reports the experimental evaluation of a blended learning course in information literacy tailored to the needs of undergraduate Psychology students. The course consists of three modules delivered online and two classroom seminars; the syllabus covers scholarly information resources, ways to obtain literature, and criteria for evaluating publications. The evaluation used a multimethod approach: students completed an information literacy knowledge test and three standardized information search tasks (ordered by ascending difficulty) before and after taking the course. A sample of N = 67 undergraduate Psychology students (n = 37 experimental group, n = 30 waiting control group) participated in the course. As expected, students’ knowledge test scores and their performance on the search tasks improved markedly during the course. The results are discussed with regard to the soundness of the evaluation criteria used and the further development of the course.

1 INTRODUCTION

The term information literacy describes the ability to recognize when information is needed and the ability to identify, locate, and evaluate the additional information required to meet this need (National Forum on Information Literacy n. d.; American Library Association 1989). Because advances in information technology lead to a growing number of information resources, information literacy can be considered a “basic skills set” (Eisenberg 2008).

Because information literacy matters in nearly all circumstances (e.g. Eisenberg 2008), its scope has to be defined clearly first. We therefore limit our research to information literacy in higher education, especially in Psychology. Our definition of information literacy is based on the ACRL Psychology information literacy standards (Association of College and Research Libraries 2010), because this framework includes detailed performance indicators. The definition comprises four standards of information literacy:

(1) Determining the nature and amount of information needed; exemplary performance indicator: “understands basic research methods and scholarly communication patterns in psychology necessary to select relevant resources”;

(2) Accessing information effectively and efficiently; exemplary performance indicator: “selects the most appropriate sources for accessing the needed information”;

(3) Evaluating information and incorporating information into one’s knowledge system; exemplary performance indicator: “compares new information with prior knowledge to determine its value, contradictions, or other unique characteristics”;

(4) Using information effectively to accomplish a specific purpose; exemplary performance indicator: “applies new and prior information to the planning and creation of a particular project, paper, or presentation”.

We decided to focus on standards one to three, as our course was devoted primarily to improving information seeking skills. Skills related to standard four are part of the curriculum of academic writing courses at German universities; consequently, we did not include them in our course. In line with our curriculum, we expected participants, inter alia, to be (better) able to understand scholarly communication patterns, to distinguish between research methods (e.g. meta-analysis and empirical study), to select the most appropriate resource for their information need, and to evaluate the literature found.

There is evidence that incoming students are not sufficiently information literate (Smith et al. 2013) and that students do not become information literate during the course of their studies (Warwick et al. 2009). To address this need, almost every university library in Germany offers information literacy courses that complement the academic writing courses offered by university departments. However, most of these courses are two-hour events covering only one facet of academic information literacy (e.g. the use of bibliographic databases). Because time is limited, these courses offer few opportunities to practice information seeking, ask questions, or discuss problems. As there is no standardized test to assess information literacy in German-speaking populations, evaluation is in most cases based on participant feedback. Finally, most courses are not tailored to specific disciplines or fields (e.g. economics, social sciences). This is problematic because scholarly communication patterns and information resources differ between disciplines; course content should therefore be adapted to the needs of Psychology students (e.g. Thaxton et al. 2004).

Leichner N., Peter J., Mayer A. and Krampen G.

Fostering Information Literacy in German Psychology Students Using a Blended Learning Approach.

DOI: 10.5220/0004795103530359

In Proceedings of the 6th International Conference on Computer Supported Education (CSEDU-2014), pages 353-359

ISBN: 978-989-758-021-5

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

The aim of this study is to create a blended learning approach to teaching information literacy to undergraduate Psychology students. Initially, the course will exist alongside the courses offered by university libraries. However, we hope that university libraries will later use (at least some of) our materials to offer courses tailored to Psychology students. An important component of our effort is an evaluation study based on performance indicators and using a control group.

The main reason for choosing a blended learning approach was to let participants work through the online materials on their own schedule. This is particularly important because students are often pressed for time. However, the course should also include traditional classroom teaching, as online learning alone seems to be associated with higher dropout rates (Carr 2000). There is research indicating that blended learning can reduce dropout rates (López-Pérez et al. 2011) and is more effective than either traditional classroom teaching or online learning alone (Clardy 2009).

2 OUTLINE OF THE COURSE

2.1 Content

The content of the course was determined based on the Psychology-specific information literacy standards provided by the ACRL (Association of College and Research Libraries 2010) and on our own considerations. As the target group was undergraduate students, the content mainly covered basic information about

• scholarly communication patterns in Psychology and common publication types (e.g. empirical article, review article, edited books);

• different information resources (inter alia bibliographic databases, internet resources) and their advantages and disadvantages;

• appropriate use of these resources (e.g. understanding the thesaurus and Boolean operators);

• inclusion of resources provided by related disciplines (e.g. PubMed, ERIC) when the topic is interdisciplinary;

• options for acquiring literature (e.g. electronic journal subscriptions, the local library catalogue, or interlibrary loan);

• criteria for selecting publications beyond their content (e.g. the Journal Impact Factor).

2.2 Structure

As mentioned before, the course combined online and traditional classroom teaching. In total, there were three modules to be completed online and two classroom seminars. We expected that completing the online materials would take up to four hours; each classroom seminar was designed to last 90 minutes. The course was scheduled to be completed within two weeks, which seems a reasonable workload for college students.

The course was designed so that most of the knowledge would be imparted by the online materials, while the main purpose of the classroom seminars was to provide an opportunity to solve information problems under the guidance of the instructor and to ask questions. For this reason, participants had to complete certain online materials before attending the related classroom seminar.

For example, online modules 1 and 2 were related to the first classroom seminar. These modules dealt mainly with scholarly communication patterns, information sources (and their functions), and the acquisition of literature. A central element of the first seminar was the task of finding scientific literature on the question of how distractions affect car driving performance. At the beginning of the seminar, the task was presented to the participants. The task was then split into steps (determining the information need, finding search terms, conducting the search, selecting literature), and participants worked on the steps either individually or in small groups. The instructor was available in case questions arose. After each step, one participant (or one group) presented his or her results to the other participants, and the results were discussed.

All online modules were provided via the e-learning platform “Moodle”. Most of the content was presented as short passages of text enriched with illustrations or screenshots of the relevant computer programs, delivered as Moodle lessons or pages. The materials also included several videos. At the end of every section of the course, short quizzes allowed participants to apply their knowledge right after learning it.

3 EMPIRICAL STUDY

3.1 Instruments

In most cases, information literacy is assessed using knowledge tests consisting of multiple-choice items (e.g. Project SAILS 2013; Center for Assessment and Research Studies 2013; Noe and Bishop 2005). Such tests have been shown to provide a reliable, valid and economical way of measuring information literacy. However, as information literacy is a complex ability, it is doubtful whether a knowledge test alone can assess it comprehensively. For instance, appropriate information seeking behavior is an elementary part of being information literate (Timmers and Glas 2010), and assessing information seeking behavior requires observation (e.g. Julien and Barker 2009) or self-reports (e.g. Timmers and Glas 2010).

Moreover, several authors argue that competencies should be assessed using real-life tasks instead of knowledge tests (Shavelson 2010; McClelland 1973).

For these reasons, we decided to use a multimethod approach consisting of two standardized tests administered in a laboratory setting: a knowledge test and information search tasks.

The knowledge test consisted of 35 multiple-choice items and had previously been developed by our research group. The items were based on Standards 1 to 3 of the information literacy definition described above (Association of College and Research Libraries 2010). A sample item is:

Which differences exist between Internet search engines (e.g. Google Scholar) and bibliographic databases?

a. bibliographic databases usually have a thesaurus search

b. Boolean operators can only be used with bibliographic databases

c. the order of items on the results page is not affected by the number of clicks on each item

The test had been used in a previous study with a sample of N = 184 participants who completed it online. In that study, the test showed an acceptable internal consistency of Cronbach’s α = 0.49. Furthermore, Master’s-level students scored significantly higher than undergraduate students in their first and second year. These results can be considered an indication of the test’s validity.
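Cronbach’s α, the internal consistency statistic reported above, compares the sum of the item variances with the variance of the total score: α = k/(k - 1) · (1 - Σ var(item) / var(total)) for k items. A minimal sketch of that standard formula, assuming items scored 1 (correct) or 0 (incorrect); the function name `cronbach_alpha` and the four-respondent data are made up for illustration and are not taken from the study.

```python
from statistics import variance

def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items score matrix.

    scores: list of rows, one row per respondent, one column per item
    (e.g. 1 = correct, 0 = incorrect for multiple-choice items).
    """
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of the respondents' total scores
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical answers of four respondents to three items
data = [
    [1, 1, 1],
    [1, 0, 1],
    [0, 0, 1],
    [0, 0, 0],
]
print(round(cronbach_alpha(data), 2))  # 0.75
```

Because α grows with the number of items and with the average inter-item correlation, a short or heterogeneous test tends to yield lower values than a long, homogeneous one.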

The information search tasks are based on a taxonomy from which individual tasks can be derived. When reviewing the literature on information search tasks, we found that the existing taxonomies are descriptive and give no indication of the difficulty of a given task type (e.g. Kim 2009). Another problem with these taxonomies was that they had been developed to classify non-scholarly search tasks in electronic resources. Academic and non-scholarly searches differ in several ways; the most important difference might be the use of bibliographic databases (e.g. PsycINFO) instead of internet search engines (e.g. Google, Yahoo!).

For these reasons, we decided to develop a task taxonomy specifically for academic information search tasks in Psychology. The taxonomy provides three types of information search tasks that differ in difficulty; more precisely, they differ in the abilities and competencies required to solve them. The taxonomy is designed so that the abilities required to solve type 1 tasks are also required to solve type 2 tasks, while type 2 tasks require additional competencies, and type 3 tasks require further competencies still. The taxonomy can be used to develop several tasks of the same structure and difficulty for assessing information literacy. For illustration, a type 2 task (medium difficulty) is given:

Are there meta-analyses published after 2005 investigating “risk factors” for the development of a “Posttraumatic stress disorder”? If possible, indicate two publications.
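Solving this task amounts to combining two concepts with a Boolean AND and narrowing the hits by publication date and methodology. In a bibliographic database such as PsycINFO this is done with the database’s own search syntax and limit options; the sketch below only imitates that logic on a small in-memory list. `Record`, `type2_search` and the records are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class Record:
    title: str
    year: int
    methodology: str  # e.g. "meta-analysis" or "empirical study"

def type2_search(records, term_a, term_b, after_year, methodology):
    """Return records matching term_a AND term_b, published after
    `after_year`, with the requested methodology (hypothetical helper)."""
    hits = []
    for r in records:
        text = r.title.lower()
        matches_both = term_a.lower() in text and term_b.lower() in text  # Boolean AND
        if matches_both and r.year > after_year and r.methodology == methodology:
            hits.append(r)  # also passed the date and methodology limits
    return hits

# Made-up records, not real publications
records = [
    Record("Risk factors for posttraumatic stress disorder: a meta-analysis", 2008, "meta-analysis"),
    Record("Risk factors for posttraumatic stress disorder in children", 2012, "empirical study"),
    Record("Posttraumatic stress disorder risk factors revisited", 2003, "meta-analysis"),
    Record("Risk factors for depression: a meta-analysis", 2010, "meta-analysis"),
]
hits = type2_search(records, "risk factors", "posttraumatic stress disorder", 2005, "meta-analysis")
print([r.year for r in hits])  # [2008]
```

The third record contains both concepts but is excluded by the date limit, and the second by the methodology limit, which is exactly the filtering step that distinguishes a type 2 task from a type 1 task.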

To solve this task, the participant has to understand the keyword search function of a bibliographic database, and needs an understanding of Boolean operators and of the more complex filter functions of bibliographic databases to find publications using a certain methodology (e.g. meta-analysis). Type 1 tasks are easier, as they do not require an understanding of Boolean operators and complex filter functions. Type 3 tasks are more difficult, as they additionally require the participant to identify appropriate search terms before conducting the search. To score the tasks, we created rubrics for the search task outcome (which publications were found) and for the procedure the students applied when completing the tasks. Under the outcome rubric, scores were awarded depending on how closely the publications found matched the requirements stated in the task description (e.g. thematic focus of the publication, publication date). Under the procedure rubric, scores were awarded for working on the tasks in an efficient and information literate way as defined by the information literacy standards (Association of College and Research Libraries 2010). For example, for a type 2 task, the maximum procedure score was awarded if the participant solved the task using bibliographic databases, used Boolean operators to combine two search terms, and limited the results using the corresponding functions of the database.
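The type 2 procedure rubric described above can be expressed as a checklist. A minimal sketch, assuming one point per criterion; the paper does not give the point values, and `SearchProtocol` and `type2_procedure_score` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class SearchProtocol:
    """Self-reported search procedure for one task (hypothetical fields)."""
    used_bibliographic_database: bool
    combined_terms_with_boolean: bool
    applied_limits: bool

def type2_procedure_score(p: SearchProtocol) -> int:
    """Toy procedure rubric for a type 2 task: one point per criterion (assumed weights)."""
    criteria = [
        p.used_bibliographic_database,   # searched a bibliographic database
        p.combined_terms_with_boolean,   # combined two search terms with a Boolean operator
        p.applied_limits,                # limited results with the database's filter functions
    ]
    return sum(criteria)  # True counts as 1, False as 0

full = SearchProtocol(True, True, True)
partial = SearchProtocol(True, False, False)  # database search without AND or limits
print(type2_procedure_score(full), type2_procedure_score(partial))  # 3 1
```

Dividing such a score by its maximum (here 3) gives the kind of percentage-of-maximum values reported for the procedure scores.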

3.2 Method

3.2.1 Participants

The sample consisted of N = 67 undergraduate Psychology students who took the course; 34 were first-year and 33 were second-year students. The mean age was 21.67 years (SD = 2.38). Participants had additionally agreed to take part in three data collection sessions, for which they were compensated. Participants were randomly assigned to one of two groups: group 1 (experimental group) consisted of n = 37 participants, group 2 (waiting control group) of n = 30.
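The paper states only that participants were randomly assigned; it does not describe the randomization procedure. A minimal sketch of one common approach, assuming a fixed target size for the experimental group: shuffle the participant list with a seeded generator and split it. `assign_groups` and the participant codes are hypothetical.

```python
import random

def assign_groups(participant_ids, n_experimental, seed=None):
    """Randomly split participants into an experimental and a waiting control group."""
    rng = random.Random(seed)          # seeded generator makes the split reproducible
    shuffled = list(participant_ids)   # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    experimental = shuffled[:n_experimental]
    waiting_control = shuffled[n_experimental:]
    return experimental, waiting_control

ids = [f"P{i:02d}" for i in range(1, 68)]  # 67 hypothetical participant codes
group1, group2 = assign_groups(ids, n_experimental=37, seed=2013)
print(len(group1), len(group2))  # 37 30
```

Recording the seed allows the allocation to be reproduced and audited later.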

3.2.2 Procedure

The evaluation study lasted four weeks, while the course itself took only two weeks. Data collection 1 took place at the very start of the evaluation study. Subsequently, group 1 took the course, while group 2 served as a waiting control group. Two weeks later, when group 1 had completed the course, data collection 2 took place. After that, group 2 took the course. The final data collection (data collection 3) took place after all participants had completed the course.

The data collections took place in the computer lab of Trier University. Students were tested in groups of 15 to 22 participants under the supervision of two experimenters. They first completed three information search tasks (one task of each type), followed by two questionnaires concerning epistemological beliefs and the information literacy knowledge test.

To complete the information search tasks, participants could use all resources available from the lab computers (access to the internet, to bibliographic databases, and to the online library catalogue). The information search tasks were presented in order of increasing difficulty. Participants recorded the publications they found in input boxes provided by the testing software. After completing each task, participants answered several questions about the procedure of their search; these answers were the basis for scoring the procedure. The results of the epistemological beliefs questionnaires are beyond the scope of this paper and are not reported.

3.2.3 Hypotheses

With regard to the search tasks, we expected tasks requiring more abilities to be more difficult: type 3 tasks should be the most difficult, followed by type 2 tasks, which, in turn, should be more difficult than type 1 tasks (Hypothesis 1).

As explained above, three variables were designed to assess information literacy: the outcome and procedure scores of the search tasks, and the knowledge test. We expected to find significant correlations among these measures. As the knowledge test had already been used in a different study, finding correlations between the test and the search tasks would corroborate the status of the search tasks as an indicator of information literacy (Hypothesis 2).

Furthermore, we expected participants to score higher on all measures after taking the course. Specifically, group 1 should outperform group 2 at data collection 2, and at the final data collection there should be no difference between the groups (Hypothesis 3).

3.3 Results

Before the course could be evaluated, the information search tasks had to be scored independently by two raters. Inter-rater reliability (the correlation between the scores awarded by the two raters) ranged from r = 0.62 to r = 0.92; most correlations were above r = 0.70. Where the scores differed, the raters agreed on a consensus score, which was used for the analyses.
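Inter-rater reliability here is the Pearson correlation between the two raters’ scores. A minimal sketch of that computation, with made-up scores for six task solutions (the function `pearson_r` and the data are hypothetical); on Python 3.10 or later, `statistics.correlation` computes the same coefficient.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)                    # deviation of rater 1
    syy = sum((b - my) ** 2 for b in y)                    # deviation of rater 2
    return sxy / sqrt(sxx * syy)

rater1 = [3, 2, 4, 1, 0, 3]  # made-up scores for six task solutions
rater2 = [3, 2, 3, 1, 0, 4]
print(round(pearson_r(rater1, rater2), 2))  # 0.91
```

Note that a correlation only captures whether raters rank solutions consistently; two raters who differ by a constant offset would still reach r = 1, which is one reason disagreements were resolved by consensus.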


The first hypothesis examined was whether the expected order of task difficulties could be verified empirically. Table 1 shows the percentage of the maximum score reached for each task type at data collection 1. As can be seen, type 3 tasks were more difficult than type 2 tasks, which, in turn, were more difficult than type 1 tasks.

Table 1: Percentage of maximum score for search task outcome and procedure at data collection 1.

task type   outcome   procedure
1           77%       55%
2           50%       46%
3           32%       36%

For the following analyses, the outcome and procedure scores of each data collection were summed separately across the three search tasks, yielding two scores per data collection. These scores were rescaled to range from 0 to 1 and are presented in Table 2. Before using these scores to evaluate the course, we checked whether the two groups of participants differed before the course started. They did not, neither on the outcome scores (t[65] = 1.34, n.s.) nor on the procedure scores (t[65] = 1.23, n.s.). Furthermore, the two groups did not differ in their performance on the knowledge test (t[65] = 0.78, n.s.). Scores on the information literacy knowledge test were also rescaled to range from 0 to 1 and are shown in Table 3.
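The degrees of freedom in t[65] correspond to 37 + 30 - 2 = 65, consistent with a Student’s t-test for independent groups using a pooled variance (the paper does not say which variant was used, so this is an inference). A minimal sketch of that test together with a rescaling step; the paper states only that summed scores were scaled to range from 0 to 1, so dividing by the maximum attainable score is an assumption, and `scale_to_unit`, `independent_t` and the numbers are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def scale_to_unit(raw_total, max_total):
    """Rescale a summed score to 0-1 (assumed: divide by the maximum attainable score)."""
    return raw_total / max_total

def independent_t(a, b):
    """Student's t-test for two independent groups with pooled variance.

    Returns (t, df) where df = len(a) + len(b) - 2.
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))  # standard error of the mean difference
    return (mean(a) - mean(b)) / se, df

# Hypothetical summed outcome points (maximum 12) for two tiny groups
group1 = [scale_to_unit(x, 12) for x in (6, 7, 8)]
group2 = [scale_to_unit(x, 12) for x in (4, 5, 6)]
t, df = independent_t(group1, group2)
print(round(t, 2), df)  # 2.45 4
```

Because division by a constant maximum is a linear transformation, the t value is the same whether it is computed on raw or rescaled scores; the rescaling only makes the scores comparable across measures.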

To examine the second hypothesis, correlations between the knowledge test scores and the two search task variables were computed using data from data collection 1 only, because performance at the later data collections largely reflects how much participants benefited from the course and might therefore distort the results. The outcome and procedure scores of the search tasks correlated significantly, although weakly (r = 0.22, p < 0.05). Both scores also correlated significantly with performance on the knowledge test (outcome scores: r = 0.29, p < 0.01; procedure scores: r = 0.48, p < 0.01; both one-tailed).
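The significance of a correlation can be checked by converting r into a t statistic with n - 2 degrees of freedom, t = r·√(n - 2) / √(1 - r²). The sketch below, assuming the standard test of H0: ρ = 0 (the paper does not name its procedure), applies this to the three correlations reported above with N = 67. The resulting values of about 1.82, 2.44 and 4.41 can be compared with one-tailed critical values of roughly 1.67 (α = .05) and 2.39 (α = .01) for 65 degrees of freedom from a standard t table; `t_for_r` is a hypothetical name.

```python
from math import sqrt

def t_for_r(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0 from a Pearson r."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)

# Correlations reported for data collection 1, N = 67
for label, r in [("outcome vs procedure", 0.22),
                 ("outcome vs knowledge test", 0.29),
                 ("procedure vs knowledge test", 0.48)]:
    print(label, round(t_for_r(r, 67), 2))
# outcome vs procedure 1.82
# outcome vs knowledge test 2.44
# procedure vs knowledge test 4.41
```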

To evaluate the course (Hypothesis 3), the three information literacy performance indicators were analyzed separately. For each indicator, a repeated measures analysis of variance (ANOVA) was computed with time of data collection as a within-subjects factor, group membership as a between-subjects factor, and the respective performance indicator as the dependent variable.
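This is a two-way mixed (split-plot) design. Its degrees of freedom explain the F[2,130] values reported in the following analyses: b - 1 = 2 for three data collections, and (N - a)(b - 1) = (67 - 2) × 2 = 130 for the error term. A minimal sketch of the sums-of-squares partition, assuming complete data; the function `mixed_anova` and the toy scores are hypothetical, the paper does not state which software was used, and the sketch applies no sphericity correction. With unequal group sizes it uses size-weighted means, so packages that default to Type III sums of squares may report somewhat different F values.

```python
from statistics import mean

def mixed_anova(groups):
    """Two-way mixed (split-plot) ANOVA without sphericity correction.

    groups: list of groups; each group is a list of subjects; each subject is a
    list of scores, one per time point, with no missing values.
    Returns {effect: (F, df_effect, df_error)} for the within-subjects factor
    (time) and the time x group interaction.
    """
    subjects = [s for g in groups for s in g]
    a = len(groups)          # levels of the between-subjects factor
    b = len(subjects[0])     # levels of the within-subjects factor
    n = len(subjects)        # total number of subjects
    grand = mean(x for s in subjects for x in s)

    # Time main effect: deviations of the time-point means from the grand mean
    time_means = [mean(s[j] for s in subjects) for j in range(b)]
    ss_time = n * sum((m - grand) ** 2 for m in time_means)

    # Group main effect and group x time cells, weighted by group size
    ss_group = sum(
        b * len(g) * (mean(x for s in g for x in s) - grand) ** 2 for g in groups
    )
    ss_cells = sum(
        len(g) * (mean(s[j] for s in g) - grand) ** 2
        for g in groups
        for j in range(b)
    )
    ss_inter = ss_cells - ss_group - ss_time

    # Between-subjects variation and total variation
    ss_subjects = b * sum((mean(s) - grand) ** 2 for s in subjects)
    ss_total = sum((x - grand) ** 2 for s in subjects for x in s)
    # Error term for within-subjects effects: time x subjects within groups
    ss_error = ss_total - ss_subjects - ss_time - ss_inter

    df_time = b - 1
    df_inter = (a - 1) * (b - 1)
    df_error = (n - a) * (b - 1)
    ms_error = ss_error / df_error
    return {
        "time": (ss_time / df_time / ms_error, df_time, df_error),
        "time_x_group": (ss_inter / df_inter / ms_error, df_inter, df_error),
    }

# Made-up scores: two groups of two subjects, three time points each
group1 = [[1, 3, 5], [2, 4, 5]]
group2 = [[1, 1, 3], [2, 2, 4]]
result = mixed_anova([group1, group2])
for effect, (f, df1, df2) in result.items():
    print(effect, round(f, 2), df1, df2)
# time 93.0 2 4
# time_x_group 13.0 2 4
```

A significant interaction, as found for all three indicators, signals that the groups changed differently over time, which is why follow-up t-tests at each data collection are used to locate the difference.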

Performance on the knowledge test was analyzed first. The analysis revealed a significant main effect of the within-subjects factor (F[2,130] = 216.53, p < 0.01) and a significant interaction of the two factors (F[2,130] = 73.13, p < 0.01), as depicted in Figure 1. To analyze group differences, a t-test was computed for each data collection. At data collection 1, there was no difference between the groups (t[65] = 0.78, n.s.). At data collection 2, there was a significant difference (t[65] = 10.47, p < 0.01), with group 1 outperforming group 2. There was no significant difference at data collection 3 (t[65] = 0.37, n.s.).

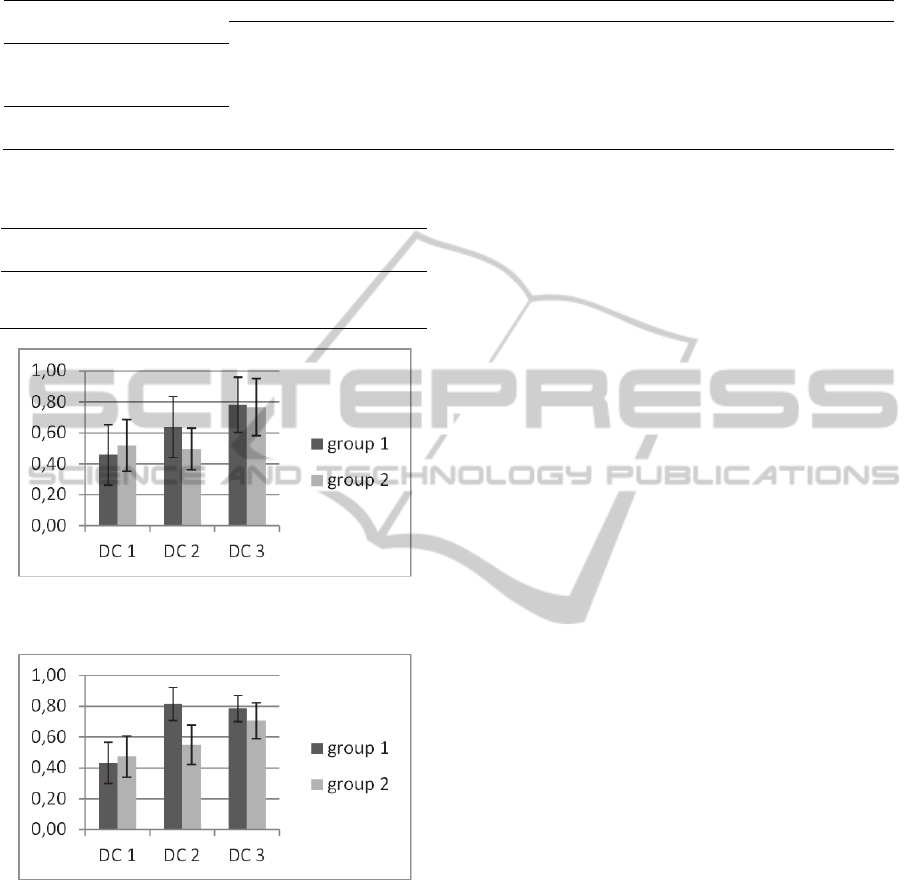

Next, the outcome scores of the search tasks were analyzed. The ANOVA revealed a significant main effect of the within-subjects factor (F[2,130] = 45.77, p < 0.01) and a significant interaction of the two factors (F[2,130] = 5.45, p < 0.01). To investigate the pattern in more detail, t-tests were calculated to compare the two groups at each data collection. There were no significant differences at data collections 1 and 3 (t[65] = 1.33 and t[65] = 0.32, respectively). However, the two groups differed at data collection 2 (t[65] = 3.32, p < 0.01). Once again, group 1 outperformed group 2, as can be seen in Figure 2.

Finally, the procedure scores of the search tasks were analyzed. The ANOVA revealed a significant main effect of the within-subjects factor (F[2,130] = 148.46, p < 0.01) and a significant interaction of the two factors (F[2,130] = 37.38, p < 0.01). Once again, t-tests were applied to analyze group differences. There was no significant difference at data collection 1 (t[65] = 1.23, n.s.), but there were significant differences at data collections 2 (t[65] = 9.21, p < 0.01) and 3 (t[65] = 3.21, p < 0.01), with group 1 scoring higher than group 2, as displayed in Figure 3.

Figure 1: Mean scores (and standard deviations) on the information literacy test. DC = data collection. [Plot: group 1 and group 2 at DC1, DC2 and DC3; y-axis from 0.00 to 1.00.]


Table 2: Mean scores (and standard deviations) for the outcome and procedure scores.

score / group       data collection 1   data collection 2   data collection 3
outcome
group 1 (n = 37)    0.45 (0.19)         0.63 (0.19)         0.78 (0.17)
group 2 (n = 30)    0.51 (0.16)         0.49 (0.13)         0.76 (0.18)
procedure
group 1 (n = 37)    0.43 (0.13)         0.81 (0.10)         0.78 (0.08)
group 2 (n = 30)    0.47 (0.13)         0.54 (0.12)         0.70 (0.11)

Table 3: Mean scores (and standard deviations) for the information literacy knowledge test.

group     data collection 1   data collection 2   data collection 3
group 1   0.59 (0.06)         0.76 (0.05)         0.75 (0.05)
group 2   0.61 (0.06)         0.62 (0.05)         0.75 (0.05)

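Figure 2: Outcome scores (and standard deviations) of the information search tasks. [Plot: group 1 and group 2 at DC1, DC2 and DC3; y-axis from 0.00 to 1.00.]

Figure 3: Procedure scores (and standard deviations) of the information search tasks. [Plot: group 1 and group 2 at DC1, DC2 and DC3; y-axis from 0.00 to 1.00.]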

3.4 Discussion

The results support the hypotheses. The first two hypotheses relate to the soundness of the evaluation instruments. First, the analysis of search task difficulties confirmed the expected order of task difficulties empirically. Tasks requiring more competencies were harder for participants to solve, which can be seen as an indication of validity for the search tasks and the underlying task taxonomy. The second hypothesis, postulating significant correlations among all three information literacy performance indicators, was also supported. The fact that the correlations are far from perfect leads us to conclude that the three instruments capture different facets of information literacy. It is important that both the search task outcome and procedure scores correlated significantly with the knowledge test: since the test had already been evaluated in a different study, this indicates that the search tasks are valid.

The third and most important hypothesis was that participation in the course improves information literacy. As can be seen in Figures 1 to 3, participation in the course improved information literacy on all three performance indicators. More precisely, the hypothesis was that group 1 would outperform group 2 at data collection 2, because at that time group 1 had already taken the course while group 2 had not. At data collection 3, no group differences should remain. This pattern was observed for the knowledge test scores and for the outcome scores of the search tasks. The procedure scores showed the same pattern at data collections 1 and 2. At data collection 3, however, group 1 still performed better, even though both groups had taken the course by then. A more detailed analysis revealed that both groups improved, but group 1 improved more. We have no substantive explanation for this result other than random variation due to the relatively small sample size; as mentioned above, the group sizes were n = 37 (group 1) and n = 30 (group 2). Although participants had been randomly assigned to the groups, it cannot be ruled out that some group 1 participants learned more due to individual differences. As this difference appears on only one of the three performance indicators, it may plausibly be ascribed to random variation. The hypothesis can nevertheless be considered confirmed, as both groups improved by taking the course. It should also be mentioned that there were significant differences between the groups at data collection 2. At this time, a treatment group was compared to a waiting control group. The group differences show that participants did not become more information literate without training, which corroborates attributing the information literacy improvements to participation in the course.

To sum up, the results show that the information literacy course is effective. This research thus contributes a blended learning course that is tailored to Psychology students and has been evaluated with a randomized waiting control group design. As the course was tailored to Bachelor students, participants were mainly taught the essentials of seeking academic information; in the future, the course might be developed further by adding elements tailored to Master’s-level students. To make the course more resource efficient, the classroom seminars might also be left out; a further evaluation study could show whether such a version is equally effective.

REFERENCES

American Library Association. 1989. Presidential committee on information literacy. Final Report [online]. Chicago, IL: American Library Association. Available at: http://www.ala.org/acrl/publications/whitepapers/presidential [Accessed 30 September 2013].

Association of College and Research Libraries. 2010. Psychology information literacy standards [online]. Chicago, IL: American Library Association. Available at: http://www.ala.org/acrl/standards/psych_info_lit [Accessed 19 March 2013].

Carr, S. 2000. As distance education comes of age, the challenge is keeping the students. Chronicle of Higher Education 46(23), pp. A39–A41.

Center for Assessment and Research Studies. 2013. Information literacy test (ILT) [online]. Harrisonburg, VA: James Madison University. Available at: http://www.madisonassessment.com/uploads/Manual-ILT-2013.pdf [Accessed 29 January 2014].

Clardy, A. 2009. Distant, on-line education: effects, principles and practices [online]. Available at: http://files.eric.ed.gov/fulltext/ED506182.pdf [Accessed 29 January 2014].

Eisenberg, M. B. 2008. Information literacy: essential skills for the information age. DESIDOC Journal of Library & Information Technology 28(2), pp. 39–47.

Julien, H. and Barker, S. 2009. How high-school students find and evaluate scientific information: a basis for information literacy skills development. Library & Information Science Research 31(1), pp. 12–17.

Kim, J. 2009. Describing and predicting information-seeking behavior on the Web. Journal of the American Society for Information Science and Technology 60(4), pp. 679–693.

López-Pérez, M., Pérez-López, M. and Rodríguez-Ariza, L. 2011. Blended learning in higher education: students’ perceptions and their relation to outcomes. Computers & Education 56(3), pp. 818–826.

McClelland, D. C. 1973. Testing for competence rather than for "intelligence". American Psychologist 28(1), pp. 1–14.

National Forum on Information Literacy. n. d. What is information literacy? [online]. Cambridge, MA: National Forum on Information Literacy. Available at: http://infolit.org/?page_id=3172 [Accessed 6 June 2012].

Noe, N. W. and Bishop, B. A. 2005. Assessing Auburn University Library's tiger information literacy tutorial (TILT). Reference Services Review 33(2), pp. 173–187.

Project SAILS. 2013. Project SAILS information literacy assessment [online]. Kent, OH: Kent State University. Available at: https://www.projectsails.org/ [Accessed 16 August 2013].

Shavelson, R. J. 2010. On the measurement of competency. Empirical Research in Vocational Education and Training 2(1), pp. 41–63.

Smith, J. K., Given, L. M., Julien, H., Ouellette, D. and DeLong, K. 2013. Information literacy proficiency: assessing the gap in high school students’ readiness for undergraduate academic work. Library & Information Science Research 35(2), pp. 88–96.

Thaxton, L., Faccioli, M. B. and Mosby, A. P. 2004. Leveraging collaboration for information literacy in psychology. Reference Services Review 32(2), pp. 185–189.

Timmers, C. F. and Glas, C. A. W. 2010. Developing scales for information-seeking behaviour. Journal of Documentation 66(1), pp. 46–69.

Warwick, C., Rimmer, J., Blandford, A., Gow, J. and Buchanan, G. 2009. Cognitive economy and satisficing in information seeking: a longitudinal study of undergraduate information behavior. Journal of the American Society for Information Science and Technology 60(12), pp. 2402–2415.
